TOUCH-LESS PALM PRINT BIOMETRIC SYSTEM

Michael Goh Kah Ong, Connie Tee and Andrew Teoh Beng Jin

Multimedia University, Jalan Ayer Keroh Lama, 75450, Melaka, Malaysia

Keywords: Palm print recognition, hand tracking, local binary pattern (LBP), gradient operator, probabilistic neural networks (PNN).

Abstract: In this research, we propose an innovative touch-less palm print recognition system. This project is motivated by the public’s demand for non-invasive and hygienic biometric technology: for various reasons, users are concerned about touching biometric scanners. We therefore propose to use a low-resolution web camera to capture the user’s hand at a distance for recognition, so users do not need to touch any device for their palm print to be extracted for analysis. A novel hand tracking and palm print region of interest (ROI) extraction technique is used to track and capture the user’s palm in real-time video streams. Discriminative palm print features are extracted with a new method that applies the local binary pattern (LBP) texture descriptor to the directional gradient responses of the palm print. Experiments show promising results for the proposed method, and performance is further improved when a modified probabilistic neural network (PNN) is used for feature matching.

1 INTRODUCTION

Palm print recognition is a biometric technology that recognizes a person based on his/her palm print pattern. The palm print serves as a reliable human identifier because its pattern is not duplicated in other people, even in monozygotic twins. More importantly, the details of the palm ridges are permanent. The ridge structures are formed at about the thirteenth week of human embryonic development and are completed by about the eighteenth week (C. Harold and M. Charles, 1943). From that time on, the formation remains unchanged throughout life except for size. After death, the skin in the palm print area is the last to decompose. Compared with other physical biometric characteristics, palm print authentication has several advantages: low-resolution imaging, low intrusiveness, stable line features and a low-cost capturing device.

Currently, most palm print biometric systems use a scanner or CCD camera as the input sensor, and users must touch the sensor for their hand images to be acquired. In public places, especially hospitals, sanitation is of utmost importance, and people are concerned about placing their fingers or hands on a sensor where countless others have also placed theirs. This concern was particularly acute in some Asian countries at the height of the SARS epidemic. Besides, latent palm prints left on the sensor surface could be copied for illegitimate use. The surface is also easily contaminated if not used properly, especially in harsh, dirty and outdoor environments. In addition, users in some conservative nations may be reluctant to place their hands on a sensor after a user of the opposite sex has touched it. Therefore, there is a pressing need for a biometric technology that is flexible enough to capture users’ hand images without requiring them to touch the sensor platform.

1.1 Related Work

A number of palm print recognition studies have been reported in the literature, and most of them address the efficiency of the feature extraction algorithms. The proposed palm print representation schemes include Eigenpalms (G. Lu et al., 2003), Fisherpalms (X. Wu et al., 2003), Gabor code (D. Zhang et al., 2003), Competitive Code (W. K. Kong and D. Zhang, 2004), Ordinal feature (Z. Sun et al., 2005), line features (J. Funada et al., 1998), and feature points (D. Zhang and W. Shu, 1999). However, little detail of the palm print acquisition method was provided, although the acquisition process is one of the key considerations in developing a fast and robust online recognition system. In earlier studies, ink-based palm print images were used (J. Funada et al., 1998) (D. Zhang and W. Shu, 1999): the palm prints were inked onto paper and then digitized with a scanner. This two-step process is slow and not suitable for an online system. More recently, various input sensor technologies, such as flatbed scanners, CCD cameras, CMOS cameras and infrared sensors, have been introduced for more straightforward palm print acquisition. Among them, scanners and CCD cameras are the most commonly used input devices (G. Lu et al., 2003) (X. Wu et al., 2003). Scanners and CCD cameras provide very high quality images with little loss of information. However, scanning a palm image takes some time (a few seconds), and this delay cannot meet the requirements of an online system. Zhang et al. (D. Zhang et al., 2003) proposed the use of a CCD camera in a semi-closed environment for online palm print acquisition, and good results have been reported with this approach. In this paper, we explore the use of a low-resolution web camera for palm print acquisition and recognition in a real-time system.

Goh Kah Ong M., Tee C. and Teoh Beng Jin A. (2008). TOUCH-LESS PALM PRINT BIOMETRIC SYSTEM. In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 423-430. DOI: 10.5220/0001073504230430. Copyright © SciTePress.

1.2 Challenges

There is high demand for touch-less biometrics due to various social and sanitary issues. However, designing a touch-less palm print system is not easy. Since a touch-less system does not require the user to touch or hold any platform or guidance peg, the system must be able to detect the presence of a hand as soon as it is presented to the input sensor. The main challenges in designing the touch-less system are highlighted as follows:

Distance between the hand and input sensor – Since the user’s hand does not touch any platform, the distance between the hand and the input sensor may vary. If the hand is placed too far away from the sensor, the palm print details will be lost. On the other hand, if the hand is positioned too near the sensor, the sensor may not be able to capture the entire hand, and some areas of the palm print may be missing. Thus, the system should be designed to allow a flexible range of distances between the hand and the input sensor.

Clenched fingers/palm – Some users may clench their fingers and palm too tightly due to nervousness or other factors. If the fingers and palm are held tightly together, the skin surface of the palm tends to crumple and fold, producing non-permanent wrinkles that may degrade the performance of the system. Therefore, a robust algorithm that can handle this situation must be devised.

Hand position and rotation – As no guidance peg is used to constrain the user’s hand, the user may place his/her hand in various directions and positions. The system must be able to cope with changes in the position and orientation of the user’s hand in this less restrictive environment.

Lighting illumination – Variations in lighting can have a significant effect on the ability of the system to recognize individuals. Thus, the system must be able to generalize across palm print images captured under different lighting conditions.

1.3 Contributions

In this paper, we develop an online touch-less palm print recognition system that addresses the challenges above. The system uses a low-resolution CMOS web camera to acquire real-time palm print images. A novel hand tracking algorithm is developed to automatically track the hand and detect the region of interest (ROI) of the palm print. A pre-processing step is proposed to correct the illumination and orientation of the image. As edges (principal lines, wrinkles and ridges) capture the most important aspects of palm print images, an algorithm is developed to preserve and enhance the line structures under varying illumination and pose. We also propose a new feature extraction method to extract distinguishing palm print features for representation: a gradient operator is applied to obtain the directional responses of the palm print, and LBP is used to describe the texture of the palm pattern in each direction. In addition, a modified PNN is devised as the real-time feature matching tool.

2 PROPOSED SYSTEM

In this paper, we propose a touch-less online palm print recognition system. We describe a flexible hand tracking and ROI locator that detects and extracts the palm print in real-time video streams. The algorithm works under typical office lighting and daylight conditions. Figure 1 shows the framework of the proposed system.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications


Figure 1: The proposed touch-less palm print recognition

system.

2.1 Hand Tracking and ROI Extraction

The hand tracking and ROI extraction step consists of three stages. First, the hand is segmented from the background using skin-colour thresholding. Next, a valley detection algorithm finds the valleys between the fingers. Finally, these valleys serve as base points to locate the palm print region. The details of each stage are provided in the following sections.

2.1.1 Skin-Colour Thresholding

To segment the human hand from the background, the skin colour model proposed in (Face Detection, 2000) is used. Human skin colour can be modelled as a Gaussian distribution, $N(\mu, \sigma)$, in the chromatic colour space, $x$. The chromatic colour space removes luminance from the colour representation. To segment the hand from the background, the likelihood of skin colour, $L$, is computed as

$$L = \exp\left[-0.5\,(x-\mu)^{T}\,\sigma^{-1}\,(x-\mu)\right]$$

where $\mu$ and $\sigma$ are the mean and covariance of the skin colour distribution. We use samples from 1005 skin colour images to determine the values of $\mu$ and $\sigma$. After the skin likelihood value is determined, the hand is segmented from the background by thresholding. Figure 2 depicts the result of binarizing a hand image.

Figure 2: Skin-colour thresholding: (a) the original hand image; (b) the segmented hand image in binary form.
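The skin likelihood and thresholding step described above can be sketched in pure Python as follows. This is an illustrative sketch, not the authors' code: it assumes the chromatic space is the normalised pair r = R/(R+G+B), g = G/(R+G+B), treats σ as a full 2 × 2 covariance matrix, and uses placeholder values for the mean, covariance and threshold (the paper estimates μ and σ from 1005 skin images and does not report its threshold). Following the convention of Section 2.1.2, hand pixels are labelled 0 and non-hand pixels 1. The function names are hypothetical.

```python
import math


def chromatic(rgb):
    """Project an (R, G, B) pixel to chromatic (r, g) coordinates, removing luminance."""
    r, g, b = rgb
    total = r + g + b
    if total == 0:
        return (0.0, 0.0)  # pure black has no chromaticity; map it to the origin
    return (r / total, g / total)


def skin_likelihood(rgb, mean, cov):
    """L = exp(-0.5 (x - mu)^T cov^-1 (x - mu)) for a 2-D Gaussian skin model."""
    x = chromatic(rgb)
    d0, d1 = x[0] - mean[0], x[1] - mean[1]
    (a, b), (c, e) = cov
    det = a * e - b * c
    # Inverse of the 2 x 2 covariance matrix.
    i00, i01, i10, i11 = e / det, -b / det, -c / det, a / det
    mahal = d0 * (i00 * d0 + i01 * d1) + d1 * (i10 * d0 + i11 * d1)
    return math.exp(-0.5 * mahal)


def segment_hand(image, mean, cov, threshold=0.5):
    """Binarise an image (rows of RGB tuples): 0 = hand (skin), 1 = non-hand."""
    return [[0 if skin_likelihood(px, mean, cov) >= threshold else 1 for px in row]
            for row in image]


# Placeholder model parameters (hypothetical, NOT the values trained in the paper).
SKIN_MEAN = chromatic((200, 120, 90))
SKIN_COV = ((0.002, 0.0), (0.0, 0.002))

if __name__ == "__main__":
    img = [[(200, 120, 90), (0, 0, 255)]]
    print(segment_hand(img, SKIN_MEAN, SKIN_COV))  # [[0, 1]]
```

In a real system, `SKIN_MEAN` and `SKIN_COV` would be estimated from labelled skin samples, and the threshold tuned on validation images.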

2.1.2 Valley Detection

We propose a novel competitive hand valley detection (CHVD) algorithm to locate the ROI of the palm. We trace along the contour of the hand to find possible valley locations. A pixel is considered a valley if some of its neighbouring points lie in the non-hand region while the majority of its neighbouring points lie in the hand region (Figure 3). In addition, a line directed outwards from the pixel must not cross any hand region along the way. Based on these assumptions, four conditions are formulated to test for the existence of a valley. A pixel must satisfy all four conditions to qualify as a valley location; if it fails any of the conditions, the pixel is disregarded and the algorithm proceeds to check the next pixel. Because only contour pixels are examined, and each pixel is rejected as soon as it fails a condition, this competitive valley checking method is much faster than scanning the entire hand image for valley locations.

Figure 3: The proposed competitive hand valley detection algorithm.

The four conditions to check the current pixel for valley existence are:

▪ Condition 1: Four equally spaced checking-points are placed around the current pixel, β pixels away from it (Figure 3(a)). If one of the points falls in the non-hand region (pixel value = 1) while the remaining three fall within the hand region (pixel value = 0), the pixel is considered a candidate valley and we proceed to Condition 2. Otherwise, the test stops and the algorithm proceeds to the next pixel.

▪ Condition 2: The distance of the checking-points from the current pixel is increased to β+α pixels, and the number of checking-points is increased to eight (Figure 3(b)). If at least 1 and not more than 4 consecutive neighbouring points fall in the non-hand region while the remaining points fall within the hand region, the pixel satisfies the second condition and we proceed to the next condition.

▪ Condition 3: The number of checking-points is increased to 16, and their distance from the current pixel is β+α+µ pixels. If at least 1 and not more than 7 points fall in the non-hand region while the remaining points fall within the hand region, the pixel is considered a candidate valley and we proceed to the last condition.

▪ Condition 4: To complete the test, a line is drawn from the current pixel towards the non-hand region (Figure 3(b)). This avoids erroneously detecting a gap or loop-hole in the hand as a valley. If this line does not pass through any hand region along the way, the current pixel is asserted as a valley point.

In this research, β, α and µ are each set to 10. We set the range of the number of checking-points in the non-hand region for the three conditions to 1, 1 ≤ points < 4, and 1 ≤ points < 7, respectively. This is based on the assumption that nobody can spread his/her fingers apart beyond 120°. For example, the angle between the two fingers illustrated in Figure 3(c) is approximately 90°, estimated from the number of circle sectors between the fingers (each sector = 22.5°).
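A compact sketch of the four-condition test is given below, written in pure Python for a binary mask with hand = 0 and non-hand = 1 (the convention of Condition 1). It is our reading of the description, not the authors' implementation. The following are assumptions: checking-points are placed at angles 2πk/N, points outside the image count as non-hand, the Condition 4 line follows the mean direction of the outermost non-hand checking-points, and all contour pixels are simply visited in raster order instead of by an ordered contour trace. The ranges follow the values stated above (exactly 1, 1 ≤ points < 4, and 1 ≤ points < 7) with β = α = µ = 10. All function names are hypothetical.

```python
import math

HAND, NON_HAND = 0, 1  # pixel values used in Condition 1


def value_at(mask, y, x):
    """Mask value at (y, x); positions outside the image count as non-hand."""
    if 0 <= y < len(mask) and 0 <= x < len(mask[0]):
        return mask[y][x]
    return NON_HAND


def checking_points(mask, y, x, radius, count):
    """Sample `count` equally spaced checking-points on a circle of `radius`."""
    values = []
    for k in range(count):
        theta = 2 * math.pi * k / count
        dy, dx = -round(radius * math.sin(theta)), round(radius * math.cos(theta))
        values.append(value_at(mask, y + dy, x + dx))
    return values


def single_run(values):
    """True if the non-hand checking-points form one consecutive (circular) run."""
    starts = sum(1 for i in range(len(values))
                 if values[i] == NON_HAND and values[i - 1] == HAND)
    return starts == 1


def is_valley(mask, y, x, beta=10, alpha=10, mu=10):
    # Condition 1: exactly one of 4 points at distance beta lies outside the hand.
    if checking_points(mask, y, x, beta, 4).count(NON_HAND) != 1:
        return False
    # Condition 2: 8 points at beta + alpha; 1 <= non-hand < 4, all consecutive.
    ring8 = checking_points(mask, y, x, beta + alpha, 8)
    if not (1 <= ring8.count(NON_HAND) < 4 and single_run(ring8)):
        return False
    # Condition 3: 16 points at beta + alpha + mu; 1 <= non-hand < 7.
    ring16 = checking_points(mask, y, x, beta + alpha + mu, 16)
    if not 1 <= ring16.count(NON_HAND) < 7:
        return False
    # Condition 4: walk outwards towards the non-hand points; crossing the hand
    # region means (y, x) borders a hole or gap rather than a finger valley.
    uy = -sum(math.sin(2 * math.pi * k / 16) for k, v in enumerate(ring16) if v == NON_HAND)
    ux = sum(math.cos(2 * math.pi * k / 16) for k, v in enumerate(ring16) if v == NON_HAND)
    norm = math.hypot(uy, ux)
    if norm == 0:
        return False
    t = 1
    while True:
        py, px = y + round(t * uy / norm), x + round(t * ux / norm)
        if not (0 <= py < len(mask) and 0 <= px < len(mask[0])):
            return True  # reached the image border without touching the hand
        if mask[py][px] == HAND:
            return False
        t += 1


def find_valleys(mask, **params):
    """Apply the test to contour pixels (hand pixels with a non-hand 4-neighbour)."""
    h, w = len(mask), len(mask[0])
    found = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] != HAND:
                continue
            on_contour = any(0 <= ny < h and 0 <= nx < w and mask[ny][nx] == NON_HAND
                             for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)))
            if on_contour and is_valley(mask, y, x, **params):
                found.append((y, x))
    return found
```

Because each condition is only evaluated when the previous one passes, most contour pixels are rejected after sampling just four points. In practice, neighbouring detections along the bottom of a valley would still need to be merged into one valley point.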

2.1.3 ROI Location

After obtaining the finger valleys $P_1$, $P_2$, $P_3$ and $P_4$, a line is formed between $P_2$ and $P_4$. A square is then drawn below this line, as shown in Figure 4(b). The square represents the region of interest (ROI) of the palm. In our experiments, the average time taken to detect and locate the ROI is less than 1 millisecond.

Figure 4: The ROI location technique: (a) locations of the 4 valleys; (b) a line is drawn to connect $P_2$ and $P_4$, and a square drawn from this line forms the ROI of the palm; (c) the ROI detected on the other side of the hand.
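The geometry of this step can be sketched as follows. The paper does not state the side length of the square or how "below" is determined for a rotated hand. This sketch therefore assumes that the side equals the $P_2$–$P_4$ distance and that the square lies on the side of the line facing a known palm point, for example the centroid of the segmented hand. Coordinates are (x, y) image coordinates; the function name and the `palm_point` parameter are hypothetical.

```python
import math


def roi_square(p2, p4, palm_point):
    """Return the 4 corners of a square built on segment P2-P4, on the palm side.

    Assumptions: side length = |P2P4|; `palm_point` is any point inside the palm.
    """
    (x2, y2), (x4, y4) = p2, p4
    dx, dy = x4 - x2, y4 - y2
    side = math.hypot(dx, dy)
    if side == 0:
        raise ValueError("P2 and P4 must be distinct points")
    # Unit normal to the P2-P4 line.
    nx, ny = -dy / side, dx / side
    # Flip the normal if it points away from the palm.
    if nx * (palm_point[0] - x2) + ny * (palm_point[1] - y2) < 0:
        nx, ny = -nx, -ny
    return [(x2, y2), (x4, y4),
            (x4 + side * nx, y4 + side * ny),
            (x2 + side * nx, y2 + side * ny)]
```

For example, `roi_square((10, 20), (40, 20), (25, 50))` yields a 30 × 30 square whose lower corners are (40.0, 50.0) and (10.0, 50.0). The angle of the $P_2$–$P_4$ line, `math.atan2(dy, dx)`, is one possible input for the rotation step in Section 2.2.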

2.2 Image Pre-processing

As the ROIs differ in size and orientation, pre-processing is performed to align all the ROIs to the same position. First, the images are rotated to an upright position, using the Y-axis as the rotation reference axis. Then, as the size of the ROI varies from hand to hand (depending on the size of the palm), the ROIs are resized to a standard size using bicubic interpolation. In this research, the images are resized to 150 × 150 pixels.
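Bicubic resizing can be sketched in pure Python with the Keys cubic convolution kernel and a = -0.5, which is one common form of bicubic interpolation. The paper does not say which variant it uses. The sketch maps each output pixel centre back to input coordinates, uses a 4 × 4 neighbourhood, and clamps at the borders. It assumes the rotation has already been applied and works on a grayscale image. An image library would normally be used instead, and the function names here are hypothetical.

```python
import math


def keys_kernel(t, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is a common bicubic choice."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t ** 3 - (a + 3) * t ** 2 + 1
    if t < 2:
        return a * t ** 3 - 5 * a * t ** 2 + 8 * a * t - 4 * a
    return 0.0


def resize_bicubic(img, out_h, out_w):
    """Resize a grayscale image (list of rows of numbers) with bicubic interpolation."""
    in_h, in_w = len(img), len(img[0])
    sy, sx = in_h / out_h, in_w / out_w
    out = []
    for oy in range(out_h):
        fy = (oy + 0.5) * sy - 0.5  # source row of this output pixel centre
        iy = math.floor(fy)
        row = []
        for ox in range(out_w):
            fx = (ox + 0.5) * sx - 0.5  # source column of this output pixel centre
            ix = math.floor(fx)
            acc = 0.0
            for m in range(iy - 1, iy + 3):
                wy = keys_kernel(fy - m)
                yy = min(max(m, 0), in_h - 1)  # clamp to the image border
                for n in range(ix - 1, ix + 3):
                    xx = min(max(n, 0), in_w - 1)
                    acc += wy * keys_kernel(fx - n) * img[yy][xx]
            row.append(acc)
        out.append(row)
    return out
```

A rotated ROI `roi` would then be normalised with `resize_bicubic(roi, 150, 150)`. Because the kernel weights sum to 1, flat regions keep their intensity after resizing.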

We enhance the contrast and sharpness of the palm print images so that dominant palm print features, such as the principal lines and ridges, are highlighted and become distinguishable from the skin surface. The Laplacian isotropic derivative operator is used for this purpose. A Gaussian low-pass filter is then applied to smooth the palm print images and bridge small gaps in the lines. Figure 5(a) shows the original palm print image and Figure 5(b) depicts the result of applying the image enhancement operators. The enhanced image is clearer and sharper, and fine details such as the ridges are more visible.

Figure 5: (a) The original palm print; (b) the palm print after contrast adjustment and smoothing.
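The two enhancement operators can be sketched as 3 × 3 filters. The specific kernels are assumptions because the paper does not give them. The sketch uses the 4-neighbour Laplacian with the sharpening rule g = f − ∇²f, followed by a 3 × 3 binomial approximation of a Gaussian. Borders are handled by replicating edge pixels, and clipping to the valid intensity range is omitted. Function and constant names are hypothetical.

```python
LAPLACIAN = ((0, 1, 0), (1, -4, 1), (0, 1, 0))
GAUSSIAN_3X3 = ((1, 2, 1), (2, 4, 2), (1, 2, 1))  # normalised by 1/16 when applied


def filter3x3(img, kernel, scale=1.0):
    """Apply a 3 x 3 kernel (as a correlation) with replicated borders."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            row.append(acc * scale)
        out.append(row)
    return out


def enhance(img):
    """Laplacian sharpening (f - lap f) followed by Gaussian smoothing."""
    lap = filter3x3(img, LAPLACIAN)
    sharpened = [[f - l for f, l in zip(fr, lr)] for fr, lr in zip(img, lap)]
    return filter3x3(sharpened, GAUSSIAN_3X3, scale=1 / 16)
```

Subtracting the Laplacian (whose centre weight is negative) boosts intensity changes such as lines and ridges, and the subsequent smoothing suppresses the noise that sharpening amplifies. Uniform regions pass through both steps unchanged.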

2.3 Feature Extraction

We propose a new way to apply the Local Binary Patterns (LBP) texture descriptor (T. Ojala et al., 2002) to the directional responses of a gradient operator. Unlike a fingerprint, whose alternating ridges and furrows flow in a regular structure, the texture of a palm print is irregular, and its lines and ridges can run in various directions. This motivates us to decompose the line patterns into four directions and study them separately. LBP is then used to analyze and describe the texture of the palm print in each direction.

The Sobel operator is deployed in this work to obtain the palm print responses in different orientations. The Sobel operator is a well-known filter for detecting discrete directional gradients. We apply it to find the palm print responses along the horizontal, vertical, and diagonal (plus and minus 45°) directions. The Sobel masks used are illustrated in Figure 6.

Figure 6: The Sobel masks used to detect the palm print (a) horizontally, (b) vertically, (c) diagonally at positive 45°, and (d) diagonally at negative 45°.
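The masks of Figure 6 are not reproduced in this text. The sketch below therefore assumes the standard 3 × 3 Sobel masks for the horizontal and vertical directions and the commonly used ±45° variants. Which diagonal mask Figure 6 calls "positive" is also an assumption. The sketch computes the four directional response maps by correlation with replicated borders and keeps the absolute response, another assumption. Names are hypothetical.

```python
SOBEL_MASKS = {
    "horizontal": ((-1, -2, -1), (0, 0, 0), (1, 2, 1)),  # responds to horizontal lines
    "vertical": ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1)),    # responds to vertical lines
    "plus45": ((0, 1, 2), (-1, 0, 1), (-2, -1, 0)),
    "minus45": ((-2, -1, 0), (-1, 0, 1), (0, 1, 2)),
}


def correlate3x3(img, mask):
    """Correlate a 3 x 3 mask with a grayscale image, replicating border pixels."""
    h, w = len(img), len(img[0])
    return [[sum(mask[dy + 1][dx + 1]
                 * img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                 for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)]
            for y in range(h)]


def directional_responses(img):
    """Absolute Sobel responses of the image in the four directions."""
    return {name: [[abs(v) for v in row] for row in correlate3x3(img, mask)]
            for name, mask in SOBEL_MASKS.items()}
```

A purely horizontal line produces a strong response in the "horizontal" map and none in the "vertical" map, which is the separation of line directions that the LBP stage relies on.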


For computational efficiency and noise reduction

purposes, we first decompose the palm print image

into lower resolution images by using wavelet

transformation before applying the Sobel operator.

Refer (T. Connie et al., 2005) for the detail of

applying wavelet transformation on palm print

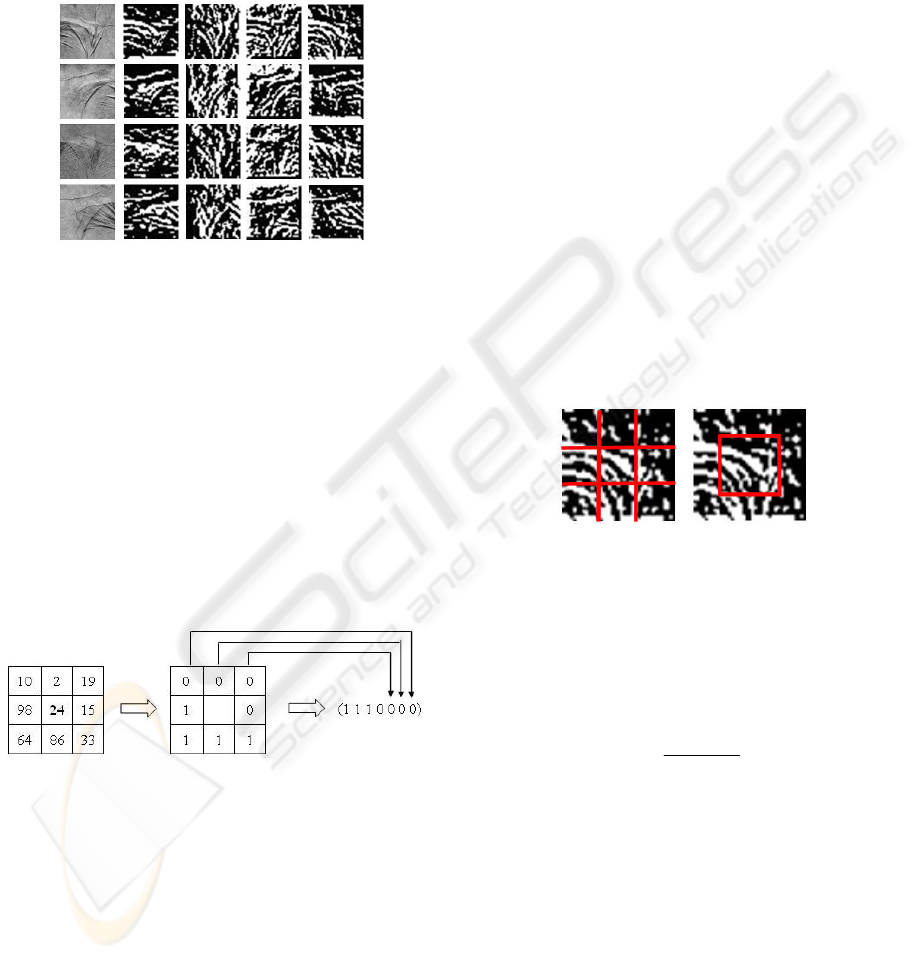

images. Figure 7 shows the components of the palm

print in four directions by applying Sobel operator.

Figure 7: Examples of directional responses derived using

Sobel operator. (a) Original palm print images, (b) to (e)

components of the images in the horizontal, vertical,

positive 45

o

, and negative 45

o

directions.
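The paper defers the wavelet details to (T. Connie et al., 2005). As a hedged illustration only, one level of a 2-D Haar decomposition can be reduced to its low-frequency (LL) sub-band, which halves each image dimension (for example, 150 × 150 becomes 75 × 75). The following are assumptions: the choice of the Haar wavelet, scaling the sub-band as a plain 2 × 2 mean (the orthonormal Haar LL differs only by a constant factor), and the number of levels. Function names are hypothetical.

```python
def haar_ll(img):
    """One-level 2-D Haar approximation (LL) sub-band, scaled as a 2 x 2 mean."""
    h, w = len(img) // 2 * 2, len(img[0]) // 2 * 2  # drop an odd last row/column
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def haar_ll_levels(img, levels=1):
    """Repeat the LL decomposition `levels` times."""
    for _ in range(levels):
        img = haar_ll(img)
    return img
```

The directional Sobel responses would then be computed on the reduced image, which both lowers the cost and suppresses fine noise.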

2.3.1 Local Binary Patterns

The LBP operator (T. Ojala et al., 2002) is a simple yet powerful texture descriptor that has been used in various applications. Its high discriminative ability and computational simplicity make it very suitable for online recognition systems. The LBP operator labels every pixel in an image by thresholding its neighbouring pixels against the centre value and reading the results as a binary number. Figure 8 illustrates an example of how the binary label of a pixel is obtained.

Figure 8: Example of calculating the binary label in LBP.
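The basic 3 × 3 LBP operator can be sketched as follows. The neighbour ordering (clockwise from the top-left pixel) and the "greater than or equal" comparison are common conventions assumed here. Figure 8 may use a different starting neighbour, which changes the numeric code but not the method. Function names are hypothetical.

```python
# Offsets of the 8 neighbours, clockwise from the top-left pixel (an assumed ordering).
NEIGHBOURS = ((-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1))


def lbp_code(img, y, x):
    """Threshold the 8 neighbours of (y, x) against the centre; read the bits as a byte."""
    centre = img[y][x]
    code = 0
    for dy, dx in NEIGHBOURS:
        code = (code << 1) | (1 if img[y + dy][x + dx] >= centre else 0)
    return code


def lbp_image(img):
    """LBP codes for all interior pixels (the 1-pixel border is skipped)."""
    return [[lbp_code(img, y, x) for x in range(1, len(img[0]) - 1)]
            for y in range(1, len(img) - 1)]
```

For the patch `[[6, 5, 2], [7, 6, 1], [9, 8, 7]]`, the neighbours read clockwise from the top-left are 6, 5, 2, 1, 7, 8, 9, 7. Thresholding them against the centre value 6 gives the bit string 10001111, i.e. code 143.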

It has been found that certain fundamental patterns in the bit string account for most of the information in the texture (T. Ojala et al., 2002). These fundamental patterns are termed “uniform” patterns: bit strings with at most two bitwise transitions from 0 to 1 or vice versa when the string is considered circular. Examples of uniform patterns include 00000000, 11110000 and 00001100. Each uniform pattern is given its own label, and all the other “non-uniform” patterns are assigned to a single label. After the labels have been determined, a histogram of the labels is constructed as

$$H_l = \sum_{i,j} I\{L(i,j) = l\}, \quad l = 0, \ldots, n-1 \qquad (1)$$

where $L(i,j)$ is the label of pixel $(i,j)$, $I\{A\}$ is 1 if $A$ is true and 0 otherwise, and $n$ is the number of different labels produced by the LBP operator. The histogram of the labels is used as the texture descriptor; it captures the distribution of local patterns in the image.
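The uniform-pattern labelling and the histogram of Eq. (1) can be sketched for 8-bit codes as below. With 8 neighbours there are 58 uniform patterns. Each gets its own label, and all non-uniform patterns share one extra label, giving n = 59 labels. The mapping table is built once over all 2^8 codes. Normalising the histogram to sum to 1 is an assumption made so that regions of different sizes are comparable. Function names are hypothetical.

```python
def transitions(code, bits=8):
    """Number of 0/1 changes when the bit string is read circularly."""
    return sum(((code >> i) & 1) != ((code >> ((i + 1) % bits)) & 1) for i in range(bits))


def uniform_label_table(bits=8):
    """Map every code to a label: one per uniform pattern, one shared non-uniform label."""
    table, next_label = {}, 0
    for code in range(2 ** bits):
        if transitions(code, bits) <= 2:
            table[code] = next_label
            next_label += 1
    for code in range(2 ** bits):
        table.setdefault(code, next_label)  # every non-uniform code shares this label
    return table, next_label + 1  # (mapping, number of labels n)


def label_histogram(codes, table, n_labels):
    """Eq. (1): H_l = number of pixels whose label equals l, normalised to sum to 1."""
    hist = [0] * n_labels
    for code in codes:
        hist[table[code]] += 1
    total = len(codes)
    return [h / total for h in hist] if total else hist
```

For instance, 00001100 and 11110000 each have two circular transitions and receive their own labels, whereas 01010101 has eight and falls into the shared non-uniform label.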

In this work, we divide the palm print images into several local regions, $R_1, R_2, \ldots, R_m$, and extract the texture descriptor from each region independently. The local texture descriptors are then concatenated to form a global descriptor of the image. We subdivide the image into 9 equally-sized sub-windows, plus an overlapping window in the centre (Figure 9), so m = 10. We add the centre window because we believe that this region encodes important information about the edge flow of the three principal lines. The same operation is performed on the palm print components in the other three directions. Therefore, the texture descriptor of a given palm print has a size of n (the number of labels) × m (the number of sub-windows) × 4 (the components of the palm print in 4 directions).

Figure 9: A palm print image is divided into rectangular

sub-windows. (We show the sub-windows in two separate

images for clearer illustration).
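The region layout and the concatenation can be sketched as follows. The size and position of the overlapping centre window are not specified in the text. This sketch assumes a centre window twice the size of a grid cell in each dimension, centred on the image. With the 59 labels of an 8-neighbour uniform LBP, m = 10 windows and 4 directions, the descriptor then has 59 × 10 × 4 = 2360 bins. Function names are hypothetical, and raw counts are used for simplicity.

```python
def sub_windows(height, width):
    """9 equal grid cells plus one overlapping centre window, as (top, left, h, w)."""
    ch, cw = height // 3, width // 3
    windows = [(r * ch, c * cw, ch, cw) for r in range(3) for c in range(3)]
    # Assumed centre window: twice the cell size in each dimension, centred.
    windows.append(((height - 2 * ch) // 2, (width - 2 * cw) // 2, 2 * ch, 2 * cw))
    return windows


def crop(img, window):
    """Extract the rows and columns covered by a (top, left, h, w) window."""
    top, left, h, w = window
    return [row[left:left + w] for row in img[top:top + h]]


def global_descriptor(directional_label_images, n_labels):
    """Concatenate per-window label histograms over all directional label images.

    Each input is a 2-D grid of LBP labels (0 .. n_labels - 1) for one direction.
    """
    feature = []
    for labels in directional_label_images:
        for window in sub_windows(len(labels), len(labels[0])):
            hist = [0] * n_labels
            for row in crop(labels, window):
                for label in row:
                    hist[label] += 1
            feature.extend(hist)
    return feature
```

For a 75 × 75 label image, each grid cell is 25 × 25 pixels and the assumed centre window spans 50 × 50 pixels starting at (12, 12).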

2.3.2 Feature Matching

In this research, the Chi-square measure is deployed as the feature matching tool:

$$\chi^2(P, G) = \sum_{i=0}^{n} \frac{(P_i - G_i)^2}{P_i + G_i} \qquad (2)$$

where n is the length of the feature descriptor, P is a descriptor from the probe set, and G is a descriptor from the gallery set. We have also deployed a modified probabilistic neural network (PNN) to classify the palm print texture descriptors. The PNN was chosen for its good generalization property and its ability to be trained on a dataset in just one epoch.
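The Chi-square measure of Eq. (2) is straightforward to sketch. Bins where $P_i + G_i = 0$ are treated as contributing zero, an assumption made to avoid division by zero. A smaller value indicates a better match. The gallery layout in `best_match` (a list of identity/descriptor pairs) is hypothetical.

```python
def chi_square(p, g):
    """Chi-square distance between two equal-length histograms (Eq. 2)."""
    if len(p) != len(g):
        raise ValueError("descriptors must have the same length")
    return sum((pi - gi) ** 2 / (pi + gi) for pi, gi in zip(p, g) if pi + gi != 0)


def best_match(probe, gallery):
    """Return (identity, distance) of the gallery entry closest to the probe.

    `gallery` is a list of (identity, descriptor) pairs (a hypothetical layout).
    """
    return min(((ident, chi_square(probe, desc)) for ident, desc in gallery),
               key=lambda item: item[1])
```

For verification, the distance between a probe and the claimed identity's gallery descriptors would be compared with a decision threshold. The paper does not report that threshold.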

PNN is a kind of radial basis network based primarily on Bayes-Parzen classification. Besides the input layer, it contains a pattern layer, a summation layer and an output layer (T. Andrew et al., 2006). The pattern layer consists of one neuron for each input vector in the training set, while the summation layer contains one neuron for each class to be recognized. The output layer simply takes the maximum value of the summation neurons to yield the final outcome (probability score). To meet the specific requirements of the proposed online palm print recognition system, the formula for the output of the pattern layer is modified to

$$out_j = \exp\left(-\sum_{i=1}^{n} \frac{(P_i - \omega_{ij})^2}{(P_i + \omega_{ij})\,\sigma}\right)$$

that is, the squared Euclidean distance of the standard PNN kernel is replaced by a Chi-square distance of the form used in Eq. (2). Here, $out_j$ is the output of neuron $j$ in the pattern layer; $P_i$ is the $i$th element of the probe feature vector; $\omega_{ij}$ denotes the weight between the $i$th neuron of the input layer and the $j$th neuron of the pattern layer; and $\sigma$ is the smoothing parameter of the kernel, which is also the only parameter chosen by the user. In this paper, σ is set to 0.1 (T. Andrew et al., 2006).
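A minimal sketch of a PNN with this modified pattern layer is given below. It is our illustration, not the authors' code. Each pattern neuron stores one training descriptor $\omega_j$ and outputs $\exp(-\chi^2/\sigma)$, and the output layer returns the class with the largest score. The summation layer averages the pattern outputs per class; averaging rather than summing is an assumption, made so that classes with different numbers of training samples are comparable. Descriptors are assumed to be normalised histograms, since with σ = 0.1 unnormalised counts would drive every kernel value towards zero. Class and function names are hypothetical.

```python
import math
from collections import defaultdict


def pattern_output(probe, weights, sigma):
    """Modified pattern neuron: exp(-sum((P_i - w_ij)^2 / ((P_i + w_ij) * sigma)))."""
    chi2 = sum((p - w) ** 2 / (p + w) for p, w in zip(probe, weights) if p + w != 0)
    return math.exp(-chi2 / sigma)


class ModifiedPNN:
    def __init__(self, sigma=0.1):
        self.sigma = sigma
        self.patterns = []  # (label, descriptor) pairs: one pattern neuron each

    def fit(self, descriptors, labels):
        """One-pass training: every training vector becomes a pattern neuron."""
        self.patterns = list(zip(labels, descriptors))
        return self

    def predict(self, probe):
        """Return (best label, score) from the summation and output layers."""
        sums, counts = defaultdict(float), defaultdict(int)
        for label, weights in self.patterns:
            sums[label] += pattern_output(probe, weights, self.sigma)
            counts[label] += 1
        scores = {label: sums[label] / counts[label] for label in sums}
        best = max(scores, key=scores.get)
        return best, scores[best]
```

Training only stores the p training vectors of dimension m, which is why the network can be built in a single pass; the cost is that every probe must be compared against all stored vectors.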

3 EXPERIMENT SETUP

In this experiment, a standard PC with an Intel Pentium 4 HT processor (3.4 GHz) and 1024 MB of RAM is used. Our capturing device is a 1.3-megapixel web camera. The palm print is detected in a real-time video sequence at 25 fps. The image resolution is 640 × 480 pixels, with RGB colour output at 8 bits per channel (256 levels). The interval between successive ROI captures is 2 seconds. The exposure parameter of the web camera is set to low to reduce the effect of background light, which may degrade the quality of the palm print image. We place a 9-watt warm-white light bulb beside the camera; the bulb emits a yellowish light that enhances the lines and ridges of the palm. Black cardboard is placed around the web camera and the light bulb to set up a semi-controlled environment, as shown in Figure 10. The black cardboard absorbs some of the light reflected from the bulb so that the palm image does not appear too bright.

Figure 10: The experiment setup (labelled components: enclosure, web camera, hand and light bulb).

The proposed methodology is tested on a database containing palm images from 320 individuals: 147 of them are female, 236 are less than 30 years old, and 15 are more than 50 years old. The subjects come from different ethnic groups: 136 Chinese, 125 Malays, 45 Indians, 6 Arabians, 2 Indonesians, 2 Pakistanis, an African, a Mongolian, a Sudanese and a Punjabi. Most of them are students and lecturers from Multimedia University. To investigate how well the system can identify unclear palm prints or palm prints worn by manual labour, we also invited ten cleaners to contribute their palm print images to our system.

Users can place their hands about 40 cm to 60 cm above the input sensor. They are requested to stretch their fingers during the image capturing process and are allowed to wear rings and other ornaments; hands with long fingernails can also be detected by the system. Twenty palm print images were captured from each of the two hands of every user, yielding a total of 320 × 2 × 20 = 12,800 palm print images in the database.

4 RESULTS AND DISCUSSION

In this section, we conduct extensive experiments to evaluate the effectiveness and robustness of the proposed system. We first carried out palm print tracking in a dynamic environment to validate the robustness of the proposed hand tracking technique. We then performed offline testing to evaluate the performance of the proposed algorithm.

4.1 Online Palm Print Tracking

The first experiment is conducted in the semi-controlled environment shown in Figure 10. A user was asked to present his hand above the web camera and slowly rotate it to the left and right. The user was also asked to move his hand closer to the web camera and then gradually away from it. Some tracking results of the palm print region are shown in Figure 11.

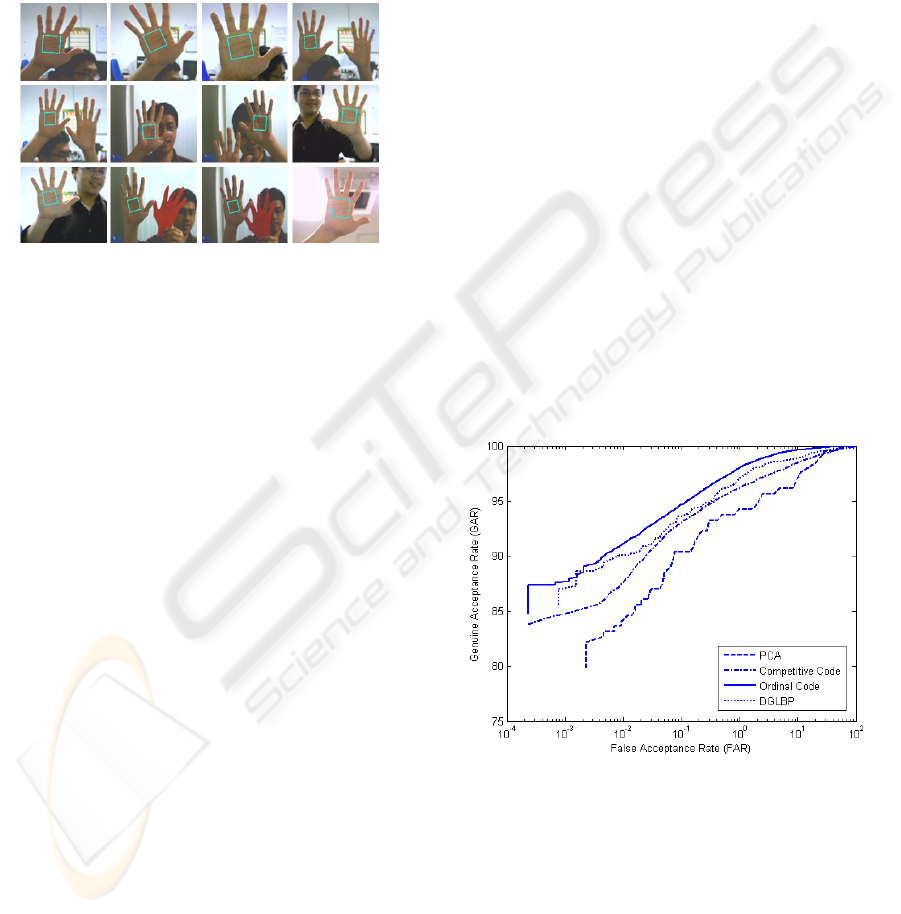

Figure 11: Some tracking results of the proposed palm

print tracking algorithm in semi-controlled environment.

The proposed palm print tracking method performs well: the ROI of the palm print is located regardless of changes in hand size and direction. The average time to track and locate the ROI is 12 milliseconds. We further assessed the effectiveness of the algorithm in a dynamic environment. In this video sequence, the user moved continuously, and the scene contained other background objects and varying illumination conditions. Figure 12 displays the test sequence used to locate the palm print in a dynamic environment.

Figure 12: Some tracking results of the proposed palm print tracking algorithm in a dynamic environment.

Based on the tracking results, the proposed algorithm performs well in a dynamic environment. The images in the top row, for example, contain other background objects such as a calendar, a whiteboard, a computer and even the face of the user, and the algorithm was able to locate the palm print region in this cluttered background. When both hands were present in the image (for example, the first image from the right in the first row), the algorithm detected one of the palm prints. We designed the system so that only one hand is required to access the application; therefore, the first palm detected in the video sequence is used for further analysis. We also tried to spoof the algorithm by presenting a fake hand made from Manila paper, with some lines drawn on it to make it more “palm-like”. Nevertheless, the algorithm still recognized the real palm based on the colour cue. Finally, we investigated how well the tracking algorithm performs under adverse lighting conditions: when the palm was placed under bright light exposure (the first image from the right in the last row), the algorithm still located the palm print region accurately.

4.2 Verification

The experiment was conducted on the palm print images captured in the setting described in Section 3. Of the 20 images provided by each user for each hand, 10 are used as the gallery set and the remaining 10 as the probe set. The equal error rate (EER) is used as the evaluation criterion. The EER is the error rate at the operating point where the false acceptance rate (FAR) equals the false rejection rate (FRR); in practice, it is taken as the average of FAR and FRR at the threshold where the two are closest.
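A simple way to estimate the EER from matching distances is sketched below. It assumes that lower distances mean better matches, as with the Chi-square measure; for PNN probability scores the comparison would be reversed. The sketch sweeps every observed score as a threshold and averages FAR and FRR where they are closest. Finer interpolation between thresholds is omitted, and the function names are hypothetical.

```python
def far_frr(genuine, impostor, threshold):
    """FAR: impostor attempts accepted; FRR: genuine attempts rejected (accept if d <= t)."""
    far = sum(d <= threshold for d in impostor) / len(impostor)
    frr = sum(d > threshold for d in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate EER: mean of FAR and FRR at the threshold where they are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        if best is None or abs(far - frr) < best[0]:
            best = (abs(far - frr), (far + frr) / 2)
    return best[1]
```

The result is a fraction; multiplying it by 100 gives the percentage form used in Table 1.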

The proposed method is compared against other representative palm print recognition techniques, namely PCA (G. Lu et al., 2003), Competitive Code (W. K. Kong and D. Zhang, 2004) and Ordinal Code (Z. Sun et al., 2005). To differentiate our method from the others, we hereafter name it directional gradient based local binary pattern (DGLBP). Figure 13 compares the four techniques. The results show that the performance of DGLBP is comparable to that of Competitive Code and Ordinal Code. Apart from this promising result, DGLBP has a big advantage over the other methods because of its computational simplicity. The LBP operator requires a time complexity of only $O(2^n)$, where n is the number of neighbouring pixels, to generate the labels once. Depending on the number of sub-regions formed in an image, the time complexity of producing the LBP descriptor is $O(mhw)$, where m denotes the number of sub-regions, and h and w refer to the height and width of a sub-region, respectively. The complexity of the algorithm can be reduced to $O(mn)$ if h and w are small.

Figure 13: ROC curves comparing the performance of the four palm print representation methods.

A comparative study was also conducted between the Chi-square measure and the modified PNN to investigate how much the modified PNN improves the performance of the system. The comparison is provided in Table 1. Three training samples were used to train the modified PNN.


Table 1: The EER and the execution time taken for verification of each user.

| Matching method | EER (%) | Average time (sec.) |
|---|---|---|
| Modified PNN | 0.74 | 0.73 |
| Chi-square measure | 1.52 | 0.22 |

The modified PNN demonstrated superior performance compared with the Chi-square measure, as the PNN possesses a better generalization property. However, its fast training comes at the cost of increased complexity and higher computational and memory requirements. The time complexity of training the PNN is O(mp), where m denotes the input vector dimension and p is the number of training samples. The times recorded in Table 1 are those taken by the PNN and the Chi-square measure to run the verification test using 20 palm print samples. The PNN indeed took longer than the Chi-square measure. However, the gain in performance is significant: the EER is reduced from 1.52% to 0.74%. Therefore, the PNN is still favoured over the Chi-square measure in this research.

5 CONCLUSIONS

This paper presents an innovative touch-less palm print recognition system, which offers advantages such as flexibility and user-friendliness. We proposed a novel palm print tracking algorithm to automatically detect and locate the ROI of the palm. The proposed algorithm works well in dynamic environments with cluttered backgrounds and varying illumination. A new feature extraction method has also been introduced to extract palm print features effectively. In addition, we applied a modified PNN, tailored to the requirements of the online recognition system, for palm print matching. Extensive experiments have been conducted to evaluate the performance of the system, and the results show that the proposed system produces promising results. Another valuable advantage is that the proposed system is fast enough for real-time applications: it takes less than 3 seconds to capture, process and verify a palm print image in a database containing 12,800 images.

REFERENCES

C. Harold and M. Charles, “Finger Prints, Palms and Soles: An Introduction to Dermatoglyphics,” The Blakiston Company, Philadelphia, 1943.

G. Lu, D. Zhang and K. Wang, “Palmprint Recognition Using Eigenpalms Features,” Pattern Recognition Letters, vol. 24, no. 9-10, pp. 1473-1477, 2003.

X. Wu, D. Zhang and K. Wang, “Fisherpalms Based Palmprint Recognition,” Pattern Recognition Letters, vol. 24, pp. 2829-2838, 2003.

D. Zhang, W. Kong, J. You and M. Wong, “On-line Palmprint Identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 9, pp. 1041-1050, 2003.

W. K. Kong and D. Zhang, “Competitive Coding Scheme for Palm print Verification,” Proceedings of the 17th International Conference on Pattern Recognition (ICPR’04), 2004.

Z. Sun, T. Tan, Y. Wang and S. Z. Li, “Ordinal Palmprint Representation for Personal Identification,” CVPR, pp. 279-284, 2005.

J. Funada, N. Ohta, M. Mizoguchi, T. Temma, K. Nakanishi, A. Murai, T. Sugiushi, T. Wakabayashi and Y. Yamada, “Feature Extraction Method for Palmprint Considering Elimination of Creases,” Proceedings of the 14th International Conference on Pattern Recognition, vol. 2, pp. 1849-1854, 1998.

D. Zhang and W. Shu, “Two Novel Characteristics in Palmprint Verification: Datum Point Invariance and Line Feature Matching,” Pattern Recognition, vol. 32, pp. 691-702, 1999.

Face Detection, http://www-cs-students.stanford.edu/~robles/ee368/main.html, 2000.

T. Ojala, M. Pietikäinen and T. Mäenpää, “Multiresolution Gray-scale and Rotation Invariant Texture Classification With Local Binary Patterns,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 24, no. 7, pp. 971-987, 2002.

T. Connie, T. Andrew and K. O. Goh, “An Automated Palmprint Recognition System,” Image and Vision Computing, vol. 23, no. 5, pp. 501-515, 2005.

T. Andrew, T. Connie and N. David, “Remarks on BioHash and Its Mathematical Foundation,” Information Processing Letters, vol. 100, no. 4, pp. 145-150, 2006.
