INTELLWHEELS

A Development Platform for Intelligent Wheelchairs for Disabled People

Rodrigo A. M. Braga (1,2), Marcelo Petry (2), Antonio Paulo Moreira (2) and Luis Paulo Reis (1,2)

(1) Artificial Intelligence and Computer Science Lab-LIACC

(2) Faculty of Engineering of University of Porto, Rua Dr. Roberto Frias, s/n 4200-465, Porto, Portugal

Keywords: Intelligent Wheelchair, Intelligent Robotics, Human-Robot Interfaces.

Abstract: Many people with disabilities find it difficult or even impossible to use traditional powered wheelchairs independently by manually controlling the devices. Intelligent wheelchairs are a very good solution to assist severely handicapped people who are unable to operate a classical electric wheelchair by themselves in their daily activities. This paper describes a development platform for intelligent wheelchairs called IntellWheels. The intelligent system developed can be added to commercial powered wheelchairs in a very straightforward manner, with minimal modifications. The paper describes the concept and design of the platform and also the intelligent wheelchair prototype developed to validate the approach. Preliminary results concerning automatic movement of the IntellWheels prototype are also presented.

1 INTRODUCTION

Wheelchairs are important locomotion devices for handicapped and elderly people. With the increase in the number of senior citizens and the growing participation of people with physical disabilities in social activities, there is a growing demand for safer and more comfortable Intelligent Wheelchairs (IW) for practical applications. The main functions of IWs are (Jia, 2005) (Faria, 2007a) (Faria, 2007b):

• Interaction with the user, including hand-based control (such as joystick, keyboard, mouse and touch screen), voice-based control, vision-based control and other sensor-based control (such as pressure sensors);

• Autonomous navigation (with safety, flexibility and robust obstacle avoidance);

• Communication with other devices (such as automatic doors and other wheelchairs).

This paper discusses the concept and design of a development platform for intelligent wheelchairs. The project, called IntellWheels, is composed of control software, a simulator/supervisor and a real prototype of the intelligent wheelchair. In this study, shared control and high-level planning algorithms for an IW operating in a hospital environment were developed and tested. The shared control algorithm allows the IW to automatically avoid dangerous situations. In addition, typical algorithms used in most intelligent robotics applications were applied to the control of the IW and simulated in a hospital scenario. Integrated with the control, a motion planner was developed that is capable of generating behavior/path commands according to a map of the world created a priori. This motion planner is capable of instructing the low-level motion controller module to achieve the high-level commands desired by the user (Luo, 1999).
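The shared control idea can be illustrated with a minimal sketch. It is not the IntellWheels algorithm, which the paper does not detail: the user's forward speed is kept when the front sonars see no nearby obstacle, reduced linearly as the nearest reading approaches a stop distance, and blocked below it. The `shared_control` function, the `Command` type and the 0.3 m / 1.0 m thresholds are hypothetical.

```python
# Hypothetical shared-control safety filter (not the IntellWheels algorithm).
# Forward speed is attenuated as the nearest front obstacle gets closer;
# turning and reversing are left to the user so they can steer away.

from dataclasses import dataclass


@dataclass
class Command:
    linear: float   # forward speed, m/s (negative = reverse)
    angular: float  # turn rate, rad/s


def shared_control(user_cmd: Command, front_ranges_m: list[float],
                   stop_dist: float = 0.3, slow_dist: float = 1.0) -> Command:
    """Return a safer version of the user's command.

    front_ranges_m must hold at least one reading (metres) from the
    forward-facing sonars; the thresholds are illustrative assumptions.
    """
    nearest = min(front_ranges_m)
    if nearest <= stop_dist:
        scale = 0.0  # too close: block forward motion
    elif nearest >= slow_dist:
        scale = 1.0  # far enough: pass the user's command through
    else:
        # Linear ramp between the stop and slow-down distances.
        scale = (nearest - stop_dist) / (slow_dist - stop_dist)
    linear = user_cmd.linear * scale if user_cmd.linear > 0 else user_cmd.linear
    return Command(linear, user_cmd.angular)
```

Leaving rotation and reversing untouched is one design choice that keeps the user in charge while still preventing a frontal collision.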

The rest of the paper is organized as follows: Section 2 presents related work; Section 3 explains the hardware design of our development platform; Sections 4 and 5 contain a complete description of the software design and the control system; Section 6 presents the experimental tests and discusses the results; and Section 7 presents the final conclusions and points out some future research topics.

2 RELATED WORK

This section presents a brief overview of the state of the art in Intelligent Wheelchairs.

In recent years, many intelligent wheelchairs have been developed (Simpson, 2005). In 2006 alone, more than 30 IEEE publications about IWs can be found.

A. M. Braga R., Petry M., Paulo Moreira A. and Paulo Reis L. (2008).

INTELLWHEELS - A Development Platform for Intelligent Wheelchairs for Disabled People.

In Proceedings of the Fifth International Conference on Informatics in Control, Automation and Robotics - RA, pages 115-121

DOI: 10.5220/0001501701150121

Copyright © SciTePress

One of the first concept projects of an autonomous wheelchair for physically disabled people was proposed by Madarasz (Madarasz, 1986). He presented a wheelchair equipped with a microcomputer, a digital camera and an ultrasonic scanner. His objective was to develop a vehicle capable of operating without human intervention in populated environments, with few or no collisions with the objects or people in them.

Hoyer and Hölper (Hoyer, 1993) presented a modular control architecture for an omnidirectional wheelchair. NavChair is described in (Simpson, 1998), (Bell, 1994), (Levine, 1997) and has some interesting characteristics, such as wall following, automatic obstacle avoidance and doorway passing capabilities.

Miller and Slack (Miller, 1995) (Miller, 1998) designed the Tin Man I system, initially with three modes of operation: human guided with obstacle avoidance, move forward along a heading, and move to a point (x, y). The Tin Man I project later evolved into Tin Man II, which added new capabilities such as backup, backtracking, wall following, doorway passing and docking.

Wellman (Wellman, 1994) proposed a hybrid wheelchair equipped with two legs in addition to its four wheels, which enable the wheelchair to climb over steps and move through rough terrain.

Some projects presented solutions for people with tetraplegia, using the recognition of facial expressions as the main input to guide the wheelchair (Jia, 2006), (Pei, 2001), (Adachi, 1998).

Another control method relies on the user's “thoughts”. This technology typically uses sensors that measure the electrical activity of the brain (Lakany, 2005) (Rebsamen, 2007).

ACCoMo (Hamagami, 2004) is a prototype of an IW that allows physically disabled people to move safely in indoor environments. ACCoMo is an agent-based prototype with simple autonomous, cooperative and collaborative behaviors.

Although several prototypes have been developed and different approaches have been proposed for IWs, there is currently no proposal for a platform that enables the easy development of Intelligent Wheelchairs from common electric powered wheelchairs with minor modifications.

3 HARDWARE DESIGN

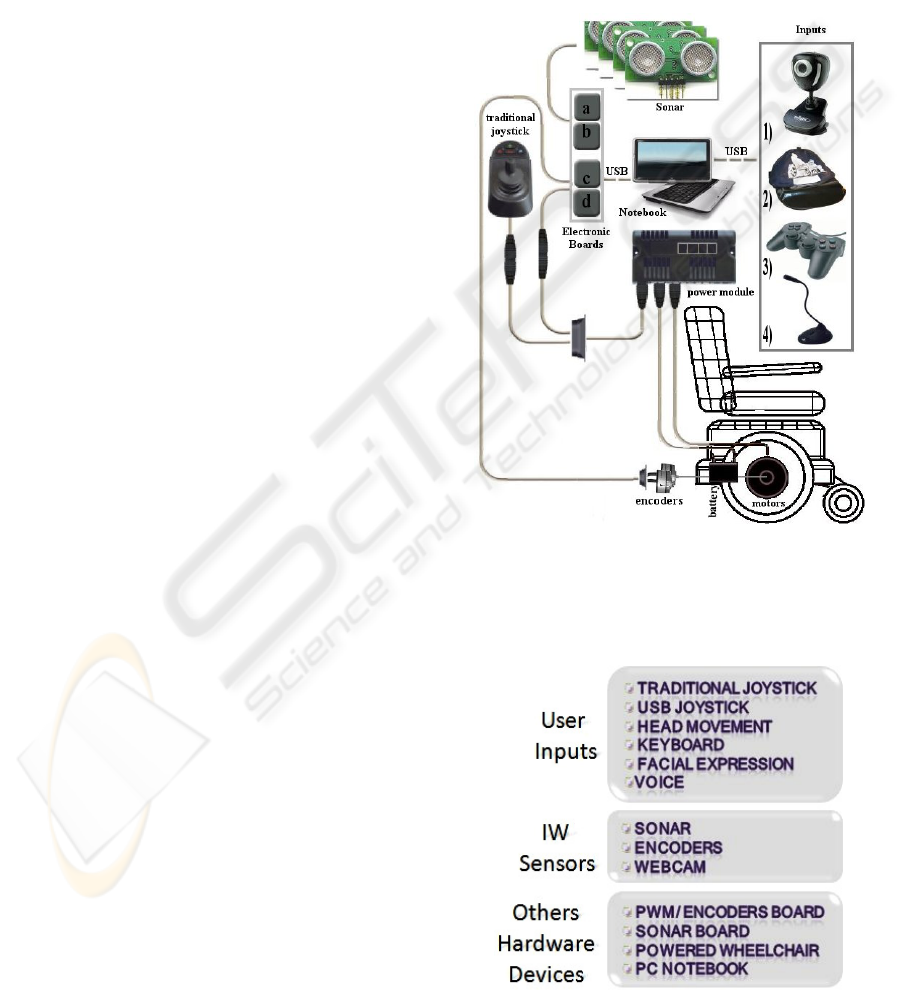

The hardware architecture of the IntellWheels prototype is shown in Figure 1. IntellWheels_chair1 is based on a commercial electric wheelchair, the Powertec model manufactured by Sunrise in England (Sunrise, 2007). The Powertec wheelchair has the following features: two differentially driven rear wheels; two passive front castors; two 12 V batteries (45 Ah); a traditional joystick; and a power module.

Figure 1: Hardware Architecture of IntellWheels.

The IntellWheels hardware is divided into three functional blocks: user inputs, IW sensors and hardware devices. These blocks are depicted in Fig. 2.

Figure 2: Hardware Functional Blocks.

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics


3.1 User Inputs

To enable people with different kinds of disabilities to drive the intelligent wheelchair, this project incorporates several types of user input. The idea is to give patients options and let them choose the control that is most comfortable and safe for them. In addition, multiple inputs make it possible to integrate IntellWheels with an intelligent input decision control, which is responsible for cancelling inputs when it perceives conflicts, noise or danger. For example, in a noisy environment, voice recognition would be given a low weight or even cancelled. The implemented inputs range from traditional joysticks to head movement control, and are explained below:

• Traditional Joysticks. Present in ordinary wheelchairs, these inputs are a robust way to drive a wheelchair. However, they may not be accessible to tetraplegic people or people with cerebral palsy. They are included in the prototype because of their simplicity;

• USB Joystick. USB joysticks are somewhat more sophisticated than traditional joysticks. This game joystick has many configurable buttons that make navigation easier;

• Head Movement. This input device is mounted on a cap, making it possible to drive the wheelchair with head movements alone;

• Keyboard. This device allows the wheelchair to be controlled just by pressing keys on the keyboard;

• Facial Expressions. Using a simple webcam, present in most notebooks, this software recognizes simple facial expressions of the patient and uses them as inputs to execute commands ranging from basic ones (such as go forward, turn right and turn left) to high-level ones (such as go to nursery, go to bedroom);

• Voice. Using commercial voice recognition software, we developed the conditions and applications necessary to command the wheelchair using voice as an input.

The wide range of input options enables the prototype to be easily controlled by patients with different disabilities.
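The intelligent input decision control described at the start of this section could work roughly as follows. This is a hypothetical sketch: the `arbitrate` function, the per-input confidence values, the normalised noise estimate and both thresholds are illustrative assumptions, not part of IntellWheels. Voice input is down-weighted as ambient noise rises and cancelled above a limit, low-confidence inputs are discarded, and conflicting commands cancel the decision instead of guessing.

```python
# Hypothetical input decision step: each input source proposes a command with
# a confidence in [0, 1]; sources are down-weighted or cancelled depending on
# context, and a conflict between surviving sources cancels the decision.

from typing import Optional


def arbitrate(proposals: dict[str, tuple[str, float]],
              noise_level: float,
              noise_limit: float = 0.7,
              min_confidence: float = 0.5) -> Optional[str]:
    """Return the accepted command, or None if inputs were cancelled or conflict.

    proposals maps an input name (e.g. "voice", "joystick") to (command, confidence).
    noise_level is an assumed normalised ambient-noise estimate in [0, 1].
    """
    accepted: dict[str, float] = {}
    for source, (command, confidence) in proposals.items():
        if source == "voice":
            if noise_level >= noise_limit:
                continue  # too noisy: cancel voice input entirely
            confidence *= 1.0 - noise_level  # otherwise down-weight it
        if confidence < min_confidence:
            continue  # unreliable input is ignored
        # Keep the highest confidence seen for each distinct command.
        accepted[command] = max(confidence, accepted.get(command, 0.0))
    if len(accepted) != 1:
        return None  # nothing reliable, or conflicting commands
    return next(iter(accepted))
```

Returning no command on conflict is a conservative choice; a danger check such as shared control would still act on whatever command is finally accepted.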

3.2 Sensors

The purpose of this project is to develop an intelligent wheelchair. The distinction between an IW and a robotic wheelchair is not just semantic: it means that we want to keep the wheelchair's appearance, reducing the visual impact of the sensors mounted on the device while, at the same time, extending its regular functionality. This requirement limits the number and type of sensors we can use, due to size, appearance and assembly constraints. To build the intelligent wheelchair, ten sonar sensors were mounted (providing the ability to avoid obstacles, follow walls and perceive unevenness in the ground), two encoders were assembled on the wheels (providing the means to measure distance, speed and position) and a webcam was mounted pointing at the ground (able to read ground marks and refine the odometry).
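How the two wheel encoders yield distance, speed and position can be shown with standard differential-drive odometry. This is a minimal sketch, not the IntellWheels code: the encoder resolution, wheel radius and wheel separation are hypothetical placeholders rather than the calibration of IntellWheels_chair1. Speed follows by dividing the distance travelled in one step by the sampling period.

```python
# Minimal differential-drive odometry sketch. The constants below are
# hypothetical placeholders, not the IntellWheels_chair1 calibration values.

import math
from dataclasses import dataclass

TICKS_PER_REV = 2000   # hypothetical encoder resolution (ticks per wheel turn)
WHEEL_RADIUS_M = 0.17  # hypothetical rear-wheel radius
WHEEL_BASE_M = 0.56    # hypothetical distance between the rear wheels


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x axis


def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Integrate one encoder sample (tick increments since the last call)."""
    per_tick = 2.0 * math.pi * WHEEL_RADIUS_M / TICKS_PER_REV
    d_left = left_ticks * per_tick
    d_right = right_ticks * per_tick
    d_center = (d_left + d_right) / 2.0          # distance travelled by the axle centre
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading
    # Midpoint integration: use the heading halfway through the step.
    mid = pose.theta + d_theta / 2.0
    return Pose(pose.x + d_center * math.cos(mid),
                pose.y + d_center * math.sin(mid),
                pose.theta + d_theta)
```

Because each step is added to the previous pose, any systematic error in the assumed wheel radius or separation accumulates with distance, which is why ground marks read by the webcam are useful for refining the estimate.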

3.3 Hardware Devices

The hardware devices block consists of (Fig. 3):

• Two sonar boards (electronic boards ‘a’ and ‘b’, illustrated in Figure 1 and Figure 2), which receive the readings of the ten sonars and send them to the PC;

• Two PWM/Encoders boards (electronic boards ‘c’ and ‘d’), which perform speed control and also send the wheel displacement information to the PC to enable odometry;

• A commercial electric wheelchair and a commercial notebook.

The core of the IntellWheels prototype is a notebook PC (HP Pavilion tx1270EP, AMD Turion 64 X2 TL-60), although other notebooks could be used without any loss of capability.

Figure 3: Devices installed in IW.

4 SOFTWARE DESIGN

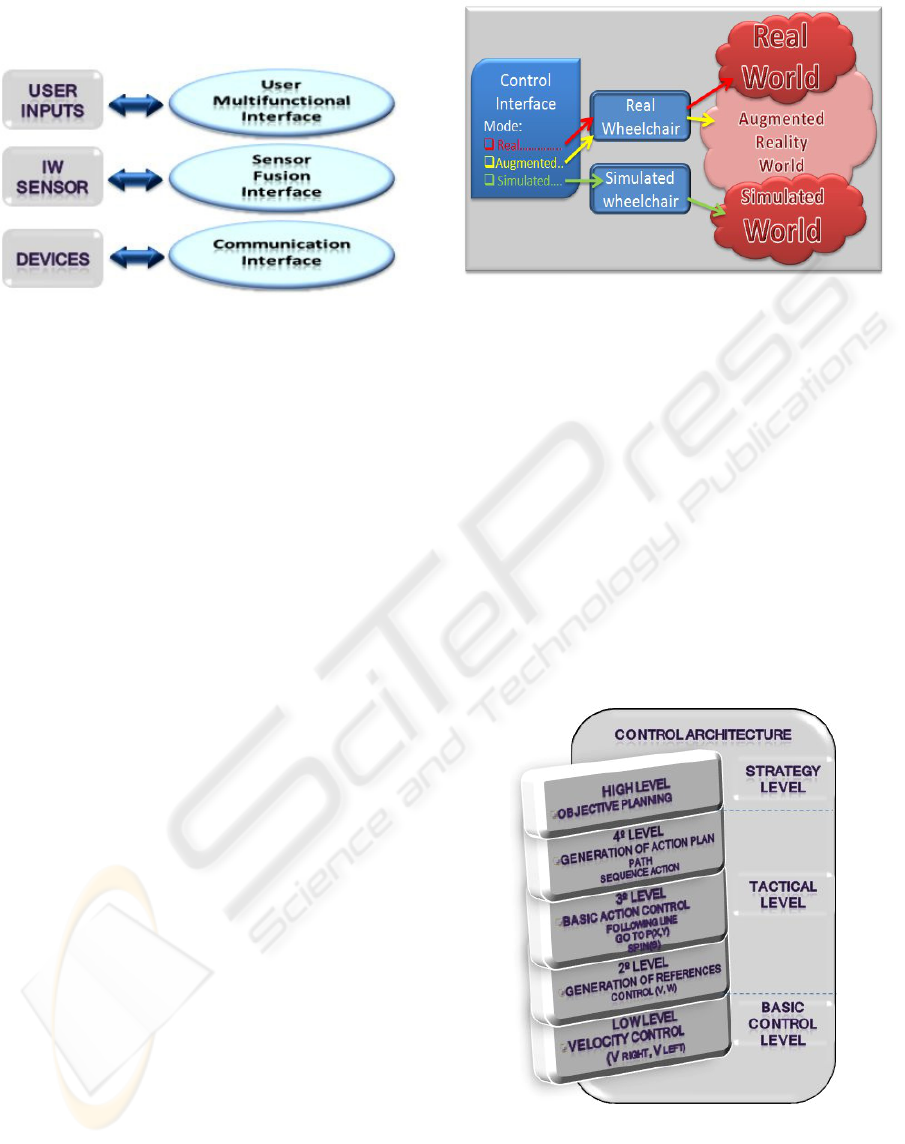

The software in this project is a modular system in which each group of tasks interacts with the main application. Figure 4 shows the specific functions and the software responsible for converting hardware data for use at the control level.

Figure 4: Intelligent Wheelchair Software.

The main application gathers all information from the system, communicates with the Control module and sets the appropriate output. Its other functions depend on the selected control interface mode:

• Real. If this option is selected, all data comes from the real world. The main application collects real information from the sensors through the Sensor User Interface, calculates the output through the Control module and sends these parameters to the PWM Boards;

• Simulated. In this mode, the system works only with virtual information and is used for two purposes: to reproduce the behavior of a real wheelchair and to test control routines. The main application collects virtual information directly from the simulator, calculates the output through the Control module and sends these parameters back to the simulator;

• Augmented Reality. This mode creates an interaction between real and virtual objects, for example changing a real wheelchair's path to avoid a collision with a virtual wall (or a virtual wheelchair). The objective of augmented reality is to test the interaction between wheelchairs and the environment while reducing costs, since most of the agents can be simulated. The main application collects real information from the sensors through the Sensor User Interface, mixes it with virtual information collected from the simulator, calculates the output through the Control module and sends the parameters to both the simulator and the PWM Boards.
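The three control interface modes differ mainly in where the data comes from and where the output goes. The sketch below, with hypothetical names (`Mode`, `read_sonars`, `output_targets`), encodes the sources and sinks listed above. The augmented reality fusion rule, which keeps the nearer of the real and virtual readings for each sonar, is an assumption about how a virtual wall could be made to affect a real wheelchair.

```python
# Hypothetical sketch of per-mode data sources and output sinks.
# In augmented reality mode a virtual obstacle is "seen" like a real one
# by keeping, for each sonar, the nearer of the real and virtual ranges.

from enum import Enum


class Mode(Enum):
    REAL = "real"
    SIMULATED = "simulated"
    AUGMENTED = "augmented"


def read_sonars(mode: Mode, real: list[float], virtual: list[float]) -> list[float]:
    """Return the sonar ranges (metres) the Control module should use."""
    if mode is Mode.REAL:
        return list(real)
    if mode is Mode.SIMULATED:
        return list(virtual)
    # Augmented reality: one reading per sonar, nearest obstacle wins.
    if len(real) != len(virtual):
        raise ValueError("real and virtual sonar arrays must have the same length")
    return [min(r, v) for r, v in zip(real, virtual)]


def output_targets(mode: Mode) -> set[str]:
    """Where the computed wheel commands are sent in each mode."""
    return {
        Mode.REAL: {"pwm_boards"},
        Mode.SIMULATED: {"simulator"},
        Mode.AUGMENTED: {"simulator", "pwm_boards"},
    }[mode]
```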

Because it can interact with real, simulated and augmented reality worlds, we call this whole system the IW Platform (Figure 5). In other words, the Platform is the fusion of the Simulator, Software modules, Real Wheelchair and Hardware Devices, used to test, preview, understand and simulate the behaviour of Intelligent Wheelchairs.

Figure 5: Intelligent Wheelchair Platform Architecture.

5 CONTROL

IntellWheels has a multi-level control architecture subdivided into three levels: a basic control level, a tactical level and a strategic level, as shown in Fig. 6. As the focus is primarily on testing the Platform, classic algorithms were chosen to validate the system; other issues, such as an analysis of their limitations and performance, are left outside the scope of this paper. The high-level strategic planner is responsible for creating the sequence of high-level actions needed to achieve the global goal. Currently, the algorithm implemented for this task is based on the STRIPS planning algorithm (Fikes, 1971).

Figure 6: Control architecture.

In the Generation of Action Plans, the system is instructed to generate a sequence of basic actions aimed at satisfying the previously proposed objectives.
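STRIPS describes each action by its preconditions, an add list and a delete list over a set of facts. The following simplified sketch uses breadth-first forward search rather than the means-ends analysis of the original STRIPS (Fikes, 1971); the hospital facts, the `open(d1)` door action and all function names are hypothetical.

```python
# Simplified STRIPS-style planner: states are sets of facts; each action has
# preconditions, an add list and a delete list. Breadth-first forward search
# is used instead of the original STRIPS means-ends analysis.
# The hospital facts and actions below are hypothetical examples.

from collections import deque
from typing import NamedTuple, Optional


class Action(NamedTuple):
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset


def plan(start: frozenset, goal: frozenset, actions: list[Action]) -> Optional[list[str]]:
    """Return the shortest list of action names reaching a goal state, or None."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for a in actions:
            if a.pre <= state:
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [a.name]))
    return None


def move(a: str, b: str, door: Optional[str] = None) -> Action:
    """Move between adjacent places; optionally requires a door to be open."""
    pre = {f"at({a})"}
    if door is not None:
        pre.add(f"open({door})")
    return Action(f"move({a},{b})", frozenset(pre),
                  frozenset({f"at({b})"}), frozenset({f"at({a})"}))


ACTIONS = [
    move("bedroom", "corridor"), move("corridor", "bedroom"),
    move("corridor", "nursery", door="d1"), move("nursery", "corridor", door="d1"),
    # Hypothetical: from the corridor the wheelchair can ask an automatic door to open.
    Action("open(d1)", frozenset({"at(corridor)"}), frozenset({"open(d1)"}), frozenset()),
]
```

For a goal such as "go to nursery" starting in the bedroom, this yields the action sequence move to the corridor, open the door, move to the nursery.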


To find a path from a given initial node to a given goal node, the system implements a simple A* algorithm (Shapiro, 2000). Once the path is calculated, it is subdivided into basic forms (lines, circles, points) that are then followed by the wheelchair.
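A minimal A* sketch on a 4-connected occupancy grid follows; the grid stands in for the a-priori map, an assumption since the paper does not state the map representation. The second function shows one simple way, also assumed here, to turn the resulting cell path into straight-line basic forms by keeping only the cells where the direction changes.

```python
# Minimal A* on a 4-connected occupancy grid (0 = free, 1 = obstacle) with a
# Manhattan-distance heuristic, plus compression of the cell path into
# straight-line segments. The grid map is a hypothetical stand-in.

import heapq


def astar(grid: list[list[int]], start: tuple[int, int], goal: tuple[int, int]):
    """Return the list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


def to_segments(path):
    """Keep only the cells where the direction changes: the corners of the line segments."""
    if len(path) < 3:
        return list(path)
    corners = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        if (cur[0] - prev[0], cur[1] - prev[1]) != (nxt[0] - cur[0], nxt[1] - cur[1]):
            corners.append(cur)
    corners.append(path[-1])
    return corners
```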

Each basic form represents a basic action to be executed at the proper time by the Basic Action Control module. The system then executes the Generation of References, which is responsible for computing the reference linear and angular speeds of the wheelchair, putting it into motion.
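For the basic forms, the reference speeds follow from simple kinematics, assuming a unicycle model of the differential drive: a line has zero angular speed, an arc of radius R has ω = v/R, and a "point" is read here as a rotation in place (an interpretation, not stated in the paper). The pair (v, ω) is then split into rear-wheel speeds v_right = v + ωb/2 and v_left = v - ωb/2, where b is the wheel separation. The value of b and all function names are hypothetical.

```python
# Hypothetical reference generation for three basic forms, followed by the
# split of (v, w) into left/right wheel speeds for a differential drive.

from dataclasses import dataclass

WHEEL_BASE_M = 0.56  # hypothetical wheel separation b


@dataclass
class Reference:
    linear: float   # v, m/s
    angular: float  # w, rad/s (positive = counter-clockwise)


def line_reference(cruise_speed: float) -> Reference:
    """Straight line: constant speed, no rotation."""
    return Reference(cruise_speed, 0.0)


def arc_reference(cruise_speed: float, radius: float, turn_left: bool = True) -> Reference:
    """Circular arc of the given radius: w = v / R."""
    if radius <= 0:
        raise ValueError("arc radius must be positive")
    w = cruise_speed / radius
    return Reference(cruise_speed, w if turn_left else -w)


def point_reference(angular_speed: float) -> Reference:
    """Rotation in place (our reading of the 'point' basic form)."""
    return Reference(0.0, angular_speed)


def wheel_speeds(ref: Reference, wheel_base: float = WHEEL_BASE_M) -> tuple[float, float]:
    """Return (left, right) wheel linear speeds in m/s for the given reference."""
    half = ref.angular * wheel_base / 2.0
    return ref.linear - half, ref.linear + half
```

These are feed-forward references only; a real path follower would also correct the deviations measured by the odometry.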

The lowest level of control is a digital PID controller implemented in the PWM/Encoders boards. References from the Basic Action level are transferred to the boards over serial communication and then compared with real-time data to control the speed.
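A digital PID of the kind described can be sketched as follows. The gains, sampling period, output limits and the conditional-integration anti-windup scheme are assumptions; the boards' firmware is not described in the paper and would run on the boards' own hardware rather than in Python.

```python
# Minimal discrete (digital) PID speed controller sketch, called once per
# sampling period. Gains, period and PWM limits are hypothetical.


class DigitalPID:
    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 out_min: float = -1.0, out_max: float = 1.0):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, reference: float, measured: float) -> float:
        """Return the clamped PWM duty command for one sampling period."""
        error = reference - measured
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        # Anti-windup: only accept the new integral while the output is not saturated.
        if self.out_min < u < self.out_max:
            self.integral = candidate
        return max(self.out_min, min(self.out_max, u))
```

Here the reference is the wheel speed received over the serial link and the measurement is the speed derived from the encoder counts.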

6 EXPERIMENTS AND RESULTS

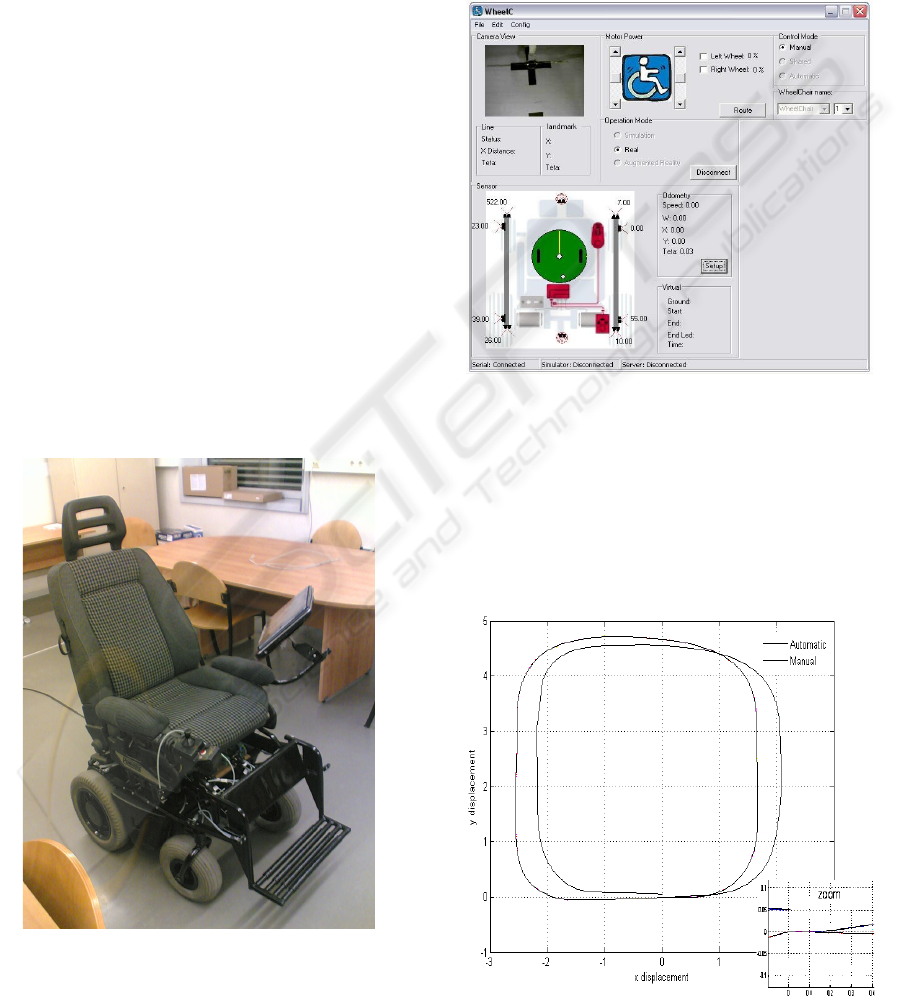

This section presents the prototype implementation, a simple experiment and the results achieved. Figure 7 shows the mechanical structure and the hardware implementation. As can be seen, the prototype is a commercial electric powered wheelchair with minimal modifications.

Figure 7: IntellWheels_Chair1 Prototype.

The user interface software, shown in Figure 8, was designed to be as user-friendly as possible. Its main window contains a webcam view, which is used to recognize landmarks, together with the resulting localization. A schematic figure with the positions of the sonars mounted on the wheelchair shows the distance to nearby objects at a glance. Beside it, a panel displays the information provided by the odometry. The application also displays a scrollbar indicating the speed of each wheel, as well as buttons to choose the operation mode.

Figure 8: Interface Control of IntellWheels.

Some basic tests were performed to validate the odometry; they consisted of moving the wheelchair around a rectangular path, starting and stopping at the same point. Figure 9 presents the results of an automatic test (red line) and a manual test (blue line). In the manual test, the user had full control of the wheelchair.

Figure 9: Path Followed by Wheelchair in the Tests.


In automatic mode, the final point of the wheelchair's path was slightly displaced from its start point (a physical mark on the ground). Because this error does not appear in the odometry graph (the wheelchair stopped where the odometry estimated the start point to be), a manual test was executed to evaluate it. In this test, the user drove the wheelchair along the same path, but this time stopped exactly on the physical mark on the ground. The odometry graph of this test then showed the error.

This error is acceptable, since it is only 5 cm over a total displacement of 1500 cm, and it can be explained by the accumulation (integration) of the systematic odometry error.
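As a quick check of the figures quoted above, the closure error relative to the distance travelled is about a third of a percent, or roughly 3.3 mm per metre:

```latex
\varepsilon_{\mathrm{rel}} = \frac{5\ \mathrm{cm}}{1500\ \mathrm{cm}} \approx 3.3 \times 10^{-3} = 0.33\,\%
```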

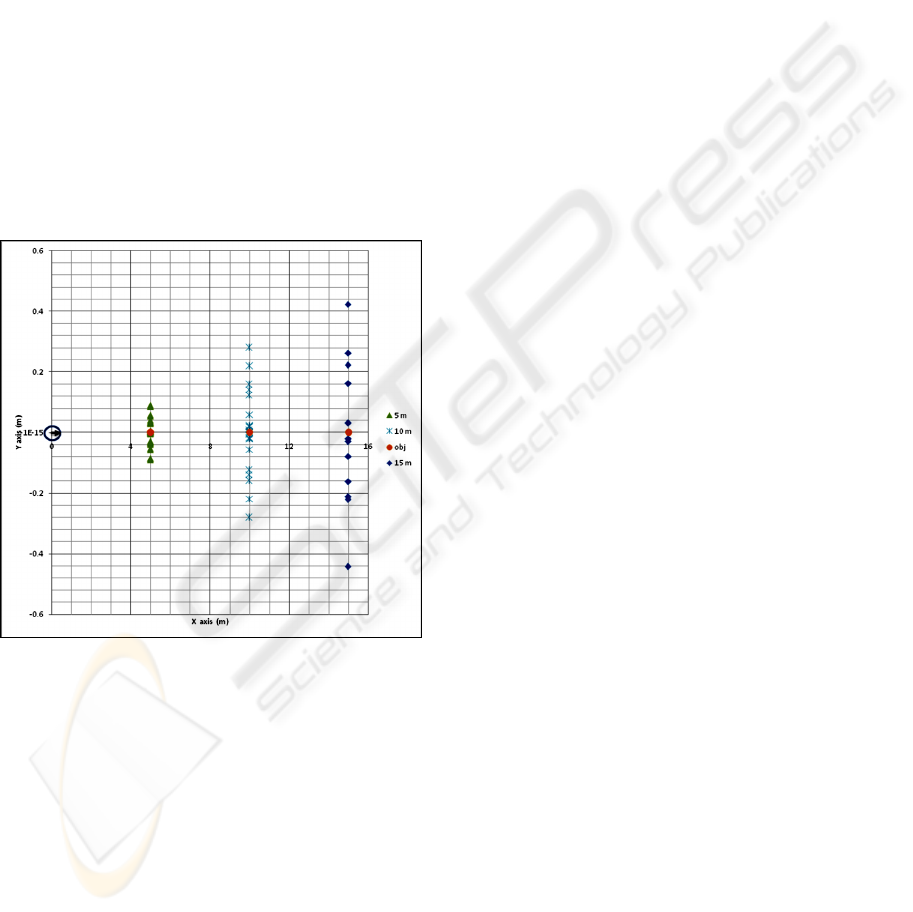

Figure 10 shows the results of distinct straight-line displacement tests. The objective was to evaluate the odometry dispersion error for different distances. Displacements of 5, 10 and 15 meters were tested, with approximately 20 samples for each path.

Figure 10: Odometry dispersion error for 5 m, 10 m and 15 m of displacement in a straight line.
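The dispersion for each distance could be summarised as the mean and sample standard deviation of the final error over the repeated runs. This sketch assumes the errors are available as signed values in metres grouped by commanded distance; the input format and the `dispersion` function are hypothetical, and no measured values from the paper are used.

```python
# Sketch: summarise odometry dispersion per commanded straight-line distance
# as (mean error, sample standard deviation) over repeated runs.
# The input format is an assumption.

import statistics


def dispersion(errors_by_distance: dict[float, list[float]]) -> dict[float, tuple[float, float]]:
    """Map each distance (m) to (mean error, sample std dev) of its runs' errors."""
    summary = {}
    for distance, errors in sorted(errors_by_distance.items()):
        if len(errors) < 2:
            raise ValueError(f"need at least two runs for {distance} m")
        summary[distance] = (statistics.mean(errors), statistics.stdev(errors))
    return summary
```

The sample (n - 1) standard deviation is used because each set of about 20 runs is a sample of the wheelchair's possible outcomes.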

7 DISCUSSION AND CONCLUSIONS

This paper presented the design and implementation

of a development platform for Intelligent

Wheelchairs called IntellWheels.

This platform facilitates the development and testing of new methodologies and techniques concerning Intelligent Wheelchairs. We believe that these new techniques can give wheelchairs real capabilities for intelligent action planning, autonomous navigation, and semi-autonomous execution of the user's wishes, expressed in a high-level command language.

Future research will aim at testing and validating the other sensors mounted on the wheelchair. Moreover, as in many other systems, cooperative and collaborative behaviours are desirable in the IW and need to be incorporated into the Platform. Another important improvement to be pursued is a comparative study of the classic algorithms implemented and new proposals to solve these issues.

The platform will allow real and virtual IWs to interact with each other. These interactions make high-complexity tests possible with a substantial number of devices and wheelchairs, reducing project costs, since there is no need to build a large number of real IWs.

ACKNOWLEDGEMENTS

The first author would like to thank CAPES for funding his doctoral studies.

REFERENCES

Adachi, Y., Kuno, Y., Shimada, N., Shirai, Y., 1998. Intelligent wheelchair using visual information on human faces. International Conference on Intelligent Robots and Systems. Oct. Vol. 1. pp. 354-359.

Bell, D.A., Borenstein, J., Levine, S.P., Koren, Y., and

Jaros, L., 1994. An assistive navigation system for

wheelchairs based upon mobile robot obstacle

avoidance. IEEE Conf. on Robotics and Automation.

Faria, P. M., Braga, R. A. M., Valgôde, E., Reis, L. P., 2007a. Platform to Drive an Intelligent Wheelchair using Facial Expressions. Proc. 9th International Conference on Enterprise Information Systems - Human-Computer Interaction (ICEIS 2007). pp. 164-169. Funchal, Madeira, Portugal. June 12–16. ISBN: 978-972-8865-92-4

Faria, P. M., Braga, R. A. M., Valgôde, E., Reis, L. P., 2007b. Interface Framework to Drive an Intelligent Wheelchair Using Facial Expressions. IEEE International Symposium on Industrial Electronics. 4-7 June 2007, pp. 1791-1796, ISBN: 1-4244-0755-9.

Fikes, R., Nilsson, N. J., 1971. STRIPS: A New Approach to the Application of Theorem Proving to Problem Solving. IJCAI 1971. pp. 608-620.

Hamagami, T., Hirata, H., 2004. Development of intelligent wheelchair acquiring autonomous, cooperative, and collaborative behavior. IEEE International Conference on Systems, Man and Cybernetics. Oct. Vol. 4. pp. 3525-3530.

Hoyer, H. and Hölper, R., 1993. Open control architecture for an intelligent omnidirectional wheelchair. Proc. 1st TIDE Congress, Brussels. pp. 93-97.

Jia, P. and Hu, H., 2005. Head Gesture based Control of an Intelligent Wheelchair. CACSUK - 11th Annual Conference of the Chinese Automation and Computing Society in the UK, Sheffield, UK, Sept. 10.

Jia, P., Hu, H., Lu, T. and Yuan, K., 2006. Head Gesture

Recognition for Hands-free Control of an Intelligent

Wheelchair. Journal of Industrial Robot.

Lakany, H., 2005. Steering a wheelchair by thought. The IEEE International Workshop on Intelligent Environments. June. pp. 199-202.

Levine, S.P., Bell, D.A., Jaros, L.A., Simpson, R.C.,

Koren, Y., Borenstein, J., 1997. The NavChair

assistive wheelchair navigation system. IEEE

Transactions on Rehabilitation Engineering pp. 443-451.

Luo, R. C., Chen, T. M. and Lin, M. H., 1999. Automatic Guided Intelligent Wheelchair System Using Hierarchical Grey-Fuzzy Motion Decision-Making Algorithms. Proc. of IEEE/RSJ'99 - International Conference on Intelligent Robots and Systems.

Madarasz, R. L., Heiny, L. C., Cromp, R. F. and Mazur, N. M., 1986. The design of an autonomous vehicle for the disabled. IEEE J. Robotics and Automat. September, pp. 117-126.

Miller, D. and Slack, M., 1995. Design and testing of a

low-cost robotic wheelchair prototype. Autonomous

Robots. Vol. 2, pp. 77-88.

Miller, D., 1998. Assistive Robotics: An Overview.

Assistive Technology and AI. pp. 126-136.

Pei Chi Ng, DeSilva, L. C., 2001. Head gestures recognition. Proceedings International Conference on Image Processing. Oct. Vol. 3. pp. 266-269. ISBN: 0-7803-6725-1.

Rebsamen, B., Burdet, E., Guan, C., Zhang, H., Teo, C. L., Zeng, Q., Laugier, C., Ang Jr., M. H., 2007. Controlling a Wheelchair Indoors Using Thought. IEEE Intelligent Systems and Their Applications. Vol. 22, pp. 18-24.

Shapiro S.C., 2000. Encyclopedia of Artificial

Intelligence. Wiley-Interscience. May.

Simpson, R. C., 2005. Smart wheelchairs: A literature

review. Journal of Rehabilitation Research &

Development. August. Vol. 42. pp. 423-436.

Simpson, R. et al. 1998. NavChair: An Assistive

Wheelchair Navigation System with Automatic

Adaptation. [ed.] Mittal et al. Assistive Technology

and AI. pp. 235-255.

Sunrise, 2007. Sunrise Medical manufacturers

www.sunrisemedical.co.uk

Wellman, P., Krovi, V. and Kumar, V., 1994. An adaptive

mobility system for the disabled. Proc. IEEE Int. Conf.

on Robotics and Automation.
