ASSESSING THE QUALITY OF ENTERPRISE SERVICES

A Model for Supporting Service Oriented Architecture Design

Matthew Dow¹, Pascal Ravesteijn²

¹ Accenture, Amsterdam, Gustav Mahlerplein 90, 1082 MA Amsterdam, The Netherlands

² University of Applied Science, Utrecht, Nijenoord 1, 3552 AS Utrecht, The Netherlands

Johan Versendaal

Department of Computing Science, Utrecht University, Padualaan 14, 3584 CH Utrecht, The Netherlands

Keywords: Service Oriented Architecture, SOA, web services, enterprise services, business services, design and

development methodologies.

Abstract: Enterprise Services have been proposed as a more business-friendly form of web services which can help

organizations bridge the gap between the IT capabilities and business benefits of Service Oriented

Architecture. However, until now there have been almost no methodologies for creating enterprise services, and

no lists of definite criteria which constitute a “good” enterprise service. In this paper we present a model

which can aid Service Oriented Architecture designers with this by giving them a set of researched criteria

that can be used to measure the quality of enterprise service definitions. The model and criteria have been

constructed by interviewing experts from one of the big five consultancy firms and by conducting a

literature study of software development lifecycle methods and Service Oriented Architecture

implementation strategies. The results have been evaluated using a quantitative survey and qualitative

expert interviews, which have produced empirical support for the importance of the model criteria to

enterprise service design. The importance of business ownership and focusing on business value of

enterprise services is stressed, leading to suggestions of future research that links this area more closely with

Service Oriented Architecture governance, Service Oriented Architecture change management, and

Business Process Management.

1 INTRODUCTION

Service Oriented Architecture (SOA) development

and deployment generally builds on a service view

of the world in which a set of services are assembled

and often reused in different ways, allowing

organizations to quickly adapt to changing business

needs (Cox and Kreger, 2005). This type of

architecture ideally allows IT systems to be

integrated and re-used in a standardized way

bringing benefits to businesses such as faster time to

market and lower development costs. SOA is not

just yet another change in your IT systems

architecture: it also requires that organizations

evaluate their business models, come up with

service-oriented analysis and design techniques,

deployment and support plans, and carefully

evaluate partner, customer, and supplier

relationships (Papazoglou and van den Heuvel,

2006). However, despite the perceived importance

of business involvement in SOA design,

organizations are easily tempted to approach SOA

from an IT perspective and to ignore the business

models and needs.

For the most part the problem is not that web

service technology can’t support more granular

business functionality. As early as 2003, IBM

researchers noted that web services were moving

from their initial “describe, publish, interact”

capability to a new phase in which robust business

interactions are supported (Curbera et al., 2003).

In the last few years this has led to a new

trend in the SOA market to create more granular

services called “enterprise services” (SAP, 2004;

Freemantle et al., 2002), “meta-services”


Dow M., Ravesteijn P. and Versendaal J. (2008).

ASSESSING THE QUALITY OF ENTERPRISE SERVICES - A Model for Supporting Service Oriented Architecture Design.

In Proceedings of the Tenth International Conference on Enterprise Information Systems - ISAS, pages 50-57

DOI: 10.5220/0001685100500057

Copyright © SciTePress

(Cherbakov et al., 2005; Crawford et al., 2005) or

even “business services” (Wang et al., 2005). In this

paper we promote the use of the term “enterprise

services”, because this term has the most value to

business users, whereas “meta” is not as clear to

most people and the term “business service” can be

confused with more traditional types of services not

related to SOA.

For clarity within this paper, we have defined an

enterprise service as:

“A special type of web service where the

operations form a functional piece (steps or tasks) of

a business process. Enterprise service operations

may be composed of more fine-grained web services

which provide business-agnostic functionality, such

as basic data access.”
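The composition described in this definition can be illustrated with a minimal sketch. All names below (`WebService`, `EnterpriseServiceOperation`, the order-approval step, the two data services, and the credit limit of 1000) are hypothetical and chosen only for illustration; they are not part of the model presented in this paper.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class WebService:
    """Fine-grained, business-agnostic service (e.g. basic data access)."""
    name: str
    call: Callable[[Dict], Dict]


@dataclass
class EnterpriseServiceOperation:
    """One operation corresponds to one step or task of a business process."""
    business_step: str
    uses: List[WebService] = field(default_factory=list)

    def execute(self, request: Dict) -> Dict:
        # Pass the request through each fine-grained service in order,
        # accumulating their results.
        result = dict(request)
        for service in self.uses:
            result.update(service.call(result))
        return result


# Hypothetical fine-grained services (assumptions for illustration only).
lookup_customer = WebService(
    "CustomerData.read",
    lambda r: {"customer": f"customer-{r['customer_id']}"},
)
check_credit = WebService(
    "CreditData.read",
    lambda r: {"credit_ok": r["amount"] <= 1000},
)

# The enterprise service operation is named after the business step it supports.
approve_order = EnterpriseServiceOperation(
    "Approve order", [lookup_customer, check_credit]
)
outcome = approve_order.execute({"customer_id": 7, "amount": 250})
print(outcome)
```

The point of the sketch is the naming and layering: the operation carries a business-level name, while the services it composes stay business-agnostic.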

It has been stated that business services execute

the functionality of the steps, tasks and activities of

one or more business processes and that fine-grained

components and services provide a small amount of

business-process usefulness, whereas larger

granularities can be compositions of smaller grained

components or other artifacts (Papazoglou and van

den Heuvel, 2006). It has also been said that coarse

granularity can be defined as such that services are

related to the individual steps of a business process

(Kimbell et al., 2005).

One of the problems though for enterprise

architects is that an enterprise service has many

characteristics that must be considered, and it is a

fallacy to believe that all services require the same

level of definition (Jones, 2005). It is therefore not a

trivial exercise to determine what the necessary

criteria are for a “good” enterprise service, to ensure

the expected value is achieved for organizations.

This paper focuses on this problem by addressing

the following research question:

What are the (quality) criteria and method by

which an organization is able to accurately

create and assess high-quality enterprise service

definitions?

In this paper a model is presented containing

criteria that can be used by organizations to evaluate

the quality of their enterprise service definitions and

align them with their Business Process Management

(BPM) initiatives in order to increase business

understanding and value of SOA implementations.

The research of identifying the right phases and

criteria for the model was done in consultation with

a focus group of SOA experts at one of the big five

consultancy firms.

We assess the research question by constructing

a model which provides SOA designers with a set of

criteria they can use to measure the quality of

enterprise service definitions. The model is framed

through interviewing experts at one of the big five

consultancy firms and by conducting a literature

study of software development lifecycle methods

and Service Oriented Architecture implementation

strategies. The results are evaluated using a

quantitative survey and qualitative expert interviews.

In section 2 we present a generic approach for

enterprise service application design, resulting in the

Enterprise Value Delivery Lifecycle. Section 3

provides the model (Enterprise Service Definition

Model), which includes the criteria for “good

quality” enterprise service determination. In section

4 we present the validation of the model, followed

by a discussion in section 5. Section 6 contains

conclusions and further research.

2 ENTERPRISE SERVICE

CREATION: APPROACH

In essence there are three main strategies for

developing SOA-based enterprise applications: top-

down, bottom-up, and meet-in-the-middle

(Perepletchikov, 2005; Arsanjani, 2004). It is a key

decision whether service creation should begin from

the bottom-up or top-down. Since this research

focuses on enterprise services, which are defined to

be in line with “steps or tasks” of business

processes, a more top-down approach is taken. In

practice it will always be a balancing game as to

how extensive the top-down modeling should be. A

wide scale business modeling exercise could be too

expensive and make the SOA implementation have a

low ROI. This can be minimized if certain business

domains/processes are selected as a focus in order to

narrow the scope of the top-down approach. It is still

important though that organizations are aware of

their existing legacy systems and plan “agile”

activities accordingly. The real goal is to try at all

cost to avoid a pure bottom-up approach, which will

certainly miss out on achieving valuable benefits for

the business.

In order to utilize a top-down approach to

enterprise service creation, research was done on

lifecycle methodologies. The lifecycle phases for the

Enterprise Service Definition Model (ESDM) we

developed during this research are based on the

successful Enterprise Value Delivery (EVD)

lifecycle model, which is an implementation method

satisfactorily used by one of the big five consultancy

firms. This lifecycle model uses the following

phases:


• Vision

• Plan

• Design

• Build

• Deliver

• Operate

The choice of using this approach to enterprise

service design was confirmed by a group of 7 SOA

experts at this firm who participated early on in this

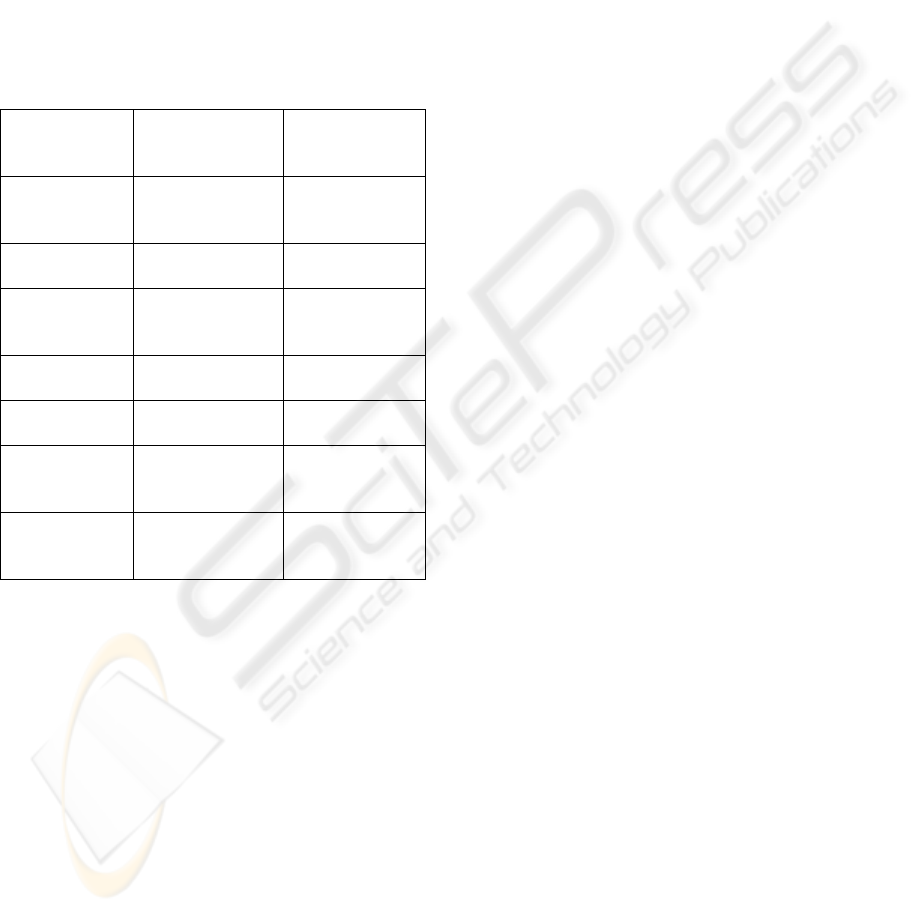

research. Table 1 describes their roles in the

organization and experience with SOA.

Table 1: SOA experts participating in Research.

| Position in the Firm | Division | Main Expertise related to SOA |
|---|---|---|
| Partner | BusinessIT Strategy | Business Value of technology, Financial Services |
| Senior Manager | BusinessIT Strategy | Strategic SOA decision making |
| Senior Manager | Enterprise Applications | SAP and SOA Lead for Europe region |
| Senior Manager | Enterprise Applications | Oracle Lead for NL SOA |
| Consultant | Enterprise Applications | SAP and SOA Research |
| Manager | Development Integration | Various SOA Integration Projects |
| Manager Specialist | Development Integration | Various SOA Integration Projects |

The use of the lifecycle phases for a top-down

approach to enterprise service development has been

justified by other similar models used for service

and component based design, including the top-

down process steps of (Erl, 2005), the Rational

Unified Process (RUP, 2001), and the web service

development lifecycle of (Papazoglou and van den

Heuvel, 2006).

After discussions with the 7 SOA experts it was

further decided to combine the phases of the EVD

lifecycle into the following: Vision & Plan, Design,

Build, and Deliver & Operate. This would create 4

primary stages for developing successful enterprise

services starting with a vision based on the

established business process models of the

organization.

The combined phase of Vision & Plan includes

the planning and goal setting for the enterprise

services being created, and will include a top-down

business process analysis that should result in a set

of enterprise service candidates.

The Design phase refers to the stage where

enterprise service candidates from the Vision & Plan

phase are taken and further refined and detailed.

This includes the creation of functional and non-

functional requirements, inputs, outputs, and formal

descriptions of how to use the service. This is a

distinct activity that is separate from the business

process analysis, and for this reason it was decided

to separate it into a different phase than the “Vision

& Plan” phase. This also emphasizes the importance

of business involvement in the top-down approach,

which is strongest in the first two phases.

The Build phase is the most technical phase, as it

involves the coding of enterprise services based on

the business-designed service candidates. This

phase addresses two points: because enterprise services are

technically built in the same way as web services, they

should comply with industry standards; and it must be

determined whether the proposed business design of the

enterprise service is technically feasible to

implement. Enterprise service candidates will not

always be in line with the existing applications, and

some negotiation will have to take place between

business and IT in order to make sure the services

are in line with business needs but also can be

feasibly delivered.

The final phase of the ESDM combines the

Deliver and Operate phases of the EVD. It was

recognized that the “Deployment” phases of other

service design methods are important, but

of minor relevance to

creating high-quality enterprise service definitions.

It was therefore decided that this phase could be combined

with the Operate phase. During operation,

the performance and business value of the

services must be monitored.

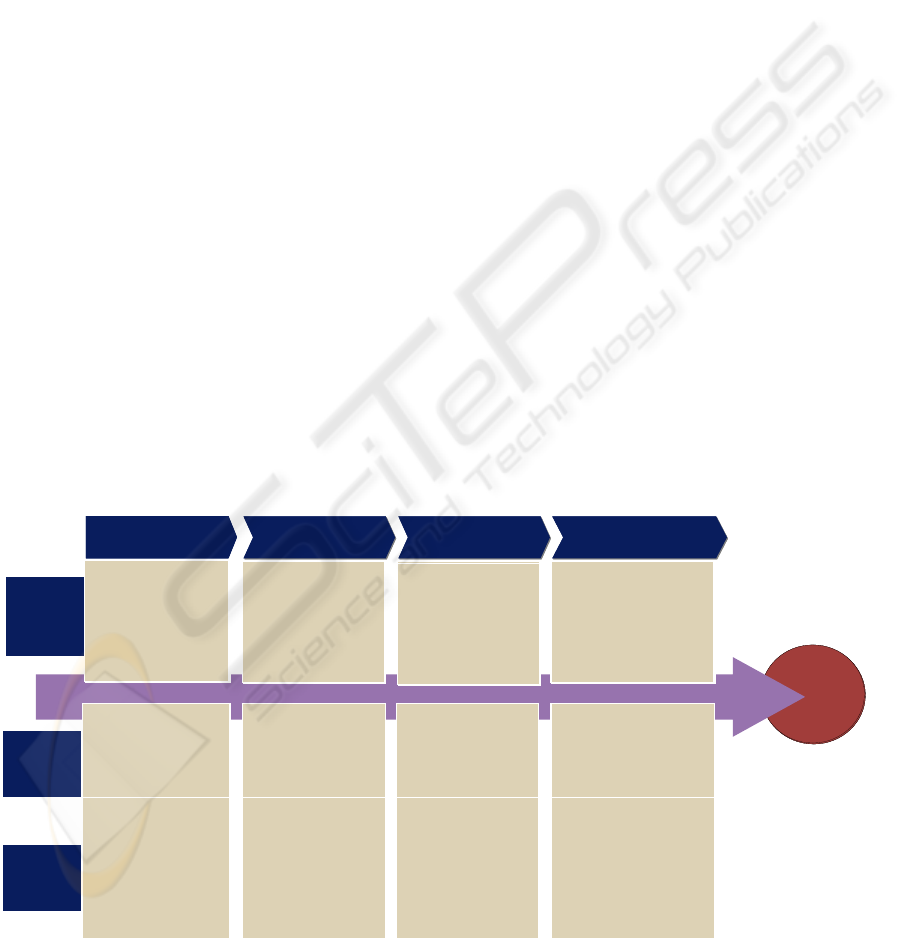

3 MODEL CONSTRUCTION

Initially several literature sources were examined as

a way of collecting lists of the most commonly

referenced service criteria (Papazoglou and van den

Heuvel, 2006; Erl, 2005; Bloomburg, 2005; Deloitte,

2004; Freemantle et al., 2002). The separate source

lists were merged into one.

This list was further refined and challenged by

the group of 7 SOA experts. After several interviews

and brainstorming sessions with these experts, the

ICEIS 2008 - International Conference on Enterprise Information Systems


enterprise service design criteria were refined and

enhanced from the initial literature search. Figure 1

shows the model created including which criteria

belonged in which of the service design lifecycle

phases, as agreed upon by the experts. It should be

noted that several of the criteria repeat in various

phases of the model. During discussions with the

experts, it was concluded that many of the important

criteria, e.g. “reusable”, should be re-visited several

times at different points of the design process. This

list of criteria was combined with a list of key

questions and tasks for each phase to form the

Enterprise Service Definition Model (ESDM),

shown in Figure 1.

The model consists of 20 criteria for the

enterprise service creation phases (vision & plan,

design, build). These include, for example, criteria

stating that services should be designed to be Reusable

and that they should have Business Owners who are heavily

involved in the visioning of their design. Along with

this, 5 key aspects to monitor services were created

(e.g. Services should follow Service Level

Agreements (SLA)). The monitoring aspects apply

once the enterprise services are delivered and in

operation. Each phase also contains key questions

that should be addressed by service designers during

the creation of new services. It should be noted that

some of the criteria identified were deemed to be

important in multiple phases of the design process,

and hence are revisited multiple times (e.g.

Reusable). Also, the validation process produced

evidence showing that some criteria are more

important than others in each phase (this is described

in more detail in section 4.3).

It may be noticed that some commonly identified

service criteria were left out of the model. In

particular, we discuss the ideas of “loose-coupling”

and “coarse granularity” more closely, because these

are often cited criteria which were not included. In

fact in (Erl, 2005) loose coupling is cited as one of

the four most important principles of service

orientation. After extensive research though, it turns

out there are several good reasons for leaving these

criteria off the list. One of the problems with both of

these terms is that they are hard to define in an

objective manner. Both of these terms are

subjective trade-offs without useful metrics, and

furthermore they are very application context

dependent (OASIS, 2006; Papazoglou and van den

Heuvel, 2006). Coarse Granularity of services is

relative to the level of problem being addressed and

defining the optimal level is not as simple as

counting the number of interfaces that a service has.

Loose Coupling can be difficult to determine.

“This is because loose coupling is a methodology or

style, rather than a set of established rules and

specifications” (Kaye, 2003). In this research we

consider the more tangible criteria that we defined as

this established set of specifications. So, although

Loose Coupling and Coarse Granularity are

necessary for a well-defined enterprise service, the

proper level will be obtained when the other (more easily

measured) criteria are adhered to.

Questions in each phase

• Vision & Plan: What services need to be built in which layers, and what value can they provide? What logic should be encapsulated by each service?

• Design: How can service interface definitions be derived from service candidates? What SOA characteristics do we want to support? What security and usage requirements should be supported?

• Build: What technical industry standards will be required? Can the functional designs be technically implemented as the business proposes?

• Deliver & Operate: Continuous evaluation of service level objectives and performance. Monitoring metrics for business value, and planning for future changes or updates.

Criteria to follow in each phase

• Vision & Plan: Reusable; Autonomous; Business Naming Semantics; Service Operations Represent Steps in a Business Process; Business Ownership Roles; Existing Services Taken into Account; Cohesive Functionality

• Design: Reusable; Autonomous; Security Policies; Formal Usage Requirements; Discoverable; Vendor Independence

• Build: Reusable; Autonomous; Security Policies; Statelessness; Interoperable; Vendor Independence; Existing Services Taken into Account

Monitoring aspects (Deliver & Operate)

• Security; Risk Traceability; SLA; Discoverable; Monitor Business Value

Figure labels: Value Adding Services; Criteria: High Importance; Criteria: Medium Importance; Monitoring Aspects

Figure 1: Enterprise Service Definition Model (ESDM).
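As a hedged illustration of how the criteria in Figure 1 could be used as a checklist, the following sketch encodes the three creation phases and the monitoring aspects as plain data. The criteria names are taken from the figure; the structure, the `open_criteria` helper, and the example assessment are assumptions made for this sketch, not a tool described in the paper.

```python
# Criteria per creation phase, as listed in Figure 1.
ESDM_CRITERIA = {
    "Vision & Plan": [
        "Reusable",
        "Autonomous",
        "Business Naming Semantics",
        "Service Operations Represent Steps in a Business Process",
        "Business Ownership Roles",
        "Existing Services Taken into Account",
        "Cohesive Functionality",
    ],
    "Design": [
        "Reusable",
        "Autonomous",
        "Security Policies",
        "Formal Usage Requirements",
        "Discoverable",
        "Vendor Independence",
    ],
    "Build": [
        "Reusable",
        "Autonomous",
        "Security Policies",
        "Statelessness",
        "Interoperable",
        "Vendor Independence",
        "Existing Services Taken into Account",
    ],
}

# Aspects monitored once services are delivered and in operation.
MONITORING_ASPECTS = [
    "Security",
    "Risk Traceability",
    "SLA",
    "Discoverable",
    "Monitor Business Value",
]


def open_criteria(phase, satisfied):
    """Return the criteria of a phase that have not yet been satisfied."""
    return [c for c in ESDM_CRITERIA[phase] if c not in satisfied]


# Criteria are counted per phase, so repeated ones (e.g. Reusable) count each time.
total_criteria = sum(len(criteria) for criteria in ESDM_CRITERIA.values())

# Hypothetical assessment of one service definition in the Design phase.
remaining = open_criteria("Design", {"Reusable", "Autonomous", "Discoverable"})
print(total_criteria, remaining)
```

Counting criteria per phase gives the 20 criteria mentioned in the text, with the 5 monitoring aspects kept separate.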


4 MODEL VALIDATION

Two methods of empirical research were done to

validate the phases for enterprise service design and

their criteria. The first method was a quantitative

analysis, which consisted of a survey that was

distributed to SOA experts from consultancy firms

worldwide. The results will be discussed below,

including the reliability test and factor analyses that

were done to examine the data. In order to avoid

bias, the respondents for this survey did not include

the initial group of 7 experts who offered input into

the model creation. The second method was a more

qualitative analysis, which consisted of expert

interviews that were conducted with SOA industry

experts. The interviews were used to gain insight on

the state of SOA in these organizations, and at the

same time to present the ESDM and get feedback on

how useful they felt this type of design model would

be for their organizations. Below we

discuss the approach taken in each validation.

4.1 Survey Design & Population

Sample

In total, 306 invitations to participate in the survey were

sent to employees of big-five consultancy firms. The

respondents were drawn from the community of

practice “eRooms” on SOA, as well as from direct

contact of several consultancy firm partners around

the world.

The survey created consisted of 27 questions.

The first 5 were intended to gather background

information, and the remaining 22 questions made

up a measurement questionnaire for the criteria of

the Vision & Plan, Design, and Build phases of the

ESDM (not including the Deliver & Operate phase;

this phase was not tested as we focus on creating

and assessing enterprise services during

development). These three phases of the ESDM

have 20 criteria, each measured on the survey by one

question (or, in two cases, two questions).

The questionnaire was reviewed over a period of

two months by the 7 SOA experts, as the criteria for

the model were developed and changed. Each

question used a 1-7 Likert scale to ask the

participant how important they felt the measurement

question (and hence the criteria it was measuring)

was to each phase of enterprise service development.

The average scores would be used to determine

whether certain criteria are more important than

others to enterprise service design, and also whether

some were of a very low importance and should be

removed completely from the model.

The survey data was collected over a 3-week

period, and the total number of respondents was

99 (a response rate of 32%). The respondents were

well distributed, with

knowledge of SOA being almost evenly split

between minimal, average, and advanced. There

was a good balance between very technical SOA

experts (those who have programmed and built

services) and more functionally oriented experts

who have spent more time designing service

functionality.

4.2 Reliability Test

One of the popular methods to determine reliability

of survey results is through “internal consistency”,

which is reflected in the high correlation among

items or subsets of items, signifying that the items

on a scale behave equivalently as though they were a

single measure (Tinsley and Brown, 2000). In order

to test internal consistency, it was decided to first

test the Cronbach’s alpha for all variables together,

and then for each dimension of the model separately.

Overall the results were very high, with α = .851

when all 22 questions in the model were taken

together. This indicates that the survey taken as a

whole is reliable in measuring enterprise service

definitions. This does not indicate whether it is

valid, but it does show a high level of consistency

amongst the survey respondents (N=99).
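For readers unfamiliar with the statistic, Cronbach's alpha for k items is commonly computed as k/(k-1) multiplied by (1 minus the sum of the item variances divided by the variance of the respondents' total scores). The sketch below implements that standard formula with the Python standard library; the response matrix is invented for illustration and is not the survey data from this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    items = list(zip(*responses))  # one tuple of scores per item
    sum_item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Hypothetical 1-7 Likert answers: 5 respondents, 3 questions.
example_responses = [
    [5, 6, 5],
    [4, 4, 5],
    [6, 7, 6],
    [3, 4, 3],
    [5, 5, 6],
]
alpha = cronbach_alpha(example_responses)
print(round(alpha, 3))
```

Alpha approaches 1 when respondents who score one item highly also score the other items highly, which is what "internal consistency" refers to here.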

The second step was to measure the reliability of

each of the 3 tested dimensions of the model

separately: vision & plan, design, and build. It is

desirable to show reliable results within each

dimension as well, because the big challenge is to

try to show that these identified criteria are valid to

measure the creation of enterprise services during

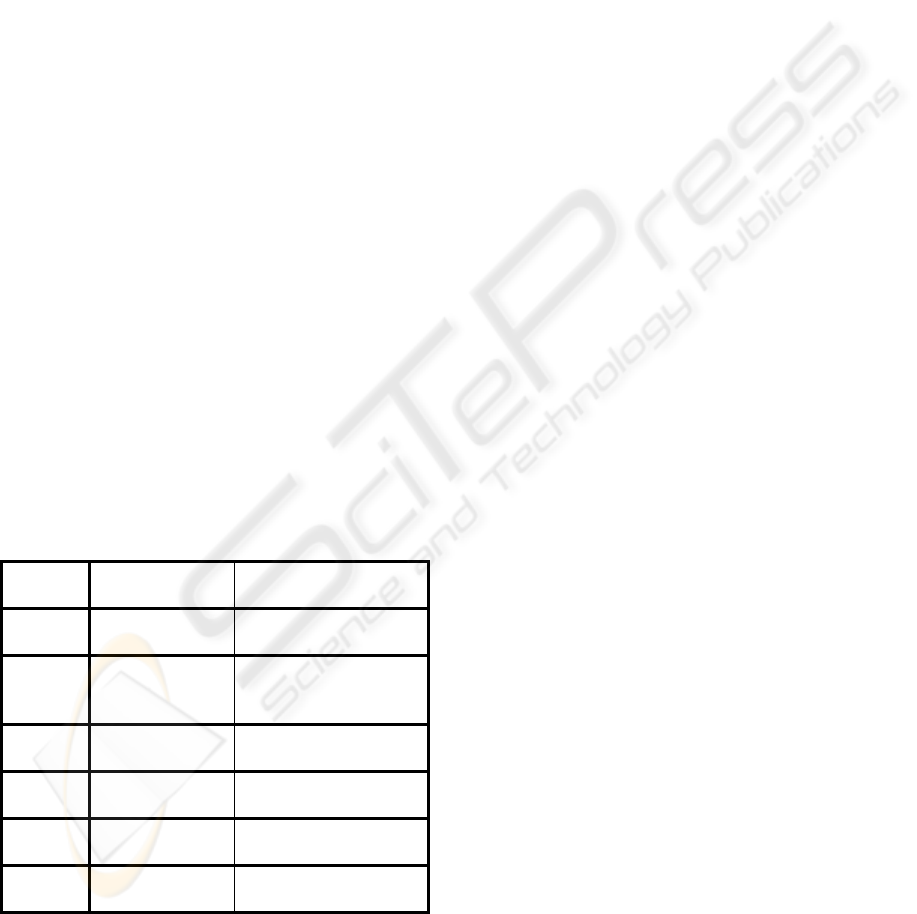

each phase of service creation. Table 2 shows the

alpha statistics for all dimensions of the model.

Table 2: Overall Reliability statistics for ESDM.

| Model Phase | Cronbach's Alpha | N of Items |
|---|---|---|
| Overall | .851 | 22 |
| Vision & Plan | .665 | 9 |
| Design | .706 | 6 |
| Build | .747 | 7 |

As can be seen in the table, the alpha for the

Design and Build dimensions is slightly higher than

0.7, whereas the vision & plan dimension is slightly

under. This implies that each dimension of the


model was scored consistently, and the

data can be taken as reliable.

4.3 Criteria Weighting

The survey was also used as a means to weight the

various criteria to determine which ones were

more important than the others in each of the phases.

It was decided that a simple classification of “low”,

“medium”, or “high” importance would be sufficient

to differentiate between the criteria. In order to

make the model practical and easy to use, it was

determined that categorizing the criteria into easy to

remember importance groups was much better than

numerically ordering them using the weighted

average scores. Also since this is a new area of

research and the difference in weight between

criteria is relatively small, this made the most sense.

Any criterion with an average score of “low”

would be re-evaluated to determine whether it really

belonged in that phase. The weightings were

defined on the 1-7 Likert scale: any criterion

with an average score of less than 2.5 would be

categorized as “low” importance, a score of 2.5-5.5 as

“medium” importance, and a score over 5.5 as “high”

importance.
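The classification rule can be written down directly. The following is a minimal sketch of the thresholds stated above; the function name `importance_class` is an assumption, and the three example scores are the averages reported elsewhere in this paper (5.71 for business ownership roles, 5.28 for statelessness, and 4.60 as the lowest average).

```python
def importance_class(mean_score):
    """Map an average 1-7 Likert score to the paper's importance classes."""
    if not 1 <= mean_score <= 7:
        raise ValueError("average score must lie on the 1-7 Likert scale")
    if mean_score < 2.5:
        return "low"
    if mean_score <= 5.5:
        return "medium"
    return "high"


# Average scores reported in the paper, keyed by a short label.
reported_scores = {
    "Business Ownership Roles": 5.71,
    "Statelessness": 5.28,
    "Lowest average of any criterion": 4.60,
}
classes = {name: importance_class(s) for name, s in reported_scores.items()}
print(classes)
```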

4.4 Industry Expert Interviews

The following table (Table 3) shows where the

industry experts came from and what their position

was.

Table 3: Industry experts interviewed.

| Industry Sector | Organization Size (empl.) | Expert position |
|---|---|---|
| Financial Services | 98,000 | Chief Enterprise Architect |
| Financial Services | 115,000 | Manager Information & Application Architecture |
| Manufact. | 24,000 | Chief Information Officer (CIO) |
| IT Consult. | 100 | Principal consultant |
| Acc / Consult. | 135,000 | Manager Technology Integration Consulting |
| Public Sector | 10,000 | Senior Advisor Corporate IT |

Note that the experts were mostly employed in

large organizations. The interviews focused on the

practical aspects and ‘face-validity’ of the model.

The interviews lasted about one hour each and were held

within a two-week period at the location of the

experts. Leading questions for each of the interviews

were: 1) is the phasing of the model recognizable; 2)

do you expect the model to address all important

criteria per phase; 3) would you expect the model to

be useful.

5 RESULTS OF QUANTITATIVE

AND QUALITATIVE

VALIDATION

Based on the weighted scores as determined from

the survey responses, the full ESDM was

constructed to include weighted criteria, as shown in

Figure 1. From the calculated average scores of all

the criteria on the survey there were 2 “high”

importance criteria in each of the creation phases,

with the rest being of “medium” importance. There

are a few major conclusions that can be made based

on the results.

Based on the results of the survey, the first major

finding is that none of the criteria should be dropped

from the model. If any of the scores were

significantly lower than medium importance (less

than 4.0), then it would have been debated whether

that criterion should be included. However the

lowest average score for any of the criteria was 4.60

(4.0 = medium importance, 7.0 = very high

importance). Even after removing any statistical

outliers, the scores did not change very much. It

was therefore concluded that none of the criteria

were “bad” and should be removed, based on these

scores. Although the results of the validity analysis

were mixed and produced some factors not

identified by the ESDM, this does not affect the

question of whether to delete any one specific

criterion.

There were two criteria in particular which

generated discussion both in the survey comments

and during a few of the expert interviews. These

were “vendor independence” and “statelessness”.

Most people felt that these were important to their

respective phases but difficult to realize in

many practical situations. Here is a brief description

of the problems seen with these criteria:

Vendor Independence. According to some of the

more technical SOA experts, one of the problems

with “vendor independence” is that many of the

large software vendors claim to allow their products

to work with others, but in reality it is against their

interests as a software company to make their


products too friendly with competitors. So as a

result the enterprise services they provide follow de-

facto web service standards for “interoperable”

communication, but they are not truly “vendor

independent” because there are still some

implementation specifics which make them very

difficult and expensive to use with competitors’

products. Along with this, many organizations are

very committed to one major vendor for the

enterprise applications, and building enterprise

services that are “vendor independent” is a much

lower priority for them. Practical experience will

tell how important this criterion is to enterprise

service development. For now it is too soon to tell

of its impact, and it should be left in the model.

Case studies may determine what its

practical value is for different organizations.

Statelessness. Most of the technical SOA experts

agree that it is desirable to have stateless services

that are independent from one another and do not

maintain information about process orchestration.

However again in reality this can be quite difficult,

and there may be some situations where it is

desirable to maintain state at the individual service

level. According to one expert, this can be true in

transaction heavy systems where thousands or even

millions of messages have to be sent across a

network of services. In situations like this, if state

management is centralized, it can mean a huge

performance bottleneck on the services that must

keep track of this information for the whole system.

In these complex situations individual state

management may be desirable. For this

reason “statelessness” should be evaluated

closely on a situational basis. Since it did receive a

“medium-high” importance score on the survey

(5.28 out of 7), it will definitely be left in the model,

but future users of the ESDM should be aware of the

situational impact of this criterion.
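The trade-off can be made concrete with a small sketch that contrasts the two styles. Both the order-pricing scenario and all names below are hypothetical; the sketch only shows where the state lives in each style, and says nothing about the performance characteristics discussed by the experts.

```python
def price_order_stateless(request):
    """Stateless: everything needed arrives in the request; nothing is kept."""
    total = sum(q * p for q, p in request["lines"])
    return {"order_id": request["order_id"], "total": total}


class StatefulOrderService:
    """Stateful: the service itself remembers earlier messages per order."""

    def __init__(self):
        self._lines = {}  # order_id -> list of line amounts seen so far

    def add_line(self, order_id, quantity, unit_price):
        self._lines.setdefault(order_id, []).append(quantity * unit_price)

    def total(self, order_id):
        return sum(self._lines.get(order_id, []))


# Stateless call: the caller carries the full order in every message.
stateless_result = price_order_stateless(
    {"order_id": "A1", "lines": [(2, 10), (1, 5)]}
)

# Stateful calls: small messages, but the service must track each order.
service = StatefulOrderService()
service.add_line("A1", 2, 10)
service.add_line("A1", 1, 5)
stateful_total = service.total("A1")
print(stateless_result["total"], stateful_total)
```

In the stateless style any instance of the service can answer any request, whereas in the stateful style requests for the same order must reach the instance that holds its state.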

Another important comment is the necessary

focus on Business Process Management (BPM),

which was acknowledged with the criterion “Service

Operations Represent Steps in a Business Process”.

Most of those interviewed for this research like the

idea of the top-down strategy and they see the value

of it. But all of those involved in this project

acknowledge that it doesn’t work well if the

organization has not spent sufficient time developing

a sound BPM strategy with properly modeled

business processes. Otherwise it is impossible to

create high quality enterprise services using this

approach. We recognize the inherent link between

these two, particularly at the enterprise service

vision & plan level.

Common enterprise services must have defined

owners with established ownership and governance

responsibilities. These owners are responsible for

gathering requirements, development, deployment,

the boarding process, and operations management

for a service. The survey confirmed the importance

of “business ownership roles” to enterprise services.

This criterion was rated as highly important to the

Vision & Plan phase of service design (a score of

5.71 out of 7). Many comments were made that the

value of a SOA to the business really depends on

who has responsibility for promoting its use. Also, if

ownership is in the hands of business users, they

have a greater incentive to bring the value of the

SOA design beyond the project level. This might

also explain the significance of the factor

“ownership & scope” which was discovered in the

validity analysis. The owner of a service goes a long

way in determining the breadth of its use and scope

in the organization, as well as how well it is “taken

into account” in future SOA designs.

That said, there is a learning curve, and according

to one expert interviewed there are recognized

challenges: the business might be comfortable

owning the design of a service, while the

implementation done by IT should be owned

separately. These are issues

that must be worked out as part of SOA Governance

procedures. Also, improved business value

metrics for enterprise services will go a long way in

convincing business users of the importance of

taking ownership responsibilities in order to promote

the widespread use of SOA and enterprise services.

Separate conclusions from the industry expert

interviews were: 1) reusability is probably the most

important criterion; 2) build/buy decisions for

enterprise services will increase in importance; 3)

the Vision & Plan phase is probably the most

important phase in constructing enterprise services;

4) SOA is considered important in the long run as

well. Finally, all interviewees liked the model,

but some expressed concern about its technical

emphasis. The conclusions from the expert

interviews indicate no specific remarks on particular

criteria; however, additional emphasis on finding more

business-value metrics is a valid point.

6 CONCLUSIONS AND FUTURE

RESEARCH

More and more companies see the advantages of

SOA; it is increasingly accepted and adopted as an

enterprise architecture paradigm, and is expected to

remain in use in the long term. The

challenge is in finding a ‘best’ set of enterprise

services. We have constructed the ESDM, which a

quantitative survey and qualitative analysis indicate

is applicable and useful; the

model is believed to support and be able to monitor

the definition, identification and assessment of

enterprise services. The identified criteria in the

ESDM can be leveraged when following a top-down

approach to SOA-based enterprise application

development using the EVD lifecycle.

The following points summarize the areas that

can be researched further to follow up on the results

found:

Develop business value metrics for enterprise services

Link the ESDM to SOA governance, change management, and BPM modeling techniques

Conduct case studies with the ESDM

One of the main conclusions to come out of

the expert interviews was that the ESDM

provides organizations with a solid structure for

creating enterprise services. However, for it

to be as useful as possible, clear

business value metrics must be associated with it, so

that organizations can see the incentives for

taking this approach to SOA design. Along with this,

more SOA governance and change

management procedures could be linked to the ESDM. A

second area of consideration is the

operationalization of the link to Business Process

Management (BPM), as the top-down approach to

enterprise service development requires companies

to have a firm grasp of their business process

modeling. Finally, detailed case studies are vital to

understanding how practical it is to use this model in

actual enterprise service development.

REFERENCES

Arsanjani, A., 2004. Service-oriented modeling and

architecture: how to identify, specify, and realize

services for your SOA. IBM whitepaper. Retrieved

November 2007 from

ftp://www6.software.ibm.com/software/developer/library/ws-soa-design1.pdf

Bloomberg, J., 2005. Webify Webinar: Improve Reuse and

Control Rogue Services within your SOA. Published

by ZapThink.

Cherbakov, L., Galambos, G., Harishankar, R., Kalyana,

S., Rackham, G., 2005. Impact of Service Orientation

at the Business Level. IBM Systems Journal. Vol. 44,

No 4.

Cox, D. and Kreger, H., 2005. Management of the service-

oriented-architecture life cycle, IBM Systems Journal,

Vol 44, No 4.

Crawford, C. H., Bate, G.P., Cherbakov, L., Holley, K.

and Tsocanos, C., 2005. Toward an on demand service

oriented architecture. IBM Systems Journal, Vol. 44,

No 1.

Curbera, F., Duftler, M., Khalaf, R., Nagy, W., Mukhi, N.

and Weerawarana, S., 2002. Unraveling the Web

Services Web: An introduction to SOAP, WSDL, and

UDDI. IEEE Internet Computing, Vol 6, No 2, 86-93.

Deloitte, 2004. Consulting internal document: EBI SOA

Guiding Principles and Best Practices. April 2004.

Erl, T., 2005. Service-Oriented Architecture: Concepts,

Technology, and Design. Pearson Education, Inc.,

Upper Saddle River, NJ.

Fremantle, P., Weerawarana, S. and Khalaf, R., 2002.

Enterprise services. Communications of the ACM,

Vol 45, No 10, 77–82.

Jones, S., 2005. Toward an acceptable definition of

service. IEEE Software, Vol 22, No 3, 87-93.

Kaye, D., 2003. Loosely Coupled—The Missing Pieces of

Web Services, RDS Press.

Kimbell, I. and Vogel, A., 2005. mySAP ERP for

Dummies. Wiley Publishing, Chichester, England.

OASIS, 2006. OASIS SOA Reference Model. Retrieved

27 November 2007, from:

http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=soa-rm

Papazoglou, M. and van den Heuvel, W., 2006. Service-

Oriented Design and Development Methodology,

International Journal of Web Engineering and

Technology (IJWET). Vol 2, No 4, 412-422.

Perepletchikov, M., Ryan, C. and Tari, Z., 2005. The

Impact of Software Development Strategies on Project

and Structural Software Attributes in SOA. In:

Second INTEROP Network of Excellence

Dissemination Workshop (INTEROP'05). INTEROP

Press. Ayia Napa, Cyprus.

RUP, 2001. Rational Software Corporation “Rational

Unified Process: Best Practices for Software

Development Teams”, Technical Paper TP026B, Rev.

11/01, November 2001.

SAP, 2004. Enterprise Services Architecture – An

Introduction. SAP White Paper. Retrieved November

2007, from:

http://www.sap.com/solutions/esa/pdf/BWP_WP_Enterprise_Services_Architecture_Intro.pdf

Tinsley, H. E. A., Brown, S. D., 2000. Handbook of

applied multivariate statistics and mathematical

modeling. Academic Press, San Diego, California.

Wang, Z., Xu, X. and Zhan, D., 2005. Normal Forms and

Normalized Design Method for Business Service.

Proceedings of the 2005 IEEE International

Conference on e-Business Engineering (ICEBE’05),

79-86.
