AN EVALUATION INSTRUMENT FOR LEARNING OBJECT QUALITY AND MANAGEMENT

Erla M. Morales Morgado¹, Francisco J. García Peñalvo² and Ángela Barrón Ruiz¹

¹ Department of Theory and History of Education, University of Salamanca, Pº de Canalejas, 169, 37008, Salamanca, Spain

² Department of Computing Science, University of Salamanca, Plaza de los Caídos, S/N, 37008, Salamanca, Spain

Keywords: Metadata, learning objects, knowledge management.

Abstract: Being able to evaluate the quality of learning objects (LOs) is crucial for reliable LO management. LO quality evaluation must take into account both their pedagogical and their technical characteristics. It also needs to consider them as units of learning and to determine their level of granularity (size). This paper describes a proposal for a specific LO quality evaluation instrument and presents the results of its assessment by a panel of experts.

1 INTRODUCTION

Information represents one of the world’s most

important sources of power today, and Internet

growth is geared to promoting better services that

will enable users to find and retrieve the information

they really need.

The concept of learning objects (LOs) was devised in order to manage and reuse existing e-learning resources without interoperability problems. However, the capacity of LOs to achieve that goal will be diminished if their quality is poor. With that in mind, we designed a quality evaluation instrument and had it assessed by a panel of pedagogical and technical experts.

This paper presents the background to our work,

describing the quality evaluation instrument we

developed and summarizing the experts’ comments,

before culminating in a round-up of our conclusions

and future work plans.

2 THE LO QUALITY EVALUATION INSTRUMENT

Although LO quality is crucial to LO management, surprisingly little work has been done in this area, and only a handful of proposals exist for developing and enhancing LO design and evaluation tools and processes. Williams (2000), for example, proposes a process to disaggregate and rebuild LOs using instructional design. As far as we are aware, the only evaluation instruments currently in use rely on highly imprecise, general criteria such as motivation, interaction and feedback. The Learning Object Review Instrument (LORI), for example, considers a basic set of nine such criteria, yet is used to evaluate LOs by major repositories such as the Co-operative Learning Object Exchange (CLOE) (http://cloe.on.ca/), the Digital Library Network for Engineering and Technology (DLNET) (http://www.dlnet.vt.edu/), and Multimedia Educational Resource for Learning and Online Teaching (MERLOT) (www.merlot.org).

The imprecision of those instruments is all the more serious in light of the huge number of definitions of LOs. Without a clear idea of what actually constitutes an LO, it is hard to develop a means of enhancing LO quality and, hence, effective LO management for e-learning systems.

As LOs come in many different sizes (levels of

granularity), we decided to produce a precise

definition of LO granularity based on IEEE LOM

(2002) and featuring the following additional details

(Morales, García and Barrón, 2007c):

• Level 1: the lowest level of granularity, e.g. an image used in a lesson (photo, video, etc.).

• Level 2: a lesson – possibly a group of level 1 LOs – focusing on a specific learning objective with a specific kind of content (data and concepts, process and procedure, principles, etc.), with optional exercises (for practice and/or examination).


M. Morales Morgado E., J. García Peñalvo F. and Barrón Ruiz Á. (2008).

AN EVALUATION INSTRUMENT FOR LEARNING OBJECT QUALITY AND MANAGEMENT.

In Proceedings of the Tenth International Conference on Enterprise Information Systems - AIDSS, pages 327-332

DOI: 10.5220/0001712303270332

Copyright © SciTePress

D/N= Don't know,

1=Very Disagree,

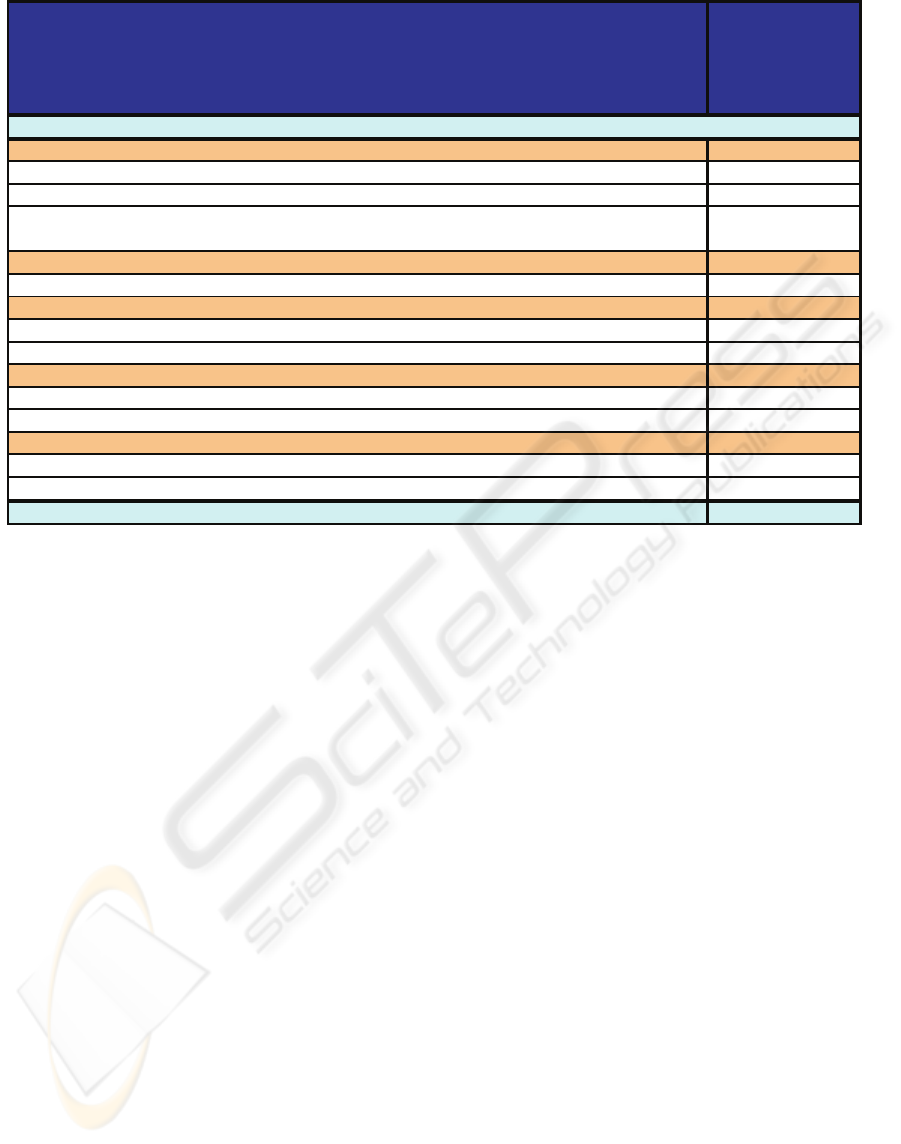

PEDAGOGICAL CRITERIA FOR LEARNING OBJECTS EVALUATION

2=Disagree,

3=Agree

4=Very Agree

PSYCHOPEDAGOGICAL

Motivation and Attention 3,7

Presentation: Capture learners attention mantaning their motivation

3,6

Add important information: Information need to be relevant according to the LO subject

3,7

Learners participation: LO explains very clear how learners can to participate in the lesson

3,8

Professional competence 3,9

Learning objectives help users to achieve their professional competences

3,9

Difficulty level

3,6

Contents difficulty level: It needs to be suitable for user cognitive domain

3,6

Language: It needs to be suitable for previous users knowledge

3,5

Interactivity

3,5

Interactivit

y

Level: It

p

romotes o

pp

ortunities to interact with LO in different wa

y

s

3,6

Interactivity type: LO interaction aims to achieve learning objectives

3,4

Creativity 3,8

It promotes self-learning

3,8

It promotes cognitive domain development

3,7

GENERAL COMMENTS

(Describe some examples where this LO can be reused)

3,7

Figure 1: Psychopedagogical item into Pedagogical criteria for LO evaluation.

• Level 3: a learning module made up of a set of level 2 LOs (lessons) plus at least two or three kinds of content (data and concepts, process and procedure, principles, etc.), with optional exercises (for practice and/or examination).

• Level 4: a learning course made up of a set of level 3 LOs (modules) plus at least two or three kinds of content (data and concepts, process and procedure, principles, etc.), and optional exercises (for practice and/or examination).
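The four granularity levels can be read as a simple containment hierarchy. The following is a minimal Python sketch of that reading, not part of the instrument itself: the member names (`ASSET`, `LESSON`, `MODULE`, `COURSE`), the `LearningObject` class, the example titles, and the rule that an LO only aggregates LOs exactly one level below it are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Granularity(IntEnum):
    """The four granularity levels listed above (member names are illustrative)."""
    ASSET = 1   # lowest level, e.g. an image used in a lesson
    LESSON = 2  # one learning objective with one kind of content
    MODULE = 3  # a set of level 2 LOs (lessons)
    COURSE = 4  # a set of level 3 LOs (modules)


@dataclass
class LearningObject:
    title: str
    level: Granularity
    parts: list["LearningObject"] = field(default_factory=list)

    def add(self, part: "LearningObject") -> None:
        # Assumption: an LO only aggregates LOs exactly one level below it.
        if part.level != self.level - 1:
            raise ValueError(
                f"a level {int(self.level)} LO cannot contain "
                f"a level {int(part.level)} LO"
            )
        self.parts.append(part)


# Hypothetical example: a lesson that groups one level 1 LO.
lesson = LearningObject("Photosynthesis", Granularity.LESSON)
lesson.add(LearningObject("Leaf diagram", Granularity.ASSET))
```

Making the level explicit in this way means an evaluator always knows which kind of unit is being rated before any quality criteria are applied.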

LOs must be clearly defined in order to be able to

establish specific quality evaluation criteria. We

must know exactly what we are evaluating. To

define a quality evaluation instrument, we decided to

consider LOs as basic units of learning – as in the

case of the prototype LOs in our Salamanca

University pilot project (Morales, García and

Barrón, 2008) – because we believed them to be

consistent with the core idea of the LO concept. We

used this as a basis upon which to determine the

kind of criteria that would serve to evaluate the

pedagogical and technical aspects of LO quality, i.e.

whether or not, and to what degree, the learning

resources in question displayed characteristics

suggesting that they could effectively achieve the

specified educational goals.

To achieve those goals, LOs must embrace a

specific range of curricular and psycho-pedagogical

issues. Curricular issues concern the subject matter,

the goals and so on, while psycho-pedagogical

issues concern the user’s characteristics (age,

learning ability, motivation, etc.).

We established a set of quality criteria for evaluating pedagogical aspects of LOs (figure 1), and then divided those criteria into categories and sub-categories containing more detailed criteria. Each of these was assessed by a panel of ten educational and technical experts who rated each item according to the following scale: D/N=don’t know; 1=strongly disagree; 2=disagree; 3=agree; 4=strongly agree.
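As an illustration of how the ratings for one item might be aggregated, the sketch below averages the numeric answers of a panel. The paper does not state how "D/N" answers were treated, so excluding them from the mean is an assumption, as are the function name `mean_rating` and the example ratings, which are invented and are not the experts' data.

```python
from statistics import mean

DONT_KNOW = "D/N"


def mean_rating(ratings):
    """Average the numeric ratings (1-4) for one item.

    Assumption: "D/N" answers are skipped rather than counted as a score.
    Returns None when no expert gave a numeric rating.
    """
    numeric = [r for r in ratings if r != DONT_KNOW]
    for r in numeric:
        if r not in (1, 2, 3, 4):
            raise ValueError(f"rating outside the 1-4 scale: {r!r}")
    return round(mean(numeric), 1) if numeric else None


# Invented ratings from a ten-person panel, one of whom answered "D/N".
example = [4, 4, 3, 4, "D/N", 4, 3, 4, 4, 4]
item_score = mean_rating(example)
```

Skipping "D/N" keeps an expert's unfamiliarity with an item from dragging its score down, at the cost of averaging over fewer answers.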

Once the experts had rated the criteria, they each

had the chance to comment on them and suggest

ways to improve them in a face-to-face interview.

The overall rating (3.7) reflects their unanimous

agreement that the proposed criteria were sound.

The highest scoring criterion was ‘professional competence’ (3.9), meaning that the LOs were considered very well suited to helping students achieve their objectives and enhance their professional skills.

The experts also commented on ‘creativity’ (3.8), which they said would be useful if the LO promoted meta-cognitive skills and self-learning; ‘motivation’ (3.7), which was considered crucial for any kind of learning material; and ‘interactivity’ (3.5), which was regarded as critical for promoting active student participation, but whose evaluation depended on the learning objectives because the LOs could include lectures or other ‘passive’ activities.

ICEIS 2008 - International Conference on Enterprise Information Systems


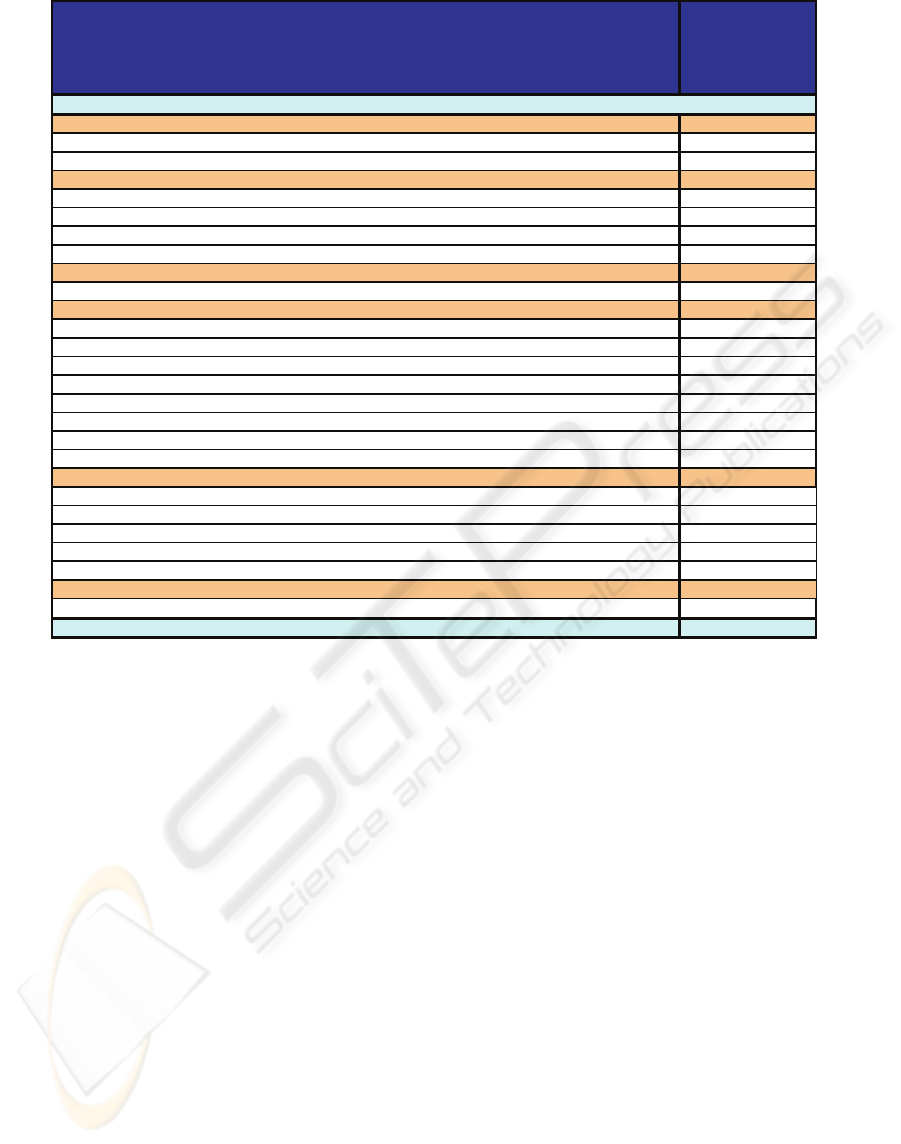

PEDAGOGICAL CRITERIA FOR LEARNING OBJECT EVALUATION: DIDACTIC-CURRICULAR

Scale: D/N = don't know; 1 = strongly disagree; 2 = disagree; 3 = agree; 4 = strongly agree.

| Criterion | Rating |
|---|---|
| **Context** | 3.8 |
| Formative level | 3.5 |
| Subject description | 4.0 |
| **Objectives** | 3.7 |
| Formulation | 3.6 |
| Feasibility | 3.7 |
| Describe the subject matter that needs to be learned | 3.8 |
| Coherent with general objectives | 3.7 |
| **Learning time** | 3.5 |
| Time allotted to learning activities is consistent with the time available | 3.5 |
| **Contents** | 3.7 |
| Present enough information, suitable for the educational level | 3.7 |
| Contents are suitable for the proposed objective | 3.7 |
| The LO presents information in different kinds of formats (text, audio, etc.) | 3.6 |
| The LO provides interaction with the contents through links | 3.6 |
| The LO presents complementary information (glossary, aids, etc.) | 3.8 |
| The information is reliable | 3.9 |
| The presentation of the information aims at a better comprehension of the contents | 3.7 |
| Language is suitable with respect to the learning objectives | 3.5 |
| **Activities** | 3.8 |
| They help to reinforce the concepts | 3.9 |
| Promote active participation | 3.7 |
| Present different kinds of learning strategies | 3.9 |
| Present evaluation and practice activities | 4.0 |
| They propose a work modality (if needed) | 3.7 |
| **Feedback** | 3.9 |
| Knowledge is reinforced by exercises and activities, self-evaluation, etc. | 3.9 |
| GENERAL COMMENTS (describe some examples where this LO can be reused) | 3.7 |

Figure 2: Didactic-curricular section of pedagogical criteria for LO evaluation.

The next section of the LO quality evaluation

instrument focuses on didactic-curricular items, a

sub-category of pedagogical criteria (figure 2).

Once again the overall rating was very high

(3.7). The highest scoring item was ‘feedback’ (3.9),

which the experts said was especially important for

LOs because it provided pointers for reinforcing

them and their capacity to serve as basic units of

learning and knowledge containers, thereby helping

prepare students for other, more advanced and

complex, LOs.

A similarly high score was given to ‘context’

and ‘activities’ (3.8), with the experts saying that

context was crucial to an LO’s value and reusability.

The LO quality evaluation instrument must therefore

assess whether an LO is suitable for the formative

level and provides a clear enough description of its

subject.

Next came ‘objectives’ and ‘content’ (3.7). The

experts approved of the fact that these criteria sought

to assess formulation, feasibility and other such

fundamental issues. It is interesting to consider the

evaluation of specific objectives and how they relate

to general objectives because the aim is to rate the

consistency and sequence of a group of LOs (a module, didactic unit, course, etc.).

Finally, the lowest scoring didactic-curricular

item was ‘learning time’ (3.5). The experts described

this as crucial to learning activities but said that in

some cases learning time needed to be modified

during the activities according to the objectives, the

students’ characteristics and so on. They approved

of this criterion because it was designed to assess

whether enough time was devoted to learning to

complete the allotted tasks.

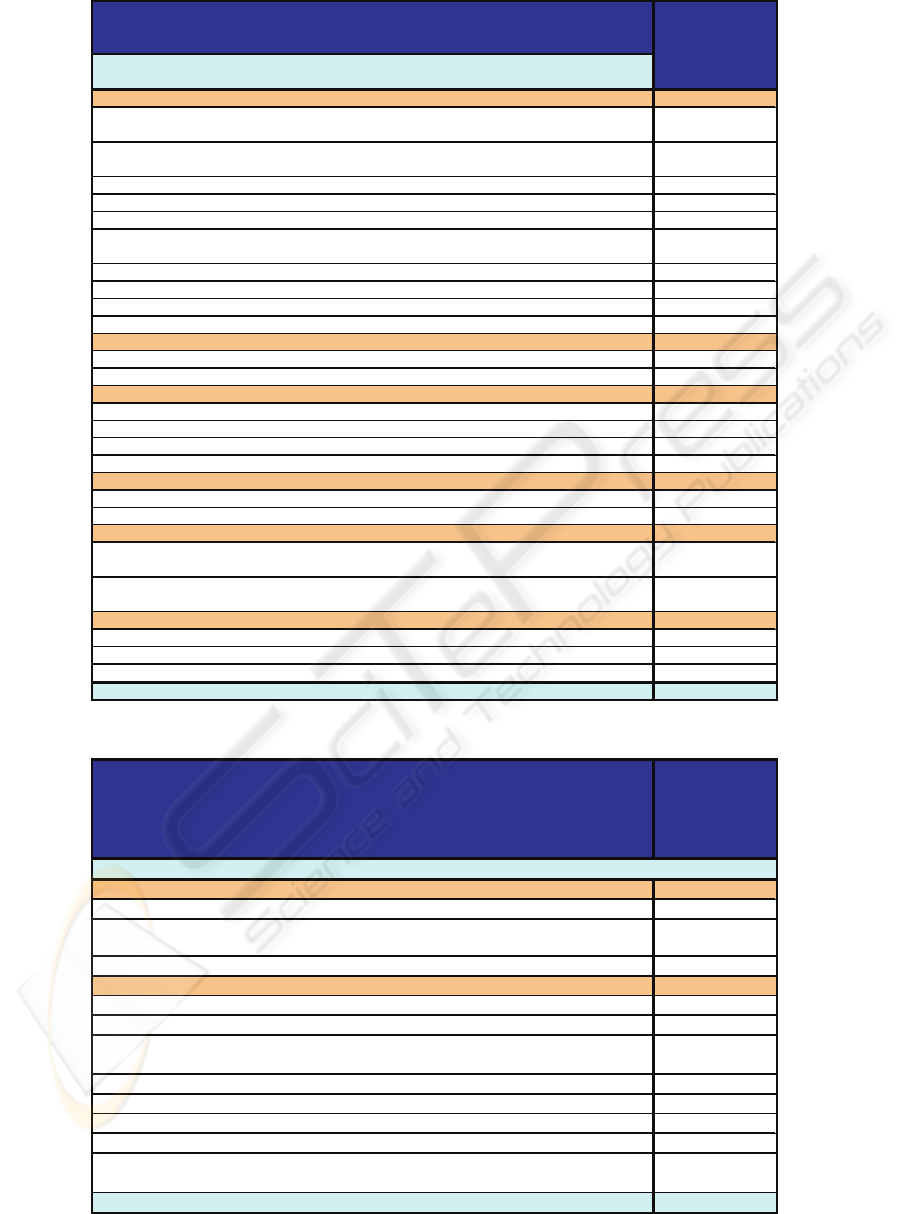

As mentioned earlier, an LO quality evaluation instrument must also cover technical criteria. To identify these we analysed a number of proposals for rating such items as multimedia sources (Marquès, 2000), educational web pages (Marquès, 2003; Torres, 2005) and usability (Nielsen, 2000). On this basis, and with the LO as we define it serving as a digital basic unit of learning, we decided to sort technical issues into two categories: interface design (figure 3) and navigation design (figure 4) (Morales, García and Barrón, 2007a).

The highest scoring items in interface design were ‘images’ and ‘animations’ (3.7), and the


USABILITY CRITERIA FOR LEARNING OBJECT EVALUATION: INTERFACE DESIGN

Scale: D/N = don't know; 1 = strongly disagree; 2 = disagree; 3 = agree; 4 = strongly agree.

| Criterion | Rating |
|---|---|
| **Text** | 3.5 |
| Text needs to be organized in short paragraphs. It is important not to break the continuity of the paragraphs and the ideas they contain. | 3.4 |
| It is recommended that LOs use at least half of the text that could be used for a printed publication. | 3.4 |
| It is advisable to use hypertext to divide large amounts of information into multiple pages. | 3.6 |
| Mark contents using titles, headings, etc. | 3.5 |
| Different pages need to have different titles. | 3.5 |
| Capital letters need to be used only for titles, headlines or to highlight specific text. | 3.4 |
| It is advisable to avoid underlining text that is not a link. | 3.3 |
| Text needs to have a legible typeface and a suitable size. | 3.5 |
| The colours and typefaces convey information by themselves. | 3.6 |
| Text should not contain any spelling mistakes. | 3.9 |
| **Images** | 3.7 |
| Images complement and support the information. | 3.6 |
| Images are not superfluous; they need to be present only if necessary. | 3.7 |
| **Animations** | 3.7 |
| The animations used need to be justified. | 3.7 |
| They need to attract the user's attention to highlight important information. | 3.6 |
| Animations should not take a long time to load. | 3.7 |
| It is advisable to avoid animations that repeat continuously (loop). | 3.7 |
| **Multimedia** | 3.5 |
| The use of multimedia needs to add value and be justified. | 3.6 |
| The format and file size need to be stated if the download takes more than 10 seconds. | 3.4 |
| **Sound** | 3.5 |
| Sound needs to be used only if necessary. Users need to be able to choose whether to listen to it. | 3.5 |
| Users need to be informed of the sound file's characteristics before downloading it (size, kind of connection, etc.). | 3.4 |
| **Video** | 3.6 |
| Needs to serve as a complement to video and images. | 3.6 |
| Its use needs to be justified. | 3.6 |
| The images and audio need to be as clear as possible. | 3.5 |
| GENERAL COMMENTS (describe some examples where this LO can be reused) | 3.6 |

Figure 3: Interface design section of the usability criteria for LO evaluation.

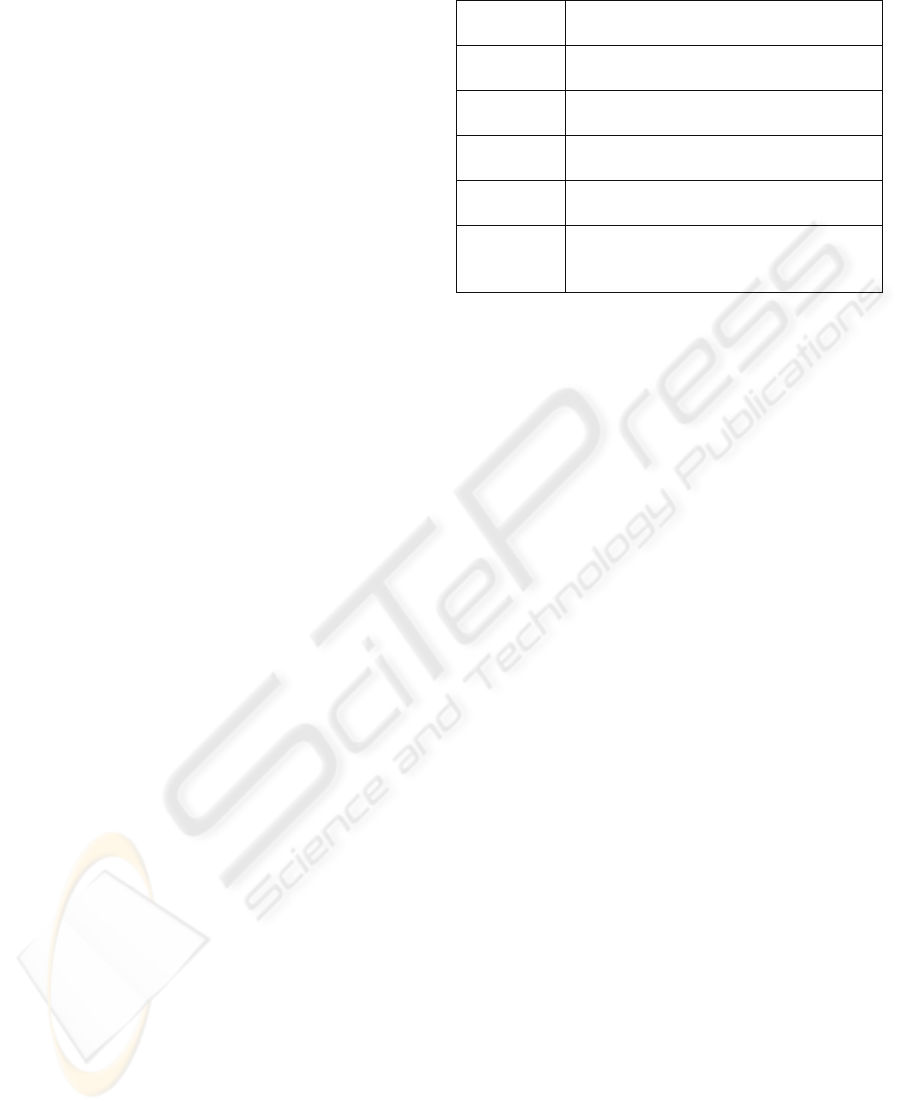

USABILITY CRITERIA FOR LEARNING OBJECT EVALUATION: NAVIGATION DESIGN

Scale: D/N = don't know; 1 = strongly disagree; 2 = disagree; 3 = agree; 4 = strongly agree.

| Criterion | Rating |
|---|---|
| **Home page** | 3.7 |
| Users need to be clear about the places they are visiting and what the LO objectives are | 3.9 |
| The page needs to present a directory covering the main content areas and links to complement the information | 3.6 |
| Welcome pages should not slow down the user's interaction | 3.8 |
| **Navigability** | 3.7 |
| The structure needs to be flexible so that users can control their navigation | 3.8 |
| Titles need to be present and clear on each of the LO's pages | 3.7 |
| The navigation interface shows all possible alternatives at the same time so that users can choose their option | 3.7 |
| Users need to be clear about where they are on each page | 3.7 |
| Users need to be clear about where they are within the site structure | 3.8 |
| The page design is devoted largely to the subject contents | 3.7 |
| Pages need to be simple, not overloaded | 4.0 |
| The page design needs to be consistent across all pages (size, colours, icons, typeface, etc.) | 3.7 |
| GENERAL COMMENTS (describe some examples where this LO can be reused) | 3.7 |

Figure 4: Navigation design section of the usability criteria for LO evaluation.


experts approved of the proposed criteria because such resources could help boost the learner’s motivation and provide useful information to complement the text. Similar comments were made in regard to the next highest scoring item: ‘video’ (3.6).

Then came ‘text’, ‘sound’ and ‘multimedia’

(3.5), whose slightly lower score reflected the fact

that these are highly specialized domains with which

some of the experts were unfamiliar.

The experts deemed it important, therefore, that those using the evaluation instrument either be acquainted with the subjects covered by the various criteria or omit those criteria from their evaluation.

The experts approved or fully approved of all of

the items in the final section of the instrument,

covering usability criteria with a specific focus on

navigational aspects (figure 4).

The highest scoring item was ‘are web pages simple

and devoid of heavy graphics’ (4.0). Next came

‘does the user know the places they are visiting and

the objectives of the LO’ (3.9). This was followed

by three separate items: ‘do home pages slow down

the user’s interaction with those pages’ (potentially

demotivating); ‘is the page structure flexible enough

to allow the user to play an active role during

navigation’; and ‘is the user aware of where they are

within the site architecture’ (3.8).

The remaining five navigation-related items all achieved a score of 3.7.

The post-evaluation face-to-face interviews with the experts enabled us to gather qualitative information on the instrument itself together with a number of suggestions for its improvement. All of the experts agreed with the criteria proposed and the items considered.

They also suggested editorial changes in the

wording of the items. Some said that it was

advisable to avoid the use of expressions such as

“must have” because they were too imposing and

could complicate matters for the evaluators.

They also advised that the items be worded briefly and avoid examples likely to influence the evaluation. All figures in this paper have been corrected according to the experts’ qualitative evaluation.

In the final comments, some of the experts suggested other kinds of scales aimed at rating specific aspects of LO quality. Based on their suggestions we have decided to introduce the version shown in Table 1 into our instrument.

Table 1: LO evaluation rating scale.

| Scale range | Value |
|---|---|
| 1.0 – 1.5 | Very Low: LO quality is very poor; it needs to be eliminated |
| 1.6 – 2.5 | Low: LO quality is poor; it requires major improvement |
| 2.6 – 3.5 | Acceptable: LO quality is not bad; however, it needs to be improved |
| 3.6 – 4.5 | High: LO quality is good but can be improved |
| 4.6 – 5.0 | Very High: LO quality is quite good; it does not need to be improved |
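The bands of Table 1 amount to a lookup from a score to a quality label. A minimal Python sketch of that lookup follows; the function name `quality_label` is hypothetical, and because the table leaves small gaps between bands (for instance between 1.5 and 1.6), the sketch assumes that each upper bound is inclusive and that a score falling in a gap belongs to the next band up.

```python
# Upper bound of each band in Table 1, paired with its label.
BANDS = [
    (1.5, "Very Low"),
    (2.5, "Low"),
    (3.5, "Acceptable"),
    (4.5, "High"),
    (5.0, "Very High"),
]


def quality_label(score: float) -> str:
    """Return the Table 1 label for a score on the 1.0-5.0 scale."""
    if not 1.0 <= score <= 5.0:
        raise ValueError(f"score outside the 1.0-5.0 scale: {score}")
    for upper_bound, label in BANDS:
        # Assumption: upper bounds are inclusive, so a score such as 3.55,
        # which lies between two bands, is assigned to the higher one.
        if score <= upper_bound:
            return label
    raise AssertionError("unreachable: 5.0 is the last upper bound")


label = quality_label(3.7)  # "High"
```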

For a balanced LO quality evaluation we suggest calculating an average final score for each of the four sections of the instrument so as to be able to extract a specific value to add to our LO metadata typology based on the LOM ‘9. Classification’ metadata category (Morales, García and Barrón, 2007b). The aim here is to introduce numeric values that will help the user find and retrieve LOs according to quality-related criteria, and enable us to develop more sophisticated LO management capabilities, e.g. automated means using intelligent agents that will pave the way for new quality-based LO management tasks (Gil, Morales and García, 2007; Morales, Gil and García, 2007).
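The averaging step described above can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the function names, the dictionary keys used for the metadata entries (they are not LOM element names), and the item scores, which are invented. How the resulting value is actually encoded in the metadata typology is defined in Morales, García and Barrón (2007b), not here.

```python
from statistics import mean


def section_scores(item_scores):
    """Average the item scores of each instrument section to one decimal."""
    return {
        section: round(float(mean(scores)), 1)
        for section, scores in item_scores.items()
    }


def to_quality_entries(scores):
    """Wrap each section score in a metadata-like record.

    The keys are hypothetical placeholders, not LOM element names.
    """
    return [
        {"category": "9. Classification", "section": name, "quality_score": value}
        for name, value in scores.items()
    ]


# Invented item scores for the four sections of the instrument.
evaluation = {
    "psychopedagogical": [4, 3, 5],
    "didactic-curricular": [3, 4],
    "interface design": [4, 4, 5],
    "navigation design": [5, 4],
}
scores = section_scores(evaluation)
entries = to_quality_entries(scores)
```

Keeping one numeric value per section, rather than a single overall figure, lets a search tool filter LOs on, say, navigation quality alone.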

3 CONCLUSIONS

Our model LO quality evaluation instrument

contains a wide variety of criteria aimed at

enhancing the core pedagogical quality of LOs:

meaningful logical and psychological criteria. The

first set of criteria concerns curricular issues, i.e.

whether the LO is consistent with the study

programme objectives, content, activities and so on.

The second centres on the learners’ characteristics:

learning ability, motivation, interactivity, and so on.

In order to produce a holistic evaluation of an LO’s quality as a pedagogical digital resource (in line with our definition of what constitutes an LO), the instrument also focuses on assessing technical criteria. As these types of resources can consist of different kinds of media, our model takes into consideration the most commonly used multimedia resources: images, video, etc.

Finally, since LOs are composed of different

kinds of media, it is important to ensure that each is

rendered accessible: e.g. an Internet site or web page

designed to enable all kinds of users to access them,


taking into account personal characteristics,

navigation context, etc.

If we want to promote holistic LO quality, we must seek to evaluate their accessibility, bearing in mind the basic technical rules for accessible web development, e.g. the Web Content Accessibility Guidelines (WCAG) promoted by the Web Accessibility Initiative (WAI) (http://www.w3.org/WAI/). In so doing, we can help enhance LO quality not only for existing resources, but also for those under development.

ACKNOWLEDGEMENTS

This work was partly financed by the Ministry of Education and Science as well as the FEDER KEOPS project (TSI2005-00960) and the Junta de Castilla y León project (SA056A07).

REFERENCES

Gil, A. B., Morales, E., García, F. J. 2007. E-Learning Multi-agent Recommender for Learning Objects. In SIIE’07. Actas del IX Simposio Internacional de Informática Educativa, ISBN: 978-972-8969-04-2, pp. 163-169.

IEEE LOM. 2002. IEEE 1484.12.1-2002 Standard for Learning Object Metadata. Retrieved June, 2007, from http://ltsc.ieee.org/wg12.

Marquès, P. 2000. Elaboración de materiales formativos multimedia. Criterios de calidad. Available at http://dewey.uab.es/pmarques.

Marquès, P. 2003. Criterios de calidad para los espacios Web de interés educativo. Available at http://dewey.uab.es/pmarques/caliWeb.htm

Morales, E. M., García, F. J., Barrón, Á. 2007a. Key Issues for Learning Objects Evaluation. ICEIS'07, 9th International Conference on Enterprise Information Systems. Vol 4. pp. 149-154. INSTICC Press.

Morales, E. M., García, F. J., Barrón, Á. 2008. Research on Learning Object Management. ICEIS'08, 10th International Conference on Enterprise Information Systems. (unpublished).

Morales, E. M., García, F. J., Barrón, Á. 2006. Quality Learning Objects Management: A proposal for e-learning Systems. In ICEIS’06, 8th International Conference on Enterprise Information Systems. Artificial Intelligence and Decision Support Systems volume, pp. 312-315. INSTICC Press. ISBN 972-8865-42-2.

Morales, E. M., García, F. J., Barrón, Á. 2007b. Improving LO Quality through Instructional Design Based on an Ontological Model and Metadata. J.UCS. Journal of Universal Computer Science, vol 13, nº 7, pp. 970-979. ISSN 0948-695X.

Morales, E. M., García, F. J., Barrón, Á. 2007c. Definición pedagógica del nivel de granularidad de Objetos de Aprendizaje. EuniverSALearning’07. Actas del I Congreso Internacional de Tecnología, Formación y Comunicación. In press.

Nielsen, J. 2000. Usabilidad. Diseño de Sitios Web. Prentice Hall PTR.

Torres, L. 2005. Elementos que deben contener las páginas web educativas. Pixel-Bit: Revista de medios y educación, nº 25, pp. 75-83.

Williams, D. D. 2000. Evaluation of learning objects and instruction using learning objects. In D. A. Wiley (Ed.), The instructional use of learning objects, http://reusability.org/read/chapters/williams.doc
