Touch & Control: Interacting with Services by Touching RFID Tags

Iván Sánchez, Jukka Riekki and Mikko Pyykkönen

Dept. of Electrical and Information Engineering and Infotech Oulu, P.O. Box 4500, 90014 University of Oulu, Finland

Abstract. We suggest controlling local services using an NFC-capable mobile phone and RFID tags placed behind control icons in the local environment. When a user touches a control icon with the phone, a control event is sent to the corresponding service. The service processes the event and performs the requested action. We present a platform realizing this control approach and a prototype service that plays videos on a wall display. We compare this touch-based control with controlling the same service using the mobile phone's keypad.

1 Introduction

When RFID tags started to become popular in the 1990s, they were seen only as a way of identifying objects. RFID tags can easily be embedded in almost any product. Each tag has a unique serial number that identifies the object unambiguously. Furthermore, the tag and the reader communicate by radio, so no line of sight is needed, unlike with barcodes.

Recently, ubiquitous computing researchers have recognized the potential of RFID technology for implementing physical user interfaces [1]. In ubiquitous computing, objects in our everyday environment are associated with services. But how can a user identify these object-to-service associations? We suggest using icons to advertise the services. The icons contain visual information that allows users to recognize the associations [2]. When RFID tags are placed under the icons and the reading distance is short, service-related data can be read simply by touching an icon with an RFID reader. The service can then be commanded by sending this data to the computing device realizing the service. This can be classified as physical mobile interaction [18]: the user interacts with a mobile device, and the device interacts with objects in the environment.

To become truly ubiquitous, this approach requires a widely used device equipped with an RFID reader. The mobile phone is an obvious choice, as it also provides Internet connectivity. This connectivity enables communication with the computing devices realizing the advertised services over any network bearer that the phone supports, for example GPRS, UMTS or WiFi.

To show the potential of RFID tags in building physical user interfaces, we have built the REACHeS platform [4]. This platform turns any mobile terminal with Internet access into a universal remote control for remote services and local resources.

Sánchez I., Riekki J. and Pyykkönen M. (2008).
Touch & Control: Interacting with Services by Touching RFID Tags.
In Proceedings of the 2nd International Workshop on RFID Technology - Concepts, Applications, Challenges, pages 53-62.
DOI: 10.5220/0001731500530062
Copyright © SciTePress

In our first prototype [5], we used RFID tags to start services. Touching a tag with the mobile phone triggers a start event that is sent to REACHeS, which redirects the event to the target service. The service allocates resources and waits for commands from the mobile phone. The mobile phone's keypad is then used to command the service, for example, to stop a video.

In this paper we propose a new way of using RFID tags: the Touch & Control approach. Instead of using RFID tags only to start services, we build a complete user interface from tags. We create a touchable control panel that contains a control icon for each command, with an RFID tag placed under each icon. When a user touches an icon, the corresponding command is sent to the REACHeS platform, which redirects it to the corresponding service. For example, if a wall display is playing a video, the user can touch the pause icon on the panel to freeze the video. The Touch & Control approach is the main contribution of this paper; we are not aware of any related work presenting a similar approach.

The rest of the paper is organized as follows. Section 2 describes the REACHeS platform, and Section 3 presents the two control approaches, explaining the Touch & Control approach in depth. Section 4 presents a usability test. Section 5 discusses the approach and compares it with related work, and Section 6 concludes the paper.

2 REACHeS Platform

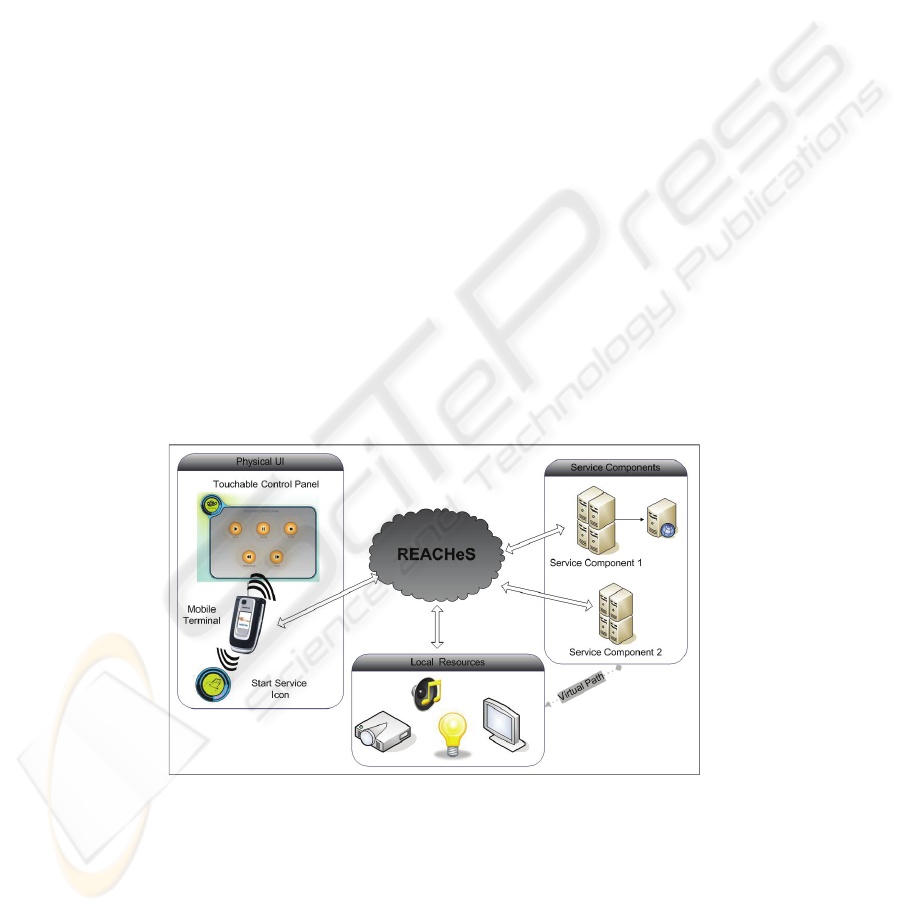

The REACHeS platform connects remote controls to the controlled services. Figure 1 shows the REACHeS architecture. Its main components are the Physical UI for controlling the services, the Local Resources that are registered in REACHeS and used by the services, the Service Components implementing the services, and the REACHeS platform itself, which interconnects all the other components.

Fig. 1. The REACHeS Architecture.

REACHeS provides an interface that services use to create user interfaces on the remote control. It also allocates local resources and allows the services to control them. At the moment, only wall displays can be controlled, but other types of resources can easily be integrated into the system.

We build physical user interfaces from control icons, RFID tags and NFC-enabled [3] mobile phones. These phones contain an RFID reader that reads tags at close range. Mobile phones send commands to REACHeS as HTTP requests, which carry the necessary data (target service, event and extra data) as request parameters. An RFID tag placed under a control icon contains the information needed to trigger the action that the icon advertises. When a user touches an icon, the data is read from the tag and a command (i.e. an event) is sent to REACHeS, with the tag data included as request parameters.
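The request construction described above can be sketched in Python as follows. This is an illustrative sketch only: the endpoint URL and the parameter names `service`, `event` and `playlist` are hypothetical assumptions, since the paper specifies only that the target service, the event and any extra data travel as HTTP request parameters.

```python
from typing import Dict, Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical endpoint; the paper does not give the REACHeS URL.
REACHES_URL = "http://reaches.example.org/reaches"


def build_command_url(service: str, event: str,
                      extra: Optional[Dict[str, str]] = None) -> str:
    """Turn tag data (target service, event, extra data) into an HTTP GET URL."""
    params = {"service": service, "event": event}
    if extra:
        params.update(extra)  # extra data travels as additional request parameters
    return REACHES_URL + "?" + urlencode(params)


# Example: a start tag for the Multimedia Player naming a (hypothetical) playlist.
url = build_command_url("MultimediaPlayer", "start", {"playlist": "demo.xml"})
print(url)
print(parse_qs(urlparse(url).query))  # the receiving side recovers the parameters
```

Because every command is just a set of key/value parameters, the same function serves the start tag and every later command tag.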

Figure 2 illustrates how REACHeS works. The sequence starts when a user touches, with a mobile phone, a tag associated with a service's start event. This event is sent to REACHeS (1), which allocates the resources needed by the service and binds them to the service (2). The event is then sent to the Service Component (3), which updates the mobile phone's GUI (4) and configures the resource (5). From then on, the user can send events to the service (6). The service performs the requested actions, updates the mobile phone's GUI (7) and sends commands to the controlled resources (8). This process continues until the user sends a close event (9). The service is then stopped (10), the resources are released (11) and the mobile phone is informed (12).
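The event flow of Figure 2 can be modelled as a small state machine. The sketch below is a simplified, hypothetical model and not the REACHeS implementation: it assumes a single wall display (the name `wall-display-1` is invented), lets at most one service hold it at a time, and collapses the HTTP exchanges into method calls. The step numbers in the comments refer to Figure 2.

```python
from typing import Dict, List


class Reaches:
    """Toy model of the REACHeS start / event / close cycle (Fig. 2)."""

    def __init__(self) -> None:
        self.free_displays: List[str] = ["wall-display-1"]  # assumed resource pool
        self.bindings: Dict[str, str] = {}                  # service -> bound display
        self.log: List[str] = []

    def handle(self, service: str, event: str) -> str:
        if event == "start":                                 # (1) start event arrives
            if not self.free_displays:
                return "error: no display available"
            display = self.free_displays.pop()               # (2) allocate and bind
            self.bindings[service] = display
            self.log.append(f"{service} configures {display}")  # (3)-(5)
            return "started"
        if service not in self.bindings:
            return "error: service not started"
        if event == "close":                                 # (9) close event
            display = self.bindings.pop(service)             # (10)-(11) stop, release
            self.free_displays.append(display)
            return "closed"                                  # (12) inform the phone
        # (6)-(8): forward the event; the service commands its bound resource.
        self.log.append(f"{service} sends '{event}' to {self.bindings[service]}")
        return f"ok: {event}"


reaches = Reaches()
for ev in ("start", "play", "close"):
    print(reaches.handle("MultimediaPlayer", ev))
```

Keeping resource binding in the platform, rather than in each service, is what lets a second service be refused cleanly while the display is in use.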

More information about the REACHeS platform and example applications can be found in our previous work [4, 5].

Fig. 2. REACHeS sequence diagram.

The main advantages of the REACHeS system are its lightweight implementation and its use of off-the-shelf components. Rather than technologies still under development, existing mature technologies are used to produce a light, flexible, robust and compatible system. HTTP is used for communication between REACHeS and the other system components (remote controls, external services and local resources), AJAX is used for communication between REACHeS and external displays, and NFC is used for creating physical user interfaces.


3 Interacting with Services

REACHeS supports two different approaches to controlling services: the mobile phone GUI-based approach and the Touch & Control approach. In both approaches, a service starts when a start tag is touched.

When a Graphical User Interface (GUI) on the mobile phone's display is used to control a service, the user operates the GUI with the mobile phone's keypad. When the user presses buttons on the GUI, events are sent as HTTP requests to REACHeS, which redirects them to the services. So, in our first approach, a user controls a service using the mobile phone's keypad.

All user actions generate similar HTTP messages. Hence, it is easy to extend the use of tags from the start command to the further commands issued after a service has started. We can build a touchable control panel that presents the available commands as control icons, with RFID tags placed under the icons. Every time a user touches one of the icons, the mobile phone sends an event to the service. This is the Touch & Control approach, our innovation and our second approach, in which a user controls a service by touching icons on a control panel.

We use a Multimedia Player service to explain these two approaches in detail. The REACHeS Multimedia Player allows the user to control a video player remotely. Videos are shown on a wall display that is registered in REACHeS. When the service starts, it loads the playlist specified in the start command. The playlist determines the locations of the files that can be played. A user can play, pause, restart and stop a video, and can also move to the next and previous files in the playlist.
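The Multimedia Player's command set can be illustrated with a minimal playlist model. The class and its behaviour are assumptions for illustration: the paper does not say whether next/previous wrap around at the ends of the playlist, so this sketch wraps, and "restart" is taken to mean replaying the current video from the beginning.

```python
from typing import List


class MultimediaPlayer:
    """Hypothetical model of play, pause, restart, stop, next and previous."""

    def __init__(self, playlist: List[str]) -> None:
        if not playlist:
            raise ValueError("playlist must not be empty")
        self.playlist = playlist   # file locations loaded from the start command
        self.index = 0
        self.state = "stopped"
        self.position = 0          # seconds into the current video (not advanced here)

    @property
    def current(self) -> str:
        return self.playlist[self.index]

    def command(self, name: str) -> str:
        if name == "play":
            self.state = "playing"
        elif name == "pause":
            if self.state == "playing":
                self.state = "paused"
        elif name == "restart":
            self.position = 0
            self.state = "playing"
        elif name == "stop":
            self.position = 0
            self.state = "stopped"
        elif name in ("next", "previous"):
            step = 1 if name == "next" else -1
            self.index = (self.index + step) % len(self.playlist)  # assumed wrap-around
            self.position = 0
            self.state = "playing"  # the GUI commands "start playing" the next/previous video
        else:
            raise ValueError(f"unknown command: {name}")
        return f"{self.state}: {self.current}"


player = MultimediaPlayer(["intro.mp4", "demo.mp4"])
for cmd in ("play", "pause", "next", "stop"):
    print(player.command(cmd))
```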

3.1 Controlling Services with the Mobile Phone GUI

The mobile phone generates the GUI according to the service's responses to the commands (i.e. to the HTTP requests sent by the phone). The service sends the mobile phone either an XHTML webpage or a text string. In the first case, the mobile phone's browser renders the webpage. In the second case, a MIDlet shows the Displayable object associated with the received string message. The user interacts with the GUI using the keypad: the arrow keys and the soft keys.
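The two kinds of responses can be distinguished on the client side roughly as follows. The sketch is hypothetical: the status strings (`OK`, `ERR_COMM`, `ERR_EVENT`) and screen names are invented for illustration, XHTML is detected with a simple prefix check, and on a real phone the screens would be Java ME `Displayable` objects rather than strings.

```python
from typing import Dict

# Hypothetical status strings and the MIDlet screens associated with them.
SCREENS: Dict[str, str] = {
    "OK": "command-sent screen",
    "ERR_COMM": "communication-error screen",
    "ERR_EVENT": "unknown-event screen",
}

XHTML_PREFIXES = ("<?xml", "<!doctype", "<html")


def handle_response(body: str) -> str:
    """Decide whether the browser or the MIDlet presents a service response."""
    text = body.strip()
    if text.lower().startswith(XHTML_PREFIXES):
        return "browser: render XHTML page"
    # Any other body is treated as a short status string for the MIDlet.
    return "midlet: show " + SCREENS.get(text, "generic message screen")


print(handle_response('<?xml version="1.0"?><html/>'))
print(handle_response("ERR_COMM"))
```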

With this approach we can adapt the mobile phone's GUI dynamically according to the responses received from the service. We use the phone only as a basic interface between the user and the service. The main service content is shown on external screens in the user's environment. The mobile phone offers the command UI and provides only basic feedback, for example, to inform the user when communication problems have been detected or when an event was not recognized by a service.

The Multimedia Player GUI is shown in Figure 3. The UI on the left shows the initial state; the UI on the right shows that play has been selected. The buttons on the GUI are bound to the commands that can be sent to the service: play the current video, pause the current video, stop the current video, start playing the next video in the playlist and start playing the previous video in the playlist. The user selects a button with the arrow keys and sends the selected command to the Multimedia Player service by pressing the Press button (at the bottom, in the middle). The GUI was developed as a MIDlet; the same interface could easily be implemented as a webpage.


Fig. 3. The GUI for the Multimedia Player.

When the user presses the Press key, the MIDlet generates an HTTP request specifying the selected command and sends it to REACHeS. When the service responds, REACHeS redirects the HTTP response to the mobile phone. The response specifies how the UI should be modified. In the case of the Multimedia Player, the UI is modified only when the user needs to be informed about an error, e.g. a communication failure.

We learnt from the first usability tests [6] that users do not pay much attention to the feedback given on the mobile phone's display; instead, they stay focused on the wall display. To improve usability, we have started to present feedback both on the mobile phone's display and on the wall display. We suggest that all services using an external display give feedback to the user on both displays.

3.2 Controlling Services with Touch & Control

One strength of REACHeS is its uniform and simple message format based on HTTP requests. Because RFID tags can store the request (i.e. command) parameters, we can create several tags for the same service. Each tag can store a different command, so when the user touches a tag, the mobile phone sends the corresponding command to REACHeS as an HTTP request. From the user's point of view, the tag is like a mechanical button: when it is touched, a command is performed and the user can see the effect, for example a video starting on a wall display.

The Touch & Control user interface is not created on a display but embedded into the users' physical environment. The UI can be placed wherever it is accessible to the users: on walls, posters, books and so on. No extra infrastructure is needed, although the controlled devices of course still need to be installed as usual. The low cost of RFID tags allows a large set of user interfaces to be created for various services. The user interface can also be personalized very easily: as the UI is not coded in any software component, only the control panel cover needs to be changed.

We have implemented a Touch & Control prototype for controlling the Multimedia Player service. A control panel contains six control icons: start/close, play, pause, stop, next and previous (see Figure 4). The start/close icon is located at the top left. The RFID tags containing the HTTP request parameters are placed under the icons. Basic feedback is shown on the mobile phone's screen, so we can inform the user about errors that prevent access to the big display, or about how the mobile phone must be used to proceed. Moreover, users can always check the status on the phone.
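Because the panel is just data, its six tags can be described as a table mapping icons to stored payloads. The sketch below assumes, hypothetically, that each tag stores its request parameters as a URL query string; the paper states only that the tags contain the HTTP request parameters, not how they are encoded. The single `start_close` event for the toggle icon and the playlist name are likewise assumptions.

```python
from typing import Dict
from urllib.parse import parse_qsl

# Hypothetical payloads written to the six tags of the Multimedia Player panel.
# Only the icon set comes from the paper; encoding and names are assumptions.
CONTROL_PANEL: Dict[str, str] = {
    "start/close": "service=MultimediaPlayer&event=start_close&playlist=demo.xml",
    "play": "service=MultimediaPlayer&event=play",
    "pause": "service=MultimediaPlayer&event=pause",
    "stop": "service=MultimediaPlayer&event=stop",
    "next": "service=MultimediaPlayer&event=next",
    "previous": "service=MultimediaPlayer&event=previous",
}


def decode_tag(payload: str) -> Dict[str, str]:
    """Recover the request parameters stored on a tag."""
    params = dict(parse_qsl(payload, strict_parsing=True))
    if "service" not in params or "event" not in params:
        raise ValueError("tag payload lacks a service or event parameter")
    return params


for icon, payload in CONTROL_PANEL.items():
    print(f"{icon:12s} -> {decode_tag(payload)}")
```

Under this assumption, personalizing the panel means printing a new cover over the same tags (or rewriting the payloads); no phone software changes.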

Fig. 4. The Multimedia Player control panel.

Figure 5 shows some of the feedback shown on the mobile phone's display. On the left, the user has started the application but has not yet sent any commands. On the right, the user has already sent a play command, and the UI informs the user that he/she must touch another icon to send a new command.

Fig. 5. Feedback given on the mobile phone when using Touch & Control.

It is important that the user is clearly informed when a tag has been read correctly. A user may, for example, touch a tag with the wrong part of the mobile phone or touch it too quickly. When this happens, the mobile phone fails to read the tag, and so the service does not receive any command. We use vibration to inform the user: when a tag has been read successfully, the mobile phone vibrates.
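The read-confirmation logic can be sketched as a single handler. The callbacks `vibrate` and `send` are hypothetical stand-ins for the phone's vibration API and the HTTP request to REACHeS; a failed read is modelled as a missing payload, in which case nothing is sent and the phone stays silent.

```python
from typing import Callable, List, Optional


def on_touch(payload: Optional[str],
             vibrate: Callable[[], None],
             send: Callable[[str], str]) -> Optional[str]:
    """Handle one touch of the phone on a control icon.

    `payload` is None when the read failed (e.g. wrong part of the phone,
    touch too short). Then no command is sent and the phone does not vibrate,
    which tells the user to touch the icon again.
    """
    if payload is None:
        return None
    vibrate()             # confirm the successful read to the user
    return send(payload)  # forward the command and return the service's response


# Usage with stand-ins for the phone APIs:
calls: List[str] = []


def fake_vibrate() -> None:
    calls.append("vibrate")


def fake_send(payload: str) -> str:
    calls.append("send " + payload)
    return "OK"


print(on_touch("event=play", fake_vibrate, fake_send), calls)
```

Vibrating before the network round trip gives the user immediate confirmation even over a slow GPRS link.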


3.3 Comparison

Touch & Control has several advantages over mobile phone GUI-based control. First, it allows the user to focus on the main screen. There is no need to study the mobile phone's screen or to use the keypad while the service is being controlled; the user just needs to place the mobile phone over the correct control icon. Feedback is given on the big display, where, according to our previous experiments, users focus their attention.

Second, the service does not need to manage a service-specific user interface on the phone. Instead, a general UI suffices (Figure 5), and the service only has to inform the user about errors and perhaps about the latest received command; the service-specific user interface is embedded in the environment. From the software developer's perspective, when the user interface is created on the mobile phone's display, a separate MIDlet (or set of webpages) is needed for each service. With Touch & Control, a single MIDlet is in charge of reading tags and sending commands to the services. Only the contents of the responses received by the MIDlet are service-specific; their format is general, so a single general MIDlet can present the feedback messages of all services to the user.

Third, Touch & Control allows big icons to be used, which improves usability, particularly for elderly and visually impaired people. Icons might even contain Braille representations for blind people in addition to the visual symbol. The UI can also be changed easily, for example by replacing a poster presenting the control icons. Finally, Touch & Control does not even require a mobile phone or any other personal terminal. The minimum requirement is an RFID reader able to communicate with an IP network. For example, a wireless reader communicating with a local access point over 6LoWPAN [7] or Bluetooth [8] could be used.

However, Touch & Control also has some disadvantages. First, the UI cannot be changed dynamically, for example depending on the state of the service. Hence, a careful analysis is needed when the UI is designed, to guarantee that it contains all the necessary items (control icons). Second, the interaction is limited to touching: additional displays and input devices are needed to input text or to select elements from a list. However, we do not see this as a significant deficiency, because the UI is used only as a remote control; more complex user interfaces can be built from the locally available I/O devices.

4 Usability Test

We have carried out a preliminary usability test to measure the usability of the control methods proposed in this article. The test was performed by 10 participants aged between 23 and 44 years. All of them felt comfortable or very comfortable using a mobile phone, and 7 of them had used RFID technology at least once before.

The participants were asked to use the Multimedia Player with both control

methods. We did not impose a time limit but asked the users to stop using the

application when they feel they understand how it works. Basic instructions to start

and control the Multimedia Player were given in a small poster. After testing, the

users filled in a questionnaire. We only present the main results here; further analysis

59

and more comprehensive usability tests will be presented in future publications. In the

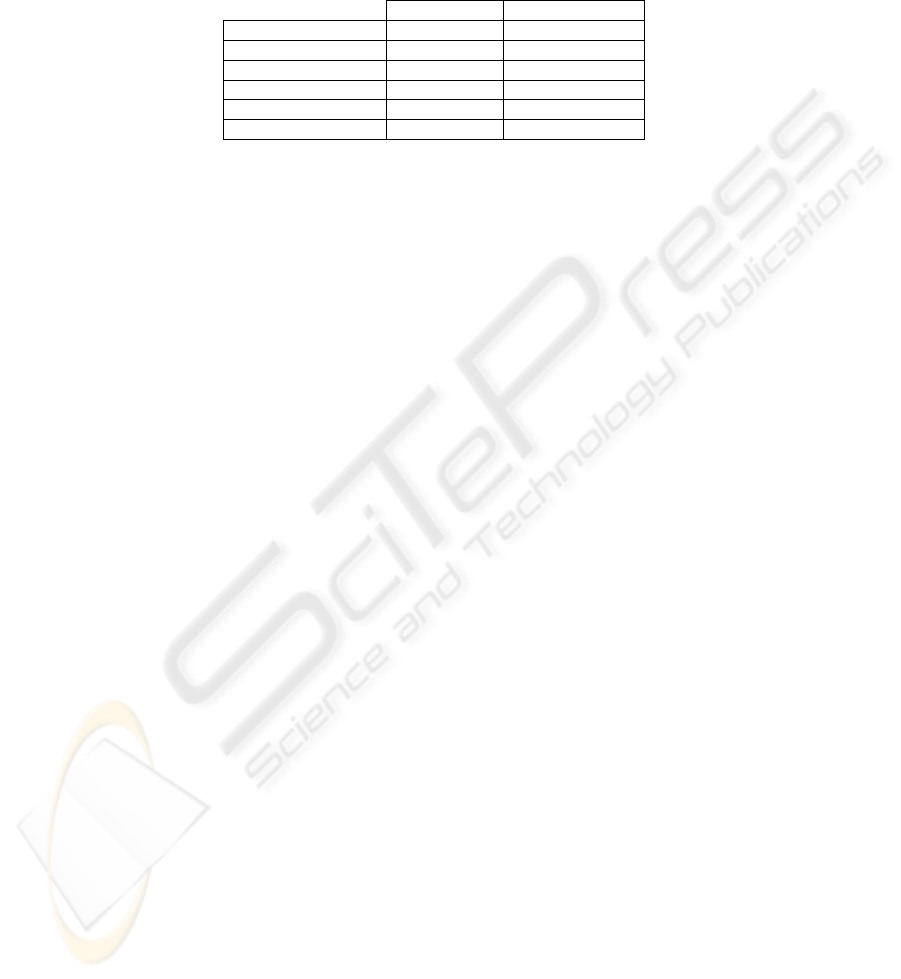

questionnaire the participants had to evaluate the methods separately. Table 1 lists

average values of the grades given by the users, on scale [1, 10].

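Table 1. Grades given by the users (averages, scale 1-10).

| Criterion | Phone GUI | Touch & Control |
|---|---|---|
| Reliability | 8.1 | 8.6 |
| Easiness | 8.7 | 9.4 |
| Speed | 6.8 | 7.6 |
| Intuitiveness | 8.4 | 9.2 |
| Cognitive load | 8.2 | 8.8 |
| Final grade | 8.35 | 8.6 |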
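The comparison in Table 1 can be checked with a few lines of arithmetic. The sketch below simply recomputes the per-criterion difference between the two methods from the table values, treating a higher grade as better as the text does, and identifies the lowest-rated criterion for each method.

```python
# Average grades from Table 1 as (Phone GUI, Touch & Control), scale 1-10.
GRADES = {
    "Reliability": (8.1, 8.6),
    "Easiness": (8.7, 9.4),
    "Speed": (6.8, 7.6),
    "Intuitiveness": (8.4, 9.2),
    "Cognitive load": (8.2, 8.8),
    "Final grade": (8.35, 8.6),
}

# Per-criterion advantage of Touch & Control (positive = higher grade).
diff = {name: round(tc - gui, 2) for name, (gui, tc) in GRADES.items()}
for name, d in diff.items():
    print(f"{name:15s} {d:+.2f}")

# Lowest-rated criterion for each method.
lowest_gui = min(GRADES, key=lambda name: GRADES[name][0])
lowest_tc = min(GRADES, key=lambda name: GRADES[name][1])
print("lowest:", lowest_gui, "/", lowest_tc)
```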

As Table 1 shows, Touch & Control is rated better in all analyzed aspects, getting a grade above 9 in easiness and intuitiveness. However, the grades obtained by the phone GUI-based method are also quite high, which tells us that both methods are suitable for controlling resources remotely. Speed was given the lowest grade for both methods. This is due to the use of a GPRS connection to communicate with the servers. A WiFi connection would solve this problem, but unfortunately there are not yet mobile phones on the market with both NFC and WiFi capabilities.

We also asked the users to suggest a good position for the control panel. The majority suggested that the panel should be in the field of view while the display is watched. Some participants also suggested that the panel could be movable, so that users could adapt its position to their preferences.

During the tests we observed that the users paid no attention at all to the messages that appeared on the mobile phone's screen when the Touch & Control method was used. In general, the users stated in the questionnaire that the information provided on the mobile phone was almost useless, as they could see whether or not the system worked by looking at the wall display. However, they valued very positively the phone vibrating to indicate a correct read when a tag is touched.

5 Discussion and Future Work

We presented a novel approach for controlling the services available in a user's local environment. In this Touch & Control approach, control panels are placed in the environment and a user controls a service by touching control icons on a panel. Although a GUI shown on the mobile phone's display is a valid approach, the small size of the device poses challenges to usability. Elderly and visually impaired people in particular can find the mobile phone's display too small. Furthermore, the keypad may also be difficult for them to use, as Häikiö et al. suggest [17]. Control panels have no such size restrictions, but on the other hand they cannot be adapted dynamically.

Touch & Control introduces a new paradigm in the use of RFID tags for physical UIs. We do not use tags only to request services, as in [1]; instead, we create the whole UI with the tags. The mobile phone acts only as a command transmitter. Feedback can be shown on the phone's display, but a better alternative is to show it in the environment. UIs created using this approach are highly configurable and can be fully integrated into the environment. Although we have used only mobile phones so far, any mobile device with Internet access and an RFID reader can be used as a remote control.

Our REACHeS platform facilitates building remote controls for services on the Internet. Using mobile devices as universal control devices has been reported earlier [9, 10, 11]. Raghunath et al. [12] propose controlling external displays using mobile phones, and Broll et al. [13] present a system that allows web services to manipulate mobile phone displays. However, none of them integrates remote controls, external services and local resources into a single system as we do.

Some related work proposes tagging objects to create a physical user interface [14, 15, 16]. However, we are not aware of other work on sending commands to services just by touching tags. Häikiö et al. propose and evaluate a touch-based UI for elderly users [17]. Their system lets elderly people order food by touching a menu card equipped with RFID tags. This menu card is a kind of control panel, but its visual appearance is not emphasized as in our case. The main difference is that in their system the life cycle of a service consists of a single touch, whereas in Touch & Control the service accepts several other commands in addition to the one starting it.

Our system uses mature existing technologies. This factor and the good acceptance in the usability test suggest that Touch & Control could already be used in commercial applications and not only in laboratory prototypes. Further work is needed in icon design, to guarantee that users interpret the icons correctly. Another important issue to study is the placement of the control panels. For the Multimedia Player, the main requirement is that the control panel should be near the wall display, in the user's visual field. Furthermore, the feedback needs to be clear and consistent. We will tackle these challenges by implementing more services and control panels and testing them in real-life settings with genuine end users. Moreover, we will extend the usability tests to compare Touch & Control with other approaches, such as gesture sensors.

6 Conclusions

In this paper we gave an overview of REACHeS, a platform for controlling remote services using wireless handhelds. We introduced Touch & Control, a new paradigm for creating physical user interfaces to control locally available services. This approach is inexpensive and easy to implement and can hence be embedded nearly anywhere. Compared with our previous approach of using the mobile phone's screen to present the UI, the Touch & Control approach has several advantages. The preliminary usability test indicates that Touch & Control is easy to use.

References

1. Riekki, J., Salminen, T. and Alakärppä, I. (2006) Requesting Pervasive Services by Touching RFID Tags. IEEE Pervasive Computing, vol. 5, no. 1 (Jan.-Mar. 2006), pp. 40-46.

2. Riekki, J. (2007) RFID and Smart Spaces. International Journal of Internet Protocol Technology, vol. 2, no. 3-4, pp. 143-152.


3. NFC Forum (2006) NFC Technology Architecture Specification.

4. Riekki, J., Sánchez, I. and Pyykkönen, M. (2008) Universal Remote Control for the Smart World. In Proceedings of the 5th International Conference on Ubiquitous Intelligence and Computing (UIC08), Oslo, Norway, June 23-25, 2008.

5. Sánchez, I., Cortés, M. and Riekki, J. (2007) Controlling Multimedia Players using NFC Enabled Mobile Phones. In Proceedings of the 6th International Conference on Mobile and Ubiquitous Multimedia (MUM07), Oulu, Finland, December 12-14, 2007.

6. Pirttikangas, S., Sánchez, I., Kauppila, M. and Riekki, J. (2008) Comparison of Touch, Mobile Phone, and Gesture Based Controlling of Browser Applications on a Large Screen. In Adjunct Proceedings of PERVASIVE 2008, Sydney, Australia, May 19-22, 2008.

7. Seppänen, S., Ashraf, M.I. and Riekki, J. (2007) RFID Based Solution for Collecting Information and Activating Services in a Hospital Environment. In Proceedings of the 2nd International Symposium on Medical Information and Communication Technology (ISMICT'07), Oulu, Finland, December 11-13, 2007.

8. IDBlue: An efficient way to add RFID reader/encoder to Bluetooth PDA and mobile phones (29.2.2008). http://www.baracoda.com/baracoda/products/p_21.html

9. Roduner, C. (2006) The Mobile Phone as a Universal Interaction Device – Are There Limits? In Proceedings of the Workshop on Mobile Interaction with the Real World, 8th International Conference on Human Computer Interaction with Mobile Devices and Services, Espoo, Finland, 2006.

10. Ballagas, R., Borchers, J., Rohs, M. and Sheridan, J.G. (2006) The Smart Phone: A Ubiquitous Input Device. IEEE Pervasive Computing, vol. 5, no. 1 (Jan.-Mar. 2006), pp. 70-77.

11. Nichols, J. and Myers, B. (2006) Controlling Home and Office Appliances with Smart Phones. IEEE Pervasive Computing, vol. 5, no. 3 (July-Sept. 2006), pp. 60-67.

12. Raghunath, M., Ravi, N., Rosu, M.-C. and Narayanaswami, C. (2006) Inverted Browser: A Novel Approach towards Display Symbiosis. In Proceedings of the Fourth Annual IEEE International Conference on Pervasive Computing and Communications (PerCom 2006), Pisa, Italy. IEEE Computer Society, pp. 71-76.

13. Broll, G., Siorpaes, S., Rukzio, E., Paolucci, M., Haamard, J., Wagner, M. and Schmidt, A. (2007) Supporting Mobile Services Usage through Physical Mobile Interaction. In Proceedings of the 5th IEEE International Conference on Pervasive Computing and Communications (PerCom 2007), White Plains, NY, USA. IEEE Computer Society, pp. 262-271.

14. Want, R., Fishkin, K.P., Gujar, A. and Harrison, B.L. (1999) Bridging Physical and Virtual Worlds with Electronic Tags. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, Pennsylvania, USA, pp. 370-377.

15. Ailisto, H., Pohjanheimo, L., Välkkynen, P., Strömmer, E., Tuomisto, T. and Korhonen, I. (2006) Bridging the Physical and Virtual Worlds by Local Connectivity-Based Physical Selection. Personal and Ubiquitous Computing, vol. 10, no. 6, pp. 333-344.

16. Tungare, M., Pyla, P.S., Bafna, P., Glina, V., Zheng, W., Yu, X., Balli, U. and Harrison, S. (2006) Embodied Data Objects: Tangible Interfaces to Information Appliances. In Proceedings of the 44th ACM Southeast Conference (ACM SE'06), Florida, USA, March 10-12, 2006, pp. 359-364.

17. Häikiö, J., Isomursu, M., Matinmikko, T., Wallin, A., Ailisto, H. and Huomo, T. (2007) Touch-Based User Interface for Elderly Users. In Proceedings of MobileHCI 2007, Singapore, September 9-12, 2007.

18. Rukzio, E., Broll, G., Leichtenstern, K. and Schmidt, A. (2007) Mobile Interaction with the Real World: An Evaluation and Comparison of Physical Mobile Interaction Techniques. In Proceedings of the European Conference on Ambient Intelligence (AmI-07), Darmstadt, Germany, November 7-10, 2007.
