AN ARCHITECTURE FOR DYNAMIC INVARIANT GENERATION

IN WS-BPEL WEB SERVICE COMPOSITIONS

M. Palomo Duarte, A. García Domínguez and I. Medina Bulo

Department of Computer Languages and Systems, E.S.I., University of Cádiz

C/ Chile s/n. 11002 Cádiz, Spain

Keywords:

Web Services, service composition, WS-BPEL, white-box testing, dynamic invariant generation.

Abstract:

Web services related technologies (especially web services compositions) now play a key role in e-Business

and its future. Languages to compose web services, such as the OASIS WS-BPEL 2.0 standard, open a

vast new field for programming in the large. But they also present a challenge for traditional white-box

testing, due to the inclusion of specific instructions for concurrency, fault compensation or dynamic service

discovery and invocation. Automatic invariant generation has proved to be a successful white-box testing-

based technique to test and improve the quality of traditional imperative programs. This paper proposes a

new architecture to create a framework that dynamically generates likely invariants from the execution of web

services compositions in WS-BPEL to support white-box testing.

1 INTRODUCTION

Web Services (WS) and Service Oriented Architec-

tures (SOA) are, according to many authors, one of

the keys to understanding e-Business in the near future (Heffner and Fulton, 2007). But as isolated

services by themselves are not usually what cus-

tomers need, languages to program in the large com-

posing WS into more complex ones, like the OA-

SIS standard WS-BPEL 2.0 (OASIS, 2007), are be-

coming more and more important for e-Business

providers (Curbera et al., 2003).

There are two approaches to program test-

ing (Bertolino and Marchetti, 2005): black-box test-

ing is only concerned about program inputs and out-

puts, while white-box testing takes into account the

internal logic of the program. The latter produces

more refined results, but it requires access to the

source code. However, WS-BPEL presents a chal-

lenge for traditional white-box testing, due to the in-

clusion of WS-specific instructions to handle concur-

rency, fault compensation or dynamic service discov-

ery and invocation (Bucchiarone et al., 2007).

Automatic invariant generation (Ernst et al., 2001)

has proved to be a successful technique to assist in

white-box testing of programs written in traditional

imperative languages. Note that, throughout this work, to be consistent with related work (Ernst et al., 2001), we use invariant and likely invariant in their broadest sense: properties which hold at a certain program point always or throughout a specified test suite, respectively. We consider that dynamically generating such

invariants, backed by a good test suite, can become an

interesting help in WS-BPEL white-box testing.

We propose a new architecture for a framework to

dynamically generate likely invariants of a WS-BPEL

composition from actual execution logs of a test suite

running on a WS-BPEL engine. These invariants can

be an interesting aid for white-box testing the compo-

sition.

The rest of the paper is organized as follows. First,

we explain the particularities of WS compositions

built with WS-BPEL. The next section shows how dynamic generation of invariants can successfully help in

WS composition white-box testing. In the following

main section we introduce our proposed architecture,

discuss how to solve some technical problems that

might arise during its implementation, and show an

example of the expected results. Finally, we compare

our proposal with other alternatives and present some

conclusions and an outline of our future work.


Palomo Duarte M., García Domínguez A. and Medina Bulo I. (2008).

AN ARCHITECTURE FOR DYNAMIC INVARIANT GENERATION IN WS-BPEL WEB SERVICE COMPOSITIONS.

In Proceedings of the International Conference on e-Business, pages 37-44

DOI: 10.5220/0001908800370044

Copyright © SciTePress

2 WS COMPOSITIONS AND

WS-BPEL

The need to compose several WS to offer higher level and more complex services that suit customers' requirements was detected and satisfied by the leading companies in the IT industry with the non-standard specification BPEL4WS, which was submitted to OASIS in 2003 (OASIS, 2003). Soon after, OASIS created the WS Business Process Execution Language Technical Committee to work on its standardization, releasing the first standard specification, WS-BPEL 2.0, in 2007 (OASIS, 2007).

Standardization has been an important milestone for the wide adoption of WS-BPEL by leading SOA tools, and it is a key interoperability feature offered by many e-Business systems (Domínguez Jiménez et al., 2007). This way, companies providing services can take advantage of the new possibilities that this programming-in-the-large technology allows for: concurrency, fault recovery and compensation, or dynamic composition using loosely coupled services from different providers selected through several criteria (such as cost, reliability or response time).

WS-BPEL is an XML-based language using XML

Schema as its type system. WS-BPEL specifies ser-

vice composition logic through XML tags defining

activities like assignments, loops, message passing or

synchronization. It is independent of the implemen-

tation and platform of both the service composition

system and the different services used in it. There

are other W3C-standardized XML-related technolo-

gies (W3C, 2008) at its foundation:

XPath. allows us to query XML documents in a flex-

ible and concise way. Although the latest version

of the language is XPath 2.0, WS-BPEL uses by

default XPath 1.0.

SOAP. is an information exchange protocol com-

monly used in WS. It is platform independent,

flexible and easy to extend.

WSDL. is a language for WS interface description, detailing the structure of every message to be exchanged, the interfaces and locations of the services offered, their bindings to specific protocols, etc.

There are several technologies that can extend WS-

BPEL. They are usually referred to as the WS-

Stack (Papazoglou, 2007). One of the most interesting

is UDDI, a protocol that allows maintaining reposito-

ries of WSDL specifications, easing the dynamic dis-

covery and invocation of WS and the access to their

specifications. UDDI 3.0 is an OASIS Standard (OA-

SIS, 2008).

But the inherent dynamic nature of these technolo-

gies also implies new challenges for program testing,

as most traditional white-box testing methodologies

cannot be directly applied to this language.

3 WS-BPEL WHITE-BOX

TESTING

WS composition testing is one of the challenges for

its full adoption in industry in the forthcoming years.

The dynamic nature of WS poses a challenge for test-

ing, having to cope with aspects like run-time discov-

ery and invocation of new services, concurrency and

fault compensation.

Little research has been done on applying white-box testing directly on WS-BPEL code. The main approaches (Bucchiarone et al., 2007) create simulation

models in testing-oriented environments. But simu-

lating a WS-BPEL engine is very complex, as there

is a wide array of non-trivial features to be imple-

mented, such as fault compensation, concurrency or

event handlers. In addition, it is sometimes necessary

to translate the code of the WS-BPEL composition to

a second language to check its internal logic.

In case any of these features is not properly implemented, compositions would not be accurately tested. So we consider that this is an error-prone process, as it is not based on the actual execution of the WS-BPEL code in a real environment, that is, a WS-BPEL engine invoking actual services.

Therefore, we propose using dynamically gener-

ated invariants from actual execution traces as a more

suitable approach.

3.1 Using Invariants

An invariant is a property that holds at a certain

point in a program. Classical examples are function

pre-conditions and post-conditions, that is, assertions

which hold at the beginning and end of a sequence of

statements. There are also loop invariants, which are

properties that hold before every iteration and after

the last one.

Let us illustrate these concepts with a simple ex-

ample. Suppose that we want to sum all the integers

from 0 to a given natural n. In this case, we could

define this simple algorithm:

1. r ← 0

2. From i ← 1 to n:

r ← i + r

3. Return r

ICE-B 2008 - International Conference on e-Business


This algorithm has the pre-condition n > 0 in step 1, as n is natural by definition. Looking at the loop in step 2, we can tell that the loop invariant \( r = \sum_{j=0}^{i-1} j \) holds before every iteration. Since in step 3 we have exited the loop, we will have i = n + 1, which we can substitute in the previous loop invariant to formulate the post-condition for step 3 and the whole algorithm, \( r = \sum_{j=0}^{n} j \). From this post-condition we can tell that the algorithm really does what it is intended to do.
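The reasoning above can also be checked mechanically. The following Python sketch is only an illustration (the function name `sum_up_to` is our own choice): it encodes the pre-condition, the loop invariant and the post-condition of the algorithm as assertions, so that any violation would surface at run time.

```python
def sum_up_to(n: int) -> int:
    """Sum the integers from 0 to n, asserting the invariants along the way."""
    # Pre-condition of step 1, as stated in the text.
    assert n > 0, "pre-condition violated: n > 0"
    r = 0
    i = 1
    while i <= n:
        # Loop invariant: before every iteration, r = 0 + 1 + ... + (i - 1).
        assert r == sum(range(i)), "loop invariant violated"
        r = i + r
        i += 1
    # On exit i = n + 1, so the loop invariant turns into the post-condition.
    assert i == n + 1
    assert r == sum(range(n + 1)), "post-condition violated"
    return r
```

Calling `sum_up_to(4)` goes through four iterations and returns 10 without tripping any assertion.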

Manually generated invariants have been success-

fully used as above to prove the correctness of many

popular algorithms to this day. Nonetheless, their

generation can be automated. Automatic invari-

ant generation has proved to be a successful tech-

nique to test and improve programs written in tra-

ditional structured and object-oriented programming

languages (Ernst et al., 2001).

Invariants generated from a program have many

applications:

Debugging. An unexpected invariant can highlight a

bug in the code which otherwise might have been

missed altogether. This includes, for instance,

function calls with invalid or unexpected param-

eter values.

Program Upgrade Support. Invariants can help de-

velopers while upgrading a program. After check-

ing which invariants should hold in the next ver-

sion of the program and which should not, they

could compare the invariants of the new version

with those of the original one. Any unexpected

difference would indicate that a new bug had been

introduced.

Documentation. Important invariants can be added

to the documentation of the program, so develop-

ers will be able to read them while working on it.

Verification. We can compare the specification of the program with the actual invariants obtained to see whether they satisfy it.

Test Suite Improvement. A wrong likely invariant that has been dynamically generated, as we will see in the next section, can reveal a deficiency in the test suite used to infer it.

3.2 Automatic Invariant Generation

Basically, there are two approaches to generating invariants automatically: static and dynamic.

Static invariant generators (Bjørner et al., 1997) are most common: invariants are deduced statically, that is, without running the program. To deduce invariants, the source code of the program is analyzed (especially its data and control flows). On the one hand, invariants generated this way are always correct. On the other hand, their number and quality are limited by the inherent limitations of the formal machinery which analyzes the code, especially in unusual languages like WS-BPEL.

Conversely, a dynamic invariant generator (Ernst

et al., 2001) is a system that reports likely program

invariants observed on a set of execution log files. It

includes formal machinery to analyze the information

in the logs about the values held by variables at differ-

ent program points, such as the entry and exit points

for functions or loops.

The process to generate dynamic invariants is di-

vided into three main steps:

1. An instrumentation step where the original pro-

gram is set up so that, during the later execution

step, it generates the execution log files. This step

is called instrumentation step because the usual

way to do it is by adding, at the desired program

points, logging instructions. These instructions

write to a file the name and value of the variables

that we want to observe at those points and other-

wise have no effect on the control and data flow

of the process. Sometimes it is also necessary to

modify the environment where the program is go-

ing to be executed.

2. An execution step in which the instrumented pro-

gram will be executed under a test suite. During

each test case an execution log is generated with

all the necessary data and program flow informa-

tion for later processing.

3. An analysis step where formal methods tech-

niques are applied to obtain invariants of the vari-

ables logged at the different program points.

Thus, the dynamic generation of invariants does not

analyze the code, but a set of samples of the values

held by variables in certain points of the program.

Wrong invariants do not necessarily mean bugs in the

tested program, but rather they might come from an

incomplete test suite. If the input x is a signed integer

and we only used positive values as test inputs, we

will probably obtain the invariant x > 0 at some program point. Upon inspection, we would notice that invariant and improve our test suite to include cases with x < 0.
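The three steps can be mimicked on a toy scale. The sketch below is a minimal illustration in Python, not the formal machinery of an actual invariant generator: the program `instrumented_program`, the candidate properties in `CANDIDATES` and the test inputs are all assumptions made up for this example.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical candidate properties over a single integer variable x.
CANDIDATES: Dict[str, Callable[[int], bool]] = {
    "x > 0": lambda x: x > 0,
    "x >= 0": lambda x: x >= 0,
    "x != 0": lambda x: x != 0,
    "x is even": lambda x: x % 2 == 0,
}

def instrumented_program(x: int, log: List[int]) -> int:
    """Instrumentation step: the original logic plus one logging instruction."""
    log.append(x)          # record x at the entry point; no other side effect
    return abs(x) * 2      # stand-in for the original program logic

def execute(test_inputs: Iterable[int]) -> List[int]:
    """Execution step: run every test case and collect the execution log."""
    log: List[int] = []
    for x in test_inputs:
        instrumented_program(x, log)
    return log

def likely_invariants(log: List[int]) -> List[str]:
    """Analysis step: keep the candidates that no logged sample falsifies."""
    return [name for name, holds in CANDIDATES.items()
            if all(holds(x) for x in log)]

positive_only = execute([1, 5, 12, 7])
extended = execute([1, 5, 12, 7, -3, 0])

print(likely_invariants(positive_only))
print(likely_invariants(extended))
```

With the positive-only suite, `x > 0`, `x >= 0` and `x != 0` all survive as likely invariants. Once the inputs -3 and 0 are added, every candidate is falsified, which shows that the earlier result reflected the test suite rather than the program.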

3.3 Dynamic Invariant Generation in

WS-BPEL Compositions

We consider the dynamic generation of invariants to

be a suitable technique to support WS-BPEL compo-

sition white-box testing. If we use a good test suite,

all of the complex internal logic of our BPEL compo-

sition (compensation, dynamic discovery of services,

etc.) will be reflected in the log files of the different


executions, and the generator will infer significant in-

variants.

Generally, due to its dynamic nature, the more

logs we provide the generator, the better results it will

produce. In case we obtain seemingly false invariants

in a first run, we will be able to certify if they were

due to an incomplete test suite or to actual bugs in a

second run with an improved test suite including ad-

ditional suitable test cases.

Another important benefit is that all the informa-

tion in the logs is collected from direct execution of

the composition code, using no intermediate language

of any sort. This way we avoid errors that could arise

in any translation of the WS-BPEL code or the simu-

lation of the real-world environment, that is, the WS-

BPEL engine and invoked services.

An important problem to solve is that, usually, not all external services will be available for testing, due to access restrictions, reliability issues or resource constraints. It could also be that we want to define several what-if scenarios with specific responses from several external WS. Thus, we will also have to allow for replacing some external services with mockups, that is, dummy services which will reply to our requests with predefined messages.

4 PROPOSED ARCHITECTURE

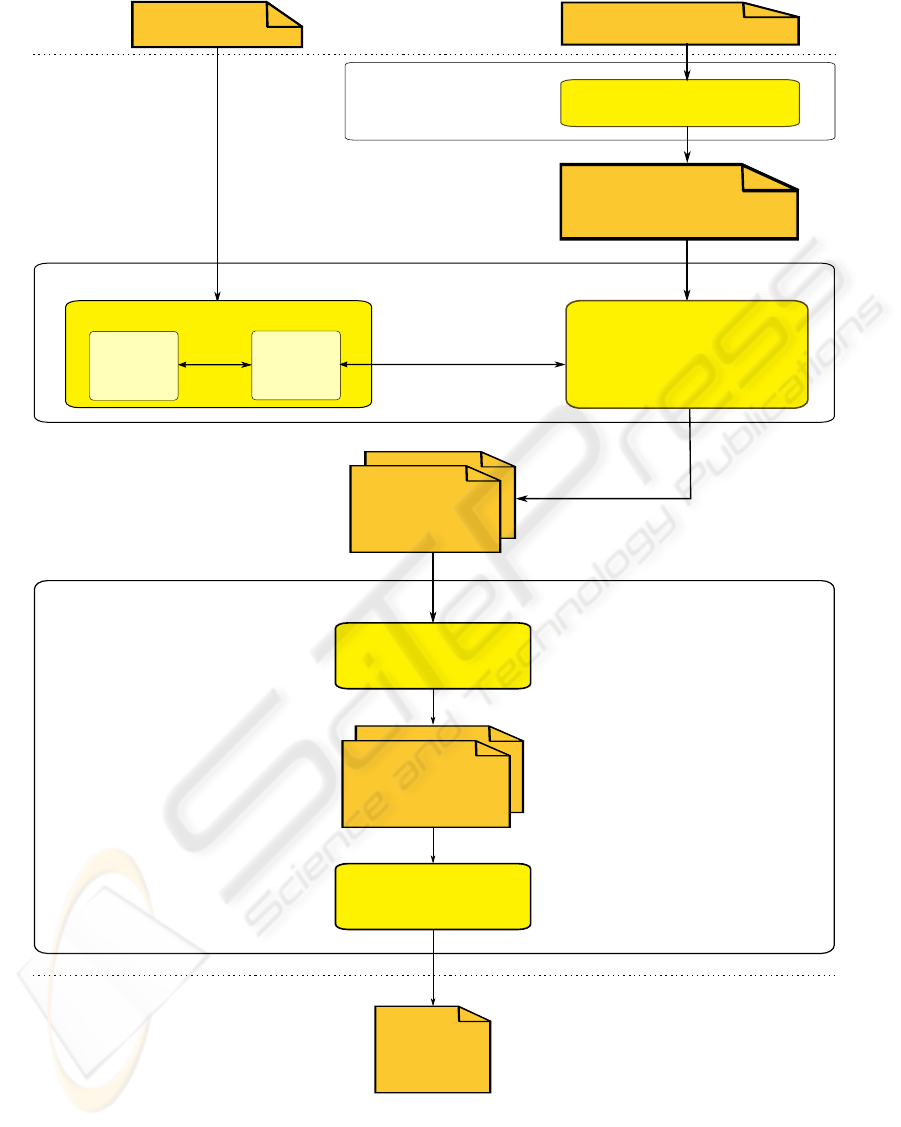

An outline of our proposed architecture for a WS-BPEL dynamic invariant generator is shown in Figure 1.

We are using a classical pipeline-based architec-

ture which has, in general terms, three coarse-grained

steps corresponding to the three general steps of the

dynamic invariant generation process which we de-

scribed above.

We detail further their WS-BPEL specific issues

below.

4.1 Instrumentation Step

This is the step where we take the original program

and perform the necessary changes on it in order to

produce the information that the invariant generator

needs.

In our case, we will take the original WS-BPEL

process composition specification with its dependen-

cies and automatically instrument it. If necessary we

will create any additional files needed for its execu-

tion in the specific WS-BPEL engine which we will

be using.

Additional logic to generate the logs that we need

can be added in three ways:

1. Firstly, we could modify the execution environ-

ment itself. We could use an existing open-source

WS-BPEL engine and modify it in order to pro-

duce the log files that we need for any process ex-

ecuted in it.

The degree of effort involved would depend on the

logging capabilities already implemented in the

engine. Most engines include facilities to track

process execution flow, but tracking variable val-

ues is not widely implemented. Of course, modi-

fying the code would take a considerable effort.

2. Secondly, we could modify the source code of the

WS-BPEL composition to be tested, adding calls

to certain logging instructions at the desired program points. These instructions would not change the behavior of the process: they would only inspect and log variable values transparently.

In a similar way to the previous method, we might

be able to use any existing engine-specific WS-

BPEL logging extension. It could append mes-

sages to a log file, access a database or invoke an

external logging web service, for instance.

Using this approach we would have to instrument

the two different languages used by the WS-BPEL

standard: the WS-BPEL language itself, which is

XML-based, and the XPath language that is used

to construct complex expressions for conditions,

assignments, and so on.

3. And finally, we could implement our own logging

XPath extension functions.

These new functions would be called from an

instrumented version of the original WS-BPEL

composition source code, and included in exter-

nal modules which are reasonably easy to auto-

matically plug into most WS-BPEL engines. This

is a hybrid approach, as both the composition and

the engine need to be modified.

It is quite likely that using both internal (that is,

inside the WS-BPEL engine) and external mod-

ifications (using new XPath extension functions)

will allow us to obtain more detailed logs with less

effort than any of the previous approaches.
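As a rough illustration of the second approach, the Python sketch below inserts a logging activity after every `assign` that is a direct child of a `sequence`. The WS-BPEL 2.0 executable process namespace and the `extensionActivity` element come from the standard; the `log:logVariables` element and its namespace are hypothetical placeholders for whatever engine-specific logging extension is available. A real instrumenter would also have to declare the extension, cover activities outside sequences and instrument the XPath expressions.

```python
import xml.etree.ElementTree as ET

BPEL_NS = "http://docs.oasis-open.org/wsbpel/2.0/process/executable"
LOG_NS = "urn:example:invariant-logging"  # hypothetical extension namespace

def instrument(bpel_source: str) -> str:
    """Return the process with a logging activity after each <assign> in a <sequence>."""
    ET.register_namespace("bpel", BPEL_NS)
    ET.register_namespace("log", LOG_NS)
    root = ET.fromstring(bpel_source)
    # Materialize the list first so that editing children is safe.
    for sequence in list(root.iter(f"{{{BPEL_NS}}}sequence")):
        instrumented = []
        for activity in list(sequence):
            instrumented.append(activity)
            if activity.tag == f"{{{BPEL_NS}}}assign":
                wrapper = ET.Element(f"{{{BPEL_NS}}}extensionActivity")
                # Hypothetical logging element naming the program point.
                ET.SubElement(wrapper, f"{{{LOG_NS}}}logVariables",
                              {"point": activity.get("name", "unnamed")})
                instrumented.append(wrapper)
        sequence[:] = instrumented
    return ET.tostring(root, encoding="unicode")

# Heavily simplified process used only to exercise the sketch.
SAMPLE = f"""<process xmlns="{BPEL_NS}" name="demo">
  <sequence>
    <assign name="setAmount"/>
    <reply name="answer"/>
  </sequence>
</process>"""

print(instrument(SAMPLE))
```

The original activities are left untouched and keep their order, so the control and data flow of the process stay the same; only the extra logging activities are new.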

We also take into account the fact that there are

many WS-BPEL engines currently available. Engine-

specific files for the deployment of the composition

under the selected engine may have to be generated

automatically on the fly, to abstract the user from the

technical details involved.

4.2 Execution Step

In this step, we will take the previously instrumented program and run it under a test suite to obtain the logs and other information that we need for the next step. Specifically, we will take our instrumented WS-BPEL composition and run it under each test case detailed in the test suite specification. Each of them will define the initial input message that will cause a new instance of the WS-BPEL process to be started, as well as the outputs of the external services required by the WS-BPEL process that we wish to model as mockups.

Figure 1: Proposed architecture for dynamic invariant generation. [Diagram labels: Instrumentation step (WS-BPEL process, Instrumenter, WS-BPEL instrumented process); Execution step (Test case suite specification, WS-BPEL engine, WS-BPEL Unit Test Library with Director and Mockup server, SOAP messages, Process instance execution logs); Analysis step (Preprocessor, Generator-specific input files, Invariant generator, Inferred invariants).]

The ability to model none, some or even all of the

external services as mockups will enable us to obtain

invariants reflecting different situations. On one hand,

if we do not use mockups at all, but only invoke ac-

tual services, we will be studying the complete WS-

BPEL composition in the real-world environment. On

the other, if every external service is replaced with a

mockup with predefined responses, we will be focus-

ing on the internal logic of the composition itself and

how it behaves in certain scenarios. We can also settle

for a middle point in a hybrid approach.

After this step is done, every test case will have

generated its own execution log, which we will pass

on to the next step. We will need roughly two compo-

nents to make this possible:

• A WS-BPEL engine for running the process itself,

which will invoke the external services as needed.

Making the engine use a mockup for an external

service could be achieved in basically two ways:

by modifying the service address included in the

WSDL source files, or by creating (or modifying)

the engine-specific files with the proper values.

• A WS-BPEL unit test library which will deploy

and act both as a client, invoking our composi-

tion with the desired parameters, and as the exter-

nal mockup services for the WS-BPEL process.

These services will behave according to the exter-

nal test case specification described above.

This unit test library has to be considerably more complex than similar libraries for other languages. It can be divided into the following subcomponents:

• A director which will prepare and monitor the ex-

ecution of the whole test suite according to each

test case specification. Ideally, it should also be

able to deploy and undeploy the WS-BPEL pro-

cess from our selected engine.

• A mockup server, properly set up by the director,

which will handle incoming requests and act as

the external mockup services required by the WS-

BPEL process that we choose to model.

Mockups have no internal logic of their own, be-

ing limited to either replying with a predefined

XML SOAP message or failing as indicated in the

test case specification.

There are several lightweight Java-based web

servers available for this role, but, if necessary,

we believe it would also be feasible to develop it

from scratch, being a simple URL → SOAP mes-

sage matching system.
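The URL → SOAP message matching idea can be sketched with very little code. The example below uses Python's standard `http.server` module instead of a Java-based server, purely for illustration; the helper names (`soap_envelope`, `soap_fault`, `resolve`, `make_handler`, `serve`), the `/approver` path and the payloads are assumptions of this sketch, and a real mockup server would take its table from the test case specification.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Tuple
from xml.sax.saxutils import escape

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope

def soap_envelope(body_xml: str) -> str:
    """Wrap an XML fragment in a SOAP 1.1 envelope."""
    return (f'<soapenv:Envelope xmlns:soapenv="{SOAP_NS}">'
            f"<soapenv:Body>{body_xml}</soapenv:Body></soapenv:Envelope>")

def soap_fault(reason: str) -> str:
    """Build a SOAP 1.1 fault message, used to make a mockup fail."""
    return soap_envelope(
        "<soapenv:Fault><faultcode>soapenv:Server</faultcode>"
        f"<faultstring>{escape(reason)}</faultstring></soapenv:Fault>")

# URL path -> (HTTP status, predefined SOAP message)
Mockups = Dict[str, Tuple[int, str]]

def resolve(mockups: Mockups, path: str) -> Tuple[int, str]:
    """Match the request path against the table set up by the director."""
    fallback = (500, soap_fault("no mockup configured for " + path))
    return mockups.get(path, fallback)

def make_handler(mockups: Mockups):
    class MockupHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            # The request body is read and ignored: mockups have no logic.
            self.rfile.read(int(self.headers.get("Content-Length", 0)))
            status, message = resolve(mockups, self.path)
            payload = message.encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "text/xml; charset=utf-8")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)
    return MockupHandler

def serve(mockups: Mockups, port: int = 8080) -> None:
    """Blocking entry point a director could run in a separate thread."""
    HTTPServer(("localhost", port), make_handler(mockups)).serve_forever()

mockups: Mockups = {
    "/approver": (200, soap_envelope("<accept>yes</accept>")),
    "/assessor": (500, soap_fault("simulated failure")),
}
```

Keeping `resolve` separate from the HTTP handler means that the matching rule can be checked without opening a socket, while `serve(mockups)` would expose the same table to the WS-BPEL engine.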

4.3 Analysis Step

After all the test cases have been executed and logs

have been collected, it would seem at first glance to

be a matter of just handing them to our invariant gen-

erator.

However, it will not be so simple in most cases,

because the invariant generator could require certain

additional information about the data to analyze to

work properly. All information would have to be re-

formatted according to the input format expected by

the invariant generator. This reformatting could range

from a simple translation to a thorough transforma-

tion of the XML data structures to those available to

the invariant generator.

The invariant generator may even accept not only

logs, but also a list of constraints already known, and

which thus do not need to be generated again as in-

variants. This would reduce its output size and make

it easier to understand for its users. To generate this

constraint list, we would have to analyze the XML

Schema files contained in the WS-BPEL process and

all of its dependencies.

All these obstacles can be overcome through a

preprocessor. It could even call the invariant gener-

ator with the generated files to finally obtain the in-

variants of the WS-BPEL composition.
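One possible form of this reformatting is to flatten each logged XML value into scalar records that a generator working on plain variables could consume. The Python sketch below is an assumption about what such a preprocessor might do; the function name `flatten` and the dotted-path naming scheme with positional indices are our own choices, not a format required by any particular invariant generator.

```python
import xml.etree.ElementTree as ET
from typing import Dict

def flatten(variable_name: str, xml_value: str) -> Dict[str, str]:
    """Turn one logged XML variable value into flat 'path -> text' records."""
    records: Dict[str, str] = {}

    def visit(element: ET.Element, path: str) -> None:
        children = list(element)
        if not children:
            # Leaf element: its text becomes the value of the record.
            records[path] = (element.text or "").strip()
            return
        seen: Dict[str, int] = {}
        for child in children:
            tag = child.tag.split("}")[-1]      # drop any namespace URI
            index = seen.get(tag, 0)            # position among same-named siblings
            seen[tag] = index + 1
            visit(child, f"{path}.{tag}[{index}]")

    visit(ET.fromstring(xml_value), variable_name)
    return records

logged = "<request><amount>9999</amount><name><first>Ann</first></name></request>"
print(flatten("request", logged))
```

The positional indices keep repeated elements apart, at the price of longer names; a preprocessor aware of the XML Schema could drop them for elements that occur at most once.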

Depending on the number and complexity of the

invariants produced by the generator, we could even

need to pass them later to a simplifier. It is a program

based on formal methods that receives a set of invari-

ants and removes those logically inferred by others.
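To make the idea of a simplifier concrete, the following Python sketch handles only one very restricted family of invariants, lower bounds of the form `variable > c`, where a larger bound implies every smaller one. This restriction, the `LowerBound` representation and the function name `simplify` are assumptions of the example; an actual simplifier would rely on a general-purpose prover.

```python
from typing import Dict, List, Tuple

# (variable, c) stands for the invariant "variable > c".
LowerBound = Tuple[str, int]

def simplify(invariants: List[LowerBound]) -> List[LowerBound]:
    """Keep, for each variable, only the bound that implies all the others."""
    strongest: Dict[str, int] = {}
    for variable, bound in invariants:
        if variable not in strongest or bound > strongest[variable]:
            strongest[variable] = bound
    return sorted(strongest.items())

print(simplify([("x", 0), ("x", 5), ("y", -1)]))
```

Here `x > 0` is dropped because it follows from `x > 5`, while the single bound on `y` is kept.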

4.4 Example

We briefly discuss an example of the invariants we could infer for the classical WS-BPEL example of the Loan Approval Service included in the WS-BPEL 2.0 specification (OASIS, 2007).

This WS-BPEL composition receives loan requests from customers. Each request includes the amount and certain personal information. The WS-BPEL composition simply notifies the customer whether the loan request has been approved or rejected. The approval of the loan is based on the amount requested and the risk that a risk assessment WS determines for the customer according to this personal information. If the amount is below $10,000 and the risk assessment WS considers the applicant a low-risk customer, the loan is automatically approved. In case the amount is below the threshold but the risk is considered medium or high, the composition invokes an external loan approval WS, and its answer is passed to the customer as the response of the composition. Finally, in case the requested amount is at or over the threshold, no risk checking is done, and the answer of the composition is also that of the external loan approval WS.

Our architecture could infer the following invariants for this example, if it were backed by an exhaustive and high-quality test suite:

$request.amount < 10000 ∧ $risk.level = 'low' ⟹ $approval.accept = 'yes'

$request.amount < 10000 ∧ $risk.level ≠ 'low' ⟹ $approval.accept = response(approver)

$request.amount ≥ 10000 ⟹ $approval.accept = response(approver)

invoke(customer) = $approval

We can clearly see that the system could infer that approval depends on the amount requested, the assessed risk level and the response provided by the approver when invoked. The system could also detect that the response we provide to the customer is always the value of the variable approval.

Of course, to get results this fine-grained we will

need a good test suite. For our example, the test suite

would have to contain test cases with amounts over

and under the threshold (including the limit values

$9,999, $10,000 and $10,001). Personal information causing the risk assessment WS to report both low and non-low risk levels must also be provided, especially for amounts under the threshold. At any rate, as

discussed before, in case we do not obtain the de-

sired invariants in the first run, we could extend the

test suite with more test cases refining the invariants

obtained. This way, in the next run, we would obtain

more accurate invariants.
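The interplay between test suite and inferred invariants in this example can be reproduced with a small simulation. The Python sketch below is not the WS-BPEL process from the specification: `loan_process` is a simplified stand-in written for this illustration, the risk assessment and approval services are replaced by mocked answers passed as parameters, and the candidate list in `CANDIDATES` is an assumption (it includes one deliberately over-general property).

```python
from itertools import product
from typing import Any, Callable, Dict, List

THRESHOLD = 10000
Log = Dict[str, Any]

def loan_process(amount: int, risk_level: str, approver_answer: str) -> Log:
    """Simplified stand-in for the composition; returns the values it logs."""
    log: Log = {"amount": amount, "risk": None, "approver": None}
    if amount < THRESHOLD:
        log["risk"] = risk_level              # mocked risk assessment WS
        if risk_level == "low":
            log["accept"] = "yes"
    if "accept" not in log:
        log["approver"] = approver_answer     # mocked loan approval WS
        log["accept"] = approver_answer
    log["reply"] = log["accept"]              # message sent to the customer
    return log

CANDIDATES: Dict[str, Callable[[Log], bool]] = {
    "amount < 10000 and risk = 'low' => accept = 'yes'":
        lambda e: not (e["amount"] < THRESHOLD and e["risk"] == "low")
        or e["accept"] == "yes",
    "amount < 10000 and risk != 'low' => accept = response(approver)":
        lambda e: not (e["amount"] < THRESHOLD and e["risk"] != "low")
        or e["accept"] == e["approver"],
    "amount >= 10000 => accept = response(approver)":
        lambda e: not (e["amount"] >= THRESHOLD) or e["accept"] == e["approver"],
    "reply = accept":
        lambda e: e["reply"] == e["accept"],
    "accept = 'yes'":                         # over-general on purpose
        lambda e: e["accept"] == "yes",
}

def run_suite(amounts, risks, answers) -> List[Log]:
    """Run every combination of inputs and mocked answers as one test case."""
    return [loan_process(a, r, ans) for a, r, ans in product(amounts, risks, answers)]

def surviving(logs: List[Log]) -> List[str]:
    """Candidates that hold in every logged execution."""
    return [name for name, check in CANDIDATES.items()
            if all(check(e) for e in logs)]

poor = run_suite([5000], ["low"], ["yes"])
full = run_suite([9999, 10000, 10001], ["low", "medium", "high"], ["yes", "no"])

print(surviving(poor))
print(surviving(full))
```

With the single-case suite, the wrong likely invariant `accept = 'yes'` survives along with the other four; with the suite covering the limit values, every risk level and both approver answers, only the four expected invariants remain.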

5 RELATED WORK

In this section we present some related works. There

is a wide variety of topics related to our architecture,

mainly dynamic invariant generation, WS composi-

tion testing and test case generation:

An interesting proposal to use dynamically generated invariants for WS quality testing is (SeCSE, 2007). It

collects several invocations and replies from a WS-

BPEL composition to an external WS and dynami-

cally generates likely invariants to check its Service

Level Agreement. Therefore, it constitutes a black-

box testing technique. In contrast, our architecture

follows a white-box testing approach, oriented to the

generation of the invariants from the internal logic of

a WS-BPEL composition.

The relation between the test cases used for dy-

namically generating invariants and the quality of the

invariants derived is studied in (Gupta, 2003). Aug-

menting a test suite with suitable test cases can be an

interesting way to increase the accuracy of the invari-

ants inferred by our architecture.

Dynamo (Baresi and Guinea, 2005) is a proxy-

based system to monitor if a WS-BPEL composition

holds several restrictions during its execution. We

think it might be useful as a way to check if the in-

variants obtained from our architecture hold while it

is running in a real-world environment.

Test cases for a WS-BPEL composition are auto-

matically generated in (Zheng et al., 2007) according

to state and transition coverage criteria. We could as-

sure the quality of the invariants generated by using

them as inputs for our architecture.

6 CONCLUSIONS AND FUTURE

WORK

The fact that WS are the future of e-Business is al-

ready a given, thanks to their platform independence

and the abstractions that they provide. The need

to orchestrate them to provide more advanced services suiting customers' requirements has been satisfied through the WS-BPEL 2.0 standard.

However, WS-BPEL compositions are difficult to

test, since traditional white-box testing techniques are

difficult to apply to them. This is because of the un-

usual mix of features present in WS-BPEL, such as

concurrency support, event handling or fault compensation. We have shown how dynamically generated

likely invariants backed by a good test suite can be-

come a suitable and successful help to solve these dif-

ficulties, thanks to their being based on actual execu-

tion logs.

In this work, we have proposed a pipelined archi-

tecture for dynamically generating invariants from a

WS-BPEL composition. Requirements for each of the components have been identified, and our immediate future work is to find suitable systems for each one.

Once the architecture is completely implemented we

will perform an experimental evaluation of the frame-

work under several compositions. This way we will

test its reliability and evaluate its results through met-

rics such as quality of the invariants generated or time


taken to infer them.

Looking further ahead, we will later study the re-

lation between the quality of the invariants generated

and the test case suite used to infer them. For this

we can use different WS-BPEL compositions with

their specifications and different test suites providing

certain coverage criteria (branch coverage, statement

coverage, ...) of them.

Finally, we could use the invariants generated by

our proposal to support WS-BPEL white-box testing

and check if it improves its results.

ACKNOWLEDGEMENTS

This work has been financed by the Programa Nacional de I+D+I of the Spanish Ministerio de Educación y Ciencia and FEDER funds through the SOAQSim project (TIN2007-67843-C06-04).

REFERENCES

Baresi, L. and Guinea, S. (2005). Dynamo: Dynamic mon-

itoring of WS-BPEL processes. In Benatallah, B.,

Casati, F., and Traverso, P., editors, ICSOC, volume

3826, pages 478–483. Lecture Notes in Computer Sci-

ence, Springer.

Bertolino, A. and Marchetti, E. (2005). A brief essay on

software testing. In Thayer, R. H. and Christensen,

M., editors, Software Engineering, The Development

Process. Wiley-IEEE Computer Society Pr, 3 edition.

Bjørner, N., Browne, A., and Manna, Z. (1997). Automatic

generation of invariants and intermediate assertions.

Theoretical Computer Science, 173(1):49–87.

Bucchiarone, A., Melgratti, H., and Severoni, F. (2007).

Testing service composition. In ASSE: Proceedings of

the 8th Argentine Symposium on Software Engineer-

ing.

Curbera, F., Khalaf, R., Mukhi, N., Tai, S., and Weer-

awarana, S. (2003). The next step in Web Services.

Communications of the ACM, 46(10):29–34.

Domínguez Jiménez, J. J., Estero Botaro, A., Medina Bulo, I., Palomo Duarte, M., and Palomo Lozano, F. (2007). El reto de los servicios web para el software libre. In Proceedings of the FLOSS International Conference 2007, pages 117–132, Jerez de la Frontera. Servicio de Publicaciones de la Universidad de Cádiz.

Ernst, M. D., Cockrell, J., Griswold, W. G., and Notkin,

D. (2001). Dynamically discovering likely program

invariants to support program evolution. IEEE Trans-

actions on Software Engineering, 27(2):99–123.

Gupta, N. (2003). Generating test data for dynamically dis-

covering likely program invariants. In ICSE, Work-

shop on Dynamic Analysis.

Heffner, R. and Fulton, L. (2007). Topic overview: Service-

oriented architecture. Forrester Research, Inc.

OASIS (2003). OASIS members form web services business process execution language (WS-BPEL) technical committee. http://www.oasis-open.org/news/oasis_news_04_29_03.php.

OASIS (2007). WS-BPEL 2.0 standard. http://docs.oasis-

open.org/wsbpel/2.0/OS/wsbpel-v2.0-OS.html.

OASIS (2008). OASIS standards. http://www.oasis-

open.org/specs/.

Papazoglou, M. (2007). Web services technologies and standards. Computing Surveys (submitted for review).

SeCSE (2007). A1.D3.3: Testing method

definition V3. http://secse.eng.it/wp-

content/uploads/2007/08/a1d33-testing-method-

definition-v3.pdf.

W3C (2008). W3C technical reports and publications.

http://www.w3.org/TR/.

Zheng, Y., Zhou, J., and Krause, P. (2007). An automatic

test case generation framework for web services. Jour-

nal of Software, 2(3):64–77.
