MODIFICATIONS AND IMPROVEMENTS ON IRIS RECOGNITION

Artur Ferreira¹,², André Lourenço¹,², Bárbara Pinto¹ and Jorge Tendeiro¹

¹ Instituto Superior de Engenharia de Lisboa, Lisboa, Portugal

² Instituto de Telecomunicações, Lisboa, Portugal

Keywords: Iris Recognition, Biometrics, Image Processing, Image Segmentation.

Abstract:

Iris recognition is a well-known biometric technique. John Daugman has proposed a method for iris recognition, which is divided into four steps: segmentation, normalization, feature extraction and matching. In this paper, we evaluate, modify and extend John Daugman's method. We study the images of the CASIA and UBIRIS databases to establish some modifications and extensions of Daugman's algorithm. The major modification is on the computationally demanding segmentation stage, for which we propose a template matching approach. The extensions to the algorithm address the important issue of pre-processing, which depends on the image database and is especially important when a non-infrared camera (e.g. a webcam) is used. For this typical scenario, we propose several methods for reflection removal and for pupil enhancement and isolation. The tests, carried out by our C# application on grayscale CASIA and UBIRIS images, show that our template-matching-based segmentation method is accurate and faster than the one proposed by Daugman. Our fast pre-processing algorithms efficiently remove reflections from images taken by non-infrared cameras.

1 INTRODUCTION

Human authentication is of central importance nowadays (Maltoni et al., 2005)(Jain et al., 2004). Instead of passwords or magnetic cards, biometric authentication is based on physical or behavioral characteristics of humans. Among biometric characteristics such as face, fingerprint, iris, hand geometry, ear, signature and voice, iris recognition is considered extremely accurate and fast. Several properties make the iris very attractive as a biometric for authentication and identification: it is considered unique to each individual, its epigenetic pattern remains stable throughout life, and the variability of the pattern among different persons is enormous.

The problem of iris recognition has attracted a lot of attention in the literature: John Daugman (Daugman, 1993), Boles (Boles, 1997) and Wildes (Wildes, 1997) were the precursors of the area. Several modifications of Daugman's work have been proposed in the last decade: (Yao et al., 2006) uses different filters; (Greco et al., 2004) applies Hidden Markov Models to choose a set of local frequencies; (Joung et al., 2005) modifies the normalization stage; (Arvacheh, 2006) changes the segmentation and normalization stages; and (J.Huang et al., 2004) modifies the segmentation stage.

In this paper we follow John Daugman's approach (Daugman, 1993), introducing several template-based variations on the segmentation step and focusing the tuning of the algorithm on the UBIRIS database (Proença and Alexandre, 2005)(Proença, 2007)¹. We also propose pre-processing techniques for reflection removal, and for pupil enhancement and isolation. The proposed algorithms are also evaluated on the CASIA (Chinese Academy of Sciences Institute of Automation) database².

The paper is organized as follows. Section 2 presents the steps of an iris recognition algorithm and details Daugman's approach. Section 3 describes the standard test images from the CASIA and UBIRIS databases. Modifications to Daugman's method and new approaches for pre-processing are proposed in Section 4, along with a study of the CASIA and UBIRIS images. Experimental results are discussed in Section 5, and conclusions are drawn in Section 6.

2 IRIS RECOGNITION

¹ iris.di.ubi.pt/index.html

² www.sinobiometrics.com

Ferreira A., Lourenço A., Pinto B. and Tendeiro J. (2009). MODIFICATIONS AND IMPROVEMENTS ON IRIS RECOGNITION. In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing, pages 72-79. DOI: 10.5220/0001536100720079. Copyright © SciTePress

The process of iris recognition is usually divided into four steps (Vatsa et al., 2004):

• segmentation - localization of the iris region in an eye image, that is, of the inner and outer boundaries of the iris (see Figure 1); it can be preceded by a pre-processing stage to enhance image quality;

• normalization - creates a dimensionally consistent representation of the iris region (see Figure 2);

• feature extraction - extracts information that can be used to distinguish different subjects, creating a template that represents the most discriminant features of the iris; it typically uses texture information;

• matching - the feature vectors are compared using a similarity measure.

2.1 Daugman’s Approach

Consider an intensity image $I(x,y)$, where $x$ and $y$ denote, respectively, the rows and columns of the image. The problem is to automatically find the iris and extract its characteristics.

The segmentation step of (Daugman, 1993) localizes the inner and outer boundaries of the iris with the integro-differential operator

$$\max_{(r,\,x_0,\,y_0)} \left| G_\sigma(r) * \frac{\partial}{\partial r} \oint_{r,\,x_0,\,y_0} \frac{I(x,y)}{2\pi r}\, ds \right| \qquad (1)$$

in which $r$ represents the radius, $(x_0, y_0)$ the center pixel, and $G_\sigma(r)$ a Gaussian smoothing function with standard deviation $\sigma$, applied along the radius. The operator formulates the problem as the search for the circle (center $(x_0, y_0)$ and radius $r$) along which the change in pixel values between adjacent circles is maximal. For each candidate center, the first derivative of the contour integral is computed while varying the radius; its maximum corresponds to a boundary.
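To make the search in (1) concrete, the following is a minimal Python sketch of a discrete integro-differential operator; it is not the authors' C# implementation. It assumes a grayscale image stored as a list of rows (with $x$ indexing rows, as above), nearest-neighbour sampling of each circle, a forward finite difference for $\partial/\partial r$, and a normalized 1-D Gaussian kernel convolved along the radius. The function names and default parameters (`n_angles=64`, `sigma=1.0`) are illustrative choices.

```python
import math


def circle_mean(img, x0, y0, r, n_angles=64):
    """Mean gray level sampled on the circle of radius r centred at (x0, y0).

    x indexes rows and y indexes columns. Nearest-neighbour sampling;
    points falling outside the image are skipped.
    """
    rows, cols = len(img), len(img[0])
    total, count = 0.0, 0
    for k in range(n_angles):
        theta = 2 * math.pi * k / n_angles
        x = int(round(x0 + r * math.cos(theta)))
        y = int(round(y0 + r * math.sin(theta)))
        if 0 <= x < rows and 0 <= y < cols:
            total += img[x][y]
            count += 1
    return total / count if count else 0.0


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian G_sigma, truncated at about 3 sigma."""
    half = max(1, int(3 * sigma))
    w = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-half, half + 1)]
    s = sum(w)
    return [v / s for v in w]


def integro_differential(img, centers, radii, sigma=1.0):
    """Return (score, x0, y0, r) maximizing |G_sigma(r) * d/dr contour mean|.

    radii must be consecutive integers; d/dr is a forward difference and the
    Gaussian is convolved along r, clamping indices at both ends.
    """
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    best = (-1.0, None, None, None)
    for x0, y0 in centers:
        means = [circle_mean(img, x0, y0, r) for r in radii]
        deriv = [means[i + 1] - means[i] for i in range(len(means) - 1)]
        for i in range(len(deriv)):
            smoothed = sum(g * deriv[min(max(i + j - half, 0), len(deriv) - 1)]
                           for j, g in enumerate(kernel))
            if abs(smoothed) > best[0]:
                # the jump between radii[i] and radii[i + 1] is reported at radii[i + 1]
                best = (abs(smoothed), x0, y0, radii[i + 1])
    return best
```

The cost of this exhaustive search grows with the number of candidate centers times the number of radii times the samples per circle, which illustrates why this stage is computationally demanding.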

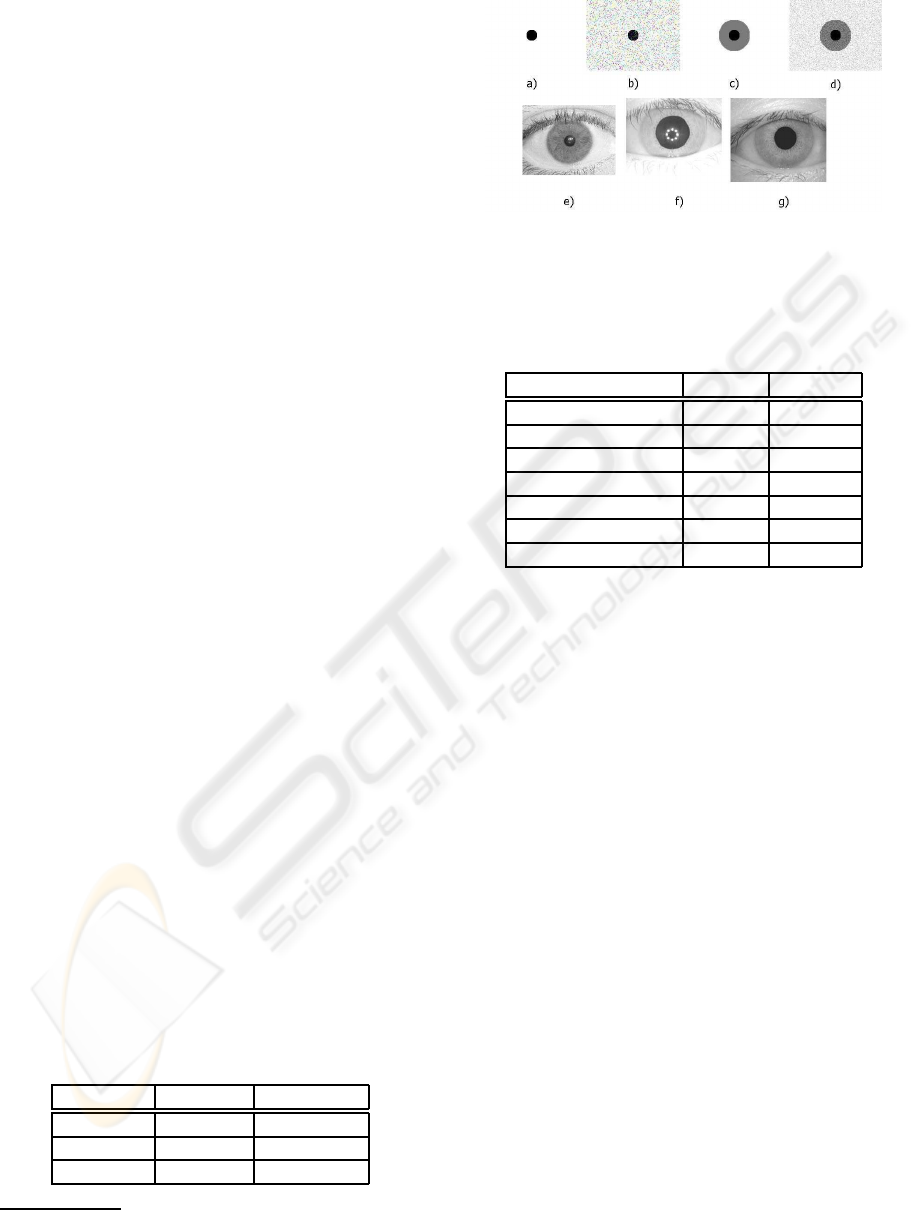

Figure 1 shows an eye image with red and yellow circles representing the iris and pupil boundaries, respectively. The segmented iris is illustrated on the right; note the presence of the upper eyelid.

Figure 1: Segmentation step: a) radius of the iris; b) radius of the pupil; c) center; d) segmented iris.

The normalization step transforms the iris region into a normalized image of fixed size, allowing the comparison of irises of different sizes. Irises may have different sizes due to pupil dilation caused by varying levels of illumination. The rubber sheet model (Daugman, 1993) remaps each point $(x,y)$ of the iris image into an image $I(r,\theta)$, where $r \in [0, 1]$ and $\theta \in \,]-\pi, \pi]$, according to

$$I\big(x(r,\theta),\, y(r,\theta)\big) \rightarrow I(r,\theta). \qquad (2)$$

The transformation from Cartesian to normalized polar coordinates uses the mapping

$$\begin{aligned} x(r,\theta) &= (1-r)\,x_{pupil}(\theta) + r\,x_{iris}(\theta)\\ y(r,\theta) &= (1-r)\,y_{pupil}(\theta) + r\,y_{iris}(\theta), \end{aligned} \qquad (3)$$

where $(r,\theta)$ are the corresponding normalized coordinates, and $(x_{pupil}, y_{pupil})$ and $(x_{iris}, y_{iris})$ are the coordinates of the pupil and iris boundaries along the direction $\theta$.

Figure 2 presents an example of the normalized representation; the left image shows that the center of the pupil can be displaced with respect to the center of the iris. The right image is the normalized image: the x-axis represents the angle ($\theta$) and the y-axis the radius ($r$). Observe that the upper eyelid appears in the lower right corner.
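A minimal Python sketch of the rubber sheet mapping in (3), under stated assumptions: the pupil and iris boundaries are circles given as (center row, center column, radius), each boundary point is taken along direction $\theta$ from its own circle's center, and pixels are read with nearest-neighbour sampling. The grid sizes `n_r` and `n_theta` are illustrative, and the row/column convention of Section 2.1 is kept.

```python
import math


def rubber_sheet(img, pupil, iris, n_r=8, n_theta=32):
    """Rubber sheet normalization following (3), with nearest-neighbour sampling.

    pupil and iris are circles (center_row, center_col, radius); x indexes rows
    and y columns. Returns an n_r x n_theta grid: row a holds r = a / (n_r - 1)
    in [0, 1], column b holds theta in ]-pi, pi].
    """
    px, py, pr = pupil
    ix, iy, ir = iris
    rows, cols = len(img), len(img[0])
    out = []
    for a in range(n_r):
        r = a / (n_r - 1)
        row = []
        for b in range(n_theta):
            theta = -math.pi + 2 * math.pi * (b + 1) / n_theta
            # boundary points along direction theta (each from its own circle's center)
            xp, yp = px + pr * math.cos(theta), py + pr * math.sin(theta)
            xi, yi = ix + ir * math.cos(theta), iy + ir * math.sin(theta)
            # linear interpolation between the two boundaries, as in (3)
            x = (1 - r) * xp + r * xi
            y = (1 - r) * yp + r * yi
            xs = min(max(int(round(x)), 0), rows - 1)
            ys = min(max(int(round(y)), 0), cols - 1)
            row.append(img[xs][ys])
        out.append(row)
    return out
```

Because every row of the output has the same number of angular samples, irises of different sizes (or with displaced pupil centers) map onto grids of identical shape, which is what makes the later bitwise comparison possible.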

Figure 3 shows the segmentation and normalization steps for the three considered databases.

To encode the iris pattern, 2D Gabor filters are employed:

$$G(r,\theta) = e^{-i\omega_0(\theta_0-\theta)}\; e^{-(r_0-r)^2/\alpha^2} \cdot e^{-(\theta_0-\theta)^2/\beta^2}. \qquad (4)$$

These filters are considered very suitable for encoding texture information and are characterized by their spatial localization $(x_0, y_0)$, spatial frequency $\omega_0$ and orientation $\theta_0$, and by the Gaussian parameters $(\alpha, \beta)$. Figure 4 presents the individual components of the filter.

Figure 2: Normalization step: Daugman's rubber sheet model.

The application of this filter to the image generates a complex image representing the relevance of the texture for a given frequency and orientation. Several filters are applied in order to analyze different texture information. This set of filters is defined by

$$GI = \int_\rho \int_\phi e^{-i\omega_0(\theta_0-\phi)}\, e^{-(r_0-\rho)^2/\alpha^2}\, e^{-(\theta_0-\phi)^2/\beta^2}\, I(\rho,\phi)\, \rho\, d\rho\, d\phi \qquad (5)$$

Figure 5 shows the Gabor filters considered for this purpose.
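The following Python sketch evaluates the polar Gabor wavelet of (4) and approximates the projection (5) with a Riemann sum over a sampled normalized image. It is illustrative only: the sampling grid ($\rho$ uniform in $[0, 1]$, $\phi$ uniform in $]-\pi, \pi]$), the function names and every parameter value are assumptions, and no angular wrap-around of $\theta_0 - \phi$ is applied.

```python
import cmath
import math


def gabor(r, theta, r0, theta0, omega0, alpha, beta):
    """Polar 2-D Gabor wavelet of eq. (4)."""
    return (cmath.exp(-1j * omega0 * (theta0 - theta))
            * math.exp(-((r0 - r) ** 2) / alpha ** 2)
            * math.exp(-((theta0 - theta) ** 2) / beta ** 2))


def gabor_projection(norm_img, r0, theta0, omega0, alpha, beta):
    """Riemann-sum approximation of eq. (5) over a normalized image.

    norm_img[a][b] holds I(rho, phi) with rho = a / (n_r - 1) in [0, 1] and
    phi = -pi + 2*pi*(b + 1) / n_t in ]-pi, pi].
    """
    n_r, n_t = len(norm_img), len(norm_img[0])
    d_rho, d_phi = 1.0 / (n_r - 1), 2 * math.pi / n_t
    total = 0j
    for a in range(n_r):
        rho = a * d_rho
        for b in range(n_t):
            phi = -math.pi + (b + 1) * d_phi
            weight = rho * d_rho * d_phi  # polar area element rho d(rho) d(phi)
            total += gabor(rho, phi, r0, theta0, omega0, alpha, beta) * norm_img[a][b] * weight
    return total
```

Each call yields one complex number for one choice of $(r_0, \theta_0, \omega_0)$; repeating it over positions, orientations and frequencies produces the set of outputs that is quantized next.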

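The output of the filters is quantized using the real and imaginary parts, $\mathrm{Re}(GI)$ and $\mathrm{Im}(GI)$, respectively, by

$$h_{Re} = \begin{cases} 1, & \text{if } \mathrm{Re}(GI) \ge 0\\ 0, & \text{if } \mathrm{Re}(GI) < 0, \end{cases} \qquad h_{Im} = \begin{cases} 1, & \text{if } \mathrm{Im}(GI) \ge 0\\ 0, & \text{if } \mathrm{Im}(GI) < 0. \end{cases} \qquad (6)$$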

Each complex filter output is thus mapped to one of four levels (phase quadrants), coded with two bits. The so-called "IrisCode" has 2048 bits (256 bytes), computed for each template, corresponding to the quantization of the outputs of the filters for different orientations and frequencies (1024 combinations, each contributing two bits).
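Equation (6) and the bit count above can be checked with a short Python sketch (illustrative, not the authors' code): each complex filter output contributes the sign bits of its real and imaginary parts, so 1024 outputs give 2048 bits, packed here into 256 bytes. The helper names and the most-significant-bit-first packing order are assumptions.

```python
def quantize_phase(responses):
    """Eq. (6): two bits (sign of real part, sign of imaginary part) per output."""
    bits = []
    for z in responses:
        bits.append(1 if z.real >= 0 else 0)  # h_Re
        bits.append(1 if z.imag >= 0 else 0)  # h_Im
    return bits


def pack_bits(bits):
    """Pack a bit list (length a multiple of 8) into bytes, most significant bit first."""
    out = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for b in bits[i:i + 8]:
            byte = (byte << 1) | b
        out.append(byte)
    return bytes(out)


# 1024 filter outputs -> 2048 bits -> 256 bytes
code = pack_bits(quantize_phase([complex(1, -1)] * 1024))
```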

The matching step (Daugman, 2004) computes the difference between two IrisCodes (codeA and codeB) using the Hamming Distance (HD)

$$HD = \frac{\left\| (codeA \otimes codeB) \cap maskA \cap maskB \right\|}{\left\| maskA \cap maskB \right\|} \qquad (7)$$

where $\otimes$ denotes the XOR operator, the AND operator $\cap$ selects only the bits that have not been corrupted by eyelashes, eyelids, specular reflections, etc., and $\|\cdot\|$ denotes the norm of the bit vectors (the number of bits set to one).

To account for possible rotations between two iris images, Daugman compares the obtained HD with those obtained using cyclically scrolled versions of one of the images. The minimum HD gives the final matching result.

Figure 3: Segmentation and normalization steps: a) CASIAv1; b) CASIAv3; c) UBIRISv1.

Figure 4: Feature extraction with 2D Gabor filters: a) Gaussian; b) 2D sinusoid; c) the resulting filter.

Figure 5: Gabor filters with eight orientations for texture extraction.
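A minimal Python sketch of (7) together with the rotation search just described. It assumes codes and masks stored as flat lists of 0/1 and that one cyclic shift of the list corresponds to one angular step; in a real IrisCode a rotation would move whole angular columns (several bits at once). The `max_shift` default and the convention of returning 1.0 when no bits are comparable are hypothetical choices.

```python
def masked_hd(code_a, code_b, mask_a, mask_b):
    """Fractional Hamming distance of eq. (7) on 0/1 lists."""
    valid = [ma & mb for ma, mb in zip(mask_a, mask_b)]      # maskA AND maskB
    n_valid = sum(valid)
    if n_valid == 0:
        return 1.0  # hypothetical convention: nothing comparable -> worst score
    disagree = sum((a ^ b) & v for a, b, v in zip(code_a, code_b, valid))
    return disagree / n_valid


def shift(bits, k):
    """Cyclic shift by k positions (a rotation along the angular axis)."""
    k %= len(bits)
    return bits[k:] + bits[:k]


def match(code_a, mask_a, code_b, mask_b, max_shift=8):
    """Minimum HD over cyclic shifts of code B (and its mask) in [-max_shift, max_shift]."""
    return min(masked_hd(code_a, shift(code_b, k), mask_a, shift(mask_b, k))
               for k in range(-max_shift, max_shift + 1))
```

Shifting the mask together with the code keeps each validity bit attached to the bit it describes.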

3 IMAGE DATABASES

This section describes the main features of the test images of the CASIA and UBIRIS databases.

3.1 CASIA

The well-known CASIA (Chinese Academy of Sciences Institute of Automation) database from Beijing, China³, has two versions:

• CASIAv1 - 756 images of 108 individuals;

• CASIAv3 - 22051 images of over 700 individuals.

In CASIAv3, the images are divided into the following categories:

• interval - digitally manipulated such that the pupil was replaced by a circular region of uniform intensity, eliminating undesired illumination effects and artifacts such as reflections; CASIAv1 is a subset of this category;

• lamp - images acquired under different illumination conditions, in order to produce intra-class variations (images of a given eye taken in different sessions);

• twins - images of 100 pairs of twins.

These 320 × 280, 8 bit/pixel images were acquired with an IR camera; we have used their grayscale versions.

³ http://www.sinobiometrics.com

BIOSIGNALS 2009 - International Conference on Bio-inspired Systems and Signal Processing

3.2 UBIRIS

The UBIRIS database (Proença and Alexandre, 2005)⁴ was developed by the Soft Computing and Image Analysis Group of Universidade da Beira Interior, Covilhã, Portugal. It was created to provide a set of test images with typical perturbations, such as blur, reflections and almost-closed eyes, which makes it a good benchmark for systems that minimize the requirement of user cooperation. The images are captured at a distance, minimizing the required degree of cooperation from the users, possibly even in covert mode. Version 1 of the database (UBIRIS.v1) has 1877 images of 241 individuals, acquired in two distinct sessions:

• session 1 - acquisition in a controlled environment, with a minimum of perturbation, noise, reflections and non-uniform illumination;

• session 2 - acquisition under natural light conditions.

The images, taken with a NIKON E5700 digital RGB camera⁵, have a resolution of 200 × 150 pixels and a pixel depth of 8 bit/pixel, and were converted to 256-level grayscale images. Recently, a second version of the database (UBIRIS.v2) was released for the Noisy Iris Challenge Evaluation - Part I (NICE.I). Figure 6 shows some test images: synthetic images (with and without noise) and real images from the CASIA and UBIRIS databases.

The main features of these databases are presented in Table 1.

Table 1: Comparison of the CASIA and UBIRIS databases.

| Database | # Images | Resolution (pixels) |
|---|---|---|
| CASIAv1 | 756 | 320 × 280 |
| CASIAv3 | 22051 | 320 × 280 |
| UBIRIS | 1877 | 200 × 150 |

⁴ http://iris.di.ubi.pt/index.html

⁵ www.nikon.com/about/news/2002/e5700.htm

Figure 6: Test images: a) simple; b) simple with noise; c) pupil and iris; d) pupil and iris with noise; e) UBIRISv1; f) CASIAv3; g) CASIAv1.

Table 2: Study of the CASIA and UBIRIS databases: statistical description of the pupil diameter (in pixels).

| Measure | CASIA | UBIRIS |
|---|---|---|
| Mean | 86.2 | 23.7 |
| Median | 87 | 24 |
| Mode | 77 | 25 |
| Minimum | 65 | 17 |
| Maximum | 119 | 31 |
| Standard deviation | 11.5 | 2.9 |
| Sample variance | 132.7 | 8.3 |

4 MODIFICATIONS AND EXTENSIONS

4.1 Study of UBIRIS and CASIA

The accuracy of pupil and iris detection is a crucial issue in an iris recognition system. Our proposed template-matching-based approach estimates the pupil and the iris. In order to set up this new approach, we carried out a statistical study over the UBIRIS and CASIA databases to estimate the range of pupil diameters. For each database, we randomly selected N = 90 images, and for each image we computed the center and the radius of the pupil. Table 2 shows a statistical analysis of the pupil diameter.

For both databases, the increase of N over 90 (pix-

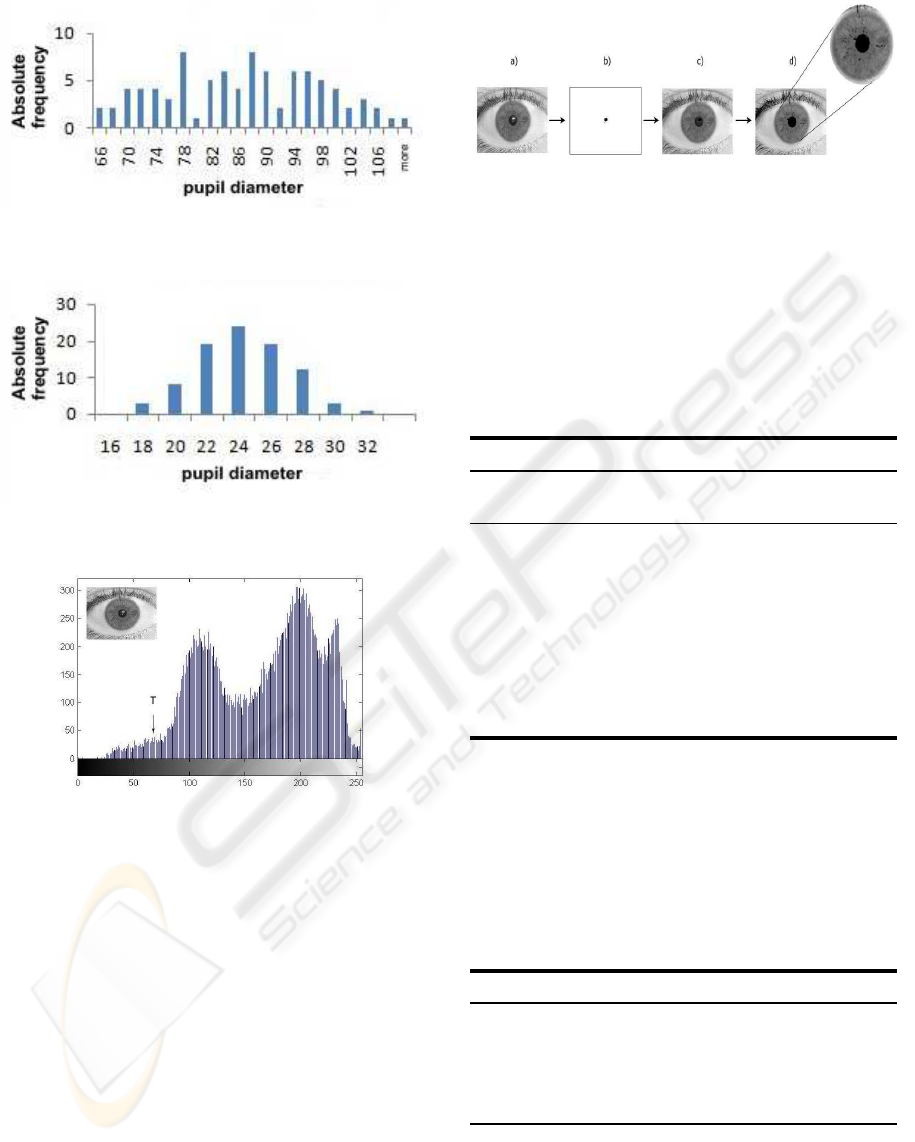

els) does not change these statistical results. Figure 7

shows the histogram of the diameters for the CASIA

database, while figure 8 does the same for UBIRIS;

in this case, we see that the histogram is well approx-

imated by a normal distribution.

4.2 Pre-processing

This section describes the pre-processing techniques that we propose for UBIRIS images. The pre-processing stage removes (or minimizes) image impairments such as noise and light reflections. Studying the images of both databases, we concluded that a different pre-processing strategy is necessary for each database. The pre-processing algorithms that we have devised are intended to eliminate reflections and to isolate the pupil. For reflection removal, we propose three methods, named A, B and C; for pupil enhancement, we propose an additional method. These methods are necessary for the UBIRIS database, whereas the CASIAv1 images are already pre-processed.

Figure 7: Histogram of pupil diameter for CASIA.

Figure 8: Histogram of pupil diameter for UBIRIS.

Figure 9: Typical histogram of an iris image.

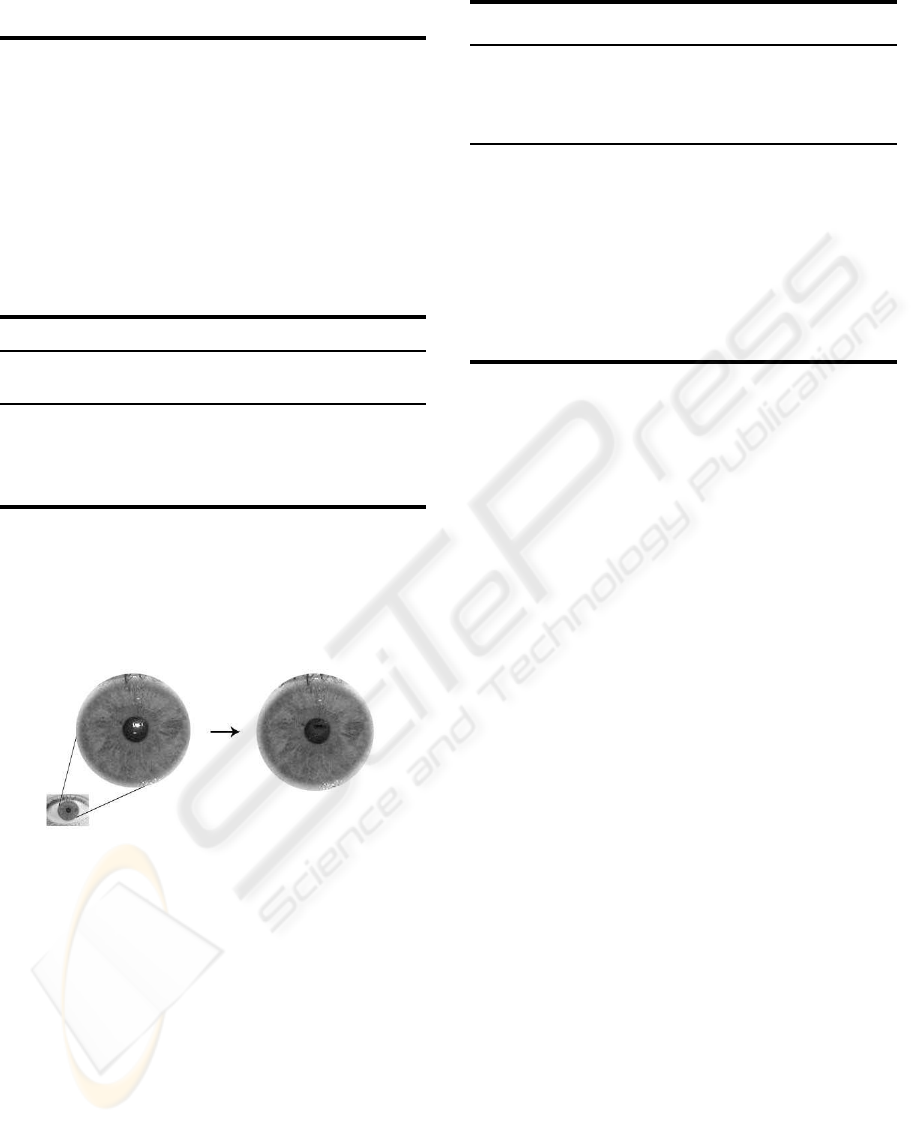

4.2.1 Method A

From a histogram analysis of a set of 21 images, we concluded that the pupil has low intensity values, corresponding to 7% to 10% of the image pixels. In this way, we found the range of intensities between the pupil and the iris. From this range of intensities, we compute a threshold between the pupil and the iris, as depicted by T in Figure 9.

Figure 10: Reflection removal by method A: a) original image; b) isolated reflection; c) image without reflection; d) image with a uniform pupil.

The steps taken by method A for reflection removal are as follows.

Method A for reflection removal

Input: $I_{in}$ - input image, with 256 gray levels

Output: $I_{out}$ - image without reflections on the pupil

1. From the histogram of $I_{in}$, compute a threshold T (as in Figure 9) to locate the pupil pixels.

2. Set the pupil pixels (gray level below T) to zero.

3. Locate and isolate the reflection area with an edge detector and a morphological filling filter (Lim, 1990).

4. $I_{out} \leftarrow$ image with the reflection area pixels set to zero.

Figure 10 illustrates the application of method A: in stage a) we see a white reflection on the pupil; this reflection is removed in stage d).
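The following Python sketch of method A is a simplified stand-in, not the authors' implementation. T is taken as the gray level below which a fixed fraction of the pixels lie (`fraction=0.085` is a hypothetical value inside the 7% to 10% range reported above), and the edge detector of step 3 is replaced by binary hole filling of the pupil mask, which marks bright reflections fully enclosed by the pupil.

```python
from collections import deque


def histogram_threshold(img, fraction=0.085):
    """Step 1 (assumed rule): gray level T such that at least `fraction` of pixels lie below T."""
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    cum = 0
    for g in range(256):
        cum += hist[g]
        if cum >= fraction * total:
            return g + 1
    return 256


def fill_holes(mask):
    """Binary filling: background pixels not 4-connected to the border become True."""
    rows, cols = len(mask), len(mask[0])
    outside = [[False] * cols for _ in range(rows)]
    queue = deque()
    for i in range(rows):
        for j in range(cols):
            if (i in (0, rows - 1) or j in (0, cols - 1)) and not mask[i][j]:
                outside[i][j] = True
                queue.append((i, j))
    while queue:
        i, j = queue.popleft()
        for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            if 0 <= a < rows and 0 <= b < cols and not mask[a][b] and not outside[a][b]:
                outside[a][b] = True
                queue.append((a, b))
    return [[mask[i][j] or not outside[i][j] for j in range(cols)] for i in range(rows)]


def method_a(img, fraction=0.085):
    t = histogram_threshold(img, fraction)
    pupil = [[p < t for p in row] for row in img]   # step 2: pupil mask
    filled = fill_holes(pupil)                      # step 3 stand-in: pupil + enclosed reflections
    # step 4: pupil and reflection pixels set to zero, everything else unchanged
    return [[0 if filled[i][j] else img[i][j] for j in range(len(img[0]))]
            for i in range(len(img))]
```

On real eye images other dark structures (eyelashes, shadows) may also fall below T, so a practical version would restrict the mask to the largest dark region.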

4.2.2 Method B

This method uses a threshold to set the pixels in a given Region of Interest (ROI) to a certain value.

Method B for reflection removal

Input: $I_{in}$ - input image, with 256 gray levels

X, Y - upper-left corner of the ROI

W, H - width and height of the ROI

T - threshold for comparison

Output: $I_{out}$ - image without reflections on the pupil

1. Over $I_{in}$, locate the set of pixels below T.

2. Isolate this set of pixels to form the ROI.

3. Scan the ROI horizontally, from top to bottom, and for each line replace each pixel by the average of the pixels at both ends of that line.

4. $I_{out} \leftarrow$ image with the ROI pixels set to these average values.
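A minimal Python sketch of method B, under two assumptions: the ROI of steps 1 and 2 is the bounding box of all pixels below T, returned as (top row, left column, height, width), and the line average of step 3 is the integer mean of the two end pixels of each ROI row. The function names are hypothetical.

```python
def roi_below(img, t):
    """Steps 1-2 (assumed): bounding box (top, left, height, width) of pixels below t, or None."""
    pts = [(i, j) for i, row in enumerate(img) for j, p in enumerate(row) if p < t]
    if not pts:
        return None
    top = min(i for i, _ in pts)
    bottom = max(i for i, _ in pts)
    left = min(j for _, j in pts)
    right = max(j for _, j in pts)
    return top, left, bottom - top + 1, right - left + 1


def method_b(img, t):
    out = [row[:] for row in img]
    roi = roi_below(img, t)
    if roi is None:
        return out
    top, left, h, w = roi
    for i in range(top, top + h):                          # step 3: top-down scan
        avg = (img[i][left] + img[i][left + w - 1]) // 2   # mean of the line's two ends
        for j in range(left, left + w):
            out[i][j] = avg                                # step 4
    return out
```

The approach works when both ends of each ROI line lie on pupil pixels; a reflection touching the ROI border would leak its brightness into the average.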

4.2.3 Method C

This third method uses morphological filters (Lim, 1990) to fill areas with undesired effects, such as a white circle containing a black spot. In this situation, the morphological filter completely fills the white circle. The filter is applied to the negative version of the image and, after processing, the image is transformed back to its original domain.

Method C for reflection removal

Input: $I_{in}$ - input image, with 256 gray levels

Output: $I_{out}$ - image without reflections on the pupil

1. $\tilde{I}_{in} \leftarrow$ negative version of $I_{in}$ (Lim, 1990).

2. $\tilde{I}_{p} \leftarrow$ output of the morphological filling filter on $\tilde{I}_{in}$.

3. $I_{out} \leftarrow$ negative version of $\tilde{I}_{p}$.

Figure 11 shows the results obtained by this method; the white reflection is clearly removed. Among the three methods, this one is the fastest: on average, it takes about 40 ms to run on a UBIRIS image.

Figure 11: Illustration of reflection removal by method C.
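Method C can be sketched in Python with grayscale hole filling implemented as morphological reconstruction by erosion: the marker equals the image on the border and the image maximum elsewhere, and it is repeatedly eroded (4-connected) but never allowed to drop below the image. Dark regions of the negative that do not touch the border, such as a reflection inside the (now bright) pupil, are raised to the level of their surroundings. This is a plain, unoptimized sketch; the filling filter the authors used may differ in connectivity or implementation.

```python
def fill_grayscale(img):
    """Grayscale hole filling by morphological reconstruction by erosion."""
    rows, cols = len(img), len(img[0])
    peak = max(max(row) for row in img)
    # marker: the image itself on the border, the maximum value elsewhere
    marker = [[img[i][j] if i in (0, rows - 1) or j in (0, cols - 1) else peak
               for j in range(cols)] for i in range(rows)]
    changed = True
    while changed:
        changed = False
        new = [row[:] for row in marker]
        for i in range(rows):
            for j in range(cols):
                m = marker[i][j]
                for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
                    if 0 <= a < rows and 0 <= b < cols:
                        m = min(m, marker[a][b])   # erosion (4-connected)
                v = max(m, img[i][j])              # never go below the image
                if v != new[i][j]:
                    new[i][j] = v
                    changed = True
        marker = new
    return marker


def method_c(img):
    negative = [[255 - p for p in row] for row in img]   # step 1
    filled = fill_grayscale(negative)                     # step 2
    return [[255 - p for p in row] for row in filled]     # step 3
```

The marker only decreases and is bounded below by the image, so the loop terminates; its cost grows with the size of the regions the border values must propagate through.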

4.2.4 Pupil Isolation

After reflection removal, we apply a pupil enhancement algorithm to obtain better results in the segmentation phase; it isolates the pupil from the rest of the image. The algorithm for pupil isolation is divided into four stages:

• enhancement and smoothing - apply a reflection removal method and a Gaussian filter to smooth the image;

• detection - edge and contour detection of the pupil, producing a binary image;

• isolation - remove the contours outside a specific area, isolating the pixels along the pupil;

• dilation and filling - dilate the image and fill the points inside the pupil.

Pupil isolation

Input: $I_{in}$ - input image, with 256 gray levels

$\sigma$ - standard deviation of the Gaussian filter

T - minimum number of pupil pixels

Output: $I_{out}$ - binary image with an isolated pupil

1. Remove reflections (method A, B or C) from $I_{in}$.

2. Apply a Gaussian filter $G_\sigma$ to smooth the image.

3. Apply the Canny edge detector (Lim, 1990) for pupil and iris detection; retain only the pupil area.

4. While the number of white pixels is below T, dilate the detected contours.

5. $I_{out} \leftarrow$ output of the morphological filling filter.
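Steps 4 and 5 of the pupil isolation algorithm can be sketched in Python as follows, assuming the detected pupil contours are already available as a binary mask (steps 1 to 3 are omitted). The 4-connected dilation, the `max_iter` safety bound and the function names are hypothetical choices, not taken from the paper.

```python
from collections import deque


def dilate(mask):
    """One 4-connected binary dilation step."""
    rows, cols = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for i in range(rows):
        for j in range(cols):
            if mask[i][j]:
                for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
                    if 0 <= a < rows and 0 <= b < cols:
                        out[a][b] = True
    return out


def fill_holes(mask):
    """Binary filling: background pixels not 4-connected to the border become True."""
    rows, cols = len(mask), len(mask[0])
    outside = [[False] * cols for _ in range(rows)]
    queue = deque()
    for i in range(rows):
        for j in range(cols):
            if (i in (0, rows - 1) or j in (0, cols - 1)) and not mask[i][j]:
                outside[i][j] = True
                queue.append((i, j))
    while queue:
        i, j = queue.popleft()
        for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            if 0 <= a < rows and 0 <= b < cols and not mask[a][b] and not outside[a][b]:
                outside[a][b] = True
                queue.append((a, b))
    return [[mask[i][j] or not outside[i][j] for j in range(cols)] for i in range(rows)]


def isolate_pupil(contours, t, max_iter=50):
    """Step 4: dilate while fewer than t white pixels; step 5: fill the interior."""
    mask = [row[:] for row in contours]
    it = 0
    while sum(map(sum, mask)) < t and it < max_iter:
        mask = dilate(mask)
        it += 1
    return fill_holes(mask)
```

Dilation closes small gaps in the Canny contour, which matters because the filling step can only fill a region whose boundary is closed.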

4.3 Segmentation

The segmentation phase is of crucial importance, because without a proper segmentation it is impossible to perform recognition. We propose the following methods for segmentation: versions of the integro-differential operator, and template matching. For the former, we consider the following options: version 1 - a simplified version of (1) without the Gaussian smoothing function; version 2 - a finite-difference approximation to the derivative, interchanging the order of convolution and integration as in (Daugman, 1993); version 3 - the operator as in (1). To speed up the operator, we compute the contour integral over a restricted range of angles totaling 180° ($\theta \in [-\pi/4, \pi/4] \cup [3\pi/4, 5\pi/4]$).
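The restricted angular range can be sketched in Python as two sampled 90° arcs; combined with a plain finite difference over consecutive radii and no Gaussian smoothing, this gives a version-1-style boundary search for a fixed center. The number of samples per arc and the function names are illustrative assumptions.

```python
import math


def restricted_angles(n_per_arc=32):
    """Angles on the two opposite 90-degree arcs [-pi/4, pi/4] and [3pi/4, 5pi/4]."""
    step = (math.pi / 2) / (n_per_arc - 1)
    right = [-math.pi / 4 + k * step for k in range(n_per_arc)]
    left = [3 * math.pi / 4 + k * step for k in range(n_per_arc)]
    return right + left


def arc_mean(img, x0, y0, r, angles):
    """Mean gray level over the sampled arcs (x indexes rows, y columns)."""
    vals = []
    for t in angles:
        x = int(round(x0 + r * math.cos(t)))
        y = int(round(y0 + r * math.sin(t)))
        if 0 <= x < len(img) and 0 <= y < len(img[0]):
            vals.append(img[x][y])
    return sum(vals) / len(vals) if vals else 0.0


def boundary_radius(img, x0, y0, radii, angles):
    """Radius with the largest finite difference of arc means (no smoothing)."""
    means = [arc_mean(img, x0, y0, r, angles) for r in radii]
    diffs = [abs(means[i + 1] - means[i]) for i in range(len(means) - 1)]
    i = max(range(len(diffs)), key=diffs.__getitem__)
    return radii[i + 1]
```

Sampling half of each circle halves the work per contour integral compared with sampling the full circle at the same angular density.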

For UBIRIS, we devised a new strategy for the segmentation phase, based on a template matching approach. We propose to automatically segment the image by computing the cross-correlation between the iris image and several templates, and finding the maxima of this operation. Template matching is an extensively used technique in image processing (Lim, 1990). Since the iris and pupil regions are (approximately) circular, this technique is very suitable: it is only necessary to use circular templates of different sizes, with no need to take rotations of the templates into account. To cope with the range of diameters, we have used several versions of the templates. Supported by the study presented in Section 4.1, and to reduce the number of templates, we considered a range of diameters that covers 90% of the diameters displayed in Figure 8. We have thus chosen the set of diameters D = {20, 22, 24, 26, 28, 30}; four of these templates are depicted in Figure 12.

Figure 12: Templates used for the proposed template-matching-based segmentation stage on the UBIRIS database.

Figure 13: Developed application.
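A brute-force Python sketch of the template matching idea, assuming dark-disk templates (0 inside a disk of diameter d, 1 on a one-pixel margin) and normalized cross-correlation so that scores for different diameters are comparable. The normalization, the template margin and the function names are assumptions, since the paper specifies only cross-correlation with circular templates. The search returns the best score, the upper-left corner (row, column) of the matching window and the diameter.

```python
import math


def disk_template(d, margin=1):
    """Dark disk (0) of diameter d on a bright (1) square background."""
    s = d + 2 * margin
    c = (s - 1) / 2
    rad2 = (d / 2) ** 2
    return [[0.0 if (u - c) ** 2 + (v - c) ** 2 <= rad2 else 1.0 for v in range(s)]
            for u in range(s)]


def ncc_at(img, tpl, x, y):
    """Normalized cross-correlation between tpl and the window at (x, y)."""
    s = len(tpl)
    n = s * s
    win = [img[x + u][y + v] for u in range(s) for v in range(s)]
    t = [tpl[u][v] for u in range(s) for v in range(s)]
    mw, mt = sum(win) / n, sum(t) / n
    num = sum((a - mw) * (b - mt) for a, b in zip(win, t))
    den = math.sqrt(sum((a - mw) ** 2 for a in win) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else -1.0  # flat window: treat as no match


def match_pupil(img, diameters=(20, 22, 24, 26, 28, 30)):
    """Exhaustive search over positions and template diameters."""
    rows, cols = len(img), len(img[0])
    best = (-2.0, None, None, None)
    for d in diameters:
        tpl = disk_template(d)
        s = len(tpl)
        for x in range(rows - s + 1):
            for y in range(cols - s + 1):
                score = ncc_at(img, tpl, x, y)
                if score > best[0]:
                    best = (score, x, y, d)
    return best
```

With the one-pixel margin, the pupil center is the window corner plus (d + 1)/2 in each direction. Direct evaluation as above costs on the order of (image pixels) × (template pixels) per diameter, which is the cost the FFT implementation reduces.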

The difference between two consecutive templates is two pixels. We have found that it is not necessary to consider every integer diameter to obtain an accurate estimate of the diameter; this halves the number of comparisons. For the CASIA database, we proceed in a similar fashion, obtaining the diameters D = {70, 72, 74, ..., 124}. The cross-correlation-based template matching technique has an efficient implementation using the FFT (Fast Fourier Transform) and its inverse (Lim, 1990).
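The FFT shortcut rests on the correlation theorem. For a real template $t$ of size $P \times Q$ and an image $I$ of size $M \times N$, both zero-padded to at least $(M+P-1) \times (N+Q-1)$ so that the circular correlation computed with the discrete Fourier transform does not wrap around,

$$c(x,y) = \sum_{u}\sum_{v} t(u,v)\, I(x+u,\, y+v) = \mathcal{F}^{-1}\left\{ \overline{\mathcal{F}\{t\}} \cdot \mathcal{F}\{I\} \right\}(x,y),$$

where the overline denotes complex conjugation. With $S = (M+P-1)(N+Q-1)$ the padded size, each template then costs $O(S \log S)$ operations instead of $O(MNPQ)$ for direct summation, and $\mathcal{F}\{I\}$ needs to be computed only once and can be reused for every template in D. Any normalization of the correlation, if used, requires additional local sums of the image.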

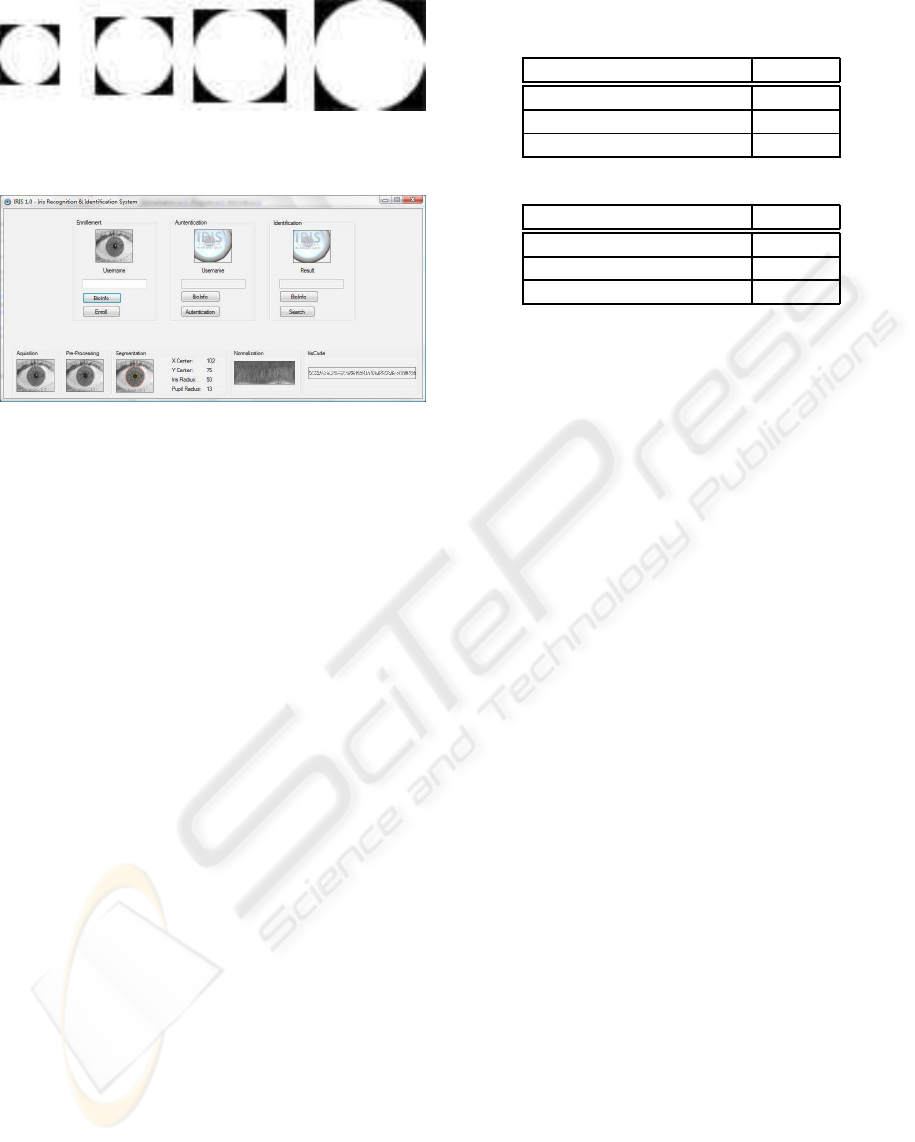

5 EXPERIMENTAL RESULTS

This section reports the experimental results obtained with our variants and modifications of Daugman's approach, using our C# application. Figure 13 shows a screenshot of the developed application, with the following functionalities: enrollment - register an individual in the system; authentication - verify the identity of an already registered user; identification - search for an individual.

Regarding the pre-processing stage, we have found that the reflection removal methods A, B and C presented in Section 4.2 attained similarly good results, as can be seen in Figures 10 and 11. The pupil enhancement algorithm also obtained good results, improving the segmentation stage.

5.1 Segmentation

For the segmentation phase of Daugman's algorithm, the variants described in Section 4.3, regarding the integro-differential operator and template matching, were tested.

Table 3: Integro-differential operator - version 3. For CASIAv3, 756 images were randomly selected.

| Database | Success |
|---|---|
| UBIRISv1 (1877 images) | 95.7% |
| CASIAv1 (756 images) | 98.4% |
| CASIAv3 (756 images) | 94% |

Table 4: Template matching for segmentation.

| Database | Success |
|---|---|
| UBIRISv1 (1877 images) | 96.3% |
| CASIAv1 (756 images) | 98.5% |
| CASIAv3 (756 images) | 98.8% |

5.1.1 Integro-differential Operator

We have found that the (fast) first version of the integro-differential operator obtained satisfactory results only for synthetic images. The second version performed slightly better, but the results were still unsatisfactory. Table 3 shows the percentage of success in the detection of the diameter and center of the pupil and iris for the third version of the operator (the slowest and most accurate version).

In Table 3, the worse results for CASIAv3 and UBIRIS are explained by the fact that these images contain reflections. The already pre-processed CASIAv1 images, as stated in Section 3.1, are easier to segment, which explains the better results.

5.1.2 Template Matching

Replacing the integro-differential operator with the template matching technique to perform the segmentation, we obtain the results displayed in Table 4.

Comparing Tables 4 and 3, template matching achieves better results with a lower processing time. In our tests, on both databases, the template matching approach runs about 7 to 10 times faster than the third version of the integro-differential operator. Template matching segmentation takes 0.1 and 1.5 seconds for UBIRIS and CASIA, respectively. This large difference is due to the higher resolution of the CASIA images, for which we have to use a larger number of templates (more than four times as many as for UBIRIS: 28 diameters versus 6). Finally, applying template matching followed by the integro-differential operator, we ran the same tests, obtaining the results in Table 5.

Comparing Tables 5 and 4, we conclude that this combination yields a very small gain, only for CASIAv1, and has a larger computation time than the previous approach. Therefore, it is preferable to use the template matching technique alone.

Table 5: Template matching followed by the integro-differential operator for segmentation.

| Database | Success |
|---|---|
| UBIRISv1 (1877 images) | 96.3% |
| CASIAv1 (756 images) | 98.7% |
| CASIAv3 (756 images) | 98.8% |

5.2 Recognition Rate

Using Gabor filters with eight orientations (see Figure 5) and four frequencies, our implementation achieved recognition rates of 87.2% and 88% for UBIRISv1 and CASIAv1, respectively. These rates can be improved; it is known that higher recognition rates can be achieved with Daugman's method on CASIAv1 using a larger IrisCode (Masek, 2003). Our main goal in this work was to show that, when pre-processed infrared (CASIAv1-like) images are not available, the reflection removal pre-processing stage is necessary, pupil enhancement methods are sometimes also necessary, and the segmentation stage can be performed much faster with an efficient FFT-based template matching approach.

6 CONCLUSIONS

We addressed the problem of iris recognition by modifying and extending Daugman's well-known method. We developed a C# application and evaluated its performance on the publicly available UBIRIS and CASIA databases. The study carried out over these databases allowed us to propose essentially two new ideas: reflection removal, and enhancement and isolation of the pupil and iris. For the reflection removal problem, we have proposed three different methods. The enhancement and isolation of the pupil, based on morphological filters, obtained good results for both databases. It is important to stress that these pre-processing algorithms depend on the image database. Regarding the segmentation stage, we replaced the integro-differential operator proposed by Daugman with an equally accurate and faster cross-correlation template matching criterion, which has an efficient implementation using the FFT and its inverse. In this way, we have improved the segmentation stage, because the template matching algorithm is more tolerant to noisy images than the integro-differential operator and runs faster. As future work, we intend to tune the algorithm for the noisy UBIRIS database.

REFERENCES

Arvacheh, E. M. (2006). A study of segmentation and normalization for iris recognition systems. Master's thesis, University of Waterloo.

Boles, W. (1997). A security system based on iris identification using wavelet transform. In L. C. Jain, editor, First Int Conf on Knowledge-Based Intelligent Electronic Systems, pages 533–541, Adelaide, Australia.

Daugman, J. (1993). High confidence visual recognition of persons by a test of statistical independence. IEEE Transactions on Pattern Analysis and Machine Intelligence, 15(11):1148–1161.

Daugman, J. (2004). How iris recognition works. IEEE Transactions on Circuits and Systems for Video Technology, 14(1):21–30.

Greco, J., Kallenborn, D., and Nechyba, M. C. (2004). Statistical pattern recognition of the iris. In 17th Annual Florida Conference on the Recent Advances in Robotics (FCRAR).

Jain, A. K., Ross, A., and Prabhakar, S. (2004). An introduction to biometric recognition. IEEE Transactions on Circuits and Systems for Video Technology, 14(1).

J.Huang, Y.Wang, T.Tan, and J.Cui (2004). A new iris segmentation method for recognition. In 17th Int Conf on Pattern Recognition (ICPR'04).

Joung, B. J., Kim, J. O., Chung, C. H., Lee, K. S., Yim, W. Y., and Lee, S. H. (2005). On improvement for normalizing iris region for a ubiquitous computing. Computational Science and Its Applications ICCSA 2005, pages 1213–1219.

Lim, J. (1990). Two-dimensional Signal and Image Processing. Prentice Hall.

Maltoni, D., Maio, D., Jain, A. K., and Prabhakar, S. (2005). Handbook of Fingerprint Recognition. Springer, 1st edition.

Masek, L. (2003). Recognition of human iris patterns for biometric identification. Master's thesis, University of Western Australia.

Proença, H. (2007). Towards Non-Cooperative Biometric Iris Recognition. PhD thesis, Universidade da Beira Interior.

Proença, H. and Alexandre, L. A. (2005). UBIRIS: a noisy iris image database. Lecture Notes in Computer Science ICIAP 2005 - 13th International Conference on Image Analysis and Processing, 1:970–977.

Vatsa, M., Singh, R., and Gupta, P. (2004). Comparison of iris recognition algorithms. In Proceedings of International Conference on Intelligent Sensing and Information Processing, pages 354–358.

Wildes, R. (1997). Iris recognition: an emerging biometric technology. Proceedings of the IEEE, 85(9):1348–1363.

Yao, P., Li, J., Ye, X., Zhuang, Z., and Li, B. (2006). Analysis and improvement of an iris identification algorithm. In 18th International Conference on Pattern Recognition (ICPR'06).
