ARGUING OVER MOTIVATIONS WITHIN THE V3A-ARCHITECTURE FOR SELF-ADAPTATION

Maxime Morge (1), Kostas Stathis (2) and Laurent Vercouter (3)

(1) Dipartimento di Informatica, Università di Pisa, Largo B. Pontecorvo, 3, Italy

(2) Department of Computer Science, Royal Holloway, University of London, Egham, TW20 0EX, U.K.

(3) Centre G2I, Ecole des Mines de Saint Etienne, 158, cours Fauriel, F-42023 Saint-Etienne, France

Keywords: Multiagent systems, Agent models of motivation and personality, Agent architecture, Argumentation, Self-adaptation.

Abstract: The Vowel Agent Argumentation Architecture (V3A) is an abstract model by means of which an autonomous agent argues with itself to manage its motivations and arbitrate its possible internal conflicts. We propose an argumentation technique which specifies the internal dialectical process and a dialogue-game amongst internal components which can dynamically join/leave the game, thus having the potential to support the development of self-adaptive agents. We exemplify this dialectical representation of the V3A model with a scenario, whereby components of the agent’s mind called facets can be automatically downloaded to argue an agent’s motivation.

1 INTRODUCTION

Component-based engineering is a promising approach to developing adaptive systems. Adaptation is obtained by replacing some components with others, or simply by changing the connections between components. This approach has already been adopted to build agents (Ricordel and Demazeau, 2000; Meurisse and Briot, 2001; Vercouter, 2004) in order to ease the modification of the internal structure of an agent. A current challenge is to automate this process in order to provide self-adaptive agents. During this process, the coherence of an assembly of components must be guaranteed, i.e. when the assembly is changed (addition/removal of components and/or connections), the result must be coherent. Solutions have been proposed to deal with these issues, but they are not completely satisfactory. The work of (Meurisse and Briot, 2001) proposes to foresee all the possible assemblies and seeks to describe what should be done in each case. This approach restricts the openness of the system to foreseen situations. Other works rely on human intervention (Ricordel and Demazeau, 2000) or do not guarantee the coherence of the resulting behaviour (Vercouter, 2004).

In this paper we propose the Vowel Agent Argumentation Architecture (V3A) to design self-adaptive agents. Inspired by the Vowels approach (Demazeau, 1995), V3A is built upon an intentional stance. Additionally, V3A is divided into components called facets, which encapsulate the different motivations of an agent. We view these motivations as arguments describing the conditional decisions to achieve goals. A facet can join the internal debate to argue for/against the adoption of a motivation. Then, the personality arbitrates the possible conflicts. Regarding self-adaptation, this personality corresponds to high-level guidelines for solving the conflicts that appear when the component assembly is modified. The contribution of the work is an abstract model of agency and the definition of a dialogue-game that can be played by facets which can dynamically join or leave the game. For this purpose, we consider an argumentation framework (Dung, 1995) built to realize this internal dialectical process within this modular architecture. A scenario illustrates the use of this mechanism.

The paper is organised as follows. Section 2 introduces the walk-through example to motivate our proposal. Section 3 presents the V3A model, Section 4 presents the argumentation framework used to support it, and Section 5 describes the dialogue-game that facets play. We conclude in Section 6, where we also present related work and discuss our plans for the future.

Morge M., Stathis K. and Vercouter L. (2009). ARGUING OVER MOTIVATIONS WITHIN THE V3A-ARCHITECTURE FOR SELF-ADAPTATION. In Proceedings of the International Conference on Agents and Artificial Intelligence, pages 214-219. DOI: 10.5220/0001657802140219. Copyright © SciTePress

2 WALK-THROUGH EXAMPLE

We motivate our approach with the following scenario. Max, who lives in Pisa, must reach London for a meeting. Since he does not like to spend too much time queuing to buy tickets, he has a PDA equipped with a personal service agent (PSA) that automatically buys a ticket when Max is in a train station. The PSA has been set up by the constructor to respect the user’s preferences and to use the computing system in order to assist the user.

Our starting point is the Vowels approach of multiagent oriented programming (Demazeau, 1995), which has been shown to be suited to developing agent platforms, e.g. see (Ricordel and Demazeau, 2000) and (Vercouter, 2004). In this approach a multiagent system (MAS) is analysed across five dimensions: Users, Agents, Environments, Interactions, and Organizations. The Vowels approach is generally used in the analysis stage of the building process in order to divide the problem according to these dimensions.

Users. Max has configured his PSA for this trip and does not allow his PSA to pay extra commissions, since he will not be refunded for these. When Max arrives in a railway station, the PSA automatically downloads the following four components.

Agents. The PSA has a representation of the available seller agent (SA).

Environments. The PSA has knowledge about the current location, the current time, the possible traffic troubles, and the train time schedule.

Interactions. The PSA has a representation of the protocol required for communicating with the SA.

Organizations. The PSA has a representation of the norms adopted by the system for the automatic payment.

In this way, when Max arrives in the railway station of Pisa, the PSA is able to request a train ticket to reach Pisa airport. Since the train is not fully booked, the SA sells one to the PSA and the payment is performed within the computing system. Therefore, Max has a reservation and can show his PDA to the controller agent on board. When Max arrives in Stansted, the PSA automatically replaces the four previous components. Since the SA adds extra fees to the train ticket price, the PSA does not register the user. Therefore, Max will pay for the train ticket on board to the controller agent.

3 THE V3A MODEL

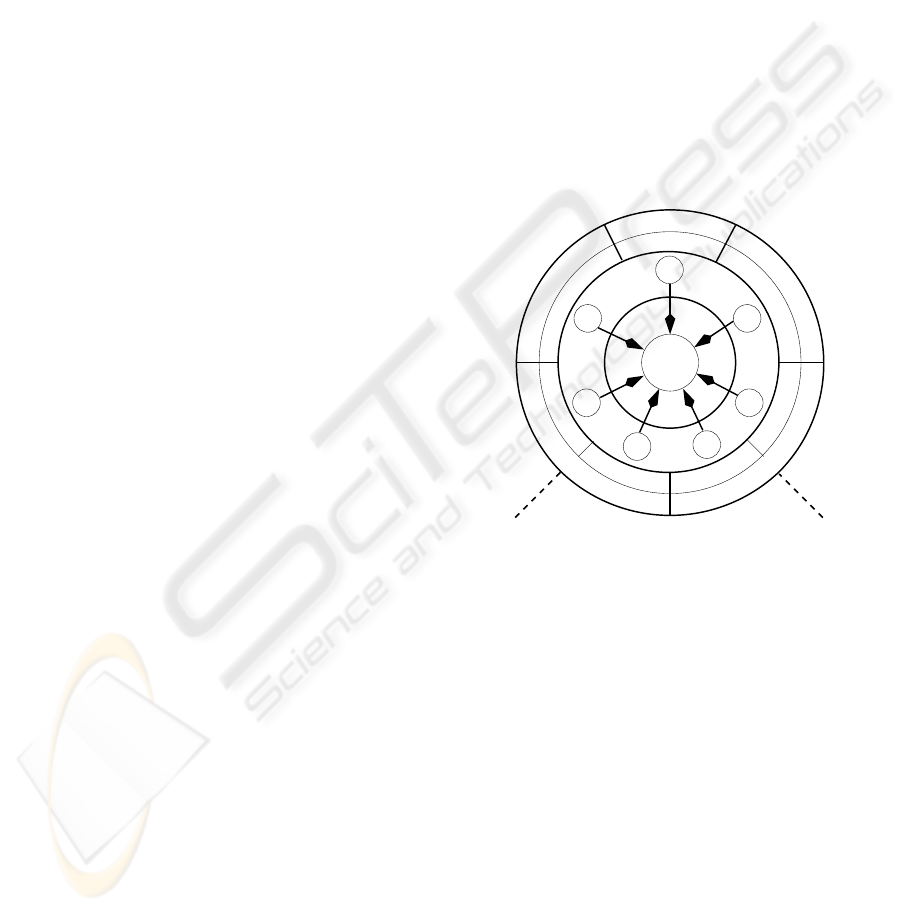

Our agent architecture (Fig. 1) consists of: a KBase (KB) partitioned according to the vowel dimensions ($KB_{user}$, $KB_{agt}$, $KB_{env}$, $KB_{int}$, $KB_{org}$), an Argumentation State ($AS$) and a personality ($pers$). The KBase is a repository of rules and assumptions, possibly conflicting. The KBase related to a dimension can still be too large and needs to be decomposed into different facets ($f_1, \ldots, f_7$). We further partition the dimensions linked to the external world to distinguish perceptions and capabilities. For instance, $KB_{env}$ contains the representation of the physical laws in the environment ($KB_{env_1}$) and the observations/actions perceived/performed by the body ($KB_{env_2}$). Similarly, $KB_{int}$ contains the protocols ($KB_{int_1}$) and the messages received/sent by the agent ($KB_{int_2}$). Facets join (respectively leave) the game to add (respectively delete) particular aspects of the whole possible agenda of the agent; taken together, these comprise every aspect that the agent can address.

Figure 1: The V3A agent architecture (labelled components: AS, Body, pers, KBuser, KBagt, KBenv with KBenv1 and KBenv2, KBint with KBint1 and KBint2, KBorg, and the facets f1 to f7).

The interaction between facets is organized as an argumentation game, consisting of dialogical moves about statements. The dialogue is regulated by procedural rules. $AS$ is a shared memory structure. Its current state includes a (partial) argument not yet defeated or subsumed. At the end of the game, $AS$ contains the action(s) to perform. That is, each facet argues for or against motivations. The facet objectives consist of promoting (or demoting) the goals to reach, the actions to perform (or not) and the beliefs to grant (or not), in order to actively achieve some aspects of the agent’s agenda or avoid some situations that would be detrimental. In addition, facets monitor the statements proposed by other facets in $AS$ to determine whether these statements interact with their own agenda. Essentially, each facet can have an opinion of what is best for the agent as a whole, but from its limited viewpoint. A facet must argue its case against/with other possibly competing/complementary views for this to become incorporated into the agent’s overt behavior. This behavior is determined by the personality, which specifies how to give priority to the facets and arbitrate amongst them to resolve the possible conflicts.

4 ARGUMENTATION FRAMEWORK

Our argumentation framework ($AF$) is based on the opposition calculus of (Dung, 1995), where arguments are reasons which can be defeated by other arguments.

Definition 1 (AF). An argumentation framework is a pair $AF = \langle \mathcal{A}, \mathit{defeats} \rangle$ where $\mathcal{A}$ is a finite set of arguments and $\mathit{defeats}$ is a binary relation over $\mathcal{A}$. We say that an argument $b$ defeats an argument $a$ if $(b, a) \in \mathit{defeats}$. Additionally, we say that a set of arguments $S$ defeats $a$ if there is some $b \in S$ such that $(b, a) \in \mathit{defeats}$.

(Dung, 1995) analyses when a set of arguments is collectively justified.

Definition 2 (Semantics). A set of arguments $S \subseteq \mathcal{A}$ is:

• conflict-free if $\forall a, b \in S$ it is not the case that $a$ defeats $b$;

• admissible if $S$ is conflict-free and $S$ defeats every argument $a$ which defeats some argument in $S$.

Amongst the semantics proposed in (Dung, 1995), we restrict ourselves to the admissible one.
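Definitions 1 and 2 can be checked mechanically on small frameworks. The following Python sketch is only an illustration under our own assumptions: arguments are plain strings, the defeat relation is a set of pairs, and the toy framework (`ARGS`, `DEFEATS`) is hypothetical rather than taken from the scenario.

```python
from itertools import combinations

def set_defeats(S, a, defeats):
    """A set S defeats a if some b in S satisfies (b, a) in defeats."""
    return any((b, a) in defeats for b in S)

def is_conflict_free(S, defeats):
    """No member of S defeats another member (or itself)."""
    return not any((a, b) in defeats for a in S for b in S)

def is_admissible(S, arguments, defeats):
    """S is conflict-free and defeats every argument defeating a member of S."""
    if not is_conflict_free(S, defeats):
        return False
    attackers = {a for a in arguments for b in S if (a, b) in defeats}
    return all(set_defeats(S, a, defeats) for a in attackers)

def admissible_sets(arguments, defeats):
    """Enumerate admissible subsets by brute force (tiny frameworks only)."""
    ordered = sorted(arguments)
    return [set(c)
            for k in range(len(ordered) + 1)
            for c in combinations(ordered, k)
            if is_admissible(set(c), arguments, defeats)]

# Hypothetical toy framework: x and y defeat each other, z defeats y.
ARGS = {"x", "y", "z"}
DEFEATS = {("x", "y"), ("y", "x"), ("z", "y")}
print(admissible_sets(ARGS, DEFEATS))
```

The brute-force enumeration is exponential in the number of arguments; it is meant to make the definitions concrete, not to suggest how the architecture computes admissible sets.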

We use $AF$ to model the reasoning within the V3A agent architecture.

Definition 3 (KF). A knowledge representation framework is a tuple $KF = \langle \mathcal{L}, \mathcal{A}sm, \mathcal{I}, \mathcal{T}, \mathcal{P} \rangle$ where:

• $\mathcal{L}$ is a formal language consisting of a finite set of sentences, called the representation language;

• $\mathcal{A}sm$ is a set of atoms in $\mathcal{L}$, called assumptions, which are taken for granted;

• $\mathcal{I}$ is a binary relation over atoms in $\mathcal{L}$, called the incompatibility relation, which is asymmetric;

• $\mathcal{T}$ is a finite set of rules built upon $\mathcal{L}$, called the theory;

• $\mathcal{P} \subseteq \mathcal{T} \times \mathcal{T}$ is a transitive, irreflexive and asymmetric relation over $\mathcal{T}$, called the priority relation.

$\mathcal{L}$ admits strong negation (classical negation) and weak negation (negation as failure). A strong literal is an atomic first-order formula, possibly preceded by strong negation $\neg$. A weak literal is a literal of the form $\sim L$, where $L$ is strong.

We adopt an assumption-based argumentation approach (Dung et al., 2007) to reason about beliefs, goals, decisions and priorities (Morge and Mancarella, 2007). That is, agents can reason under uncertainty. Actually, certain literals are assumable, meaning that they can be assumed to hold in the KB as long as there is no evidence to the contrary. Decisions (e.g. $\mathit{request}(\mathit{psa}, \mathit{sa}, \mathit{ticket})$) as well as some beliefs (e.g. $\sim \mathit{strike}$) are assumable literals.

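The incompatibility relation captures conflicts. We have $L \mathrel{\mathcal{I}} \neg L$, $\neg L \mathrel{\mathcal{I}} L$ and $L \mathrel{\mathcal{I}} \sim L$. It is not the case that $\sim L \mathrel{\mathcal{I}} L$. For instance, $\mathit{paycom}(\mathit{psa}, \mathit{sa}, \mathit{price}) \mathrel{\mathcal{I}} \neg \mathit{paycom}(\mathit{psa}, \mathit{sa}, \mathit{price})$ and $\neg \mathit{paycom}(\mathit{psa}, \mathit{sa}, \mathit{price}) \mathrel{\mathcal{I}} \mathit{paycom}(\mathit{psa}, \mathit{sa}, \mathit{price})$ whatever the price is. We say that two sets of sentences $\Phi_1$ and $\Phi_2$ are incompatible ($\Phi_1 \mathrel{\mathcal{I}} \Phi_2$) iff there is at least one sentence $\varphi_1$ in $\Phi_1$ and one sentence $\varphi_2$ in $\Phi_2$ such that $\varphi_1 \mathrel{\mathcal{I}} \varphi_2$.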
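The incompatibility relation and its lifting to sets can be illustrated with a few lines of Python. This is a minimal sketch under assumptions of ours: literals are ground strings, a leading `-` stands for strong negation and a leading `~` for weak negation, and only the three cases stated in the text are encoded.

```python
def incompatible(l1: str, l2: str) -> bool:
    """Return True iff l1 I l2 under the three cases stated in the text.

    Encoding (an assumption of this sketch): "-p" is strong negation of p,
    "~p" is weak negation of p.
    """
    if l1.startswith("~"):
        # It is not the case that ~L I L: a weak literal attacks nothing here.
        return False
    # L I -L and -L I L (strong negation, in both directions).
    strongly_negated = l1[1:] if l1.startswith("-") else "-" + l1
    # L I ~L (a strong literal is incompatible with its weak negation).
    return l2 == strongly_negated or l2 == "~" + l1

def sets_incompatible(phi1, phi2) -> bool:
    """Phi1 I Phi2 iff some sentence of Phi1 is incompatible with one of Phi2."""
    return any(incompatible(x, y) for x in phi1 for y in phi2)

print(incompatible("strike", "~strike"), incompatible("~strike", "strike"))
```

The asymmetric treatment of weak negation is visible in the last line: `strike` is incompatible with `~strike`, but not the other way round.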

A theory is a collection of rules with priorities over them.

Definition 4 (Theory). A theory $\mathcal{T}$ is an extended logic program, i.e. a finite set of rules s.t. $R: L_0 \leftarrow L_1, \ldots, L_j, \sim L_{j+1}, \ldots, \sim L_n$ with $n \geq 0$, each $L_i$ being a strong literal in $\mathcal{L}$. The literal $L_0$, called the head of the rule (denoted $\mathit{head}(R)$), is a statement. The finite set $\{L_1, \ldots, \sim L_n\}$, called the body of the rule, is denoted $\mathit{body}(R)$. $R$, called the name of the rule, is an atom in $\mathcal{L}$. All variables occurring in a rule are implicitly universally quantified over the whole rule. A rule with variables is a scheme standing for all its ground instances.

For simplicity, we will assume that the names of rules are neither in the bodies nor in the heads of the rules, thus avoiding self-reference problems. We consider the priority relation $\mathcal{P}$ on the rules in $\mathcal{T}$, which is transitive, irreflexive and asymmetric. $R_1 \mathrel{\mathcal{P}} R_2$ can be read “$R_1$ has priority over $R_2$”. When neither $R_1 \mathrel{\mathcal{P}} R_2$ nor $R_2 \mathrel{\mathcal{P}} R_1$ holds, there is no priority between $R_1$ and $R_2$, either because $R_1$ and $R_2$ are ex æquo, or because $R_1$ and $R_2$ are not comparable. For instance, $\mathit{user}(f_1, x_1) \mathrel{\mathcal{P}} \mathit{agt}(f_2, x_2)$ means that the rules in $KB_{user}$ have priority over the rules in $KB_{agt}$.

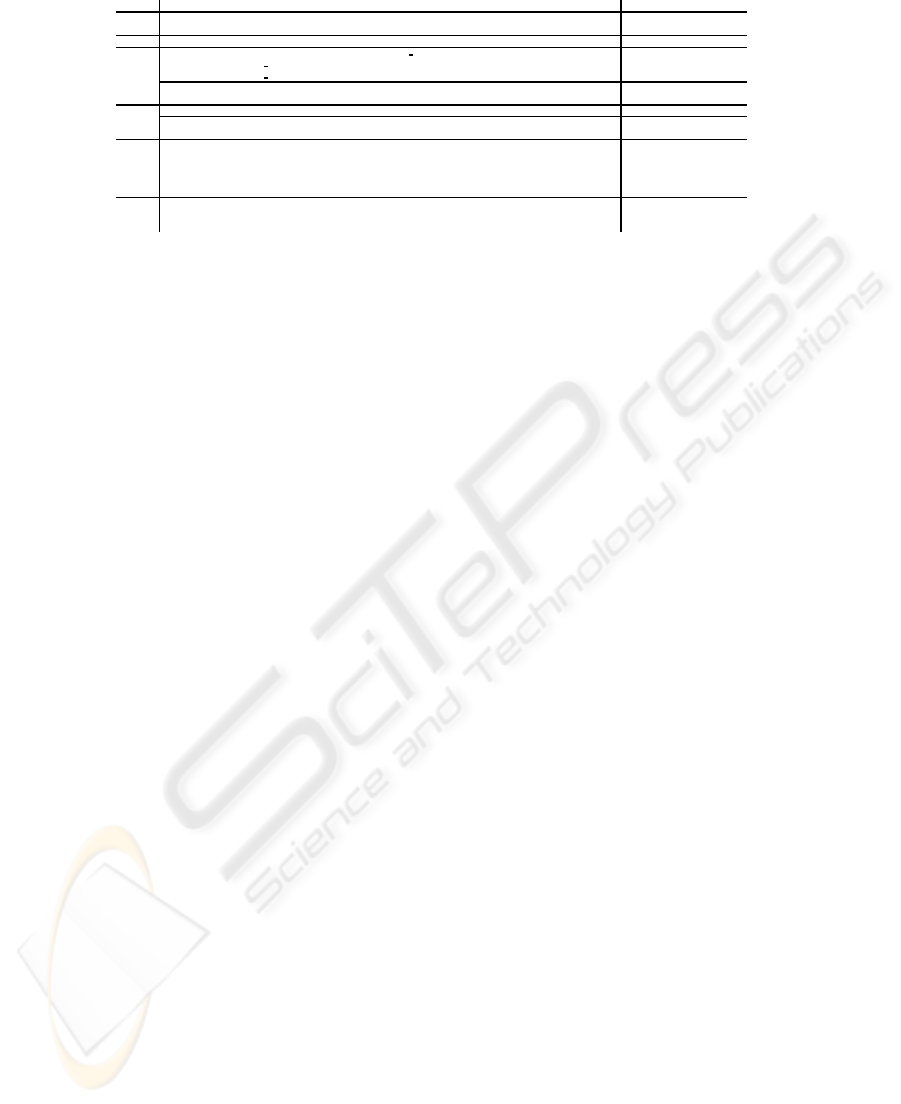

The KBases and the personality of the PSA in Pisa are depicted in Tab. 1.

Users KB. The user desires to reach the next travel step without paying any extra commissions.

Agents KB. The PSA knows that the SA can deliver tickets on request. Contrary to Italy, using the services of an SA in the UK requires paying an extra commission. The cost of tickets also depends on the context.

Environments KB. These facets work on percepts, e.g. the time and the location of the PSA (cf. $\mathit{env}(f_4, a_2)$), and on beliefs about the instant location, e.g. the time schedule (cf. $\mathit{env}(f_3, r_1)$).

Interactions KB. These facets also work on percepts, e.g. messages which will be received/sent, and on beliefs about protocols which depend on the context, e.g. $\mathit{int}(f_6, r_1(\mathit{aid}_1, \mathit{aid}_2, \mathit{ticket}, \mathit{price}, \mathit{comm}))$.

Organisations KB. The PSA must pay for a ticket to confirm its reservation.

Personality. Since the PSA has been set up by the constructor to respect the user’s preferences and assist him, the facets related to the user are preferred to those related to other agents. In order to utilise the computing system, the PSA prefers the organisation facet to the ones related to the environment. Contrary to other components, the personality is not embodied by facets; instead, these preferences are encoded in the procedural rules described informally in Section 5.

In this scenario, self-adaptation is crucial since, when Max arrives in a new location, new KBases can be downloaded and they replace the previous ones. It is worth noticing that the users KB and the personality are not modified.

Due to the abductive nature of proactive agent reasoning, arguments are built by reasoning backward.

Definition 5 (Argument). An argument $a$ for a statement $\alpha \in \mathcal{L}$ (denoted $\mathit{conc}(a)$) is a deduction of that conclusion whose premise is a set of rules (denoted $\mathit{rules}(a)$) and assumptions (denoted $\mathit{asm}(a)$) of $KF$.

The top-level link of $a$ (denoted $\top(a)$) is a rule s.t. its head is $\mathit{conc}(a)$.

The sentences of $a$ (denoted $\mathit{sent}(a)$) are the literals of $\mathcal{L}$ in the bodies/heads of the rules, including the assumptions of $a$.
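Backward construction of an argument can be sketched as a depth-first search from the conclusion down to assumptions. The Python below is a simplified illustration, not the authors' proof procedure: rules are ground, literals are strings, the function name `build_argument` is ours, and the knowledge base is a hand-grounded fragment of the Pisa KBases of Tab. 1 (with `train` instantiated to `lipifi`). It does not model the entries $\mathit{env}(f_4, a_1)$ and $\mathit{env}(f_4, a_2)$ that the Pisa example lists in $\mathit{rules}(a)$.

```python
# Ground rules: name -> (head, body). Fragment of the Pisa KBases (Tab. 1).
RULES = {
    "user(f1,r1)": ("motive", ["be(apisa,18)"]),
    "env(f3,r1)": ("be(apisa,18)",
                   ["be(pisa,17)", "~strike", "take_train(lipifi)"]),
    "env(f3,r3(lipifi))": ("take_train(lipifi)", ["register(lipifi,no)"]),
}
ASSUMPTIONS = {"~strike", "be(pisa,17)", "register(lipifi,no)"}

def build_argument(goal, rules, assumptions, seen=frozenset()):
    """Return (rule names, assumptions) supporting `goal`, or None.

    Reasoning goes backward: a goal is either assumed or reduced to the
    body of a rule whose head matches it. `seen` guards against cycles.
    """
    if goal in assumptions:
        return set(), {goal}
    if goal in seen:
        return None
    for name, (head, body) in rules.items():
        if head != goal:
            continue
        used, asms = {name}, set()
        for literal in body:
            sub = build_argument(literal, rules, assumptions, seen | {goal})
            if sub is None:
                break  # this rule cannot be supported, try the next one
            used |= sub[0]
            asms |= sub[1]
        else:
            return used, asms
    return None

rules_a, asm_a = build_argument("motive", RULES, ASSUMPTIONS)
print(sorted(rules_a), sorted(asm_a))
```

The search returns the first supporting deduction it finds; enumerating all arguments for a statement would require collecting every successful branch instead.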

In Pisa, the argument $a$ concludes $\mathit{motive}$ since the PSA does not register. This argument is defined s.t.

$\mathit{rules}(a) = \{\mathit{user}(f_1, r_1), \mathit{env}(f_3, r_1), \mathit{env}(f_4, a_1), \mathit{env}(f_4, a_2), \mathit{env}(f_3, r_3(\mathit{lipifi}))\}$,

$\top(a) = \mathit{user}(f_1, r_1)$, and

$\mathit{asm}(a) = \{\sim \mathit{strike}, \mathit{register}(\mathit{lipifi}, \mathit{no}), \mathit{be}(\mathit{pisa}, 17)\}$.

By contrast, the argument $b$ concludes $\mathit{motive}$ if the PSA requests a ticket, pays for it and registers. This argument is defined s.t.

$\mathit{rules}(b) = \{\mathit{user}(f_1, r_1), \mathit{env}(f_3, r_1), \mathit{env}(f_3, r_2(\mathit{lipifi})), \mathit{org}(f_7, r_1(\mathit{lipifi}, \mathit{ticket}, \mathit{price}, \mathit{comm})), \mathit{agt}(f_2, r_1(\mathit{ticket})), \mathit{int}(f_6, r_1(\mathit{sa}, \mathit{psa}, \mathit{ticket}, \mathit{price}, \mathit{comm}))\}$,

$\top(b) = \mathit{user}(f_1, r_1)$, and

$\mathit{asm}(b) = \{\sim \mathit{strike}, \mathit{be}(\mathit{pisa}, 17), \mathit{pay}(\mathit{psa}, \mathit{sa}, 4, 0), \mathit{request}(\mathit{psa}, \mathit{sa}, \mathit{ticket})\}$.

After self-adaptation, similar arguments exist in Stansted.

We define here the defeat relation. Firstly, we define the attack relation to deal with conflicting arguments.

Definition 6 (Attacks). Let $a$ and $b$ be two arguments. $a$ attacks $b$ iff $\mathit{sent}(a) \mathrel{\mathcal{I}} \mathit{sent}(b)$.

This relation encompasses both the rebuttal attack, due to the incompatibility of conclusions, and the undermining attack, i.e. directed to a “subconclusion”. The strength of arguments depends on the priority of their sentences. In order to give a criterion that allows us to prefer one argument over another, we consider here the last link principle to promote high-level goals.

Definition 7 (Strength). Let $a$ and $b$ be two arguments. $a$ is stronger than $b$ (denoted $\mathit{prior}(a, b)$) if it is the case that $\top(a) \mathrel{\mathcal{P}} \top(b)$.

The two previous relations can be combined.

Definition 8 (Defeats). Let $a$ and $b$ be two arguments. $a$ defeats $b$ iff: i) $a$ attacks $b$; ii) it is not the case that $\mathit{prior}(b, a)$.
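Definitions 6 to 8 compose directly. The Python sketch below is an illustration under assumptions of ours: an argument is reduced to its top-level rule name and its set of sentences, literals use `-` for strong and `~` for weak negation, the priority relation is a set of pairs of rule names assumed to be already transitively closed, and the two arguments and rule names (`r_org`, `r_env`) are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Argument:
    top: str              # name of the top-level rule
    sentences: frozenset  # literals occurring in the argument

def incompatible(l1, l2):
    """L I -L, -L I L and L I ~L; never ~L I L (string encoding assumed)."""
    if l1.startswith("~"):
        return False
    negated = l1[1:] if l1.startswith("-") else "-" + l1
    return l2 == negated or l2 == "~" + l1

def attacks(a, b):
    """Definition 6: sent(a) I sent(b)."""
    return any(incompatible(x, y) for x in a.sentences for y in b.sentences)

def prior(a, b, priority):
    """Definition 7 (last link principle): top(a) P top(b)."""
    return (a.top, b.top) in priority

def defeats(a, b, priority):
    """Definition 8: a attacks b and b is not stronger than a."""
    return attacks(a, b) and not prior(b, a, priority)

# Hypothetical arguments with conflicting conclusions.
reg = Argument("r_org", frozenset({"register(lipifi,yes)"}))
noreg = Argument("r_env", frozenset({"-register(lipifi,yes)"}))
PRIORITY = {("r_org", "r_env")}  # organisation rule preferred to environment rule

print(defeats(reg, noreg, PRIORITY), defeats(noreg, reg, PRIORITY))
```

With an empty priority relation the two arguments defeat each other; the priority pair breaks the symmetry in favour of the argument whose top-level rule is preferred.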

In Pisa, the argument $b$ is in an admissible set since the organisation has priority over the environment. This argument describes the motivation for registering. After the self-adaptation of the PSA in London, even if a new travel connection is considered, this argument is no longer admissible since an extra commission is required. Due to the agent’s personality, argument $a$ is reinstated and the PSA does not register.

5 DIALOGUE-GAME

The result of the debate amongst facets is an argument sketched in $AS$. We consider here the procedural rules which regulate the exchanges of moves to reach an agreement. For this purpose, we instantiate a dialectical framework (Prakken, 2006).

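Definition 9 (Dialectical Framework). Let us consider the $\mathit{topic}$, i.e. a statement in $\mathcal{L}$, and $\mathcal{FCL}$ a facet communication language. The dialectical framework is a tuple $DF(\mathit{topic}, KF) = \langle P, AS, \Omega_M, H, T, \mathit{proto}, Z \rangle$ where:

• $P = \{p_1, \ldots, p_n\}$ is a set of $n$ players;

• $AS = \langle \mathit{Pro}, \mathit{Opp} \rangle$ is composed of two boards $\mathit{Pro}$ and $\mathit{Opp}$ which contain the literals held by the proponents and the opponents respectively;

• $\Omega_M \subseteq \mathcal{FCL}$ is a set of well-formed moves;

• $H$ is a set of histories, the sequences of well-formed moves s.t. the speaker of a move is determined at each stage by the turn-taking function $T$ and the moves agree with the protocol $\mathit{proto}$;

• $T: H \rightarrow P$ is the turn-taking function;

• $\mathit{proto}: H \times AS \rightarrow \Omega_M$ is the function determining the moves which are allowed to expand a history;

• $Z$ is the set of dialogues, i.e. the terminal histories where the proponent (respectively opponent) board is a set of assumable literals (respectively empty).

Table 1: The KBases of the PSA in Pisa.

| | $\mathcal{T}$ | $\mathcal{A}sm$ |
|---|---|---|
| $KB_{user}$ | $\mathit{user}(f_1, r_1)$: $\mathit{motive} \leftarrow \mathit{be}(\mathit{apisa}, 18)$ | $\neg \mathit{pay}(\mathit{psa}, \mathit{aid}, \mathit{price}, \mathit{comm})$ with $\mathit{comm} \neq 0$ |
| | $\mathit{user}(f_1, r_2)$: $\mathit{motive} \leftarrow \mathit{be}(\mathit{london}, 22)$ | |
| $KB_{agt}$ | $\mathit{agt}(f_2, r_1(\mathit{ticket}))$: $\mathit{buy}(\mathit{psa}, \mathit{sa}, \mathit{ticket}, 4, 0) \leftarrow \mathit{accept}(\mathit{psa}, \mathit{sa}, \mathit{ticket}, 4, 0)$ | |
| $KB_{env}$ | $\mathit{env}(f_3, r_1)$: $\mathit{be}(\mathit{apisa}, 18) \leftarrow \mathit{be}(\mathit{pisa}, 17), \sim \mathit{strike}, \mathit{take\_train}(\mathit{lipifi})$ | $\mathit{register}(\mathit{train}, \mathit{no})$ |
| | $\mathit{env}(f_3, r_2(\mathit{train}))$: $\mathit{take\_train}(\mathit{train}) \leftarrow \mathit{register}(\mathit{train}, \mathit{yes})$ | $\sim \mathit{strike}$ |
| | $\mathit{env}(f_3, r_3(\mathit{train}))$: $\mathit{take\_train}(\mathit{train}) \leftarrow \mathit{register}(\mathit{train}, \mathit{no})$ | $\mathit{be}(\mathit{pisa}, 17)$ |
| $KB_{int}$ | $\mathit{int}(f_6, r_1(\mathit{aid}_1, \mathit{aid}_2, \mathit{ticket}, \mathit{price}, \mathit{comm}))$: $\mathit{accept}(\mathit{aid}_1, \mathit{aid}_2, \mathit{ticket}, \mathit{price}, \mathit{comm}) \leftarrow \mathit{request}(\mathit{aid}_2, \mathit{aid}_1, \mathit{ticket})$ | $\mathit{request}(\mathit{psa}, \mathit{aid}_1, \mathit{ticket})$ |
| | $\mathit{int}(f_6, r_2(\mathit{aid}_1, \mathit{aid}_2, \mathit{ticket}, \mathit{price}, \mathit{comm}))$: $\neg \mathit{accept}(\mathit{aid}_1, \mathit{aid}_2, \mathit{ticket}) \leftarrow \mathit{request}(\mathit{aid}_2, \mathit{aid}_1, \mathit{ticket})$ | |
| $KB_{org}$ | $\mathit{org}(f_7, r_1(\mathit{train}, \mathit{ticket}, \mathit{price}, \mathit{comm}))$: $\mathit{register}(\mathit{train}, \mathit{yes}) \leftarrow \mathit{buy}(\mathit{psa}, \mathit{sa}, \mathit{ticket}, \mathit{price}, \mathit{comm}), \mathit{pay}(\mathit{psa}, \mathit{sa}, \mathit{price}, \mathit{comm})$ | $\mathit{pay}(\mathit{psa}, \mathit{sa}, \mathit{price}, \mathit{comm})$ |
| | $\mathit{org}(f_7, r_2(\mathit{train}, \mathit{ticket}, \mathit{price}, \mathit{comm}))$: $\neg \mathit{register}(\mathit{train}, \mathit{yes}) \leftarrow \neg \mathit{buy}(\mathit{psa}, \mathit{sa}, \mathit{ticket}, \mathit{price}, \mathit{comm})$ | |
| | $\mathit{org}(f_7, r_3(\mathit{train}, \mathit{ticket}, \mathit{price}, \mathit{comm}))$: $\neg \mathit{register}(\mathit{train}, \mathit{yes}) \leftarrow \mathit{buy}(\mathit{psa}, \mathit{sa}, \mathit{ticket}, \mathit{price}, \mathit{comm}), \neg \mathit{pay}(\mathit{psa}, \mathit{sa}, \mathit{price}, \mathit{comm})$ | |
| $\mathit{pers}$ | $\mathit{user}(f_1, x_1) \mathrel{\mathcal{P}} \mathit{agt}(f_2, x_2)$ | $\mathit{int}(f, x)$, $\mathit{org}(f, x)$, $\mathit{agt}(f, x)$, $\mathit{env}(f, x)$, $\mathit{user}(f, x)$ |
| | $\mathit{org}(f_1, x_1) \mathrel{\mathcal{P}} \mathit{env}(f_2, x_2)$ | |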

In the V3A architecture, the $DF$ allows multi-party dialogues amongst facets (the players) about $\mathit{motive}$ (the $\mathit{topic}$) within $KF$. Players claim literals during dialogues. Each dialogue is a maximally long sequence of moves. We call line the sub-sequence of moves where backtracking is ignored. Amongst players, the proponents argue for an initial claim while the opponents argue against it.

We define here the syntax and the semantics of moves. The syntax of moves conforms to a common facet communication language, $\mathcal{FCL}$. A move at time $t$: has an identifier, $mv_t$; is uttered by a speaker ($sp_t \in P$); optionally refers to $rp_t$, the identifier of the message to which $mv_t$ responds; and carries a speech act composed of a locution $\mathit{loc}_t$ and a content $\mathit{content}_t$. The locutions are $\mathit{claim}$, $\mathit{concede}$, $\mathit{oppose}$, $\mathit{deny}$, and $\mathit{unknown}$. The content is a set of atoms in $\mathcal{L}$.

The semantics of speech acts is public since all players confer the same meaning to the moves. The semantics is defined in terms of pre/post-conditions.

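Definition 10 (Semantics of $\mathcal{FCL}$). Let $t$ be the time of a history $h$ in $H$ ($0 \leq t < |h|$). $AS_0 = \langle \{\mathit{topic}\}, \emptyset \rangle$. The semantics of the utterance by the facet $f$ at time $t$ is defined s.t.:

1. $f$ may utter $\mathit{unknown}(\emptyset)$ and so $AS_{t+1} = AS_t$;

2. considering $L \in \mathit{Pro}_t$,

(a) $f$ may utter $\mathit{claim}(P)$, if $\exists r \in \mathcal{T}_f$ s.t. $\mathit{head}(r) = L$, $\mathit{body}(r) = P$, $P \cap \mathit{Pro}_t = \emptyset$, and it is not the case that $P \mathrel{\mathcal{I}} \mathit{Pro}_t$. Therefore, $AS_{t+1} = \langle (\mathit{Pro}_t \cup P) - \{L\}, \mathit{Opp}_t \rangle$,

(b) $f$ may utter $\mathit{concede}(\{L\})$, if $L \in \mathcal{A}sm_f$. Therefore, $AS_{t+1} = AS_t$,

(c) $f$ may utter $\mathit{oppose}(\{L'\})$, if $L' \mathrel{\mathcal{I}} L$. Therefore, $AS_{t+1} = \langle \mathit{Pro}_t - \{L\}, \mathit{Opp}_t \cup \{L'\} \rangle$;

3. considering $L \in \mathit{Opp}_t$,

(a) $f$ may utter $\mathit{claim}(P)$, if $\exists r \in \mathcal{T}_f$ s.t. $\mathit{head}(r) = L$, $\mathit{body}(r) = P$ and $P \cap \mathit{Opp}_t = \emptyset$. Therefore, $AS_{t+1} = \langle \mathit{Pro}_t, (\mathit{Opp}_t \cup P) - \{L\} \rangle$,

(b) $f$ may utter $\mathit{deny}(\{L'\})$, if $L' \mathrel{\mathcal{I}} L$. Therefore, $AS_{t+1} = \langle \mathit{Pro}_t \cup \{L'\}, \mathit{Opp}_t - \{L\} \rangle$.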
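The pre/post-conditions of Definition 10 can be read as pure functions from one argumentation state to the next. The Python below is a sketch under assumptions of ours, not the authors' prototype: literals are ground strings (`-` for strong, `~` for weak negation), the caller supplies the head and body of a rule it owns, and the function names are hypothetical. Concessions and pleas of ignorance leave the state unchanged and are therefore omitted.

```python
from typing import FrozenSet, NamedTuple

class AS(NamedTuple):
    pro: FrozenSet[str]  # board of the proponents
    opp: FrozenSet[str]  # board of the opponents

def incompatible(l1, l2):
    """L I -L, -L I L and L I ~L; never ~L I L."""
    if l1.startswith("~"):
        return False
    negated = l1[1:] if l1.startswith("-") else "-" + l1
    return l2 == negated or l2 == "~" + l1

def _require(condition, message):
    if not condition:
        raise ValueError(message)

def initial_state(topic):
    return AS(frozenset({topic}), frozenset())

def claim_on_pro(state, head, body):
    """Case 2(a): replace `head` in Pro by the body of a rule concluding it."""
    p = frozenset(body)
    _require(head in state.pro, "head must be on the proponent board")
    _require(not (p & state.pro), "body must be disjoint from Pro")
    _require(not any(incompatible(x, y) for x in p for y in state.pro),
             "body must not be incompatible with Pro")
    return AS((state.pro | p) - {head}, state.opp)

def oppose(state, target, counter):
    """Case 2(c): move the conflict about `target` to the opponent board."""
    _require(target in state.pro and incompatible(counter, target),
             "counter must be incompatible with a literal of Pro")
    return AS(state.pro - {target}, state.opp | {counter})

def claim_on_opp(state, head, body):
    """Case 3(a): replace `head` in Opp by the body of a rule concluding it."""
    p = frozenset(body)
    _require(head in state.opp, "head must be on the opponent board")
    _require(not (p & state.opp), "body must be disjoint from Opp")
    return AS(state.pro, (state.opp | p) - {head})

def deny(state, target, counter):
    """Case 3(b): answer an opponent literal with an incompatible one."""
    _require(target in state.opp and incompatible(counter, target),
             "counter must be incompatible with a literal of Opp")
    return AS(state.pro | {counter}, state.opp - {target})

s0 = initial_state("motive")
s1 = claim_on_pro(s0, "motive", ["be(apisa,18)"])
s2 = claim_on_pro(s1, "be(apisa,18)",
                  ["be(pisa,17)", "~strike", "take_train(lipifi)"])
s3 = oppose(s2, "~strike", "strike")
print(sorted(s3.pro), sorted(s3.opp))
```

Modelling the state as an immutable tuple makes backtracking straightforward in such a sketch, since earlier states remain available after each move.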

The rules to update $AS$ incorporate a filtering (in cases 2a and 3a) to be more efficient. Concretely, the sets of literals in $\mathit{Pro}$ and $\mathit{Opp}$ are filtered so that literals are not repeated, and the literals in $\mathit{Pro}$ are not incompatible with each other. The speech act $\mathit{unknown}(\emptyset)$ has no preconditions. Neither concessions nor pleas of ignorance have any effect on $AS$.

In order to be uttered, a move must be well-formed. The initial moves are initial claims and pleas of ignorance: $mv_0 \in \Omega_M$ iff $\mathit{loc}(mv_0) = \mathit{claim}$ or $\mathit{loc}(mv_0) = \mathit{unknown}$. The replying moves are well-formed iff they refer to an earlier move: $mv_j \in \Omega_M$ iff $rp_j = mv_i$ with $0 \leq i < j$. Notice that backtracking is allowed. Each dialogue is a sequence $h = (mv_0, \ldots, mv_{|h|-1})$ with $\mathit{proto}(h) = \emptyset$. In this way, the set $Z$ of dialogues is a set of maximally long histories, i.e. histories which cannot be expanded even if backtracking is allowed.

The turn-taking function $T$ determines the speaker of each move. If $h \in H$, $sp_0 = p_i$ and $j - i = |h| \pmod{n}$, then $T(h) = p_j$.
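The turn-taking condition amounts to a round-robin over the $n$ players, starting from the first speaker. A minimal Python sketch, assuming players are indexed from 1 to $n$ as in $P = \{p_1, \ldots, p_n\}$ (the function name `next_speaker` is ours):

```python
def next_speaker(history_length: int, first_index: int, n: int) -> int:
    """Return the index j in 1..n such that j - i = |h| (mod n).

    history_length is |h|, first_index is i (the index of the speaker
    of the first move), and n is the number of players.
    """
    return (first_index + history_length - 1) % n + 1

# With seven facets and f6 speaking first, the speakers cycle 6, 7, 1, 2, ...
print([next_speaker(length, 6, 7) for length in range(5)])
```

The `- 1` and `+ 1` only convert between the 1-based player indices and Python's 0-based modulo arithmetic.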

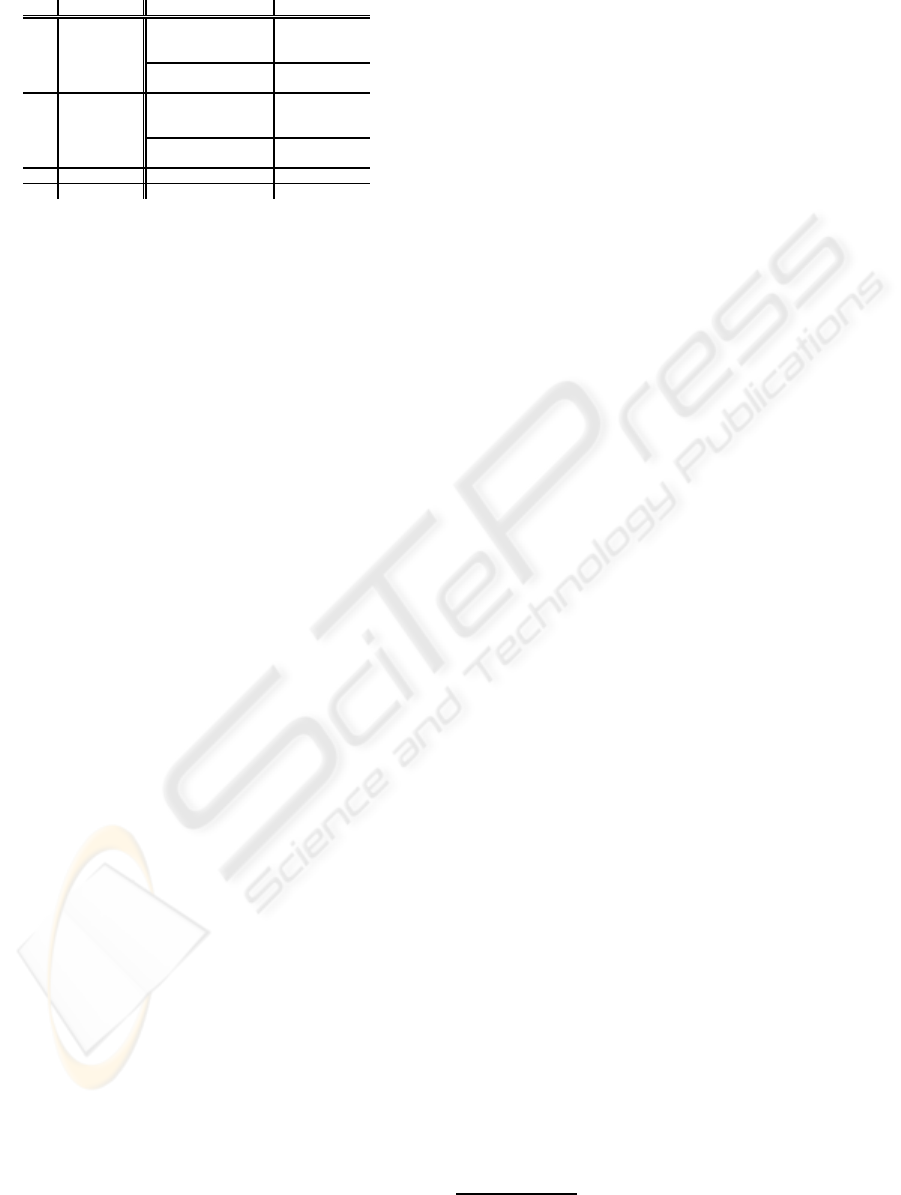

The protocol ($\mathit{proto}$) consists of a set of sequence rules (e.g. $sr_1, \ldots, sr_4$ represented in Tab. 2) specifying the legal replying moves. For example, $sr_1$ specifies the legal moves replying to a previous claim ($\mathit{claim}(P)$). The speech acts either resist or surrender, i.e. close the line. Players resist as much as possible. The locutions $\mathit{concede}$ and $\mathit{unknown}$ are utilised to manage the sequence of moves since they surrender, and so close the line but not necessarily the dialogue (backtracking is allowed). By contrast, a claim ($\mathit{claim}(P')$) and an opposition ($\mathit{oppose}(\{L'\})$) resist the previous claim. The moves replying to a denial ($\mathit{deny}(\{L'\})$) are the same as the moves replying to a claim ($\mathit{claim}(\{L'\})$). At the end of the game, $\mathit{Pro}$ may contain the assumptions of an argument deducing $\mathit{motive}$.

Table 2: Speech acts and the potential replies.

| | Speech acts | Resisting | Surrendering |
|---|---|---|---|
| $sr_1$ | $\mathit{claim}(P)$ | $\mathit{claim}(P')$, with $r$ s.t. $L = \mathit{head}(r) \in P$ and $\mathit{body}(r) = P'$; $\mathit{oppose}(\{L'\})$, with $L' \mathrel{\mathcal{I}} L$ and $L \in P$ | $\mathit{concede}(\{L\})$, with $L \in P$; $\mathit{unknown}(P)$ |
| $sr_2$ | $\mathit{oppose}(\{L\})$ | $\mathit{claim}(P')$, with $r$ s.t. $L = \mathit{head}(r)$ and $\mathit{body}(r) = P'$; $\mathit{deny}(\{L'\})$, with $L' \mathrel{\mathcal{I}} L$ | $\mathit{unknown}(P)$ |
| $sr_3$ | $\mathit{concede}(P)$ | $\emptyset$ | $\emptyset$ |
| $sr_4$ | $\mathit{unknown}(P)$ | $\emptyset$ | $\emptyset$ |
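At the level of locutions, the sequence rules reduce to a lookup table. The Python sketch below encodes only which locutions may reply to which, following Tab. 2 and the remark that a denial is answered like a claim; the conditions on the content of moves (rule heads, bodies and incompatibilities) are deliberately left out, and the names `REPLIES`, `legal_replies` and `closes_line` are ours.

```python
# Locution-level view of the sequence rules sr1..sr4 (content conditions omitted).
REPLIES = {
    "claim":   {"resist": ("claim", "oppose"), "surrender": ("concede", "unknown")},
    "oppose":  {"resist": ("claim", "deny"),   "surrender": ("unknown",)},
    "concede": {"resist": (),                  "surrender": ()},
    "unknown": {"resist": (),                  "surrender": ()},
}
# The text states that replies to a deny are the same as replies to a claim.
REPLIES["deny"] = REPLIES["claim"]

def legal_replies(locution: str) -> set:
    """All locutions that may reply to a move with the given locution."""
    entry = REPLIES[locution]
    return set(entry["resist"]) | set(entry["surrender"])

def closes_line(reply_locution: str) -> bool:
    """Surrendering replies (concede, unknown) close the current line."""
    return reply_locution in {"concede", "unknown"}

print(sorted(legal_replies("oppose")))
```

Since concessions and pleas of ignorance admit no reply, a line necessarily ends once one of them is uttered, although backtracking may still reopen an earlier move.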

6 CONCLUSIONS

We have proposed a dialectical argumentation framework allowing an agent to argue with itself about its motivations. The framework relies upon the admissibility semantics and uses an assumption-based argumentation approach to support reasoning about the knowledge, goals, and decisions held in the agent’s mental facets. These modules interact via a dialogue-game which is formally defined and exemplified via a concrete scenario. The contribution of the work is a modular model that allows the facets and the personality of an agent to be specified declaratively, manages potential conflicts and replaces components at runtime, thus avoiding the need to restart the agent’s reasoning process whenever a component joins or leaves the game.

Some of the concepts utilized here have been introduced in the AAA model (Witkowski and Stathis, 2004). However, here we provide a formal definition of the argumentation game that the original AAA model abstracted away from. We have also reinterpreted the original model by using the Vowels approach, which has an agent-oriented software engineering foundation.

Our work is also related to the KGP (Kakas et al., 2004b) model of agency and in particular to the modelling of the personality of the agent (Kakas et al., 2004a) through preferences. One important difference with (Kakas et al., 2004a) comes from our decomposition, which distinguishes explicitly the different aspects, possibly conflicting, that the agent must arbitrate. These aspects are embodied by faculties that are more amenable to being plug-and-play components at run-time using a multi-threaded implementation.

Future work includes investigating the properties of different dialogue-games for different semantics and properties. We also plan to extend the current prototype using CaSAPI (see footnote 1) to allow an internal dialectic that is multi-threaded and relies on facets that are interpreted by different proof systems implementing different kinds of reasoning such as epistemic reasoning, practical reasoning and normative reasoning.

ACKNOWLEDGEMENTS

This work is supported by the Sixth Framework IST

035200 ARGUGRID project.

REFERENCES

Demazeau, Y. (1995). From interactions to collective behaviour in agent-based systems. In Proc. of the First European Conference on Cognitive Science, pages 117–132, Saint Malo.

Dung, P. M. (1995). On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming and n-person games. Artificial Intelligence, 77(2):321–357.

Dung, P. M., Mancarella, P., and Toni, F. (2007). Computing ideal sceptical argumentation. Artificial Intelligence, Special Issue on Argumentation, 171(10-15):642–674.

Kakas, A. C., Mancarella, P., Sadri, F., Stathis, K., and Toni, F. (2004a). Declarative agent control. In Leite, J. A. and Torroni, P., editors, CLIMA V, volume 3487 of LNCS, pages 96–110.

Kakas, A. C., Mancarella, P., Sadri, F., Stathis, K., and Toni, F. (2004b). The KGP model of agency. In Proc. of ECAI, pages 33–37.

Meurisse, T. and Briot, J.-P. (2001). Une approche à base de composants pour la conception d’agents. Technique et Science Informatiques (TSI), 20(4).

Morge, M. and Mancarella, P. (2007). The hedgehog and the fox. An argumentation-based decision support system. In Proc. of ArgMAS, pages 55–68.

Prakken, H. (2006). Formal systems for persuasion dialogue. The Knowledge Engineering Review, 21:163–188.

Ricordel, P.-M. and Demazeau, Y. (2000). From analysis to deployment: A multi-agent platform survey. In Proc. of ESAW, volume 1972/2000 of LNCS, pages 93–105, Berlin, Germany. Springer Berlin / Heidelberg.

Vercouter, L. (2004). MAST: Un modèle de composants pour la conception de SMA. In Actes de JMAC’04, Paris, France.

Witkowski, M. and Stathis, K. (2004). A dialectic architecture for computational autonomy. In Agents and Computational Autonomy, pages 261–273. Springer Berlin.

1. http://www.doc.ic.ac.uk/~dg00/casapi.html
