AGENT APPROACH TO SITUATION ASSESSMENT

Giuseppe Paolo Settembre, Daniele Nardi

Department of Computer and System Science, Sapienza University, Rome, Italy

Roberta Pigliacampo

SESM s.c.a.r.l. - a Finmeccanica company, Via Tiburtina km 12.4, Rome, Italy

Keywords:

Knowledge Representation and Reasoning, Industrial Application of AI.

Abstract:

The Situation Assessment process is evolving from signal-analysis based centralized models to high-level

reasoning based net-centric models, according to new paradigms of information fusion proposed by recent

research.

In this paper we propose a knowledge-based approach to Situation Assessment, and we apply it to maritime

surveillance. A symbolic model of the world is given to an agent-based framework that uses Description

Logics based automatic reasoning to devise an estimate of the situation. The described approach potentially

allows distributed Situation Assessment through agent collaboration. The goal is to support the understanding

of the situation by relying on automatic interpretation processes, in order to provide the human operators with

a synthetic view, pointing out which elements of the scenario require human intervention.

The success of high level reasoning techniques is shown through experiments in a real maritime scenario, in

which our approach is compared to the performance of human operators who monitor the situation without

any support of an automatic reasoning system.

1 INTRODUCTION

Data Fusion is the basis for a huge industrial research

field (e.g. signal processing and sensor fusion) whose

aim is to develop techniques for security related sys-

tems.

According to the 1999 revision of the JDL Data Fusion

Model and its recent reformulations (Llinas et al.,

2004), the purpose of the third level of information

fusion (Situation Assessment) is “estimation and pre-

diction of relations among entities, to include force

structure and cross force relations, communications

and perceptual influences, physical context, etc.”; it is

also pointed out that understanding and predicting re-

lations among entities within a scenario have become

critical issues in surveillance activities. In fact, the

quality of information provided by low level analysis

(levels 1 and 2) no longer meets the applications' requirements, due to the increasing complexity of the scenarios and the operators' high workload.

The success of new technologies and standards

for formal knowledge representation offers the mo-

tivation to explore new approaches in order to pro-

vide effective solutions to Situation Assessment. In

our approach, we consider Situation Assessment as

the problem of extracting the best explanations of the

extensional knowledge of each agent, through situa-

tion classification, providing meaningful aggregated

information, to improve situation comprehension and

support decision making activities.

The approach described by this paper includes

the following innovative aspects. (1) It partially

fills the lack of a formal definition of the Situation Assessment process. In our formalization, the

new standards of reasoning techniques (e.g. OWL,

http://www.w3.org/TR/owl-guide/), and the evolution

to distributed paradigms are taken into account. (2)

It provides an algorithm to perform Situation Assess-

ment with a single agent, with a solution which is suit-

able for an evolution to multi-agent contexts.

The proposed approach allows a synergy between

autonomous and standard human analysis, since the

extraction of a high level description of the evolving

situation helps operators to focus only on a few potentially relevant elements of the global scenario. This is

coherent with the situation awareness theory (Endsley

and Garland, 2000), and with cognitive experiments

(Giompapa et al., 2006).


Settembre G., Nardi D. and Pigliacampo R. (2009).

AGENT APPROACH TO SITUATION ASSESSMENT.

In Proceedings of the International Conference on Agents and Artificial Intelligence, pages 287-290

DOI: 10.5220/0001664302870290

Copyright © SciTePress

In order to show the advantages of our approach to Situation Assessment, we ran experiments in a real-world seacoast scenario. We modeled some complex relationships which are monitored in a protected area, and compared the performance of the agent-based system with that of human operators, in collaboration with a company working on radar surveillance.

2 PROBLEM FORMALIZATION

We considered a certain number of objects $V = \{v_1, \dots, v_n\}$ moving in the scenario, each with a private goal, and a set of agents $A_1, \dots, A_m$. Each agent has a

world model, has perceptions, communicates with the

others, and takes part pro-actively in the classification

process. The intensional knowledge is shared among

all the agents, and constitutes a common language for

communication.

We define an event (perception) $e_i$ as a logical condition, which is the output of a feature extraction process, based on the observations of the environment. A situation at a certain time $t_i$ is commonly defined as the state of all the observed variables in the world at time $t_i$ and in the past (at time $t_j$, $j \in \{0, \dots, i-1\}$).

However, this definition does not suit the scope of Situation Assessment, because such a process does not aim at considering all the variables in the scenario, but instead at estimating the state of subsets of these variables, in order to extract a small number of relevant elements. Hence we consider the set of the relevant situation classes $\Sigma = \{S_1, \dots, S_n\}$, in which $S_i$ is a class of situations, significant with respect to the context. Basically, each relevant situation class represents a group of semantically equivalent circumstances of the world.

We say that $s_i \in S_j$ ($s_i$ is an instance of $S_j$, where $S_j \equiv f(e_1, \dots, e_n)$), if $\exists e_1, \dots, e_n$ s.t. $f(e_1, \dots, e_n) = \mathit{true}$, where $f(e_1, \dots, e_n)$ is a logical definition in a given formalism. From the point of view of the agent's knowledge, with $K_p(s_i \in S_j)$ we indicate that an agent $A_p$ knows that $s_i \in S_j$, where $S_j \equiv f(e_1, \dots, e_n)$. This happens iff $\exists e_1, \dots, e_n \in KB_p$ s.t. $f(e_1, \dots, e_n) = \mathit{true}$, where $KB_p$ is the knowledge base of that agent. A situation class $S_i \equiv f(e_{p_1}, \dots, e_{p_n})$ is more specific than $S_j \equiv g(e_{q_1}, \dots, e_{q_m})$, indicated with $S_i \subseteq S_j$, iff every instance of $S_i$ is also an instance of $S_j$.
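To make these notions concrete, here is a minimal Python sketch (Python is not the formalism used in the paper). A situation class is modelled as a named Boolean function over a set of events, and $K_p$ as the evaluation of that function on the events in an agent's knowledge base. The names `SituationClass`, `knows`, `more_specific` and the example event strings are hypothetical. The subsumption check is only an approximation by enumeration over a small vocabulary, whereas a DL reasoner decides $S_i \subseteq S_j$ from the definitions themselves.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Callable, FrozenSet, Iterable

Event = str  # an event e_i is treated as an opaque symbolic condition


@dataclass(frozen=True)
class SituationClass:
    """A situation class S_j together with its logical definition f over events."""
    name: str
    definition: Callable[[FrozenSet[Event]], bool]


def knows(kb: Iterable[Event], cls: SituationClass) -> bool:
    """K_p(s in S_j): the definition holds on the events found in the agent's KB."""
    return cls.definition(frozenset(kb))


def more_specific(a: SituationClass, b: SituationClass,
                  vocabulary: Iterable[Event]) -> bool:
    """Approximate S_a <= S_b by testing every subset of a small event vocabulary."""
    events = list(vocabulary)
    for size in range(len(events) + 1):
        for subset in combinations(events, size):
            world = frozenset(subset)
            if a.definition(world) and not b.definition(world):
                return False  # found an instance of S_a that is not in S_b
    return True


# Hypothetical event names, for illustration only.
near_border = SituationClass("NearBorder", lambda ev: "close_to_border" in ev)
suspect = SituationClass(
    "Suspect", lambda ev: {"close_to_border", "unknown_id"} <= ev
)

kb_p = {"close_to_border", "unknown_id"}
vocab = ["close_to_border", "unknown_id", "approaching"]
```

With these toy definitions, `knows(kb_p, suspect)` holds, and `more_specific(suspect, near_border, vocab)` holds while the converse does not.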

We can now provide a formal definition of the Situation Assessment process.

Definition 1. We say that $s_i$ is classified as $S_i$ by an agent $A_p$ iff $K_p(s_i \in S_i)$ and $\nexists S_j \neq S_i$ s.t. $K_p(s_i \in S_j) \wedge S_j \subseteq S_i$. We indicate with $c_p(KB_p, s_i) = S_i$ that the agent $A_p$, given its knowledge base $KB_p$ and a situation instance $s_i$, classifies $s_i$ as $S_i$.

Figure 1: Organization of components of Situation Assessment (UML inspired by (Matheus et al., 2003)).

Definition 2. We say that a classification $c_p(KB_p, s_i) = S_i$ is correct if $s_i \in S_i$.

Definition 3. We define Situation Assessment (SA) as the classification of all the instances $s_i$. SA is correct iff $\forall s_i$, $c_p(KB_p, s_i) = S_i$ is correct. SA is complete if $\forall s_i \in S_i$, $c_p(KB_p, s_i) = S_i$.
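The three definitions can be illustrated with a small Python sketch. It rests on several assumptions that are not in the paper: the toy taxonomy `SUPERCLASSES`, the function names, and the reading of "complete" as "every instance is assigned its true most specific class". Classification keeps a known class only when no other known class is strictly more specific.

```python
from typing import Dict, Optional, Set

# Hypothetical toy taxonomy: each class maps to ALL of its strict superclasses
# (transitively closed), so "Splitting is more specific than Situation".
SUPERCLASSES: Dict[str, Set[str]] = {
    "Situation": set(),
    "SuspectApproach": {"Situation"},
    "Splitting": {"Situation"},
}


def classify(known: Set[str]) -> Optional[str]:
    """Return the most specific class among those the agent knows for an instance.

    A class is kept when no other known class lies strictly below it. The sketch
    assumes a single such class exists and returns None otherwise.
    """
    most_specific = [
        c for c in known
        if not any(c in SUPERCLASSES[other] for other in known if other != c)
    ]
    return most_specific[0] if len(most_specific) == 1 else None


def is_correct(assessment: Dict[str, Optional[str]], truth: Dict[str, str]) -> bool:
    """Correct: each produced class really contains the instance (true class or a superclass)."""
    return all(
        c == truth[s] or c in SUPERCLASSES[truth[s]]
        for s, c in assessment.items() if c is not None
    )


def is_complete(assessment: Dict[str, Optional[str]], truth: Dict[str, str]) -> bool:
    """Complete: every instance is classified as its true most specific class."""
    return all(assessment.get(s) == c for s, c in truth.items())


# Made-up knowledge of one agent about two situation instances.
known_by_agent = {"s1": {"Situation", "Splitting"}, "s2": {"Situation"}}
truth = {"s1": "Splitting", "s2": "SuspectApproach"}
assessment = {s: classify(k) for s, k in known_by_agent.items()}
```

In this made-up example the assessment is correct but not complete: `s2` is only classified as the generic `Situation`, because the agent lacks the events needed to recognise a suspect approach.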

The relations among the elements of the Situation Assessment process are illustrated in Fig. 1. By classifying the significant situations as instances of the Relevant Situation concepts, which are represented in a taxonomy of situations (see Sec. 3), and thus using the Description Logics (DL) inference capabilities, we did not need to use any rule propagation engine (which would not be supported by reasoners).

3 SITUATION CLASSIFICATION

We modeled the agent’s knowledge us-

ing ontologies formalized in OWL DL

(http://www.w3.org/TR/owl-features), through

the editor Protégé (http://protege.stanford.edu), while

inference was performed through the RacerPro

reasoner (http://www.racer-systems.com). In our

approach, each agent is provided with two ontologies,

the domain ontology and the situation ontology. The

first is a content ontology (see (Llinas et al., 2004)),

while the second is a process ontology.

The “domain” Ontology. The domain ontol-

ogy is the symbolic representation of the elements

of the world, which have to be extracted from the

scenario and organized in a taxonomic structure.

Once the intensional knowledge (concepts,

relations) has been defined, the ontology is popu-

lated using data extracted from the agent's own information

sources, or provided by other agents. The main issues

about the population of the ontology (building of

the so-called ABOX) typically concern the mapping

from numeric data into their symbolic representation

(e.g., map coordinates into regions). This is solved at the sub-symbolic level, through specific procedures.
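As an illustration of this numeric-to-symbolic mapping, the following Python sketch turns a track position into symbolic assertions that could populate the ABOX. The paper does not describe its procedures, so everything here is an assumption: the rectangular region shapes, their bounds, the region names, and the `locatedIn` relation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Region:
    """An axis-aligned rectangular region with bounds in metres (hypothetical layout)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x < self.x_max and self.y_min <= y < self.y_max


# Made-up regions; a real deployment would load them from the scenario description.
REGIONS: List[Region] = [
    Region("CriticalArea", 0.0, 0.0, 1_000.0, 1_000.0),
    Region("SurveilledZone", 0.0, 0.0, 4_000.0, 4_000.0),
]


def assertions_for_track(track_id: str, x: float, y: float) -> List[Tuple[str, str, str]]:
    """Turn a numeric position into symbolic (relation, subject, object) assertions."""
    return [("locatedIn", track_id, r.name) for r in REGIONS if r.contains(x, y)]
```

A track outside every region simply produces no assertion, which keeps the symbolic layer free of raw coordinates.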

The “situation” Ontology. Once the domain

ontology has been populated, we can use our knowl-

edge on the scenario to identify the set of Relevant

Situation classes (see Sec.2), and organize them in

a second taxonomy. Each relevant situation class is

defined with constraints expressed in DL formalism,

using the terms (concepts, relations) included in

the domain ontology. Note that these definitions are

not rules expressed in an external formalism, but

DL defined concepts (rules are not included in the

system).

At this point, we are able to use automatic reasoning

and DL capabilities (Nardi and Brachman, 2003)

on the available knowledge to classify the current

situation instances. Now, the assessment of the

situation is simply the most specific classification of

each situation instance among the relevant situation

classes.

From the point of view of the extensional knowledge,

we must address carefully the creation of the correct

number of situation instances. We decided to have as many situation instances as there are independent circumstances in the world, and to examine them separately. In this way, different aspects of the same global situation are perceived as different instances. Moreover, separating independent situation instances gives a substantial advantage when agents start dialogues to compare their classifications.

Defining the right number of situation instances is not an easy problem. For example, if we declared a different situation instance for each location of the environment, we would not be able to catch those relationships (situation classes) which are aggregations of events in different locations. The solution we propose consists in identifying a subset $E$ of event classes which trigger the generation of a new situation instance. When a new event $e_i \in E$ is detected, a new situation instance $s_i$ is created, declared a member of the generic Situation class, and connected through a relation (hasObject in Fig. 1) with the event $e_i$. Whenever events are detected that, for agent $A_p$, satisfy the definition of a certain situation class $S$, $s_i$ is classified as a member of $S$, and $K_p(s_i \in S)$ holds.
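The triggering mechanism can be sketched in Python as follows. This is only an illustration under assumptions: the contents of `TRIGGER_CLASSES` (the subset $E$), the `ABox` container, and the identifier scheme `s1, s2, ...` are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical subset E of event classes that open a new situation instance.
TRIGGER_CLASSES: Set[str] = {"AppearsCloseToVessel", "StaysNearBorder"}


@dataclass
class ABox:
    """Extensional knowledge: class memberships and hasObject assertions."""
    members: Dict[str, Set[str]] = field(default_factory=dict)
    has_object: List[Tuple[str, str]] = field(default_factory=list)
    _counter: int = 0

    def on_event(self, event_id: str, event_class: str) -> Optional[str]:
        """Create a situation instance only for events whose class belongs to E."""
        if event_class not in TRIGGER_CLASSES:
            return None
        self._counter += 1
        situation_id = f"s{self._counter}"
        # The new instance starts in the generic class; a reasoner may later
        # refine it into a more specific situation class.
        self.members[situation_id] = {"Situation"}
        self.has_object.append((situation_id, event_id))
        return situation_id
```

Events outside $E$ do not create instances, so the number of situation instances tracks the number of independent circumstances rather than the number of raw perceptions.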

4 VALIDATION IN A HARBOUR SURVEILLANCE SCENARIO

Experiments have been performed on a medium-sized Italian harbour, in which an average of 80 vessels (military or civil) were moving at the same time with different goals. A radar covered an area of 10 km x 10 km.

Figure 2: A splitting situation. Two boats (red circles) performed a splitting close to a surveilled area (red arc) and one of them is directed to the critical point (in purple).

We considered 2 suspect operations to be detected:

Splitting: the manoeuvre of remaining hidden by staying close to another vessel, then suddenly moving away towards a critical area (see Fig. 2).

Suspect Approach: it occurs when a suspect vessel is approached by other vessels. A suspect vessel is a vessel whose identification is not known and which stays near the border of a surveilled zone.

We compared the performance of human operators provided with 5 different support systems. Every test session had a length of about 15 minutes.

In the first configuration, that we will call Agent

Support, we provided the operator with the agent

based system which performs autonomous Situation

Assessment as described in this paper. The situa-

tion Splitting is defined by the constraint “classify as

member, if and only if current situation contains a

track, which was first detected close to a zone bor-

der and close to another vehicle, and either one or the

other vehicle approaches a critical area”, expressed

with the DL formalism.

Whenever a vehicle $v_1$ is detected as appearing close to another vehicle $v_2$, a new situation instance $s_{v_1}$ is created, and the relation hasObject($s_{v_1}$, $v_1$) is populated. When the other properties in the definition are verified, $s_{v_1}$ is classified as splitting. A similar constraint is used for the class suspect approach.
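The quoted Splitting constraint can be paraphrased as a Boolean predicate. The Python sketch below is not the DL definition used in the experiments; the `Track` fields are hypothetical names for the symbolic facts mentioned in the constraint (first detection close to a zone border, first detection close to another vehicle, approach to a critical area).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    """Symbolic facts about one track; the field names are hypothetical."""
    track_id: str
    first_seen_close_to_border: bool = False
    first_seen_close_to: Optional["Track"] = None
    approaches_critical_area: bool = False


def is_splitting(track: Track) -> bool:
    """Necessary and sufficient condition paraphrasing the Splitting constraint."""
    other = track.first_seen_close_to
    if not track.first_seen_close_to_border or other is None:
        return False
    # Either one or the other vehicle must approach a critical area.
    return track.approaches_critical_area or other.approaches_critical_area
```

Because the condition is "if and only if", the predicate stays false until every required fact is present, which matches the behaviour of a strict symbolic definition.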

The 4 other configurations are:

No Support: the operator is provided with the out-

put of a multi-tracking system, with no elaboration to

support Situation Assessment.

Still Tracks Visualization: the system provides additional information, visualizing the still vehicles with a different colour.

Story Vis: the system also graphically shows the trajectory and the average and current speed.

1/3 Tracks: the same as the previous policy, but the

AGENT APPROACH TO SITUATION ASSESSMENT

289

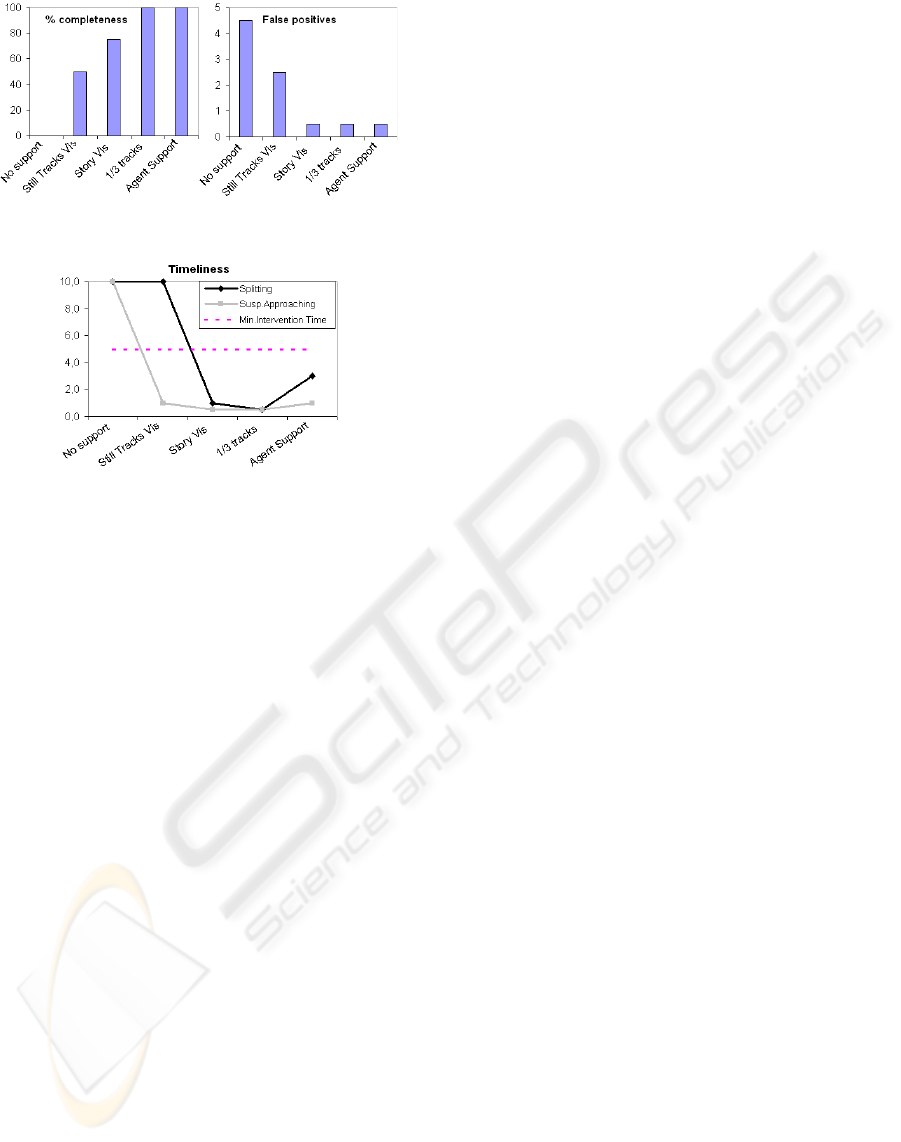

Figure 3: Comparison of completeness and correctness of

Situation Assessment within 5 different configurations.

Figure 4: Comparison of timeliness of Situation Assess-

ment within 5 different configurations.

number of tracks in the scenario is reduced to 1/3.

First, we measured the completeness of the ap-

proach, in terms of how many correct (splitting or

suspect approach) situations have been detected by

operators (Fig.3a). In Fig.3b, the correctness of the

approach is measured, in terms of the average num-

ber of incorrect detections of splitting and suspect ap-

proach situations (false positives). Both graphs show

that the “Agent support” configuration performs sim-

ilarly to the one in which operators are watching over

only 1/3 of the real everyday amount of traffic. In

the first 2 configurations, humans’ conclusions were

completely unreliable.
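For readers who want to reproduce this kind of measurement, the two quantities can be computed as in the Python sketch below. The function names and the situation identifiers in the usage note are made up for illustration and do not correspond to the data behind Fig. 3.

```python
from typing import Set


def completeness(detected: Set[str], actual: Set[str]) -> float:
    """Fraction of the actual suspect situations that were detected."""
    if not actual:
        return 1.0  # nothing to detect, so nothing was missed
    return len(detected & actual) / len(actual)


def false_positives(detected: Set[str], actual: Set[str]) -> int:
    """Number of reported situations that were not actual suspect situations."""
    return len(detected - actual)
```

For instance, with four actual situations `{"a", "b", "c", "d"}` and three reports `{"a", "b", "x"}`, `completeness` gives 0.5 and `false_positives` gives 1.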

Notice that the high absolute value of the shown

results is biased by the unusually high (1) probability

of the presence of anomalies during the test session,

(2) attention level of the human operator.

Finally, we measured the timeliness with which

the situations are revealed. In Fig.4, we show how

long it took human operators to detect the presence

of the malicious situations. The minimal intervention

time threshold is the maximum available time to allow

a prompt intervention. The graph shows that operators with little system support do not detect the situations in time, even in our ideal setting. Notice also that the agent-based reasoning can take more time to detect a situation: this happens because the agent detects a certain situation only when all the events of a specific symbolic definition have been verified, while the operator's conclusions are guided much more by intelligent or skilled observation. A less strict definition would yield prompter detections, but a higher number of false positives.

5 DISCUSSION

In this paper we introduced a new model to approach

Situation Assessment, based on agent knowledge rea-

soning. We validated our approach with experiments

performed with real data in a maritime surveillance

scenario.

With respect to state-of-the-art knowledge-based approaches (Matheus et al., 2003), we succeed in avoiding the use of rules, which are not supported by current state-of-the-art reasoners. The main limitation of our approach is that situations are defined using true-false membership of individuals in properties, so information uncertainty can be only partially included in the model.

Finally, it is worth noting that the described approach potentially allows distributed Situation Assessment, which is currently studied by different communities (Llinas et al., 2004; Mastrogiovanni et al., 2007).

REFERENCES

Endsley, M. and Garland, D. (2000). Situation Awareness:

Analysis and Measurement. Lawrence Erlbaum Associates, Mahwah, New Jersey.

Giompapa, S., Farina, A., et al. (2006). A model for a hu-

man decision-maker in a command and control radar

system: Surveillance tracking of multiple targets. In

Proc. of 9th Int. Conf. on Information Fusion.

Llinas, J., Bowman, C., et al. (2004). Revisiting the JDL

data fusion model II. In Proc. of 7th Int. Conf. on

Information Fusion.

Mastrogiovanni, F., Sgorbissa, A., and Zaccaria, R. (2007).

A distributed architecture for symbolic data fusion. In

Proc. of 20th Int. Joint Conf. on Artificial Intelligence,

IJCAI-07, pages 2153–2158.

Matheus, C. J., Baclawski, K., and Kokar, M. M. (2003).

Derivation of ontological relations using formal meth-

ods in a situation awareness scenario. In Proc. of

SPIE Conference on Multi-sensor, Multi-source Infor-

mation Fusion, Orlando.

Nardi, D. and Brachman, R. J. (2003). An introduction to

description logics. In The DL Handbook: Theory, Im-

plem. and Applications. Cambridge University Press.

ICAART 2009 - International Conference on Agents and Artificial Intelligence
