RETARGETING MOTION OF CLOTHING TO NEW CHARACTERS

Yu Lee and Moon-Ryul Jung*

Department of Media Technology, Graduate School of Media, Sogang University, Seoul, Korea

Keywords: Retargeting, Cloth, Deformation, Body-oriented Cloth, Collision Detection.

Abstract: We show how to transfer the motion of cloth from a source body to a target body. The obvious method is to add the displacements of the source cloth, calculated from its position in the initial frame, to the target cloth; but this can result in penetration of the target body, because the target body differs in shape from the source body. To overcome this problem, we compute an approximate source cloth motion, which maintains the initial spatial relationship between the cloth and the source body; then we obtain a detailed set of correction vectors for each frame, which relate the exact cloth to this approximation. We then compute the approximate target cloth motion in the same way as the source cloth motion; and finally we apply the detail vectors that we generated for the source cloth to the approximate target cloth, thus avoiding penetration of the target body. We demonstrate the retargeting of cloth using figures engaged in dance movements.

1 INTRODUCTION

Clothing simulation is an area in which physically based techniques pay off, and quite a lot of work along these lines has been reported (Terzopoulos and Fleischer, 1988; Breen et al., 1994; Carignan et al., 1992; Volino et al., 1995; Baraff and Witkin, 1998; Zhang and Yuen, 2000). Tools derived from physically based techniques are available in commercial modeling and animation packages, such as Maya and its Syflex plug-in. These tools are a great help to animators, but physically realistic cloth simulation remains difficult and time-consuming, even with commercial packages. It would therefore be useful to be able to capture cloth motion in the same way as we capture human body motion (Pritchard and Heidrich, 2003; White et al., 2007). At the moment there are few systems for capturing cloth motion, but more can be expected to appear in the future.

Assuming that cloth motion capture becomes feasible in the near future, we want to devise a method of reusing, or more precisely retargeting, a cloth motion from one body to another.

Although a cloth motion obtained this way will be less physically realistic than the original, in most cases all that is required is plausible cloth behavior, not strict physical accuracy.

* Corresponding author (moon@sogang.ac.kr)

Motion retargeting techniques have been developed for the body (Gleicher, 1998; Lee and Shin, 1999; Choi and Ko, 2000) and the face (Noh and Neumann, 2001; Pyun and Shin, 2003; Na and Jung, 2004), but there is no published work on retargeting cloth motions to new characters.

The basic method of retargeting the motion of one character to another is to transform the source motion so that the resulting motion satisfies constraints imposed by the new character, while preserving the characteristics of the source motion as far as possible. To retarget a cloth motion we need to consider the source body motion, the source cloth motion, the target body motion, and the target cloth configuration at the initial frame, as shown in Figure 1. This is a more constrained problem than that of retargeting facial motions, where only the source facial motion and the configuration of the target face at the initial frame are used in a typical implementation.

The simplest way to obtain a target cloth motion is to compute the displacement of the source cloth at each frame from the initial frame, and add that displacement to the position of the target cloth at the initial frame. But when we view the resulting target cloth motion together with the target body motion, we find that the cloth penetrates the body. That is because the target cloth motion was created without considering the target body motion at all.

Lee Y. and Jung M. (2009). RETARGETING MOTION OF CLOTHING TO NEW CHARACTERS. In Proceedings of the Fourth International Conference on Computer Graphics Theory and Applications, pages 280-285. Copyright © SciTePress

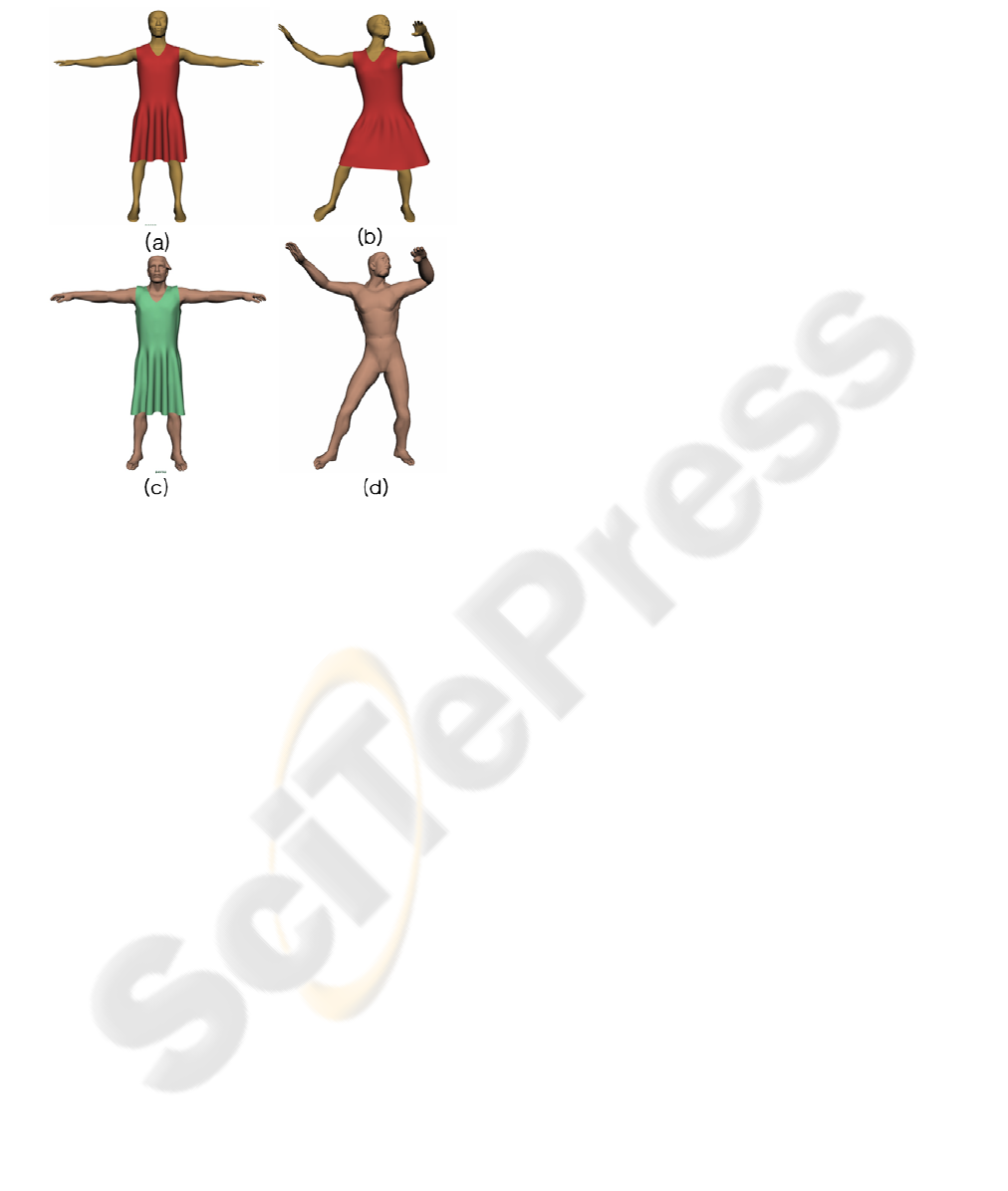

Figure 1: The input to and output of cloth motion retargeting: (a) the source cloth at the initial frame; (b) the source cloth at the k-th frame; (c) the target cloth at the initial frame; (d) the target body at the k-th frame, for which the cloth has to be computed. The initial configurations (a) and (c) are supposed to correspond to each other.
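The naive transfer described above fits in a few lines. The Python sketch below is illustrative only: the function name `naive_transfer` and the list-of-tuples mesh representation are assumptions, not part of the paper, and it relies, as the paper does, on the source and target cloth meshes sharing vertex count and ordering. Because it never looks at the target body, its output can penetrate that body, which is exactly the failure described above.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def naive_transfer(source_frames: List[List[Vec3]],
                   target_initial: List[Vec3]) -> List[List[Vec3]]:
    """Naive cloth transfer: target_k = target_0 + (source_k - source_0).

    Hypothetical helper for illustration. Vertex i of the source cloth is
    assumed to correspond to vertex i of the target cloth.
    """
    source_initial = source_frames[0]
    if len(source_initial) != len(target_initial):
        raise ValueError("source and target cloth need the same vertex count")
    result = []
    for frame in source_frames:
        # Displacement of each source vertex from the initial frame,
        # added to the corresponding vertex of the initial target cloth.
        result.append([
            tuple(t[i] + (s[i] - s0[i]) for i in range(3))
            for s, s0, t in zip(frame, source_initial, target_initial)
        ])
    return result
```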

To overcome this problem, we retarget the source cloth motion to the target body in two phases. First, we extract an approximate source cloth motion from the original motion, so as to capture the overall behavior of the source cloth without colliding with the source body. Then we compute the detailed motion of the source cloth by subtracting the approximate source motion from the original source cloth motion. In the same way, we find an approximate target motion that captures the overall behavior of the cloth while avoiding collision with the target body. Adding the detailed motion to the approximate target cloth motion produces the target cloth motion. The new cloth may still penetrate the target body slightly, so collisions should be detected and resolved. We only need to deal with collisions within each single frame: they do not propagate to later frames as they would in a true simulation (Baraff and Witkin, 1998).

In our experiments, the motion of the target body is obtained from the motion of the source body using the commercial Maya software. This could also be done using any method of body motion retargeting (Gleicher, 1998; Lee and Shin, 1999; Choi and Ko, 2000). The cloth motion is obtained by using Syflex, a plug-in for Maya. We assume that the source and target cloth meshes have the same number of vertices and the same connectivity.

2 THE PROPOSED METHOD

2.1 Body-oriented Cloth

To compute the approximate motion of cloth, we adopt the surface-oriented deformation technique (Singh and Kokkevis, 2000), in which an object is deformed so that it follows the motion of a deformer object. The motion of the deformable object is computed in such a way that (the surface of) the deformable object maintains its initial spatial relationship with (the surface of) the deformer object as that object moves. The deformer and deformable meshes do not need to have the same connectivity. Although the deformer object should be a single contiguous mesh, the deformable object may consist of several patches. When the technique is applied to the movement of cloth, the body is the deformer and the cloth is the deformable object. In this paper, we use the terms “body-oriented cloth” and “deformed cloth” interchangeably.

The surface-oriented deformation involves two steps: registration and deformation. During the registration step, the extent to which each “control element” (triangular face) k of the deformer object influences each vertex P of the deformable object is computed. The weight $w_k^P$ with which control element k influences vertex P is inversely proportional to the distance from P to the plane of face k. The influence weights $w_k^P$, $k = 1, \dots, n$, where n is the number of control elements in the deformer object, are normalized so that they sum to one. In practice, $w_k^P$ is set to zero for all but a few control elements, which are selected by the user.
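A minimal Python sketch of these registration weights follows. It assumes triangles given as vertex triples, uses the unsigned distance from P to each face's plane, and adds a small `eps` (a hypothetical parameter) to avoid division by zero when P lies on a plane; the exact falloff used by Singh and Kokkevis may differ. Only the user-selected elements receive nonzero weight, and the function names are illustrative.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_distance(p: Vec3, tri: Triangle) -> float:
    """Unsigned distance from point p to the plane of triangle tri."""
    v0, v1, v2 = tri
    n = _cross(_sub(v1, v0), _sub(v2, v0))
    return abs(_dot(_sub(p, v0), n)) / math.sqrt(_dot(n, n))


def registration_weights(p: Vec3, faces: Sequence[Triangle],
                         selected: Iterable[int],
                         eps: float = 1e-6) -> List[float]:
    """Weights w_k^P (0-based k here): inversely proportional to the distance
    to face k's plane for user-selected faces, zero elsewhere, normalized to
    sum to one."""
    raw = {k: 1.0 / (plane_distance(p, faces[k]) + eps) for k in selected}
    total = sum(raw.values())
    if total == 0.0:
        raise ValueError("at least one control element must be selected")
    return [raw.get(k, 0.0) / total for k in range(len(faces))]
```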

At the registration step, the local coordinates $P_k^O$ of each vertex P of the deformable object are also computed, with respect to the reference system defined on each control element k of the deformer object. These local coordinates represent the initial spatial relationship between the deformer and deformable objects.


As the deformer object moves, the deformation step computes the world-space coordinates $P_k^{def}$ of each vertex P of the deformable object with respect to control element k of the deformer object, so that $P_k^{def}$ has the same local coordinates $P_k^O$ in the coordinate system of control element k of the changed deformer object. The new position $P^{def}$ of vertex P is then computed by averaging over all control elements, as follows:

$$P^{def} = \sum_{k=1}^{n} w_k^P \, P_k^{def} \qquad (1)$$
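The registration of local coordinates and the deformation step of Equation (1) can be sketched in Python as below. The paper does not specify the reference system on each control element; this sketch assumes an orthonormal frame with its origin at the triangle's first vertex, x-axis along its first edge and z-axis along its unit normal. Such a frame preserves the offset of P under rigid motion of the face but ignores in-plane stretching. The function names are hypothetical, and the weights are taken as given (for example, the normalized registration weights).

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Vec3:
    return _scale(a, 1.0 / math.sqrt(_dot(a, a)))


def frame(tri: Triangle) -> Tuple[Vec3, Vec3, Vec3, Vec3]:
    """Assumed reference system of a control element: origin plus orthonormal axes."""
    v0, v1, v2 = tri
    e1 = _unit(_sub(v1, v0))
    e3 = _unit(_cross(_sub(v1, v0), _sub(v2, v0)))
    e2 = _cross(e3, e1)
    return v0, e1, e2, e3


def to_local(p: Vec3, tri: Triangle) -> Vec3:
    """Local coordinates P_k^O of p in the frame of tri."""
    o, e1, e2, e3 = frame(tri)
    d = _sub(p, o)
    return (_dot(d, e1), _dot(d, e2), _dot(d, e3))


def to_world(local: Vec3, tri: Triangle) -> Vec3:
    """World position P_k^def having the given local coordinates in tri's frame."""
    o, e1, e2, e3 = frame(tri)
    return _add(o, _add(_scale(e1, local[0]),
                        _add(_scale(e2, local[1]), _scale(e3, local[2]))))


def register(p: Vec3, faces: Sequence[Triangle],
             weights: Sequence[float]) -> Dict[int, Vec3]:
    """Registration: store local coordinates only for elements with nonzero weight."""
    return {k: to_local(p, faces[k]) for k, w in enumerate(weights) if w != 0.0}


def deform(local: Dict[int, Vec3], weights: Sequence[float],
           moved_faces: Sequence[Triangle]) -> Vec3:
    """Equation (1): P^def = sum over k of w_k^P * P_k^def."""
    result: Vec3 = (0.0, 0.0, 0.0)
    for k, coords in local.items():
        result = _add(result, _scale(to_world(coords, moved_faces[k]), weights[k]))
    return result
```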

Note again that the weights $w_k^P$, $k = 1, \dots, n$, are zero except for a few control elements. Although simple in principle, this surface-oriented deformation algorithm produces good results in many situations. The algorithm is also implemented in Maya, where it is called the “wrap deformer”.

2.2 The Retargeting Procedure

Using the surface-oriented deformation, cloth motion retargeting can now be achieved by the following procedure:

1. Set up the initial configurations of the source cloth and the target cloth so that they correspond to each other. The initial configurations should not contain deep wrinkles, because they are supposed to be representative configurations of the approximate motions of the source and target cloth.

2. Perform registration between the source body (the deformer object) and the source cloth (the deformable object), using their initial configurations.

3. Determine the body-oriented source cloth at each frame, as shown in Figure 2, by performing surface-oriented deformation. At the initial frame, the deformed cloth has the same configuration as the original cloth.

4. Find the body-oriented target cloth at each frame by applying the same registration and deformation as in Steps 2 and 3 to the target body and the target cloth (see Figure 3). This gives the body-oriented target cloth the same relationship with the target body as the body-oriented source cloth has with the source body, so the body-oriented target and source cloth undergo the same approximate motion. Moreover, because it is generated in the same way, the approximate target motion does not collide with the target body, just as the approximate source motion does not collide with the source body.

5. Compute the detail vectors that position the source cloth relative to the body-oriented source cloth, by subtracting the body-oriented source cloth (shown in Figure 2) from the original source cloth at each frame. This process is illustrated in Figure 4. We assume that the original cloth mesh and the body-oriented cloth mesh have the same connectivity, so the detail vectors are simply the differences between corresponding vertices of the two meshes.

6. Add the detail vectors of the source cloth to the body-oriented target cloth, to produce the target cloth at each frame. This process is illustrated in Figure 5.
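Once the body-oriented cloth has been computed for every frame (Steps 2 to 4), Steps 5 and 6 reduce to per-vertex vector arithmetic. The Python sketch below assumes that the original source cloth, the body-oriented source cloth and the body-oriented target cloth are given as per-frame lists of vertex positions with identical vertex ordering; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Cloth = Sequence[Vec3]  # vertex positions of one cloth configuration


def detail_vectors(source: Cloth, oriented_source: Cloth) -> List[Vec3]:
    """Step 5: detail vector = original source vertex - body-oriented source vertex."""
    if len(source) != len(oriented_source):
        raise ValueError("meshes must have the same connectivity")
    return [(s[0] - a[0], s[1] - a[1], s[2] - a[2])
            for s, a in zip(source, oriented_source)]


def add_details(oriented_target: Cloth, details: Sequence[Vec3]) -> List[Vec3]:
    """Step 6: target vertex = body-oriented target vertex + source detail vector."""
    if len(oriented_target) != len(details):
        raise ValueError("meshes must have the same connectivity")
    return [(t[0] + d[0], t[1] + d[1], t[2] + d[2])
            for t, d in zip(oriented_target, details)]


def retarget_frames(source_frames: Sequence[Cloth],
                    oriented_source_frames: Sequence[Cloth],
                    oriented_target_frames: Sequence[Cloth]) -> List[List[Vec3]]:
    """Apply Steps 5 and 6 independently to every frame."""
    return [add_details(t, detail_vectors(s, a))
            for s, a, t in zip(source_frames, oriented_source_frames,
                               oriented_target_frames)]
```

At the initial frame the body-oriented source cloth coincides with the original (Step 3), so the detail vectors are zero there and the target cloth starts from the body-oriented target cloth at that frame.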

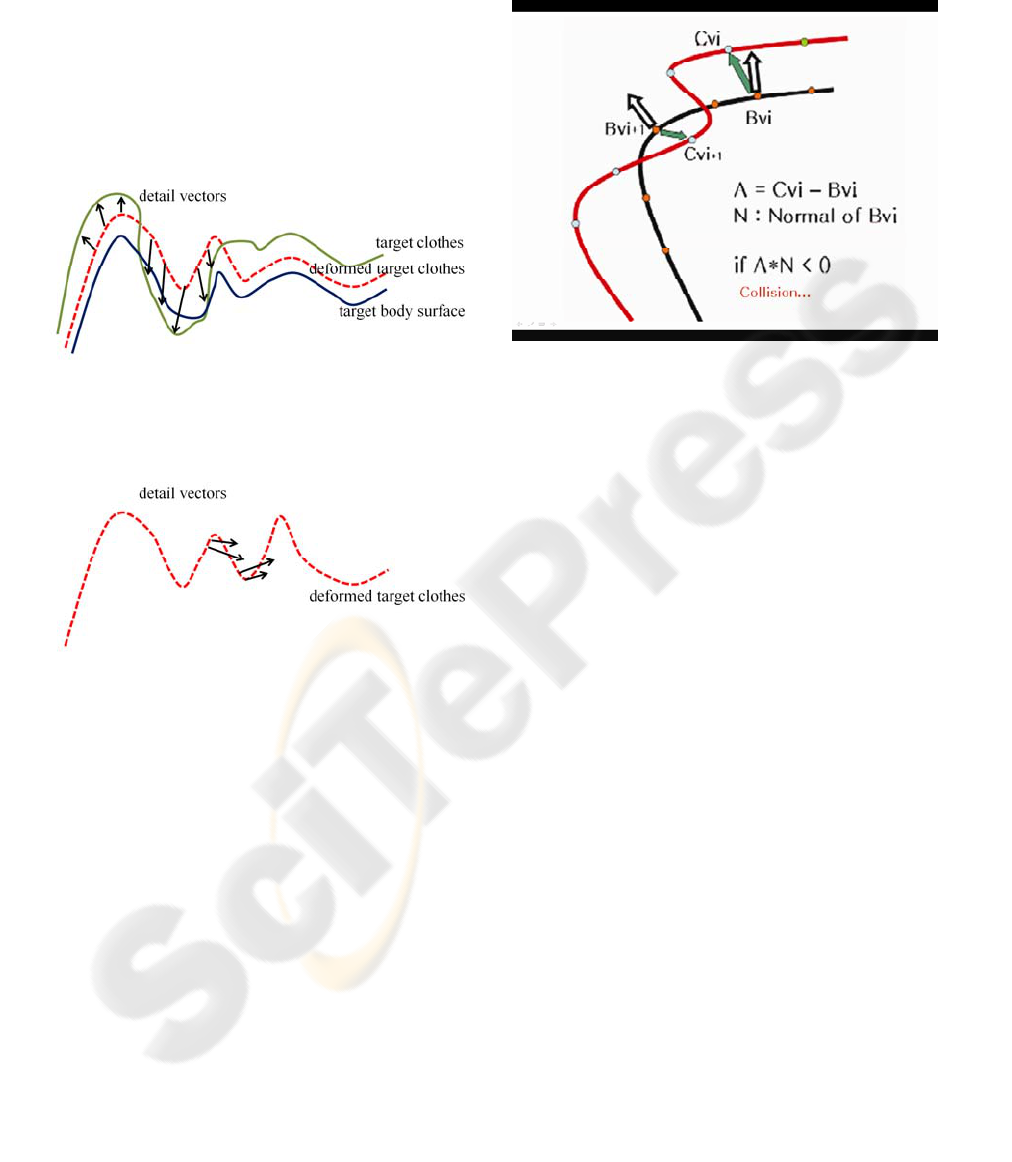

Figure 2: The body-oriented source cloth.

Figure 3: The body-oriented target cloth.

Figure 4: The detail vectors of the source cloth relative to the body-oriented (deformed) source cloth.

3 RESOLVING COLLISIONS

The body-oriented target cloth does not penetrate itself or the target body, because of the way it is generated. But when we add the detail vectors of the source cloth to the body-oriented target cloth, the resulting target cloth may penetrate itself or the target body, as shown in Figures 5 and 6.

GRAPP 2009 - International Conference on Computer Graphics Theory and Applications

The problem of collision detection and avoidance in cloth simulation has received much attention (Baraff et al., 2003). However, we are able to address the problem using a relatively simple technique. We justify this by observing that errors affect just one frame, and do not propagate forward as they would in a simulation.

Figure 5: Adding the detail vectors of the source cloth to the body-oriented target cloth (deformed target cloth), to produce the target cloth motion. The target cloth may penetrate the target body.

Figure 6: Some detail vectors intersect part of the deformed target cloth. Adding the detail vectors to the deformed target cloth would cause the resulting target cloth to intersect itself, unless the affected part is moved out of the way by its own detail vectors.

3.1 Self-collision

Self-intersection of the target cloth may occur when the detail vectors penetrate the approximate target cloth, as shown in Figure 6. We have not observed such self-collisions in our experiments, and we assume that they are unlikely provided that the initial configuration of the target cloth does not have deep wrinkles, a property which is inherited by the deformed cloth because the two have the same spatial relationship with the body. Although we set up the initial configurations of the source and target cloth so as not to have deep wrinkles, we do not know what depth of wrinkle would cause self-collision in the resulting target cloth. When situations such as that in Figure 6 do occur, we avoid them by reducing the lengths of the detail vectors. Self-penetrations are unavoidable at the bend of a knee or an elbow, but they tend to be invisible in such areas.
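The paper does not say by how much the detail vectors are shortened. As a minimal sketch only, the Python code below assumes that the offending vertices have already been identified (the hypothetical `flagged` set) and uniformly clamps their detail vectors to a hypothetical maximum length `max_len`, keeping each vector's direction.

```python
import math
from typing import List, Sequence, Set, Tuple

Vec3 = Tuple[float, float, float]


def clamp_length(d: Vec3, max_len: float) -> Vec3:
    """Scale d down so that its length does not exceed max_len (direction kept)."""
    if max_len < 0.0:
        raise ValueError("max_len must be non-negative")
    length = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    if length <= max_len:
        return d
    s = max_len / length
    return (d[0] * s, d[1] * s, d[2] * s)


def shorten_flagged(details: Sequence[Vec3], flagged: Set[int],
                    max_len: float) -> List[Vec3]:
    """Shorten only the detail vectors of flagged vertices; leave the rest unchanged."""
    return [clamp_length(d, max_len) if i in flagged else d
            for i, d in enumerate(details)]
```

A uniform clamp is the simplest choice; a per-vertex limit derived from the local gap between the approximate cloth layers would be a natural refinement, but that too would be an assumption beyond the paper.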

Figure 7: Collision detection and its resolution. The black line represents the body and the red line the target cloth. The green arrow, which connects a cloth vertex to the nearest body vertex, represents a penetration vector. The white arrow represents a vector normal to the cloth surface. If the dot product of the normal vector and the penetration vector is negative, a collision has occurred.

3.2 Cloth-to-body Collision

Figure 7 shows a situation where the target cloth penetrates the target body. This can be corrected by moving the penetrating cloth vertices in the direction of the normal vectors at these vertices by the extent of the penetrations. However, resolving the collision at each cloth vertex does not remove all penetration: the supplementary video shows some residual penetration. We are implementing the collision avoidance techniques proposed by Zhang and Yuen (2000) and Volino and Magnenat-Thalmann (2000) to see whether they help remove it.
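The per-vertex test of Figure 7 and the push-out described above can be sketched in Python as follows. Two conventions are assumptions, since the figure does not fix them: cloth normals (normalized in the code) point away from the body, and the penetration vector runs from the nearest body vertex to the cloth vertex, so that a negative dot product means the vertex lies behind the body surface. The “extent of the penetration” is taken here as the normal component of that vector, and nearest vertices are found by brute force. Like the method it illustrates, the sketch treats each vertex independently and can leave residual penetration.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _unit(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def resolve_cloth_body(cloth: Sequence[Vec3], normals: Sequence[Vec3],
                       body: Sequence[Vec3]) -> List[Vec3]:
    """Push penetrating cloth vertices out along their normals (per vertex only)."""
    resolved = []
    for p, n in zip(cloth, normals):
        n = _unit(n)
        # Nearest body vertex by brute force (a spatial grid would be faster).
        b = min(body, key=lambda v: _dot(_sub(p, v), _sub(p, v)))
        # Assumed penetration vector: from the body vertex to the cloth vertex.
        offset = _dot(n, _sub(p, b))
        if offset < 0.0:  # negative dot product: collision
            # Move along the normal by the normal component of the penetration.
            p = (p[0] - offset * n[0], p[1] - offset * n[1], p[2] - offset * n[2])
        resolved.append(p)
    return resolved
```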

4 RESULTS

The results of our experiments on retargeting cloth are shown in Figures 8, 9, and 10. The accompanying video demonstrates that these results are visually satisfactory. Although the retargeted motion is less natural than the original motion, this disadvantage will often be outweighed by the ability to create cloth motion cheaply for characters in crowd scenes, avatars on the internet, and game characters.


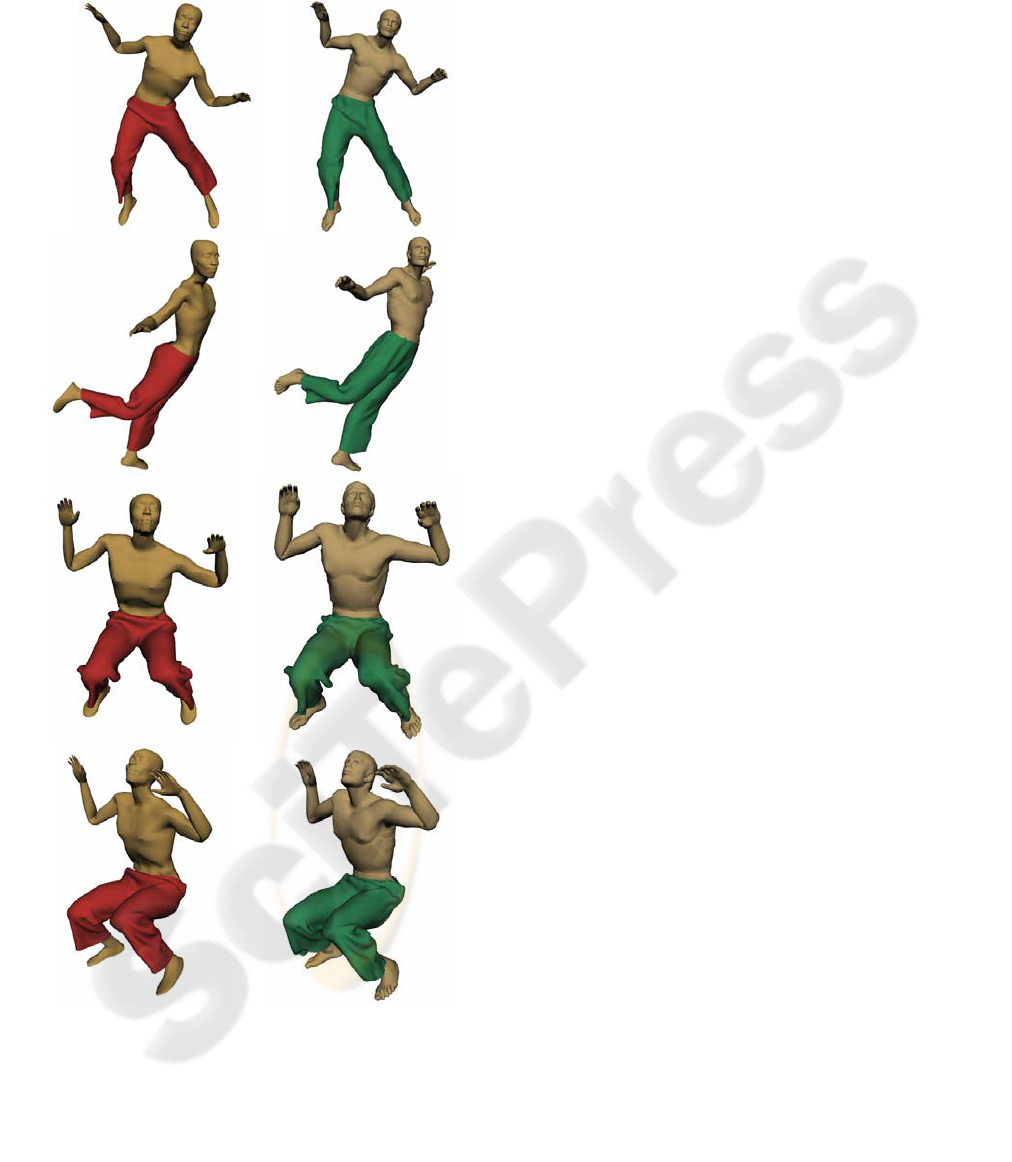

Figure 8: A cha-cha: (left) the source motion (a woman) and (right) the target motion (a baby).

5 CONCLUSIONS

We have presented a simple but effective method of retargeting cloth motion from one character to another that meets two objectives: the resulting cloth motion does not penetrate the target body but follows the movement of the target body smoothly; and the details of the source cloth motion are reflected in the target cloth motion. The first objective has been achieved by creating “body-oriented” source cloth, which moves over the source body, and “body-oriented” target cloth, which moves in the same way. The body-oriented cloth motion is approximate, and the details of the source cloth motion can be captured by subtracting the body-oriented motion from the original source cloth motion. The target cloth motion is then obtained by adding these details to the body-oriented target cloth motion. Potential collisions between the computed target cloth motion and the target body are resolved by simple and cheap methods.

The cloth motion retargeting problem is similar to facial motion retargeting, in that both cloth and faces are represented by meshes. But there is a crucial difference. Facial motion retargeting involves only a single surface, i.e. the skin of the face, whereas cloth motion retargeting involves two surfaces: the surface of the cloth and the surface of the underlying body. Our contribution is to handle both surfaces satisfactorily.

Figure 9: A jive (even faster than the cha-cha): (left) the source motion (a man) and (right) the target motion (a woman). Although this dance is very fast, the wrinkles of the source cloth transfer well to the target cloth.

Our method relies on the assumption that the body-oriented deformation of the initial configuration of the cloth produces a good approximate motion of the cloth, relative to which the details of the cloth motion can be measured. This assumption is reasonable and our experimental results support it, but there are probably better ways to compute a collision-free approximation of a given cloth motion. Finding them is interesting future work.

Cloth-body collision detection and avoidance in the target cloth motion have not been fully dealt with. We need to devise better ways to relocate cloth vertices that have penetrated the body, so that the resulting configuration is sufficiently smooth as well as collision-free. Another goal is to retarget cloth motion when the source cloth and the target cloth do not have the same connectivity. We can still represent the body-oriented source and target cloth by creating a new mesh for each, with the same connectivity; but then representing the detail vectors from the body-oriented surface to the original surface remains a problem.

ACKNOWLEDGEMENTS

This work is supported by research funds from the Korea Science and Engineering Foundation (2008) and from Sogang University, Korea (2008).

REFERENCES

Baraff, D. and Witkin, A., 1998. Large steps in cloth simulation. In SIGGRAPH’98, ACM Press.

Baraff, D., Witkin, A. and Kass, M., 2003. Untangling cloth. In SIGGRAPH’03, ACM Press.

Breen, D., House, D. and Wozny, M., 1994. Predicting the drape of woven cloth using interacting particles. In SIGGRAPH’94, ACM Press.

Carignan, M., Yang, Y., Magnenat-Thalmann, N. and Thalmann, D., 1992. Dressing animated synthetic actors with complex deformable clothes. In SIGGRAPH’92, ACM Press.

Choi, K. and Ko, H., 2000. On-line motion retargeting. The Journal of Visualization and Computer Animation.

Choi, K. and Ko, H., 2002. Stable but responsive cloth. In SIGGRAPH’02, ACM Press.

Gleicher, M., 1998. Retargeting motion to new characters. In SIGGRAPH’98, ACM Press.

Lee, J. and Shin, S., 1999. A hierarchical approach to interactive motion editing for human-like figures. In SIGGRAPH’99, ACM Press.

Na, K. and Jung, M., 2004. Hierarchical retargeting of fine facial motion. In Eurographics’04.

Noh, J. and Neumann, U., 2001. Expression cloning. In SIGGRAPH’01, ACM Press.

Pritchard, D. and Heidrich, W., 2003. Cloth motion capture. In Eurographics’03.

Pyun, H. and Shin, S., 2003. An example-based approach for facial expression cloning. In Eurographics’03.

Singh, K. and Kokkevis, E., 2000. Skinning characters using surface-oriented free-form deformations. In Graphics Interface’00.

Terzopoulos, D. and Fleischer, K., 1988. Modeling inelastic deformation: viscoelasticity, plasticity, fracture. In SIGGRAPH’88, ACM Press.

Volino, P., Courchesne, M. and Magnenat-Thalmann, N., 1995. Versatile and efficient techniques for simulating cloth and other deformable objects. In SIGGRAPH’95, ACM Press.

Volino, P. and Magnenat-Thalmann, N., 2000. Implementing fast cloth simulation with collision response. In Computer Graphics International’00.

White, R., Crane, K. and Forsyth, D., 2007. Capturing and animating occluded cloth. In SIGGRAPH’07, ACM Press.

Zhang, D. and Yuen, M., 2000. Collision detection for clothed human animation. In Pacific Graphics’00.

Figure 10: A very fast house dance: (left) the source motion and (right) the target motion. The results are as good as those of Figure 9.
