AUTOMATIC MULTILINGUAL LEXICON GENERATION USING

WIKIPEDIA AS A RESOURCE

Ahmad R. Shahid and Dimitar Kazakov

Department of Computer Science, The University of York, YO10 5DD, York, U.K.

Keywords:

Multilingual Lexicons, Web Crawler, Wikipedia, Natural Language Processing, Web mining, Data mining.

Abstract:

This paper proposes a method for creating a multilingual dictionary by taking the titles of Wikipedia pages

in English and then finding the titles of the corresponding articles in other languages. The creation of such

multilingual dictionaries has become possible as a result of exponential increase in the size of multilingual

information on the web. Wikipedia is a prime example of such multilingual source of information on any

conceivable topic in the world, which is edited by the readers. Here, a web crawler has been used to traverse

Wikipedia following the links on a given page. The crawler takes out the title along with the titles of the

corresponding pages in other targeted languages. The result is a set of words and phrases that are translations

of each other. For efficiency, the URLs are organized using hash tables. A lexicon has been constructed which

contains 7-tuples corresponding to 7 different languages, namely: English, German, French, Polish, Bulgarian,

Greek and Chinese.

1 INTRODUCTION

The main goal of this project is to attract the attention

to and demonstrate the feasibility of creating a mul-

tilingual dictionary using Wikipedia. Here, English,

German, French and Polish were chosen for their

wealth of information and another three languages to

demonstrate that our program could also handle dif-

ferent writing systems and alphabets: Greek, Bulgar-

ian and Chinese in this case. The technique can be

applied to a number of other online resources where

versions of the same article appear in different lan-

guages; one such example is the Southeast European

Times news site (http://www.setimes.com/). In this

case, and many others, the use of crawlers is unavoid-

able as an off-line version of the resource is not at

hand.

2 LITERATURE REVIEW

There have been efforts in the past to build multi-

lingual dictionaries with varying degrees of success,

and the ones we know of are only extensions of bilin-

gual dictionaries already available. Yet none of them

had tried to use Wikipedia as the potential source of

lexical information. Only very recently, there have

been attempts to tap into multilingual dimension of

Wikipedia, which has been used to identify named en-

tities Richman et al. (2008) .

Lafourcade (1997) carried out two multilingual

construction projects: French-English-Malay (FeM),

and French-English-Thai (FeT). The FeM data was

created by crossing of French-English and English-

Malay lexical resources. the source language. For

each word in French, one or several meanings in the

target language were grouped together in so called

blocks. Thus there were equivalent blocks for En-

glish, Malay and Thai. In the case that more than

one translation existed, one entry was restricted to just

one meaning, with extra entries for extra meanings.

Sometimes only one entry was used even in the case

of several alternative meanings, leaving it to the dis-

cretion of the lexicographers to decide which alterna-

tive meaning to use. Two kinds of dictionaries were

targeted: the general dictionary with about 20,000 en-

tries and a Computer Science domain specific dictio-

nary with 5,000 entries.

Boitet et al. (2002) worked on the PAPIL-

LON project. The project covered seven different

languages: English, French, Japanese, Thai, Lao,

Vietnamese, and Malay. They started with open

source data, known as “raw dictionaries”. Some of

them were monolingual: 4,000 French entries from

357

R. Shahid A. and Kazakov D. (2009).

AUTOMATIC MULTILINGUAL LEXICON GENERATION USING WIKIPEDIA AS A RESOURCE.

In Proceedings of the International Conference on Agents and Artificial Intelligence, pages 357-360

DOI: 10.5220/0001783003570360

Copyright

c

SciTePress

UdM, and 10,000 Thai entries from Kasetsart Univer-

sity; some were bilingual: 70,000 Japanese-English

entries, plus 10,000 Japanese-French entries in J.

Breen’sJDICT XML format, 8,000 Japanese-Thai en-

tries in SAIKAM XML format, and 120,000 English-

Japanese entries in KDD-KATE LISP format. Finally,

there were 50,000 French-English-Malay entries in

FeM XML format. The authors defined a macrostruc-

ture, with a set of monolingual dictionaries of word

senses, called “lexies” linked together through a set

of interlingual links, called “axies”. In the next step

the “raw dictionaries” were transformed into a “lex-

ical soup”, in the (Mangeot-Lerebours, 2001) inter-

mediate DML format, which comprised of the XML

schema and the namespace. The star-like structure

thus created made it easier to add new languages.

Breen (2004) built a multilingual dictionary, JM-

dict, with Japanese as the pivot language and trans-

lations in several other languages. The project was

an extension of an earlier Japanese-English dictio-

nary project (EDICT: Electronic Dictionary) (Breen,

1995), that began in the early 1990s to create a

Japanese-English dictionary, which grew to 50,000

entries by the late ’90s. Yet its structure was found

to be inadequate to represent the orthographical com-

plexities of the language, as many Japanese words can

be written with alternative kanji and kana and may

have alternative pronounciations. The kanji came

from the ancient Chinese and kana was a derivative

of it. In modern use they are used to describe dif-

ferent parts of speech. For French translations they

used two projects: 17,500 entries from Dictionnaire

franc¸ais-japonais (Desperrier, 2002) and 40,500 en-

tries from French-Japanese Complementation Project

at http://francais.sourceforge.jp/. For German trans-

lations they used WaDokuJT Project (Apel, 2002).

XML (Extensible Markup Language) was used to

format the file on account of the flexibility it pro-

vides. The JMdict XML structure contained an ele-

ment type: <entry>, which in turn contained: the se-

quence number, the kanji word, the kana word, infor-

mation, and translation information. The translation

part consisted of one or more sense elements. The

combining rules were used to weed out unnecessary

entries. The rule stated in short: treat each entry as

a triplet of kanji, kana and senses; if for any two or

more entries, two or more members of the triplet are

the same, combine them into one entry. Thus if the

kanji and kana in different entries are included as al-

ternative forms, and if they differ in sense, they are

included as polysemous words. The entry also stored

information regarding the meanings of the word in

different languages. The JMDict file contained over

99,300 entries in both English and Japanese, while

83,500 keywords/phrases had German translations,

58,000 had French translations, 4,800 had Russian

translations, and 530 had Dutch translations. A set

of 4,500 Spanish translations was being prepared.

3 MULTILINGUAL LEXICON

GENERATION

Since its humble beginnings in 2001, Wikipedia has

emerged as a huge online resource attracting over

684 million visitors yearly by 2008. There are more

than 75,000 active contributors working on more

than 10,000,000 articles in more than 250 languages

(Wikipedia, August 3, 2008). Each Wikipedia page

has links to pages on the same topic in other lan-

guages.and combined in the form of 7-tuples, which

are entries in the lexicon, detailing a word in English

and its translations in the six other languages. The

aim was to extract as many such 7-tuples as possible.

3.1 Web Crawler

A web crawler is a computer program that follows

links on web pages to automatically collect data (hy-

pertext) off the internet. We use it here to move from

one Wikipedia article to another, collecting the above

mentioned tuples of word/phrase translations in the

process.

Our version of the web crawler takes the starting

page as an input from the user. It visits the given

page, and extracts all the links on that page and ap-

pends them to a list. Then it repeats the process for

each link collected earlier, and visits them one by one,

extracting the links and once again appending them

to the list. Putting them at the end (e.g., making the

list a queue) ensures that the search method adopted

is Breadth First Search (BFS). In our context follow-

ing BFS will explore a number of related concepts

consecutively while Depth First Search (DFS) would

drift-off any given topic. There may be technical as-

pects related to the use of memory by each approach

but we will not discuss them here.

The BFS approach was used in the following ex-

periments. With the BFS capability thus incorpo-

rated, other lists were defined that would keep track of

all the web pages that have already been visited thus

keeping the code from revisiting them and extracting

repeatedly the same 7-tuples into the lexicon. Apart

from ensuring that there was no redundancy within

the lexicon, either purely numeric or had a null entry

for any of the seven languages.

The program picks up a URL from the top of the

queue, expands in terms of URLs by exploring new

ICAART 2009 - International Conference on Agents and Artificial Intelligence

358

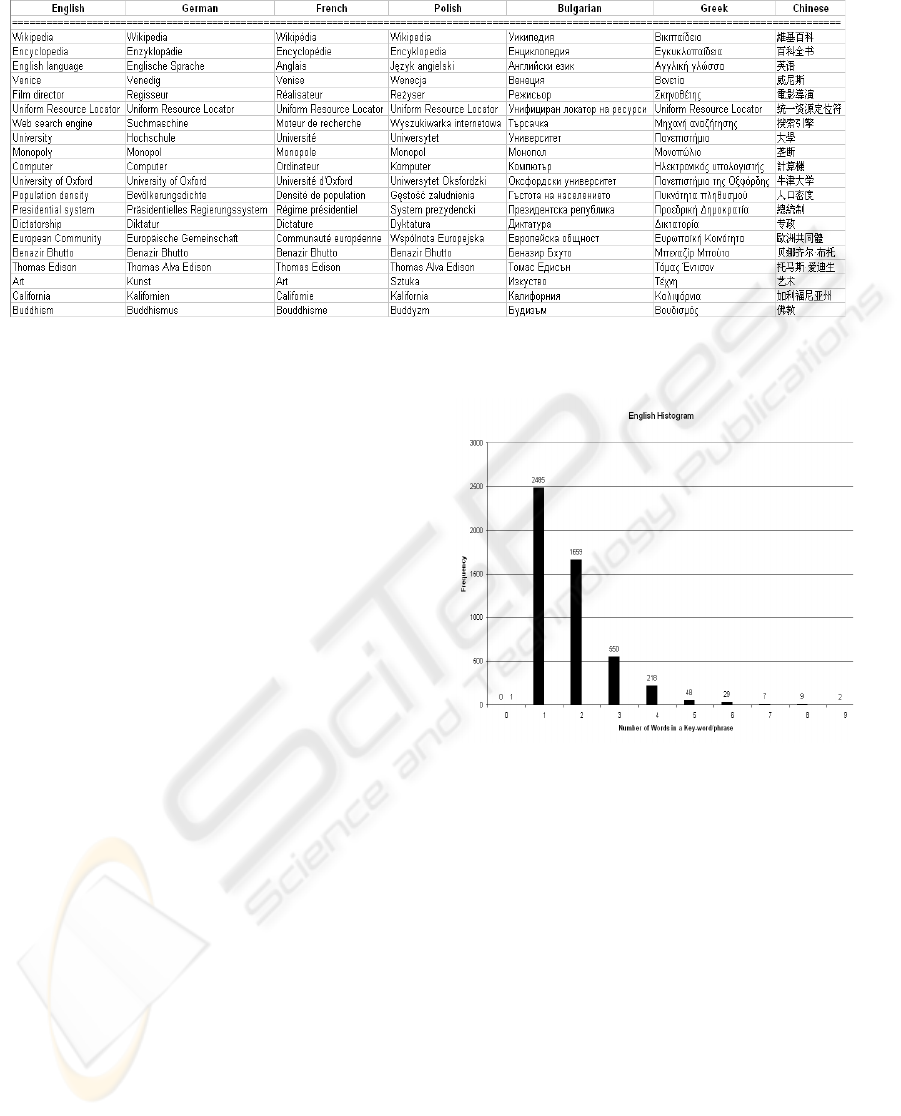

Figure 1: A Snapshot of the Lexicon.

links on the given URL, and then extracts titles in the

given languages. An essential first step was to sep-

arate the tracking of the URLs from the code itself.

Thus a database in Access was created, comprising of

28 different tables. One table stored all the URLs to

be visited, and the rest implemented a hash table, to

store the already visited URLs, indexed by the first

character of the page title. 26 different tables were

created for the 26 different letters in the English al-

phabet. Another table was created, URLExtra, which

would store all the URLs with Wikipedia page titles

starting with anything but English letters, including

numbers.

The program starts with a user-provided URL,

then looks for potential URLs to be used for extrac-

tion of titles. These URLs are added to the queue. For

each page that is visited and removed from the queue,

the URLs of several pages (contained in that page)

are typically added. Thus the number of URLs to be

searched rises quickly and might yield a huge list. In

order to avoid such a scenario and keep the size of

the URL list within reasonable limits, an upper limit

was set to the number of URLs at a time. Similarly a

lower limit was set so that the program could be pre-

scient and started looking for more URLs before it ran

out of them. The lower and upper limits were set to 50

and 1,000 respectively. Thus barring an exhaustion of

all potential URLs, the program would never run out

of URLs to be searched for.

3.2 Results

Figure 1 shows a snap shot of the lexicon in a table.

UTF-8 was used as the coding scheme, which makes

possible writing characters in other non-English lan-

guages.

Figure 2: The English Histogram.

A total of 5 runs were carried out to get a total of

8,748 entries, out of which 5,006 were unique (57%

of the total). In order to get that many entries the pro-

gram visited 726,715 English language articles, not

everyone of which was unique. Despite the checks

on revisiting pages some of them were re-visited due

to a bug in the code. Only a little more than 1% of

the total had corresponding pages in all the other six

languages. The crawler still had to visit more than a

quarter of all Wikipedia articles in English.

Using the least-prolific language as a pivotal lan-

guage would have saved us a lot of time in search-

ing for lexical entries since most pages in the least-

frequently occurring language would probably have

corresponding pages in other languages, yet English

was chosen as the pivotal language. The reason being

that English is easy to play with, with its very famil-

iar alphabet-set and thus building hashing tables was

easily done. Also we were more interested in English

AUTOMATIC MULTILINGUAL LEXICON GENERATION USING WIKIPEDIA AS A RESOURCE

359

at the first instance and different languages were in-

corporated at a later stage.

Looking at Figure 2 one can see that unigrams

make the bulk of entries (2,485 - almost 50% of the

total), followed by bigrams (1,659 - 33% of the total).

In terms of semantics, the resulting lexicon is a

mix of: toponyms, names of famous people, names of

languages, and general concepts, such as “rock mu-

sic” and “fire fighter”, among others.

4 USES OF A MULTILINGUAL

DICTIONARY

It can help the lexicographers build traditional dictio-

naries, as a starting point for their work. Another im-

portant use is for Cross-Lingual Information Retrieval

(CLIR). Prikola (1998), compared the performance of

translated Finnish queries against English documents

to the performance of original English queries against

English documents, by using a general dictionary and

a domain specific dictionary (a medical dictionary in

this case). It was found that a cross-lingual IR sys-

tem based on Machine Readable Dictionary (MRD)

translation was able to achieve the performance level

of monolingual IR.

The lexicon containing more than

5,000 entries is available at http://www-

users.cs.york.ac.uk/∼ahmad/index.htm for free

use under GNU Free Documentation License.

5 FUTURE WORK

A useful thing to do would be to create domain

specific dictionaries based on the categories defined

within Wikipedia, according to which each article be-

longs to one or more categories. A domain could be

defined using a set of categories and only those arti-

cles could be used for building the lexicons belonging

to that particular domain.

ACKNOWLEDGEMENTS

This research was carried in the period Nov 2007 -

July 2008, and was partly sponsored by the Higher

Education Commission in Pakistan.

REFERENCES

Apel, U. (2002). WaDokuJT - A Japanese-German Dictio-

nary Database. In Papillon 2002 Seminar, Tokyo.

Boitet, C., Mangeot-Lerebours, M., and Serasset, G. (2002).

The PAPILLON Project: Cooperatively Building

a Multilingual Lexical Data-base to Derive Open

Source Dictionaries & Lexicons. In Proceedings of

the 2nd Workshop NLPXML 2002, Post COLING 2002

Workshop, Taipei.

Breen, J. (1995). Building an Electronic Japanese-English

Dictionary. In Japanese Studies Association of Aus-

tralia Conference.

Breen, J. (2004). JMdict: a Japanese-Multilingual Dictio-

nary. In Coling 2004 Workshop on Multilingual Lin-

guistic Resources, pages 71–78, Geneva.

Desperrier, J.-M. (2002). Analysis of the Results of a

Collaborative Project for the Creation of a Japanese-

French Dictionary. In Papillon 2002 Seminar, Tokyo.

Lafourcade, M. (1997). Multilingual Dictionary Construc-

tion and Services Case Study with the Fe* Projects. In

Proc. PACLING’97, pages 173–181.

Mangeot-Lerebours, M. (2001). Environnements Centraliss

et Distribus pour Lexicographes et Lexicologues en

Contexte Multilingue. PhD thesis, Universite Joseph

Fourier.

Pirkola, A. (1998). The Effects of Query Structure and Dic-

tionary Setups in Dictonary-Based Cross-Language

Information Retrieval. In Proceedings of the 21st

annual international ACM SIGIR conference on Re-

search and development in information retrieval,

pages 55–63, Melbourne.

Richman, A. and Schone, P. (2008). Mining Wiki

Resrouces for Multilingual Named Entity Recogni-

tion. In Proceedings of ACL-08: HLT, pages 1–9,

Columbus, Ohio, USA.

ICAART 2009 - International Conference on Agents and Artificial Intelligence

360