A FEATURE-BASED DENSE LOCAL REGISTRATION OF PAIRS OF

RETINAL IMAGES

M. Fernandes, Y. Gavet and J. C. Pinoli

Centre Ing´enierie et Sant´e, Ecole Nationale Sup´erieure des Mines, 158 cours Fauriel, 42023 Saint-Etienne cedex 2, France

Laboratoire des Proc´ed´es en Milieux Granulaires (LPMG), UMR CNRS 5148, France

Keywords:

Feature-based registration, Retinal images, Opthalmology, Local transformation, Dense transformation.

Abstract:

A method for spatial registering pairs of digital images of the retina is presented, using intrinsic feature points

(landmarks) and dense local transformation. First, landmarks, i.e. blood vessel bifurcations, are extracted

from both retinal images using filtering followed by thinning and branch point analysis. Correspondances are

found by topological and structural comparisons between both retinal networks. From this set of matching

points, a displacement field is computed and, finally, one of the two images is transformed. Due to complex

retinal registration problem, the presented transformation is dense, local and adaptive. Expermimental results

established the effectiveness and the interest of the dense registration method.

1 INTRODUCTION

The problem of image registration is fundamental

to many applications of computer vision. Solving

this problem requires estimating transformation(s)be-

tween images and applying them in order to place

the data in a common coordinate system (Zitova and

Flusser, 2003). In retinal imaging (Figure 1), dis-

ease diagnosis and treatment planning are facilitated

by multiple spatial images acquired from the same

patient. Spatial registration techniques allow to in-

tegrate informations into a comprehensive single im-

age. They are typically classified as feature-based or

area-based.

In area-based techniques, a similarity measure

quantifies the matching between images under an as-

sumption of global transformation and is generally

optimized with global search algorithms (Ritter et al.,

1999). There are many factors that may degrade the

performance of area-based methods: large textureless

regions, nonconsistent contrast and nonuniform illu-

mination within images.

Feature-based methods focus on aligning ex-

tracted features of the images, i.e bifurcations of the

retinal vasculature. From landmarks between both

images and with the assumption of correspondances,

a global transformation is estimated. These methods

are usually more reliable and faster in the case of suf-

ficient and accurate landmarks but a global transfor-

mation is always applied. Indeed, image distorsions

Figure 1: Pair of retinal images of size 924×912 pixels.

and aberrations come from different sources:

• changes in head posture and eye movements,

• projection of the retinal surface on the camera

plane,

• optical systems (camera, cornea and crystalline),

• eye deformations due to defects and diseases.

In order to obtain an accurate registration, a global

transformation must consider all this considerations

with a limited overlapping regions between images.

This is why this problem requires intuitively an adap-

tive local dense approach.

First, the developed feature-based technique using

a dense local transformation will be described. Next,

we will discuss the results obtained. Finally, conclu-

sions and possible extensions will be given.

265

Fernandes M., Gavet Y. and Pinoli J. (2009).

A FEATURE-BASED DENSE LOCAL REGISTRATION OF PAIRS OF RETINAL IMAGES.

In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, pages 265-268

DOI: 10.5220/0001797102650268

Copyright

c

SciTePress

(a) (b) (c) (d) (e)

Figure 2: (a) Part of a retinal image. (b) Result of morphological contrast and median filter. (c) Result of matched filters,

thresholding and cleaning. (d) Thinned and pruned binary image. (e) Detected landmarks (white dots).

2 FEATURE-BASED TECHNIQUE

A feature-based registration method is made of three

main steps: landmarks extraction, matching between

landmarks and image transformation.

2.1 Landmarks Extraction

Traditionnally, bifurcations are extracted automati-

cally by a retinal vessels segmentation, followed by

thinning and branch point analysis. For example,

Zana and Klein (Zana and Klein, 1999) enhanced

vessels with a sum of top-hats with linear revolving

structuring elements and detected bifurcations using

a supremum of openings with revolving structuring

elements with T shape. In (Becker et al., 1998), the

boundaries of retinal vessels are detected using stan-

dard Sobel filter and the vasculature is thickened us-

ing a minimum filter.

Proposed Methods. Retinal vessels can be approx-

imated by a succession of linear segments (of length

L) at different orientations. Afterwards, all used pa-

rameter values are experimetal. First, retinal ves-

sels are emphasized using a minimum of morpholog-

ical contrasts with linear (L = 9 pixels) and revolving

(30˚ increments) structuring elements. A median fil-

ter smoothed the result image (Figure 2.b). Second,

retinal vessels, whose cross section can be approxi-

mated by a Gaussian shaped curve (standard deviation

σ), are detected by matched filters with 6 orientations,

and with L = 9 pixels and σ = 2 (Chaudhuri et al.,

1989). Next, the thresholded image is cleaned: small

objects (≤ 200 pixels) and small holes (≤ 15 pixels)

are deleted (Figure 2.c). Third, the centreline of the

vascular tree is obtained with a thinning operation and

is pruned so as to eliminate small branches (≤ 15 pix-

els) (Figure 2.d). Fourth, bifurcations are extracted as

skeleton pixels with at least six binary transitions be-

tween adjacent pixels of V8 or V16 neighbourhoods.

Finally, adjacent and closer landmarks (≤ 10 pixels)

are joined: the new landmark corresponds to the cen-

tre of mass of the system with equal weights and may

not belong to the skeleton (Figure 2.e).

2.2 Landmarks Matching

After extraction, pairs of matching landmarks need

to be determined between both images. (Can et al.,

2002) and (Zana and Klein, 1999) suggested similar-

ity measures between bifurcations depending on sur-

roundingvessels angles. Due to nonuniformillumina-

tions, a similarity measure may not be robust. (Becker

et al., 1998) and (Ryan et al., 2004) computed simple

transformation parameters from all possible combina-

tions of landmarks. From this data set, matched land-

mark pairs form a tight cluster which is unfortunately

demarcated with difficulty.

Proposed Methods. Let I

p

and I

q

denote both im-

ages called arbitrarily reference and transformed im-

age respectively and with P and Q extracted land-

mark sets respectively. (u,v) and (u

′

,v

′

) are the coor-

dinates systems of I

p

and I

q

respectively. In this paper,

the matching technique proceeds in two steps.

The first step is a similarity measure between land-

mark signatures of both images and results in an ini-

tial couples set S. For a landmark p, the signature

is the number of surrounding vessels n

p

and the an-

gles between them θ

p

1

,··· ,θ

p

n

p

obtained by comput-

ing the intersection between the pruned skeleton and

a circle of fixed diameter o = 24 pixels centred on it.

For each (p,q) belonging to P × Q , (p,q) belongs to

S if and only if n = n

p

= n

q

≤ 5 and θ

q

i

− α ≤ θ

p

i

≤

θ

q

i

+ α for i = 1,· ·· ,n and with α = 10˚ . This step

restricts landmarks sets P and Q before the second

step which is more time consuming.

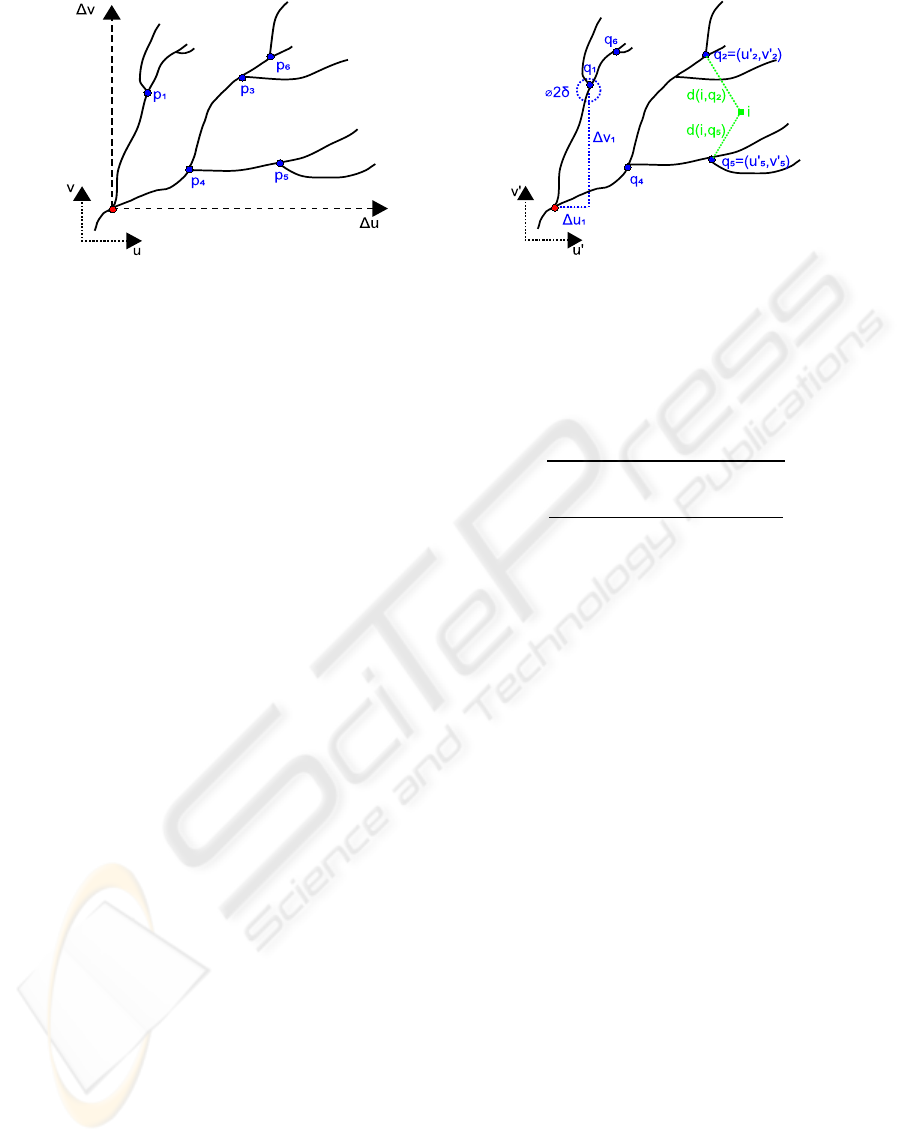

The second step consists in estimating, for each

initial couple, the spatial agencement of landmarks

between the two images (Figure 3). For an initial

couple (p

S

j

,q

S

j

) belonging to S, whose locations

(u

S

j

,v

S

j

) and (u

′

S

j

,v

′

S

j

) constitute now images origins,

landmarks from the two images that have the same lo-

cations with a given tolerance value δ are preserved :

C

j

=

(p,q) ∈ P × Q | q = (u

′

,v

′

)

∈ [u

′

S

j

+ ∆u− δ ; u

′

S

j

+ ∆u+ δ]

×[v

′

S

j

+ ∆v− δ ; v

′

S

j

+ ∆v+ δ]

, (1)

with ∆u = u − u

S

j

, ∆v = v− v

S

j

, p = (u,v) and δ = 8

pixels. The final matching set C corresponds to the

VISAPP 2009 - International Conference on Computer Vision Theory and Applications

266

(a) Reference image I

p

(b) Transformed image I

q

Figure 3: Illustrating the second point matching step and the displacement vector computation. Red dots indicate one initial

couple belonging to S . Blue dots indicate the others landmarks belonging to P or to Q . All landmarks locations of I

p

are

verified in I

q

with a given tolerance distance δ. Initial couple, pairs 1, 2, 4 and 5 are preserved and landmarks p

3

and q

6

are

eliminated. For the displacement vector computation, 2 and 5 are the two nearest preserved pairs of the pixel i of I

q

.

best spatial agencement similarity set:

C = C

argmax

j=1,···,cardS

card(C

j

)

. (2)

This step increases reliable matching and robustness.

2.3 Image Transformation

Having established landmark matchings, the next task

is to identify a suitable transformation. All cited refer-

ences employed global linear or not transformations.

According to (Becker et al., 1998), the affine model

is appropriate because the retina is roughly planar

over small regions and, consequently, parallel with

the camera plane. In (Can et al., 2002), the retina is

modelled by a quadratic surface, the camera move-

ments by rigid transformations and the projections

on the camera plane by weak perspective projections.

The resulting quadratic transformation have 12 pa-

rameters. However, the difficult problem of spatial

retinal registration leads to produce local adaptive de-

formations and motivates the following transforma-

tion.

Proposed Methods. To match I

p

, a dense displace-

ment vector field, function of C , locally deforms I

q

.

For one pixel i of I

q

, first, the two nearest couples be-

longing to C are sought:

(p

1

,q

1

) = argmin

(p

C

,q

C

)∈C

d(i,q

C

) (3)

(p

2

,q

2

) = argmin

(p

C

,q

C

)∈C −{(p

1

,q

1

)}

d(i,q

C

) , (4)

with d the Euclidean distance defined on the spatial

support of I

q

(Figure 3). Then, the distinct displace-

ment vector of i is:

−→

T

i

=

1/d(i,q

1

).(u

1

−u

′

1

)+1/d(i,q

2

).(u

2

−u

′

2

)

1/d(i,q

1

)+1/d(i,q

2

)

1/d(i,q

1

).(v

1

−v

′

1

)+1/d(i,q

2

).(v

2

−v

′

2

)

1/d(i,q

1

)+1/d(i,q

2

)

, (5)

with p

1

= (u

1

,v

1

), q

1

= (u

′

1

,v

′

1

), p

2

= (u

2

,v

2

) and

q

2

= (u

′

2

,v

′

2

).

When all pixels of I

q

are computed, a dense dis-

placement vector field is obtained which is regular-

ized using a Gaussian filter. Finally, I

q

is transformed:

the mapped positions of pixels is calculated as the

sum of their original locations and their correspond-

ing displacement vectors.

3 RESULTS

All visible bifurcations are globally extracted except

ones belonging to very narrow vessels due to the un-

scalability of used matched filters. The matching pro-

cess is able to match landmarks with large coordinates

differences between images (typically the case of a

spatial registration) and, intrinsically, to obtain corre-

spondances which are very rarely incorrect.

In this paper, visual assessment on the fused im-

age between reference and transformed images is

adopted. With limited overlapping regions (typically

the case of spatial registration), i.e with few matching

landmarks, the dense local registration outperforms

the quadratic model estimated using linear regression

(Laliberte et al., 2003) (Ryan et al., 2004) (Figure 4).

The quadratic model estimated using linear regression

needs larger number of matching landmarks in order

to obtain conveniently optimal parameters estimation

and to achieve an accurate result. However, both reg-

istration methods are affected by the lack of matching

A FEATURE-BASED DENSE LOCAL REGISTRATION OF PAIRS OF RETINAL IMAGES

267

(a) Quadratic transformation (b) Dense local transformation

Figure 4: Overlapping regions of registration for a pair of images (Figure 1) and with 15 extracted matching couples.

informations in some areas. Therefore, in the case

of the presented dense local registration, vessel mis-

alignments appear in some peripheral areas.

4 CONCLUSIONS

In this paper, we have described a feature-based,

dense, local registration for eye fundus images. This

method is efficient and avoids iterations or heavy cal-

culations. It allows to cope with complex recogni-

tions of global transformation. On the one hand, it

avoids high-order global transformation due to com-

plex retinal registration problem. On the other hand,

obtaining conveniently optimal parameters with few

matching landmarks is a difficult task due to limited

overlapping regions of spatial registration.

In order to minimize misalignments due to the

lack of intrinsic landmarks uniformity, we are cur-

rently investigating the use of additional regional

landmarks like the whole vessels. We are also de-

veloping a protocol so as to quantitatively compare

different registration processes.

ACKNOWLEDGEMENTS

The authors wish to thank Pr. Gain from University

Hospital Centre, Saint-Etienne, France for supporting

this work and for providing pictures.

REFERENCES

Becker, D., Can, A., Turner, J., Tanenbaum, H., and

Roysam, B. (1998). Image processing algorithms for

retinal montage synthesis, mapping, and real-time lo-

cation determination. IEEE Transactions on Biomed-

ical Engineering, 45(1):105–118.

Can, A., Stewart, C., Roysam, B., and Tanenbaum, H.

(2002). A feature-based, robust, hierarchical algo-

rithm for registering pairs of images of the curved hu-

man retina. IEEE Transactions on Pattern Analysis

and Machine Intelligence, 24(3):347–364.

Chaudhuri, S., Chatterjee, S., Katz, N., Nelson, M., and

Goldbaum, M. (1989). Detection of blood vessels in

retinal images using two-dimensional matched filters.

IEEE Transactions on Medical Imaging, 8(3):263–

269.

Laliberte, F., Gagnon, L., and Sheng, Y. (2003). Registra-

tion and fusion of retinal images - an evaluation study.

IEEE Transactions on Medical Imaging, 22(5):661–

673.

Ritter, N., Owens, R., Cooper, J., Eikelboom, R., and

Van Saarloos, P. (1999). Registration of stereo and

temporal images of the retina. IEEE Transactions on

Medical Imaging, 18(5):404–418.

Ryan, N., Heneghan, C., and de Chazal, P. (2004). Regis-

tration of digital retinal images using landmark corre-

spondence by expectation maximization. Image and

Vision Computing, 22(11):883–898.

Zana, F. and Klein, J. (1999). A multimodal registration al-

gorithm of eye fundus images using vessels detection

and hough transform. IEEE Transactions on Medical

Imaging, 18(5):419–428.

Zitova, B. and Flusser, J. (2003). Image registration

methods: a survey. Image and Vision Computing,

21(11):977–1000.

VISAPP 2009 - International Conference on Computer Vision Theory and Applications

268