COMPLEXITY REDUCTION OF REAL-TIME DEPTH SCANNING ON GRAPHICS HARDWARE

Sammy Rogmans (1,2), Maarten Dumont (1), Tom Cuypers (1), Gauthier Lafruit (2) and Philippe Bekaert (1)

(1) Hasselt University – tUL – IBBT, Expertise centre for Digital Media, Wetenschapspark 2, 3590 Diepenbeek, Belgium

(2) Multimedia Group, IMEC, Kapeldreef 75, 3001 Leuven, Belgium

Keywords:

Complexity reduction, Real-time, Depth scan, Graphics hardware.

Abstract:

This paper presents an intelligent control loop add-on that reduces the total number of hardware operations of a real-time depth scanning algorithm, and therefore increases its execution speed. The analysis module of the control loop predicts redundant brute-force operations and dynamically adjusts the input parameters of the algorithm, to avoid scanning in a space that lacks the presence of objects. Therefore, this approach reduces the algorithmic complexity in proportion to the amount of void within the scanned volume, while remaining fully compliant with stream-centric paradigms such as CUDA and Brook+.

1 INTRODUCTION

For many years, depth map estimation has been an active research topic, progressively driving a vast number of applications such as robot navigation, immersive teleconferencing and 3DTV with autostereoscopic displays. Although depth information can be derived in a variety of ways, when real-time performance is considered, many researchers still prefer local algorithms over global optimization schemes. The reason is that local algorithms are data-parallel and can therefore be highly accelerated by many-core platforms such as the Graphics Processing Unit (GPU). However, these algorithms often resort to brute-force approaches, resulting in a large number of redundant operations. Although real-time performance is still achieved, these redundant brute-force operations significantly suppress the high-speed and low-power potential of local algorithms.

This paper presents an intelligent control loop add-on to reduce the intrinsic complexity of real-time depth scanning algorithms, overcoming their ‘unintelligent’ brute-force approach. The algorithms themselves remain completely unaltered: the add-on predicts the redundant operations in advance and dynamically changes the input parameters, similar to a regulator in control systems. The proposed approach is therefore compliant with stream-centric paradigms such as CUDA and Brook+, but also with fixed-functionality hardware (e.g. ASICs).

The layout of the paper is as follows: Section 2 explains the principles of depth scanning and presents a complexity analysis of one of the currently most promising state-of-the-art local algorithms. Section 3 then describes the control loop add-on that reduces the total algorithmic complexity, while Section 4 presents optimization schemes that can be applied specifically on the GPU. The results are discussed in Section 5, and the paper is concluded in Section 6.

2 DEPTH SCANNING

In the most general case, local algorithms determine

depth information by sweeping a plane through the

3D volume (Yang et al., 2002), checking each voxel

(i.e. pixel-sized 3D elementary volume) for objects in

a brute-force manner. This general approach can be

applied to multi-camera setups, but a rectified two-

fold camera setup is often used to simplify the 3D

scan, which is known as stereo matching. In a stereo

camera setup, the depth of objects is determined by

estimating the amount of pixels they have shifted

from the left to the right image. As depicted in Fig. 1,

this shift is consistently known as the motion paral-

547

Rogmans S., Dumont M., Cuypers T., Lafruit G. and Bekaert P. (2009).

COMPLEXITY REDUCTION OF REAL-TIME DEPTH SCANNING ON GRAPHICS HARDWARE.

In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, pages 547-550

DOI: 10.5220/0001806505470550

Copyright

c

SciTePress

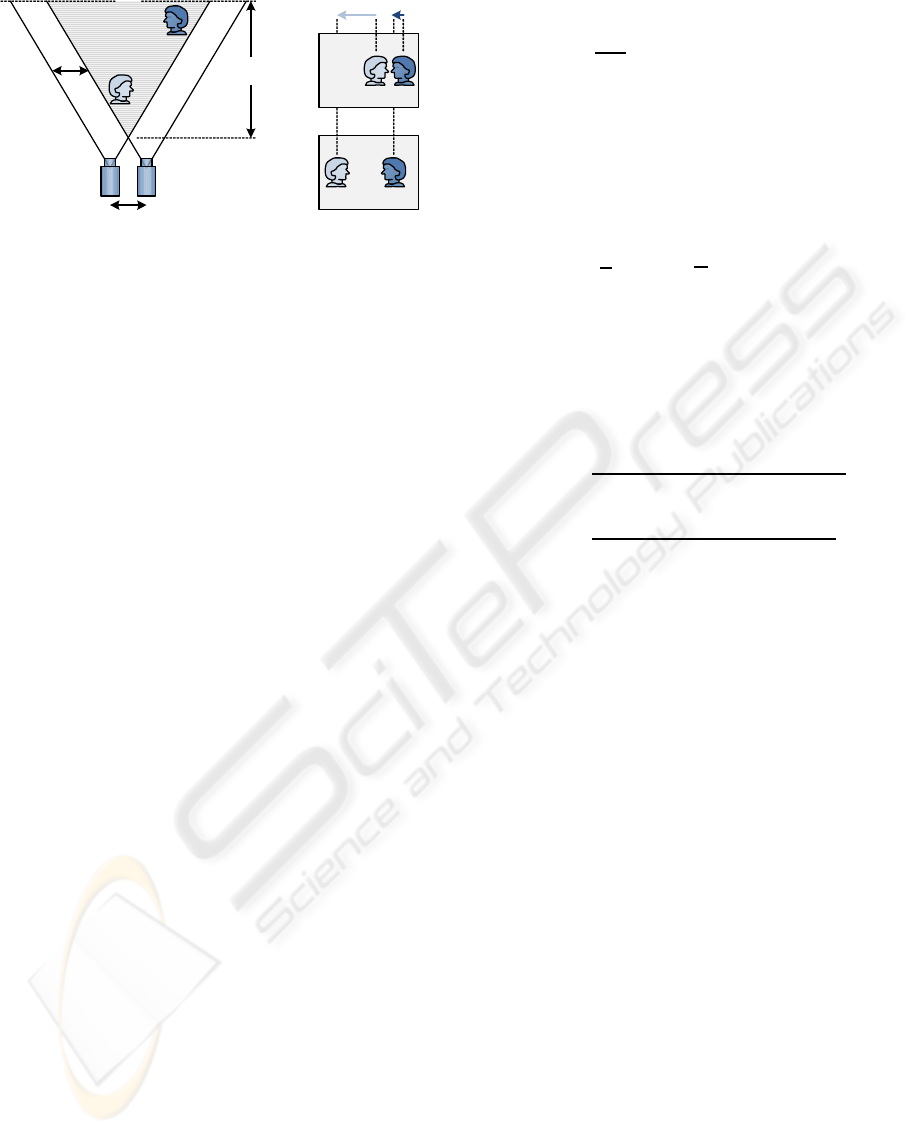

Right View

Depth

Scan Range

Max.

Parallax

Camera Baseline

Left View

Motion Parallax

8

Figure 1: Basic principles of stereo matching, that can be

seen as a simplified 3D depth scan.

lax or disparity, and directly correlates to the objects’

depth. Objects close to the camera exhibit a large

parallax and vice versa, with the maximum parallax

determined by the baseline distance between the two

cameras. Looking for matches in the stereo pair –

using a systematically decreasing disparity, starting

from the maximum value – can therefore be seen as

a simplified front-to-back depth scan.
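Fig. 1 states the parallax-depth relation only qualitatively. For a rectified pinhole camera pair, the standard relation is $Z = f \cdot B / d$, with focal length $f$ in pixels, baseline $B$ and disparity $d$, so the nearest depth that must be scanned fixes the maximum parallax. The sketch below assumes that model; the focal length, baseline and nearest depth are hypothetical values, not parameters of the setup used in this paper. For brevity it steps through the hypotheses front to back in coarse steps of 50 pixels.

```python
import math


def depth_from_disparity(d, focal_px, baseline_m):
    """Depth Z = f * B / d of a point with disparity d (rectified pinhole cameras)."""
    if d <= 0:
        return math.inf  # zero parallax corresponds to a point at infinity
    return focal_px * baseline_m / d


def max_disparity(z_min_m, focal_px, baseline_m):
    """Largest parallax, produced by the nearest depth that must be scanned."""
    return math.ceil(focal_px * baseline_m / z_min_m)


# Hypothetical camera: 1000 px focal length, 10 cm baseline, nearest object at 0.5 m.
FOCAL_PX, BASELINE_M = 1000.0, 0.1
d_max = max_disparity(0.5, FOCAL_PX, BASELINE_M)

# Front-to-back scan: systematically decreasing disparity, i.e. increasing depth.
scan = [(d, depth_from_disparity(d, FOCAL_PX, BASELINE_M)) for d in range(d_max, -1, -50)]
for d, z in scan:
    print(f"d = {d:3d} px -> Z = {z:.2f} m")
```

Halving the disparity doubles the depth, which is why the far end of the scan range corresponds to zero parallax.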

As surveyed by (Scharstein and Szeliski, 2002), stereo matching algorithms can be divided into four processing modules: cost computation, cost aggregation, disparity selection and disparity refinement. Local algorithms tend to focus on the aggregation and generally discard the refinement. As recently indicated by the performance study of (Gong et al., 2007), one of the most promising local algorithms is the adaptive weights approach, which is therefore used as a case study. The interested reader can find the complete algorithmic description in the paper of (Yoon and Kweon, 2006). Our paper, however, only presents a high-level description of the adaptive weights algorithm, sufficient to obtain a basic analysis of its current complexity.

The GPU implementation of the adaptive weights algorithm is composed of three different types of floating-point operations: texture (i.e. memory read), render (i.e. memory write), and computational operations. The matching cost computation $C_{AD}$ takes as input an RGB-colored left/right image pair $I^*_0(x,y)$ and $I^*_1(x,y)$ and a disparity hypothesis $d$, and integrates the absolute differences of each color channel according to the dot product

$$C_{AD}(x,y,d) = \mathbf{g} \cdot \left| I^*_0(x,y) - I^*_1(x-d,y) \right| , \tag{1}$$

with a grey-scaling vector $\mathbf{g} = \langle 0.299, 0.587, 0.114 \rangle$.
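As a minimal sketch of Eq. (1), assuming images stored as Python lists of rows of (R, G, B) tuples (a hypothetical layout chosen for readability, not the GPU texture layout), the per-pixel cost of one hypothesis can be written as follows.

```python
# Grey-scaling vector g from Eq. (1).
G = (0.299, 0.587, 0.114)


def cost_ad(left, right, x, y, d):
    """C_AD(x, y, d): grey-weighted absolute RGB difference, Eq. (1).

    left/right are images stored as lists of rows of (R, G, B) tuples.
    The hypothesis must stay inside the right image, i.e. x - d >= 0.
    """
    if x - d < 0:
        raise ValueError("disparity hypothesis falls outside the right image")
    l_pix = left[y][x]
    r_pix = right[y][x - d]
    return sum(g * abs(a - b) for g, a, b in zip(G, l_pix, r_pix))


# Tiny example: the right image is the left one shifted by one pixel.
left = [[(10, 20, 30), (200, 100, 50), (0, 0, 0)]]
right = [[(200, 100, 50), (0, 0, 0), (0, 0, 0)]]
print([cost_ad(left, right, 1, 0, d) for d in (0, 1)])
```

The correct hypothesis (d = 1) yields zero cost, while the wrong one yields the grey-weighted colour difference.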

The matching cost is computed for each hypothesis $d$ from the search range $S$, regardless of the presence of objects at the examined depth. Subsequently, the cost is truncated to a given maximum $\tau$ and normalized (toward 255 when considering 24-bit color images), following

$$C_{TAD}(x,y,d) = \frac{255}{\tau} \cdot \min\left( C_{AD}(x,y,d), \tau \right) , \tag{2}$$

to reduce the impact of noise inside the source images. The cost is then aggregated by a $33 \times 1$ horizontal and a $1 \times 33$ vertical separated 1D convolution kernel, $w_H(x,y,u)$ and $w_V(x,y,v)$ respectively. The composition of these kernels is based on the Gestalt principles of similarity and proximity, and they are therefore computed in a preprocessing step according to

$$w_H(x,y,u) = e^{-\frac{1}{\gamma_s} \left| \Delta c_{xu} \right|} + e^{-\frac{1}{\gamma_p} |u|} , \tag{3}$$

with $|\Delta c_{xu}|$ being the Euclidean color distance between $I^*_0(x,y)$ and $I^*_0(x+u,y)$, and $\gamma_s = 17.6$ and $\gamma_p = 40.0$ being the fall-off rates for the similarity and proximity term, respectively. The vertical kernel $w_V$ is computed in a similar manner. The aggregated cost $A_{WE}$ is then defined as

$$A'_{WE}(x,y,d) = \frac{w_H(x,y,u) \ast_u C_{TAD}(x+u,y,d)}{\mu^H_{xy}} , \tag{4}$$

$$A_{WE}(x,y,d) = \frac{w_V(x,y,v) \ast_v A'_{WE}(x,y+v,d)}{\mu^V_{xy}} , \tag{5}$$

where $\mu^H_{xy}$ and $\mu^V_{xy}$ are normalization constants, and $A'_{WE}$ stores the intermediate result of the horizontal aggregation. Finally, the best matching cost is selected out of all disparity hypotheses in $S$ on a winner-takes-all (WTA) basis. The resulting depth map $D(x,y)$ is therefore composed according to

$$D(x,y) = \arg\min_{d \in S} A_{WE}(x,y,d) . \tag{6}$$
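Eqs. (2) to (6) can be traced on a single scanline with the sketch below. It is a simplified illustration, not the GPU implementation analyzed here: it performs only the horizontal pass of Eq. (4) (the vertical pass of Eq. (5) is analogous), the truncation level `tau = 60` is a hypothetical value since the text does not give one, the normalization constant is assumed to be the sum of the support weights, and hypotheses that fall outside the right image are assigned the maximum cost of 255. The winner-takes-all step of Eq. (6) is written as a running minimum over disparity planes, which mirrors how a depth test keeps the smaller value per pixel.

```python
import math

GAMMA_S, GAMMA_P = 17.6, 40.0        # fall-off rates from the text
G = (0.299, 0.587, 0.114)            # grey-scaling vector of Eq. (1)


def c_ad(l_pix, r_pix):
    """Eq. (1) for a single pair of RGB pixels."""
    return sum(g * abs(a - b) for g, a, b in zip(G, l_pix, r_pix))


def c_tad(cost, tau):
    """Eq. (2): truncate at tau, then rescale to [0, 255]."""
    return 255.0 / tau * min(cost, tau)


def w_h(row, x, u):
    """Eq. (3) as written above: similarity term plus proximity term."""
    return (math.exp(-math.dist(row[x], row[x + u]) / GAMMA_S)
            + math.exp(-abs(u) / GAMMA_P))


def scanline_depth(left_row, right_row, disparities, tau=60.0, radius=16):
    """Horizontal-only adaptive weights on one scanline, followed by WTA selection."""
    n = len(left_row)
    # Preprocessing: the support weights depend only on the left image, not on d.
    support = []
    for x in range(n):
        us = [u for u in range(-radius, radius + 1) if 0 <= x + u < n]
        ws = [w_h(left_row, x, u) for u in us]
        support.append((us, ws, sum(ws)))    # assumption: mu = sum of the weights

    best_cost = [math.inf] * n               # acts like a depth buffer
    best_d = [None] * n
    for d in disparities:                    # one disparity plane at a time
        # Hypotheses that leave the right image get the maximum normalized cost.
        cost = [c_tad(c_ad(left_row[x], right_row[x - d]), tau) if x >= d else 255.0
                for x in range(n)]
        for x, (us, ws, mu) in enumerate(support):
            agg = sum(w * cost[x + u] for w, u in zip(ws, us)) / mu   # Eq. (4)
            if agg < best_cost[x]:           # Eq. (6): keep the running minimum
                best_cost[x], best_d[x] = agg, d
    return best_d


# Synthetic scanline with distinct colours; the right view is shifted by 5 pixels.
N, SHIFT = 48, 5
left_row = [((37 * x) % 200 + 20, (53 * x) % 200 + 20, (71 * x) % 200 + 20) for x in range(N)]
right_row = [left_row[x + SHIFT] if x + SHIFT < N else (0, 0, 0) for x in range(N)]
depth = scanline_depth(left_row, right_row, range(12))
print(depth[21:])
```

With the right view shifted by five pixels, every pixel whose 33-pixel support window lies entirely inside the valid overlap receives disparity 5.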

Table 1 reflects our analysis of the type and number of operations involved in computing a depth map with the adaptive weights approach using a 33 × 33 convolution kernel, where $p$ denotes the number of pixels in the image and $h$ the number of disparity hypotheses, i.e. the cardinality of $S$. Hence the total number of operations $O$ needed to generate a depth map can be described as

$$O = (1027 + 23 \cdot h) \cdot p , \tag{7}$$

resulting in a little over 17.6 Gops for the 1800 × 1500 Teddy scene (Middlebury, 2003).
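The constants of Eq. (7) follow from the TOTAL OPS row of Table 1: 1026 + 1 = 1027 operations per pixel, and 20 + 1 + 2 = 23 operations per pixel and hypothesis. The sketch below simply evaluates that sum; taking h = 240 is an assumption that reproduces the figure of a little over 17.6 Gops for the full-resolution Teddy scene.

```python
# TOTAL OPS row of Table 1, split into (ops per pixel, ops per pixel per hypothesis).
TABLE1_TOTALS = {
    "preprocessing": (1026, 0),
    "matching cost": (0, 20),
    "cost aggregation": (0, 1),
    "disparity selection": (1, 2),     # (2h + 1) p
}


def total_ops(p, h):
    """O = (a + b h) p, with a and b summed over the stages of Table 1."""
    a = sum(per_pixel for per_pixel, _ in TABLE1_TOTALS.values())
    b = sum(per_hyp for _, per_hyp in TABLE1_TOTALS.values())
    return (a + b * h) * p


p = 1800 * 1500          # Teddy at full resolution
ops = total_ops(p, 240)  # assumption: h = 240 hypotheses
print(f"{ops / 1e9:.2f} Gops")
```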

3 COMPLEXITY REDUCTION

The proposed method can reduce the total complex-

ity of the adaptive weights algorithm, or any other

local stereo matching algorithm for that matter. To

achieve this, the algorithm is executed twice, but with

different inputs. In the first pass, the complete volume

VISAPP 2009 - International Conference on Computer Vision Theory and Applications

548

Table 1: Amount of operations needed to generate a depth map with the adaptive weights algorithm using a 33× 33 convolu-

tion kernel, in function of the amount of image pixels p and disparity hypotheses h.

Preprocessing Matching Cost Cost Aggregation Disparity Selection

Texture Ops 198 · p 6· p· h 132· p · h 1· p· h

Computational Ops 764· p 13· p · h 256· p · h 1· p· h

Render Ops 64 · p 1· p· h 1· p· h 1· p

TOTAL OPS 1026· p 20· p· h 1· p · h (2· h+ 1)· p

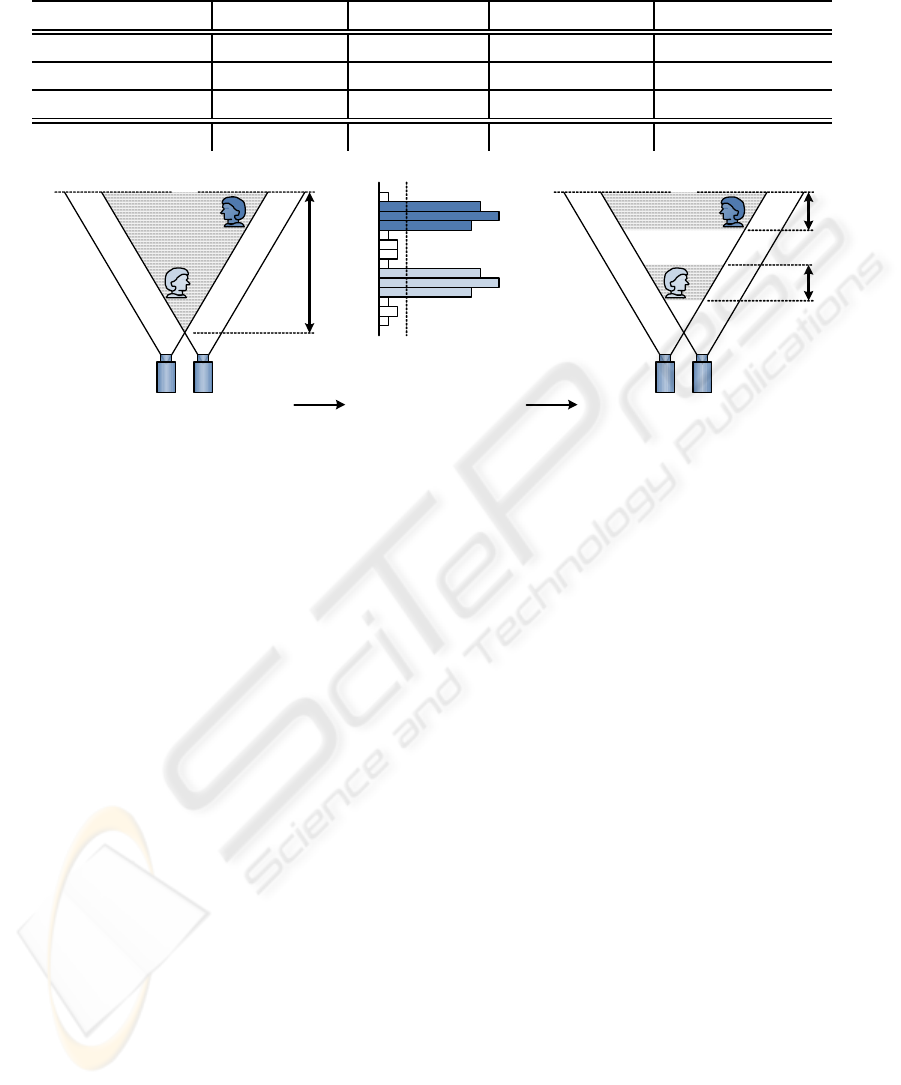

Adaptive Scan Range

8

Full Scan Range

8

Histogram

Noise Threshold

ANALYSIS FEEDBACK

Figure 2: The principle of our proposed complexity reduction. The full 3D volume is scanned with a low-complexity approach,

and analyzed through a histogram. Consequently, an accurate high-complexity scan is performed on an adaptive range.

is scanned over the entire search range S, but with

a smaller input image resolution. Consequently, an

analysis on the depth map D is performed by comput-

ing the histogram H (d). As shown in Fig. 2, the his-

togram indicates the depths where objects are actually

located. In (Gallup et al., 2007), the histogram has

already been used to find the best orientation of the

depth sweep, by performing the sweep for multiple

orientations and selecting the orientation that leads

to the minimum data-entropy of the histogram. Fur-

thermore, (Geys et al., 2004) also used histogram in-

formation, but similar to (Gallup et al., 2007), this

is mainly to lever the resulting quality of the algo-

rithm. In our proposed approach, the histogram infor-

mation acquired from a coarse-grained full range scan

leads to an accurate fine-grained scan over an adaptive

range. As the complexity of the histogram computa-

tion is fairly low itself, the potential to accelerate a

local stereo matching algorithm becomes very high.
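The two-pass procedure of Fig. 2 can be sketched as follows. This is a toy illustration under several assumptions, not the authors' implementation: the matcher `match` is a hypothetical stand-in (per-pixel absolute difference with WTA selection, no aggregation) for a local algorithm such as adaptive weights, images are grey-level lists of rows, the coarse pass scans disparities scaled down by the decimation factor `f`, and each coarse disparity $c$ is mapped back to the fine disparities within $f - 1$ of $c \cdot f$, since the text does not specify that mapping. The threshold `delta` is kept fixed here.

```python
def downsample(img, f):
    """Keep every f-th pixel in both dimensions (simple decimation)."""
    return [row[::f] for row in img[::f]]


def match(left, right, disparities):
    """Hypothetical stand-in for a local stereo algorithm: per-pixel |difference| + WTA.

    Returns the depth map (None where no hypothesis fits) and the number of cost evaluations.
    """
    depth, evals = [], 0
    for l_row, r_row in zip(left, right):
        out = []
        for x, value in enumerate(l_row):
            best_d, best_cost = None, float("inf")
            for d in disparities:
                if x - d < 0:            # hypothesis falls outside the right image
                    continue
                evals += 1
                cost = abs(value - r_row[x - d])
                if cost < best_cost:
                    best_d, best_cost = d, cost
            out.append(best_d)
        depth.append(out)
    return depth, evals


def control_loop(left, right, max_d, f, delta):
    """Coarse full-range scan, histogram analysis, then fine scan on the adaptive range."""
    coarse_range = range(max_d // f + 1)   # assumption: disparities shrink with resolution
    coarse, coarse_evals = match(downsample(left, f), downsample(right, f), coarse_range)

    hist = [0] * len(coarse_range)         # histogram H(d) of the coarse depth map
    for row in coarse:
        for d in row:
            if d is not None:
                hist[d] += 1
    selected = [c for c, count in enumerate(hist) if count > delta]

    # Assumption: coarse disparity c stands for fine disparities within f - 1 of c * f.
    fine_range = sorted({d for c in selected
                         for d in range(max(0, c * f - f + 1), min(max_d, c * f + f - 1) + 1)})
    fine, fine_evals = match(left, right, fine_range)
    return fine, selected, fine_range, coarse_evals + fine_evals


# Synthetic grey-level pair: every pixel is shifted by 4 pixels between the views.
W, HEIGHT, SHIFT = 32, 4, 4
left = [[(37 * x + 11 * y) % 101 for x in range(W)] for y in range(HEIGHT)]
right = [[row[x + SHIFT] if x + SHIFT < W else 0 for x in range(W)] for row in left]

fine, selected, fine_range, evals = control_loop(left, right, max_d=15, f=2, delta=4)
_, full_evals = match(left, right, range(16))
print(selected, fine_range, evals, full_evals)
```

Only the coarse bin around the true shift survives the threshold, so the full-resolution pass evaluates three hypotheses per pixel instead of sixteen.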

In general, the less pronounced the foreground fattening (Scharstein and Szeliski, 2002) an algorithm exhibits, the more the input image resolution can be reduced in the first pass while still maintaining a representative histogram. In the case of the adaptive weights approach, the input image dimensions can be reduced by up to a factor of four in each direction, resulting in a 16-fold reduction of the original complexity.

To determine which subset $S_{sub} \subseteq S$ to take, the values of the histogram are compared with a given threshold $\delta$. Instead of fixing the threshold $\delta$, it can be made dynamic by linking its level to the data-entropy of the histogram. The threshold is then set low for complex scenes with fine-grained geometry, and high when little geometry is present. This efficiently filters out noise due to mismatches inherent to the local algorithm.
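The threshold test can be sketched as below. The text does not give a formula for the dynamic threshold, so `dynamic_threshold` is a hypothetical rule that merely follows the stated behaviour: it scales the mean bin count by one minus the normalized entropy, which yields a high threshold for a peaked histogram (little geometry) and a threshold near zero for a flat one (fine-grained geometry). The `scale` parameter and the example histograms are likewise illustrative.

```python
import math


def entropy_bits(hist):
    """Shannon entropy (in bits) of the normalized histogram."""
    total = sum(hist)
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in hist if c > 0)


def dynamic_threshold(hist, scale=0.5):
    """Hypothetical rule: high delta for peaked histograms, low delta for spread-out ones."""
    if len(hist) < 2:
        return 0.0
    h_max = math.log2(len(hist))             # entropy of a perfectly flat histogram
    mean = sum(hist) / len(hist)
    return scale * mean * (1.0 - entropy_bits(hist) / h_max)


def select_subset(hist, delta):
    """S_sub: disparities whose histogram count exceeds the threshold delta."""
    return [d for d, count in enumerate(hist) if count > delta]


few_objects = [1, 0, 50, 48, 2, 0, 0, 1]   # two dominant depths plus mismatch noise
fine_grained = [10] * 8                     # geometry spread over every depth
for hist in (few_objects, fine_grained):
    delta = dynamic_threshold(hist)
    print(f"delta = {delta:.2f}, S_sub = {select_subset(hist, delta)}")
```

In the peaked case the isolated noise bins fall below the threshold, while in the flat case every depth is kept.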

4 OPTIMIZATIONS

The aforementioned algorithmic complexity is only valid when considering the use of conventional General Purpose GPU (GPGPU) computing (Owens et al., 2007). However, several optimization schemes can be applied to further reduce the complexity of local stereo algorithms. For the cost aggregation module, the number of texture operations can be halved when bilinear sampling is used to aggregate two values with a single memory lookup. Furthermore, in the next-generation GPGPU paradigm CUDA, 16 memory operations can be coalesced into a single memory read operation. For the disparity selection, the depth test of the GPU can be exploited to avoid all explicit operations (Lu et al., 2007). However, the depth test can only be exploited through the traditional GPGPU paradigm using Direct3D or OpenGL. Nevertheless, thanks to the recent introduction of CUDA v2.0 and its complete support for interoperability between CUDA and Direct3D, both paradigms can be combined to form a highly optimized implementation.
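The bilinear-sampling trick rests on the identity $w_0 c_i + w_1 c_{i+1} = (w_0 + w_1)\,\mathrm{lerp}\left(c_i, c_{i+1}, \frac{w_1}{w_0 + w_1}\right)$, so one linearly filtered fetch placed between two texels replaces two point fetches. The Python sketch below emulates the filtered fetch in exact arithmetic; real texture units address texel centres and use limited-precision interpolation weights, so a GPU result would only approximate the direct sum. The costs and weights are arbitrary illustrative values.

```python
def linear_fetch(texels, coord):
    """Emulate a linearly filtered 1D texture fetch at fractional texel index coord >= 0."""
    i = int(coord)                       # floor for non-negative coordinates
    t = coord - i
    if t == 0.0:
        return texels[i]
    return (1.0 - t) * texels[i] + t * texels[i + 1]


def aggregate_direct(costs, weights):
    """Weighted sum with one fetch per tap."""
    return sum(w * c for w, c in zip(weights, costs)), len(costs)


def aggregate_bilinear(costs, weights):
    """Same weighted sum, combining each pair of taps into a single filtered fetch."""
    total, fetches = 0.0, 0
    for i in range(0, len(costs) - 1, 2):
        w0, w1 = weights[i], weights[i + 1]
        w = w0 + w1                      # adaptive weights are positive, so w > 0
        # w0 * c[i] + w1 * c[i + 1] == w * lerp(c[i], c[i + 1], w1 / w)
        total += w * linear_fetch(costs, i + w1 / w)
        fetches += 1
    if len(costs) % 2:                   # odd kernel: last tap fetched on its own
        total += weights[-1] * costs[-1]
        fetches += 1
    return total, fetches


# 33-tap horizontal kernel with arbitrary positive weights and costs.
costs = [float((7 * k) % 23) for k in range(33)]
weights = [1.0 / (1 + abs(k - 16)) for k in range(33)]
direct, n_direct = aggregate_direct(costs, weights)
paired, n_paired = aggregate_bilinear(costs, weights)
print(n_direct, n_paired, abs(direct - paired) < 1e-9)
```

For a 33-tap kernel this needs 17 fetches instead of 33, which is where the halving of texture operations comes from.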


Figure 3: (a) The Teddy scene, (b) low-complexity depth map, (c) joint histogram, (d) the resulting high-quality depth map with reduced operations, and (e) the original result.

5 EXPERIMENTAL RESULTS

The proposed method was tested on the 1800 × 1500 Teddy scene (see Fig. 3a) of the Middlebury dataset (Middlebury, 2003), with disparity search range $S = \{0, \ldots, 240\}$. The image resolution was reduced by a factor of four in each dimension to 450 × 375 (16 times fewer pixels), which yields the disparity map depicted in Fig. 3b. After the histogram analysis (see Fig. 3c), the detected subset $S_{sub} = \{60, \ldots, 96, 108, \ldots, 180\}$ covers only 45% of the original search range $S$. As the first scan requires only 1/16th (or 6.25%) of the original complexity, the total complexity is reduced to about 48% of the original. The disparity map in Fig. 3d is therefore generated in about 8.5 Gops, instead of the 17.6 Gops required for the original result in Fig. 3e. Our control-loop scheme therefore yields a two-fold complexity reduction and is able to double the execution speed of the algorithm.
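The percentages quoted above can be checked with a few lines of arithmetic. The sketch below only re-derives numbers given in the text (the size of $S_{sub}$, the pixel fraction of the first pass and the ratio of the reported 8.5 and 17.6 Gops); it does not re-run the experiment.

```python
# Figures quoted for the Teddy experiment.
S = range(0, 241)                                    # S = {0, ..., 240}
S_sub = list(range(60, 97)) + list(range(108, 181))  # {60..96} union {108..180}

range_fraction = len(S_sub) / len(S)
pixel_fraction = (450 * 375) / (1800 * 1500)         # first (coarse) pass
gops_ratio = 8.5 / 17.6                              # reported reduced vs. original cost

print(len(S_sub), f"{range_fraction:.1%}", f"{pixel_fraction:.2%}", f"{gops_ratio:.1%}")
```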

Considering that the complexity of the first pass is almost negligible, the proposed control loop add-on allows for a speedup proportional to the amount of void within the scanned volume. This is particularly useful in eye-gaze corrected video chat (Dumont et al., 2008), as only the chat participant needs to be scanned in a rather large office space.

6 CONCLUSIONS

We have proposed a control loop add-on to reduce the complexity of local real-time stereo matching algorithms. The histogram of a coarse-grained depth scan is analyzed to adjust the input parameters of a subsequent fine-grained, accurate scan, causing the algorithm to avoid scanning in spaces that lack the presence of objects. Hence, the original brute-force algorithmic complexity can be reduced in proportion to the amount of void within the volume, while still remaining fully compliant with stream-centric paradigms such as CUDA or Brook+.

ACKNOWLEDGEMENTS

Sammy Rogmans would like to thank the IWT for the financial support under grant number SB071150. Tom Cuypers and Philippe Bekaert acknowledge the financial support of the European Commission (FP7 IP "2020 3D media"). Furthermore, all authors acknowledge the financial support from the IBBT.

REFERENCES

Dumont, M., Maesen, S., Rogmans, S., and Bekaert, P. (2008). A prototype for practical eye-gaze corrected video chat on graphics hardware. In Proc. of SIGMAP.

Gallup, D., Frahm, J., Mordohai, P., Yang, Q., and Pollefeys, M. (2007). Real-time plane-sweeping stereo with multiple sweeping directions. In Proc. of CVPR.

Geys, I., Koninckx, T. P., and Gool, L. V. (2004). Fast interpolated cameras by combining a GPU based plane sweep with a max-flow regularisation algorithm. In Proc. of 3DPVT.

Gong, M., Yang, R., Wang, L., and Gong, M. (2007). A performance study on different cost aggregation approaches used in real-time stereo matching. IJCV, 75(2):283–296.

Lu, J., Rogmans, S., Lafruit, G., and Catthoor, F. (2007). High-speed dense stereo via directional center-biased support windows on programmable graphics hardware. In Proc. of 3DTV-CON.

Middlebury (2003). http://vision.middlebury.edu/stereo.

Owens, J., Luebke, D., Govindaraju, N., Harris, M., Kruger, J., Lefohn, A., and Purcell, T. (2007). A survey of general-purpose computation on graphics hardware. CG Forum, 26(1):80–113.

Scharstein, D. and Szeliski, R. (2002). A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. IJCV, 47(1-3):7–42.

Yang, R., Welch, G., and Bishop, G. (2002). Real-time consensus-based scene reconstruction using commodity graphics hardware. In Proc. of PG.

Yoon, K.-J. and Kweon, I.-S. (2006). Adaptive support-weight approach for correspondence search. IEEE PAMI, 28:650–656.
