SELF-SIMILARITY MEASUREMENT

USING PERCENTAGE OF ANGLE SIMILARITY

ON CORRELATIONS OF FACE OBJECTS

Darun Kesrarat and Paitoon Porntrakoon

Autonomous System Research Laboratory, Faculty of Science and Technology, Assumption University

Ramkumhaeng 24, Huamark, Bangkok, Thailand

Keywords: Self-Similarity, Face objects, Correlations.

Abstract: A 2D face image can be used to search a criminal database for self-similar images. This self-similar search helps the human user make the final decision among the retrieved images. In previous self-similar search methods, a 2D face image comprises objects and object correlations. The attribute values of the objects and their correlations are measured and stored in the face image database, and a similarity percentage is specified to retrieve the self-similar images from the database. The problem with the previous methods is that the percentage of angle difference computed for objects in different parts of the face differs even when the angle difference itself is exactly the same. The proposed model improves the stability of the similarity percentage by reducing the number of face objects and object correlations and by changing the degree calculation. Tests on over 100 samples showed that the stability of the similarity percentage is improved, especially for objects on the left side of the face image.

1 INTRODUCTION

The face image is two-dimensional, with a vertical and a horizontal axis. In the previous methods, 10 objects are identified in each image (Face, Right Eyebrow, Left Eyebrow, Right Eye, Left Eye, Right Ear, Left Ear, Nose, Mouth, and Scar), and the size of each object from its center toward 0, 90, 180, and 270 degrees is recorded in the database. The Face object is used as the reference object. There are 9 object correlations (Face against Right Eyebrow, Face against Left Eyebrow, Face against Right Eye, Face against Left Eye, Face against Right Ear, Face against Left Ear, Face against Nose, Face against Mouth, and Face against Scar), and the distance and angle of each toward the Face object are recorded in the database as well. The self-similar images, for which the differences in all attribute values of the objects and object correlations do not exceed the specified similarity percentage, are retrieved from the database using the following formula (P. Porntrakoon, 1999; V. Srisarkun, 2001 & 2002).

$$\text{angle\_similarity\_percentage} \;\ge\; \frac{q_i - r_i}{\max(q_i, r_i)} \times 100 \qquad (1)$$

where q is an attribute value of the object of the key

image and r is an attribute value of the object of

stored image.

The degree calculation of each object toward the reference object is unstable across different parts of the face. As a result, the percentage of angle difference for objects in different parts of the face differs even when their angle difference is exactly the same, e.g., 2 degrees on the face.
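The effect can be illustrated with a small numeric sketch. It assumes that formula (1) compares the absolute difference of two non-negative attribute values against the larger of the two; the function name `old_angle_difference_percent` and the sample angles are hypothetical and are not taken from the cited papers.

```python
def old_angle_difference_percent(q: float, r: float) -> float:
    """Relative difference of two attribute values, in percent.

    Assumption: formula (1) is read as |q - r| / max(q, r) * 100,
    with q and r non-negative (e.g. angles in degrees).
    """
    largest = max(q, r)
    if largest == 0:
        return 0.0  # both non-negative values are zero, so they are equal
    return abs(q - r) / largest * 100


# The same 2-degree difference measured at two hypothetical angles.
near_zero = old_angle_difference_percent(10.0, 8.0)
near_half_turn = old_angle_difference_percent(170.0, 168.0)
print(f"{near_zero:.2f}% vs {near_half_turn:.2f}%")
```

Under this reading, the same 2-degree difference produces a much larger percentage for an object whose angle is close to 0 degrees than for one whose angle is close to 180 degrees.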

The proposed method reduces the number of objects to 8, reduces the number of object correlations to 7, and introduces new calculations for the object correlations. It yields a more stable ratio of angle similarity among objects in different parts of the face when their angle difference is exactly the same. Moreover, the proposed method requires fewer attributes to represent the content of the face image, while the number of attributes remains adequate to retrieve similar face images from the database. Less space is required to store the attribute values, and the search time is improved.


Kesrarat D. and Porntrakoon P.

SELF-SIMILARITY MEASURMENT USING PERCENTAGE OF ANGLE SIMILARITY ON CORRELATIONS OF FACE OBJECTS.

DOI: 10.5220/0002164203690373

In Proceedings of the 6th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2009), page

ISBN: 978-989-674-000-9

Copyright © 2009 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 PROPOSED METHOD

2.1 Face Image Conversion

The face image is segmented into closed contours corresponding to the dominant image objects. Each object has its own attributes and object correlation (I. Kapouleas, 1990; S. Dellepiane, 1992; A.V. Ramen, 1993). In the proposed method, 8 objects (Nose, Right Eyebrow, Left Eyebrow, Right Eye, Left Eye, Right Ear, Left Ear, and Mouth) are detected by specifying the top, bottom, leftmost, and rightmost positions of each object. The Nose is used as the reference object, and each remaining object is correlated with it.

A face image therefore has 7 object correlations: Right Eyebrow versus Nose, Left Eyebrow versus Nose, Right Eye versus Nose, Left Eye versus Nose, Right Ear versus Nose, Left Ear versus Nose, and Mouth versus Nose.

Each correlation has an angular direction and a distance to the center of the reference object. The distance is measured in pixels and the direction in degrees.

In this paper, the objects and the object correlations are estimated before they are stored. The attributes include size, distance, and angle.

2.2 Specify the Positions of Objects on the Face Image and Calculate the Object’s Center Coordinate (x, y)

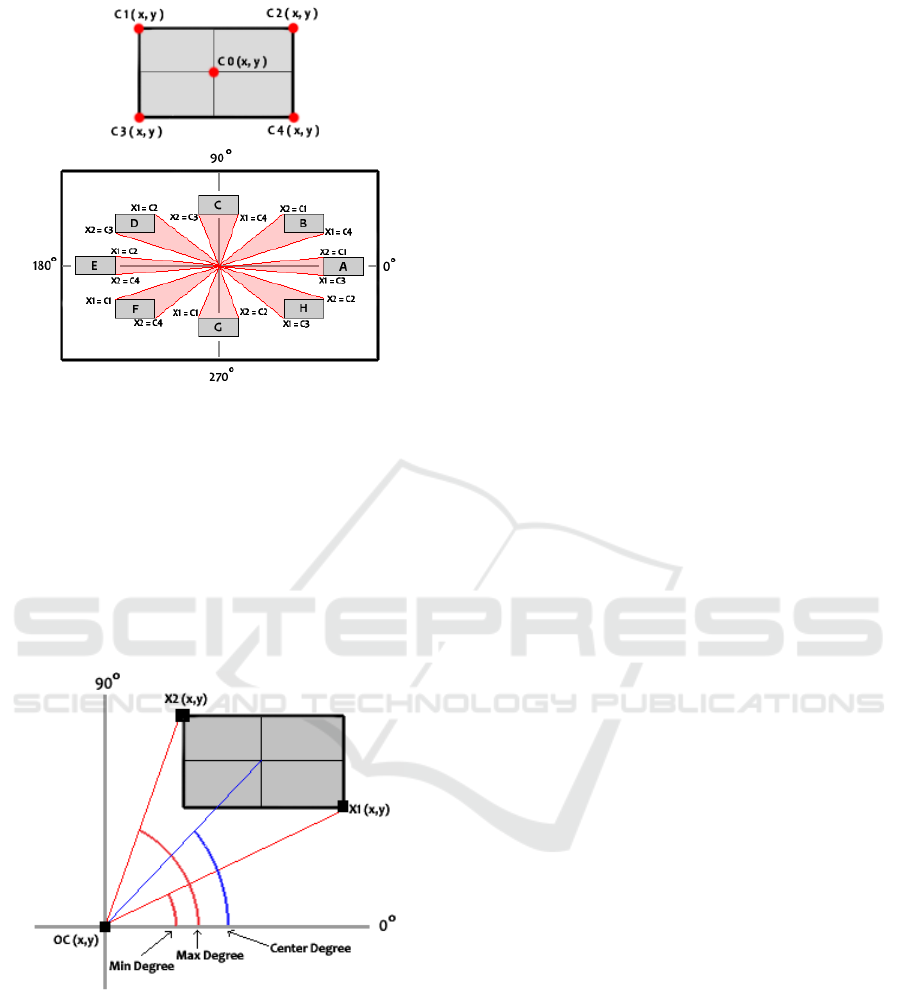

Figure 1: Specified positions of the top, bottom, leftmost,

and rightmost of each object.

For each object, the (x, y) coordinates of the top, bottom, leftmost, and rightmost points are specified as shown in Figure 1. The (x, y) coordinate of each object’s center is then calculated as follows:

OCx = (OLx + ORx) / 2 (2)

OCy = (OTy + OWy) / 2 (3)

where OCx,y is the center, OLx,y is the leftmost, ORx,y is the rightmost, OTx,y is the top, and OWx,y is the bottom coordinate (x, y) of the object.
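A minimal sketch of the center calculation follows. The horizontal center is the midpoint of the leftmost and rightmost x values, and the vertical center is taken from the y values of the top and bottom points, which is the assumed intent of formula (3). The `Point` type, the function name, and the sample coordinates are illustrative only.

```python
from typing import NamedTuple


class Point(NamedTuple):
    x: float
    y: float


def object_center(left: Point, right: Point, top: Point, bottom: Point) -> Point:
    """Center of an object from its four extreme points.

    x comes from the leftmost/rightmost points (formula 2),
    y from the top/bottom points (assumed intent of formula 3).
    """
    return Point((left.x + right.x) / 2, (top.y + bottom.y) / 2)


# Hypothetical extreme points of one object, in pixels.
center = object_center(
    left=Point(300, 260),
    right=Point(340, 262),
    top=Point(320, 230),
    bottom=Point(321, 290),
)
print(center)
```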

2.3 Calculate the Distance of Each Face Object based on the Reference Object

After the boundary and the center of each object are

identified, the distances and angles from the center

of reference object toward the remaining objects are

calculated as follows:

$$\text{Distance} = \frac{OC_y - O_y}{\mathrm{TAN}\!\left(\dfrac{OC_y - O_y}{OC_x - O_x}\right)^{-1}} \qquad (4)$$

Where OCx and OCy are the center coordinate

(x, y) of the reference object, Ox and Oy are the

center coordinate (x, y) of the correlated object.

Figure 2: Distance of each object based on the reference

object.
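As a rough illustration of a center-to-center distance in pixels, the sketch below uses the straight-line (Euclidean) distance between the two centers. This is a stand-in chosen because it is unambiguous; it is an assumption and not a transcription of formula (4). The function name and coordinates are hypothetical.

```python
import math


def center_distance(ref_center: tuple[float, float],
                    obj_center: tuple[float, float]) -> float:
    """Euclidean pixel distance between the reference and object centers.

    Assumption: a plain straight-line distance, used here only as an
    illustration of the 'distance' attribute of an object correlation.
    """
    dx = ref_center[0] - obj_center[0]
    dy = ref_center[1] - obj_center[1]
    return math.hypot(dx, dy)


# Hypothetical nose center and eye center, in pixels.
print(center_distance((320, 260), (260, 180)))
```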

2.4 Calculate the Angle of Each Face Object based on the Reference Object

In the angle calculation, the direction of each object except the Nose is measured, in degrees, from its center coordinate (x, y) to the center coordinate (x, y) of the reference object (Nose). The model considers the widest angular range of each object toward the reference object, which depends on where that object lies relative to the reference object, as shown in Figure 3.

ICINCO 2009 - 6th International Conference on Informatics in Control, Automation and Robotics


Figure 3: Distance of each object based on the reference

object.

An object in a different location relative to the reference object uses different (x, y) coordinate positions to calculate its minimum and maximum degrees toward the reference object.

The widest positions of each object toward the reference object are then used to calculate the maximum and minimum degrees, as shown in Figure 4, as follows:

Figure 4: Maximum and Minimum degrees of each object

toward the reference object.

Min = ATAN((OCy – X1y) / (OCx – X1x)) (5)

Max = ATAN((OCy – X2y) / (OCx – X2x)) (6)

Where X1x, X1y, X2x, and X2y are the widest

positions – coordinate (x, y) – of the correlated

object.
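Formulas (5) and (6) can be sketched as below. The sketch follows the printed ATAN of the ratio of coordinate differences and converts the result to degrees, since the paper measures direction in degrees. Sorting the two angles into minimum and maximum, raising an error for a zero horizontal difference, and all names and coordinates are assumptions made for illustration.

```python
import math

Coord = tuple[float, float]


def angle_to_reference(ref_center: Coord, point: Coord) -> float:
    """ATAN((OCy - Py) / (OCx - Px)) in degrees, as in formulas (5)/(6)."""
    dx = ref_center[0] - point[0]
    dy = ref_center[1] - point[1]
    if dx == 0:
        # The printed formula divides by dx, so it is undefined here.
        raise ValueError("point is vertically aligned with the reference center")
    return math.degrees(math.atan(dy / dx))


def min_max_degrees(ref_center: Coord, widest_a: Coord, widest_b: Coord) -> tuple[float, float]:
    """Minimum and maximum degrees spanned by the two widest positions."""
    a = angle_to_reference(ref_center, widest_a)
    b = angle_to_reference(ref_center, widest_b)
    return (min(a, b), max(a, b))


# Hypothetical reference center and widest positions of one object.
low_deg, high_deg = min_max_degrees((100, 100), (50, 50), (50, 0))
print(low_deg, high_deg)
```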

3 EXPERIMENTS

The experiments tested the stability of the similarity percentage on over 100 samples of front face images (640×480 resolution), considering the object correlations one by one (Right Eyebrow, Left Eyebrow, Right Eye, Left Eye, Right Ear, Left Ear, and Mouth) toward the reference object (Nose). For each object, the similarity percentage was computed between the object and a copy of the same size simulated at a different angle (±1 to ±10 degrees) from its original position. The aim was to show that the similarity percentage from the proposed method is more stable than that of the old methods: “A Model for Similarity Searching in 2D Face Image Data” (P. Porntrakoon, 1999), “A model for Self-Similar Searching in Face Image Data Processing” (V. Srisarkun, 2001), “Self-Similar Searching in Image Database for crime Investigation” (V. Srisarkun, 2001), “A model for Self-Similar Search in Image Database with Scar” (V. Srisarkun, 2002), and “Face Recognition Using a Similarity-based Distance Measure for Image Database” (V. Srisarkun, 2002).

The processes used to perform the experiments are as follows.

3.1 Resize the Position and Proportion of the Face Objects

To avoid differences in object size caused by the distance at which the images were captured, the positions and proportions of the objects are rescaled so that the reference object (Nose) has the same width in every captured image. The top, bottom, leftmost, rightmost, and center (x, y) coordinates of each object are then recalculated from the new proportion of the reference object as follows:

NOx = (iw + (100 / ((NLx – NRx) / iw * 100) * (dw – (NLx – NRx)))) / iw * Ox (7)

NOy = (iw + (100 / ((NLx – NRx) / iw * 100) * (dw – (NLx – NRx)))) / iw * Oy (8)

Where NOx is the new x coordinate after resizing, NOy is the new y coordinate after resizing, NLx is the leftmost and NRx is the rightmost x coordinate of the reference object (Nose), iw is the original image width in pixels, and dw is the default width value in pixels for resizing.
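The resizing step can be sketched as follows. Writing w = NLx – NRx, the factor in formulas (7) and (8) is (iw + (iw / w) * (dw – w)) / iw, which algebraically reduces to dw / w, so every coordinate is scaled by the ratio of the default width to the measured nose width. The function names and sample values are hypothetical, and the sketch assumes NLx – NRx is non-zero.

```python
def scale_literal(iw: float, nlx: float, nrx: float, dw: float) -> float:
    """Scale factor written term by term as in formulas (7) and (8)."""
    nose_width = nlx - nrx
    return (iw + (100 / (nose_width / iw * 100) * (dw - nose_width))) / iw


def scale_simplified(nlx: float, nrx: float, dw: float) -> float:
    """Algebraically equivalent factor: default width over nose width."""
    return dw / (nlx - nrx)


def resize_point(x: float, y: float, iw: float, nlx: float, nrx: float, dw: float) -> tuple[float, float]:
    """Apply the same factor to both coordinates of a point."""
    factor = scale_literal(iw, nlx, nrx, dw)
    return (factor * x, factor * y)


# Hypothetical values: 640-pixel-wide image, nose 40 pixels wide, default width 50.
print(scale_literal(640, 340, 300, 50), scale_simplified(340, 300, 50))
print(resize_point(200, 120, 640, 340, 300, 50))
```

Because the factor does not depend on iw after simplification, the image width only matters in the printed form of the formula.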


3.2 Calculate the Similarity Percentage of Angle of Each Object between Faces

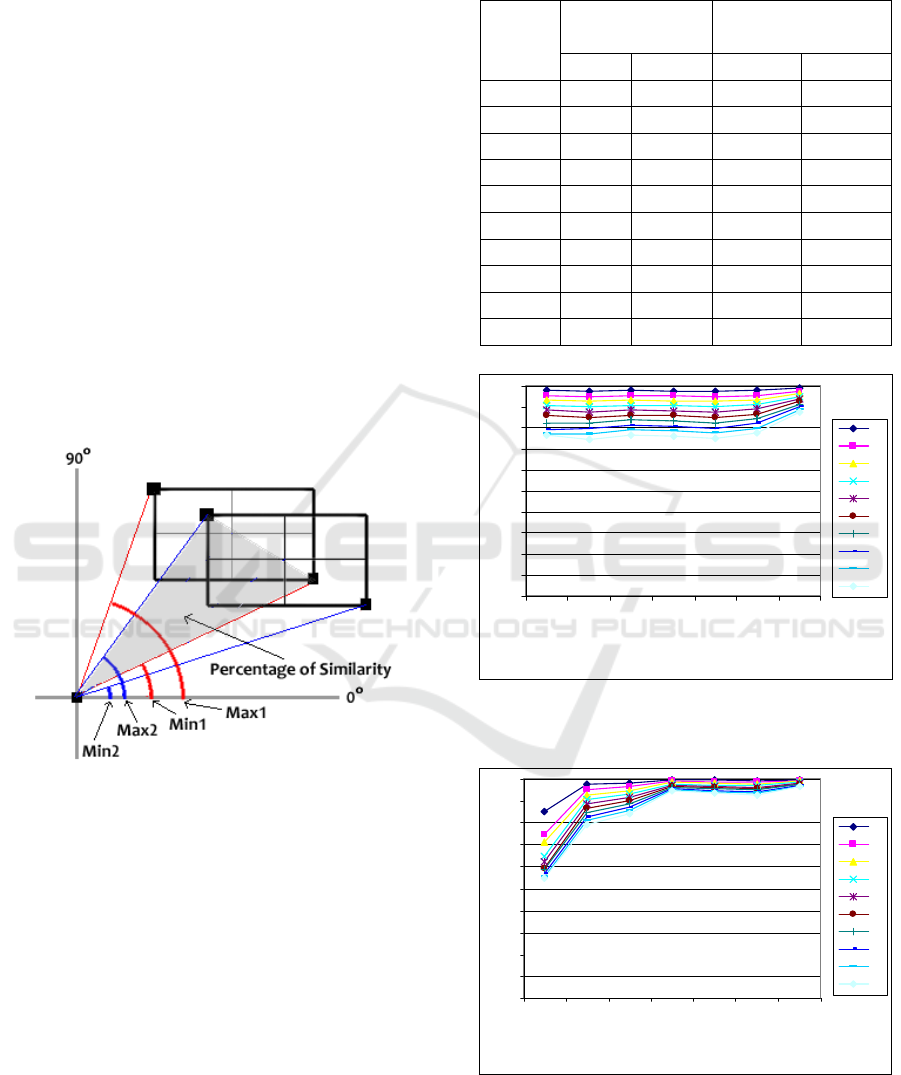

For each face image, the maximum and minimum degrees of each correlated object toward the reference object are known. The model takes the minimum and maximum degrees of the same object correlation in two different face images and calculates the similarity percentage, as shown in Figure 5, as follows:

Percent of similarity = ((Min1, Max1) ∩ (Min2, Max2)) * 2 / ((Max1 – Min1) + (Max2 – Min2)) * 100 (9)

Where Min1, Max1, Min2, and Max2 are the

Minimum and Maximum degrees of the same

correlated object toward the reference object in

different face images.

Figure 5: Percentage of Similarity.
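Formula (9) can be sketched as an interval-overlap computation. The sketch reads the intersection term as the length, in degrees, of the overlap between the two angular ranges, which is an interpretation of the notation. The function name, the error handling, and the 20-degree-wide sample range are hypothetical.

```python
def percent_of_similarity(min1: float, max1: float, min2: float, max2: float) -> float:
    """Overlap of two angular ranges relative to their mean width, in percent."""
    # Length of the intersection of [min1, max1] and [min2, max2]; 0 if disjoint.
    overlap = max(0.0, min(max1, max2) - max(min1, min2))
    total_width = (max1 - min1) + (max2 - min2)
    if total_width <= 0:
        raise ValueError("angular ranges must have positive total width")
    return overlap * 2 / total_width * 100


# A hypothetical 20-degree-wide object compared with shifted copies of itself,
# mirroring the simulated 1 to 10 degree shifts of the experiment.
for shift in (1, 2, 5, 10):
    print(shift, percent_of_similarity(40, 60, 40 + shift, 60 + shift))
```

For two ranges of equal width w shifted by d degrees (d < w), this reduces to (w – d) / w * 100, so the result depends on the object's angular width and the shift but not on where the object lies on the face.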

4 EXPERIMENT RESULTS

From the experiments, we summarized the average percentage of angle similarity among the object correlations on the face, together with the standard deviations, to compare the proposed method with the old one. The results are shown in Table 1, Figure 6, and Figure 7.

Table 1: Average percentage of angle similarity and standard deviation of the proposed method and the old method (P. Porntrakoon, 1999; V. Srisarkun, 2001 & 2002).

| Degree difference | Average similarity (%), proposed | Average similarity (%), old | Average STD, proposed | Average STD, old |
|---|---|---|---|---|
| 1 | 97.7895 | 96.8704 | 0.43391 | 5.32916 |
| 2 | 95.5776 | 94.4426 | 0.86811 | 8.91565 |
| 3 | 93.3558 | 93.0225 | 1.30500 | 9.97539 |
| 4 | 91.1348 | 91.2665 | 1.74134 | 11.9929 |
| 5 | 88.9097 | 90.0068 | 2.17840 | 12.8165 |
| 6 | 86.6606 | 88.7411 | 2.62243 | 13.7332 |
| 7 | 84.2427 | 87.8864 | 3.15865 | 13.6906 |
| 8 | 81.9148 | 86.8308 | 3.65496 | 14.2223 |
| 9 | 79.6666 | 85.8518 | 4.08442 | 14.6272 |
| 10 | 77.6822 | 85.0452 | 4.38132 | 14.6733 |

Figure 6: Average Similarity Percentage of the proposed method. (Chart: Percent of Similarity, 0 to 100, for Left ear, Left eye, Left eyebrow, Right ear, Right eye, Right eyebrow, and Mouth; one series per Degree Different, 1 to 10.)

Figure 7: Average Similarity Percentage of the Old method (P. Porntrakoon, 1999; V. Srisarkun, 2001 & 2002). (Chart: Percent of Similarity, 0 to 100, for Left ear, Left eye, Left eyebrow, Right ear, Right eye, Right eyebrow, and Mouth; one series per Degree Different, 1 to 10.)


We found that the proposed method provides a more stable angle similarity percentage among object correlations than the old method, as indicated by the standard deviation values in Table 1. The old method is unstable especially for the left-side object correlations, which deviate strongly from the other correlated objects. Moreover, the deviation of the old method grows as the actual difference in degrees increases, which further shows the instability of that method.

5 CONCLUSIONS

In this paper, we proposed a method for approximate searching by image content in an image database. Older methods such as 2D strings (S.-K. Change, 1987) give a binary answer and are slow and not scalable (S.-Y. Lee, 1992). In addition, image content representation methods based on strings have been shown to be ineffective in capturing image content and may yield inaccurate retrieval (Petrakis, 1997). Our method allows querying the image database by degree of similarity, and it takes into account the stability of the angle similarity percentage among object correlations. The older methods (P. Porntrakoon, 1999; V. Srisarkun, 2001 & 2002) gave unstable results.

The proposed method reduces the instability in the angle similarity percentage, which supports better subsequent decision making in similarity searching, and reduces the number of object correlations, which shortens the search time.

6 FUTURE WORK

We plan to continue this research by substituting the proposed model, which gives a more stable percentage of angle similarity among object correlations, into the full processing sequence of the old model (P. Porntrakoon, 1999; V. Srisarkun, 2001 & 2002), and by testing it on sample images of the same person taken at different times (approximately 2 to 20 weeks apart). We believe that front face photos of the same person taken at different times are not exactly the same. We will perform experiments to compare the overall similarity results of the future model and the old model.

ACKNOWLEDGEMENTS

We would like to thank Assumption University for funding this research.

REFERENCES

Petrakis, Euripides G.M., and Christos Faloutsos, 1997. In IEEE Trans. on Knowledge and Data Engineering, “Similarity Searching in Medical Image Databases”.

I. Kapouleas, 1990. In Proc. 10th Int’l Conf. Pattern Recognition, “Segmentation and Feature Extraction for Magnetic Resonance Brain Image Analysis”.

S. Dellepiane, G. Venturi, and G. Vernazza, 1992. In Pattern Recognition, “Model Generation and Model Matching of Real Images by Fuzzy Approach”.

A.V. Ramen, S. Sarkar, and K.L. Boyer, 1993. In CVGIP: Image Understanding, “Hypothesizing Structure in Edge-Focused Cerebral Magnetic Image Using Graph-Theoretic Cycle Enumeration”.

S.-K. Change, Q.-Y. Shi, and C.-W. Yan, 1987. In IEEE Trans. Pattern Analysis and Machine Intelligence, “Iconic Indexing by 2-D Strings”.

S.-Y. Lee and F.-J. Hsu, 1992. In Pattern Recognition, “Spatial Reasoning and Similarity Retrieval of Images Using 2D C-String Knowledge Representation”.

P. Porntrakoon and C. Jittawiriyanukoon, 1999. In IASTED International Conference on Applied Modelling and Simulation, “A model for Similarity Searching in 2D Face Image Data”.

V. Srisarkun and C. Jittawiriyanukoon, 2001. In the Sixth INFORMS Conference on Information System & Technology, “A model for Self-Similar Searching in Face Image Data Processing”.

V. Srisarkun, J. Cooper, and C. Jittawiriyanukoon, 2001. In the Twentieth IASTED International Conference on Applied Informatics, “Self-Similar Searching in Image Database for crime Investigation”.

V. Srisarkun, J. Cooper, and C. Jittawiriyanukoon, 2002. In ECIS International Conference on Applied Modelling and Simulation, “A model for Self-Similar Search in Image Database with Scar”.

V. Srisarkun, J. Cooper, and C. Jittawiriyanukoon, 2002. In the Proceeding of the VIPromCom 2002-4th EURASIP-IEEE Region 8 International Symposium on Video Processing and Multimedia Communications, “Face Recognition Using a Similarity-based Distance Measure for Image Database”.
