RefLink: An Interface that Enables People with Motion Impairments to Analyze Web Content and Dynamically Link to References

Smita Deshpande and Margrit Betke

Boston University, Department of Computer Science, 111 Cummington Street, Boston, MA 02215, U.S.A.

Abstract. In this paper, we present RefLink, an interface that allows users to analyze the content of a web page by dynamically linking to an online encyclopedia such as Wikipedia. Upon opening a webpage, RefLink instantly provides a list of terms extracted from the webpage and annotates each term with the number of its occurrences in the page. RefLink uses a text-to-speech interface to read the list of terms aloud. The user can select a term of interest and follow its link to the encyclopedia. RefLink thus helps users perform an informed and efficient contextual analysis. Initial user testing suggests that RefLink is a valuable web browsing tool, in particular for people with motion impairments, because it greatly simplifies the process of obtaining reference material and performing contextual analysis.

1 Introduction

Many people find it difficult to understand all the terms used in a webpage, for example, medical terminology. The traditional way of learning about a term in a page is to search for a definition of the term using a search engine, read about it, and understand it in the context of the web document. This can be a time-consuming process, especially for computer users who are physically impaired and unable to use the standard mouse and keyboard. Worldwide, millions of people are affected by disorders such as cerebral palsy, multiple sclerosis, and traumatic brain injuries and need assistive software that helps them interact with the outside world through the internet.

There has been extensive research in the domain of human-computer interaction for people with special needs (e.g., [1, 2, 3, 4, 5, 6, 7]). Existing tools include mouse replacement systems such as the CameraMouse [4, 7], web browsers that make the internet more accessible, on-screen keyboards [3], and games [5, 6, 7]. There has also been significant work on evaluating such input systems and devices [8, 9, 10].

In this paper, we introduce a new technique that automatically extracts terms of user interest from a webpage. The terms are grouped based on predefined categories and listed on the side of the webpage. The user can select a term and follow its link to an encyclopedia such as Wikipedia [11]. RefLink thus helps users navigate through the web document and recognize the important terms and their relevance to the context. In this way, users can perform an informed and efficient contextual analysis of the webpage. Our work relates to research in the field of entity extraction. Work in this field includes reducing cursor travel and the number of clicks needed to copy a term from one document to another [12], and interpreting web pages via a semantic web browser [13]. To the best of our knowledge, entity extraction from web pages has not addressed the needs of users with motion impairments. RefLink provides an opportunity for these users to navigate the web faster. RefLink allows the user to look up a definition of a term with one click, as opposed to selecting the text in the article, copying it to the clipboard, pasting it into a search box, and finally browsing the results.

Betke M. and Deshpande S. (2009).

RefLink: An Interface that Enables People with Motion Impairments to Analyze Web Content and Dynamically Link to References.

In Proceedings of the 9th International Workshop on Pattern Recognition in Information Systems, pages 28-37

DOI: 10.5220/0002180700280037

Copyright © SciTePress

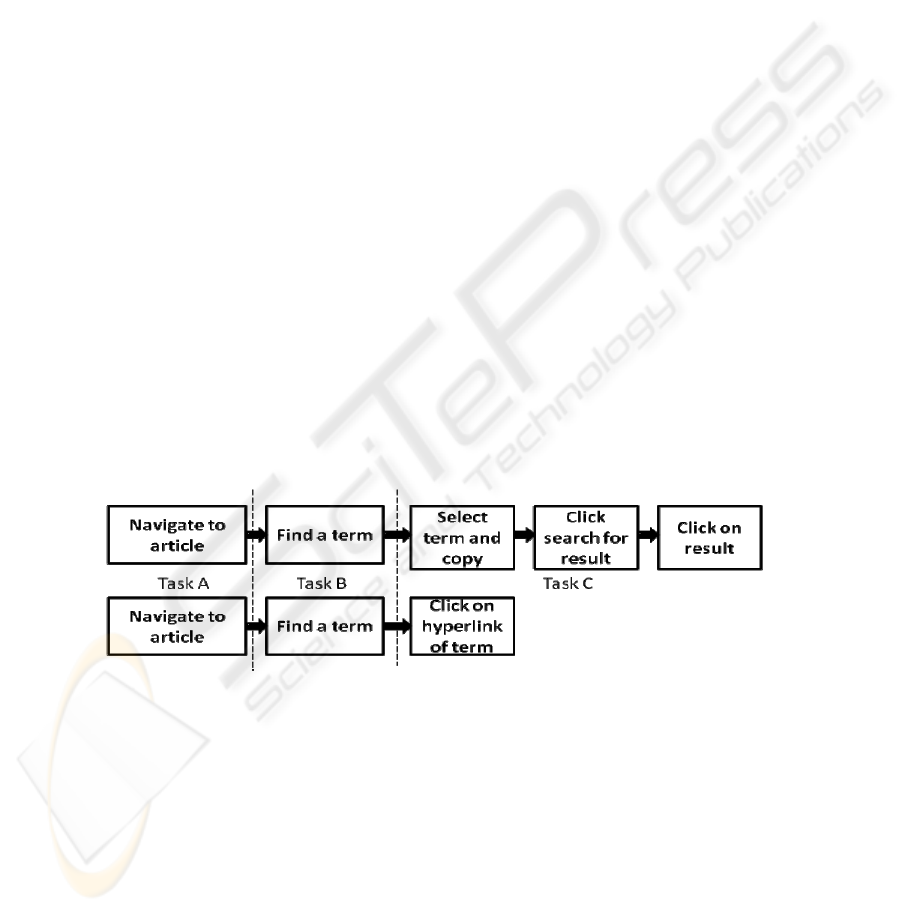

To demonstrate how a user would look up a term with and without our system, we define three tasks: navigating to an article (task A), finding a term of interest (task B), and looking up the term in an online encyclopedia (task C). Our system greatly simplifies task C, as seen in Fig. 1. We explain the difference with the example of an internet user reading an article about pericarditis in a medical manual. While reading the article, the user comes across the term Dressler’s Syndrome, with which she is unfamiliar. Without our system, she would have to follow the steps below to look up a definition of Dressler’s Syndrome:

1. Select the text Dressler’s Syndrome in the article, copy it to the clipboard, and paste it into the search bar of a search engine.

2. Click the search button to retrieve the results.

3. Click on the relevant result.

For a person with severe motion impairments, these steps are difficult to execute. With RefLink, the three steps collapse into a single step: clicking on the encyclopedia link to “Dressler Syndrome” that RefLink produces automatically.

Fig. 1. The subtasks involved in navigating without (upper) and with (lower) RefLink. The dotted line demarcates the different tasks.

Another important benefit of our system is that it helps users with the contextual analysis of web pages. A user can easily see and hear all the relevant topics in the page. If a lawyer is looking for one of the laws in an article about Crimes Against Government, she has to read through the entire article and execute the previously explained steps to obtain the description of the law. RefLink greatly simplifies the user’s process of obtaining reference material about the law and performing contextual analysis of the article. Our system may also provide a testbed for studies that evaluate tools aimed at improving readers’ comprehension of text, and of web pages in particular.

In this paper, we discuss the advantages of using RefLink for efficient navigation and search for a desired term. In Section 2, we describe the architecture of the system, including the requirements it must meet to function efficiently. In Section 3, we explain the features and functionality of the RefLink interface. The experimental setup is described in Section 4, and the results are presented in Section 5. We conclude by discussing the results and ideas for future work in the field of computer-vision interfaces and software for users with motion impairments.

2 System Architecture

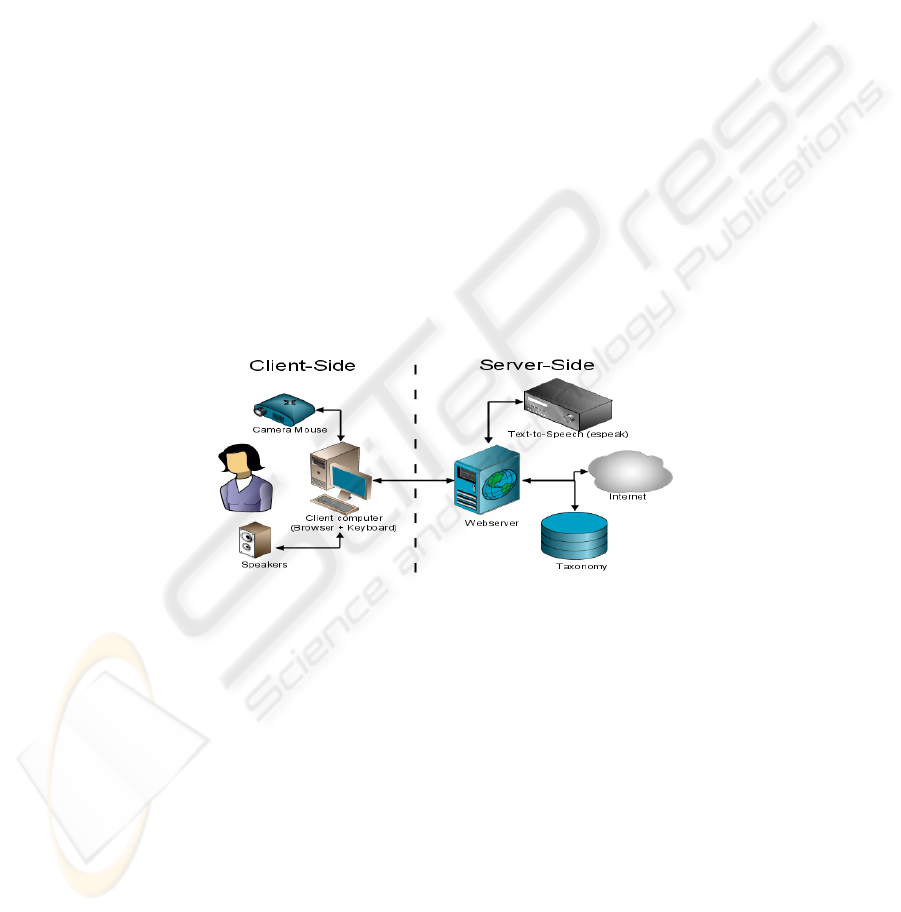

RefLink was implemented using PHP5 on an Apache 2.1 server. The minimum requirements of the system are 64 MB of allocatable RAM, an internet connection (preferably high-speed), a webcam, the eSpeak text-to-speech library [14], and the CameraMouse software [4] (see Fig. 2 for the arrangement of the system components). CameraMouse is a mouse replacement system for computers running the Windows operating system [4, 7]. It works best with application programs that use the mouse to position the pointer on targets and a left click as the selection command. CameraMouse is also easier to use with application programs that do not require extreme pointing accuracy (e.g., programs without tiny buttons).

Fig. 2. Overview of the RefLink system components and architecture.

As input to RefLink, the system administrator must initially specify a multilevel taxonomy of categories and the topics contained within them. We tested RefLink with a taxonomy of medical terminology; in particular, we used the categories “organs” and “disease.” The topics under the category “organs” are “chest,” “upper limbs and lower limbs,” “abdomen,” “head and neck,” etc. Some of the topics include subtopics: for example, topic “chest” has the subtopic “lung,” topic “abdomen” has the subtopics “kidney,” “colon,” etc., and topic “head and neck” has the subtopics “ears,” “skin,” etc.
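The taxonomy the administrator supplies can be pictured as a small nested structure. The Python sketch below is only an illustration, assuming a category, topic, subtopics layout: the name `TAXONOMY`, the helper `flatten_taxonomy`, and the dictionary format are hypothetical, since the paper does not say how RefLink stores its taxonomy. It contains only the entries named above (the text elides the rest with “etc.”), and the “disease” category is left empty because its topics are not listed.

```python
from typing import Dict, List, Tuple

# Hypothetical nested layout: category -> topic -> list of subtopics.
# Only the entries named in the text are included; the paper elides the
# rest with "etc.", and no topics are listed for the "disease" category.
TAXONOMY: Dict[str, Dict[str, List[str]]] = {
    "organs": {
        "chest": ["lung"],
        "upper limbs and lower limbs": [],
        "abdomen": ["kidney", "colon"],
        "head and neck": ["ears", "skin"],
    },
    "disease": {},
}


def flatten_taxonomy(taxonomy: Dict[str, Dict[str, List[str]]]) -> List[Tuple[str, str, str]]:
    """Return (term, category, parent_topic) triples; parent_topic is "" for top-level topics."""
    entries: List[Tuple[str, str, str]] = []
    for category, topics in taxonomy.items():
        for topic, subtopics in topics.items():
            entries.append((topic, category, ""))
            for sub in subtopics:
                entries.append((sub, category, topic))
    return entries


if __name__ == "__main__":
    for term, category, parent in flatten_taxonomy(TAXONOMY):
        print(f"{category:8} {parent or '-':15} {term}")
```

Flattening to (term, category, parent) triples gives a matcher a single list of terms to search for, while keeping enough context to group the results by category on the side of the page.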

Given the medical taxonomy described above and the URL of the page to process and display, our system executes the following procedure:

1. RefLink downloads the contents of the page.

2. It strips all hypertext markup, retaining only the textual content of the page.

3. By matching each word in the processed webpage against the terms in the taxonomy, RefLink identifies all taxonomy topics that occur in the processed webpage and counts their occurrences.

4. RefLink renders a version of the webpage that shows the original webpage on the left and a list of processed results on the right. When the user clicks on one of the hyperlinks in the list, RefLink redirects him or her to an online encyclopedia (see details in Section 3). In designing RefLink, we selected the Wikipedia encyclopedia [11]. Other online reference websites could be substituted.
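Steps 1 to 3 can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not RefLink’s PHP implementation: the function names are hypothetical, pages are assumed to be UTF-8, the contents of `<script>` and `<style>` elements are treated as non-textual, and matching is case-insensitive on whole words. Unlike the literal word-by-word matching described in step 3, it matches each taxonomy term as a phrase, so that multi-word topics such as “head and neck” can be counted.

```python
import re
import urllib.request
from collections import Counter
from html.parser import HTMLParser
from typing import Iterable, List


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def download(url: str) -> str:
    """Step 1: fetch the raw page (assumed UTF-8; undecodable bytes are replaced)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def strip_markup(html: str) -> str:
    """Step 2: drop all tags and keep only the text content."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def count_terms(text: str, terms: Iterable[str]) -> Counter:
    """Step 3: count case-insensitive whole-word occurrences of each taxonomy term."""
    counts: Counter = Counter()
    for term in terms:
        # \b anchors stop "ears" from matching inside "years"; \s+ lets a
        # multi-word term such as "head and neck" span line breaks.
        words = [re.escape(w) for w in term.split()]
        pattern = r"\b" + r"\s+".join(words) + r"\b"
        n = len(re.findall(pattern, text, flags=re.IGNORECASE))
        if n:
            counts[term] = n
    return counts
```

In use, `count_terms(strip_markup(download(url)), terms)` would produce the per-topic counts that step 4 displays, where `terms` is the list of topics and subtopics from the taxonomy. Terms that never occur are left out of the result, so only topics actually present in the page reach the side list.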

3 Description of the RefLink Interface

The RefLink interface consists of four elements: the original webpage on the left, the list of detected topics on the right, an address bar, and arrows. A screenshot of the interface simulating a web page is shown in Fig. 3. The list on the right contains the topics and subtopics that are defined in our taxonomy and that appear in the webpage (in the example in Fig. 3, head and neck, ears, chest, heart, abdomen, colon, kidney). The address bar at the top contains the original address of the webpage that is now simulated by our system. The arrows on the right scroll up and down through the list of topics. The number in brackets next to each topic reports how many times the topic is mentioned in the article. The text-to-speech module is located in the lower right corner under the list of topics. When the RefLink interface is rendered, the text-to-speech module reads aloud the categories or topics mentioned in the article. When the user selects the topic “heart” in the example in Fig. 3, RefLink displays the simulation of the encyclopedia web page for the word “heart,” as shown in Fig. 4.
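The right-hand column described above can be sketched as a function that turns (topic, count) pairs into links with the count in brackets, plus the string handed to the text-to-speech module. Everything here is an assumption for illustration: the function names are hypothetical, the links follow the standard English Wikipedia article URL pattern (spaces in a title become underscores), and `speak` simply calls the eSpeak command-line program [14] if it is installed, which may differ from how RefLink invokes its text-to-speech library.

```python
import shutil
import subprocess
from html import escape
from typing import List, Tuple
from urllib.parse import quote

# Assumed target site; any other online reference could be substituted here.
WIKI_BASE = "https://en.wikipedia.org/wiki/"


def wiki_url(term: str) -> str:
    """Map a topic to a Wikipedia article URL (spaces become underscores)."""
    title = term.strip().replace(" ", "_")
    title = title[:1].upper() + title[1:]  # article titles start with a capital letter
    return WIKI_BASE + quote(title)


def render_topic_list(counts: List[Tuple[str, int]]) -> str:
    """Build the side column: one link per topic, with its count in brackets."""
    items = [
        f'<li><a href="{escape(wiki_url(term))}">{escape(term)}</a> ({n})</li>'
        for term, n in counts
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


def spoken_summary(counts: List[Tuple[str, int]]) -> str:
    """Text to be read aloud: the detected topics, in list order."""
    return ", ".join(term for term, _ in counts)


def speak(text: str) -> None:
    """Read text aloud with the espeak CLI if it is on the PATH; otherwise do nothing."""
    if shutil.which("espeak"):
        subprocess.run(["espeak", text], check=False)
```

Keeping the URL construction in one place (`WIKI_BASE` and `wiki_url`) reflects the point made in Section 2 that other online reference websites could be substituted for Wikipedia.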

Fig. 3. A screenshot of the RefLink interface when used with the PubMed webpage [15].

To simulate the natural browser interface, RefLink can be run in full-screen mode using the function key F11. In browsers such as Internet Explorer or Firefox, this hides the native address bar, and RefLink’s simulated address bar visually replaces it. As a result, users can feel that they are operating within a standard web browser even though they are working within RefLink.

Fig. 4. Screenshot of Wikipedia page for “Heart” as simulated by RefLink.

4 Experimental Setup

We conducted two experiments with five subjects. Four subjects (average age 25; 3 males and 1 female) were physically able to control the standard mouse and were proficient in browsing and searching on the web. Subject 5 (age 60, male) had motion impairments due to a median ischemic stroke. He was not proficient in browsing and searching on the web.

Fig. 5. Subject 5 while performing the evaluation test with only his right hand. The subject has motion impairments of the left side of his body due to a median ischemic stroke.

In the first experiment, we asked each subject to navigate the web using the following two input methods:

1. Using a standard keyboard and mouse (see Fig. 1, upper)

2. Using RefLink and the CameraMouse in lieu of a standard mouse (see Fig. 1, lower)

Fig. 6. Experimental setup and testing phase of RefLink using CameraMouse. The small square shown in the CameraMouse interface indicates the selected feature to track, here the tip of the nose.

The subjects were asked to perform the following tasks for both methods:

A. Select either the PubMed Test Link [15] or the Lung Imaging Test Link [16] as the start page and navigate to it.

B. Find a term of interest on the page.

C. Navigate to the corresponding Wikipedia page containing the definition and description of the term.

We recorded the time taken for each subtask.

Without RefLink, people with motion impairments would have to use an on-screen keyboard to reach the Wikipedia page. We therefore designed a second experiment in which we asked each subject to control an on-screen keyboard with the CameraMouse. We measured the time it took them to type out the hypertext link to the Wikipedia page of the word of interest. Each subject performed each experiment three times, and we report the average of these three timing measurements.

5 Experimental Results

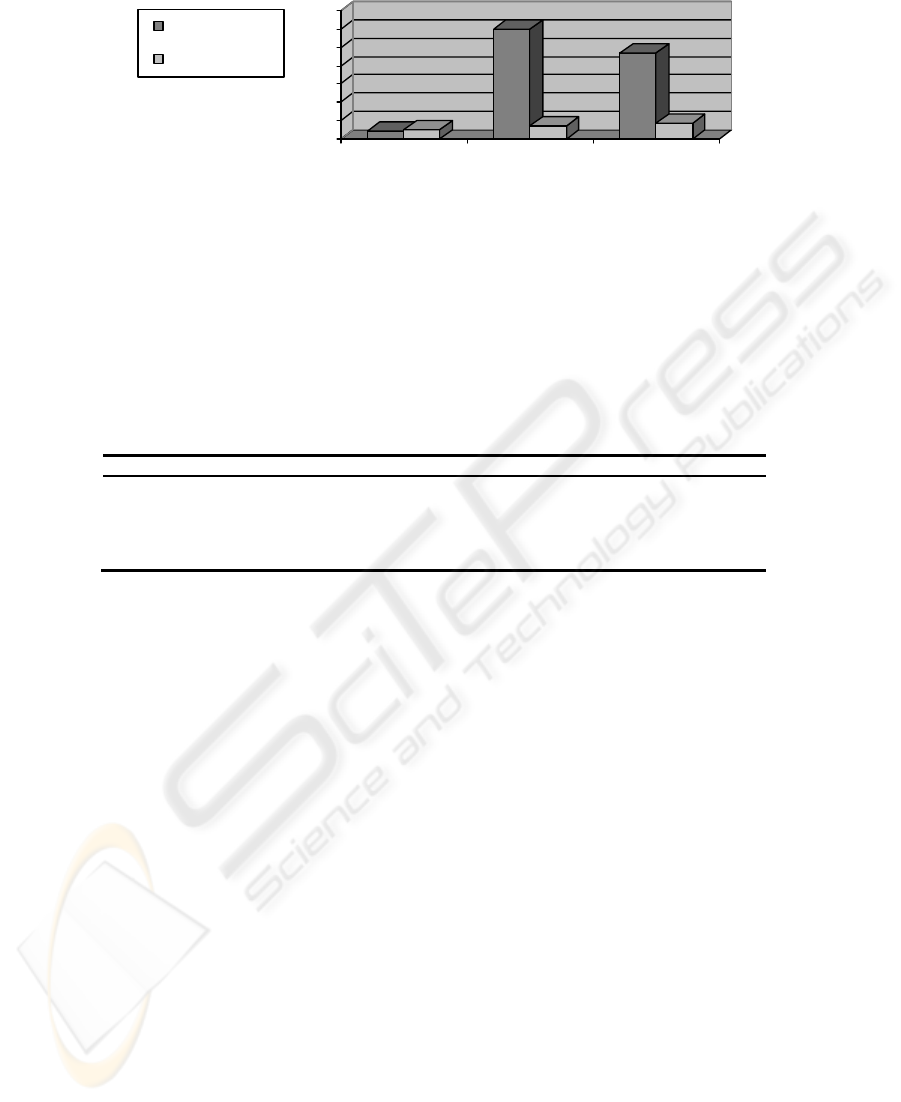

Navigating the web using RefLink as the interface tool was considerably faster than navigating the web with a standard keyboard and mouse (see Fig. 7). Users took roughly the same amount of time to navigate to the start page (task A) with both methods: 4 seconds without RefLink and 5 seconds with RefLink. However, to find a term of interest in the web page (task B), there was a notable difference of 53 seconds between the two methods. For the final task, navigating to the Wikipedia page of the term (task C), there was again a difference of 24 seconds.

[Fig. 7 plot: average time taken (seconds) for the subtasks navigating to page, finding term of interest, and navigating to term definition, without RefLink vs. with RefLink]

Fig. 7. Average times taken by 5 subjects to perform subtasks A-C with and without RefLink.

In the second experiment (see Table 1), we found that the use of the on-screen keyboard extends the browsing time considerably, which highlights the value of RefLink for people who need to rely on such an on-screen keyboard. It took 182 seconds to execute Subtask C without RefLink and 9 seconds using RefLink.

Table 1. Test results: average times for Subject 5 to perform the three evaluation tests using an on-screen keyboard with the CameraMouse in place of the standard keyboard and mouse. Each evaluation test was performed with and without RefLink.

| Part | Subtask | Without RefLink | With RefLink |
|---|---|---|---|
| A | Navigate to webpage | 4 s | 5 s |
| B | Find a term of interest | 61 s | 7 s |
| C | Type term into search bar | 162 s | - |
| | Click on Search | 13 s | - |
| | Click on Results | 7 s | 9 s |
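The Subtask C figures quoted above follow directly from the rows of Table 1, and the same arithmetic gives the task B difference for Subject 5. The symbols $t$ and $\Delta t$ are introduced here only for this worked sum.

```latex
\begin{aligned}
t_C^{\text{without}} &= 162\,\text{s} + 13\,\text{s} + 7\,\text{s} = 182\,\text{s},\\
t_C^{\text{with}}    &= 9\,\text{s},\\
\Delta t_C &= 182\,\text{s} - 9\,\text{s} = 173\,\text{s},\\
\Delta t_B &= 61\,\text{s} - 7\,\text{s} = 54\,\text{s}.
\end{aligned}
```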

6 Discussion

In our first experiment, the 54-second decrease in the time taken to perform task B with RefLink can be explained by the benefits of automatic contextual analysis. Without RefLink, users are required to read through the page to find a term of interest, which can be cognitively taxing and time-consuming. With RefLink, however, users were able to instantly see topics of interest in the side column without the overhead of perusing the article or scrolling. The one-second increase in time that we measured for task A when using RefLink can be attributed to the fact that RefLink downloads and processes a page before displaying it to the user. For task C, we observed a 173-second decrease in the time taken when using RefLink, because users were able to simply click on the term of interest in the side column of RefLink. Without RefLink, users were required to perform the three time-consuming subtasks of task C (see Fig. 1, upper).

In this paper, the categories and taxonomies we use pertain to the medical domain; however, our system is domain independent and can support any taxonomy that the system administrator chooses to specify. For example, a taxonomy with chemicals as categories could support browsing texts related to materials science, and a taxonomy that specifies the names of political leaders and organizations could help in browsing political science texts. The user may be interested in a term that is not included in the stored taxonomy, or a Wikipedia entry may not exist for the term (the latter is less likely because we relied on Wikipedia to create the taxonomy). When entity extraction fails in this manner, the user must rely on the original method to search for the term (cutting and pasting the term into a search engine, etc.). To reduce such instances, the system administrator must implement a taxonomy that addresses the needs of specific users. Our results showed that RefLink, with an appropriate taxonomy, enabled users to work efficiently, especially users with disabilities.

7 Conclusions and Future Work

Our system RefLink enables users to rapidly see terms of interest upon browsing to a page and to navigate directly to the description of each term. Our results show that, without our system, users found searching for and navigating to the term of interest time-consuming. When RefLink was used, the time taken to perform the same tasks decreased drastically. The time savings are even more pronounced when motion-impaired users must rely on an on-screen keyboard.

In the future, we plan to extend our system to include taxonomies for subject areas beyond the medical domain. We will also investigate automated approaches to extracting taxonomies, e.g., [17, 18, 19], which learn domain-specific patterns and subclasses in web documents, or which use web search engines and statistical analysis to discover representative terms and build a taxonomy of web resources. Further, we plan to improve users’ workflow efficiency by integrating RefLink with a speech recognition system. This would allow users who are able to speak to navigate and select links without the use of a mouse.

8 Acknowledgements

The authors thank the subjects for their efforts in testing RefLink. The paper is based upon work supported by the National Science Foundation under Grant IIS-0713229. Any opinions, findings, and conclusions or recommendations expressed in this paper are those of the authors and do not necessarily reflect the views of the National Science Foundation.

9 References

1. Assistive Technologies, http://www.assistivetechnologies.com, accessed January 2009.

2. J. J. Magee, M. Betke, J. Gips, M. R. Scott, B. N. Waber, “A human-computer interface using symmetry between eyes to detect gaze direction,” IEEE Transactions on Systems, Man, and Cybernetics, 38(6):1-14, November 2008.

3. R. Heimgärtner, A. Holzinger and R. Adams, “From Cultural to Individual Adaptive End-User Interfaces: Helping People with Special Needs,” Proceedings of the 11th International Conference on Computers Helping People with Special Needs, Lecture Notes in Computer Science, Volume 5105/2008, pages 82-89, Springer Berlin/Heidelberg.

4. CameraMouse: http://www.cameramouse.org, accessed January 2009.

5. M. Chau and M. Betke, “Real Time Eye Tracking and Blink Detection with USB Cameras,” Boston University Computer Science Technical Report 2005-012, May 2005.

6. J. Abascal and A. Civit, “Mobile Communication for People with Disabilities and Older People: New Opportunities for Autonomous Life,” Proceedings of the 6th ERCIM Workshop on ‘User Interfaces for All,’ Italy, October 2000.

7. M. Betke, J. Gips, and P. Fleming, “The camera mouse: Visual tracking of body features to provide computer access for people with severe disabilities,” IEEE Trans. Neural Systems and Rehabilitation Eng., 10(1):1-10, 2002.

8. W. Akram, L. Tiberii and M. Betke, “Designing and Evaluating Video-based Interfaces for Users with Motion Impairments.” In review.

9. R. W. Soukoreff and I. S. MacKenzie, “Towards a standard for pointing device evaluation, perspectives on 27 years of Fitts’ law research in HCI,” International Journal of Human-Computer Studies, 61(6):751-789, December 2004.

10. J. O. Wobbrock and K. Z. Gajos, “Goal crossing with mice and trackballs for people with motion impairments: Performance, submovements, and design directions,” ACM Transactions on Accessible Computing, 1(1):1-37, 2008.

11. Wikipedia: http://www.wikipedia.org/, accessed January 2009.

12. E. A. Bier, E. W. Ishak, and E. Chi, “Entity quick click: rapid text copying based on automatic entity extraction,” Proceedings of the Conference on Human Factors in Computing Systems 2006, pages 562-567, ACM.

13. M. Dzbor, J. Domingue and E. Motta, “Magpie - Towards a Semantic Web Browser,” Lecture Notes in Computer Science, Volume 2870/2003, pages 690-705, Springer Berlin/Heidelberg.

14. Text-to-speech Interface, http://espeak.sourceforge.net/, accessed January 2009.

15. PubMed Link: online library of medical science articles, http://www.pubmed.gov, accessed January 2009.

16. Lung Imaging Test Link: contains information about medical image analysis, http://www.cs.bu.edu/faculty/betke/research/lung.html

17. K. Punera, S. Rajan and J. Ghosh, “Automatically Learning Document Taxonomies for Hierarchical Classification,” Special Interest Tracks and Posters of the 14th International Conference on World Wide Web, pages 1010-1011, 2005.

18. O. Etzioni, M. Cafarella, D. Downey, A. Shaked, S. Soderland, D. Weld and A. Yates, “Unsupervised Named-Entity Extraction from the Web,” Artificial Intelligence, 165:91-134, June 2005.

19. D. Sánchez and A. Moreno, “Automatic Generation of Taxonomies from the WWW,” Practical Aspects of Knowledge Management, Proceedings of the 5th International Conference, pages 208-220, Vienna, Austria, December 2004.
