LARGE-SCALE DEXTEROUS HAPTIC INTERACTION WITH VIRTUAL MOCK-UPS

Methodology and Human Performance

Damien Chamaret, Paul Richard

LISA Laboratory, University of Angers, 62 avenue Notre Dame Du Lac, Angers, France

Sehat Ullah

IBISC Laboratory, University of Evry, 40 rue du Pelvoux, Evry, France

Keywords: Virtual Environment, Virtual Mock-up, Large-scale, Haptic Interaction, Human Performance.

Abstract: We present a methodology for both the efficient integration and dexterous manipulation of CAD models in a physics-based virtual reality simulation. The user interacts with a virtual car mock-up using a string-based haptic interface that provides force sensation in a large workspace. A prop is used to provide grasp feedback. A motion capture (mocap) system tracks the user’s hand and head movements, and a 5DT data glove measures finger flexion. Twelve volunteer participants were instructed to remove a lamp from the virtual mock-up under different conditions. Results revealed that haptic feedback outperformed additional visual feedback in terms of task completion time and number of collisions.

1 INTRODUCTION

Nowadays, car manufacturers use Computer Aided Design (CAD) to reduce costs and time-to-market and to increase the overall quality of products. In this context, physical mock-ups are replaced by virtual mock-ups for accessibility testing, assembly simulations, operation training and so on. In such simulations, sensory feedback must be provided in an intuitive and comprehensible way. It is therefore important to investigate how information presentation modalities affect human performance. This paper presents a methodology for both the efficient integration and dexterous manipulation of CAD models in a physics-based virtual reality simulation. The user interacts with a virtual car mock-up by using a string-based haptic interface that provides force sensation in a large workspace. An experimental study was carried out to validate the proposed methodology and to evaluate the effect of sensory feedback on operator performance. Twelve participants were instructed to remove a car lamp from a virtual mock-up. Three experimental conditions were tested, differing in the sensory feedback associated with collisions with the virtual mock-up: (1) no additional feedback (graphics only), (2) additional visual feedback (colour) and (3) haptic feedback. Section 2 describes the CAD-to-VR methodology. Section 3 presents the virtual environment (VE) that allows large-scale haptic interaction with the virtual car mock-up. Section 4 presents the experimental study and its results. The paper ends with a conclusion and some directions for future work.

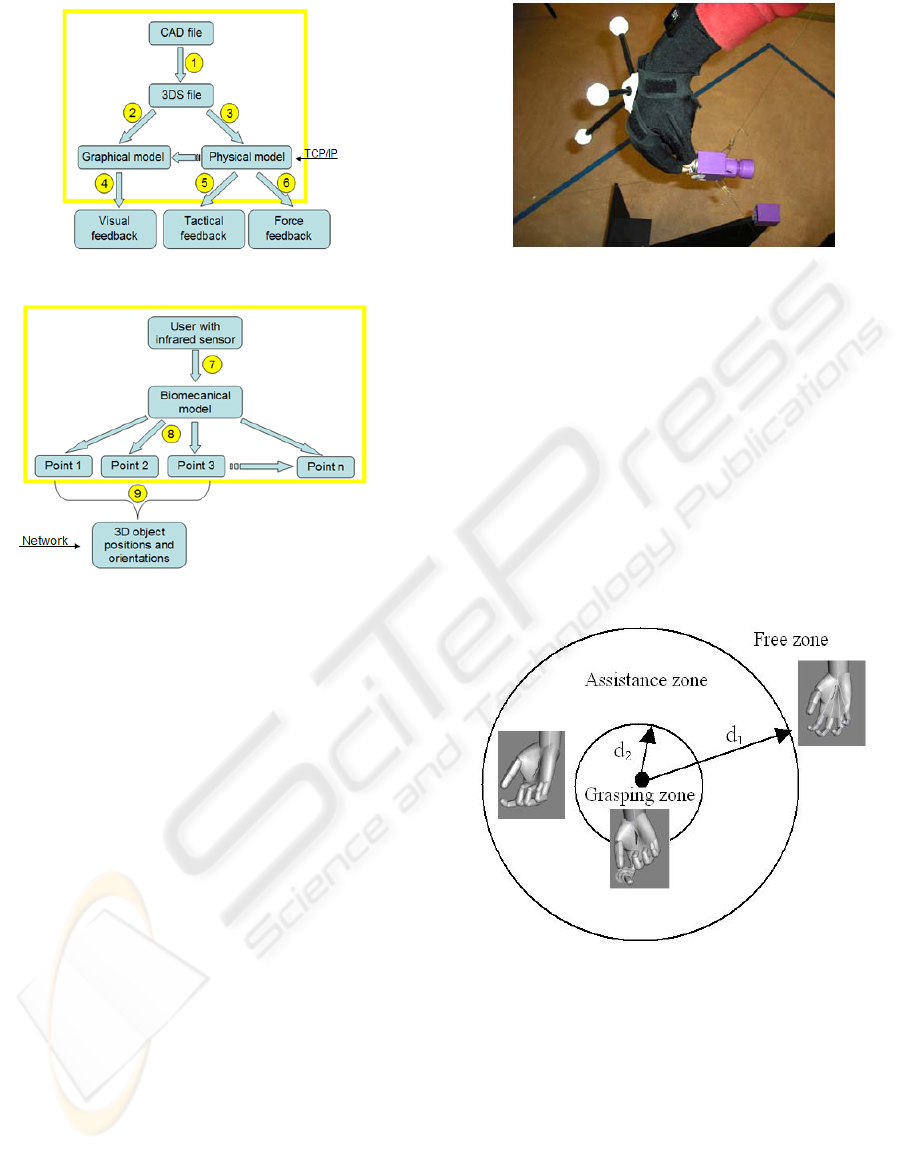

2 CAD-TO-VR METHODOLOGY

The proposed CAD-to-VR methodology involves several steps (illustrated in Figure 1a), including model simplification (1) and model integration (2-3). The graphical model is used for the visual display of the virtual mock-up (4), while the physical one is used for both tactile and kinaesthetic feedback (5-6). Model simplification reduces the number of polygons of the CAD models while keeping the same level of visual quality. Model integration produces both graphical and physical models from the CAD data. Physical models are built using the PhysX engine (www.nvidia.com).
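The paper does not say which simplification algorithm is used. As an illustration only, the sketch below shows vertex clustering, one common polygon-reduction technique swapped in here as a stand-in: vertices that fall in the same grid cell are merged, and triangles that collapse are dropped. The function name, the `cell_size` parameter and the data layout are assumptions, and this simple scheme does not by itself guarantee unchanged visual quality.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def simplify_by_vertex_clustering(
    vertices: List[Vertex], triangles: List[Triangle], cell_size: float
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge vertices sharing a grid cell (hypothetical helper, not the authors' method)."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    cell_to_index = {}  # grid cell -> index of the merged vertex
    sums = []           # per merged vertex: [sum_x, sum_y, sum_z, count]
    remap = []          # old vertex index -> merged vertex index

    for x, y, z in vertices:
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if cell not in cell_to_index:
            cell_to_index[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0])
        idx = cell_to_index[cell]
        acc = sums[idx]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        remap.append(idx)

    # Each merged vertex is placed at the mean of the vertices it replaces.
    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_triangles = []
    seen = set()
    for a, b, c in triangles:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue  # triangle collapsed to an edge or a point
        key = frozenset(tri)
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_triangles.append(tri)

    return new_vertices, new_triangles
```

A larger `cell_size` removes more polygons at the cost of geometric detail, so in practice the value would have to be tuned per model.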


Chamaret D., Richard P. and Ullah S. (2009).

LARGE-SCALE DEXTEROUS HAPTIC INTERACTION WITH VIRTUAL MOCK-UPS - Methodology and Human Performance .

In Proceedings of the 6th International Conference on Informatics in Control, Automation and Robotics - Robotics and Automation, pages 453-456

DOI: 10.5220/0002219404530456

Copyright © SciTePress

Figure 1: Schematic of the CAD-to-VR methodology (a) and human interaction using the mocap system (b).

3 VIRTUAL ENVIRONMENT

Our methodology also allows the integration of both the graphical and physical models of users (Figure 1b). A biomechanical model is used to animate the operator’s hand and arm (7). To track the user’s position and orientation accurately, an infrared camera-based motion capture system is used. Six reflective markers are placed on the operator’s body (8): three on the data glove to assess hand position and orientation (9), one on a cap worn by the operator for head tracking, and two on the operator’s arm.
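Three non-collinear markers are enough to define a position and an orientation. The sketch below shows one standard way to build an orthonormal hand frame from three marker positions. The axis conventions, the function names and the choice of the centroid as origin are assumptions made for illustration, not details given in the paper.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def _normalize(v: Vec3) -> Vec3:
    norm = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if norm < 1e-9:
        raise ValueError("markers are coincident or collinear")
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def hand_frame(m1: Vec3, m2: Vec3, m3: Vec3):
    """Return (origin, x_axis, y_axis, z_axis) from three glove markers.

    Hypothetical convention: x points from m1 to m2, z is normal to the
    marker plane, y completes the right-handed frame.
    """
    origin = tuple((m1[i] + m2[i] + m3[i]) / 3.0 for i in range(3))
    x_axis = _normalize(_sub(m2, m1))
    z_axis = _normalize(_cross(_sub(m2, m1), _sub(m3, m1)))
    y_axis = _cross(z_axis, x_axis)  # unit length, since z and x are orthonormal
    return origin, x_axis, y_axis, z_axis
```

The three axes can be stacked as the columns of a rotation matrix to drive the virtual hand.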

The large-scale VE provides force feedback using the SPIDAR system (Space Interface Device for Artificial Reality) (Ishii and Sato, 1994). Stereoscopic images are displayed on a large rear-projected screen (2 m x 2.5 m) and are viewed using polarized glasses. The SPIDAR system uses an SH4 controller from Cyverse (Japan). To provide force feedback to both hands, a total of 8 motors are placed on the corners of a cubic frame surrounding the user. To provide haptic grasping feedback to the operator, a prop (see Figure 2) was used (Chamaret et al., 2008).

Figure 2: The prop (real car lamp inside a plastic cap) used

for grasping feedback.
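In a string-based display, the force felt at the hand is the vector sum of the string tensions, each pulling towards its motor. The sketch below illustrates that principle only. The assumption of four strings per hand, the anchor coordinates and the function names are introduced here for illustration and are not taken from the SPIDAR controller.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def string_lengths(grip: Vec3, anchors: Sequence[Vec3]) -> List[float]:
    """Length of each string from the grip point to its motor anchor."""
    return [math.dist(grip, anchor) for anchor in anchors]


def net_force(grip: Vec3, anchors: Sequence[Vec3], tensions: Sequence[float]) -> Vec3:
    """Sum of tension vectors acting on the grip (strings can only pull)."""
    if len(anchors) != len(tensions):
        raise ValueError("one tension per anchor is required")
    fx = fy = fz = 0.0
    for anchor, tension in zip(anchors, tensions):
        if tension < 0:
            raise ValueError("string tension cannot be negative")
        length = math.dist(grip, anchor)
        if length == 0:
            raise ValueError("grip coincides with an anchor")
        # Unit vector from the grip towards the anchor, scaled by the tension.
        fx += tension * (anchor[0] - grip[0]) / length
        fy += tension * (anchor[1] - grip[1]) / length
        fz += tension * (anchor[2] - grip[2]) / length
    return (fx, fy, fz)


# Hypothetical 2 m cubic frame: four anchors on alternate corners.
ANCHORS = [(0.0, 0.0, 0.0), (2.0, 2.0, 0.0), (2.0, 0.0, 2.0), (0.0, 2.0, 2.0)]
```

With the grip at the centre of the frame and equal tensions, the contributions cancel. Raising the tension on one string produces a net pull towards that corner.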

A poor grasp of the prop or a bad calibration due to unexpected movements may cause inconsistencies between grasping feedback (prop) and simulated forces (SPIDAR). To avoid these problems, three zones were defined (Figure 3): (a) a free zone, where the user can freely move his/her hand (hand position/orientation and finger flexion) using a 5DT data glove; (b) an assistance zone (d1 = 10 cm from the virtual lamp), where the user can no longer change finger flexion; and (c) a grasping zone (d2 = 5 mm from the virtual lamp), where the grasping gesture is performed.

Figure 3: Illustration of the three zones used for the

grasping simulation and assistance.
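The zone logic can be sketched as a simple distance test. The thresholds come from the text (10 cm and 5 mm). The function names, the inclusive boundaries and the use of a single hand-to-lamp distance are assumptions made for illustration.

```python
ASSISTANCE_RADIUS_M = 0.10  # d1 = 10 cm, from the text
GRASPING_RADIUS_M = 0.005   # d2 = 5 mm, from the text


def classify_zone(distance_m: float) -> str:
    """Map the hand-to-lamp distance (metres) to 'free', 'assistance' or 'grasping'."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= GRASPING_RADIUS_M:
        return "grasping"
    if distance_m <= ASSISTANCE_RADIUS_M:
        return "assistance"
    return "free"


def glove_controls_fingers(zone: str) -> bool:
    """Finger flexion follows the data glove only in the free zone."""
    return zone == "free"
```

For example, a hand 50 cm from the lamp is in the free zone, 5 cm falls in the assistance zone, and 3 mm falls in the grasping zone.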

4 EXPERIMENTAL STUDY

The aim of this experiment is twofold: (1) to validate the proposed CAD-to-VR methodology, including the integration of the operator’s biomechanical model, and (2) to investigate the effect of haptic and visual feedback on operator performance in a task involving the extraction and replacement of a car lamp in a virtual car mock-up.

ICINCO 2009 - 6th International Conference on Informatics in Control, Automation and Robotics


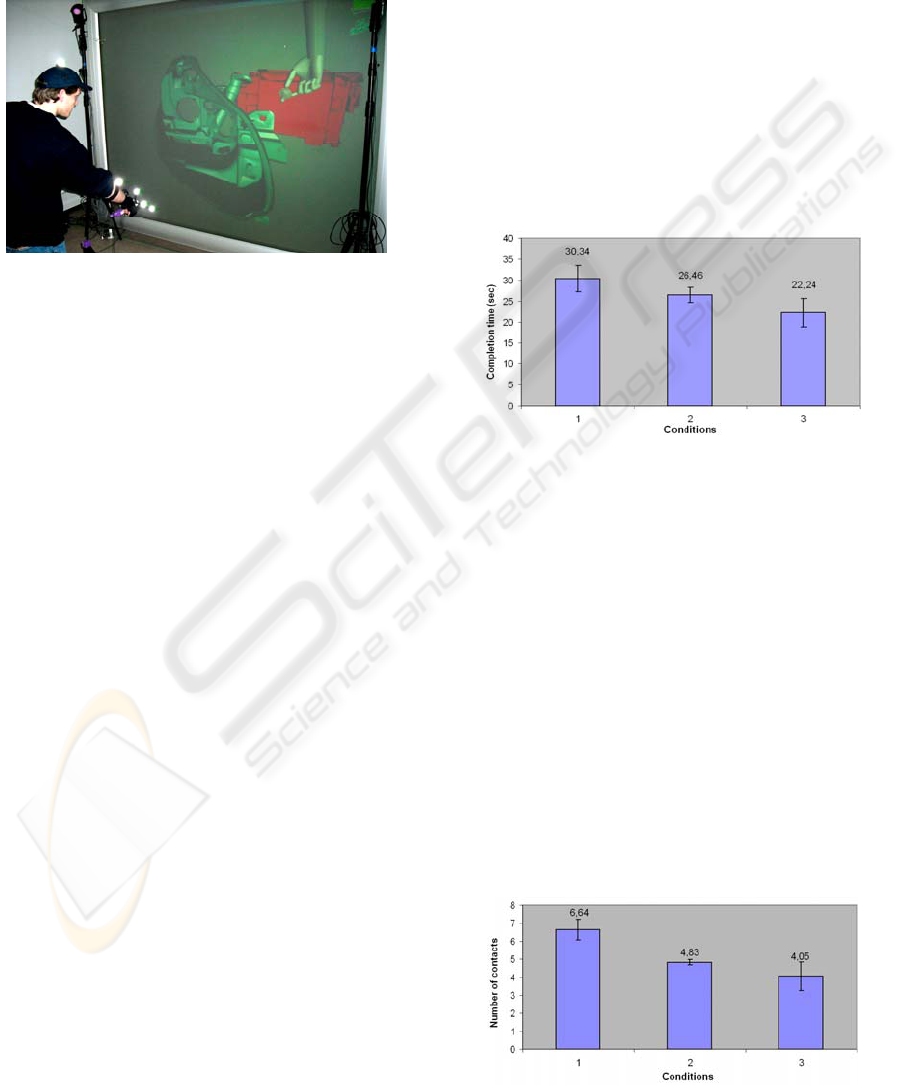

4.1 Experimental Set-up

The experimental set-up is illustrated in Figure 4. The user interacts with the virtual mock-up through the camera-based mocap system. Global force feedback is provided by the SPIDAR system. Local (grasp) feedback is provided by the prop.

Figure 4: Illustration of a user performing the task.

4.2 Procedure

Twelve volunteer students participated in the experiment. They were naive to virtual reality techniques. Each participant had to perform the maintenance task under the following conditions:

- C1: no additional feedback (only graphics);

- C2: additional visual feedback (colour);

- C3: haptic feedback (from SPIDAR).

The task was repeated three times for each condition. Conditions were presented in different orders to limit training transfer. Participants were positioned in front of a large rear-projected screen at a distance of approximately 1.5 m. They wore a 5DT data glove equipped with three reflective balls (Figure 2). To get acquainted with the system, each participant performed a pre-trial of the task in condition C1.
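The text does not specify how the condition orders were chosen. One common scheme is full counterbalancing, sketched below: with three conditions there are six possible orders, so twelve participants can cover each order exactly twice. The function name and the round-robin assignment are assumptions for illustration.

```python
from itertools import permutations
from typing import List, Tuple

CONDITIONS = ("C1", "C2", "C3")


def assign_orders(n_participants: int) -> List[Tuple[str, ...]]:
    """Assign each participant one of the 3! = 6 condition orders, round-robin."""
    orders = list(permutations(CONDITIONS))
    return [orders[i % len(orders)] for i in range(n_participants)]


if __name__ == "__main__":
    for participant, order in enumerate(assign_orders(12), start=1):
        print(f"P{participant:02d}: {' -> '.join(order)}")
```

Because every order appears equally often, each condition is performed first, second and third by the same number of participants.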

4.3 Data Collection

The following data were collected during the

experiment for each single trial:

- task completion time

- number of collisions

4.4 Results

Results were analysed using ANOVA. We examine the effect of sensory feedback on (a) task completion time and (b) number of collisions. We then look into the learning process associated with each type of sensory feedback.

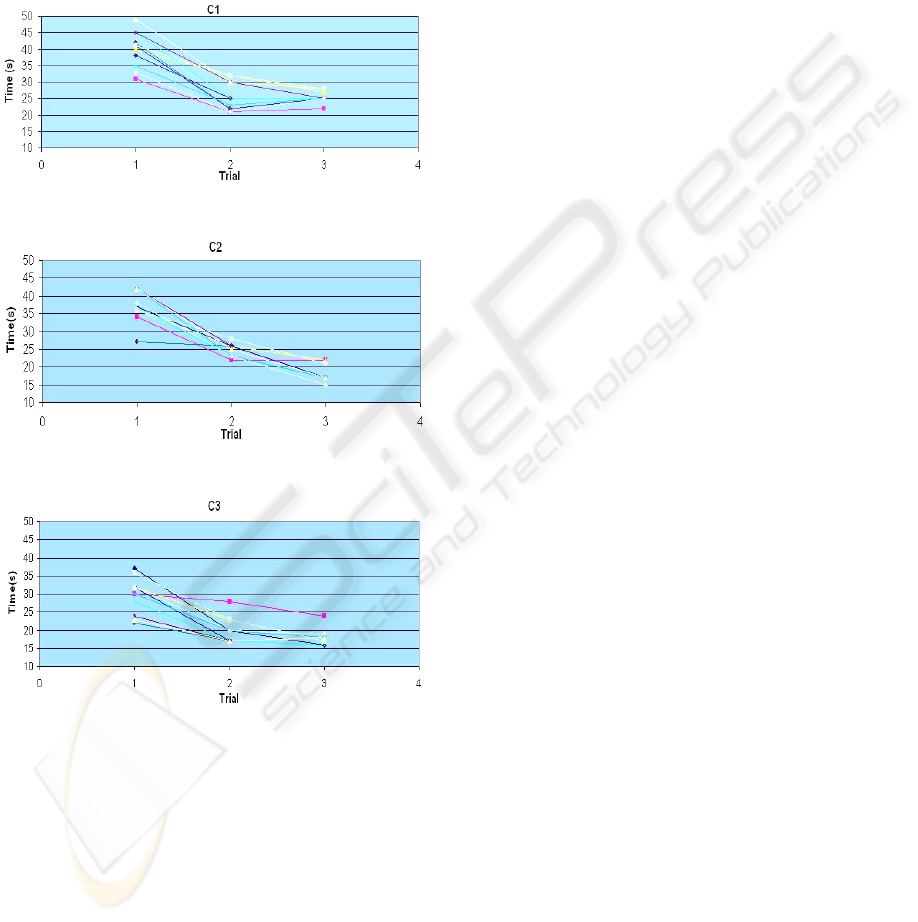

4.4.1 Task Completion Time

Results, illustrated in Figure 5, revealed that sensory feedback has a significant effect on task completion time (F(2,11) = 14.08; p < 0.005). A statistically significant difference between conditions C1, C2 and C3 was observed. In condition C1, the average completion time was 30.34 s (STD = 3.1). Average completion time was 26.45 s (STD = 1.8) for C2 (additional visual feedback) and 22.24 s (STD = 3.4) for C3 (haptic feedback). Thus, relative to the open-loop case (no additional feedback), visual and haptic feedback reduced the mean completion time by 12.8 % and 26.7 % respectively (computed from the means above). Haptic feedback improved performance by about 16 % as compared with additional visual feedback. However, participants’ performance was more disparate in condition C3.

Figure 5: Completion time versus conditions.

4.4.2 Number of Collisions

Results, illustrated in Figure 6, revealed that sensory feedback has a significant effect on the number of collisions (F(2,11) = 63.70; p < 0.005). As before, a statistically significant difference between conditions C1, C2 and C3 was observed. In C1, the average number of collisions was 6.64 (STD = 0.58). The average number of collisions was 4.83 (STD = 0.15) for C2 and 4.05 (STD = 0.8) for C3. Thus visual and haptic feedback significantly reduced the number of collisions as compared with the open-loop case, by 27.3 % and 39.0 % respectively. Haptic feedback improved performance by 16.2 % as compared with additional visual feedback. As for task completion time, participants’ performance was more disparate in condition C3.

Figure 6: Number of collisions versus conditions.
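The percentage gains can be recomputed from the reported means. The sketch below assumes the gain is defined as the reduction relative to a baseline mean. That definition and the function name are assumptions, and small differences from the quoted percentages can arise because the published means are rounded.

```python
def relative_reduction(baseline: float, value: float) -> float:
    """Percentage reduction of `value` relative to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - value) / baseline * 100.0


# Mean number of collisions per condition, as reported in the text.
MEAN_COLLISIONS = {"C1": 6.64, "C2": 4.83, "C3": 4.05}

if __name__ == "__main__":
    c1, c2, c3 = (MEAN_COLLISIONS[k] for k in ("C1", "C2", "C3"))
    print(f"C2 vs C1: {relative_reduction(c1, c2):.1f} %")
    print(f"C3 vs C1: {relative_reduction(c1, c3):.1f} %")
    print(f"C3 vs C2: {relative_reduction(c2, c3):.1f} %")
```

The same helper applies to the completion times by substituting the corresponding means.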


4.4.3 Learning Process

The learning process is defined here as the improvement in participant performance associated with task repetitions. We analysed the learning process associated with both task completion time and number of collisions. Although each participant repeated the task only three times, a learning process was observed for all conditions (Figures 7, 8 and 9).

Figure 7: Learning process associated with condition 1.

Figure 8: Learning process associated with condition 2.

Figure 9: Learning process associated with condition 3.

Average task completion time was 40.2 s at the first trial and 25.4 s at the last trial for condition C1, 36.7 s at the first trial and 18.1 s at the last trial for condition C2, and 29.2 s at the first trial and 17.9 s at the last trial for condition C3. Computed from these times, this corresponds to a performance improvement of about 37 %, 51 % and 39 % for conditions C1, C2 and C3 respectively.
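As a worked instance, assuming the improvement is defined as the relative reduction in mean completion time between the first and last trials (the symbols t_first and t_last are introduced here for illustration), condition C1 gives:

```latex
\[
\text{improvement}
  = \frac{t_{\text{first}} - t_{\text{last}}}{t_{\text{first}}} \times 100\,\%
\qquad\Rightarrow\qquad
\frac{40.2 - 25.4}{40.2} \times 100\,\% \approx 36.8\,\%
\]
```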

Concerning the number of collisions, we observed a poor learning process for each condition. This result is not very surprising for condition C1, since no feedback was displayed for collisions. In condition C3, participants already performed well at the first trial, which suggests that the haptic interface is user-friendly and efficient. The poor learning process associated with condition C2 may be explained by the lack of spatial information, which force feedback does provide (sensation of force direction during collision).

5 CONCLUSIONS

This paper presented a methodology for both the integration and dexterous manipulation of CAD models, together with a biomechanical model of the operator, in a physics-based virtual reality simulation. The user interacts with a virtual car mock-up using a string-based haptic interface that provides force sensation in a large workspace. Twelve participants were instructed to remove a lamp from the virtual mock-up under different conditions. Results revealed that haptic feedback was more effective than additional visual feedback in reducing both task completion time and the number of collisions. In the near future, we plan to integrate haptic guides to help users reach and grasp the car lamps more efficiently.

REFERENCES

Ishii, M., Sato, M., 1994. A 3D Spatial Interface Device Using Tensed Strings. Presence, 3(1).

Chamaret, D., Richard, P., Ferrier, J.L., 2008. From CAD Model to Human-Scale Multimodal Interaction with Virtual Mockup: An Automotive Application. 5th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2008), Madeira, Portugal.
