A NOVEL REGION BASED IMAGE FUSION METHOD USING DWT AND REGION CONSISTENCY RULE

Tanish Zaveri

Electronics & Communication Engineering Dept., Institute of Technology, Nirma University, Ahmedabad, India

Mukesh Zaveri

Department of Computer Engineering, Sardar Vallabhbhai National Institute of Technology, Surat, India

Keywords: Normalized cut, Discrete wavelet transform, Region consistency.

Abstract: This paper proposes a novel region based image fusion scheme using the discrete wavelet transform and a region consistency rule. In the recent literature, region based image fusion methods have shown better performance than pixel based image fusion methods. The graph based normalized cut algorithm is used for image segmentation, and the region consistency rule is used to select regions from the discrete wavelet transform decomposed source images. A new mean max and standard deviation (MMS) fusion rule is also proposed to fuse multimodality images. The proposed method is applied to a large number of registered multifocus and multimodality images of various categories, and the results are compared using standard reference based and non-reference based image fusion parameters. The simulation results show that the proposed algorithm is consistent and preserves more information than earlier reported pixel based and region based methods.

1 INTRODUCTION

In recent years, image fusion has emerged as an important research area because of its wide application in image analysis tasks such as target recognition, remote sensing, wireless sensor networks and medical image processing. We use the term image fusion to denote a process by which multiple images, or information from multiple images, is combined. These images may be obtained from different types of sensors. There has been growing interest in the use of multiple sensors to increase the capabilities of intelligent machines and systems, and computer systems have been developed that are capable of extracting meaningful information from data recorded by different sources. In other words, image fusion is a process of combining multiple input images of the same scene into a single fused image that preserves the full content information and retains the important features of each of the original images. The integration of data recorded from a multisensor system, together with knowledge, is known as data fusion (Devid, 2001).

Because of the limited depth of field of a digital camera, one part of the scene is well focused while the other parts are out of focus. To obtain all the detailed information, including that in the out-of-focus areas, image fusion is used: it combines the relevant information from two or more source images into a single image that contains most of the information from all the source images. Most advanced sensors developed in recent years are capable of producing an image; examples include optical cameras, millimetre wave (MMW) cameras, infrared (IR) cameras, X-ray cameras and radar. In this paper, IR and MMW camera images are among the images used to evaluate the proposed method.

Image fusion methods (Piella, 2003) are mainly classified into two categories: (i) pixel based and (ii) region based. Pixel based methods generally operate directly on pixel-level information; in the simplest pixel based method, the source images are averaged pixel by pixel. However, this leads to undesired side effects, such as reduced contrast, in the resultant image. Various techniques for pixel-level image fusion are available in the literature (Zhong, 1999) (Anna, 2006). Region based algorithms have many advantages over pixel based algorithms: they are less sensitive to noise, give better contrast and are less affected by misregistration, at the cost of higher complexity (Piella, 2003). Researchers have recognized that it is more meaningful to combine objects or regions rather than pixels. Piella (Piella, 2002) has proposed a multiresolution region based fusion scheme using a link pyramid approach. Recently, Li and Yang (Shutao, 2008) have proposed a region based multifocus image fusion method that uses spatial frequency as the fusion rule.

Zaveri T. and Zaveri M. (2009). A NOVEL REGION BASED IMAGE FUSION METHOD USING DWT AND REGION CONSISTENCY RULE. In Proceedings of the International Joint Conference on Computational Intelligence, pages 339-346. DOI: 10.5220/0002283203390346. Copyright © SciTePress.

Zhang and Blum (Zhong, 1999) proposed a categorization of multiscale decomposition based image fusion schemes for multifocus images. According to the literature, a large part of the research on multiresolution (MR) image fusion has focused on choosing an appropriate representation that facilitates the selection and combination of salient features. The issues to be addressed are the choice of MR decomposition method (pyramid, wavelet, morphological, etc.) and the number of decomposition levels. More decomposition levels do not necessarily produce better results (Zhong, 1999): as the analysis depth increases, neighbouring features of the lower band may overlap. This gives rise to discontinuities in the composite representation and thus introduces distortions, such as blocking effects or ringing artifacts, into the fused image. A first-level discrete wavelet transform (DWT) decomposition is therefore used in the proposed algorithm to mitigate this drawback of multiscale transforms. Moreover, a region is a more meaningful structure in a multifocus image, and region based fusion has many advantages over pixel based fusion, so the proposed algorithm uses a region based approach. The core of our algorithm is image segmentation, for which the normalized cut method (Shi, 2000) is used. The new region consistency rule and the mean max and standard deviation (MMS) fusion rule are proposed to improve the quality of the fused image.

The paper is organized as follows. The proposed algorithm is described in Section 2. The reference based and non-reference based image fusion evaluation parameters are introduced in Section 3. The simulation results and assessment are described in Section 4, followed by the conclusions in Section 5.

2 PROPOSED ALGORITHM

In this section, the framework of the proposed region based image fusion method using the DWT and the region consistency rule is introduced first. The discrete wavelet transform divides the source image into sub-images, as explained in detail in (Mallat, 1989); the sub-images arise from separable applications of vertical and horizontal filters. The first-level decomposition yields four images: the LL1 sub-band image, corresponding to the coarse-level approximation image, and the LH1, HL1 and HH1 sub-band images, corresponding to the finest-scale wavelet coefficient detail images. Most DWT based image fusion methods apply a max or average fusion rule to the DWT-decomposed approximation and detail images to generate the final fused image. Such a rule can produce significant distortion in the final fused image, as illustrated in Fig. 1.

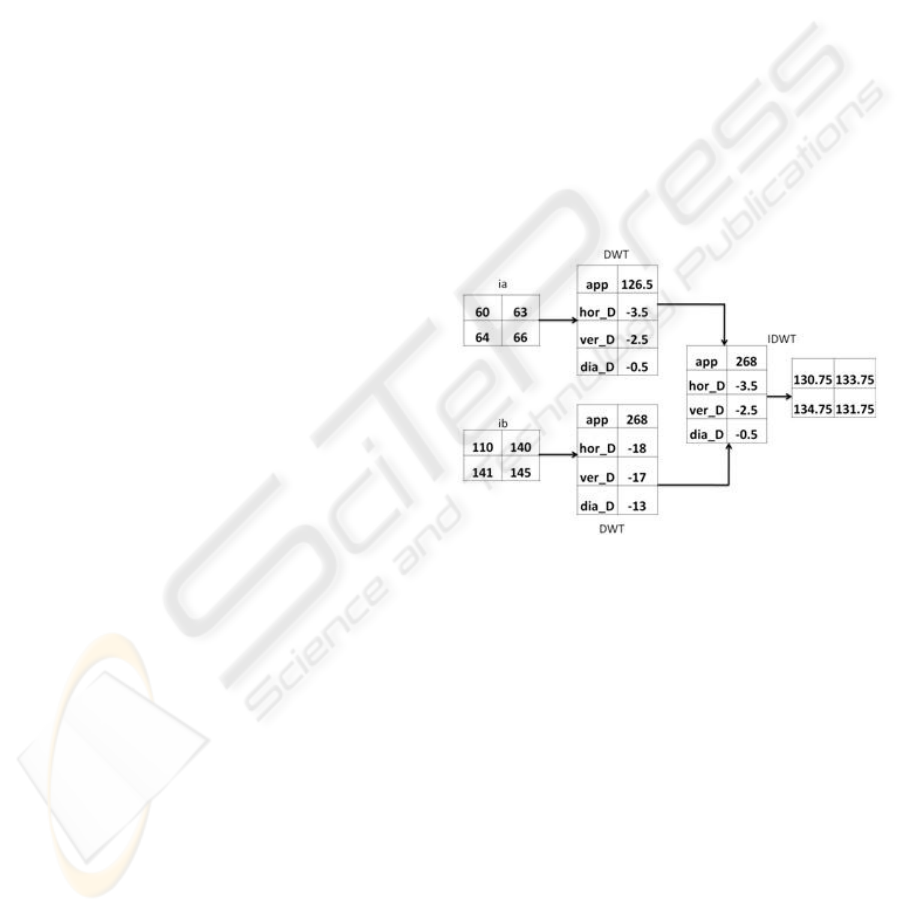

Figure 1: DWT computation process for fusion.

In Fig. 1, two image ia & ib of 2 x 2 size are

considered as the input source images. After

applying DWT, image is decomposed into four

components which are represented app, hor_D,

ver_D and dia_D. Among these four coefficients;

one app is approximate coefficient and hor_D,

ver_D and dia_D are three detail coefficients as

shown in the Fig. 1. Now if we apply Max rule to

select fused coefficient to decomposed images;

hor_D, ver_D, dia_D coefficient will be selected

from image ia while approximate coefficient will be

selected from image ib. So when inverse DWT is

applied on these images, it adds undesired

information distortion in final fused image. After

applying inverse DWT, the pixel values of resultant

fused matrix are shown in Fig. 1 which is not related

to any of the input images and the difference

between two pixels also changed. Therefore, it is

IJCCI 2009 - International Joint Conference on Computational Intelligence

340

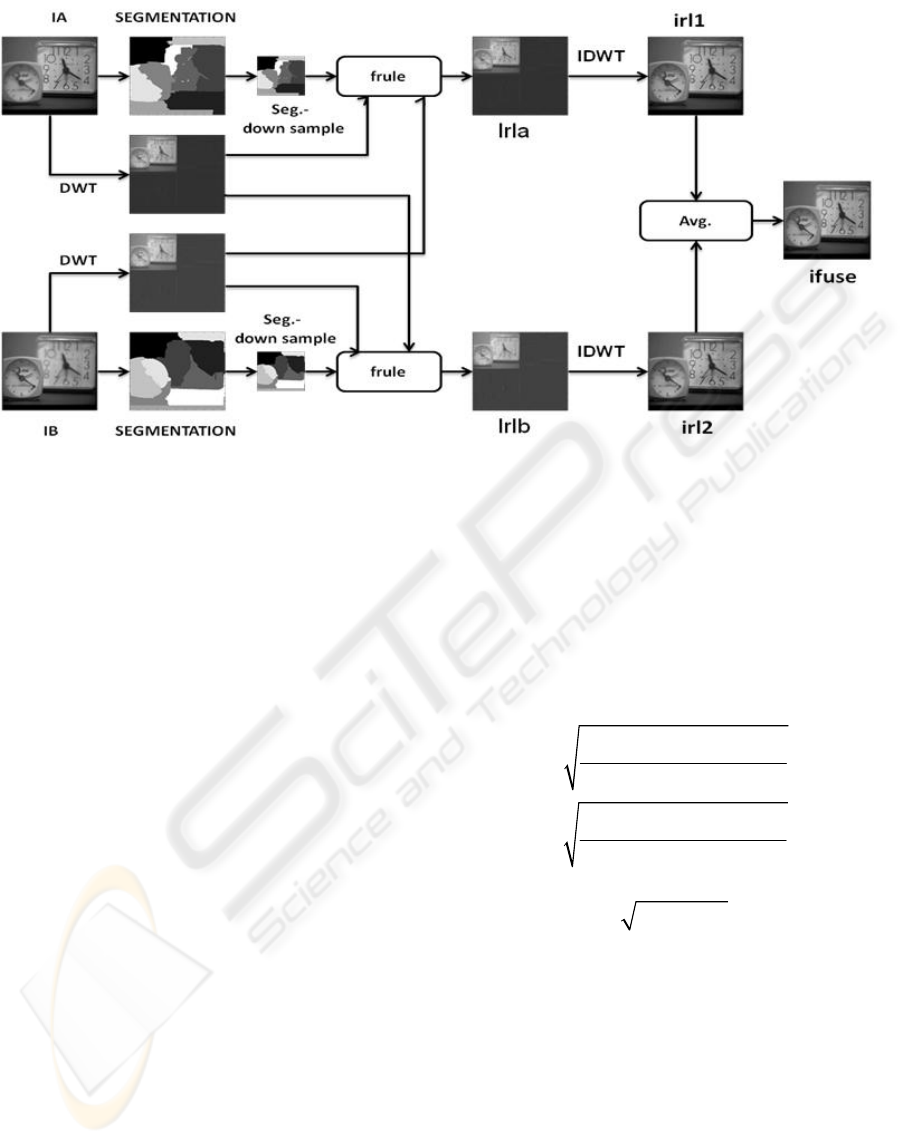

Figure 2: Block diagram of the proposed method.

necessary to design a technique which can solve this

problem and generate consistent pixel value related

to either of the input images. The effect of this

problem is more severe in region based image fusion

scheme. To solve this problem region consistency

rule is introduced in the proposed method which is

explained in detail later in this section. The block

diagram of proposed algorithm is shown in Figure 2.
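The distortion described for Fig. 1 can be reproduced numerically. The sketch below is only illustrative: the pixel values are hypothetical (not those of the figure), an orthonormal Haar wavelet is assumed, and the max rule is taken to keep the larger approximation coefficient and the larger-magnitude detail coefficients, which is one common variant.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT of an even-sized image."""
    x = np.asarray(x, dtype=float)
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left, bottom-right
    app = (a + b + c + d) / 2
    hor_d = (a + b - c - d) / 2           # top minus bottom: horizontal edges
    ver_d = (a - b + c - d) / 2           # left minus right: vertical edges
    dia_d = (a - b - c + d) / 2           # diagonal detail
    return app, hor_d, ver_d, dia_d

def haar_idwt2(app, hor_d, ver_d, dia_d):
    """Inverse of haar_dwt2."""
    out = np.empty((2 * app.shape[0], 2 * app.shape[1]))
    out[0::2, 0::2] = (app + hor_d + ver_d + dia_d) / 2
    out[0::2, 1::2] = (app + hor_d - ver_d - dia_d) / 2
    out[1::2, 0::2] = (app - hor_d + ver_d - dia_d) / 2
    out[1::2, 1::2] = (app - hor_d - ver_d + dia_d) / 2
    return out

def max_rule(coeffs_a, coeffs_b):
    """Coefficient-wise max rule: larger approximation, larger-magnitude details."""
    app = np.maximum(coeffs_a[0], coeffs_b[0])
    details = [np.where(np.abs(da) >= np.abs(db), da, db)
               for da, db in zip(coeffs_a[1:], coeffs_b[1:])]
    return (app, *details)

ia = np.array([[10, 90], [10, 90]])      # hypothetical: dark, strong vertical edge
ib = np.array([[120, 120], [120, 120]])  # hypothetical: bright and flat

fused = haar_idwt2(*max_rule(haar_dwt2(ia), haar_dwt2(ib)))
print(fused)  # values [[80, 160], [80, 160]], which match neither ia nor ib

# Taking all four coefficients from one source reproduces that source exactly.
print(haar_idwt2(*haar_dwt2(ib)))
```

Here the approximation comes from ib and the vertical detail from ia, so the reconstructed block has ib's brightness combined with ia's edge, a pattern present in neither input.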

The fused image is generated by the following steps.

Step 1 The DWT explained in (Gonzalez, 2006) is applied to image IA, giving a first-level decomposition into one approximation image (LL1A) and three detail images (LH1A, HL1A, HH1A).

Step 2 The normalized cut segmentation algorithm is applied to image IA, and the segmentation is then down-sampled to match the size of the DWT-decomposed images.

Step 3 The n segmented regions are extracted from the approximation components of images IA and IB using the down-sampled segmentation. How the number of regions is chosen is explained later in this step, together with the fusion rules.
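Steps 2 and 3 can be sketched as follows. The text does not state how the segmentation is down-sampled, so nearest-neighbour decimation (keeping every second label) is assumed; the label map is a hand-made stand-in for the output of a normalized cut implementation, and the function names are hypothetical.

```python
import numpy as np

def downsample_labels(labels):
    """Keep every second label in each direction so that a full-resolution
    segmentation matches the size of the first-level DWT sub-bands."""
    return np.asarray(labels)[0::2, 0::2]

def extract_regions(labels_ds, ll_a, ll_b):
    """Map each region label n to (pixels of LL1A in region n, pixels of LL1B in region n)."""
    ll_a, ll_b = np.asarray(ll_a), np.asarray(ll_b)
    regions = {}
    for n in np.unique(labels_ds):
        mask = labels_ds == n
        regions[int(n)] = (ll_a[mask], ll_b[mask])
    return regions

# Hypothetical 4x4 segmentation of IA: left half is region 1, right half region 2.
labels = np.array([[1, 1, 2, 2]] * 4)
labels_ds = downsample_labels(labels)        # [[1, 2], [1, 2]]
ll_a = np.array([[0, 1], [2, 3]])
ll_b = np.array([[4, 5], [6, 7]])
regions = extract_regions(labels_ds, ll_a, ll_b)
print(regions[1])  # LL1A pixels [0, 2] and LL1B pixels [4, 6]
```

Because the same label map is applied to both approximation images, region n always covers the same spatial area in LL1A and LL1B, which is what allows the fusion rules below to compare them region by region.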

We use two different fusion rules to compare the regions extracted from different kinds of source images. The spatial frequency (SF) is widely used in the literature (Shutao, 2008) to measure the overall clarity of an image or region. The nth region of image IA is denoted by $RA_{An}$. The spatial frequency of that region is calculated from the row frequency (RF) and column frequency (CF) of the M × N region F as described in (1), and SF is then calculated using (2). SF reflects the amount of detail in an image: the higher the SF, the more detail is present in the extracted region. The first fusion rule is a region based image fusion rule using SF, described in (3); it is used to identify the better-quality regions extracted from multifocus source images and generates the image $I_{rla1}$. The SF of the nth region of images IA and IB is denoted $SF_{An}$ and $SF_{Bn}$, respectively.

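$$RF = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left[F(i,j)-F(i,j-1)\right]^{2}}, \qquad CF = \sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left[F(i,j)-F(i-1,j)\right]^{2}} \tag{1}$$

$$SF = \sqrt{RF^{2} + CF^{2}} \tag{2}$$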

$$I_{rla1} = \begin{cases} RA_{An}, & SF_{An} \ge SF_{Bn} \\ RA_{Bn}, & SF_{An} < SF_{Bn} \end{cases} \tag{3}$$

Here An and Bn index the nth region of images IA and IB, respectively. The value of n varies from 1 to i, where n = {1, 2, 3, ..., i}. The value i = 9 produces the best results; it was determined by analyzing many simulation results on various categories of the source image dataset. The regions extracted from the approximation images LL1A and LL1B using the normalized cut segmentation are represented as $RA_{An}$ and $RA_{Bn}$, respectively. $I_{rla1}$ is the resultant fused image after applying fusion rule 1 as described in (3).

Neither this rule nor any other existing fusion parameter is sufficient to capture the desired regions from all types of source images, especially multisensor images; therefore a new mean max and standard deviation (MMS) rule is proposed in our algorithm.
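Equations (1) to (3) can be sketched as below. How SF is evaluated over an irregular region is not stated in the text; this sketch assumes that only neighbouring pixel pairs lying inside the region contribute and that the normaliser MN is replaced by the number of region pixels. The function names and sample values are hypothetical.

```python
import numpy as np

def spatial_frequency(f, mask=None):
    """SF of eqs. (1)-(2). With a mask, only neighbour pairs whose two pixels
    both lie in the region are used, and MN becomes the region's pixel count."""
    f = np.asarray(f, dtype=float)
    mask = np.ones(f.shape, dtype=bool) if mask is None else np.asarray(mask, dtype=bool)
    row_pairs = mask[:, 1:] & mask[:, :-1]   # F(i, j) and F(i, j-1) in region
    col_pairs = mask[1:, :] & mask[:-1, :]   # F(i, j) and F(i-1, j) in region
    n_pix = mask.sum()
    rf2 = np.sum((f[:, 1:] - f[:, :-1])[row_pairs] ** 2) / n_pix   # RF^2
    cf2 = np.sum((f[1:, :] - f[:-1, :])[col_pairs] ** 2) / n_pix   # CF^2
    return float(np.sqrt(rf2 + cf2))

def fusion_rule_1(ll_a, ll_b, labels):
    """Eq. (3): region n is taken from LL1A if SF_An >= SF_Bn, else from LL1B.
    Returns the fused approximation and a map that is True where A was chosen."""
    ll_a, ll_b, labels = np.asarray(ll_a), np.asarray(ll_b), np.asarray(labels)
    from_a = np.zeros(labels.shape, dtype=bool)
    for n in np.unique(labels):
        region = labels == n
        if spatial_frequency(ll_a, region) >= spatial_frequency(ll_b, region):
            from_a |= region
    return np.where(from_a, ll_a, ll_b), from_a

labels = np.array([[1, 1], [2, 2]])
ll_a = np.array([[0, 5], [1, 1]])    # hypothetical: detail in region 1
ll_b = np.array([[3, 3], [0, 9]])    # hypothetical: detail in region 2
fused, from_a = fusion_rule_1(ll_a, ll_b, labels)
print(fused)   # [[0, 5], [0, 9]]
```

The returned map `from_a` records which source won each region; it is the information the region consistency rule needs later.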

MMS is an effective fusion rule for capturing the desired information from multimodality images. The proposed rule exploits the standard deviation, maximum and mean values of images or regions. MMS is defined as

$$MMS_{An} = \frac{ME_{An} \times SD_{An}}{R^{max}_{An}} \tag{4}$$

where $ME_{An}$ and $SD_{An}$ are the mean and standard deviation of the nth region of image LL1A, and $R^{max}_{An}$ is the maximum intensity value of the same region of LL1A. The advantage of MMS is that it provides a good parameter for extracting the region that contains more critical details.

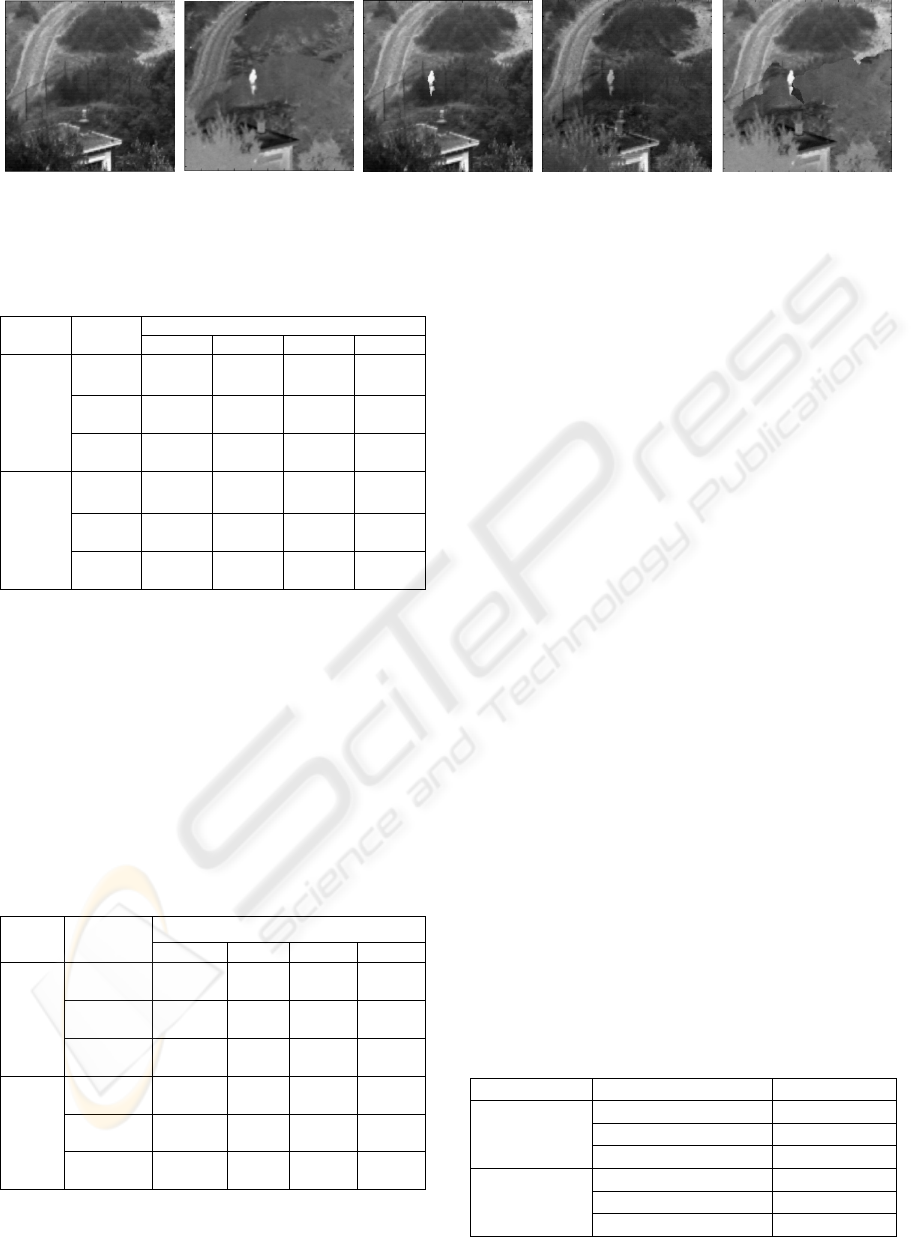

Figure 3: Multimodality IR source images and fusion results: (a) visual image, (b) IR image, (c) region based method, (d) proposed method.

This is evident from the simulation results described later in this paper. The MMS based fusion rule is particularly important for multimodality multisensor images such as those shown in Figure 3, as the following example illustrates. Two source images, (i) from a visual camera and (ii) from an IR camera, are captured for a surveillance application, as shown in Fig. 3 (a) and (b), respectively. In the visual image the background is visible, but the person, who is the object of interest, is not; in the IR image the person is visible.

From our study, we observed that visual images have a high SD and a low ME, whereas multisensor images captured using sensors such as MMW and IR cameras have a high ME and a low SD. Our algorithm therefore combines SD and ME with the maximum intensity value $R^{max}_{An}$ to derive the new parameter MMS. Experiments show that a low MMS value is desirable for capturing critical regions, in particular the man in these multisensor images. Fusion rule 2 is described below.

$$I_{rla1} = \begin{cases} RA_{An}, & MMS_{An} \le MMS_{Bn} \\ RA_{Bn}, & MMS_{An} > MMS_{Bn} \end{cases} \tag{5}$$
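A sketch of the MMS statistic (4) and fusion rule 2 (5) follows. The text does not say whether the population or sample standard deviation is used; the population form (NumPy's default) is assumed here, and a region whose maximum is not positive is assigned MMS = 0 to avoid division by zero (also an assumption). The function names and sample values are hypothetical.

```python
import numpy as np

def mms(region_pixels):
    """Eq. (4): MMS = ME * SD / R_max for the pixel values of one region."""
    r = np.asarray(region_pixels, dtype=float)
    r_max = r.max()
    if r_max <= 0:          # assumed guard against division by zero
        return 0.0
    return float(r.mean() * r.std() / r_max)

def fusion_rule_2(ll_a, ll_b, labels):
    """Eq. (5): region n is taken from LL1A if MMS_An <= MMS_Bn, else from LL1B.
    Returns the fused approximation and a map that is True where A was chosen."""
    ll_a, ll_b, labels = np.asarray(ll_a), np.asarray(ll_b), np.asarray(labels)
    from_a = np.zeros(labels.shape, dtype=bool)
    for n in np.unique(labels):
        region = labels == n
        if mms(ll_a[region]) <= mms(ll_b[region]):   # lower MMS is preferred
            from_a |= region
    return np.where(from_a, ll_a, ll_b), from_a

print(mms([2, 4]))  # mean 3, SD 1, max 4 -> 0.75

labels = np.array([[1, 1], [2, 2]])
ll_a = np.array([[2, 4], [10, 10]])
ll_b = np.array([[5, 5], [1, 3]])
fused, from_a = fusion_rule_2(ll_a, ll_b, labels)
print(fused)  # [[5, 5], [10, 10]]
```

Structurally this is fusion rule 1 with the comparison reversed: the region with the smaller statistic wins, following the observation that a low MMS value captures the critical regions.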

The intermediate fused image $I_{rla1}$ is generated by fusion rule 2 for multimodality images and by fusion rule 1 for multifocus images. In Fig. 3(c), only the region based image fusion algorithm described in (Shutao, 2008), with the SF fusion rule, is applied. The fusion result generated by applying the MMS fusion rule is shown in Fig. 3(d). The results show that the MMS rule is effective in generating a good quality fused image for multimodality source images. After the approximation component has been obtained by the above method, the region consistency rule, described in (6), is applied to select the detail components from the two decomposed images.

The region consistency rule states that the detail components of each region are chosen from the same source image as the approximation component selected by the fusion rule, that is, according to $I_{rla1}$:

$$I_{rlv1} = \begin{cases} RD_{An}, & \text{if } I_{rla1} = RA_{An} \\ RD_{Bn}, & \text{if } I_{rla1} = RA_{Bn} \end{cases} \tag{6}$$

where $I_{rlv1}$ is the fused vertical detail component, whose regions $RD_{An}$ and $RD_{Bn}$ are taken from the vertical detail images HL1A and HL1B, respectively. Similarly, the horizontal and diagonal components $I_{rlh1}$ and $I_{rld1}$ are computed from images LH1A and HH1A (and LH1B and HH1B), respectively. If, after applying the fusion rule, a region is selected from image IA, then all the detail component regions at the same location are also selected from image IA. Fused images generated with and without the region consistency rule are shown in Fig. 4 (c) and (f), respectively.
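The region consistency rule (6) amounts to reusing the selection map produced for the approximation sub-band on every detail sub-band. A minimal sketch, assuming a boolean map `from_a` (at sub-band resolution) that marks the positions whose region was taken from IA; the function name and values are hypothetical.

```python
import numpy as np

def region_consistent_details(details_a, details_b, from_a):
    """Eq. (6): each detail sub-band (LH1, HL1, HH1) takes every region from the
    same source image that was chosen for the approximation sub-band."""
    from_a = np.asarray(from_a, dtype=bool)
    return tuple(np.where(from_a, da, db) for da, db in zip(details_a, details_b))

from_a = np.array([[True, False], [True, False]])   # left region came from IA
details_a = (np.full((2, 2), 1.0), np.full((2, 2), 2.0), np.full((2, 2), 3.0))
details_b = (np.full((2, 2), -1.0), np.full((2, 2), -2.0), np.full((2, 2), -3.0))
lh, hl, hh = region_consistent_details(details_a, details_b, from_a)
print(hl)  # [[2, -2], [2, -2]]: no region mixes IA and IB coefficients
```

Unlike the max rule, no coefficient of a region is taken from the other source, so after the inverse DWT each region reproduces the chosen source image exactly for the Haar wavelet, and away from region boundaries for longer wavelet filters.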

Step 4 The inverse DWT (IDWT) is performed to generate Irl1.

Step 5 Steps 1 to 4 are repeated for image IB, i.e., using the segmentation of IB, to generate the intermediate fused image Irl2.

Step 6 Irl1 and Irl2 are averaged to obtain the final fused image IFUSE.
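Steps 1 to 6 can be combined into a compact sketch for the multifocus case (fusion rule 1). It is not the authors' Matlab implementation: it assumes a one-level orthonormal Haar DWT, nearest-neighbour down-sampling of the label maps, and an SF restricted to neighbour pairs inside each region. The label maps are hypothetical stand-ins for normalized cut output; for multimodality images, the SF comparison would be replaced by the lower-MMS comparison of fusion rule 2.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT: returns (LL1, horizontal, vertical, diagonal)."""
    x = np.asarray(x, dtype=float)
    a, b, c, d = x[0::2, 0::2], x[0::2, 1::2], x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2, (a + b - c - d) / 2,
            (a - b + c - d) / 2, (a - b - c + d) / 2)

def haar_idwt2(ll, h, v, d):
    """Inverse of haar_dwt2."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + h + v + d) / 2
    out[0::2, 1::2] = (ll + h - v - d) / 2
    out[1::2, 0::2] = (ll - h + v - d) / 2
    out[1::2, 1::2] = (ll - h - v + d) / 2
    return out

def region_sf(f, mask):
    """Spatial frequency of f inside mask, using neighbour pairs inside the region only."""
    rows = mask[:, 1:] & mask[:, :-1]
    cols = mask[1:, :] & mask[:-1, :]
    rf2 = np.sum((f[:, 1:] - f[:, :-1])[rows] ** 2)
    cf2 = np.sum((f[1:, :] - f[:-1, :])[cols] ** 2)
    return np.sqrt((rf2 + cf2) / mask.sum())

def fuse_one(img_a, img_b, labels_full):
    """Steps 1-4 for one segmentation map."""
    ca, cb = haar_dwt2(img_a), haar_dwt2(img_b)           # Step 1: DWT
    labels = np.asarray(labels_full)[0::2, 0::2]          # Step 2: match LL1 size
    from_a = np.zeros(labels.shape, dtype=bool)
    for n in np.unique(labels):                           # Step 3: rule 1 on LL1
        region = labels == n
        if region_sf(ca[0], region) >= region_sf(cb[0], region):
            from_a |= region
    # Region consistency: all four sub-bands follow the LL1 decision.
    fused = [np.where(from_a, xa, xb) for xa, xb in zip(ca, cb)]
    return haar_idwt2(*fused)                             # Step 4: IDWT

def fuse(img_a, img_b, labels_a, labels_b):
    """Steps 5-6: repeat with IB's segmentation and average Irl1 and Irl2."""
    i_rl1 = fuse_one(img_a, img_b, labels_a)
    i_rl2 = fuse_one(img_a, img_b, labels_b)
    return (i_rl1 + i_rl2) / 2

# Hypothetical 4x8 multifocus pair: IA has detail on the left, IB on the right.
ia = np.tile([0, 0, 100, 100, 50, 50, 50, 50], (4, 1))
ib = np.tile([50, 50, 50, 50, 0, 0, 100, 100], (4, 1))
labels = np.tile([0, 0, 0, 0, 1, 1, 1, 1], (4, 1))      # same map used for IA and IB
print(fuse(ia, ib, labels, labels))   # every row: [0, 0, 100, 100, 0, 0, 100, 100]
```

In this toy pair the fused image keeps the detailed half of each source unchanged, which is the behaviour the region consistency rule is meant to guarantee.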

This new framework is an efficient way to improve the consistency of the final fused image, and it avoids the distortion caused by the unwanted information that is added when the region consistency rule is not used. The activity level of each region is measured by the spatial frequency or by the novel MMS statistical parameter, which are used to generate good quality fused images for the multimodality and multifocus image categories considered. The next section briefly describes the image fusion evaluation criteria.

3 EVALUATION PARAMETERS

We evaluated our algorithm on images of various categories using two categories of performance evaluation: subjective and objective. The objective image fusion parameters are further divided into reference based and non-reference based quality assessment parameters. Fusion performance can be measured by estimating how much of the information in the source images is preserved in the fused image.

3.1 Reference Based Image Fusion Parameters

The most widely used reference based image fusion performance parameters are entropy, the structural similarity index measure (SSIM), the quality index (QI), mutual information (MI) and root mean square error (RMSE). Entropy is a well known measure of the amount of information present in an image, and RMSE measures the pixel-wise error between the fused and reference images (Gonzalez, 2006). Mutual information (MI) indices are also used to evaluate the correlative performance of the fused image and the reference image, as explained in (Zheng, 2006); this measure is denoted MIr in this paper. A higher value of MIr indicates that the fused image is more similar to the reference image.
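RMSE and entropy can be computed as in the following sketch, assuming 8-bit grey-level images (256 levels) and entropy measured in bits; the function names are hypothetical.

```python
import numpy as np

def rmse(fused, reference):
    """Root mean square error between a fused image and the reference image."""
    f = np.asarray(fused, dtype=float)
    r = np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean((f - r) ** 2)))

def entropy(img, levels=256):
    """Shannon entropy in bits of the grey-level histogram of an integer image."""
    counts = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

print(rmse([[1, 2]], [[1, 4]]))       # sqrt((0 + 4) / 2), about 1.414
print(entropy([[0, 255], [0, 255]]))  # two equally likely grey levels: 1.0 bit
```

Lower RMSE and higher entropy are better, which is how these columns are read in the result tables.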

The structural similarity index measure (SSIM) proposed by Wang and Bovik (Zhou, 2002) is based on the evidence that the human visual system is highly adapted to structural information, so a loss of structure in the fused image indicates the amount of distortion present. It models any image distortion as a combination of three factors: loss of correlation, luminance distortion and contrast distortion (Zhou, 2002). The dynamic range of SSIM is [-1, 1], and a higher value indicates more similar structures in the fused and reference images. If the two images are identical, the similarity is maximal and equals 1.
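The three-factor model described above is the universal image quality index of Wang and Bovik (Zhou, 2002), of which SSIM is a later extension. The sketch below computes the index over the whole image as a single window, whereas the original computes it in sliding windows and averages the local values; it also assumes the images are not constant, since the denominator would otherwise be zero. The function name is hypothetical.

```python
import numpy as np

def quality_index(x, y):
    """Global (single-window) Wang-Bovik index:
    Q = 4*cov(x, y)*mean(x)*mean(y) / ((var(x) + var(y)) * (mean(x)**2 + mean(y)**2)).
    It combines loss of correlation, luminance distortion and contrast distortion."""
    x = np.asarray(x, dtype=float).ravel()
    y = np.asarray(y, dtype=float).ravel()
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return float(4 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2)))

print(quality_index([1, 2, 3, 4], [1, 2, 3, 4]))   # identical images: 1.0
print(quality_index([1, 2, 3, 4], [4, 3, 2, 1]))   # reversed contrast: -1.0
```

The two calls illustrate the [-1, 1] range quoted above: identical images reach the maximum of 1, and perfectly anti-correlated images with equal means and variances reach -1.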

3.2 Non Reference Based Image Fusion Parameters

Mutual information (MI), the objective image fusion performance metric $Q^{AB/F}$, spatial frequency (SF) and entropy are important parameters for evaluating the quality of a fused image when no reference image is available. MI, as described in (Xydeas, 2000), can also be used without a reference image by computing the MI between each source image, IA and IB, and the fused image IFUSE. The MI between images IA and IFUSE is denoted $I_{AF}$, and similarly $I_{BF}$ is computed between images IB and IFUSE. The total MI is computed as in (7):

$$MI = I_{AF} + I_{BF} \tag{7}$$
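A sketch of (7), assuming 8-bit images and MI estimated from a 256 × 256 joint grey-level histogram (the bin count is an assumption, since the text does not specify it). The function names are hypothetical.

```python
import numpy as np

def mutual_information(x, y, levels=256):
    """MI in bits between two integer grey-level images, from their joint histogram."""
    joint, _, _ = np.histogram2d(np.ravel(x), np.ravel(y), bins=levels,
                                 range=[[0, levels], [0, levels]])
    p_xy = joint / joint.sum()
    p_x = p_xy.sum(axis=1, keepdims=True)   # marginal of x (column vector)
    p_y = p_xy.sum(axis=0, keepdims=True)   # marginal of y (row vector)
    nz = p_xy > 0
    return float(np.sum(p_xy[nz] * np.log2(p_xy[nz] / (p_x * p_y)[nz])))

def fusion_mi(img_a, img_b, fused):
    """Eq. (7): MI = I_AF + I_BF."""
    return mutual_information(img_a, fused) + mutual_information(img_b, fused)

a = np.array([[0, 255], [0, 255]])
print(mutual_information(a, a))   # equals the entropy of a: 1.0 bit
print(fusion_mi(a, a, a))         # 2.0
```

Since each term is bounded by the entropy of the images involved, MI values are best compared across methods on the same source pair, as in Table 2.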

The objective image fusion performance metric $Q^{AB/F}$, proposed by Xydeas and Petrovic (Xydeas, 2000), reflects the quality of the visual information obtained from the fusion of the input images by measuring how much edge information is transferred from the input images to the fused image, and it can be used to compare the performance of different image fusion algorithms. $Q^{AB/F}$ lies between 0 and 1, where 0 means that all information is lost and 1 means that all information is preserved.

4 SIMULATION RESULTS

The novel region based image fusion algorithm described in Section 2 has been implemented in Matlab 7. The proposed algorithm is applied to, and evaluated on, a large dataset covering a broad range of multifocus and multimodality images of various categories: multifocus images containing only objects, objects plus text, or only text, and multimodality IR (infrared) and MMW (millimetre wave) images. A large image dataset is required to verify the robustness of an algorithm. The simulation results are shown in Figs. 4 to 9. The proposed algorithm was applied to various categories of images with different numbers of segmentation levels; after analyzing the results, we used nine segmentation levels in all experiments, which improves the visual quality of the final fused image. The performance of the proposed algorithm is evaluated using the standard reference based and non-reference based image fusion evaluation parameters explained in the previous section.

4.1 Fusion Results of Multi-focus Images

The multifocus images in our dataset are of three kinds: (1) object images, (2) text-only images and (3) object plus text images. They are shown in Fig. 4 (a) and (b) (clock image), Fig. 5 (a) and (b) (pepsi image), Fig. 6 (a) and (b) (book image) and Fig. 7 (a) and (b) (text image). In Figs. 4 to 7, the left portion of the multifocus image in column (a) is blurred, while in column (b) of the same figure the right portion is blurred. Column (c) shows the corresponding fused image obtained by the proposed method, and columns (d) and (e) show the fused images obtained by the pixel based DWT method proposed by Wang (Anna, 2006) and by the region based fusion method proposed by Li and Yang (Shutao, 2008), respectively.

Figure 4: Fusion results for the multi-focus clock image: (a), (b) multi-focus source images, (c) proposed method, (d) DWT, (e) Li's method, (f) without the region consistency rule.

Figure 5: Fusion results for the multi-focus pepsi image: (a), (b) multi-focus source images, (c) proposed method, (d) DWT, (e) Li's method.

Figure 6: Fusion results for the multi-focus book image: (a), (b) multi-focus source images, (c) proposed method, (d) DWT, (e) Li's method.

Figure 7: Fusion results for the multi-focus text image: (a), (b) multi-focus source images, (c) proposed method, (d) DWT, (e) Li's method.

The visual quality of the fused images produced by the proposed algorithm is better than that of the fused images obtained by the other reported methods. The reference based and non-reference based image fusion parameters are compared in Table 1 and Table 2. All the reference based parameters, SF, MIr, RMSE and SSIM, are better for the proposed algorithm than for the other methods (higher SF, MIr and SSIM, and lower RMSE), as shown in Table 1. Among the non-reference based parameters in Table 2, SF and MI are highest for the proposed method on both images, which is consistent with the visual quality of the fused images; $Q^{AB/F}$, however, is higher for Li's method on both images (and for the DWT method on the pepsi image), and the DWT method gives the highest entropy for the pepsi image.

Figure 8: Fusion results for the multimodality IR image: (a) visible image, (b) IR source image, (c) proposed method, (d) DWT, (e) Li's method.

Table 1: Reference based image fusion parameters.

| Image | Fusion method | SF | MIr | RMSE | SSIM |
|---|---|---|---|---|---|
| Text image | DWT based | 8.1956 | 1.5819 | 6.3682 | 0.9259 |
| | Li's method | 10.405 | 1.4713 | 5.2671 | 0.9749 |
| | Proposed method | 10.736 | 1.9997 | 2.8313 | 0.9904 |
| Book image | DWT based | 12.865 | 3.8095 | 7.5994 | 0.9274 |
| | Li's method | 16.416 | 3.6379 | 5.0847 | 0.9782 |
| | Proposed method | 17.415 | 6.5173 | 1.6120 | 0.9974 |

Table 2: Non-reference based image fusion parameters.

| Image | Fusion method | SF | MI | $Q^{AB/F}$ | Entropy |
|---|---|---|---|---|---|
| Clock image | DWT based | 8.1501 | 5.848 | 0.5696 | 8.1506 |
| | Li's method | 10.113 | 7.356 | 0.7156 | 8.7803 |
| | Proposed method | 10.8792 | 7.713 | 0.6850 | 8.7813 |
| Pepsi image | DWT based | 11.6721 | 2.521 | 0.9287 | 8.7293 |
| | Li's method | 13.532 | 2.703 | 0.9683 | 7.1235 |
| | Proposed method | 13.648 | 5.863 | 0.7857 | 7.2350 |

4.2 Fusion of Multimodality Images

The effectiveness of the proposed algorithm is further demonstrated by applying it to multimodality concealed weapon detection images (MMW images) and IR images. The MMW camera image showing the gun is given in Fig. 9 (b), and the visible image of a group of persons in Fig. 9 (a). Here the aim is to detect the location of the gun, using the visible image to provide the scene context.

In the visual camera image, details of the surrounding area can be observed, as shown in Fig. 8 (a), while the IR camera detects the human in the captured scene, as shown in Fig. 8 (b). The aim of fusing the IR image pair is to detect the human and its location using the information from both source images. The visual quality of the fused images generated by the proposed method is better than that of the other reported methods. The new MMS fusion rule used in the proposed algorithm preserves critical regions in the fused image, which is also reflected in Table 3: for both the IR and MMW images the entropy of the proposed method is the highest, and it is considerably higher than that of Li's region based method. Entropy is used to evaluate the fusion results for both the IR and MMW multimodality source images because, in both cases, the IR and MMW sensor images are blurred, so SF and $Q^{AB/F}$ do not give meaningful values for comparison. The simulation results in Tables 1, 2 and 3 show that the proposed method performs better than the compared methods on most of the reported parameters for a broad range of multifocus and multimodality image categories.

Table 3: Fusion parameters for multisensor images.

| Image | Fusion method | Entropy |
|---|---|---|
| IR image | DWT based | 6.6842 |
| | Li's method | 6.0472 |
| | Proposed method | 6.7814 |
| MMW image | DWT based | 4.9802 |
| | Li's method | 3.7593 |
| | Proposed method | 6.9502 |


Figure 9: Fusion results for the multimodality MMW image: (a) visual image, (b) MMW image, (c) proposed method, (d) DWT, (e) Li's method.

5 CONCLUSIONS

In this paper, a new region based image fusion method using a region consistency rule has been described. The method was applied to a large dataset of various image categories, and the simulation results show superior visual quality compared with earlier reported pixel based methods and the recently proposed region based method of Li. A novel MMS fusion rule is introduced to select the desired regions from multimodality images. The proposed algorithm was evaluated with standard reference based and non-reference based image fusion parameters, and the simulation results indicate that it preserves more detail in the fused image. The proposed algorithm could be further improved by designing more sophisticated fusion rules.

REFERENCES

Anna, Wang, Jaijining, Sun, Yueyang, Guan, 2006. The application of wavelet transform to multimodality medical image fusion. IEEE International Conference on Networking, Sensing and Control, pp. 270-274.

Devid, Hall, James, Llinas, 2001. Handbook of multisensor data fusion. CRC Press LLC.

Gonzalez, Rafael, Richard, Woods, 2006. Digital Image Processing, 2nd ed. Pearson Education.

Mallat, S., 1989. A theory for multiresolution signal decomposition: the wavelet representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 11 (7), pp. 674-693.

Miao, Qiguang, Wang, Baoshul, 2006. A novel image fusion method using contourlet transform. International Conference on Communications, Circuits and Systems Proceedings, Vol. 1, pp. 548-552.

Piella, G., 2003. A general framework for multiresolution image fusion: from pixels to regions. Information Fusion, Vol. 4 (4), pp. 259-280.

Piella, Gemma, 2002. A region based multiresolution image fusion algorithm. Proceedings of the Fifth International Conference on Information Fusion, Vol. 2, pp. 1557-1564.

Shi, J., Malik, J., 2000. Normalized cuts and image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 22 (8), pp. 888-905.

Shutao, Li, Bin, Yang, 2008. Multifocus image fusion using region segmentation and spatial frequency. Image and Vision Computing, Elsevier, Vol. 26, pp. 971-979.

Timothee Cour, Florence Benezit, Jianbo Shi. Multiscale Normalized Cuts Segmentation Toolbox for MATLAB, available at http://www.seas.upenn.edu/~timothee.

Xydeas, C. S., Petrovic, V., 2000. Objective image fusion performance measure. Electronics Letters, Vol. 36 (4), pp. 308-309.

Yin Chen, Rick S. Blum, 2008. A new automated quality assessment algorithm for image fusion. Image and Vision Computing, Elsevier.

Zheng, Liu, Robert, Laganiere, 2006. On the use of phase congruency to evaluate image similarity. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vol. 2, pp. 937-940.

Zhong, Zhang, Rick, Blum, 1999. A categorization of multiscale decomposition based image fusion schemes with a performance study for a digital camera application. Proceedings of the IEEE, Vol. 87 (8), pp. 1315-1326.

Zhou Wang, Alan Bovik, 2002. A universal image quality index. IEEE Signal Processing Letters, Vol. 9 (3), pp. 81-84.
