SOME RESULTS ON A MULTIVARIATE GENERALIZATION OF THE FUZZY LEAST SQUARE REGRESSION

Francesco Campobasso, Annarita Fanizzi and Marina Tarantini

Department of Statistical Sciences “Carlo Cecchi”, University of Bari, Italy

Keywords: Fuzzy least square regression, Multivariate generalization, Total deviance, Decomposition, Goodness of fit.

Abstract: Fuzzy regression techniques can be used to fit fuzzy data to a regression model, where the deviations between the dependent variable and the model are related to the uncertain nature either of the variables or of their coefficients. P.M. Diamond (1988) treated the case of a simple fuzzy regression of an uncertain dependent variable on a single uncertain independent variable, introducing a metric on the space of triangular fuzzy numbers. In this work we deal with more than one independent variable, determining the corresponding estimates and providing some theoretical results about the decomposition of the sum of squares of the dependent variable according to Diamond’s metric, in order to identify its components.

1 INTRODUCTION

The values of quantitative variables are commonly given as exact single values, although sometimes they cannot be precise. The imprecision of measuring instruments and the continuous nature of some observations, for example, prevent researchers from obtaining the corresponding true values.

On the other hand, qualitative variables are usually expressed through linguistic terms, which act as verbal labels of sets with uncertain borders. This is the case of the answers to customer satisfaction surveys, which are collected through ordered categories ranging from “not at all” to “completely”.

Fuzzy set theory provides an appropriate way to manage such uncertainty in the observations.

In 1988 P. M. Diamond introduced a metric on the space of triangular fuzzy numbers and derived the expression of the estimated coefficients in a simple fuzzy regression of an uncertain dependent variable on a single uncertain independent variable. Starting from a multivariate generalization of this regression, we present some results about the decomposition of the deviance of the dependent variable according to Diamond’s metric.

2 THE FUZZY LEAST SQUARE REGRESSION

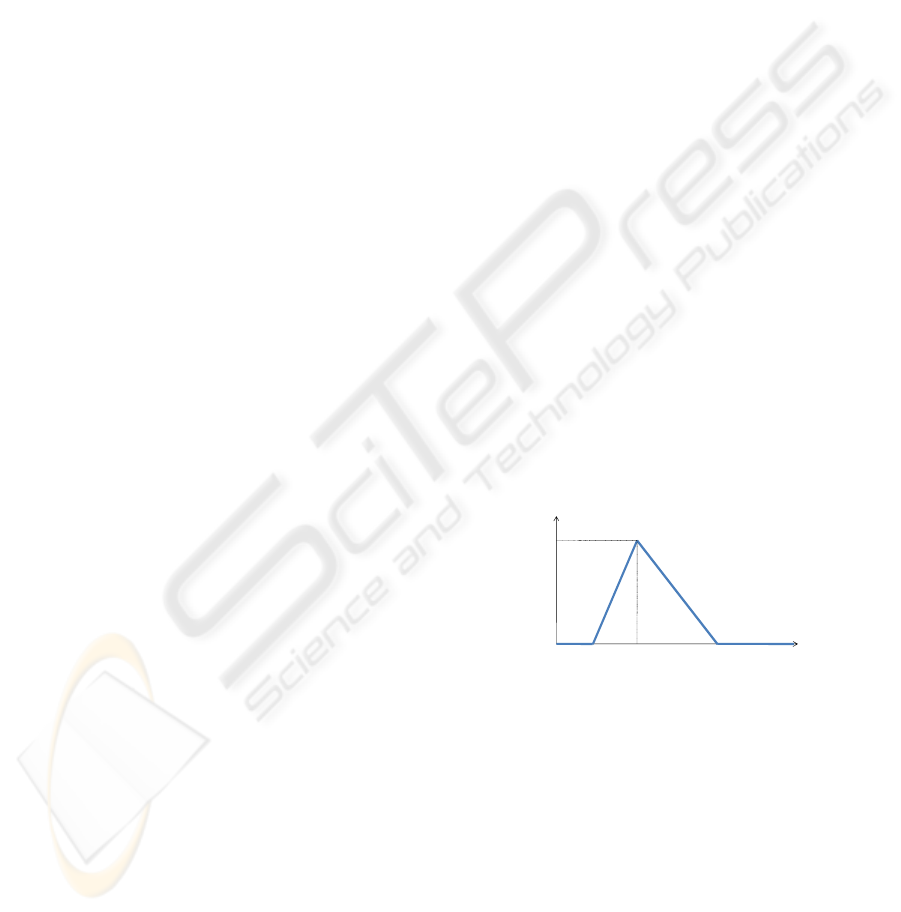

A triangular fuzzy number $\tilde X = (x^L, x, x^R)^T$ for the variable X is characterized by a membership function $\mu_{\tilde X}(x)$ like the one represented in Fig. 1.

Figure 1: Representation of a triangular fuzzy number (horizontal axis X, with $x^L$, $x$ and $x^R$ marked; vertical axis $\mu_{\tilde X}$, peaking at 1).

The accumulation value x is considered the centre of the fuzzy number, while $x - x^L$ and $x^R - x$ are considered the left spread and the right spread, respectively. Note that x belongs to $\tilde X$ with the highest degree (equal to 1), while the other values between the extremes $x^L$ and $x^R$ belong to $\tilde X$ with gradually lower degrees.
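As an illustration, the membership function of Figure 1 can be sketched in Python, assuming the usual piecewise-linear shape that is 0 outside $[x^L, x^R]$ and 1 at the centre x. The function name `triangular_membership` is hypothetical and not taken from the paper.

```python
def triangular_membership(t: float, xl: float, x: float, xr: float) -> float:
    """Membership degree of t in the triangular fuzzy number (xl, x, xr).

    Assumes xl <= x <= xr (illustrative sketch, piecewise-linear shape).
    """
    if not (xl <= x <= xr):
        raise ValueError("expected xl <= x <= xr")
    if t == x:
        return 1.0                    # the centre has the highest degree
    if t < xl or t > xr:
        return 0.0                    # outside the support [xl, xr]
    if t < x:
        return (t - xl) / (x - xl)    # rising edge over the left spread
    return (xr - t) / (xr - x)        # falling edge over the right spread


# Usage: X = (2, 4, 7), i.e. left spread 2 and right spread 3.
for t in (1.0, 3.0, 4.0, 5.5, 8.0):
    print(t, triangular_membership(t, 2.0, 4.0, 7.0))
```

Both 3.0 and 5.5 lie halfway along their respective edges, so they receive the same degree 0.5 even though the two spreads differ.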

The set of triangular fuzzy numbers is closed with respect to the sum: given two triangular fuzzy numbers $\tilde X = (x^L, x, x^R)^T$ and $\tilde Y = (y^L, y, y^R)^T$, their sum is still a triangular fuzzy number

$$\tilde Z = \tilde X + \tilde Y = (x^L + y^L,\; x + y,\; x^R + y^R)^T.$$

Moreover, the product of a triangular fuzzy number $\tilde X = (x^L, x, x^R)^T$ and a real number k depends on the sign of k, being equal to

$$k\tilde X = (kx^L,\; kx,\; kx^R)^T \ \text{ if } k \ge 0, \qquad k\tilde X = (kx^R,\; kx,\; kx^L)^T \ \text{ if } k < 0.$$

Campobasso F., Fanizzi A. and Tarantini M. (2009). SOME RESULTS ON A MULTIVARIATE GENERALIZATION OF THE FUZZY LEAST SQUARE REGRESSION. In Proceedings of the International Joint Conference on Computational Intelligence, pages 75-78. DOI: 10.5220/0002321200750078. Copyright © SciTePress

P.M. Diamond (1988) introduced a metric on the space of triangular fuzzy numbers; according to this metric, the squared distance between $\tilde X$ and $\tilde Y$ is

$$d^2(\tilde X, \tilde Y) = d^2\big((x^L, x, x^R)^T, (y^L, y, y^R)^T\big) = (x^L - y^L)^2 + (x - y)^2 + (x^R - y^R)^2.$$
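The sum, the product by a real number and Diamond’s distance can be sketched as follows, using the (left extreme, centre, right extreme) representation of this section. The class `TFN` and the function `diamond_d2` are illustrative names, not part of the original work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (xl, x, xr): left extreme, centre, right extreme."""
    xl: float
    x: float
    xr: float

    def __add__(self, other: "TFN") -> "TFN":
        # The sum is taken extreme by extreme and centre by centre.
        return TFN(self.xl + other.xl, self.x + other.x, self.xr + other.xr)

    def scale(self, k: float) -> "TFN":
        # A negative factor swaps the roles of the two extremes.
        if k >= 0:
            return TFN(k * self.xl, k * self.x, k * self.xr)
        return TFN(k * self.xr, k * self.x, k * self.xl)


def diamond_d2(X: TFN, Y: TFN) -> float:
    """Squared Diamond distance between two triangular fuzzy numbers."""
    return (X.xl - Y.xl) ** 2 + (X.x - Y.x) ** 2 + (X.xr - Y.xr) ** 2


X = TFN(1.0, 2.0, 4.0)
Y = TFN(0.0, 3.0, 5.0)
print(X + Y)             # TFN(xl=1.0, x=5.0, xr=9.0)
print(X.scale(-2.0))     # TFN(xl=-8.0, x=-4.0, xr=-2.0)
print(diamond_d2(X, Y))  # 3.0
```

Multiplying by -2 maps the right extreme 4 to the new left extreme -8, which is why the sum of squared distances in a regression changes form with the sign of each coefficient.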

In particular, Diamond analysed the regression model of a fuzzy dependent variable $\tilde Y$ on a single fuzzy independent variable $\tilde X$:

$$\tilde Y = a + b\tilde X + \varepsilon.$$

The expressions of the corresponding parameters are derived by minimizing, with respect to a and b, the sum

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i, \tilde Y_i\big)$$

of the squared distances between theoretical and empirical values of the fuzzy dependent variable $\tilde Y$ in the n observed units.

Such a sum takes different forms according to the sign of the coefficient b, since the product of a fuzzy number $\tilde X = (x^L, x, x^R)^T$ and a real number k depends on whether k is positive or negative. When k is negative, the right extreme of $k\tilde X$ is obtained by adding the left spread, multiplied by |k|, to the centre kx, while its left extreme is obtained by subtracting the right spread, multiplied by |k|, from the centre kx.

Diamond demonstrated that the optimization problem has a unique solution under certain conditions.

3 A MULTIVARIATE GENERALIZATION OF THE REGRESSION MODEL

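Recently we generalized this estimation procedure to the case of k independent variables (k ≥ 1). For ease of exposition, assume that we observe a fuzzy dependent variable $\tilde Y_i = (y_i, \mu_i, \mu_i)^T$ and two fuzzy independent variables $\tilde X_i = (x_i, \xi_i, \xi_i)^T$ and $\tilde Z_i = (z_i, \delta_i, \delta_i)^T$ on a set of n units, where each fuzzy number is now written as (centre, left spread, right spread), with equal left and right spreads. The linear regression model is given by

$$\tilde Y_i^* = a + b\tilde X_i + c\tilde Z_i, \qquad i = 1, 2, \ldots, n;\quad a, b, c \in \mathbb{R}.$$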

The corresponding parameters are determined by minimizing, with respect to a, b and c, the sum of Diamond’s squared distances between theoretical and empirical values of the dependent variable

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i + c\tilde Z_i, \tilde Y_i\big). \qquad (1)$$

As stated above, such a sum takes different expressions according to the signs of the regression coefficients b and c. This generates the following four cases.

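Case 1: b ≥ 0, c ≥ 0

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i + c\tilde Z_i, \tilde Y_i\big) = \sum_{i=1}^{n}\Big[\big(y_i^L - a - bx_i^L - cz_i^L\big)^2 + \big(y_i - a - bx_i - cz_i\big)^2 + \big(y_i^R - a - bx_i^R - cz_i^R\big)^2\Big]$$

where $y_i^L = y_i - \mu_i$ and $y_i^R = y_i + \mu_i$, while $x_i^L = x_i - \xi_i$, $x_i^R = x_i + \xi_i$, $z_i^L = z_i - \delta_i$ and $z_i^R = z_i + \delta_i$ have analogous meanings.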

Case 2: b < 0, c ≥ 0

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i + c\tilde Z_i, \tilde Y_i\big) = \sum_{i=1}^{n}\Big[\big(y_i^L - a - bx_i^R - cz_i^L\big)^2 + \big(y_i - a - bx_i - cz_i\big)^2 + \big(y_i^R - a - bx_i^L - cz_i^R\big)^2\Big]$$

Case 3: b ≥ 0, c < 0

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i + c\tilde Z_i, \tilde Y_i\big) = \sum_{i=1}^{n}\Big[\big(y_i^L - a - bx_i^L - cz_i^R\big)^2 + \big(y_i - a - bx_i - cz_i\big)^2 + \big(y_i^R - a - bx_i^R - cz_i^L\big)^2\Big]$$

Case 4: b < 0, c < 0

$$\sum_{i=1}^{n} d^2\big(a + b\tilde X_i + c\tilde Z_i, \tilde Y_i\big) = \sum_{i=1}^{n}\Big[\big(y_i^L - a - bx_i^R - cz_i^R\big)^2 + \big(y_i - a - bx_i - cz_i\big)^2 + \big(y_i^R - a - bx_i^L - cz_i^L\big)^2\Big]$$
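To make the sign-dependent pairing of extremes concrete, the following hypothetical sketch evaluates (1) through fuzzy arithmetic, with symmetric spreads as in this section, and checks it against the explicit case-3 expression on randomly generated data. The helper names and the data are assumptions made for illustration only.

```python
import numpy as np


def fitted_extremes(a, b, c, xl, xr, zl, zr):
    """Left and right extremes of a + b*X + c*Z; a negative coefficient swaps extremes."""
    bxl, bxr = (b * xl, b * xr) if b >= 0 else (b * xr, b * xl)
    czl, czr = (c * zl, c * zr) if c >= 0 else (c * zr, c * zl)
    return a + bxl + czl, a + bxr + czr


def objective(a, b, c, x, xi, z, delta, y, mu):
    """Sum (1) of squared Diamond distances, assuming symmetric spreads xi, delta, mu."""
    xl, xr = x - xi, x + xi
    zl, zr = z - delta, z + delta
    yl, yr = y - mu, y + mu
    fl, fr = fitted_extremes(a, b, c, xl, xr, zl, zr)
    fc = a + b * x + c * z
    return float(np.sum((yl - fl) ** 2 + (y - fc) ** 2 + (yr - fr) ** 2))


# Hypothetical data: centres and spreads drawn at random.
rng = np.random.default_rng(0)
n = 20
x, z, y = rng.normal(size=(3, n))
xi, delta, mu = rng.uniform(0.1, 1.0, size=(3, n))

# Case 3 (b >= 0, c < 0), written out explicitly as in the formula above.
a, b, c = 0.5, 1.2, -0.7
case3 = np.sum((y - mu - a - b * (x - xi) - c * (z + delta)) ** 2
               + (y - a - b * x - c * z) ** 2
               + (y + mu - a - b * (x + xi) - c * (z - delta)) ** 2)
print(np.isclose(objective(a, b, c, x, xi, z, delta, y, mu), case3))  # True
```

Only the signs of b and c decide which extremes are paired, so a single function covers all four cases.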

Let us consider case 3 as an example. In matrix terms, the expression to be minimized is

$$(y^L - X^{LR}\beta)'(y^L - X^{LR}\beta) + (y - X\beta)'(y - X\beta) + (y^R - X^{RL}\beta)'(y^R - X^{RL}\beta) \qquad (2)$$

where:
- $y^L$ and $y^R$ are n-dimensional vectors whose elements are the lower extremes $y_i^L = y_i - \mu_i$ and the upper extremes $y_i^R = y_i + \mu_i$, respectively;
- $X^{LR}$ is the n×3 matrix formed by the vectors $\mathbf 1$, $x^L = [x_i^L = x_i - \xi_i]$ and $z^R = [z_i^R = z_i + \delta_i]$;
- $X^{RL}$ is the n×3 matrix (analogous to $X^{LR}$) formed by the vectors $\mathbf 1$, $x^R$, $z^L$;
- y is the n-dimensional vector of centres $y_i$;
- X is the n×3 matrix formed by the vectors $\mathbf 1$, $x^C = [x_i]$, $z^C = [z_i]$;
- β is the vector $(a, b, c)'$.

Similarly to the OLS estimation procedure, the optimization problem admits a unique finite solution if $[(X^{LR})'X^{LR} + X'X + (X^{RL})'X^{RL}]$ is invertible and the Hessian matrix $[2(X^{LR})'X^{LR} + 2X'X + 2(X^{RL})'X^{RL}]$ is positive definite. The matrix expression of the fuzzy least square (FLS) estimator is given by

$$\beta = \big[(X^{LR})'X^{LR} + X'X + (X^{RL})'X^{RL}\big]^{-1}\big[(X^{LR})'y^L + X'y + (X^{RL})'y^R\big].$$

IJCCI 2009 - International Joint Conference on Computational Intelligence


It is worth noticing that the FLS estimator would equal the OLS one if the observed variables were crisp: with zero spreads, $X^{LR} = X^{RL} = X$ and $y^L = y^R = y$, so that β reduces to $(X'X)^{-1}X'y$. The solution found, $\beta^* = (a^*, b^*, c^*)$, is admissible if the signs of the regression coefficients are coherent with the basic assumptions ($b^* \ge 0$ and $c^* < 0$).
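A minimal NumPy sketch of the case-3 FLS estimator, assuming symmetric spreads and randomly generated (hypothetical) data. It also illustrates the two remarks above: with crisp data the estimate coincides with OLS, and the case-3 solution must be checked for admissibility. The function name `fls_case3` is illustrative.

```python
import numpy as np


def fls_case3(x, xi, z, delta, y, mu):
    """FLS estimate of beta = (a, b, c)' under the case-3 design (b >= 0, c < 0).

    Fuzzy observations use symmetric spreads: x +/- xi, z +/- delta, y +/- mu.
    """
    one = np.ones_like(x)
    X_LR = np.column_stack([one, x - xi, z + delta])  # vectors 1, x^L, z^R
    X_C = np.column_stack([one, x, z])                # vectors 1, x, z (centres)
    X_RL = np.column_stack([one, x + xi, z - delta])  # vectors 1, x^R, z^L
    A = X_LR.T @ X_LR + X_C.T @ X_C + X_RL.T @ X_RL
    rhs = X_LR.T @ (y - mu) + X_C.T @ y + X_RL.T @ (y + mu)
    # Solve the normal equations rather than forming the inverse explicitly.
    return np.linalg.solve(A, rhs)


rng = np.random.default_rng(1)
n = 30
x, z = rng.normal(size=(2, n))
y = 1.0 + 2.0 * x - 0.5 * z + 0.1 * rng.normal(size=n)

# Crisp data (all spreads zero): the FLS estimate coincides with OLS.
zeros = np.zeros(n)
beta_crisp = fls_case3(x, zeros, z, zeros, y, zeros)
beta_ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), x, z]), y, rcond=None)
print(np.allclose(beta_crisp, beta_ols))  # True

# Fuzzy data: check admissibility of the case-3 solution (b* >= 0, c* < 0).
xi, delta, mu = rng.uniform(0.1, 0.5, size=(3, n))
a_s, b_s, c_s = fls_case3(x, xi, z, delta, y, mu)
print("admissible:", b_s >= 0 and c_s < 0)
```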

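In the remaining three cases the expression (2) to be minimized is obtained by replacing $X^{LR}$ and $X^{RL}$ with $X^{LL}$ and $X^{RR}$ (case 1), with $X^{RL}$ and $X^{LR}$ (case 2), and with $X^{RR}$ and $X^{LL}$ (case 4), respectively, where, for instance, $X^{LL}$ is formed by the vectors $\mathbf 1$, $x^L$, $z^L$.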

The optimal solution is the admissible one that minimizes (1) among all the cases.

Note that the generalization of this procedure to the case of several independent variables is immediate, and that the number of solutions to be analysed in order to identify the optimal one grows exponentially with the number of variables considered. For example, if the model includes k independent variables, $2^k$ possible cases, deriving from the combinations of the signs of the regression coefficients, must be taken into account.
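The whole procedure for k fuzzy regressors can be sketched as an enumeration of the $2^k$ sign cases: for each case the corresponding normal equations are solved, inadmissible solutions are discarded and the admissible one with the smallest value of (1) is kept. This is a hypothetical sketch that assumes symmetric spreads and invertible normal matrices; the name `fls_fit` is illustrative and is not the authors’ code.

```python
import itertools

import numpy as np


def fls_fit(Xc, S, y, mu):
    """Fuzzy least squares with k fuzzy regressors, trying all 2**k sign cases.

    Xc, S : (n, k) arrays of regressor centres and (symmetric) spreads.
    y, mu : (n,) arrays of dependent-variable centres and spreads.
    Returns (beta, loss) for the best admissible case, or None if none is admissible.
    """
    n, k = Xc.shape
    one = np.ones((n, 1))
    yl, yr = y - mu, y + mu
    XC = np.hstack([one, Xc])
    best = None
    for signs in itertools.product((1, -1), repeat=k):  # +1: coef >= 0, -1: coef < 0
        s = np.array(signs)
        # Left extreme of the fit uses x^L for a non-negative coefficient, x^R otherwise.
        XL = np.hstack([one, Xc - s * S])
        XR = np.hstack([one, Xc + s * S])
        A = XL.T @ XL + XC.T @ XC + XR.T @ XR
        beta = np.linalg.solve(A, XL.T @ yl + XC.T @ y + XR.T @ yr)
        coef = beta[1:]
        if not np.all(np.where(s > 0, coef >= 0, coef < 0)):
            continue  # signs incoherent with this case: not admissible
        loss = float(np.sum((yl - XL @ beta) ** 2 + (y - XC @ beta) ** 2
                            + (yr - XR @ beta) ** 2))
        if best is None or loss < best[1]:
            best = (beta, loss)
    return best


# Hypothetical data with two fuzzy regressors.
rng = np.random.default_rng(2)
n, k = 40, 2
Xc = rng.normal(size=(n, k))
S = rng.uniform(0.1, 0.5, size=(n, k))
y = 1.0 + 1.5 * Xc[:, 0] - 2.0 * Xc[:, 1] + 0.1 * rng.normal(size=n)
mu = rng.uniform(0.1, 0.5, size=n)
result = fls_fit(Xc, S, y, mu)
if result is not None:
    print("beta:", result[0], "loss:", result[1])
```

The loop makes the exponential cost explicit: each extra regressor doubles the number of linear systems to solve.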

4 DECOMPOSITION OF TOTAL DEVIANCE OF THE DEPENDENT VARIABLE

In this section two theoretical results will be demonstrated: the first concerns the inequality between the theoretical and empirical values of the average fuzzy dependent variable, which in general need not coincide (unlike in the OLS estimation procedure for crisp variables); the second concerns the decomposition of the total deviance of the dependent variable, which involves two further additive components besides the regression and the residual deviances.

Some preliminary results are needed for this purpose. Considering again case 3 as an example, the first-order conditions for the minimum of (2) can be written as

$$\big[(X^{LR})'X^{LR} + X'X + (X^{RL})'X^{RL}\big]\beta = (X^{LR})'y^L + X'y + (X^{RL})'y^R,$$

from which we obtain the following system of equations:

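$$\begin{cases}
3na + b\sum_i (x_i^L + x_i + x_i^R) + c\sum_i (z_i^R + z_i + z_i^L) = \sum_i (y_i^L + y_i + y_i^R)\\
a\sum_i (x_i^L + x_i + x_i^R) + b\sum_i \big((x_i^L)^2 + x_i^2 + (x_i^R)^2\big) + c\sum_i (x_i^L z_i^R + x_i z_i + x_i^R z_i^L) = \sum_i (x_i^L y_i^L + x_i y_i + x_i^R y_i^R)\\
a\sum_i (z_i^R + z_i + z_i^L) + b\sum_i (x_i^L z_i^R + x_i z_i + x_i^R z_i^L) + c\sum_i \big((z_i^R)^2 + z_i^2 + (z_i^L)^2\big) = \sum_i (z_i^R y_i^L + z_i y_i + z_i^L y_i^R)
\end{cases}$$

$$\Leftrightarrow\quad
\begin{cases}
\sum_i (a + bx_i^L + cz_i^R) + \sum_i (a + bx_i + cz_i) + \sum_i (a + bx_i^R + cz_i^L) = \sum_i y_i^L + \sum_i y_i + \sum_i y_i^R\\
\sum_i (a + bx_i^L + cz_i^R)\,x_i^L + \sum_i (a + bx_i + cz_i)\,x_i + \sum_i (a + bx_i^R + cz_i^L)\,x_i^R = \sum_i x_i^L y_i^L + \sum_i x_i y_i + \sum_i x_i^R y_i^R\\
\sum_i (a + bx_i^L + cz_i^R)\,z_i^R + \sum_i (a + bx_i + cz_i)\,z_i + \sum_i (a + bx_i^R + cz_i^L)\,z_i^L = \sum_i z_i^R y_i^L + \sum_i z_i y_i + \sum_i z_i^L y_i^R
\end{cases}$$

$$\Leftrightarrow\quad
\begin{cases}
\sum_i y_i^{L*} + \sum_i y_i^{*} + \sum_i y_i^{R*} = \sum_i y_i^L + \sum_i y_i + \sum_i y_i^R & (3)\\
\sum_i x_i^L y_i^{L*} + \sum_i x_i y_i^{*} + \sum_i x_i^R y_i^{R*} = \sum_i x_i^L y_i^L + \sum_i x_i y_i + \sum_i x_i^R y_i^R & (4)\\
\sum_i z_i^R y_i^{L*} + \sum_i z_i y_i^{*} + \sum_i z_i^L y_i^{R*} = \sum_i z_i^R y_i^L + \sum_i z_i y_i + \sum_i z_i^L y_i^R & (5)
\end{cases}$$

where all sums run over i = 1, ..., n, and $y_i^{L*} = a + bx_i^L + cz_i^R$, $y_i^* = a + bx_i + cz_i$ and $y_i^{R*} = a + bx_i^R + cz_i^L$ are the theoretical values of the lower extreme, the centre and the upper extreme of the dependent variable.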

Equation (3) shows that the total sum of the lower extremes, centres and upper extremes of the theoretical values of the dependent variable coincides with the same amount computed on the empirical values. This equation does not, however, allow us to say that the theoretical and empirical values of the average fuzzy dependent variable coincide, because it constrains only the sum of the three components and not each component separately.
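A small numerical check on hypothetical random data, fitted with the case-3 design, illustrates this point: equation (3) holds for the three components taken together, while the per-component differences between theoretical and empirical averages are generally non-zero. All names and data below are assumptions for illustration.

```python
import numpy as np

# Hypothetical fuzzy data with symmetric spreads (names follow the case-3 notation).
rng = np.random.default_rng(3)
n = 25
x, z, y = rng.normal(size=(3, n))
xi, delta = rng.uniform(0.1, 1.0, size=(2, n))
mu = rng.uniform(0.5, 2.0, size=n)

one = np.ones(n)
X_LR = np.column_stack([one, x - xi, z + delta])  # 1, x^L, z^R
X_C = np.column_stack([one, x, z])                # 1, x, z
X_RL = np.column_stack([one, x + xi, z - delta])  # 1, x^R, z^L
yl, yr = y - mu, y + mu
A = X_LR.T @ X_LR + X_C.T @ X_C + X_RL.T @ X_RL
beta = np.linalg.solve(A, X_LR.T @ yl + X_C.T @ y + X_RL.T @ yr)
yl_hat, y_hat, yr_hat = X_LR @ beta, X_C @ beta, X_RL @ beta

# Equation (3): the totals agree once the three components are summed together...
print(np.isclose(yl_hat.sum() + y_hat.sum() + yr_hat.sum(), yl.sum() + y.sum() + yr.sum()))
# ...but each component's average is not constrained on its own.
print(yl_hat.mean() - yl.mean(), y_hat.mean() - y.mean(), yr_hat.mean() - yr.mean())
```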

Let us examine how the total deviance of Y can be decomposed according to Diamond’s metric:

$$\mathrm{Dev(Tot)} = \sum_{i=1}^{n}\big[(y_i^L - \bar y^L)^2 + (y_i - \bar y)^2 + (y_i^R - \bar y^R)^2\big].$$

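Adding and subtracting the corresponding theoretical value within each square and expanding all the squares, the total deviance can be expressed as

$$\begin{aligned}
\mathrm{Dev(Tot)} &= \sum_{i}\big[(y_i^L - y_i^{L*})^2 + (y_i^{L*} - \bar y^L)^2 + 2(y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + (y_i - y_i^{*})^2 + (y_i^{*} - \bar y)^2 + 2(y_i - y_i^{*})(y_i^{*} - \bar y)\\
&\qquad + (y_i^R - y_i^{R*})^2 + (y_i^{R*} - \bar y^R)^2 + 2(y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R)\big]\\
&= \sum_{i}\big[(y_i^L - y_i^{L*})^2 + (y_i - y_i^{*})^2 + (y_i^R - y_i^{R*})^2\big] + \sum_{i}\big[(y_i^{L*} - \bar y^L)^2 + (y_i^{*} - \bar y)^2 + (y_i^{R*} - \bar y^R)^2\big]\\
&\qquad + 2\sum_{i}(y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + 2\sum_{i}(y_i - y_i^{*})(y_i^{*} - \bar y) + 2\sum_{i}(y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R).
\end{aligned}$$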

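Adding and subtracting the theoretical average values $\bar y^{L*}$, $\bar y^{*}$ and $\bar y^{R*}$ of the lower extremes, of the centres and of the upper extremes of the dependent variable within each square of the second sum, and expanding all the squares, the previous expression becomes

$$\begin{aligned}
\mathrm{Dev(Tot)} &= \sum_i\big[(y_i^L - y_i^{L*})^2 + (y_i - y_i^*)^2 + (y_i^R - y_i^{R*})^2\big]\\
&\quad + \sum_i\big[(y_i^{L*} - \bar y^{L*} + \bar y^{L*} - \bar y^L)^2 + (y_i^* - \bar y^* + \bar y^* - \bar y)^2 + (y_i^{R*} - \bar y^{R*} + \bar y^{R*} - \bar y^R)^2\big]\\
&\quad + 2\sum_i(y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + 2\sum_i(y_i - y_i^*)(y_i^* - \bar y) + 2\sum_i(y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R)\\
&= \sum_i\big[(y_i^L - y_i^{L*})^2 + (y_i - y_i^*)^2 + (y_i^R - y_i^{R*})^2\big] + \sum_i\big[(y_i^{L*} - \bar y^{L*})^2 + (y_i^* - \bar y^*)^2 + (y_i^{R*} - \bar y^{R*})^2\big]\\
&\quad + n\big[(\bar y^{L*} - \bar y^L)^2 + (\bar y^* - \bar y)^2 + (\bar y^{R*} - \bar y^R)^2\big]\\
&\quad + 2\sum_i\big[(y_i^{L*} - \bar y^{L*})(\bar y^{L*} - \bar y^L) + (y_i^* - \bar y^*)(\bar y^* - \bar y) + (y_i^{R*} - \bar y^{R*})(\bar y^{R*} - \bar y^R)\big]\\
&\quad + 2\sum_i\big[(y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + (y_i - y_i^*)(y_i^* - \bar y) + (y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R)\big] \qquad (6)
\end{aligned}$$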

where


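$$\mathrm{Dev(Res)} = \sum_i\big[(y_i^L - y_i^{L*})^2 + (y_i - y_i^*)^2 + (y_i^R - y_i^{R*})^2\big]$$

represents the residual deviance,

$$\mathrm{Dev(Regr)} = \sum_i (y_i^{L*} - \bar y^{L*})^2 + \sum_i (y_i^* - \bar y^*)^2 + \sum_i (y_i^{R*} - \bar y^{R*})^2$$

represents the regression deviance, and

$$(\bar y^{L*} - \bar y^L)^2 + (\bar y^* - \bar y)^2 + (\bar y^{R*} - \bar y^R)^2 = d^2(\bar Y^*, \bar Y)$$

represents the squared distance between the theoretical and empirical average values of Y.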

In compact form, expression (6) can be written as

$$\mathrm{Dev(Tot)} = \mathrm{Dev(Res)} + \mathrm{Dev(Regr)} + n\,d^2(\bar Y^*, \bar Y) + \eta$$

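where

$$\eta = 2\sum_i\big[(y_i^{L*} - \bar y^{L*})(\bar y^{L*} - \bar y^L) + (y_i^* - \bar y^*)(\bar y^* - \bar y) + (y_i^{R*} - \bar y^{R*})(\bar y^{R*} - \bar y^R)\big] + 2\sum_i\big[(y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + (y_i - y_i^*)(y_i^* - \bar y) + (y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R)\big].$$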

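Since the sum of the deviations of each component from its average equals zero,

$$\begin{aligned}
&2\sum_i\big[(y_i^{L*} - \bar y^{L*})(\bar y^{L*} - \bar y^L) + (y_i^* - \bar y^*)(\bar y^* - \bar y) + (y_i^{R*} - \bar y^{R*})(\bar y^{R*} - \bar y^R)\big]\\
&\quad = 2\Big[(\bar y^{L*} - \bar y^L)\sum_i (y_i^{L*} - \bar y^{L*}) + (\bar y^* - \bar y)\sum_i (y_i^* - \bar y^*) + (\bar y^{R*} - \bar y^R)\sum_i (y_i^{R*} - \bar y^{R*})\Big] = 0
\end{aligned}$$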

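and the amount η reduces to

$$\begin{aligned}
\eta &= 2\sum_i (y_i^L - y_i^{L*})(y_i^{L*} - \bar y^L) + 2\sum_i (y_i - y_i^*)(y_i^* - \bar y) + 2\sum_i (y_i^R - y_i^{R*})(y_i^{R*} - \bar y^R)\\
&= 2\sum_i (y_i^L - y_i^{L*})\,y_i^{L*} - 2\sum_i (y_i^L - y_i^{L*})\,\bar y^L + 2\sum_i (y_i - y_i^*)\,y_i^* - 2\sum_i (y_i - y_i^*)\,\bar y\\
&\quad + 2\sum_i (y_i^R - y_i^{R*})\,y_i^{R*} - 2\sum_i (y_i^R - y_i^{R*})\,\bar y^R. \qquad (7)
\end{aligned}$$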

Moreover, since

$$y_i^{L*} = a + bx_i^L + cz_i^R, \qquad y_i^* = a + bx_i + cz_i, \qquad y_i^{R*} = a + bx_i^R + cz_i^L,$$

it also holds that

$$2\sum_i (y_i^L - y_i^{L*})\,y_i^{L*} + 2\sum_i (y_i - y_i^*)\,y_i^* + 2\sum_i (y_i^R - y_i^{R*})\,y_i^{R*} = 0.$$

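By replacing the theoretical values with their expressions in the latter equation, we obtain

$$\begin{aligned}
&2\Big[\sum_i (y_i^L - y_i^{L*})(a + bx_i^L + cz_i^R) + \sum_i (y_i - y_i^*)(a + bx_i + cz_i) + \sum_i (y_i^R - y_i^{R*})(a + bx_i^R + cz_i^L)\Big]\\
&\quad = 2\Big\{a\Big[\Big(\sum_i y_i^L + \sum_i y_i + \sum_i y_i^R\Big) - \Big(\sum_i y_i^{L*} + \sum_i y_i^* + \sum_i y_i^{R*}\Big)\Big]\\
&\qquad + b\Big[\Big(\sum_i x_i^L y_i^L + \sum_i x_i y_i + \sum_i x_i^R y_i^R\Big) - \Big(\sum_i x_i^L y_i^{L*} + \sum_i x_i y_i^* + \sum_i x_i^R y_i^{R*}\Big)\Big]\\
&\qquad + c\Big[\Big(\sum_i z_i^R y_i^L + \sum_i z_i y_i + \sum_i z_i^L y_i^R\Big) - \Big(\sum_i z_i^R y_i^{L*} + \sum_i z_i y_i^* + \sum_i z_i^L y_i^{R*}\Big)\Big]\Big\}
\end{aligned}$$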

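where

$$\Big(\sum_i y_i^L + \sum_i y_i + \sum_i y_i^R\Big) - \Big(\sum_i y_i^{L*} + \sum_i y_i^* + \sum_i y_i^{R*}\Big) = 0$$

by (3),

$$\Big(\sum_i x_i^L y_i^L + \sum_i x_i y_i + \sum_i x_i^R y_i^R\Big) - \Big(\sum_i x_i^L y_i^{L*} + \sum_i x_i y_i^* + \sum_i x_i^R y_i^{R*}\Big) = 0$$

by (4), and

$$\Big(\sum_i z_i^R y_i^L + \sum_i z_i y_i + \sum_i z_i^L y_i^R\Big) - \Big(\sum_i z_i^R y_i^{L*} + \sum_i z_i y_i^* + \sum_i z_i^L y_i^{R*}\Big) = 0$$

by (5).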

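Finally, expression (7) reduces to

$$\eta = -2\sum_i (y_i^L - y_i^{L*})\,\bar y^L - 2\sum_i (y_i - y_i^*)\,\bar y - 2\sum_i (y_i^R - y_i^{R*})\,\bar y^R = -2\Big(\bar y^L\sum_i e_i^L + \bar y\sum_i e_i + \bar y^R\sum_i e_i^R\Big),$$

where $e_i^L = y_i^L - y_i^{L*}$, $e_i = y_i - y_i^*$ and $e_i^R = y_i^R - y_i^{R*}$ denote the residuals.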
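The decomposition can be verified numerically. The sketch below, on hypothetical random data fitted with the case-3 design, computes each component and checks that Dev(Tot) = Dev(Res) + Dev(Regr) + n d² + η, using the reduced form of η obtained above. Variable names are illustrative.

```python
import numpy as np

# Hypothetical fuzzy data with symmetric spreads.
rng = np.random.default_rng(4)
n = 30
x, z, y = rng.normal(size=(3, n))
xi, delta = rng.uniform(0.1, 1.0, size=(2, n))
mu = rng.uniform(0.5, 2.0, size=n)

# Case-3 fit: solve the normal equations (3)-(5).
one = np.ones(n)
X_LR = np.column_stack([one, x - xi, z + delta])
X_C = np.column_stack([one, x, z])
X_RL = np.column_stack([one, x + xi, z - delta])
yl, yr = y - mu, y + mu
A = X_LR.T @ X_LR + X_C.T @ X_C + X_RL.T @ X_RL
beta = np.linalg.solve(A, X_LR.T @ yl + X_C.T @ y + X_RL.T @ yr)

obs = (yl, y, yr)                            # empirical lower extreme, centre, upper extreme
fit = (X_LR @ beta, X_C @ beta, X_RL @ beta)  # theoretical counterparts

dev_tot = sum(np.sum((o - o.mean()) ** 2) for o in obs)
dev_res = sum(np.sum((o - f) ** 2) for o, f in zip(obs, fit))
dev_regr = sum(np.sum((f - f.mean()) ** 2) for f in fit)
n_d2 = n * sum((f.mean() - o.mean()) ** 2 for o, f in zip(obs, fit))
eta = -2 * sum(o.mean() * np.sum(o - f) for o, f in zip(obs, fit))  # reduced form

print(np.isclose(dev_tot, dev_res + dev_regr + n_d2 + eta))  # True
print("n*d^2 =", n_d2, "eta =", eta)
```

Unlike crisp OLS, both n·d² and η are in general non-zero here, which is exactly the point of the decomposition.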

Note that, if the residual deviance equals zero, η and $d^2(\bar Y^*, \bar Y)$ also equal zero, because the theoretical and empirical values of Y then coincide for each observation, and so do their averages.

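Therefore:

- if the regression deviance equals zero, the model has no forecasting ability, because the sum of the components of the i-th estimated fuzzy value equals the sum of the sample average components (i = 1, ..., n). Indeed, if Dev(Regr) = 0, each theoretical component is constant across units ($y_i^{L*} = \bar y^{L*}$, $y_i^* = \bar y^*$, $y_i^{R*} = \bar y^{R*}$), so that, for each i, equation (3) gives
$$\sum_j y_j^{L*} + \sum_j y_j^* + \sum_j y_j^{R*} = \sum_j y_j^L + \sum_j y_j + \sum_j y_j^R \;\Rightarrow\; n y_i^{L*} + n y_i^* + n y_i^{R*} = n\bar y^L + n\bar y + n\bar y^R \;\Rightarrow\; y_i^{L*} + y_i^* + y_i^{R*} = \bar y^L + \bar y + \bar y^R;$$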

- if the residual deviance equals zero, the relationship between the dependent variable and the independent ones is exactly represented by the estimated model. In this case, the total deviance is entirely explained by the regression deviance.

As usual, the larger the regression deviance (and the smaller the residual deviance), the better the model fits the data.

5 CONCLUSIONS

In this work, starting from a multivariate generalization of the Fuzzy Least Square Regression, we have decomposed the total deviance of the dependent variable according to the metric proposed by Diamond (1988). In particular, we have obtained the expressions of two additional components of variability, besides the regression deviance and the residual one, which arise because the theoretical and empirical values of the average fuzzy dependent variable need not coincide (unlike in the OLS estimation procedure for crisp variables).

REFERENCES

Campobasso, F., Fanizzi, A., Tarantini, M., 2008. Fuzzy Least Square Regression. Annals of Department of Statistical Sciences, University of Bari, Italy, 229-243.

Diamond, P. M., 1988. Fuzzy least squares. Information Sciences, 46:141-157.

Kao, C., Chyu, C. L., 2003. Least-squares estimates in fuzzy regression analysis. European Journal of Operational Research, 148:426-435.

Takemura, K., 2005. Fuzzy least squares regression analysis for social judgment study. Journal of Advanced Computational Intelligence and Intelligent Informatics, 9(5):461-466.
