SMART RECOGNITION SYSTEM FOR THE ALPHANUMERIC

Content in Car License Plates

A. Akoum, B. Daya

Lebanese University, Institute of Technology, P.O.B. 813, Saida, Lebanon

P. Chauvet

Institut de Mathématiques Appliquées, UCO, CREAM/IRFA, 3, place André-Leroy

BP10808 – 49008 Angers - France

Keywords: License plate, Image processing, Segmentation, Extraction, Character recognition, Artificial neural network.

Abstract: A license plate recognition system automatically recognizes a license plate number from an image captured by an imaging device. Such a system is useful in many settings: parking lots, private and public entrances, border control, and theft and vandalism control. In this paper we design such a system. First, we separate each character of the license plate using image processing tools. Then we build a classifier, using a training set of characters extracted from approximately 350 license plates. Our approach identifies a vehicle by recognizing its license plate with two different types of neural networks: Hopfield and the multilayer perceptron (MLP). A comparison of the results shows that the system recognizes license plates successfully.

1 INTRODUCTION

Optical character recognition has been widely investigated in recent years within the context of pattern recognition (Swartz, 1999). The broad interest lies mostly in the diversity and multitude of problems that may be solved (for different language sets), and in the ability to integrate advanced machine intelligence techniques for that purpose; as a result, a number of applications have appeared (Park, 2000; Omachi, 2000).

The steps involved in license plate recognition are acquisition, candidate region extraction, segmentation, and recognition. There is a large body of literature in this area. Some of the related work is as follows. Hontani (2001) proposed a method for extracting characters without prior knowledge of their position and size. Cowell (2002) discussed the recognition of individual Arabic and Latin characters; their approach identifies a character from the number of black pixel rows and columns it contains, comparing those values to a set of templates or signatures in a database. Yu (2000) used template matching. In the proposed system, a high-resolution digital camera is used for image acquisition.

Intelligent visual systems are increasingly in demand for industrial and private applications: biometrics, robot control, assistance for people with disabilities, and virtual games. They draw on the latest scientific advances in computer vision (Daya, 2006), machine learning, and pattern recognition (Prevost, 2005).

The present work examines the recognition and identification, in digital images, of the alphanumeric content of car license plates. The images are obtained from a large database in which variations in light intensity are common and small translations and/or rotations are permitted. Our approach identifies a vehicle by recognizing its license plate using two processes: the first extracts the license plate block from the initial image containing the vehicle, and the second extracts the characters from the license plate image. The last step is to recognize the license plate characters and identify the vehicle. For this, we used two different neural networks with 42x24 neurons, the dimension of each character. The network must memorize all the training data (36 characters). To validate the network, we built a program that reads the sequence of characters, splits each character, resizes it, and finally displays the result in a Notepad editor.

Akoum A., Daya B. and Chauvet P. (2009).

SMART RECOGNITION SYSTEM FOR THE ALPHANUMERIC - Content in Car License Plates.

In Proceedings of the International Joint Conference on Computational Intelligence, pages 565-568

DOI: 10.5220/0002321805650568

Copyright © SciTePress

The rest of the paper is organized as follows. Section 2 presents the real dataset used in our experiments. Section 3 describes our algorithm, which extracts the characters from the license plate. Section 4 gives the experimental results for character recognition using two types of neural network architecture. Section 5 contains our conclusions.

2 DATABASES

The database (Base Images with License) contains good-quality images (high resolution: 1280x960 pixels, resized to 120x180 pixels) of vehicles seen from the front, at varying distances, parked either in the street or in a car park, with negligible tilt.

The characteristics of these images limit the use of certain methods. In addition, the images are grayscale, which rules out methods based on color spaces.

Figure 1: Some examples from the training database.

3 LICENSE PLATE CHARACTER EXTRACTION

Our algorithm is based on the fact that a text area is characterized by strong variation between gray levels, caused by the transitions from text to background and vice versa (see Fig. 1). By locating all the segments marked by this strong variation, keeping those that are crossed by the axis of symmetry of the vehicle found in the preceding stage, and grouping them, we obtain blocks. We then apply certain constraints to these blocks (area, width, height, width/height ratio, ...) in order to recover the candidate text areas, i.e. the areas that may be the number plate of the vehicle in the image.

Figure 2: Selection of the license plate.

Figure 3: Extraction of the license plate.

We binarize each block, then calculate the ratio between the number of white pixels and the number of black pixels (minimum/maximum). This ratio corresponds to the proportion of text to background, which must be higher than 0.15 (the text occupies more than 15% of the block).
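The ratio test described above can be sketched in a few lines of Python. This is only an illustration, not the authors' MATLAB code: the function names, the fixed binarization threshold of 128, and the list-of-rows image representation are all assumptions made for the example. Only the min/max ratio and the 0.15 limit come from the text.

```python
def binarize(gray, threshold=128):
    """Map a grayscale block (rows of 0-255 ints) to 0 (black) / 1 (white).

    The threshold value is an assumption; the paper does not state one.
    """
    return [[1 if px >= threshold else 0 for px in row] for row in gray]


def text_ratio(binary):
    """Ratio of the rarer pixel class to the more common one (minimum/maximum)."""
    white = sum(sum(row) for row in binary)
    total = sum(len(row) for row in binary)
    black = total - white
    if max(white, black) == 0:
        return 0.0
    return min(white, black) / max(white, black)


def is_plate_candidate(gray, min_ratio=0.15, threshold=128):
    """Keep a block only if the text/background ratio exceeds min_ratio."""
    return text_ratio(binarize(gray, threshold)) > min_ratio


# Toy block: 4 dark pixels on a light 4x5 background.
block = [[200] * 5 for _ in range(4)]
for r, c in [(1, 1), (1, 3), (2, 1), (2, 3)]:
    block[r][c] = 10
print(text_ratio(binarize(block)))  # 4 / 16
print(is_plate_candidate(block))
```

Taking the minimum over the maximum makes the test independent of whether the plate has dark text on a light background or the reverse.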

First, the grayscale plate block is converted to binary, and we construct a matrix of the same size as the detected block. We then build a histogram that shows the black and white variations of the characters.

To filter the noise, we proceed as follows: we calculate the sum of the matrix column by column, then we calculate min_sumbc and max_sumbc, the minimum and the maximum of the black and white variations detected in the plate. All variations less than 0.08 * max_sumbc are considered noise. They are removed, which facilitates the cutting of characters.
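A minimal sketch of this noise filter follows. It assumes the binary matrix stores text pixels as 1 and background as 0, so that a column sum counts text pixels, and it assumes that "removing" a noisy column means setting its sum to zero; neither detail is stated in the paper. The names column_sums and suppress_noise are hypothetical, while max_sumbc and the 0.08 factor come from the text.

```python
def column_sums(binary):
    """Sum a 0/1 matrix column by column (assumes text pixels are 1)."""
    return [sum(col) for col in zip(*binary)]


def suppress_noise(sums, factor=0.08):
    """Zero every column whose sum is below factor * max_sumbc."""
    max_sumbc = max(sums)
    return [0 if s < factor * max_sumbc else s for s in sums]


# Toy profile: two isolated noise pixels between real character columns.
profile = [0, 1, 20, 25, 0, 1, 18]
print(suppress_noise(profile))
```

With max_sumbc = 25 the cut-off is 2.0, so the two columns containing a single pixel are cleared and the gaps between characters become clean.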

Figure 4: Histogram showing the black and white variation of the characters. The characters are separated by vertical lines placed at the completely black columns.

To delimit each character, we detect the areas with minimum variation (equal to min_sumbc). The first variation greater than the minimum value indicates the beginning of a character, and the next return to the minimum indicates its end. We then construct a matrix for each detected character.
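The scan for character boundaries can be sketched as a single pass over the column profile. This is an illustrative reading of the paragraph above, not the authors' implementation: split_characters and crop_columns are hypothetical names, and the profile is assumed to be a plain list of column sums.

```python
def split_characters(sums):
    """Return (start, end) column ranges (end exclusive) of runs above min_sumbc."""
    min_sumbc = min(sums)
    segments, start = [], None
    for i, s in enumerate(sums):
        if s > min_sumbc and start is None:
            start = i                      # first rise above the minimum
        elif s <= min_sumbc and start is not None:
            segments.append((start, i))    # back at the minimum: character ends
            start = None
    if start is not None:                  # character touching the right edge
        segments.append((start, len(sums)))
    return segments


def crop_columns(matrix, start, end):
    """Build the sub-matrix of one character from its column range."""
    return [row[start:end] for row in matrix]


profile = [0, 0, 20, 25, 0, 0, 18]
print(split_characters(profile))
```

Each returned range can be passed to crop_columns to obtain the matrix of one character.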

The white margins at the top of the detected characters (their headers) are considered noise and must be cropped. We therefore rotate each character by 90 degrees and apply the same procedure as before to remove these white areas.

Figure 5: Extraction of one character.

A second filter can be applied at this stage to eliminate small blocks, using a process similar to the column-wise extraction by black and white variations.

Finally, we rotate each image three more times (by 90 degrees each) to return it to its normal orientation. We then convert the text to black and change the dimensions of each extracted character to fit our recognition system (Hopfield and MLP neural networks).
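The rotate, trim, rotate-back sequence and the final resizing can be sketched as follows. Several details are assumptions for illustration only: text pixels are stored as 1, rotation is clockwise, and the resize uses nearest-neighbour sampling (the paper does not name the interpolation method). All function names are hypothetical.

```python
def rotate90(matrix):
    """Rotate a matrix 90 degrees clockwise."""
    return [list(row) for row in zip(*matrix[::-1])]


def trim_blank_columns(matrix):
    """Drop leading and trailing columns that contain no text pixel."""
    sums = [sum(col) for col in zip(*matrix)]
    used = [i for i, s in enumerate(sums) if s > 0]
    if not used:
        return matrix
    return [row[used[0]:used[-1] + 1] for row in matrix]


def trim_rows_via_rotation(char):
    """Rotate once, trim columns, then rotate three more times to restore orientation."""
    rotated = trim_blank_columns(rotate90(char))
    for _ in range(3):
        rotated = rotate90(rotated)
    return rotated


def resize_nearest(matrix, rows, cols):
    """Nearest-neighbour resize to rows x cols (interpolation choice is an assumption)."""
    src_rows, src_cols = len(matrix), len(matrix[0])
    return [[matrix[r * src_rows // rows][c * src_cols // cols]
             for c in range(cols)] for r in range(rows)]


char = [[0, 0, 0],
        [1, 0, 1],
        [0, 1, 0],
        [0, 0, 0]]
trimmed = trim_rows_via_rotation(char)   # blank top and bottom rows removed
scaled = resize_nearest(trimmed, 42, 24)  # size used by the 1008-neuron networks
print(trimmed, len(scaled), len(scaled[0]))
```

Rotating turns blank rows into blank columns, so the column-based routine can be reused unchanged; four quarter turns in total bring the character back to its original orientation.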

4 CHARACTER RECOGNITION USING OUR NEURAL APPROACH

The character sequence of a license plate uniquely identifies the vehicle. We propose to use artificial neural networks to recognize license plate characters, taking advantage of their ability to act as an associative memory. Compared with existing correlation and statistical template techniques (Kroese, 1996), neural networks have the advantage of being robust to noise and to some changes in the position of characters on the license plate.

Our approach identifies a vehicle by recognizing its license plate using Hopfield networks with 42x24 neurons, the dimension of each character. The network must memorize all the training data (36 characters). To validate the network, we built a program that reads the sequence of characters, cuts out each character, resizes it, and writes the result to a Notepad editor. A comparison with an MLP network is useful for evaluating the performance of each network.

For this analysis, dedicated code was developed in MATLAB. Our software can do the following:

1) Load a validation pattern.

2) Choose an architecture for solving the character recognition problem from these 6 architectures:

"HOP112": Hopfield architecture, for pictures of 14x8 pixels (forming a vector of length 112).

"HOP252": Hopfield, for 21x12 pixels.

"HOP1008": Hopfield, for 42x24 pixels.

"MLP112": Multi Layer Perceptron architecture, for pictures of 14x8 pixels.

"MLP252": MLP, for 21x12 pixels.

"MLP1008": MLP, for 42x24 pixels.

3) Calculate the processing time of validation (important for “real applications”).
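The relation between the architecture names and the picture sizes is simple arithmetic: the number in each name is the length of the flattened picture. A small sketch, in which the dictionary and helper names are hypothetical and only the six names and sizes come from the list above:

```python
# Picture size (rows, cols) for each architecture named in the text.
ARCHITECTURES = {
    "HOP112": (14, 8), "HOP252": (21, 12), "HOP1008": (42, 24),
    "MLP112": (14, 8), "MLP252": (21, 12), "MLP1008": (42, 24),
}


def input_length(name):
    """Length of the input vector: rows * cols of the character picture."""
    rows, cols = ARCHITECTURES[name]
    return rows * cols


def flatten(image):
    """Turn a rows x cols picture into one vector, row by row."""
    return [px for row in image for px in row]


for name in ("HOP112", "HOP252", "HOP1008"):
    print(name, input_length(name))
```

Row-by-row flattening is an assumption; any fixed pixel order works as long as training and validation use the same one.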

Figure 6: The graphical interface, which recognizes the plate number and displays the result as text.

For our study, we used 3 kinds of Hopfield networks and 3 kinds of MLP networks, the latter always with one hidden layer. For the MLPs, we train one MLP per character, so 36 MLPs perform the recognition. For Hopfield, a single network memorizes all the characters.
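To make the idea of a single network that memorizes all characters concrete, here is a toy Hopfield associative memory in plain Python. It is a sketch under stated assumptions, not the authors' MATLAB implementation: it uses the classical Hebbian storage rule and asynchronous sign updates, patterns are bipolar (+1/-1) vectors, and the 8-element patterns stand in for the 112, 252 or 1008 pixel characters. Returning "?" when the final state is not a stored pattern mirrors the behaviour the paper reports for unrecognized symbols.

```python
def train_hopfield(patterns):
    """Hebbian weights for bipolar (+1/-1) patterns, with a zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, state, max_sweeps=20):
    """Update neurons one at a time until a sweep changes nothing (stable state)."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(wij * sj for wij, sj in zip(w[i], state))
            new = 1 if field >= 0 else -1
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


def classify(w, labelled, probe):
    """Return the label of the stored pattern reached, or '?' otherwise."""
    final = recall(w, probe)
    for label, pattern in labelled.items():
        if final == list(pattern):
            return label
    return "?"


# Two toy "characters" (hypothetical 8-pixel patterns).
stored = {"A": [1, 1, 1, 1, -1, -1, -1, -1],
          "B": [1, -1, 1, -1, 1, -1, 1, -1]}
weights = train_hopfield(list(stored.values()))
noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]        # "A" with one pixel flipped
print(classify(weights, stored, noisy_a))
print(classify(weights, stored, [-x for x in stored["A"]]))
```

The first probe is pulled back to the stored pattern for "A". The second is the inverse of "A", which is a stable state of the network but not one of the memorized characters, so the sketch reports "?".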

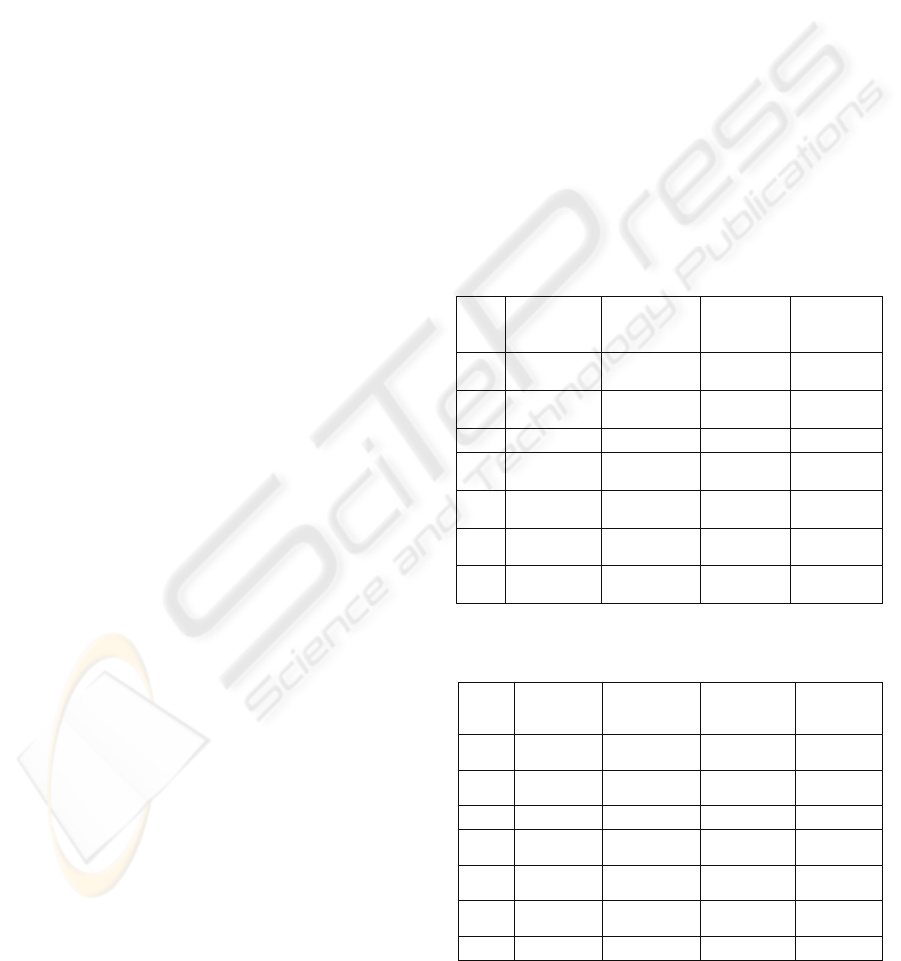

Table 1: Performance of each neural network architecture (multilayer perceptron and Hopfield).

| Neural network | Number of neurons | Total symbols | Total errors | Perf (%) |
|---|---|---|---|---|
| HOP | 1008 | 1130 | 144 | 87 |
| MLP | 1008 | 1130 | 400 | 64 |
| HOP | 252 | 1130 | 207 | 84 |
| MLP | 252 | 1130 | 255 | 80 |
| HOP | 112 | 1130 | 342 | 69 |
| MLP | 112 | 1130 | 355 | 68 |
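The Perf column can be related to the error counts with a short calculation. The formula below (share of symbols recognized without error) is an assumption, since the paper does not define Perf explicitly; the totals and error counts are those of Table 1.

```python
def performance(total_symbols, total_errors):
    """Percentage of symbols recognized correctly (assumed definition of Perf)."""
    return 100.0 * (total_symbols - total_errors) / total_symbols


# Error counts as printed in Table 1; every architecture saw 1130 symbols.
errors = {"HOP1008": 144, "MLP1008": 400, "HOP252": 207,
          "MLP252": 255, "HOP112": 342, "MLP112": 355}
for name, count in errors.items():
    print(name, round(performance(1130, count), 1))
```

Under this assumed definition, the 1008-neuron and 112-neuron rows reproduce the printed percentages after truncation (87, 64, 69, 68). The two 252-neuron rows come out near 81.7 and 77.4 rather than the printed 84 and 80, so either the definition differs or one of the printed figures contains a typo; the table values are kept as published.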

Table 1 shows the performance of each neural architecture for the six cases. Tables 2 and 3 (see appendix) show the recognition results for some of the patterns. The first column gives the file name of the plate image, the second column gives the plate number as read by eye, and columns 3 to 5 give the plate number recognized by each architecture. The last row gives the average processing time of each network.

In Hopfield recognition, when the network does not reach a known stable state, it outputs the symbol “?”. Hopfield networks achieved better OCR performance (87%) than MLPs. A drawback of Hopfield is the processing time for pictures of 42x24 pixels (90 seconds on average, versus only 3 seconds for pictures of 21x12 pixels). It can also be observed that "HOP1008" and "HOP252" do not differ appreciably in performance.

5 CONCLUSIONS

The purpose of this paper is to investigate the possibility of automatic recognition of vehicle license plates.

Our license plate recognition algorithm extracts the characters from the plate block and then identifies them using an artificial neural network. The experimental results show that the Hopfield network recognizes license plate characters correctly at a rate of 87%, compared with the weaker 80% of the best MLP architecture.

The proposed license plate recognition approach can be used by the police to detect speed violators, and deployed in parking areas, on highways and bridges, or in tunnels.

REFERENCES

J. Swartz, 1999. “Growing ‘Magic’ of Automatic

Identification”, IEEE Robotics & Automation

Magazine, 6(1), pp. 20-23.

Park et al, 2000. “OCR in a Hierarchical Feature Space”,

IEEE Transactions on Pattern Analysis and Machine

Intelligence, 22(4), pp. 400-407.

Omachi et al, 2000. Qualitative Adaptation of Subspace

Method for Character Recognition. Systems and

Computers in Japan, 31(12), pp. 1-10.

B. Kroese, 1996. An Introduction to Neural Networks,

Amsterdam, University of Amsterdam, 120 p.

J. Cowell, and F. Hussain, 2002. “A fast recognition

system for isolated Arabic characters”, Proceedings

Sixth International Conference on Information and

Visualisation, England, pp. 650-654.

H. Hontani, and T. Koga, 2001. “Character extraction method without prior knowledge on size and position information”, Proceedings of the IEEE International Vehicle Electronics Conference (IVEC’01). pp. 67-72.

C. Nieuwoudt, and R. van Heerden, 1996. “Automatic

number plate segmentation and recognition”, In

Seventh annual South African workshop on Pattern

Recognition, l April, pp. 88-93.

M. Yu, and Y.D. Kim, 2000. “An approach to Korean license plate recognition based on vertical edge matching”, IEEE International Conference on Systems, Man, and Cybernetics, vol. 4, pp. 2975-2980.

B. Daya & A. Ismail, 2006. A Neural Control System of a

Two Joints Robot for Visual conferencing. Neural

Processing Letters, Vol. 23, pp.289-303.

Prevost, L. and Oudot, L. and Moises, A. and Michel-

Sendis, C. and Milgram, M., 2005. Hybrid

generative/discriminative classifier for unconstrained

character recognition. Pattern Recognition Letters,

Special Issue on Artificial Neural Networks in Pattern

Recognition, pp. 1840-1848.

APPENDIX

Table 2: The recognition for some patterns with different numbers of neurons (Hopfield network).

| Seq | Real plate number (eye) | HOP1008 | HOP252 | HOP112 |
|---|---|---|---|---|
| 'p1' | '9640RD9' | '9640R094' | '9640R094' | '9640R?94' |
| 'p2' | '534DDW77' | '534DD?77' | '534DD?77' | '534DD?77' |
| 'p3' | '326TZ94' | '326TZ94' | '326TZ94' | '325TZ9?' |
| 'p4' | '6635YE93' | '66J5YES?' | 'B??5YE??' | 'B???YE??' |
| 'p5' | '3503RC94' | '3503RC94' | '35O3RC94' | '3503RC94' |
| 'p6' | '7874VT94' | '7874VT94' | '7874VT94' | '7874VT94' |
| Time | -- | 90 sec | 3 sec | 2 sec |

Table 3: The recognition for some patterns with different numbers of neurons (MLP network).

| Seq | Real plate number (eye) | MLP1008 | MLP252 | MLP112 |
|---|---|---|---|---|
| 'p1' | '9640RD94' | '964CR094' | '56409D94' | '2B40PD34' |
| 'p2' | '534DDW77' | '53CZD677' | '53CDD877' | '53WDD977' |
| 'p3' | '326TZ94' | '32SSZ8C' | '326T794' | '328TZ3C' |
| 'p4' | '6635YE93' | '8695YE8O' | 'BE3SYE98' | 'E535YEB3' |
| 'p5' | '3503RC94' | '35CO3C94' | '5503RC94' | '3503PC24' |
| 'p6' | '7874VT94' | 'F674VT94' | '7574V394' | '7S74VT34' |
| Time | -- | 25 sec | 3 sec | 2 sec |

IJCCI 2009 - International Joint Conference on Computational Intelligence
