RESEARCH AND DEVELOPMENT OF CONSCIOUS ROBOT

Mirror Image Cognition Experiments using Small Robots

Takashi Komatsu and Junichi Takeno

Robot Science Laboratory, Meiji University, 1-1-1 Higashimita, Tama-ku, Kawasaki-shi, Kanagawa, 214-8571, Japan

Keywords: Cognition and Behavior, Consciousness, Mirror image, Self aware, Neural Networks, Robotics.

Abstract: The authors are trying to transplant a function similar to human consciousness into a robot in order to elucidate the mystery of human consciousness. Although there is no universally accepted definition of consciousness, we believe, based on the knowledge we gained in fields such as brain science and philosophy in the course of our study, that consistency of cognition and behavior generates consciousness. Based on this idea, we have developed a module named MoNAD, which comprises recurrent neural networks and can be used to elucidate the phenomena of human consciousness. We focused on mirror image cognition and imitation behavior, which are said to be high-level functions of human consciousness, and conducted experiments in which the robot imitated the behavior of its mirror image. This paper reports the results of additional tests using a new type of robot and of basic experiments on discriminating a part of the self from another robot. We found that the results of our mirror image cognition experiments did not depend on the type of robot used; however, the time delay in transmitting control signals to the other robot was an important factor affecting our evaluation of the robot’s ability to discriminate the self from other robots.

1 INTRODUCTION

Human consciousness has traditionally been studied in philosophy, psychology, and brain science. In recent years, there has been a growing awareness that human consciousness is closely related to embodiment, and from this new perspective researchers have started to study consciousness using robots with bodies. As mentioned above, although there is no clear-cut definition of consciousness, we expect to gradually understand what human consciousness, the mind, and feelings are through our efforts to achieve the function of consciousness in a robot. Eventually, we aim to develop a robot that possesses high-level, human-like functions.

Believing that achieving self-consciousness is the most important goal, we decided to use a robot to simulate the phenomenon of being aware of one’s own image in a mirror, which is regarded as evidence of self-consciousness. As a first step, we studied several aspects of consciousness and of human imitation behavior. One relevant finding is the mirror neuron (Gallese, 1996), a neuron that fires both when an animal performs an action and when it observes the same action performed by another; the authors believe that the mirror neuron mechanism is related to imitation behavior. The authors further believe that the development of consciousness is closely related to imitation, as suggested by many studies on consciousness and imitation behavior, typified by the mimetic theory (Donald, 1991).

Based on this belief, we devised a conscious system built from the Module of Nerves for Advanced Dynamics (MoNAD). A robot equipped with this conscious system processes the data obtained from its sensors using the MoNADs and represents the states of the self and the other. The robot imitates the behavior of the other and cognizes the self using the information fed back from its own imitation behavior. Mirror image cognition, one of the functions of consciousness, is thus achieved through imitation behavior and cognition of the self. We have already conducted successful mirror image cognition experiments using a small commercial robot, the Khepera II (Takeno, 2003), (Takeno, 2005). Later, the robot’s feelings were incorporated into the conscious system as a subsystem (Shirakura, 2006), (Igarashi, 2007). In this study, we used new robots to conduct additional mirror image tests as well as new experiments evaluating the robot’s discrimination of the self from others. The mirror image cognition experiments were again successful, thanks to the emotion and feeling functions incorporated into the new conscious system.


Komatsu T. and Takeno J. (2010).

RESEARCH AND DEVELOPMENT OF CONSCIOUS ROBOT - Mirror Image Cognition Experiments using Small Robots.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Artificial Intelligence, pages 479-482

DOI: 10.5220/0002702504790482

Copyright © SciTePress

2 MONAD CONSCIOUS MODULE

The MoNAD conscious module is a computation

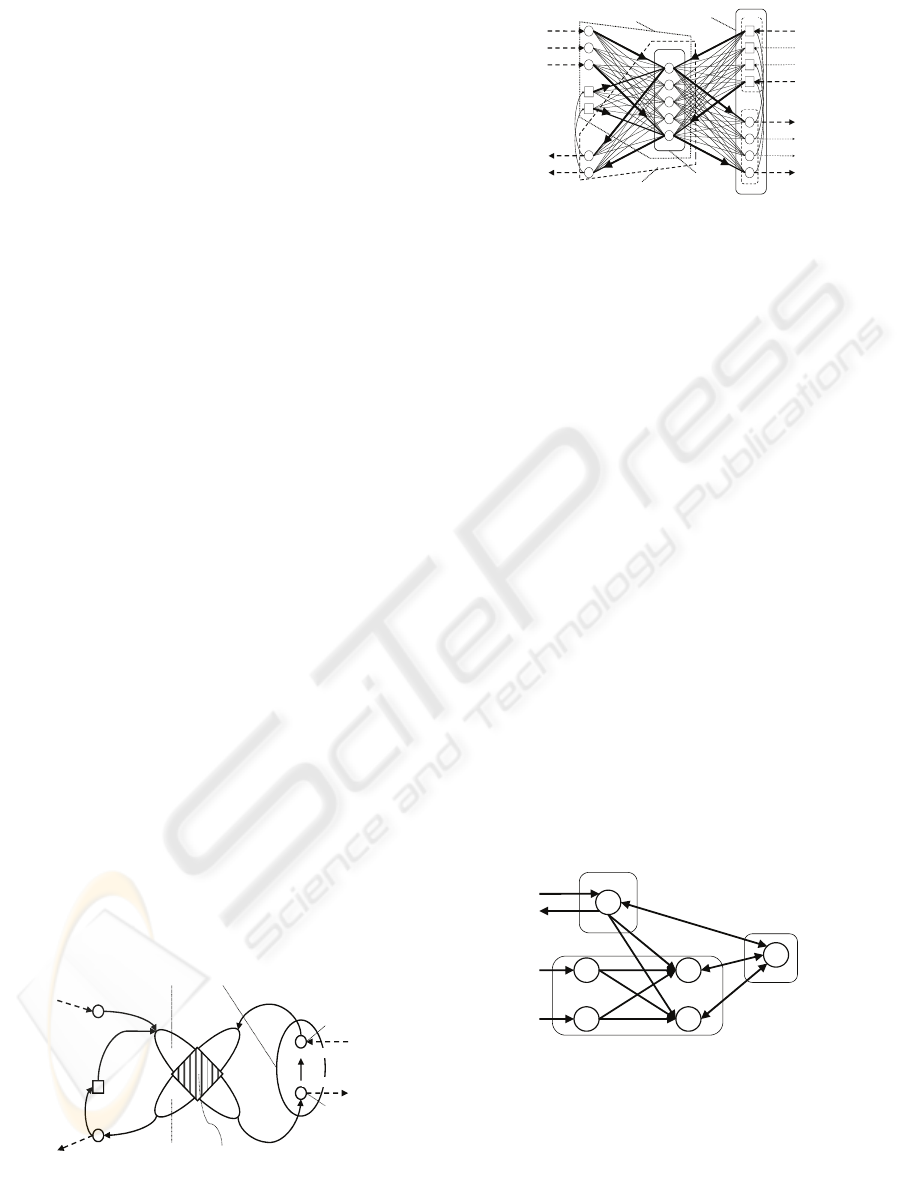

model using neural networks (Figure 1) (Figure 2).

Specifically, it consists of a cognition system (a),

behavior system (b), primitive representation (c),

symbolic representation (d), and two sets of inputs

and outputs. The cognition system (a) calculates the

current cognition using information p1 from external

sensors (or information from other conscious

modules), feedback information M’ from own

behavior, and cognition information p2 from one

step before. The behavior system (b) calculates the

behavior from input information (S), M’ and p2. The

primitive representation (c), where both the

information (a) and (b) coexist, is the central core of

the MoNAD that achieves consistency of cognition

and behavior. The symbolic representation (d) is the

layer where the information cognized by the

cognition system (a) is represented. There are two

types of representation: cognition representation

(RL) and behavior representation (BL). Cognition

representation (RL) is the representation currently

being cognized. RL information is constantly being

copied to the behavior representation (BL) and is

used for generating the next cognition, provided that

no other information is given to BL from other

conscious modules. RL and BL are terminals that

connect other MoNADs. They support exchange of

information with higher-level modules.
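To make the data flow of a single MoNAD concrete, the following is a minimal Python sketch, not the authors’ implementation. It assumes single-layer tanh networks with random, untrained weights for the cognition system (a) and the behavior system (b), and it assumes that the behavior system’s input S is the content of BL; the class name `MoNADSketch` and all helper names are hypothetical. What it illustrates is the recurrence described above: p2 and M’ are the module’s own cognition and behavior from the previous step, and RL is copied to BL unless a higher-level module supplies BL.

```python
import math
import random
from typing import List, Optional, Sequence

Vector = List[float]


def tanh_layer(weights: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    """Fully connected layer with tanh activation (bias omitted for brevity)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def random_matrix(rows: int, cols: int, rng: random.Random) -> List[Vector]:
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class MoNADSketch:
    """Hypothetical, simplified MoNAD with untrained random weights."""

    def __init__(self, n_p1: int, n_cog: int, n_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # (a) cognition system: [p1, M', p2] -> current cognition
        self.w_cog = random_matrix(n_cog, n_p1 + n_out + n_cog, rng)
        # (b) behavior system: [S, M', p2] -> behavior M (S assumed to be BL)
        self.w_beh = random_matrix(n_out, n_cog + n_out + n_cog, rng)
        self.p2: Vector = [0.0] * n_cog    # cognition from one step before
        self.m_fb: Vector = [0.0] * n_out  # M': feedback of own last behavior
        self.rl: Vector = [0.0] * n_cog    # cognition representation
        self.bl: Vector = [0.0] * n_cog    # behavior representation

    def step(self, p1: Vector, bl_from_higher: Optional[Vector] = None) -> Vector:
        """Run one cycle and return the behavior output M."""
        cognition = tanh_layer(self.w_cog, p1 + self.m_fb + self.p2)
        self.rl = cognition
        # RL is copied to BL unless a higher-level module writes to BL.
        self.bl = list(bl_from_higher) if bl_from_higher is not None else list(cognition)
        behavior = tanh_layer(self.w_beh, self.bl + self.m_fb + self.p2)
        # Close the loops: this step's outputs become next step's M' and p2.
        self.m_fb = behavior
        self.p2 = cognition
        return behavior


if __name__ == "__main__":
    monad = MoNADSketch(n_p1=3, n_cog=4, n_out=2)
    for t in range(3):
        m = monad.step([1.0, 0.0, 0.5])
        print(t, [round(v, 3) for v in m])
```

Because the weights are untrained, the printed outputs carry no meaning; the point is only where each signal enters and leaves the module.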

A robot can be embodied (that is, capable of acknowledging its own somatic sensation as information) by feeding the feedback information M’ from the output back into the MoNADs that make up the conscious system described in the next section. A high-level conscious function can be achieved by connecting multiple MoNADs. Each MoNAD has its own target orientation, which is taught to it in advance through supervised learning.
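The paper states only that each MoNAD is taught its target orientation through supervised learning. As a hedged illustration of what such teaching could look like, the sketch below trains a single linear layer with the delta (LMS) rule so that an observed action of the other robot maps to the same action for the self, i.e. an imitation orientation. The one-hot action encoding, the learning rate, and the function names are assumptions made for this example; the authors’ networks are recurrent and are not reproduced here.

```python
import random
from typing import List

ACTIONS = ("advance", "back", "stop")  # the three motions used in the experiments


def one_hot(action: str) -> List[float]:
    return [1.0 if a == action else 0.0 for a in ACTIONS]


def predict(weights: List[List[float]], x: List[float]) -> List[float]:
    """Linear layer: y = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def train_imitation(epochs: int = 200, lr: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Delta-rule training toward the target orientation 'do what the other does'."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    # Teaching pairs: observed action of the other -> desired own action.
    examples = [(one_hot(a), one_hot(a)) for a in ACTIONS]
    for _ in range(epochs):
        for x, target in examples:
            y = predict(weights, x)
            for i in range(n):
                error = target[i] - y[i]
                for j in range(n):
                    weights[i][j] += lr * error * x[j]
    return weights


def choose_action(weights: List[List[float]], observed: str) -> str:
    """Pick the own action with the highest output for the observed action."""
    y = predict(weights, one_hot(observed))
    return ACTIONS[max(range(len(ACTIONS)), key=lambda i: y[i])]


if __name__ == "__main__":
    w = train_imitation()
    for observed in ACTIONS:
        print(observed, "->", choose_action(w, observed))
```

With one-hot inputs each training pair updates only one column of the weight matrix, so the weights converge geometrically to the identity mapping; a MoNAD with a different target orientation would simply be trained on different teaching pairs.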

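Figure 1: MoNAD Concept Model. (Diagram: cognition system (a), behavior system (b), primitive representation (c) and symbolic representation (d); Input(S) and Input, Output(M) and Output; signals p1, p2, p3, p4 and M’; terminals RL and BL.)

Figure 2: MoNAD Circuitry. (Circuit diagram with the same components and labels as Figure 1.)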

3 CONSCIOUS SYSTEM

3.1 Structure of the Conscious System

The conscious system comprises multiple MoNADs organized into three subsystems: reason (e), emotion-feelings (f), and association (g) (Figure 3). The reason subsystem (e) is responsible for conducting and representing rational behavior toward the target orientation in response to external stimuli and somatic sensory information. The emotion-feelings subsystem (f) represents feelings in accordance with the emotional information generated by external stimuli and by changes in the state of the robot’s body. The association subsystem (g) integrates the rational and emotional thoughts. Living organisms are said to generate emotions in response to external and internal stimuli, and these emotions are deeply involved in decision-making and in the regulation of bodily functions. The authors therefore developed the emotion-feelings subsystem by combining MoNADs that represent fundamental emotions and feelings (Shirakura, 2006), (Igarashi, 2007).

Figure 3: Conscious System Performing Mirror Image Cognition. (Diagram: reason subsystem with MoNAD (e) Imitation Behavior; emotion-feeling subsystem (f) with MoNADs (h) Pain, (i) Solitude, (j) Pleasant and (k) Unpleasant; association MoNAD (g); two external inputs, one internal input, and an output.)

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

3.2 Development of Conscious System for Performing Mirror Image Cognition

To successfully develop a robot with self-awareness, the authors consider it necessary for the robot to achieve, using the conscious system, the phenomenon of being aware of its own image in a mirror. Six MoNADs were used in the conscious system in our experiments (Figure 3). MoNAD (e), a reason MoNAD, imitates the behavior of the image in the mirror. MoNADs (h), (i), (j), and (k) are emotion and feeling MoNADs. MoNAD (h) represents “Pain”, which the robot feels when it collides with the mirror. MoNAD (i) represents “Solitude”, which the robot senses when it moves too far away from the mirror and loses sight of its mirror image. MoNADs (j) and (k) are feeling MoNADs that represent “Pleasant” and “Unpleasant” feelings based on the information from MoNADs (h) and (i) and on whether the imitation was successful. MoNAD (g), an association MoNAD, integrates the imitation behavior information with the information from the feeling MoNADs.
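The conditions that drive the emotion and feeling MoNADs can be summarized in code. The sketch below is a hedged, rule-based stand-in for MoNADs (h) to (k): the real MoNADs are trained neural networks, whereas here “Pain” is simply the touch sensor, “Solitude” is the loss of the mirror image, and the two feelings are combined with max and complement rules. The `Percept` fields, the function name, and the combination rules are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Percept:
    touch: bool          # front touch sensor detects a collision with the mirror
    image_visible: bool  # the mirror image is still detected by the IR sensors
    imitation_ok: bool   # own last action matched the observed action of the image


def feeling_monads(p: Percept) -> Dict[str, float]:
    """Hypothetical rule-based stand-ins for MoNADs (h), (i), (j) and (k)."""
    pain = 1.0 if p.touch else 0.0              # (h) Pain
    solitude = 0.0 if p.image_visible else 1.0  # (i) Solitude
    failed = 0.0 if p.imitation_ok else 1.0     # imitation did not succeed
    unpleasant = max(pain, solitude, failed)    # (k) any negative cue
    pleasant = 1.0 - unpleasant                 # (j) only when no negative cue
    return {"pain": pain, "solitude": solitude,
            "pleasant": pleasant, "unpleasant": unpleasant}


if __name__ == "__main__":
    print(feeling_monads(Percept(touch=False, image_visible=True, imitation_ok=True)))
    print(feeling_monads(Percept(touch=True, image_visible=True, imitation_ok=True)))
```

Graded values from trained networks would replace these 0/1 outputs in a real system; the binary version only shows which cue feeds which MoNAD.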

External inputs to the conscious system include information on the state of the mirror image and information captured by the external sensors. Internal inputs are somatic sensations and information from the emotion-feelings subsystem. The input values are processed by the respective MoNADs, and the resulting representations are transmitted to higher-level MoNADs and finally integrated at the association MoNAD, which calculates a representation that integrates rational and emotional thoughts. The behavior information generated in the association MoNAD is then passed back down to the respective lower-level MoNADs, whose behavior is determined by this information. Finally, a command to implement the selected behavior is sent to the drive motors in the robot body. These steps make up one complete cycle of the cognition and behavior process of the conscious system.
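One pass of this bottom-up and top-down cycle can be sketched as follows. The association rule (retreat on pain, approach on solitude, otherwise follow the imitation proposal) and the wheel-speed table are hypothetical choices made for illustration, not the authors’ trained association MoNAD. Since the e-puck is a differential-drive robot, each command is written as a (left, right) wheel-speed pair in arbitrary units.

```python
from typing import Dict, Tuple

# Hypothetical (left, right) wheel-speed commands, arbitrary units.
MOTOR_COMMANDS: Dict[str, Tuple[int, int]] = {
    "advance": (1, 1),
    "back": (-1, -1),
    "stop": (0, 0),
}


def imitation_monad(observed_action: str) -> str:
    """Reason MoNAD (e), bottom-up: propose doing what the image does."""
    return observed_action


def association_monad(proposal: str, feelings: Dict[str, float]) -> str:
    """Association MoNAD (g): integrate the rational proposal with feelings."""
    if feelings["pain"] > 0.5:      # collided with the mirror: retreat
        return "back"
    if feelings["solitude"] > 0.5:  # lost the mirror image: approach again
        return "advance"
    return proposal


def conscious_cycle(observed_action: str, feelings: Dict[str, float]) -> Tuple[int, int]:
    proposal = imitation_monad(observed_action)       # bottom-up
    decision = association_monad(proposal, feelings)  # integration
    # Top-down: the decision is passed back to the lower-level MoNAD,
    # which issues the corresponding drive-motor command.
    return MOTOR_COMMANDS[decision]


if __name__ == "__main__":
    print(conscious_cycle("advance", {"pain": 0.0, "solitude": 0.0}))  # (1, 1)
    print(conscious_cycle("advance", {"pain": 1.0, "solitude": 0.0}))  # (-1, -1)
```

Hard-coded priorities keep the example short; in the conscious system the same integration is performed by a trained MoNAD rather than by if-statements.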

4 MIRROR IMAGE COGNITION EXPERIMENTS

The authors conducted the mirror image cognition experiments using e-puck small mobile robots, in which we installed the conscious system introduced in the preceding section. Each robot cognizes its surrounding environment using its built-in infrared sensors, and its actions are decided by the conscious system. The robot advances, backs up, and stops using its built-in motors. A touch sensor is mounted on the front of the robot to detect collisions with the mirror.

Experiment 1: Conscious robot A is positioned in front of a mirror, where it conducts imitation behavior while watching its mirror image A’ (Figure 4).

Figure 4: Experiment 1.

Experiment 2: Conscious robot A and robot B are connected by control cables. Robot B is physically almost identical to robot A and reproduces the motions of robot A in response to commands from robot A. The two robots are positioned facing each other, and robot A watches robot B and imitates its behavior (Figure 5).

Figure 5: Experiments 2 and 3.

Experiment 3: A time delay was intentionally introduced into the control signals transmitted via the cables. All other conditions were identical to those of Experiment 2.

Experiment 4: Conscious robots A and C were placed facing each other, and each robot imitated the behavior of the other (Figure 6). Conscious robot A measured the coincidence rate of the mutual imitation behavior between the two robots.

Figure 6: Experiment 4.
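The paper does not spell out how the coincidence rate is computed. A natural reading, assumed in the sketch below, is the fraction of time steps at which the robot’s own action equals the action it observes in the other; a sliding-window version gives a curve over time like the one plotted in Figure 7. The function names and the window length are hypothetical.

```python
from typing import List, Sequence


def coincidence_rate(own: Sequence[str], other: Sequence[str]) -> float:
    """Fraction of time steps at which the two action sequences agree."""
    if len(own) != len(other) or not own:
        raise ValueError("sequences must be non-empty and of equal length")
    matches = sum(1 for a, b in zip(own, other) if a == b)
    return matches / len(own)


def windowed_rates(own: Sequence[str], other: Sequence[str], window: int) -> List[float]:
    """Coincidence rate over each sliding window of `window` steps."""
    return [coincidence_rate(own[t:t + window], other[t:t + window])
            for t in range(len(own) - window + 1)]


if __name__ == "__main__":
    own = ["advance", "advance", "stop", "back"]
    seen = ["advance", "stop", "stop", "back"]
    print(coincidence_rate(own, seen))   # 0.75
    print(windowed_rates(own, seen, 2))  # [0.5, 0.5, 1.0]
```

A windowed rate makes transient effects visible, such as the false detections in the initial stage of Experiment 1, that a single overall rate would average away.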


5 CONCLUSIONS

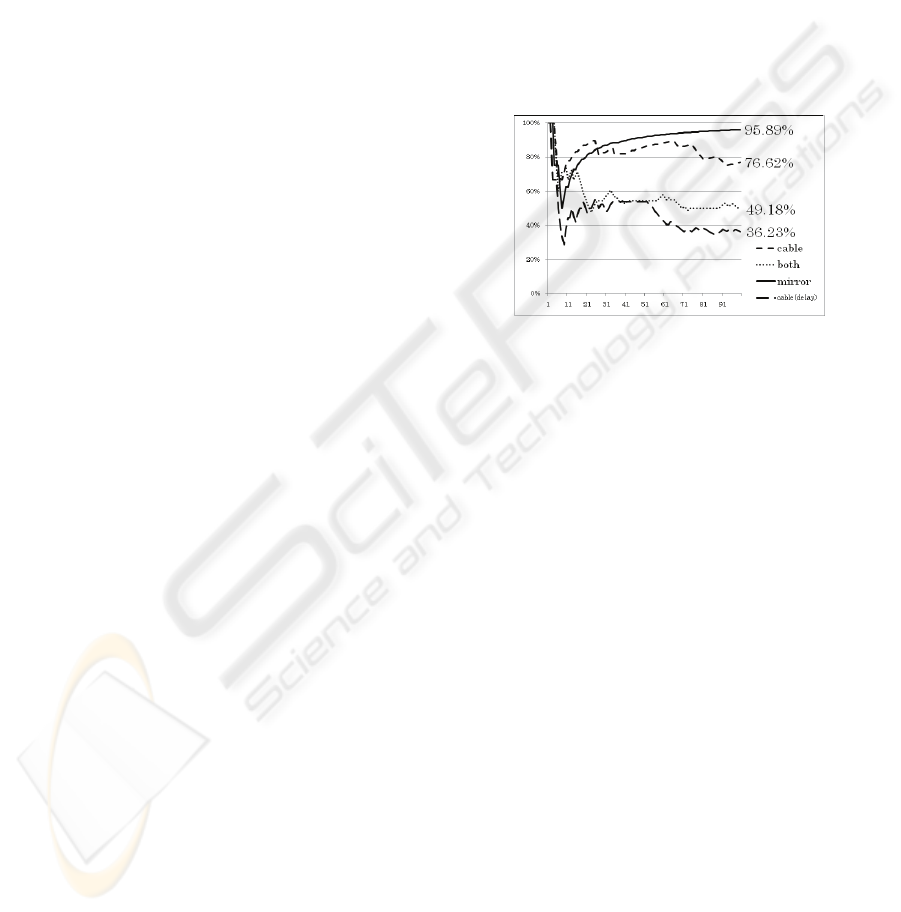

In Experiments 1 through 4 described above, the conscious robot measured the behavior coincidence rate between the self and the other robot (Figure 7). The coincidence rate was about 95% in Experiment 1, about 76% in Experiment 2, about 36% in Experiment 3, and about 49% in Experiment 4. In Experiment 1, successful mutual imitation continued steadily, without the robot losing sight of its own image in the mirror, except in the initial stage, where false detection occurred. In Experiment 2, the coincidence rate was temporarily higher than that of Experiment 1 but gradually dropped as the motions of the two robots drifted out of step and false detections resulted. In Experiment 3, where a time delay was intentionally introduced into the control signals (to delay the motion of the other robot), the coincidence rate dropped continually. This experiment was conducted to determine to what extent the robot could cognize a part of its body as a part of itself. In the graph, we can see the point at which the coincidence rate with the time delay fell below the coincidence rate of Experiment 2. This is the point at which the robot had to abandon cognizing the image as a part of the self and began to regard the image as the other. We have not yet drawn any conclusions from this evaluation and acknowledge that further study is necessary.
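The effect examined in Experiment 3 can be illustrated with a toy simulation; it does not reproduce the measured data. Assume the robot holds each of its three actions for 10 steps in turn over 300 steps, and that the other robot repeats the same sequence `delay` steps late. Each of the 29 action changes then causes `delay` mismatching steps, so the coincidence rate is 1 - 29 * delay / 300. Using the roughly 76% measured in Experiment 2 as a hypothetical threshold for still treating the other as part of the self, the judgment in this toy setting flips from self to other between a delay of 2 and 3 steps. The hold length, step count, threshold rule, and function names are all assumptions.

```python
from typing import List, Sequence

ACTIONS = ("advance", "back", "stop")


def own_actions(n_steps: int, hold: int = 10) -> List[str]:
    """Cycle through the actions, holding each one for `hold` steps."""
    return [ACTIONS[(t // hold) % len(ACTIONS)] for t in range(n_steps)]


def delayed(actions: Sequence[str], delay: int) -> List[str]:
    """The other robot repeats the same actions `delay` steps late."""
    return [actions[max(0, t - delay)] for t in range(len(actions))]


def coincidence_rate(own: Sequence[str], other: Sequence[str]) -> float:
    """Fraction of time steps at which the two action sequences agree."""
    return sum(a == b for a, b in zip(own, other)) / len(own)


def judged_as_self(rate: float, threshold: float) -> bool:
    """Hypothetical rule: the other counts as part of the self at or above the threshold."""
    return rate >= threshold


if __name__ == "__main__":
    own = own_actions(300)
    threshold = 0.76  # roughly the Experiment 2 rate, used here only as an example
    for delay in range(5):
        rate = coincidence_rate(own, delayed(own, delay))
        label = "self" if judged_as_self(rate, threshold) else "other"
        print(delay, round(rate, 3), label)
```

The rate falls linearly with the delay only because the simulated actions change at regular intervals; with real robots the relationship depends on how often the behavior changes.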

The results of these experiments show that robot A, with its built-in conscious system, could determine that its mirror image A’ (Experiment 1) was closer to itself than robot B, a part of its body (Experiment 2), and that, when compared with robot C, which has functions nearly identical to those of robot A (Experiment 4), robot A was able to determine that mirror image A’ was none other than itself. On the basis of these successful mirror image cognition experiments, the authors believe that it is possible to achieve self-consciousness with their conscious system. Following the Khepera II experiments, we successfully conducted more advanced experiments using the e-puck robot. These results suggest that our conscious system has the potential to generate conscious functions not only in one type of robot but in other types of robots as well.

6 ADDITIONAL DISCUSSION

The conscious robot developed by the authors cognizes its mirror image with a high behavior coincidence rate of about 95%. Because feelings were added to the conscious system, the robot avoided disturbances consciously; however, it did not change its behavior until it actually encountered a disturbance (Igarashi, 2007).

In the future, if the conscious system can learn by itself the various disturbances the robot may encounter, the robot may be able to change its behavior by anticipating them. The authors believe that robots will eventually be able to avoid unknown disturbances. Expectation and prospection are functions of human consciousness and important themes in the study of human consciousness. An expectation function is already implemented in the MoNAD proposed by the authors, but further study is needed to achieve long-term expectation in robots using this MoNAD.

Figure 7: Result of Experiments.

REFERENCES

Donald, M., 1991. Origins of the Modern Mind. Harvard University Press, Cambridge.

Gallese, V., Fadiga, L., Fogassi, L., Rizzolatti, G., 1996. Action recognition in the premotor cortex. Brain 119, pp. 593-609.

Igarashi, R., Suzuki, T., Shirakura, Y., Takeno, J., 2007. Realization of an emotional robot and imitation behavior. The 13th IASTED International Conference on Robotics and Applications, RA2007, pp. 135-140.

Shirakura, Y., Suzuki, T., Takeno, J., 2006. A Conscious Robot with Emotion. The 3rd International Conference on Robots and Agents, ICARA2006, pp. 299-304, Dec.

Takeno, J., Inaba, K., 2003. New Paradigm ‘Consistency of cognition and behavior’. CCCT2003, Proceedings Vol. 1, ISBN 980-6560-05-1, pp. 389-394.

Takeno, J., Inaba, K., Suzuki, T., 2005. Experiments and examination of mirror image cognition using a small robot. The 6th IEEE International Symposium on Computational Intelligence in Robotics and Automation, CIRA 2005, pp. 493-498, IEEE Catalog: 05EX1153C, ISBN 0-7803-9356-2, June 27-30, Espoo, Finland.
