MULTIAGENT COORDINATION IN AD-HOC NETWORKS BASED ON COALITION FORMATION

Samir Aknine¹, Usama Mir² and Luciana Bezerra Arantes³

¹ LIESP, Université Claude Bernard Lyon1, 43 Boulevard du 11 Novembre 1918, 69622 Lyon, France

² ICD/ERA, FRE CNRS 2848, Université de Technologie de Troyes, 12 rue Marie Curie, 10010 Troyes Cedex, France

³ LIP6/INRIA, CNRS, Université Pierre et Marie Curie, 104 avenue du Président Kennedy, 75016 Paris Cedex, France

Keywords: Multiagent Systems, Coalition Formation, Mobile Ad-hoc Networks.

Abstract: This research investigates the problems of agent coordination when agents are deployed in highly dynamic environments such as MANETs (Mobile Ad-hoc NETworks). Several difficulties arise in these infrastructures, especially when devices with limited resources are used. All the constraints of agent development must therefore be reexamined to deal with such situations, especially because of the opportunistic mobility of nodes. In this paper, we propose a new multiagent coordination mechanism for such environments, based on agent coalition formation. To validate it, performance evaluation tests have been conducted on an application devoted to assisting hospital patients, and their results are presented in the paper.

1 INTRODUCTION

This paper addresses the problem of dynamic coalition formation in multiagent systems deployed on ad-hoc networks. Several intrinsic difficulties arise in using these original infrastructures, which require an in-depth reexamination of agent coordination issues. Ad-hoc networks (Akyildiz, 2009), or mobile ad-hoc networks (MANETs), comprise a set of mobile, autonomous nodes interconnected by wireless links. The adaptive behavior of MANETs allows a network to reorganize itself quickly, even under the most unfavorable conditions. The topology of a MANET is dynamic, so to coordinate, nodes need sophisticated protocols that can cope with topology changes.

On the other hand, the main purpose of using multiagent systems (MAS) is to collectively reach goals that are difficult for an individual agent to achieve, or, in other words, to achieve coordination amongst the agents (Hsieh, 2009)(Jennings, 1994). In systems composed of multiple autonomous agents, coordination is a key form of interaction that enables groups of agents to arrive at a mutual agreement regarding some beliefs, goals or plans. However, due to resource constraints, agents are generally selfish and try to maximize their own benefits. In this paper, we study agent coordination based on coalitions. A key question is how these coalitions are formed in such specific infrastructures. Furthermore, one of the central problems is to study the agents' payoffs in order to determine whether the proposed solution is efficient.

For coordination between agents in traditional wired networks such as Ethernet or ADSL, several mechanisms already exist, such as the contract net and its extensions (Hsieh, 2009) and coalition formation (Tsvetova, 2001). However, such protocols are not suitable for MANETs, since they do not handle the dynamics of these networks, where nodes can move, join or leave the network. Some related research on this problem has been done in (Wang, 2005)(Christine, 2004), but these works do not really address node mobility, i.e. nodes leaving and joining the environment. In this paper, we propose a new mechanism that handles both node mobility and agent coalition formation.

The novelty of our work is the introduction of a dynamic coalition formation mechanism for solving the node mobility problem in MANETs. We show that this mechanism is time-efficient and that it provides better payoffs for the nodes in a more general way. The rest of the paper is structured as follows. Section 2 reviews existing solutions. Section 3 describes our context with the help of an example. Section 4 focuses on the proposed mechanism, and Sections 5 and 6 present the implementation results and the conclusion, respectively.

Aknine S., Mir U. and Bezerra Arantes L. (2010).

MULTIAGENT COORDINATION IN AD-HOC NETWORKS BASED ON COALITION FORMATION.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Artificial Intelligence, pages 241-246

Copyright © SciTePress

2 RELATED WORK

One approach to object-oriented coordination in ad-hoc networks is presented in (Cutsem, 2007). This work considers a loosely coupled object-oriented coordination abstraction named ambient reference (AR). An AR initiates a service discovery request for a remote object, such as one exported as a music player, and whenever a node leaves the environment, the AR is rebound to another principal object in the network. A similar solution is proposed in (Christine, 2004), where the concept of Egospace (a kind of middleware addressing the specific needs of agents) is explored. All the available data in the network is stored in a common data structure, and whenever agents move within each other's communication range, their local data structures are merged to form a global view. Other related solutions based on a common data structure for handling node inaccessibility in an ad-hoc network are considered in (Cao, 2006) and (Sislak, 2005).

The above-mentioned solutions achieve some of their results by handling certain aspects of mobility, but there remains the problem of the agents' shallow knowledge, which does not represent their preferences, intentions and allocation of resources. To address this issue, Advertising on Mobile phones (ADOMO), a partial agent coordination approach, is proposed in (Carabelea, 2005). ADOMO relies on sending and receiving agent messages to address mobility issues. Another ad-hoc coordination approach is proposed in (Wang, 2005), where agent-based peer-to-peer (P2P) fostering is used to handle problems such as nodes dropping out and mobility.

Beyond ad-hoc networks, the work of Soh et al. on MAS learning via coalition formation is also worth mentioning. In (Soh, 2006), they proposed a computer-supported cooperative learning system for education and reported the results of its deployment. The system consists of a set of teacher, group and student agents. Specifically, their approach uses a Vickrey auction-based, learning-enabled algorithm called VALCAM to form student groups in a structured cooperative learning setting. The approach has the traditional limitation of Vickrey auctions, which do not allow price discovery, that is, discovery of the market price when the agents are unsure of their own valuations, without sequential auctions.
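To make the price-discovery limitation concrete, the sketch below shows a generic sealed-bid second-price (Vickrey) auction. It is not VALCAM, whose learning component is not described here; the function name `vickrey_auction` and the bidder names are hypothetical. Each bidder submits one sealed bid, the highest bidder wins and pays the second-highest bid, and no bid is revealed during the auction, so a bidder unsure of its own valuation has nothing to learn from within a single round.

```python
from typing import Dict, Tuple


def vickrey_auction(bids: Dict[str, float]) -> Tuple[str, float]:
    """Generic sealed-bid second-price auction (hypothetical helper).

    The highest bidder wins and pays the second-highest bid. There is a
    single round and bids stay sealed, so bidders cannot refine uncertain
    valuations by observing the others (no price discovery).
    """
    if len(bids) < 2:
        raise ValueError("a second-price auction needs at least two bids")
    # Rank bidders by bid, highest first; ties are broken by name for determinism.
    ranked = sorted(bids.items(), key=lambda kv: (-kv[1], kv[0]))
    winner = ranked[0][0]
    price = ranked[1][1]  # the winner pays the runner-up's bid
    return winner, price


if __name__ == "__main__":
    print(vickrey_auction({"group_a": 7.0, "group_b": 5.0, "group_c": 9.0}))
    # ('group_c', 7.0)
```

Charging the runner-up's bid is what makes truthful bidding a dominant strategy, but it presupposes that each bidder already knows its valuation; a bidder who does not would need repeated (sequential) auctions to discover a price.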

Nevertheless, none of the aforementioned coordination solutions behaves well in highly dynamic environments where agents need both coordination and device-failure-handling capabilities; these approaches fall short of providing a generalized solution.

3 PROBLEM DESCRIPTION

This section explains the problem arising from node mobility and presents an example to illustrate our approach. We consider the example of a hospital where software agents are deployed on several devices (Figure 1). In this figure, the chargers ($Cr_1, Cr_2, \ldots, Cr_i$), patients' wheelchairs ($W_1, W_2, \ldots, W_j$), laptops ($L_1, L_2, \ldots, L_k$), cardiac monitors ($M_1, M_2, \ldots, M_f$), etc., are mobile in the sense that the staff or the patients can move them from one place to another according to their needs for use or charging.

For the purpose of coordination and of exchanging energy (charge) between the devices, an agent is deployed on each of them. Agents need to coordinate if they require more energy for their devices or if they need to remain connected with other agents in the MANET. Each agent $a_i$ has a payoff function $F_i$, determined by its charge consumption and by the rewards it gets after performing its tasks, which it seeks to maximize. Deployed agents start coordinating if they consider that there is not enough energy left to move further and achieve their tasks, or if they require multi-hop communications with other agents in their environment.
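The two triggers for starting coordination can be written as a single predicate. This is a minimal sketch assuming that an agent can compare its remaining charge with the charge its pending tasks require, and that it knows whether some partner is reachable only over several hops; the `DeviceState` fields and the function name are hypothetical, and the paper does not specify how energy requirements are estimated.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    """Hypothetical snapshot of the device an agent is deployed on."""
    remaining_energy: float  # charge left on the device
    energy_needed: float     # estimated charge needed to move further and finish its tasks
    needs_multihop: bool     # True if some partner is reachable only over several hops


def should_start_coordination(state: DeviceState) -> bool:
    """Start coordinating when energy is insufficient or multi-hop links are needed."""
    not_enough_energy = state.remaining_energy < state.energy_needed
    return not_enough_energy or state.needs_multihop


# A wheelchair with 20 units of charge left but 35 needed must coordinate.
print(should_start_coordination(DeviceState(20.0, 35.0, False)))  # True
```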

Figure 1: Agents’ connections in ad-hoc infrastructure.

To give an example, let us consider a wheelchair, a cardiac monitor and a charger that have agreed upon communication and charge sharing using some protocols, when suddenly a staff member takes the charger away to charge other wheelchairs at a different place. A node of this MANET can tell the other nodes that it is leaving the environment, either through routing protocols such as AODV and DSR or through agent communication approaches. However, the problems of charge loss and communication breakage still remain, since current approaches and protocols do not guarantee their resolution under these conditions.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

4 METHOD OF RESOLUTION

As mentioned in previous sections, the main concern of our work is to handle the mobility of nodes in a MANET, so that the agents deployed on them can still communicate and coordinate effectively and their energy sharing continues without interruption. In this section, we present our solution to this problem, mainly based on coalition formation.

4.1 Notations and Definitions

Before going further in the presentation of our mechanism, let us introduce some definitions. Let $A$ be a set of agents. The payoff function $F_i$ of each agent $a_i$ measures the expected achievable payoff of $a_i$ for each proposal $p_j$. Every agent $a_i$ knows its reference state $r_i$, for which the expected payoff is minimal, and tries to maximize $F_i$. We assume that each $a_i \in A$ is selfish and has a set of goals $G_i = \{g_1, g_2, \ldots, g_m\}$ which it aims to achieve. There is one agent per node, and agents communicate through message passing. Every agent $a_i \in A$ holds in its view, $v(a_i)$, a list of the agents it can contact, as well as the communication cost for contacting them. This cost varies with the type of communication used (single-hop or multi-hop). $H$ is the function that measures the cost of these communication hops. However, as nodes can leave or join the system, the view of an agent changes dynamically. By periodically checking the aliveness of its neighbors, an agent can update its view and exclude those agents that no longer belong to the system. In this model:

A coalition is a tuple $C = \langle A_c, G_c \rangle$, where $A_c = \{a_1, a_2, \ldots, a_k\}$ is a set of agents of $A$ that agreed to perform a set of goals $G_c$.

Every agent may simultaneously belong to several coalitions in order to reach its own goals. We then consider that:

A coalition structure, $CS$, is a set of coalitions $\{c_1, c_2, \ldots, c_k\}$ such that $A_c(c_i) \subseteq A$ and $G_c(c_i) \subseteq G$ for every $c_i \in CS$.
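The definitions of Section 4.1 can be mirrored by a few plain data structures. The sketch below is illustrative only and rests on stated assumptions: $H$ is taken to be proportional to the number of hops (the text only says that $H$ measures the cost of communication hops), aliveness is tracked through a last-heard timestamp and a fixed timeout, and $G$ is passed in as the set of goals against which coalitions are checked. All class, field and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Iterable


def hop_cost(hops: int, cost_per_hop: float = 1.0) -> float:
    """Assumed form of H: the cost grows linearly with the number of hops."""
    return hops * cost_per_hop


@dataclass
class View:
    """v(a_i): agents a_i can contact, with hop counts and last-heard times."""
    hops: Dict[str, int] = field(default_factory=dict)
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heard_from(self, agent: str, hops: int, now: float) -> None:
        """Record a message or heartbeat received from `agent`."""
        self.hops[agent] = hops
        self.last_seen[agent] = now

    def cost(self, agent: str) -> float:
        """Communication cost of contacting `agent`, using H."""
        return hop_cost(self.hops[agent])

    def prune(self, now: float, timeout: float) -> None:
        """Exclude neighbours whose aliveness was not confirmed within `timeout`."""
        dead = [a for a, t in self.last_seen.items() if now - t > timeout]
        for a in dead:
            del self.hops[a]
            del self.last_seen[a]


@dataclass(frozen=True)
class Coalition:
    """C = <A_c, G_c>: a set of agents that agreed to perform a set of goals."""
    agents: FrozenSet[str]
    goals: FrozenSet[str]


def is_coalition_structure(cs: Iterable[Coalition], A: FrozenSet[str],
                           G: FrozenSet[str]) -> bool:
    """Check A_c(c_i) ⊆ A and G_c(c_i) ⊆ G for every c_i in CS.
    Coalitions may overlap: an agent can belong to several of them."""
    return all(c.agents <= A and c.goals <= G for c in cs)
```

Overlapping coalitions are deliberately allowed, since the text states that an agent may belong to several coalitions at once; a partition check would be the wrong test here.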

As the system is deployed on an ad-hoc infrastructure and the agents are selfish, a coalition formation process does not necessarily seek to satisfy all the goals of every agent in $A$. Instead, each agent $a_i$ approves the coalition structures that satisfy its own payoff function $F_i$. Hence:

An approved coalition structure CS is considered by each agent $a_j$ of CS to be either a complete solution, if all of $a_j$'s goals in $G_j$ are satisfied by CS, or a partial solution, if some of its goals are not considered by CS.

An agent $a_i$ that has approved some partial solutions $S_k$ is totally satisfied if $\bigcup_k S_k$ deals with all the constraints on its goals in $G_i$.
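The distinction between complete and partial solutions, and the notion of total satisfaction, can be sketched as set-coverage checks. This sketch makes two simplifying assumptions: a goal counts as "satisfied by CS" when it belongs to the goal set of some coalition of CS that the agent is a member of, and "dealing with all constraints on its goals" is reduced to covering every goal in $G_i$. The tuple encoding of coalitions and all names are hypothetical.

```python
from typing import FrozenSet, Iterable, Set, Tuple

# A coalition <A_c, G_c> encoded as a pair of frozensets (hypothetical encoding).
Coalition = Tuple[FrozenSet[str], FrozenSet[str]]


def goals_covered(agent: str, cs: Iterable[Coalition]) -> Set[str]:
    """Goals handled by the coalitions of CS to which `agent` belongs."""
    covered: Set[str] = set()
    for members, goals in cs:
        if agent in members:
            covered |= goals
    return covered


def classify(agent: str, own_goals: FrozenSet[str], cs: Iterable[Coalition]) -> str:
    """For an already approved CS: 'complete' if it satisfies all of the
    agent's goals, otherwise 'partial'."""
    return "complete" if own_goals <= goals_covered(agent, cs) else "partial"


def totally_satisfied(agent: str, own_goals: FrozenSet[str],
                      partial_solutions: Iterable[Iterable[Coalition]]) -> bool:
    """True if the union of the approved partial solutions covers all of G_i."""
    union: Set[str] = set()
    for cs in partial_solutions:
        union |= goals_covered(agent, cs)
    return own_goals <= union
```

For example, a wheelchair agent with goals {charge, relay} sees a structure covering only charge as partial, yet is totally satisfied once it has also approved a second partial solution covering relay.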

4.2 Coalition Formation Mechanism

Before defining our protocol, let us introduce some other concepts:

Coalition agreement. An agent participating in a coalition formation process approves a coalition structure or a coalition (i.e. a singleton coalition structure) because this structure represents either a partial or a complete solution for it.

An agreement on a coalition structure, CS, is reached if all the agents of CS have approved this structure, i.e. for every $a_i \in CS$, $F_i(a_i)$ is higher under CS than at its reference state $r_i$.
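The agreement condition is a conjunction over the members of CS. A minimal sketch, assuming each member's payoff under CS and its payoff at the reference state $r_i$ are already known numbers (how $F_i$ is computed is not specified); the function names are hypothetical.

```python
from typing import Dict


def approves(payoff_under_cs: float, reference_payoff: float) -> bool:
    """a_i approves CS when F_i under CS is higher than at its reference state r_i."""
    return payoff_under_cs > reference_payoff


def agreement_reached(payoffs_under_cs: Dict[str, float],
                      reference_payoffs: Dict[str, float]) -> bool:
    """Agreement on CS: every member a_i of CS approves it.
    `payoffs_under_cs` maps each member to F_i(a_i) under CS."""
    if not payoffs_under_cs:
        return False  # a structure with no members cannot be agreed on
    return all(approves(p, reference_payoffs[a]) for a, p in payoffs_under_cs.items())
```

The comparison is strict, following the text's "higher than": a member whose payoff under CS merely equals its reference payoff does not approve.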

Coalition concessions. Making concessions is a key means of reaching agreement in coalition formation. A trivial concession is one where an agent $a_i$ approves a coalition structure $CS^*$ for which $F_i(a_i)$ is lower than for another, previously approved structure CS. Moreover, an agent can make Pareto concessions, where the payoff of at least one participating agent can be improved by a newly approved coalition structure without deteriorating the payoffs of the others. Other kinds of concessions can also be considered, such as those based on egalitarian measures of agents' utilities. It is worth noting that economic theory describes several sorts of concessions that an agent can make within a negotiation. In the proposed mechanism, agents may use different forms of concessions.
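The trivial and Pareto concessions described above can be expressed as simple tests over payoff values. This sketch assumes payoffs are plain numbers already produced by each agent's $F_i$; the function names are hypothetical, and egalitarian variants are not covered.

```python
from typing import Dict, Iterable


def is_trivial_concession(new_payoff: float, approved_payoffs: Iterable[float]) -> bool:
    """Approving CS* is a trivial concession for a_i if F_i under CS* is lower
    than under some structure a_i approved earlier."""
    return any(new_payoff < old for old in approved_payoffs)


def is_pareto_improvement(old: Dict[str, float], new: Dict[str, float]) -> bool:
    """The new CS improves at least one participant's payoff without lowering
    any other. Both dicts must map the same participants to their payoffs."""
    if old.keys() != new.keys():
        raise ValueError("compare payoffs over the same set of participants")
    no_one_worse = all(new[a] >= old[a] for a in old)
    someone_better = any(new[a] > old[a] for a in old)
    return no_one_worse and someone_better
```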

In our pessimistic ad-hoc coordination protocol, which is proposed for solving the mobility problem by means of coordination, each node maintains a cache for saving temporary data about its mobility. The agents deployed on the nodes update this cache with the latest probability information about their movements or stability ($Prob_{mins}$). In the coalition formation problem, each agent makes its proposals of coalition structures and must reach agreements with the others on those that will be adopted, such that the following conditions are satisfied: (1) validity: if an agent adopts a CS, then this CS has been agreed on by its forming agents; (2) agreement: no agent reaches an incoherent state after deciding; and (3) termination: every agent eventually decides.
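The paper does not specify how $Prob_{mins}$ is estimated or what threshold is used. The sketch below is one hypothetical way to maintain such a cache: it assumes $Prob_{mins}$ is read as a stability probability, estimated as the fraction of recent observation periods in which the node did not move, with low values triggering a sub-coalition as in step (3) of the protocol. The window size, default value, threshold and all names are assumptions.

```python
from collections import deque
from typing import Deque


class MobilityCache:
    """Hypothetical per-node cache of recent stability observations."""

    def __init__(self, window: int = 10, default_prob: float = 1.0) -> None:
        # Bounded history: the oldest observation drops out when the window is full.
        self._obs: Deque[float] = deque(maxlen=window)
        self._default = default_prob

    def record(self, stayed: bool) -> None:
        """Store one observation: did the node stay in place during the last period?"""
        self._obs.append(1.0 if stayed else 0.0)

    def prob_mins(self) -> float:
        """Assumed estimate of Prob_mins: fraction of recent periods without movement."""
        if not self._obs:
            return self._default
        return sum(self._obs) / len(self._obs)

    def needs_sub_coalition(self, threshold: float) -> bool:
        """Trigger a sub-coalition when stability falls below the acceptable threshold."""
        return self.prob_mins() < threshold
```

A sliding window keeps the estimate responsive to recent movement, which matters when staff may pick up a device at any time; an exponentially weighted average would be an equally reasonable choice.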


Let us now present the steps of the protocol:

(1) In the first round, each agent $a_i$ that has some goals $G_i$ to achieve initiates a coalition formation process, $p_i$, by contacting some or all of the agents in its view, i.e. its neighbors within its one-hop communication range. $a_i$ builds proposals to submit to these agents using the information provided on their goals and resources. Just before starting the process, the initiator agent $a_i$ sets a timer with a timeout value that is an upper-bound estimate of the time the coalition formation will take.

(2) Each agent $a_j \in v(a_i)$ interested in the coalition formation process $p_i$ either makes proposals based only on its own goals, resources and $Prob_{mins}$, or initiates one or more sub-coalition formation processes, $p_i^k$, with its other one-hop neighbors in order to be able to make further proposals to $a_i$ in the current coalition process $p_i$.

(3) In each subsequent round, each agent can keep its previous proposals, make some concessions, or make a new proposal. If the $Prob_{mins}$ of an agent $a_j$ is lower than an acceptable threshold, $a_j$ initiates a sub-coalition formation process, $p_j^-$, with its one-hop neighbors. The process $p_j^-$ ensures that the commitments of $a_j$ towards $a_i$ will not be withdrawn, since at least one agent of $p_j^-$ will perform them if $a_i$'s node moves and $F_i(a_i)$ has decreased due to multi-hop communications with $a_j$.

(4) An agent ends its negotiation phase of a given coalition formation process in one of the following cases: (a) a complete solution that handles all its goals is found; (b) an agreement is reached on a partial solution that comprises some of its goals; (c) a conflict arises and no concession by any agent is possible; (d) the timeout delay for the coalition formation has expired.

(5) For each negotiation that ended successfully, the agents involved in the agreed coalitions apply an atomic commitment phase in order to either validate or give up the outcome of the negotiation phase. Giving up takes place either because one or more of the involved agents cannot keep their agreements or because the nodes have moved.
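The end-of-negotiation rule of step (4) and the commitment phase of step (5) can be sketched as follows. The sketch assumes the atomic commitment behaves like an all-or-nothing vote in the style of two-phase commit (the coalition is validated only if every member can keep its agreement and no member's node has moved); the paper does not name the commitment protocol, and all names here are hypothetical.

```python
from enum import Enum
from typing import Dict, Optional


class Outcome(Enum):
    COMPLETE = "a"  # complete solution handling all goals found
    PARTIAL = "b"   # agreement reached on a partial solution
    CONFLICT = "c"  # conflict, and no concession is possible
    TIMEOUT = "d"   # timeout delay expired


def negotiation_outcome(complete_found: bool, partial_agreed: bool,
                        concession_possible: bool, elapsed: float,
                        timeout: float) -> Optional[Outcome]:
    """Step (4): why the negotiation ends, or None if another round is needed.
    The cases are checked in the order (a) to (d)."""
    if complete_found:
        return Outcome.COMPLETE
    if partial_agreed:
        return Outcome.PARTIAL
    if not concession_possible:
        return Outcome.CONFLICT
    if elapsed >= timeout:
        return Outcome.TIMEOUT
    return None


def atomic_commit(can_keep: Dict[str, bool], moved: Dict[str, bool]) -> bool:
    """Step (5), modelled as an all-or-nothing vote (assumed): validate only if
    every member can keep its agreement and no member's node has moved;
    otherwise all members give up the negotiated outcome together."""
    return bool(can_keep) and all(
        ok and not moved.get(agent, False) for agent, ok in can_keep.items())


def finalize(outcome: Optional[Outcome], can_keep: Dict[str, bool],
             moved: Dict[str, bool]) -> bool:
    """Only negotiations that ended successfully, cases (a) and (b), are committed."""
    if outcome not in (Outcome.COMPLETE, Outcome.PARTIAL):
        return False
    return atomic_commit(can_keep, moved)
```

Making the commitment all-or-nothing is what supports the validity condition stated earlier: no agent adopts a CS that some of its forming agents have abandoned.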

5 PERFORMANCE EVALUATION

In this section we present our experiments and results, in which all the agents operate together in a coordinated way under the supervision of the proposed coalition formation model. For the results to be efficient, the agents must provide better payoffs to each requester that asks for its goals to be performed. The total number of goals achieved (the number of successful charge-sharing agreements) and the negotiation time (i.e. the total time for communication and goal solving, measured in minutes) are the other two important parameters. These parameters were chosen because they allow us to test our approach in terms of feasibility, efficiency, accuracy and scalability.

The whole scenario is simulated based on the hospital example. The simulation starts with a set of agents in which randomly chosen agents $a_i$ have some goals $G_i$ to achieve (here, to get charged). They initiate a coalition formation process, $p_i$, by first searching for and then contacting some or all of the agents in their view. After contacting them, $a_i$ sends these agents information about the charge it needs and sets a timeout for receiving responses. Each interested agent $a_j \in v(a_i)$ makes its proposals based on its goal-solving abilities (here, its charging capabilities). The value of $Prob_{mins}$ is taken into account when sending proposals. The necessary sub-coalitions are also formed when $Prob_{mins}$ is below the threshold. The main purpose of these experiments is to show the improvement in the agents' payoffs relative to the required payoff (the charge needed at the beginning) and in their achieved goals or tasks (the number of charge-sharing agreements successfully completed).

0

20

40

60

80

100

120

140

0

2000

4000

6000

0

2

4

6

8

10

Number of agents

Payoffs

Negotiation time

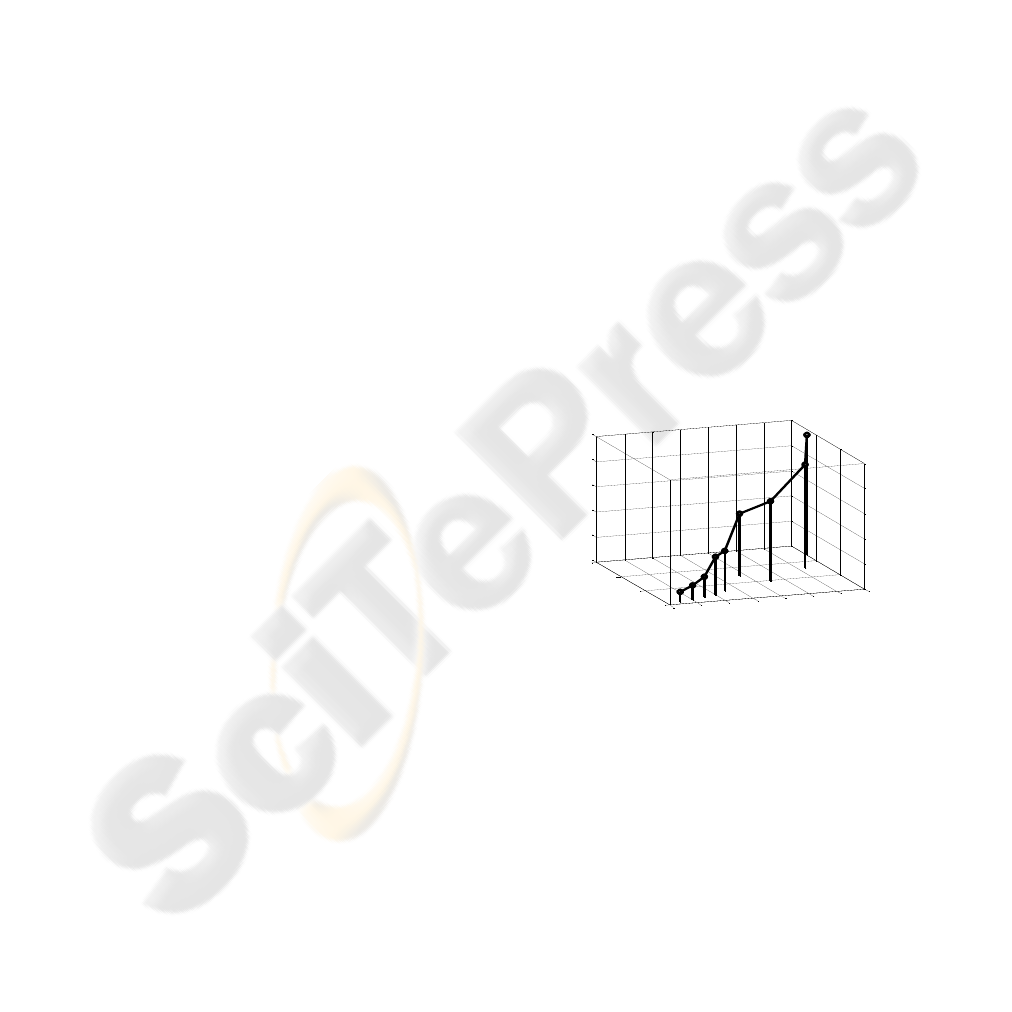

Figure 2: Comparison of achieved payoffs and negotiation

time.

Figure 2 shows several values of achieved payoffs in relation to time, obtained by running the simulation with varying numbers of agents. The maximum time taken for negotiation and goal solving does not exceed 9.59 minutes, with a highest payoff value of 4725. Figure 3 depicts the number of messages exchanged between the agents as both the number of agents and their achieved payoffs increase.

One of our objectives is to evaluate the agents' different payoff values in terms of the number of messages required to achieve them.


Figure 3: Achieved payoffs with number of messages exchanged (x-axis: number of agents; vertical axes: number of messages exchanged and payoffs achieved).

Relating this aspect, two experimental graphs

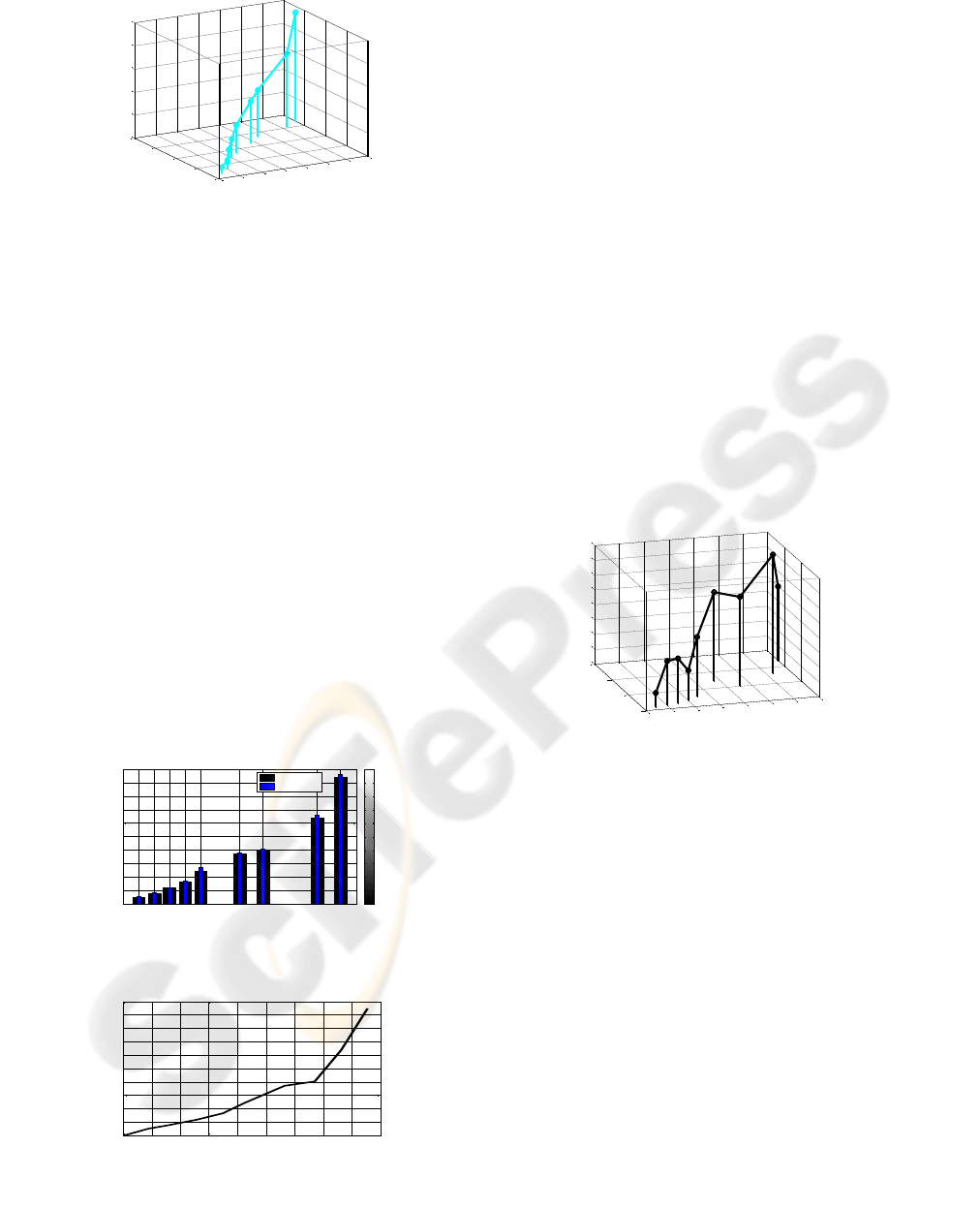

have been set up. A first experiment (Figure 4) has

been conceived to compare the initial required

values of agents’ payoffs (blue lines) and the values

of payoffs they have achieved at the end (black

lines). Here, by required payoffs, we mean the sum

of various amounts of charge needed by the

participating wheelchairs, while the achieved values

of payoffs are the several amounts of charge gained

at the end. Thus, different random sets of agents

(from 10 up to 140) are generated and represented in

a 2D form. The achieved payoffs are at maximum of

1211 (over a total of 1350) when the number of

agents is in the range from 0 to 50 and exhibits less

variability on the average. Beyond 50 agents, there

is a rapid boost in the achieved payoffs reaching to a

peak value of 4725 (over total of 4800). Thus, it is

clear that almost 90-95% of the total required

payoffs have been achieved efficiently. Figure 5

depicts the number of messages exchanged between

agents, that grows with increasing values of the

achieved payoffs.
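The achievement percentages quoted above follow directly from the reported totals. The short calculation below reproduces them; the helper name is hypothetical and the inputs are exactly the values stated in the text for Figure 4.

```python
def achievement_rate(achieved: float, required: float) -> float:
    """Percentage of the total required payoff that was actually achieved."""
    return 100.0 * achieved / required


# Values reported in the text for Figure 4.
for label, achieved, required in [("up to 50 agents", 1211, 1350),
                                  ("peak", 4725, 4800)]:
    print(f"{label}: {achievement_rate(achieved, required):.1f}%")
# up to 50 agents: 89.7%
# peak: 98.4%
```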

Figure 4: A graph comparison: Total required payoffs versus achieved payoffs (x-axis: number of agents, in sets of 10, 20, 30, 40, 50, 75, 90, 125 and 140; y-axis: payoffs; series: payoffs obtained and total required payoffs).

Figure 5: Achieved payoffs with number of messages exchanged (x-axis: number of messages; y-axis: payoffs achieved).

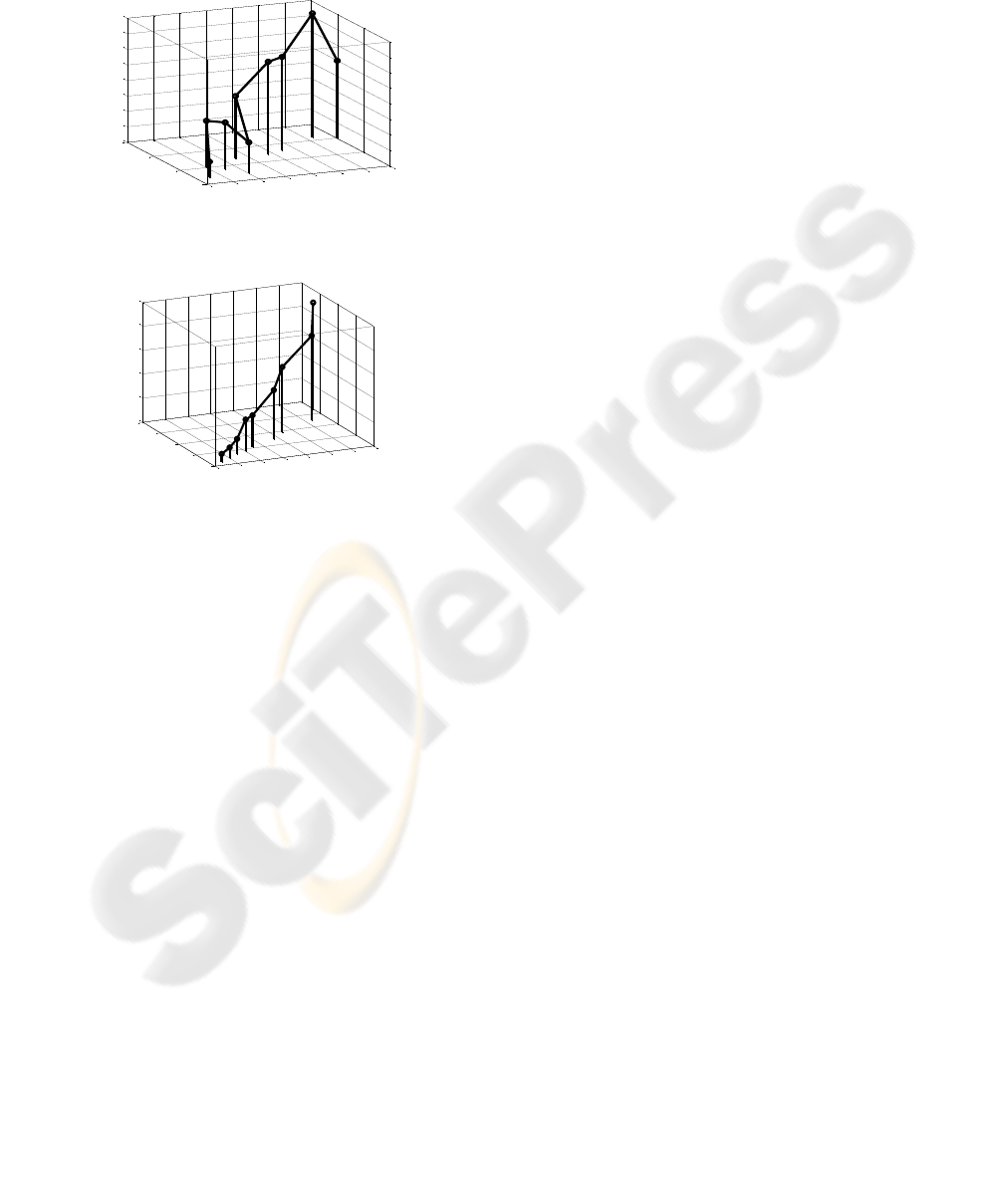

Next, we present three results that illustrate the agents' losses in terms of unachieved goals (or failed tasks), time and number of messages. Figure 6 plots the achieved payoffs together with the number of unachieved goals. By unachieved goals we mean the number of unsuccessful agreements. This difficulty arises when charger agents become mobile and later cannot find replacements. The number of unachieved goals remains in single figures, while the achieved payoffs are at their peak range. Thus, as the number of agents increases, the achieved payoffs remain high and few goals are lost. The experiment of Figure 7 relates the number of unachieved goals to the number of lost messages. In the figure, the losses in terms of tasks and messages remain almost level across the various sets of agents (from 10 to 140) and fluctuate little on average. Even with the maximum of 140 agents, the task and message losses are 5 and 11 respectively.

Figure 6: A graph with the achieved payoffs and number of unachieved goals (failed tasks) (x-axis: number of agents; vertical axes: payoffs and number of tasks failed).

Figure 8 compares the number of achieved goals with the associated time values. In the figure, the number of achieved goals increases continuously. For example, with 10 agents, 5 goals are achieved (out of 6), and this trend continues up to the maximum of 140 agents, where 67 goals are achieved (out of 72 initiated goals). Between these two points, the proportion of achieved goals rises from about 83% to 93%. Similarly, the total time taken for 10 and 140 agents is 0.68 and 8.92 minutes respectively, which also grows steadily.
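The two data points quoted above can be turned into rates. The worked arithmetic below treats the reported times as minutes (the unit used for negotiation time earlier in this section) and divides total time by the number of achieved goals; the per-goal time is a derived illustration, not a quantity reported by the experiments.

```latex
\begin{aligned}
\text{success rate:}\quad & \tfrac{5}{6} \approx 83.3\%\ (10\ \text{agents}), && \tfrac{67}{72} \approx 93.1\%\ (140\ \text{agents})\\
\text{time per achieved goal:}\quad & \tfrac{0.68}{5} \approx 0.14\ \text{min}, && \tfrac{8.92}{67} \approx 0.13\ \text{min}
\end{aligned}
```

On these two points, the success rate rises while the time per achieved goal stays roughly constant, suggesting that total time grows about in proportion to the number of achieved goals, at least between 10 and 140 agents.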

In brief, this section studied how increasing numbers of achieved goals can influence the efficiency of our agents. Since the results become more efficient as the number of agents grows, we expected that the more agents there are, the higher the average achieved payoffs. Thus, in larger scenarios (with a large number of agents) where time is not a major concern and the main focus is on achieving more goals and higher payoffs, our ad-hoc coalition formation approach appears to remain an efficient solution.

Figure 7: Number of unachieved goals (failed tasks) with the messages lost (x-axis: number of agents; vertical axes: number of tasks failed and number of messages).

Figure 8: Number of achieved goals (successful tasks) with their associated time (x-axis: number of agents; vertical axes: tasks completed and time).

6 CONCLUSIONS

This paper addresses the problem of node mobility and communication breakage costs in MANETs, using coalition formation through MAS coordination. Several difficulties arise in these infrastructures, especially when devices with limited resources are used. To cope with these problems, we have developed a new coalition formation mechanism that addresses these issues efficiently and increases the payoff of each node according to its needs. In essence, the implementation and test results show that our approach can form coalition structures in a regular and effective manner under highly mobile conditions. The simulation results are based on several parameters: payoff, coordination time, number of achieved and unachieved goals, and number of messages. Our approach converges to an efficient position as the number of agents increases, reaching even better coordination and payoffs at larger scales.

REFERENCES

Hsieh, F.-S., 2009. Developing cooperation mechanism for multi-agent systems with Petri nets. In International Journal of Engineering Applications of Artificial Intelligence. ACM Press.

Akyildiz, I. F., Lee, W.-Y., and Chowdhury, K. R., 2009. TP-CRAHN: A transport protocol for cognitive radio ad-hoc networks. In INFOCOM'09, 29th Conference on Computer Communications. IEEE Press.

Cutsem, T. V., Dedecker, J., and Meuter, W. D., 2007. Object oriented coordination in mobile ad-hoc networks. In 9th International Conference on Coordination Models and Languages. Springer Press.

Soh, L.-K., Khandaker, N., and Jiang, H., 2006. Multiagent coalition formation for computer-supported cooperative learning. In AAAI'06, 21st International Conference on Artificial Intelligence. AAAI Press.

Cao, W., Bian, C.-G., and Hartvigsen, G., 2006. Achieving efficient cooperation in a multiagent system: the twin-base modeling. In WCIA'06, 1st International Workshop on Cooperative Information Agents. Springer/ACM Press.

Carabelea, C., and Berger, M., 2005. Agent negotiation in ad-hoc networks. In MOBIQUITOUS'05, Second Annual International Conference on Mobile and Ubiquitous Systems. ACM/IEEE Press.

Sislak, D., Pechoucek, M., Rehak, M., et al., 2005. Solving inaccessibility in multi-agent systems by mobile middle-agents. In International Journal on Multiagent and Grid Systems. ACM Press.

Wang, M., Wolf, H., Purvis, M., et al., 2005. An agent-based approach to assist collaborations in a framework for mobile P2P applications. In AAMAS'05, Autonomous Agents and MultiAgent Systems. Springer Press.

Christine, J., and Catalin, R. G., 2004. Active coordination in ad-hoc networks. In 6th International Conference on Coordination Models and Languages. Springer Press.

Tsvetova, M., Sycara, K., Chen, Y., et al., 2001. Customer coalitions in electronic markets. In AMC'01, Agent-Mediated Electronic Commerce III. Springer/ACM Press.

Jennings, N. R., 1994. Cooperation in industrial multiagent systems. World Scientific Publishing, London, 2nd edition.
