COMPLEXITY OF STOCHASTIC BRANCH AND BOUND

METHODS FOR BELIEF TREE SEARCH IN BAYESIAN

REINFORCEMENT LEARNING

Christos Dimitrakakis

University of Amsterdam, The Netherlands

Keywords:

Exploration, Bayesian reinforcement learning, Belief tree search, Complexity, PAC bounds.

Abstract:

There has been a lot of recent work on Bayesian methods for reinforcement learning exhibiting near-optimal

online performance. The main obstacle facing such methods is that in most problems of interest, the optimal

solution involves planning in an infinitely large tree. However, it is possible to obtain stochastic lower and

upper bounds on the value of each tree node. This enables us to use stochastic branch and bound algorithms to

search the tree efficiently. This paper proposes some algorithms and examines their complexity in this setting.

1 INTRODUCTION

Various Bayesian methods for exploration in Markov

decision processes (MDPs) and for solving known

partially-observable Markov decision processes

(POMDPs), were proposed previously (c.f. (Poupart

et al., 2006; Duff, 2002; Ross et al., 2008)). How-

ever, such methods often suffer from computational

tractability problems. Optimal Bayesian exploration

requires the creation of an augmented MDP model in

the form of a tree (Duff, 2002), where the root node

is the current belief-state pair and children are all

possible subsequent belief-state pairs. The size of the

belief tree increases exponentially with the horizon,

while the branching factor is infinite in the case of

continuous observations or actions.

In this work, we examine the complexity of ef-

ficient algorithms for expanding the tree. In particu-

lar, we propose and analyse stochastic search methods

similar to the ones proposed in (Bubeck et al., 2008;

Norkin et al., 1998). Related methods have been

previously examined experimentally in the context

of Bayesian reinforcement learning in (Dimitrakakis,

2008; Wang et al., 2005).

The remainder of this section summarises the

Bayesian planning framework. Our main results are

presented in Sect. 2. Section 3 concludes with a dis-

cussion of related work.

1.1 Markov Decision Processes

Reinforcement learning [c.f. (Puterman, 2005)] is

discrete-time sequential decision making problem,

where we wish to act so as to maximise the expected

sum of discounted future rewards E

∑

T

k=1

γ

k

r

t+k

,

where r

t

∈ R is a stochastic reward at time t. We are

only interested in rewards from time t to T > 0, and

γ ∈ [0,1] plays the role of a discount factor. Typically,

we assume that γ and T are known (or have known

prior distribution) and that the sequence of rewards

arises from a Markov decision process µ:

Definition 1 (MDP). A Markov decision process is a

discrete-time stochastic process with: A state s

t

∈ S

at time t and a reward r

t

∈ R, generated by the pro-

cess µ, and an action a

t

∈ A, chosen by the deci-

sion maker. We denote the distribution over next

states s

t+1

, which only depends on s

t

and a

t

, by

µ(s

t+1

|s

t

,a

t

). Furthermore µ(r

t+1

|s

t

,a

t

) is a reward

distribution conditioned on states and actions. Finally,

µ(r

t+1

,s

t+1

|s

t

,a

t

) = µ(r

t+1

|s

t

,a

t

)µ(s

t+1

|s

t

,a

t

).

In the above, and throughout the text, we usu-

ally take µ(·) to mean P

µ

(·), the distribution un-

der the process µ, for compactness. Frequently

such a notation will imply a marginalisation. For

example, we shall write µ(s

t+k

|s

t

,a

t

) to mean

∑

s

t+1

,...,s

t+k−1

µ(s

t+k

,... , s

t+1

|s

t

,a

t

). The decision

maker takes actions according to a policy π, which

259

Dimitrakakis C. (2010).

COMPLEXITY OF STOCHASTIC BRANCH AND BOUND METHODS FOR BELIEF TREE SEARCH IN BAYESIAN REINFORCEMENT LEARNING.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Artificial Intelligence, pages 259-264

DOI: 10.5220/0002721402590264

Copyright

c

SciTePress

defines a distribution π(a

t

|s

t

) over A, conditioned on

the state s

t

. A policy π is stationary if π(a

t

= a|s

t

=

s) = π(a

t

0

= a|s

t

0

= s) for all t,t

0

. The expected util-

ity of a policy π selecting actions in the MDP µ, from

time t to T can be written as the value function:

V

π,µ

t,T

(s) = E

π,µ

T

∑

k=1

γ

k

r

t+k

s

t

!

, (1)

where E

π,µ

denotes the expectation under the Markov

chain arising from acting policy π on the MDP µ.

Whenever it is clear from context, superscripts and

subscripts shall be omitted for brevity. The optimal

value function will be denoted by V

∗

, max

π

V

π

. If

the MDP is known, we can evaluate the optimal value

function policy in time polynomial to the sizes of the

state and action sets (Puterman, 2005) via backwards

induction (value iteration).

1.2 Bayesian Reinforcement Learning

If the MDP is unknown, we may use a Bayesian

framework to represent our uncertainty (Duff, 2002).

This requires maintaining a belief ξ

t

, about which

MDP µ ∈ M corresponds to reality. More precisely,

we define a measurable space (M ,M), where M is a

(usually uncountable) set of MDPs, and M is a suit-

able σ-algebra. With an appropriate initial density

ξ

0

(µ), we can obtain a sequence of densities ξ

t

(µ),

representing our subjective belief at time t, by condi-

tioning ξ

t

(µ) on the latest observations:

ξ

t+1

(µ) ,

µ(r

t+1

,s

t+1

|s

t

,a

t

)ξ

t

(µ)

R

M

µ

0

(r

t+1

,s

t+1

|s

t

,a

t

)ξ

t

(µ

0

)dµ

0

. (2)

In the following, we write E

ξ

to denote expectations

with respect to any belief ξ.

1.3 Belief-augmented MDPs

In order to optimally select actions in this framework,

it is necessary to explicitly take into account future

changes in the belief when planning (Duff, 2002).

The idea is to combine the original MDP’s state s

t

and our belief state ξ

t

into a hyper-state.

Definition 2 (BAMDP). A Belief-Augmented MDP ν

(BAMPD) is an MDP with a set of hyper-states Ω =

S × B, where B is an appropriate set of probability

measures on M and S,A are the state and action sets

of all µ ∈ M . At time t, the agent observes the hyper-

state ω

t

= (s

t

,ξ

t

) ∈ Ω and takes action a

t

∈ A. We

write the transition distribution as ν(ω

t+1

|ω

t

,a

t

) and

the reward distribution as ν(r

t

|ω

t

).

The hyper-state ω

t

has the Markov property. This al-

lows us to treat the BAMDP as an infinite-state MDP

with transitions ν(ω

t+1

|ω

t

,a

t

), and rewards ν(r

t

|ω

t

).

1

When the horizon T is finite, we need only require ex-

pand the tree to depth T − t. Thus, backwards induc-

tion starting from the set of terminal hyper-states Ω

T

and proceeding backwards to T − 1,.. . ,t provides a

solution:

V

∗

n

(ω) = max

a∈A

E

ν

(r|ω) + γ

∑

ω

0

∈Ω

n+1

ν(ω

0

|ω,a)V

∗

n+1

(ω

0

),

(3)

where Ω

n

is the set of hyper-states at time n. We

can approximately solve infinite-horizon problems if

we expand the tree to some finite depth, if we have

bounds on the value of leaf nodes.

1.4 Bounds on the Value Function

We shall relate the optimal value function of the

BAMDP, V

∗

(ω), for some ω(s,ξ), to the value func-

tions V

π

µ

of MDPs µ ∈ M for some π. The opti-

mal policy for µ is denoted as π

∗

(µ). The mean

MDP resulting from belief ξ is denoted as ¯µ

ξ

and

has the properties: ¯µ

ξ

(s

t+1

|s

t

,a

t

) = E

ξ

[µ(s

t+1

|s

t

,a

t

)],

¯µ

ξ

(r

t+1

|s

t

,a

t

) = E

ξ

[µ(r

t+1

|s

t

,a

t

)].

Proposition 1 (Dimitrakakis, 2008). For any ω =

(s,ξ), the BAMDP value function V

∗

obeys:

Z

M

V

π

∗

(µ)

µ

(s)ξ(µ)dµ ≥ V

∗

(ω) ≥

Z

M

V

π

∗

(¯µ

ξ

)

µ

(s)ξ(µ)dµ

(4)

Proof. By definition, V

∗

(ω) ≥V

π

(ω) for all ω, for any

policy π. It is easy to see that the lower bound equals

V

π

∗

(¯µ

ξ

)

(ω), thus proving the right hand side. The up-

per bound follows from the fact that for any function

f , max

x

R

f (x, u) du ≤

R

max

x

f (x, u) du.

If M is not finite, then we cannot calculate the

upper bound of V (ω) in closed form. However,

we can use Monte Carlo sampling: Given a hyper-

state ω = (s,ξ), we draw m MDPs from its belief ξ:

µ

1

,... , µ

m

∼ ξ,

2

estimate the value function for each

µ

k

, ˜v

ω

U,k

,V

π

∗

(µ

k

)

µ

k

(s), and average the samples: ˆv

ω

U,m

,

1

Because of the way that the BAMDP ν is constructed

from beliefs over M , the next reward now depends on the

next state rather than the current state and action.

2

In the discrete case, we sample a multinomial distri-

bution from each of the Dirichlet densities independently

for the transitions. For the rewards we draw independent

Bernoulli distributions from the Beta of each state-action

pair.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

260

1

m

∑

m

k=1

˜v

ω

U,k

. Let v

ω

U

,

R

M

ξ

ω

(µ)V

∗

µ

(s

ω

)dµ. Then,

lim

m→∞

[ ˆv

ω

U,m

] = v

ω

U

almost surely and E[ ˆv

ω

U,m

] = v

ω

.

Lower bounds can be calculated via a similar pro-

cedure. We begin by calculating the optimal policy

π

∗

(¯µ

ξ

) for the mean MDP ¯µ

ξ

arising from ξ. We then

compute ˜v

ω

L,k

, V

π

∗

(¯µ

ξ

)

µ

k

, the value of that policy for

each sample µ

k

and estimate ˆv

ω

L,m

,

1

m

∑

m

k=1

˜v

ω

L,k

.

2 COMPLEXITY OF BELIEF

TREE SEARCH

We now present our main results. Detailed proofs are

available in an accompanying technical report (Dim-

itrakakis, 2009). We search trees which arise in the

context of planning under uncertainty in MDPs us-

ing the BAMDP framework. We can use value func-

tion bounds on the leaf nodes of a partially expanded

BAMDP tree to obtain bounds for the inner nodes

through backwards induction. The bounds can be

used both for action selection and for further tree

expansion. However, the bounds are estimated via

Monte Carlo sampling, something that necessitates

the use of stochastic branch and bound technique to

expand the tree.

We analyse a set of such algorithms. The first is

a search to a fixed depth that employs exact lower

bounds. We then show that if only stochastic bounds

are available, the complexity of fixed depth search

only increases logarithmically. We then present two

stochastic branch and bound algorithms, whose com-

plexity is dependent on the number of near-optimal

branches. The first of these uses bound samples on

leaf nodes only, while the second uses samples ob-

tained in the last half of the parents of leaf nodes, thus

using the collected samples more efficiently.

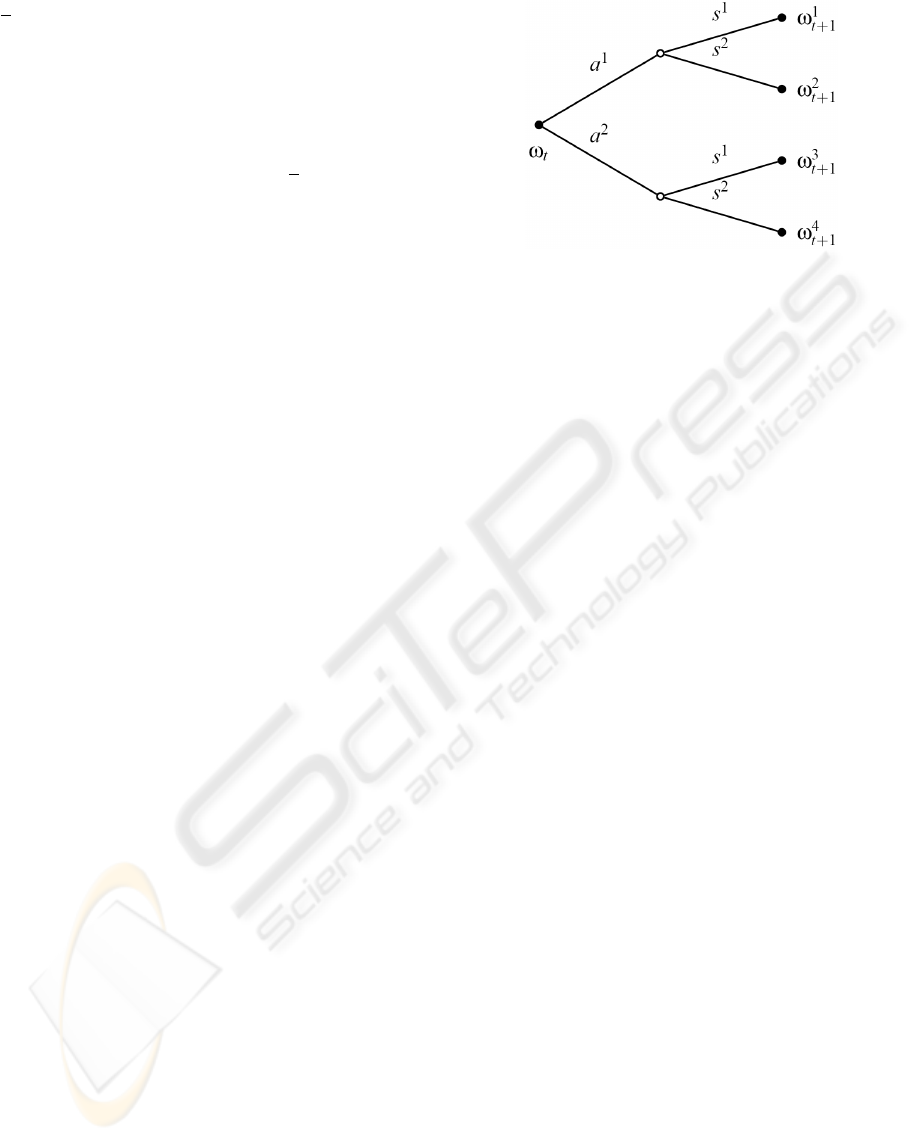

2.1 Assumptions and Notation

We present the main assumptions concerning the tree

search, pointing out the relations to Bayesian RL. The

symbols V and v have been overloaded to make this

correspondence more apparent. The tree that has a

branching factor at most φ. The branching is due to

both action choices and random outcomes (see Fig.1).

Thus, the nodes at depth k correspond to the set of

hyper-states {ω

t+k

} in the BAMDP. By abusing no-

tation, we may also refer to the components of each

node ω = (s,ξ) as s(ω),ξ(ω).

We define a branch b as a set of policies (i.e. the

set of all policies starting with a particular action).

The value of a branch b is V

b

, max

π∈b

V

π

. The

root branch is the set of all policies, with value V

∗

. A

Figure 1: A belief tree, where the rewards are ignored

for simplicity, with actions A = {a

1

,a

2

} and states S =

{s

1

,s

2

}.

hyper-state ω is b-reachable if ∃π ∈ b s.t P

π,ν

(ω|ω

t

) >

0.Any branch b can be partitioned at any b-reachable

ω into a set of branches B(b,ω). A possible parti-

tion is any b

i

=

{

π ∈ b : i = argmax

a

π(a|ω)

}

for any

b

i

∈ B(b, ω). We simplify this by considering only

deterministic policies. We denote the k-horizon value

function by V

b

(k) , max

π∈b

V

π

t,k

(ω

t

). For each tree

node ω = (s,ξ), we define upper and lower bounds

v

U

(ω) , E

ξ

[V

∗

µ

(s)], v

L

(ω) , E

ξ

[V

π

∗

(¯µ

ξ

)

(s)], from (4).

By fully expanding the tree to depth k and perform-

ing backwards induction (3), using either v

U

or v

L

as

the value of leaf nodes, we obtain respectively upper

and lower bounds V

b

U

(k),V

b

L

(k) on the value of any

branch. Finally, we use C (ω) for the set of immediate

children of a node ω and the short-hand Ω

k

for C

k

(ω),

the set of all children of ω at depth k. We assume the

following:

Assumption 1 (Uniform linear convergence). There

exists γ ∈ (0, 1) and β > 0 s.t. for any branch b, and

depth k, V

b

−V

b

L

(k) ≤ βγ

k

, V

b

U

(k) −V

b

≤ βγ

k

.

Remark 1. For BAMDPs with r

t

∈ [0,1] and γ < 1,

Ass. 1 holds, from boundedness and the geometric se-

ries, with β = 1/(1−γ), since V

b

L

(k) and V

b

U

(k) are the

k-horizon value functions with the value of leaf nodes

bounded in 1/(1 − γ).

We analyse algorithms which search the tree and

then select an (action) branch

ˆ

b

∗

. For each algorithm,

we examine the number of leaf node evaluations re-

quired to bound the regret V

∗

−V

ˆ

b

∗

.

2.2 Flat Search

With exact bounds, we can expand all branches to a

fixed depth and then select the branch

ˆ

b

∗

, with the

highest lower bound. This is Alg. 1, with complexity

given by the following lemma.

COMPLEXITY OF STOCHASTIC BRANCH AND BOUND METHODS FOR BELIEF TREE SEARCH IN BAYESIAN

REINFORCEMENT LEARNING

261

Algorithm 1. Flat oracle search.

1: Expand all branches until depth k = log

γ

ε/β or

ˆ

∆

L

> βγ

k

− ε.

2: Select the root branch

ˆ

b

∗

= argmax

b

V

b

L

(k).

Algorithm 2. Flat stochastic search.

1: procedure FSSEARCH(ω

t

,k, m)

2: Let Ω

k

=

ω

i

t+k

: i = 1,... , φ

k

be the set of

all k-step children of ω

3: for ω ∈ Ω

k

do

4: Draw m samples ˜v

ω

L, j

= V

π

µ

, µ ∼ ξ(ω)

5: ˆv

ω

L

=

1

m

∑

m

j=1

˜v

ω

L, j

,

6: end for

7: Calculate

ˆ

V

b

, return

ˆ

b

∗

= argmax

ˆ

V

b

.

8: end procedure

Lemma 1. Alg. 1 on a tree with branching factor φ,

γ ∈ (0,1), samples O(φ

1+log

γ

ε/β

) times to bound the

regret by ε.

Proof. Bound the k-horizon value function error with

Ass. 1 and note that there are φ

k+1

leaves.

In our case, we only have a stochastic lower bound on

the value of each node. Algorithm 2 expands the tree

to a fixed depth and then takes multiple samples from

each leaf node.

Lemma 2. Calling Alg. 2 with k = dlog

γ

ε/2βe, m =

2dlog

γ

(ε/2β)e · log φ, we bound the regret by ε using

O

φ

1+log

γ

ε/2β

log

γ

(ε/2β) · log φ

samples.

Proof. The regret now is due to both limited depth

and stochasticity. We bound each by ε/2, the first via

Lem. 1 and the second via Hoeffding’s inequality.

Thus, stochasticity mainly adds a logarithmic factor

to the oracle search. We now consider two algo-

rithms which do not search to a fixed depth, but select

branches to deepen adaptively.

2.3 Stochastic Branch and Bound 1

A stochastic branch and bound algorithm similar to

those examined here was originally developed by

(Norkin et al., 1998) for optimisation problems. At

each stage, it takes an additional sample at each leaf

node, to improve their upper bound estimates, then

expands the node with the highest mean upper bound.

Algorithm 3 uses the same basic idea, averaging the

value function samples at every leaf node.

In order to bound complexity, we need to bound

the time required until we discover a nearly optimal

branch. We calculate the number of times a subop-

timal branch is expanded before its suboptimality is

discovered. Similarly, we calculate the number of

times we shall sample the optimal node until its mean

upper bound becomes dominant. These two results

cover the time spent sampling upper bounds of nodes

in the optimal branch without expanding them and the

time spent expanding nodes in a sub-optimal branch.

Algorithm 3. Stochastic branch and bound 1.

Let L

0

be the root.

for n = 1,2,. .. do

for ω ∈ L

n

do

m

ω

++, µ ∼ ξ(ω), ˜v

ω

m

ω

= V

∗

µ

(s(ω)).

ˆv

ω

U

=

1

m

ω

∑

m

ω

i=1

˜v

ω

i

end for

ˆ

ω

∗

n

= argmax

ω

ˆv

ω

U

.

L

n+1

= C (

ˆ

ω

∗

n

) ∪ L

n

\

ˆ

ω

∗

n

end for

Lemma 3. If N is the (random) number of samples ˜v

i

from random variable V ∈ [0,β] we must take until its

empirical mean

ˆ

V

k

,

∑

k

i=1

˜v

i

> EV − ∆, then:

E[N] ≤ 1 + β

2

∆

−2

(5)

P[N > n] ≤ exp

−2β

−2

n

2

∆

2

. (6)

Proof. The first inequality follows from the Hoeffding

inequality and an integral bound on the resulting sum,

while the second inequality is proven directly via a

Hoeffding bound.

By setting ∆ to be the difference between the opti-

mal and second optimal branch, we can use the above

lemma to bound the number of times N the leaf nodes

in the optimal branch will be sampled without be-

ing expanded. The converse problem is bounding the

number of times that a suboptimal branch will be ex-

panded.

Lemma 4. If b is a branch with V

b

= V

∗

− ∆, then

it will be expanded at least to depth k

0

= log

γ

∆/β.

Subsequently,

P(K > k) < O

exp

−2β

−2

(k − k

0

)∆

2

. (7)

Proof. In the worst case, the branch is degenerate and

only one leaf has non-zero probability. We then apply

a Hoeffding bound to obtain the desired result.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

262

Algorithm 4. Stochastic branch and bound 2.

for ω ∈ L

n

do

ˆ

V

ω

U

=

1

∑

ω

0

∈C (ω)

m

ω

0

∑

ω

0

∈C (ω)

∑

m

0

ω

i=1

˜v

ω

0

i

end for

Use (3) to obtain

ˆ

V

U

for all nodes.

Set ω

0

to root.

for d = 1,... do

a

∗

d

= argmax

a

∑

ω∈Ω

d

ω

d−1

( j|a)

ˆ

V

U

(ω)

ω

d

∼ ω

d−1

( j|a

∗

d

).

if ω

d

∈ L

n

then,

L

n+1

= C (ω

d

) ∪ L

n

\ω

d

Break

end if

end for

2.4 Stochastic Branch and Bound 2

The degeneracy is the main problem of Alg. 3. Alg. 4

not only propagates upper bounds from multiple leaf

nodes to the root, but also re-uses upper bound sam-

ples from inner nodes, in order to handle the degener-

ate case where only one path has non-zero probability.

(Nevertheless, Lemma 3 applies without modification

to Alg. 4). Because we are no longer operating on

leaf nodes, we can take advantage of the upper bound

samples collected along a given trajectory. However,

if we use all of the upper bounds along a branch, then

the early samples may bias our estimates a lot. For

this reason, if a leaf is at depth k, we only average

the upper bounds along the branch to depth k/2. The

complexity of this approach is given by the following

lemma:

Lemma 5. If b is s.t. V

b

= V

∗

−∆, it will be expanded

to depth k

0

> log

γ

∆/β and

P(K > k) / exp

−2(k − k

0

)

2

(1 − γ

2

)

, k > k

0

Proof. There is a degenerate case where only one sub-

branch has non-zero probability. However we now

re-use the samples that were obtained at previous ex-

pansions, thus allowing us to upper bound the bias

by

∆(1−γ

k+1

)

(k−k

0

)(1−γ)

. This allows to use a tighter Hoeffding

bound and so obtain the desired outcome.

This bound decreases faster with k. Furthermore,

there is no dependence on ∆ after the initial transi-

tory period, which may however be very long. The

gain is due to the fact that we are re-using the upper

bounds previously obtained in inner nodes. Thus, this

algorithm should be particularly suitable for stochas-

tic problems.

2.5 Lower Bounds for Bayesian RL

We can reduce the branching factor φ, (which

is |A × S × R | for a full search) by employing

sparse sampling methods (Kearns et al., 1999) to

O{|A| exp[1/(1 − γ)]}. This was essentially the ap-

proach employed by (Wang et al., 2005). However,

our main focus here is to reduce the depth to which

each branch is searched.

The main problem with the above algorithms is

the fact that we must reach k

0

= dlog

γ

∆e to discard

∆-optimal branches. However, since the hyper-state

ω

t

arises from a Bayesian belief, we can use an addi-

tional smoothness property:

Lemma 6. The Dirichlet parameter sequence ψ

t

/n

t

,

with n

t

,

∑

K

i=1

ψ

i

t

, is a c-Lipschitz martingale with

c

t

= 1/2(n

t

+ 1).

Proof. Simple calculations show that, no matter what

is observed, E

ξ

t

(ψ

t+1

/n

t+1

) = ψ

t

/n

t

. Then, we

bound the difference |ψ

t+k

/n

t+k

− ψ

t

/n

t

| by two dif-

ferent bounds, which we equate to obtain c

t

.

Lemma 7. If µ, ˆµ are such that kT −

ˆ

T k

∞

≤ ε and

kr − ˆrk

∞

≤ ε, for some ε > 0, then

V

π

−

ˆ

V

π

∞

≤

ε

(1−γ)

2

, for any policy π.

Proof. By subtracting the Bellman equations for

V,

ˆ

V and taking the norm, we can repeatedly apply

Cauchy-Schwarz and triangle inequalities to obtain

the desired result.

The above results help us obtain better lower bounds

in two ways. First we note that initially 1/k converges

faster than γ

k

, for large γ, thus we should be able to

expand less deeply. Later, n

t

is large so we can sample

even more sparely.

If we search to depth k, and the rewards are in

[0,1], then, naively, our error is bounded by

∑

∞

n=k

γ

n

=

γ

k

/(1 − γ). However, the mean MDPs for n > k are

close to the mean MDP at k due to Lem. 6. This means

that β can be significantly smaller than 1/(1 − γ). In

fact, the total error is bounded by

∑

∞

n=k

γ

n

(n − k)/n.

For undiscounted problems, our error is bounded by

T − k in the original case and by T − k[1 + log(T /k)]

when taking into account the smoothness.

3 CONCLUSIONS AND RELATED

WORK

Much recent work on Bayesian RL focused on my-

opic estimates or full expansion of the belief tree

COMPLEXITY OF STOCHASTIC BRANCH AND BOUND METHODS FOR BELIEF TREE SEARCH IN BAYESIAN

REINFORCEMENT LEARNING

263

up to a certain depth. Exceptions include (Poupart

et al., 2006), which uses an analytical bound based

on sampling a small set of beliefs and (Wang et al.,

2005), which uses Kearn’s sparse sampling algo-

rithm (Kearns et al., 1999) to expand the tree. Both

methods have complexity exponential in the horizon,

something which we improve via the use of smooth-

ness properties induced by the Bayesian updating.

There are also connections with work on

POMDPs problems (Ross et al., 2008). However

this setting, though equivalent in an abstract sense,

is not sufficiently close to the one we consider. Re-

sults on bandit problems, employing the same value

function bounds used herein were reported in (Dim-

itrakakis, 2008), which experimentally compared al-

gorithms operating on leaf nodes only.

Related results on the online sample complexity

of Bayesian RL were developed by (Kolter and Ng,

2009), who employs a different upper bound to ours

and (Asmuth et al., 2009), who employs MDP sam-

ples to plan in an augmented MDP space, similarly to

(Auer et al., 2008) (who consider the set of plausible

MDPs) and uses Bayesian concentration of measure

results (Zhang, 2006) to prove mistake bounds on the

online performance of the algorithm.

Interestingly, Alg. 4 resembles HOO (Bubeck

et al., 2008) in the way that it traverses the tree, with

two major differences. (a) The search is adapted to

stochastic trees. (b) We use means of samples of

upper bounds, rather than upper bounds on sample

means. For these reasons, we are unable to simply

restate the arguments in (Bubeck et al., 2008).

We presented complexity results and counting ar-

guments for a number of tree search algorithms on

trees where stochastic upper and lower bounds sat-

isfying a smoothness property exist. These are the

first results of this type and partially extend the re-

sults of (Norkin et al., 1998), which provided an

asymptotic convergence proof, under similar smooth-

ness conditions, for a stochastic branch and bound al-

gorithm. In addition, we introduce a mechanism to

utilise samples obtained at inner nodes when calcu-

lating mean upper bounds at leaf nodes. Finally, we

relate our complexity results to those of (Kearns et al.,

1999), for whose lower bound we provide a small

improvement. We plan to address the online sam-

ple complexity of the proposed algorithms, as well as

their practical performance, in future work.

ACKNOWLEDGEMENTS

This work was part of the ICIS project, supported

by the Dutch Ministry of Economic Affairs, grant nr:

BSIK03024. I would like to thank the anonymous re-

viewers, as well as colleagues at the university of Am-

sterdam, Leoben and TU Crete for their comments on

earlier versions of this paper.

REFERENCES

Asmuth, J., Li, L., Littman, M. L., Nouri, A., and Wingate,

D. (2009). A Bayesian sampling approach to explo-

ration in reinforcement learning. In UAI 2009.

Auer, P., Jaksch, T., and Ortner, R. (2008). Near-optimal

regret bounds for reinforcement learning. In Proceed-

ings of NIPS 2008.

Bubeck, S., Munos, R., Stoltz, G., and Szepesv

´

ari, C.

(2008). Online optimization in X-armed bandits. In

NIPS, pages 201–208.

Dimitrakakis, C. (2008). Tree exploration for Bayesian RL

exploration. In CIMCA’08, pages 1029–1034, Los

Alamitos, CA, USA. IEEE Computer Society.

Dimitrakakis, C. (2009). Complexity of stochastic branch

and bound for belief tree search in Bayesian rein-

forcement learning. Technical Report IAS-UVA-09-

01, University of Amsterdam.

Duff, M. O. (2002). Optimal Learning Computational

Procedures for Bayes-adaptive Markov Decision Pro-

cesses. PhD thesis, University of Massachusetts at

Amherst.

Kearns, M. J., Mansour, Y., and Ng, A. Y. (1999). A sparse

sampling algorithm for near-optimal planning in large

Markov decision processes. In Dean, T., editor, IJCAI,

pages 1324–1231. Morgan Kaufmann.

Kolter, J. Z. and Ng, A. Y. (2009). Near-Bayesian explo-

ration in polynomial time. In ICML 2009.

Norkin, V. I., Pflug, G. C., and Ruszczyski, A. (1998). A

branch and bound method for stochastic global op-

timization. Mathematical Programming, 83(1):425–

450.

Poupart, P., Vlassis, N., Hoey, J., and Regan, K. (2006). An

analytic solution to discrete Bayesian reinforcement

learning. In ICML 2006, pages 697–704. ACM Press

New York, NY, USA.

Puterman, M. L. (1994,2005). Markov Decision Processes

: Discrete Stochastic Dynamic Programming. John

Wiley & Sons, New Jersey, US.

Ross, S., Pineau, J., Paquet, S., and Chaib-draa, B. (2008).

Online planning algorithms for POMDPs. Journal of

Artificial Intelligence Resesarch, 32:663–704.

Wang, T., Lizotte, D., Bowling, M., and Schuurmans, D.

(2005). Bayesian sparse sampling for on-line reward

optimization. In ICML ’05, pages 956–963, New

York, NY, USA. ACM.

Zhang, T. (2006). From ε-entropy to KL-entropy: Analysis

of minimum information complexity density estima-

tion. Annals of Statistics, 34(5):2180–2210.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

264