PEDAGOGICAL SYSTEM IN VIRTUAL ENVIRONMENTS FOR HIGH-RISK SITES

Kahina Amokrane and Domitile Lourdeaux

Heudiasyc Laboratory UMR 6599 CNRS, University of Technology of Compiègne

Centre de Recherches de Royallieu, 60200 Compiègne, France

Keywords:

Virtual environment, Intelligent tutoring system, Pedagogical feedback, Industrial risks, SEVESO sites.

Abstract:

Training at high-risk sites (SEVESO sites) faces many difficulties, including potential risks and high training costs. Virtual Environments for Training/Learning (VET/L) are well suited to overcoming such difficulties. In this work, we have developed a collaborative VET/L in which a learner can work together with autonomous virtual agents to achieve a specific goal. We equipped this environment with an Intelligent Tutoring System, HERA, which can track several learners simultaneously and show them the consequences of their errors. Thanks to its pedagogical model and module, HERA provides relevant feedback to learners, either in real time or in a replay mode. This feedback depends on predefined pedagogical rules based on the learners' level, their errors, the pedagogical goal, etc. In this paper, we present our system's architecture. We then give a detailed description of the pedagogical model and explain the functionalities of the pedagogical module.

1 INTRODUCTION

The application context of our work is risk management and prevention training for subcontracting companies that intervene at high-risk sites. These companies aim to improve the quality of training and to reduce the number of accidents whose causes are related to human factors. On-site training cannot reproduce the disruptive situations through which learners acquire the skills needed to manage risks. Virtual Reality (VR) allows the construction of custom simulations adapted to specific needs (Burkhardt et al., 2006; Mellet-D'huart et al., 2005), particularly in the area of risk prevention (Marc and Gardeux, 2007). However, few Virtual Environments (VE) take pedagogical or didactic aspects into consideration. A VE that does so promotes learning by 1) making it possible to better understand and analyze the learner's actions through his trace and a set of performance criteria; 2) providing adaptive epistemic feedback related to the learning situation; and 3) proposing adaptive scripting that modifies the VE and the course of the scenario according to the learner's behavior and learning progress.

The development of such environments for human learning in the risk area raises several questions: How can learners be trained to react to a risky or unexpected situation? How can learners be helped to form an idea of potential risks? How can a trainer follow several learners at the same time? The work presented in this paper concerns the use of VR for training, in particular the implementation of a pedagogy dedicated to knowledge acquisition within a collaborative work environment. Our work aims to assist trainers in following learners, and to help them analyze and understand the consequences of learners' actions. This analysis also assists learners during the debriefing and replay phase.

Feedback on learning and reflexive activities is important for the learner (Burkhardt et al., 2006). The first contribution of our work is to provide the trainer and the learner with individual activity traces, performance criteria and, above all, explanations of the consequences of these activities. It also aims to alert the trainer when a specific learner encounters difficulties. Thus, our work intends to provide a system able to determine the task carried out by each learner, and to detect committed errors and the risks they produce. The second contribution of our work is to propose adaptive pedagogical feedback related to the activities of each learner. The third contribution is a tracking system that maintains consistency between the scripting and the training objectives by generating or inhibiting certain events. Each scenario serves to learn a particular training objective or several combined objectives (e.g. procedure, safety standard, etc.). This requires being able to script events in the VE according to the initial scenario, but also according to the learner's correct or erroneous actions. To achieve these contributions, the scientific difficulties are 1) the lack or weakness of credible and pedagogical description languages for human activities and scripting; 2) the lack of systems able to recognize learner activities in open environments such as VEs while taking into account the dynamic aspects of interaction; and 3) the lack of generic pedagogical systems in VET/L.

Amokrane K. and Lourdeaux D. (2010).

PEDAGOGICAL SYSTEM IN VIRTUAL ENVIRONMENTS FOR HIGH-RISK SITES.

In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Artificial Intelligence, pages 371-376

DOI: 10.5220/0002736003710376

Copyright © SciTePress

To overcome these scientific difficulties, we propose a VET/L equipped with an intelligent tutoring system called HERA (Helpful agent for safEty leaRning in virtual environment). In this paper, we mainly address the third difficulty.

2 RELATED WORK

Several systems proposed in the literature integrate pedagogy and didactics in a training VE in order to assist the learner. Most of them are not generic, and the help they offer (replacing the learner in a task, guiding him, etc.) does not go beyond the prescribed procedure (Elliott et al., 1999); i.e. when a deviation or an unforeseen event occurs, such systems do not know what to do. Some systems include a model of the didactic decision-making process that produces feedback relevant to the user's knowledge (Luengo, 2005). Other systems include a generic pedagogical model that still depends on the trainer: they provide a set of assistance options for each erroneous situation, but it is up to the trainer to choose the most relevant one (Buche et al., 2004). Other systems assist the learner with a set of performance criteria provided in a replay mode (Mellet-D'huart et al., 2005). Most of these systems penalize, interrupt, and help the learner at every step, which hinders knowledge acquisition because the training is not progressive. In addition, although risks are present in several systems (Buche et al., 2004; Elliott et al., 1999), the concept of risk is not well developed in them.

3 OUR VIRTUAL ENVIRONMENT

In this work, we have developed a VET/L dedicated to knowledge acquisition, using Virtools. During a training session, each learner trains in front of a PC screen. In this environment, a learner carries out collaborative work with other autonomous virtual actors. He is represented by an avatar in the VE, along with the other agents he collaborates with. The learner controls his avatar (movement, change of viewpoint, etc.) with a mouse and a keyboard. To perform an action, he clicks on the target object and selects the desired action from the menu (Figure 1). The integration of our intelligent tutoring system HERA makes the VE adaptive to each learner's training. In addition to HERA, the environment is equipped with an ontological knowledge-based system called COLOMBO (Amokrane et al., 2008a).

4 HERA ARCHITECTURE

HERA consists of five models, which represent the knowledge containers, and five modules, which represent the processes that exchange, analyze, and record knowledge.

4.1 HERA Models

In addition to the pedagogical model (Section 5), HERA contains the following models:

4.1.1 Activity Model

It contains a detailed description of the activity that learners must perform during the training. To this end, we have developed an activity description language called HAWAI-DL. HAWAI-DL provides a formalism for the hierarchical description of procedure tasks, as described by experts interviewed by ergonomists. In addition, it can describe "peripheral" tasks, called "hyperonymous tasks", and tolerated deviations, called "BTCU tasks" (Amokrane et al., 2008b; Edward et al., 2008).
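To make the hierarchical structure concrete, the following Python sketch represents a task tree containing the three kinds of tasks mentioned above. It does not reproduce HAWAI-DL syntax. The class names, the `render` helper, and every task except "accompany valve" are hypothetical, and placing that BTCU task under "open valve" is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class TaskKind(Enum):
    """Hypothetical labels for the task categories named in Section 4.1.1."""
    PRESCRIBED = "prescribed"      # procedure task described by experts
    HYPERONYMOUS = "hyperonymous"  # "peripheral" task
    BTCU = "btcu"                  # tolerated deviation


@dataclass
class Task:
    name: str
    kind: TaskKind = TaskKind.PRESCRIBED
    subtasks: list[Task] = field(default_factory=list)


def render(task: Task, depth: int = 0) -> list[str]:
    """Flatten the tree depth-first, indenting each task by its depth."""
    lines = ["  " * depth + f"{task.name} [{task.kind.value}]"]
    for sub in task.subtasks:
        lines.extend(render(sub, depth + 1))
    return lines


# Hypothetical fragment of the "dangerous substances lading" scenario.
lading = Task("load dangerous substances", subtasks=[
    Task("connect hose"),
    Task("open valve", subtasks=[Task("accompany valve", TaskKind.BTCU)]),
    Task("check surroundings", TaskKind.HYPERONYMOUS),
])

print("\n".join(render(lading)))
```

Keeping the task kind as an attribute of each node, rather than in separate trees, lets a recognizer walk a single hierarchy and still tell prescribed steps from tolerated deviations.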

4.1.2 Errors Model

It contains a generic classification of the error types that learners may commit. This classification consists, first, of the errors classified by Hollnagel (1993). In addition, we have added the following error types: 1) task-related errors (precondition, post-condition and constructor errors); 2) target object and action errors; 3) role errors; 4) subjective errors; and 5) BTCU errors (Amokrane et al., 2008b).
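One simple way to encode this classification is an enumeration that tags each error type with its family. The sketch below is an assumption, not HERA's implementation. Only the omission phenotype stands in for Hollnagel's classification, and the member names and the `by_family` helper are hypothetical.

```python
from enum import Enum


class ErrorFamily(Enum):
    HOLLNAGEL = "Hollnagel phenotype"
    TASK = "task-related"
    OBJECT_ACTION = "target object / action"
    ROLE = "role"
    SUBJECTIVE = "subjective"
    BTCU = "BTCU"


class ErrorType(Enum):
    # Each value is a (label, family) pair; member names are hypothetical.
    OMISSION = ("omission", ErrorFamily.HOLLNAGEL)
    PRECONDITION = ("precondition", ErrorFamily.TASK)
    POSTCONDITION = ("post-condition", ErrorFamily.TASK)
    CONSTRUCTOR = ("constructor", ErrorFamily.TASK)
    WRONG_OBJECT = ("target object", ErrorFamily.OBJECT_ACTION)
    WRONG_ACTION = ("action", ErrorFamily.OBJECT_ACTION)
    ROLE = ("role", ErrorFamily.ROLE)
    SUBJECTIVE = ("subjective", ErrorFamily.SUBJECTIVE)
    BTCU = ("BTCU", ErrorFamily.BTCU)

    def __init__(self, label, family):
        self.label = label
        self.family = family


def by_family(family):
    """Return the error types of one family, in declaration order."""
    return [e for e in ErrorType if e.family is family]


print([e.label for e in by_family(ErrorFamily.TASK)])
```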

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

4.1.3 Risks Model

It describes all the risks to which the learner's errors may give rise. It consists of the following main concepts: risk, BTCU task, hyperonymous task and environmental condition. Using a Bayesian network, these concepts describe the causal relationships between human errors and risks, as well as the propagation of risks. To define these relationships, we interpret the risk analysis scenarios produced at INERIS¹ (Amokrane and Lourdeaux, 2009).
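The Python sketch below shows how a Bayesian network can link errors, environmental conditions and risk propagation. It performs exact inference by enumeration on a small network built from the valve example of Section 5.3. Whether the learner accompanies the valve and the state of the valve spring both influence a leak, and a leak may in turn cause a fire. The fire node, the function names, and all probabilities are hypothetical. They are not taken from INERIS scenarios.

```python
# Hypothetical prior on the environmental condition "spring is tired".
P_SPRING_TIRED = 0.3

# Hypothetical CPT: P(leak | valve accompanied?, spring tired?).
P_LEAK = {
    (True, True): 0.05,
    (True, False): 0.01,
    (False, True): 0.9,
    (False, False): 0.2,
}

# Hypothetical CPT for risk propagation: P(fire | leak?).
P_FIRE_GIVEN_LEAK = {True: 0.4, False: 0.0}


def p_leak(valve_accompanied, spring_tired=None):
    """P(leak | evidence); the spring state is summed out when unobserved."""
    if spring_tired is not None:
        return P_LEAK[(valve_accompanied, spring_tired)]
    return (P_SPRING_TIRED * P_LEAK[(valve_accompanied, True)]
            + (1 - P_SPRING_TIRED) * P_LEAK[(valve_accompanied, False)])


def p_fire(valve_accompanied, spring_tired=None):
    """P(fire | evidence), propagated through the leak node."""
    pl = p_leak(valve_accompanied, spring_tired)
    return pl * P_FIRE_GIVEN_LEAK[True] + (1 - pl) * P_FIRE_GIVEN_LEAK[False]


print(p_leak(False), p_fire(False))
```

With these numbers, omitting "accompany valve" raises the leak probability from 0.022 to 0.41 when the spring state is unknown. A risks module could report a value of this kind to the pedagogical module.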

4.1.4 Learner Trace

It preserves the learner's "activity trace" (what the learner has done), the errors made, the causes and consequences of these errors, and the risks produced.

4.2 HERA Modules

In addition to the pedagogical module (Section 6), HERA contains the following modules:

• Interface module, which acts as an intermediary between the exterior (the VE and COLOMBO) and the other modules.

• Recognition module, which uses plan-recognition techniques to determine what the learner is supposed to be doing. It bases this on the observable actions and effects (the "print trace"), the activity model and the errors model.

• Learner module, which produces the "activity trace". Using a formal plan-recognition approach (heuristics), it selects the task plan carried out by the learner from all the candidate task plans provided by the recognition module.

• Risks module, which uses the risks model to determine in real time the risks resulting from the learner's errors and their consequences. It also computes the probability of each risk using a Bayesian network.
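The division of work between the recognition module and the learner module can be illustrated with a deliberately simple form of plan recognition. In this Python sketch, candidate plans are those that can explain every observed action in order. A hypothetical heuristic then prefers the candidate that skips the fewest steps. This is an assumption for illustration, not HERA's actual algorithm, and the plan library is invented.

```python
def explains(observed, plan):
    """True if the observed actions occur in the plan, in the same order."""
    steps = iter(plan)
    return all(action in steps for action in observed)  # consumes the iterator


def candidate_plans(observed, library):
    """Recognition step: keep every plan compatible with the observations."""
    return {name: plan for name, plan in library.items() if explains(observed, plan)}


def skipped_steps(observed, plan):
    """Plan steps left out before the last observed action (greedy match)."""
    pos = -1
    for action in observed:
        pos = plan.index(action, pos + 1)
    return (pos + 1) - len(observed)


def select_plan(observed, candidates):
    """Learner-module step: heuristic choice among the candidates."""
    if not candidates:
        return None
    return min(candidates, key=lambda name: (skipped_steps(observed, candidates[name]), name))


LIBRARY = {  # hypothetical task plans
    "standard procedure": ["connect hose", "open valve", "start pump"],
    "procedure with BTCU": ["connect hose", "accompany valve", "open valve", "start pump"],
    "purge first": ["purge line", "connect hose", "open valve", "start pump"],
}

observed = ["connect hose", "open valve"]
print(select_plan(observed, candidate_plans(observed, LIBRARY)))
```

A skipped step in the selected plan is also a natural place to flag a possible omission error. For example, "purge first" would imply that the purge was omitted.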

5 PEDAGOGICAL MODEL

In addition to the four models presented previously, HERA contains a pedagogical model that determines why, how and when to intervene to assist learners and help the trainer. This model consists principally of the following elements:

5.1 Pedagogical Goal

It represents the "why" of a work session. These goals are determined from the work environment, from in situ observations, and from interviews conducted by ergonomists of Paris Descartes University and by risk analysis experts of INERIS. We distinguish several pedagogical goals, such as taking risks into account by type (chemical, nuclear, etc.), taking human relations into account, etc.

¹ French National Institute for Industrial Environment and Risks (Institut National de l'Environnement Industriel et des Risques)

5.2 Pedagogical Situation

To allow progressive training, we define for each pedagogical goal the situations in which the learner will be placed, so that he can be evaluated during a training session. In general, pedagogical situations correspond to BTCU tasks, hyperonymous tasks, etc. For example, in the "dangerous substances lading" scenario, if the learner does not perform the BTCU task "accompany valve", a leak may occur. This task therefore represents a pedagogical situation whose pedagogical goal is to "take risks into account".

5.3 Environmental Conditions

To allow the trainer to control a training session and verify the knowledge acquired by a learner, we added environmental conditions to the pedagogical model. These conditions represent the world state (the states of the environment's objects). They can be favorable preconditions of tasks: for example, for the task "free a bolt", it is preferable that the "bolt be seized up". They can also be triggering conditions of a risk: for example, if the learner does not "accompany the valve" and "its spring is tired", then a leak is triggered. In other words, environmental conditions help determine whether the learner's errors actually trigger risks.
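The triggering conditions described above can be expressed as rules that combine the learner's activity with the world state set by the trainer. The following Python sketch encodes the valve example. The `TriggerRule` class, state keys such as `valve.spring`, and the deterministic firing are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class TriggerRule:
    risk: str
    omitted_task: str  # task whose omission by the learner matters
    world_conditions: dict = field(default_factory=dict)  # required object states

    def fires(self, done_tasks, world):
        """The risk is triggered when the task was omitted and the world matches."""
        return (self.omitted_task not in done_tasks
                and all(world.get(key) == value
                        for key, value in self.world_conditions.items()))


def triggered_risks(rules, done_tasks, world):
    return [rule.risk for rule in rules if rule.fires(done_tasks, world)]


RULES = [TriggerRule("leak", "accompany valve", {"valve.spring": "tired"})]

# Environmental condition chosen by the trainer at the start of the session.
world = {"valve.spring": "tired"}
print(triggered_risks(RULES, {"connect hose", "open valve"}, world))  # ['leak']
```

Because the condition lives in the world state, the trainer can make the same omission harmless or dangerous by changing a single object state.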

5.4 Learner’s Level

To make the training system adaptive and personalized to each learner's knowledge, we consider five learner levels: novice, intermediate novice, intermediate expert, expert and very expert. The amount of knowledge to be acquired increases as the learner's level evolves. The pedagogical goals, the relevant pedagogical situations, the environmental conditions and the learner's level are initialized by the trainer at the beginning of a training session.

5.5 Situated, Adaptive and Pedagogical Feedback

This element covers the feedback that the system provides to the learner and the trainer, in real time or in replay mode. We distinguish several types of feedback:


• Scale modification (enlarging some parts to give a better view, etc.).

• Reification, i.e. showing the learner some concepts or abstractions in a concrete and intelligible form (emission of a colourless gas, particles invisible to the naked eye, etc.).

• Restrictions that limit the learner's actions, such as stop messages sent in real time to a novice learner when he commits a severe error that could lead to a risk (Figure 1).

• Superposition of information:

– Comments and reasoned explanations about the consequences of the learner's actions. These messages are displayed in real time to the learner in the VE (Figure 1) and on the trainer's screen (Figure 3). They are also displayed in replay mode on the learner's screen. For example, if the learner uses the wrong tool, the system displays the explanation "This is not the right tool".

– Warning and attention messages that draw the learner's attention. For example, when an intermediate novice learner commits an error that causes a risk, a warning message is sent; when the error could cause a risk, an attention message is sent (Figure 1).

Figure 1: Object menu, textual feedback and performance criteria in the VE.
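The level-dependent messages described in the list above can be summarized as a small decision function. In this hypothetical Python sketch, the five levels come from Section 5.4. The function name, its boolean inputs, and the choice to defer every other case to replay mode are assumptions.

```python
from enum import IntEnum


class Level(IntEnum):
    NOVICE = 1
    INTERMEDIATE_NOVICE = 2
    INTERMEDIATE_EXPERT = 3
    EXPERT = 4
    VERY_EXPERT = 5


def realtime_message(level, causes_risk, could_cause_risk, severe):
    """Return the real-time message type, or None to defer to replay mode."""
    if level is Level.NOVICE and severe and (causes_risk or could_cause_risk):
        return "stop"
    if level is Level.INTERMEDIATE_NOVICE:
        if causes_risk:
            return "warning"
        if could_cause_risk:
            return "attention"
    return None  # assumption: other cases are only explained in replay mode


print(realtime_message(Level.INTERMEDIATE_NOVICE, False, True, False))
```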

5.6 Situated and Adaptive Scripting

Changes and adjustments of the scripting (the scenario and the behaviors of virtual actors): situated and adaptive scripting adapts the session to the learner's profile and to the objectives for which the VE is used. It also makes it possible to control and modify the scenario's progress in real time. In addition, it guides the activity and behavior of the autonomous virtual agents in order to analyze the learner's behavior and enrich his learning profile. These changes correspond to:

• Triggering, in real time, of risks due to learner errors (Figure 2), e.g. triggering sparks if the learner uses a non-ATEX tool in an ATEX zone.

• Modifications of the scenario by introducing disruptive elements in order to observe how the learner reacts to unforeseen situations.
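The Python sketch below shows one way the scripting could generate or inhibit such events. It assumes, hypothetically, that each scripted event is tagged with the pedagogical goal it serves and that events whose goal the trainer did not select are inhibited. The event names other than the sparks example are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScriptEvent:
    name: str
    goal: str  # pedagogical goal the event serves (hypothetical tagging)


def events_for_action(tool_is_atex, zone_is_atex):
    """Candidate events caused by the learner's latest action."""
    events = []
    if zone_is_atex and not tool_is_atex:
        events.append(ScriptEvent("sparks", "take risks into account"))
    return events


def schedule(events, selected_goals):
    """Generate the events serving a selected goal; inhibit the others."""
    fired = [e for e in events if e.goal in selected_goals]
    inhibited = [e for e in events if e.goal not in selected_goals]
    return fired, inhibited


# Hypothetical disruptive element injected by the scenario.
disruption = ScriptEvent("co-worker leaves the area", "take human relations into account")
events = events_for_action(tool_is_atex=False, zone_is_atex=True) + [disruption]
fired, inhibited = schedule(events, {"take risks into account"})
print([e.name for e in fired], [e.name for e in inhibited])
```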

5.7 Performance Criteria

Several criteria may be used to assess learners. Since our system is dedicated to SEVESO sites and to organizations interested in human relations, we have proposed the following criteria to the learner (Figure 2) and to the trainer (Figure 3):

Figure 2: Fire triggering and Performance criteria.

Risk. Learners going to work at SEVESO sites must satisfy this criterion, i.e. a learner must reach a good level on it to be able to work in situ without danger. This criterion is evaluated from the severity of the risks, which is computed by the risks module.

Errors. Statistical studies have shown that good operators tend to make errors before reaching the optimal solution. This suggests that the ability to detect and correct errors is a principal component of an effective solution. Based on this assumption, learners in our system, especially novices, are not penalized for each error committed unless the error persists or is severe. The evaluation of this criterion can be extended across several sessions in order to follow the learner's progress on a specific type of error.

Productivity. This is an important aspect for industrial companies, since it represents the quantity and quality of products.

Time. Learners must respect time constraints in most work situations. In the "industrial maintenance" scenario, each session has a specific duration. In the scenarios dealing with risks, time matters especially when reacting to a risk situation. For example, in the "dangerous substances lading" scenario, we take into account the time between the moment a risk is triggered and the moment the learner reacts to this unexpected situation. This criterion is calculated as follows:

For a timed session: TC (time criterion) = session duration / duration estimated by the expert for this session.

For a reaction to a risk: TC = time spent reacting to the risk / reaction time estimated by the expert.
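Both variants of the time criterion are the same ratio applied to different durations. The Python sketch below assumes durations in seconds and reads a value above 1 as slower than the expert's estimate. The example durations are hypothetical.

```python
def time_criterion(actual_s, expert_estimate_s):
    """TC = actual duration / duration estimated by the expert."""
    if expert_estimate_s <= 0:
        raise ValueError("the expert estimate must be positive")
    return actual_s / expert_estimate_s


session_tc = time_criterion(45 * 60, 40 * 60)  # 2700 / 2400 = 1.125
reaction_tc = time_criterion(30, 20)          # 30 / 20 = 1.5
print(session_tc, reaction_tc)
```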

These criteria can be computed during a training session or in replay mode, and displayed to learners and to the trainer. Performance criteria are most useful in replay mode, since they let learners see the deviations they made without being disturbed during the training session. They are represented by icons whose colors change with the severity of the criteria. For example, the risk criterion icon is green if there is no risk at all (probability 0), yellow if the risk probability is low (in ]0, 0.3]), and red if the probability is higher than 0.6.
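The color mapping for the risk icon can be written as a small function. The text does not say which color applies to probabilities in ]0.3, 0.6], so this hypothetical Python sketch returns `None` for that interval rather than guessing.

```python
def risk_icon_color(p):
    """Map a risk probability to the icon color described in the text."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if p == 0.0:
        return "green"   # no risk at all
    if p <= 0.3:
        return "yellow"  # low risk, ]0, 0.3]
    if p > 0.6:
        return "red"     # high risk
    return None          # ]0.3, 0.6]: color not specified in the text


print([risk_icon_color(p) for p in (0.0, 0.2, 0.5, 0.8)])
```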

Figure 3: Trainer interface.

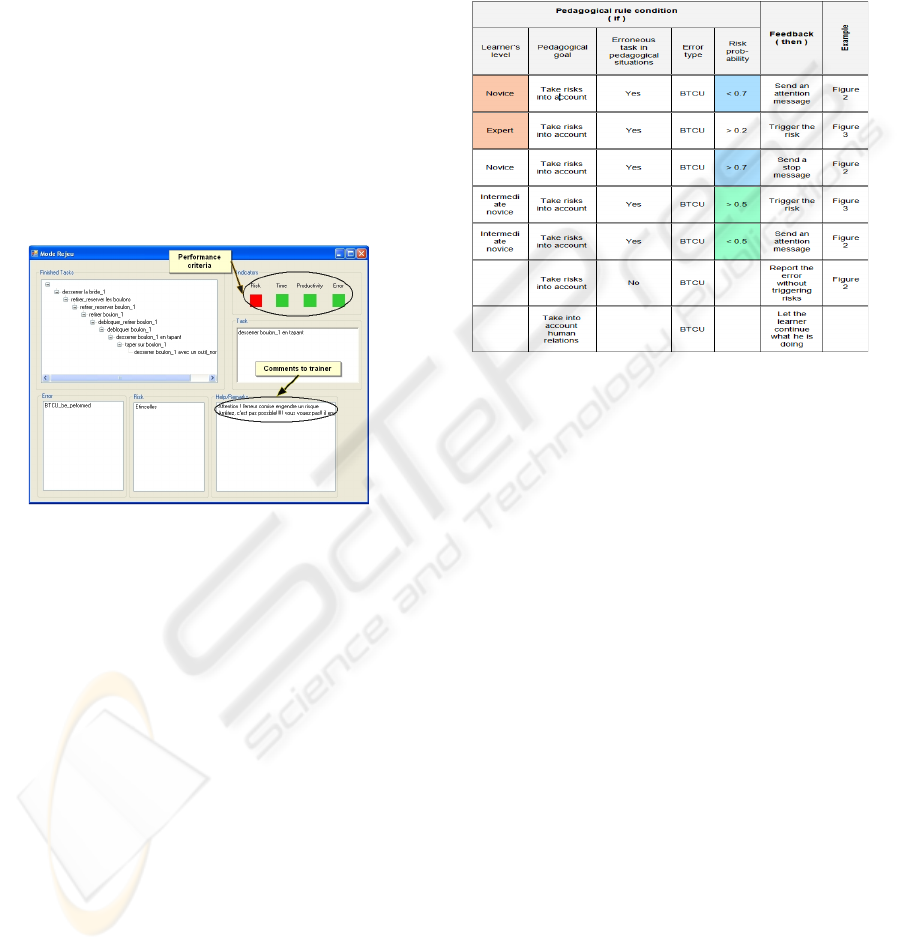

5.8 Pedagogical Rules

To enable our system to reason, we have defined a set of pedagogical rules that determine the pertinent feedback. Table 1 shows some examples of pedagogical rules. These rules are described using the following concepts:

• Type of errors committed by the learner (determined by the tracking system).

• Learner's level: the choice of feedback depends on the learner's level, which makes the training adaptive.

• Risk probability: it is used when there are BTCU errors or omissions of other safety-related tasks.

• Pedagogical goal and situation: when choosing feedback, the pedagogical rules select some aspects rather than others, to highlight certain consequences of the learner's actions while maintaining pedagogical and scenario coherence. This avoids overburdening the learner, i.e. he is penalized online only for the errors related to the selected pedagogical goals. For other errors, the consequences are recorded in the learner's trace, to be shown and explained in replay mode.

Table 1: Examples of pedagogical rules.
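Because each rule combines these concepts, rules can be stored as data and evaluated in order. The Python sketch below is hypothetical. The rule contents, the goal "respect the procedure", and the first-match strategy are illustrative assumptions, not the rules of Table 1. The sketch shows how an error unrelated to the selected pedagogical goals is routed to replay mode instead of penalizing the learner online.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PedagogicalRule:
    error_type: str
    levels: frozenset  # learner levels the rule applies to
    min_risk: float    # minimum risk probability (0.0 = any)
    goal: str          # pedagogical goal the rule belongs to
    feedback: str


# Hypothetical rules, ordered from most to least specific.
RULES = [
    PedagogicalRule("BTCU", frozenset({"novice"}), 0.6,
                    "take risks into account", "stop message"),
    PedagogicalRule("BTCU", frozenset({"novice", "intermediate novice"}), 0.0,
                    "take risks into account", "warning message"),
    PedagogicalRule("wrong tool", frozenset({"novice", "intermediate novice"}), 0.0,
                    "respect the procedure", "This is not the right tool"),
]


def choose_feedback(error_type, level, risk_p, selected_goals, rules=RULES):
    """Return (feedback, mode) from the first matching rule."""
    for rule in rules:
        if (rule.error_type == error_type and level in rule.levels
                and risk_p >= rule.min_risk):
            # Errors outside the selected goals are only explained in replay.
            mode = "real time" if rule.goal in selected_goals else "replay"
            return rule.feedback, mode
    return None, "replay"  # no rule matched: the error is only kept in the trace


print(choose_feedback("BTCU", "novice", 0.7, {"take risks into account"}))
```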

6 PEDAGOGICAL MODULE

This module decides which feedback is sent to the learner. For each error message received from the learner module, it checks the error type. If the error is a BTCU error, an omission of a safety-related task, or a subjective viewpoint error, the module waits for a message from the risks module giving the produced risk and its probability. It then checks the learner's level, together with the pedagogical goals and situations selected by the trainer at the beginning of the training session. Based on these data, the pedagogical module applies the relevant pedagogical rule to determine the appropriate feedback. If the feedback is to be given in real time, the module sends a message to the virtual environment and to the trainer. Otherwise, it records the feedback for use in replay mode.
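The decision flow above can be sketched as a single handler. In this Python sketch, waiting for the risks module is modeled as a blocking call, and the risks module and rule base are hypothetical stand-ins passed in as functions. The `Outbox` class and all message strings are assumptions for illustration.

```python
from dataclasses import dataclass, field

# Error types for which the module waits for the risks module (Section 6).
RISK_DEPENDENT = {"BTCU", "safety-task omission", "subjective viewpoint"}


@dataclass
class Outbox:
    to_ve: list = field(default_factory=list)
    to_trainer: list = field(default_factory=list)
    replay_log: list = field(default_factory=list)


def handle_error(error_type, session, risks_module, rule_base, out):
    """Decide and route the feedback for one error message."""
    risk = risks_module(error_type) if error_type in RISK_DEPENDENT else None
    feedback, real_time = rule_base(error_type, session, risk)
    if feedback is None:
        return
    if real_time:
        out.to_ve.append(feedback)
        out.to_trainer.append(feedback)
    else:
        out.replay_log.append(feedback)


# Hypothetical stand-ins for the risks module and the rule base.
risk_queries = []


def demo_risks_module(error_type):
    risk_queries.append(error_type)
    return ("leak", 0.41)


def demo_rule_base(error_type, session, risk):
    if risk is not None and "take risks into account" in session["goals"]:
        name, probability = risk
        real_time = session["level"] in {"novice", "intermediate novice"}
        return f"warning: possible {name} (p={probability})", real_time
    return f"explanation of the {error_type} error", False


session = {"level": "novice", "goals": {"take risks into account"}}
out = Outbox()
handle_error("BTCU", session, demo_risks_module, demo_rule_base, out)
handle_error("role", session, demo_risks_module, demo_rule_base, out)
print(out.to_ve, out.replay_log, risk_queries)
```

Only the BTCU error triggers a query to the risks module. The role error bypasses it and is deferred to replay mode.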

7 CONCLUSIONS

In our work, we have developed a collaborative VET/L dedicated to industrial companies dealing with SEVESO sites and human relations. We have three work scenarios: pipe substitution, lading of dangerous substances, and industrial maintenance. In this VET/L, a learner can work together with autonomous virtual agents to achieve a specific goal. We equipped this environment with an Intelligent Tutoring System, called HERA, which can track several learners simultaneously.

Figure 4: Data exchange between learner, pedagogical and risks modules.

HERA determines what the learner is doing and detects committed errors and produced risks. Thanks to its pedagogical module and model, HERA provides the feedback needed to help learners, draw their attention, warn or stop them, and show them the consequences of their errors. It also allows the trainer to analyze and understand the consequences of learners' actions on the organizational, technical, and human systems. HERA's pedagogical model consists mainly of a set of rules that determine the appropriate feedback. These rules are based principally on the learner's level, the pedagogical goals, the pedagogical situations and the environmental conditions. The feedback sent to learners can take the form of: 1) situated, adaptive and pedagogical feedback; 2) situated and adaptive scripting; 3) performance criteria. Our system is personalized and adaptive to the learner's level. Thus, the feedback varies with the learner's level in order, for example, not to disturb novices, to penalize experts, etc.

So far, we have implemented the generic core of our system. A preliminary evaluation has been carried out to validate the pedagogical model concepts used to describe the pedagogical rules. In the future, we intend to evaluate the whole system and the impact of the feedback on learners and trainers, and to improve the system according to industrial companies' needs.

ACKNOWLEDGEMENTS

This work is part of the V3S project (Virtual Reality for Safe Seveso Subcontractors). The partners of the project are: UTC/Heudiasyc UMR6599, INERIS, Paris Descartes University, CEA-LIST, EMISSIVE, EBTRANS, CICR, SI-GROUP, TICN and APTH. We want to thank M. Sbaouni and M. Fraslin, who developed the virtual environment. We thank J.M. Burkhardt and S. Couix for their contribution to the design of HAWAI-DL, and R. Perney, who implemented Visual HAWAI. We would like to thank A. Ben-Ayed, who built the activity model. Finally, thanks are due to J. Marc for his remarks and explanations.

REFERENCES

Amokrane, K. and Lourdeaux, D. (2009). Virtual Reality Contribution to Training and Risk Prevention. In Proceedings of the International Conference on Artificial Intelligence (ICAI'09), Las Vegas, Nevada, USA.

Amokrane, K., Lourdeaux, D., and Burkhardt, J. (2008a). Learning and training in virtual environment for risk. In Proceedings of the 20th IEEE International Conference on Tools with Artificial Intelligence, Dayton, Ohio, USA.

Amokrane, K., Lourdeaux, D., and Burkhardt, J. (2008b). Learner Behavior Tracking in a Virtual Environment. In Proceedings of the Virtual Reality International Conference, Laval, France.

Buche, C., Querrec, R., De Loor, P., and Chevaillier, P. (2004). MASCARET: A Pedagogical Multi-Agent System for Virtual Environment for Training. International Journal of Distance Education Technologies (IJDET), pages 41–61.

Burkhardt, J., Lourdeaux, D., and Mellet-d'Huart, D. (2006). Le traité de la Réalité Virtuelle, Les applications de la Réalité Virtuelle, volume 4, chapter La conception des environnements virtuels pour l'apprentissage, pages 43–103. Presses de l'Ecole des Mines.

Edward, L., Lourdeaux, D., Lenne, D., Barthes, J., and Burkhardt, J. (2008). Modelling autonomous virtual agent behaviours in a virtual environment for risk. In International Conference on Intelligent Virtual Environments and Virtual Agent, Nanjing, China.

Elliott, C., Rickel, J., and Lester, J. (1999). Pedagogical Agents and Affective Computing: An Exploratory Synthesis. In Artificial Intelligence Today: Recent Trends and Developments.

Hollnagel, E. (1993). The phenotype of erroneous actions. International Journal of Man-Machine Studies, 39(1):1–32.

Luengo, V. (2005). Design of adaptive feedback in a web educational system. In Workshop on Adaptive Systems for Web-Based Education: Tools and Reusability, 12th International Conference on Artificial Intelligence in Education, Amsterdam.

Marc, J. and Gardeux, F. (2007). Contribution of a Virtual Reality environment dedicated to chemical risk prevention training. In Proceedings of the 9th Virtual Reality International Conference, pages 45–53.

Mellet-D'huart, D., Michel, G., Steib, D., Dutilleul, B., Paugam, B., and Dasse Courtois, R. (2005). Usages de la réalité virtuelle en formation professionnelle d'adultes : Entre tradition et innovation. In First International VR-Learning Seminar, Laval, France.
