AUTOMATIC TAG IDENTIFICATION IN WEB SERVICE DESCRIPTIONS∗

Jean-Rémy Falleri, Zeina Azmeh, Marianne Huchard and Chouki Tibermacine

LIRMM, CNRS and Montpellier II University - 161, rue Ada 34392 Montpellier Cedex 5, France

Keywords:

Tags, Web services, Text mining, Machine learning.

Abstract:

With the increasing interest toward service-oriented architectures, the number of existing Web services is

dramatically growing. Therefore, finding a particular service among this ever increasing number of services

is becoming a time-consuming task. User tags or keywords have proven to be a useful technique to smooth

browsing experience in large document collections. Some service search engines, like Seekda, already propose

this kind of facility. Service tagging, which is a fairly tedious and error-prone task, is done manually by the

providers and the users of the services. In this paper we propose an approach that automatically extracts tags

from Web service descriptions. It identifies a set of relevant tags extracted from a service description and

leaves only to the users the task of assigning tags not present in this description. The proposed approach is

validated on a corpus of 146 services extracted from Seekda.

1 INTRODUCTION

Service-oriented architectures (SOA) are achieved by

connecting loosely coupled units of functionality. The

most common implementation of SOA uses Web Ser-

vices. One of the main tasks is to find the relevant

Web services to use. With the increasing interest to-

ward SOA, the number of existing Web services is

dramatically growing. Finding a particular service

among this huge amount of services is becoming a

time-consuming task.

Web services are usually described with a standard

XML-based language called WSDL. A WSDL file in-

cludes a documentation part that can be filled with a

text indicating to the user what the service does. The

potential users of the service spend time to understand

its functionality and to decide whether or not to use it.

A selected service may become unavailable after a period of time; a mechanism that facilitates the discovery of similar services therefore becomes indispensable. Tagging is a mechanism that has been

introduced in search engines and digital libraries to

fulfill exactly this objective.

Tagging is the process of describing a resource by

assigning some relevant keywords (tags) to it.

∗ France Télécom R&D has partially supported this work (contract CPRE 5326).

The tagging process is usually done manually by the users

of the resource to be tagged. Tags are useful when

browsing large collections of documents. Indeed, un-

like in traditional hierarchical categories, documents

can be assigned an unlimited number of tags. It allows

cross-browsing between the documents. Seekda², one

of the main service search engines, already allows its

users to tag its indexed services. Tags are also useful

to have a quick understanding of a particular service

and service classification or clustering.

In this paper, we present an approach that auto-

matically extracts a set of relevant tags from a WSDL.

We use a corpus of user-tagged services to learn how

to extract relevant tags from untagged service descrip-

tions. Our approach relies on text mining techniques

in order to extract candidate tags out of a descrip-

tion, and machine learning techniques to select rele-

vant tags among these candidates. We have validated

this approach on a corpus of 146 user-tagged Web ser-

vices extracted from Seekda. Results show that this

approach is significantly more efficient than the tradi-

tional (but fairly efficient) tfidf weight.

The remainder of the paper is organized as follows. Section 2 introduces the context of our work.

Then, Section 3 details our tag extraction process.

² http://www.seekda.com

Falleri J., Azmeh Z., Huchard M. and Tibermacine C.
AUTOMATIC TAG IDENTIFICATION IN WEB SERVICE DESCRIPTIONS.
DOI: 10.5220/0002804800400047
In Proceedings of the 6th International Conference on Web Information Systems and Technology (WEBIST 2010), page
ISBN: 978-989-674-025-2
Copyright © 2010 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Section 4 presents a validation of this process and discusses the obtained results. Before concluding and

presenting the future work, we describe the related

work in Section 5.

2 CONTEXT OF THE WORK

Our work focuses on extracting tags from service de-

scriptions. In the literature, we found a similar prob-

lem: keyphrase extraction, which aims at extracting

important and relevant short phrases from a plain-text

document. It is mostly used on journal articles or on

scientific papers in order to smooth browsing and in-

dexing of those documents in digital libraries. Before

starting our work, we analyzed one assessed approach

that performs keyphrase extraction: Kea (Frank et al.,

1999) (Section 2.1). We concluded that this approach, designed for plain-text documents, cannot be applied straightforwardly to service descriptions (Section 2.2).

2.1 Description of Kea

Kea (Frank et al., 1999) is a keyphrase extractor for

plain-text documents. It uses a Bayesian classification

approach. Kea has been validated on several corpora

(Jones and Paynter, 2001; Jones and Paynter, 2002)

and has proven to be an efficient approach. It takes

a plain-text document as input. From this text, it ex-

tracts a list of candidate keyphrases. These candidates

are the $\bigcup_{i=1}^{k} i$-grams of the text, that is, all word sequences of length 1 to $k$. For instance, let us consider the following sample document: “I am a sample document”. The candidate keyphrases extracted if $k = 2$ are: (I, am, a, sample, document, I am, am a, a sample, sample document). To choose the most suitable

value of k for the particular task of extracting tags

from WSDL files, we made some measurements and

found that 86% of the tags are of length 1. This clearly shows that one-word tags are assigned in the vast majority of cases. Therefore we fix k = 1 in our approach (meaning that we extract only one-word tags). Nevertheless, our approach, like Kea, is easily generalizable to extract tags of length k.
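As an illustration of the candidate extraction described above, the following minimal sketch enumerates all word sequences of length 1 to k. The function name `candidate_keyphrases` and the whitespace-based word splitting are assumptions made for this example; they are not taken from the Kea implementation.

```python
def candidate_keyphrases(text: str, k: int) -> list[str]:
    """Return the distinct word n-grams of `text` for n = 1..k, shortest first."""
    words = text.split()  # assumption: words are separated by whitespace
    candidates: list[str] = []
    for n in range(1, k + 1):
        for start in range(len(words) - n + 1):
            phrase = " ".join(words[start:start + n])
            if phrase not in candidates:  # keep each candidate only once
                candidates.append(phrase)
    return candidates


if __name__ == "__main__":
    print(candidate_keyphrases("I am a sample document", 2))
```

With k = 1, as fixed in our approach, the function simply returns the distinct words of the text.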

Kea then computes two features on every candi-

date keyphrase. First, distance is computed, which is

the number of words that precede the first observa-

tion of the candidate divided by the total number of

words of the document. For instance, for the sample document, $\mathit{distance}(\text{am a}) = \frac{1}{5}$. Second, tfidf, a standard weight in the information retrieval field, is computed. It measures how much a given candidate keyphrase of a document is specific to this document. More formally, for a candidate $c$ in a document $d$, $\mathit{tfidf}(c,d) = \mathit{tf}(c,d) \times \mathit{idf}(c)$. The metric $\mathit{tf}(c,d)$ (term frequency) corresponds to the frequency of the term $c$ in $d$. It is computed with the following formula: $\mathit{tf}(c,d) = \frac{\text{occurrences of } c \text{ in } d}{\text{size of } d}$. The metric $\mathit{idf}(c)$ (inverse document frequency) measures the general importance of the term in a corpus $D$: $\mathit{idf}(c) = \log\left(\frac{|D|}{|\{d : c \in d\}|}\right)$.
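The two Kea features can be sketched directly from the definitions above. This is only an illustration: the function names, the whitespace tokenization and the choice of the natural logarithm are assumptions made for the example, and documents are assumed to be non-empty.

```python
import math


def _positions(candidate: str, document: str) -> list[int]:
    """Word offsets at which `candidate` (one or more words) occurs in `document`."""
    words, cand = document.split(), candidate.split()
    return [i for i in range(len(words) - len(cand) + 1)
            if words[i:i + len(cand)] == cand]


def distance(candidate: str, document: str) -> float:
    """Number of words preceding the first occurrence, divided by the document size."""
    positions = _positions(candidate, document)
    if not positions:
        raise ValueError("candidate does not occur in document")
    return positions[0] / len(document.split())


def tf(candidate: str, document: str) -> float:
    """Occurrences of the candidate in the document divided by the document size."""
    return len(_positions(candidate, document)) / len(document.split())


def idf(candidate: str, corpus: list[str]) -> float:
    """log(|D| / |{d : c in d}|); natural logarithm chosen here as an assumption."""
    containing = sum(1 for d in corpus if _positions(candidate, d))
    if containing == 0:
        raise ValueError("candidate does not occur in the corpus")
    return math.log(len(corpus) / containing)


def tfidf(candidate: str, document: str, corpus: list[str]) -> float:
    return tf(candidate, document) * idf(candidate, corpus)


if __name__ == "__main__":
    doc = "I am a sample document"
    print(distance("am a", doc))  # one preceding word out of five
```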

Kea uses a naive Bayes classifier to classify the

different candidate keyphrases using the two previ-

ously described features. The authors showed that this

type of classifier is optimal (Domingos and Pazzani,

1997) for this kind of classification problem. The two

classes in which the candidate keyphrases are classi-

fied are: keyphrase and not keyphrase. Several evalua-

tions on real world data report that Kea achieves good

results (Jones and Paynter, 2001; Jones and Paynter,

2002). In the next section, we describe how WSDL

files are structured and highlight why the Kea approach is not directly applicable to them.

2.2 WSDL Service Descriptions

We extract tags from the following WSDL elements:

services, ports, port types, bindings, types and mes-

sages. Each element has an identifier, which can op-

tionally come with a plain-text documentation. Figure

3 (left) shows the general outline of a WSDL file.

One simple idea to extract tags from services

would be to use Kea on their plain-text documenta-

tions. Unfortunately, an analysis of our service cor-

pus shows that about 38% of the services are not doc-

umented at all. Another important source of informa-

tion to discover tags are the identifiers contained in

the WSDL. For instance weather would surely be an

interesting tag for a service named WeatherService.

Unfortunately, identifiers are not easy to work with, for two reasons. First, identifiers are usually a concatenation of different words. Second, they are associated with different kinds of elements (services, ports, types, ...) that do not have the same importance in a service description. Therefore, extracting candidate tags from WSDL files is not straightforward: several pre-processing and text-mining techniques are required. Moreover, the previously described features (tfidf and distance) are not easy to adapt to words from WSDL files, first because WSDL deals with two categories of words (the documentation and the identifiers) that are not necessarily related, and second because the distance feature is meaningless on the identifiers, which are defined in an arbitrary order.


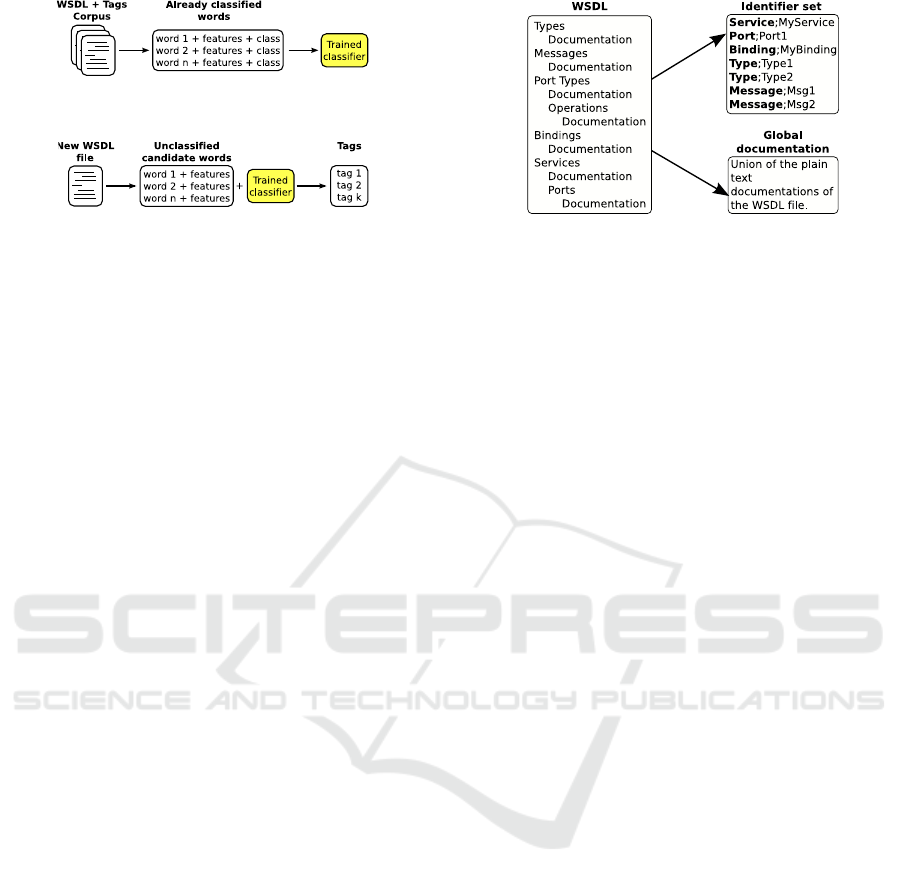

Figure 1: The training phase.

Figure 2: The tag extraction phase.

3 TAG EXTRACTION PROCESS

Similarly to Kea, we model the tag extraction problem

as the following classification problem: classifying a

word into one of the two tag and no tag classes. Our

overall process is divided into two phases: a training

phase and a tag extraction phase.

Figure 1 summarizes the behavior of the training

phase. In this phase we have a corpus of WSDL files

and associated tags, extracted from Seekda (Section 3.1). From this training corpus, we first extract a list of candidate words by using text-mining techniques (Sections 3.2 and 3.3). Then several features (metrics) are computed on every candidate. A feature is

a common term in the machine learning field. As an

example, it may be the frequency of the words in their

WSDL file. Finally, since manual tags are assigned to

those WSDL files, we use them to classify the can-

didate words coming from our WSDL files. Using

this set of candidate words, computed features and as-

signed classes, we train a classifier.

Figure 2 describes the tag extraction phase. First,

like in the training phase, a list of candidate words is

extracted from an untagged WSDL file and the same

features are computed. The only difference with the

training phase is that we do not know in advance

which of those candidates are true tags. Therefore we

use the previously trained classifier to automatically

perform this classification. Finally the tags extracted

from the WSDL file are the words that have been clas-

sified in the tag class.

3.1 Creation of the Training Corpus

As explained above, our approach requires a train-

ing corpus, denoted by T . Since we want to extract

tags from WSDL files, T has to be a set of couples

(wsdl,tags), with wsdl a WSDL file, and tags a set of

corresponding manually assigned tags. We created a

corpus using Seekda. Indeed, Seekda allows its users

to manually assign tags to its indexed services. We

created a program that parses the Seedka result pages

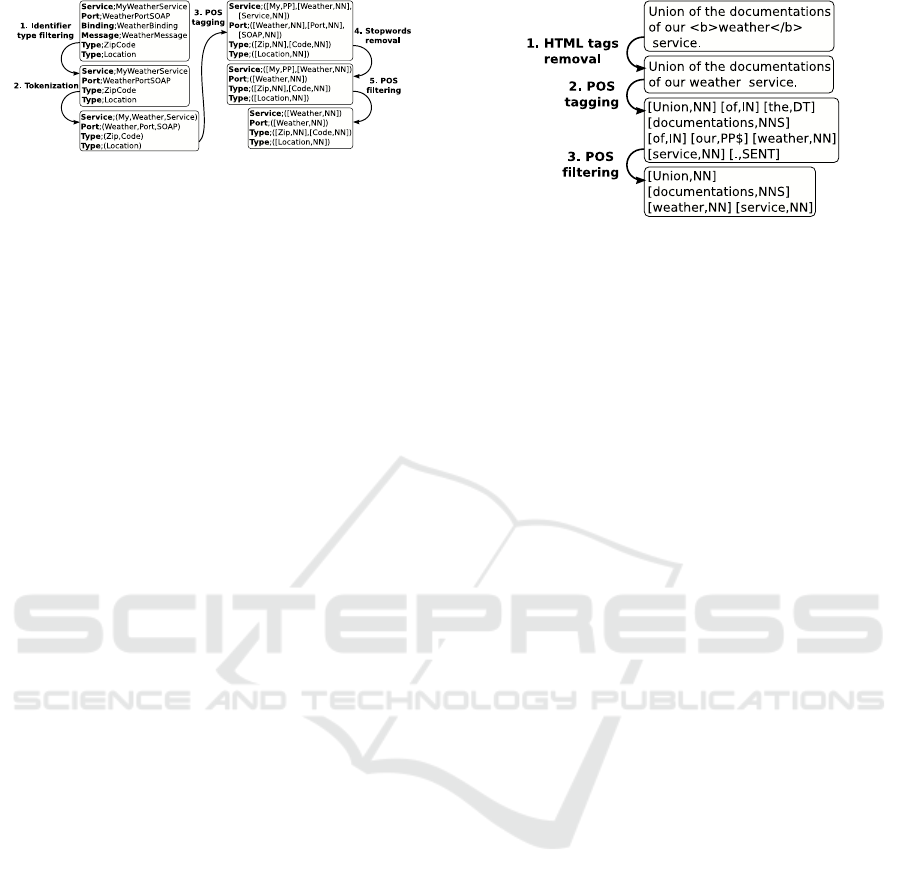

Figure 3: WSDL pre-processing.

to extract WSDL files together with their user tags.

To ensure that the services of our corpus were sig-

nificantly tagged, we only retain the WSDL files that

have at least five tags. Using this program, we ex-

tracted 150 WSDL files. Then, we removed from T

the WSDL files that triggered parsing errors. Finally,

we have a training corpus containing 146 WSDL files

together with their associated tags.

To clean the tags of the training corpus, we performed three operations. We removed the non-alphanumeric characters from the tags (we found several tags like _onsale or :finance). We also removed

a meaningless and highly frequent tag (the _unkown

tag). Finally, we divided the tags with length n > 1

into n tags of length 1, in order to have only tags

of length 1 (the reason has been explained in section

2.1). The length of a tag is defined as the number of

words composing this tag.

Finally, we have a corpus of 146 WSDL files and

1393 tags (average of 9.54 tags per WSDL file). An

analysis of T shows that about 35% of the user tags

are already contained in the WSDL files.
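The three cleaning operations can be sketched as follows. The function name `clean_tags` and its `meaningless` parameter are hypothetical, and the sketch splits multi-word tags on whitespace before stripping characters so that the spaces separating words are not lost; the actual order used in our program may differ.

```python
import re


def clean_tags(raw_tags, meaningless=frozenset({"unkown"})):
    """Return one-word tags: split multi-word tags, strip non-alphanumeric
    characters, and drop tags listed in `meaningless` (hypothetical parameter)."""
    cleaned = []
    for tag in raw_tags:
        for word in tag.split():  # a tag of length n yields n tags of length 1
            word = re.sub(r"[^0-9A-Za-z]", "", word)  # e.g. "_onsale" -> "onsale"
            if word and word.lower() not in meaningless and word not in cleaned:
                cleaned.append(word)
    return cleaned


if __name__ == "__main__":
    print(clean_tags(["_onsale", ":finance", "_unkown", "exchange rate"]))
```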

3.2 Pre-processing of the WSDL Files

As we have seen before, a WSDL file contains several element definitions optionally containing a plain-text documentation. The left side of Figure 3 shows such a data structure. In order to simplify the WSDL XML representation into a format more suitable for text-mining techniques, we decided to extract two documents from a WSDL description. First, a set of couples (type, ident) representing the different elements defined in the WSDL, where type ∈ {Service, Port, PortType, Message, Type, Binding} is the type of the element and ident is the identifier of the element. We call this set of couples the identifier set. Second, a plain text containing the union of the plain-text documentations found in the WSDL file, called the global documentation. This pre-processing operation is summarized in Figure 3.
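A minimal sketch of this pre-processing step, using Python's standard `xml.etree.ElementTree` module and assuming WSDL 1.1 files, could look as follows. The function name `preprocess_wsdl` is hypothetical, and treating XML Schema `complexType` and `simpleType` declarations as the "Type" elements is an assumption of this example.

```python
import xml.etree.ElementTree as ET

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"   # WSDL 1.1 namespace
XSD_NS = "http://www.w3.org/2001/XMLSchema"    # XML Schema namespace

# Maps qualified XML tags to the element types of the identifier set.
ELEMENT_TYPES = {
    f"{{{WSDL_NS}}}service": "Service",
    f"{{{WSDL_NS}}}port": "Port",
    f"{{{WSDL_NS}}}portType": "PortType",
    f"{{{WSDL_NS}}}binding": "Binding",
    f"{{{WSDL_NS}}}message": "Message",
    f"{{{XSD_NS}}}complexType": "Type",   # assumption: schema types count as "Type"
    f"{{{XSD_NS}}}simpleType": "Type",
}
DOCUMENTATION_TAG = f"{{{WSDL_NS}}}documentation"


def preprocess_wsdl(wsdl_text: str):
    """Return (identifier_set, global_documentation) for one WSDL document."""
    root = ET.fromstring(wsdl_text)
    identifier_set = set()
    documentation_parts = []
    for element in root.iter():  # document order
        kind = ELEMENT_TYPES.get(element.tag)
        name = element.get("name")
        if kind and name:
            identifier_set.add((kind, name))
        if element.tag == DOCUMENTATION_TAG:
            text = "".join(element.itertext()).strip()
            if text:
                documentation_parts.append(text)
    return identifier_set, " ".join(documentation_parts)
```

The global documentation is built here by joining the documentation texts with a single space, in document order.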

WEBIST 2010 - 6th International Conference on Web Information Systems and Technologies


Figure 4: Processing of the identifiers.

3.3 Selection of the Candidate Tags

As seen in the previous section, we have now two

different sources of information for a given WSDL:

an identifier set and a global documentation. Unfortunately, those data are not yet usable to compute meaningful metrics, first because the identifiers are names of the form MyWeatherService, and are therefore very unlikely to be tags, and second because these data contain many obviously useless tags (like the pronoun you). Therefore, we will now apply several text-mining techniques on the identifier set and the global documentation.

Figure 4 shows how we process the identifier set.

Here is the complete description of all the performed

steps:

1. Identifier Type Filtering: during this step, the couples (type, ident) where type ∈ {PortType, Message, Binding} are discarded. We applied this filtering because very often, the identifiers of the elements in those categories are duplicated from the identifiers in the other categories.

2. Tokenization: during this step, each couple (type, ident) is replaced by a couple (type, tokens), where tokens is the set of words appearing in ident. For instance, (Service, MyWeatherService) would be replaced by (Service, [My, Weather, Service]). To split ident into several tokens, we created a tokenizer that uses common clues in software engineering to split the words. Those clues are, for instance, a case change or the presence of a non-alphanumeric character.

3. POS Tagging: during this step, each couple (type, tokens) previously computed is replaced by a couple (type, ptokens), where ptokens is a set of couples (token_i, pos_i) derived from tokens, token_i is a token from tokens and pos_i is the part of speech corresponding to this token. We used the TreeTagger tool (Schmid, 1994) to compute those parts of speech. Example: (Service, [My, Weather, Service]) is replaced by (Service, [(My, PP), (Weather, NN), (Service, NN)]). NN means noun and PP means pronoun.

4. Stopwords Removal: during this step, we process each couple (type, ptokens) and remove from ptokens the elements (token_i, pos_i) where token_i is a stopword for type. A stopword is a word too frequent to be meaningful. We manually established a stopword list for each identifier type. Example: (Service, [(My, PP), (Weather, NN), (Service, NN)]) is replaced by (Service, [(My, PP), (Weather, NN)]) because Service is a stopword for service identifiers.

5. POS Filtering: during this step, we process each couple (type, ptokens) and remove from ptokens the elements (token_i, pos_i) where pos_i ∉ {Noun, Adjective, Verb, Symbol}. Example: (Service, [(My, PP), (Weather, NN)]) is replaced by (Service, [(Weather, NN)]) because pronouns are filtered.

Figure 5: Processing of the global documentation.
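The identifier-processing steps above can be sketched as a small pipeline. This is an illustration under stated assumptions: the regular expression used for tokenization, the stopword lists in `STOPWORDS` and the tiny lexicon in `TOY_LEXICON` are all hypothetical. In particular, `toy_pos_tag` is only a stand-in for a real part-of-speech tagger such as TreeTagger and defaults to NN for unknown words.

```python
import re

DISCARDED_TYPES = {"PortType", "Message", "Binding"}               # step 1
STOPWORDS = {"Service": {"service"}, "Port": {"port", "soap"},     # step 4,
             "Type": {"type"}}                                     # hypothetical lists
KEPT_POS = {"NN", "NNS", "NP", "NPS", "JJ", "JJS",                 # step 5
            "VV", "VVG", "VVD", "SYM"}
TOY_LEXICON = {"my": "PP", "your": "PP", "get": "VV"}              # hypothetical


def tokenize(ident: str) -> list[str]:
    """Step 2: split on case changes and non-alphanumeric characters."""
    return re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|[0-9]+", ident)


def toy_pos_tag(token: str) -> str:
    """Step 3 stand-in: look the token up in a toy lexicon, default to noun."""
    return TOY_LEXICON.get(token.lower(), "NN")


def process_identifiers(identifier_set):
    """Apply steps 1 to 5 to an iterable of (type, ident) couples."""
    result = []
    for kind, ident in identifier_set:
        if kind in DISCARDED_TYPES:                      # 1. type filtering
            continue
        ptokens = [(t, toy_pos_tag(t)) for t in tokenize(ident)]   # 2 and 3
        stop = STOPWORDS.get(kind, set())
        ptokens = [(t, p) for t, p in ptokens
                   if t.lower() not in stop]             # 4. stopwords removal
        ptokens = [(t, p) for t, p in ptokens
                   if p in KEPT_POS]                     # 5. POS filtering
        result.append((kind, ptokens))
    return result


if __name__ == "__main__":
    print(process_identifiers([("Service", "MyWeatherService"),
                               ("Binding", "WeatherBinding")]))
```

On the running example, only the token Weather survives for the service identifier MyWeatherService, and the binding identifier is discarded.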

Figure 5 shows how we process the global docu-

mentation. Here is the complete description of all the

performed steps:

1. HTML Tags Removal: the HTML tags (words beginning with < and ending with >) are removed from the global documentation.

2. POS Tagging: similar to the POS tagging step

applied to the identifier set.

3. POS Filtering: similar to the POS filtering step

applied to the identifier set.

The union of the remaining words in the identifier set and in the global documentation forms our candidate tags. When defining those processing operations, we took great care that no correct candidate tag (i.e. a candidate tag that is a real tag) of the training corpus was discarded. The next section describes how

we adapted the Kea features to these candidate tags.

3.4 Computation of the Features

We now have well-separated words, so we can compute the tfidf feature. But the words appearing in the documentation and in the identifier names are not the same. We decided (mostly because it turns out to perform better) to separate the tfidf value into tfidf_ident and tfidf_doc, which are respectively the tfidf value of a word over the identifier set and over the global documentation. Like in Kea, we used the method in (Fayyad and Irani, 1993) to discretize those two real-valued features.

Table 1: Excerpt of the ARFF file enriched with the words.

Word  TFIDF_id  TFIDF_doc  IN_SERVICE  ...  IN_DOC  POS  IS_TAG

Weather [0,0.01] ]0.01,0.04] × NN ×

Location ]0.03,0.1] ]0.04,0.15] × JJ

Code ]0.03,0.1] ]0.01,0.04] × VV

The distance feature still has no meaning over the

identifier set, because the elements of a WSDL de-

scription are given in an arbitrary order. Therefore

we decided to adapt it by defining five different fea-

tures: in_service, in_port, in_type, in_operation and

in_documentation. Those features take their values in the {true, false} set. A true value indicates that the word has been seen in an element identifier of the corresponding type. For instance, in_service(weather) = true means that the word weather has been seen in a service identifier, and in_documentation(weather) = true means that the word weather has been seen in the global documentation.

In addition to these features, we compute another feature called pos, not used in Kea, which significantly improves the results. pos is simply the part of speech that has been assigned to the word during the POS tagging step. If several parts of speech have been assigned to the same word, we choose the one that has been assigned in the majority of cases. The different values of pos are: NN (noun), NNS (plural noun), NP (proper noun), NPS (plural proper noun), JJ (adjective), JJS (superlative adjective), VV (verb), VVG (gerund or present participle verb), VVD (past tense verb), SYM (symbol).
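The boolean features and the pos feature described in this section can be sketched as follows. The function names and the input data structures (a dictionary from element type to the set of lowercase tokens seen in identifiers of that type, and a set of lowercase documentation words) are assumptions made for this example; the "Operation" key is likewise hypothetical.

```python
from collections import Counter

# Feature name -> element type whose identifiers are inspected.
ELEMENT_FEATURES = {
    "in_service": "Service",
    "in_port": "Port",
    "in_type": "Type",
    "in_operation": "Operation",
}


def boolean_features(word, tokens_by_type, documentation_words):
    """Return the five {true, false} features for one candidate word."""
    w = word.lower()
    features = {name: w in tokens_by_type.get(kind, set())
                for name, kind in ELEMENT_FEATURES.items()}
    features["in_documentation"] = w in documentation_words
    return features


def majority_pos(observed_pos):
    """Part of speech assigned most often; ties go to the first one observed."""
    return Counter(observed_pos).most_common(1)[0][0]


if __name__ == "__main__":
    print(boolean_features("Weather",
                           {"Service": {"weather"}, "Port": {"weather"}},
                           {"forecast", "weather"}))
    print(majority_pos(["NN", "VV", "NN"]))
```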

3.5 Training and Using the Classifier

We applied the previously described technique to all

the WSDL files of T. In addition to the previously described features, we compute the is_tag feature over the candidates. This feature takes its values in the {true, false} set. is_tag(word) = true means that word has been assigned as a tag by Seekda users for its service description. We have serialized all those

results in an ARFF file compatible with the Weka tool

(Witten and Frank, 1999). Weka is a machine learn-

ing tool that defines a standard format for describing

a training corpus and provides the implementation of

many classifiers. One can use Weka in order to train

a classifier or compare the performances of different

classifiers regarding a given classification problem.

Table 1 shows an excerpt of the ARFF file we pro-

duce, enriched with the words for the sake of clarity.

With this ARFF file, we used Weka to train a

naive Bayes classifier, shown as optimal for our kind

of classification task (Domingos and Pazzani, 1997).

This trained classifier can now be used in the tag ex-

traction phase. As previously said, the beginning of

this phase is the same as the one of the training phase.

This means that the WSDL file goes through the previously described operations (pre-processing, candidate selection and feature computation). Only this time, the value of the is_tag feature is not available; it is predicted by the previously trained classifier.
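To make the training and extraction phases concrete, here is a small self-contained naive Bayes classifier over nominal features. It is only a stand-in written for illustration: our experiments rely on Weka, not on this code, and the class name `CategoricalNaiveBayes`, the add-one smoothing and the toy training rows are assumptions of the example.

```python
import math
from collections import Counter, defaultdict


class CategoricalNaiveBayes:
    """Naive Bayes for nominal features, with add-one (Laplace) smoothing."""

    def fit(self, rows, labels):
        self.class_counts = Counter(labels)
        self.value_counts = defaultdict(Counter)   # (label, feature) -> value counts
        self.feature_values = defaultdict(set)     # feature -> values seen in training
        for row, label in zip(rows, labels):
            for feature, value in row.items():
                self.value_counts[(label, feature)][value] += 1
                self.feature_values[feature].add(value)
        return self

    def predict(self, row):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / total)        # log prior of the class
            for feature, value in row.items():
                seen = self.value_counts[(label, feature)][value]
                n_values = len(self.feature_values[feature])
                score += math.log((seen + 1) / (count + n_values))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    # Toy candidates: features computed as in Section 3.4, label is is_tag.
    rows = [
        {"in_service": True, "pos": "NN"},
        {"in_service": True, "pos": "NN"},
        {"in_service": False, "pos": "VV"},
        {"in_service": False, "pos": "JJ"},
        {"in_service": False, "pos": "NN"},
    ]
    labels = [True, True, False, False, False]
    model = CategoricalNaiveBayes().fit(rows, labels)
    print(model.predict({"in_service": True, "pos": "NN"}))
```

In the tag extraction phase, the words for which `predict` returns true would be the extracted tags.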

4 VALIDATION OF THE PROPOSED WORK

This section provides a validation of our technique on

real world data from Seekda to assess the precision

and recall of our trained classifier.

Methodology. We conducted two different experi-

ments. In the first one, the trained classifier is applied

on the training corpus T and its output is compared

with the tags given by Seekda users (obtained as de-

scribed in Section 3.1). After having conducted the

first experiment, a manual assessment of the tags pro-

duced by our approach revealed that many tags not

assigned by the user seemed highly relevant. This

phenomenon has also been observed in several human

evaluations of Kea (Jones and Paynter, 2001; Jones

and Paynter, 2002), that inspired our approach. It oc-

curs because tags assigned by the users are not the

absolute truth. Indeed, it is very likely that users have

forgotten many relevant tags, even if they were in the

service description. To show that the real efficiency of our approach is better than the one computed in the first experiment, we performed a second experiment, in which we manually augmented the user tags of our corpus with additional tags we found relevant and accurate by analyzing the WSDL descriptions of the services.

Metrics. In the evaluation, we used precision and recall. First, for each web service $s \in T$, where $T$ is our training corpus, we consider: $A$ the set of tags produced by the trained classifier, $M$ the set of tags given by Seekda users and $W$ the set of words appearing in the WSDL. Let $I = A \cap M$ be the set of tags assigned by both our classifier and Seekda users. Let $E = M \cap W$ be the set of tags assigned by Seekda users that are present in the WSDL file. Then we define $\mathit{precision}(s) = \frac{|I|}{|A|}$ and $\mathit{recall}(s) = \frac{|I|}{|E|}$, which are aggregated in $\mathit{precision}(T) = \frac{\sum_{s \in T} \mathit{precision}(s)}{|T|}$ and $\mathit{recall}(T) = \frac{\sum_{s \in T} \mathit{recall}(s)}{|T|}$. The recall is therefore computed over the tags assigned by Seekda users that are present in the descriptions of the concerned services.
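The metrics above translate directly into set operations. In the following sketch, the function names are hypothetical, and returning 0 when a denominator is empty is a convention chosen for the example, since the definitions above do not cover that case.

```python
def precision_recall(produced, user_tags, wsdl_words):
    """precision(s) = |I| / |A| and recall(s) = |I| / |E| for one service.

    produced   -- A, tags produced by the classifier
    user_tags  -- M, tags given by the users
    wsdl_words -- W, words appearing in the WSDL
    """
    intersection = produced & user_tags          # I = A ∩ M
    expected = user_tags & wsdl_words            # E = M ∩ W
    precision = len(intersection) / len(produced) if produced else 0.0
    recall = len(intersection) / len(expected) if expected else 0.0
    return precision, recall


def corpus_scores(services):
    """Average the per-service precision and recall over the corpus T."""
    scores = [precision_recall(a, m, w) for a, m, w in services]
    n = len(scores)
    return (sum(p for p, _ in scores) / n, sum(r for _, r in scores) / n)


if __name__ == "__main__":
    corpus = [
        ({"weather", "forecast", "city"},
         {"weather", "forecast", "meteo"},
         {"weather", "forecast", "city", "get"}),
        ({"stock"},
         {"finance", "stock", "quote"},
         {"stock", "quote", "get"}),
    ]
    print(corpus_scores(corpus))
```

Note that a user tag absent from the WSDL (meteo or finance in the toy data) lowers neither score, which matches the definition of E.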

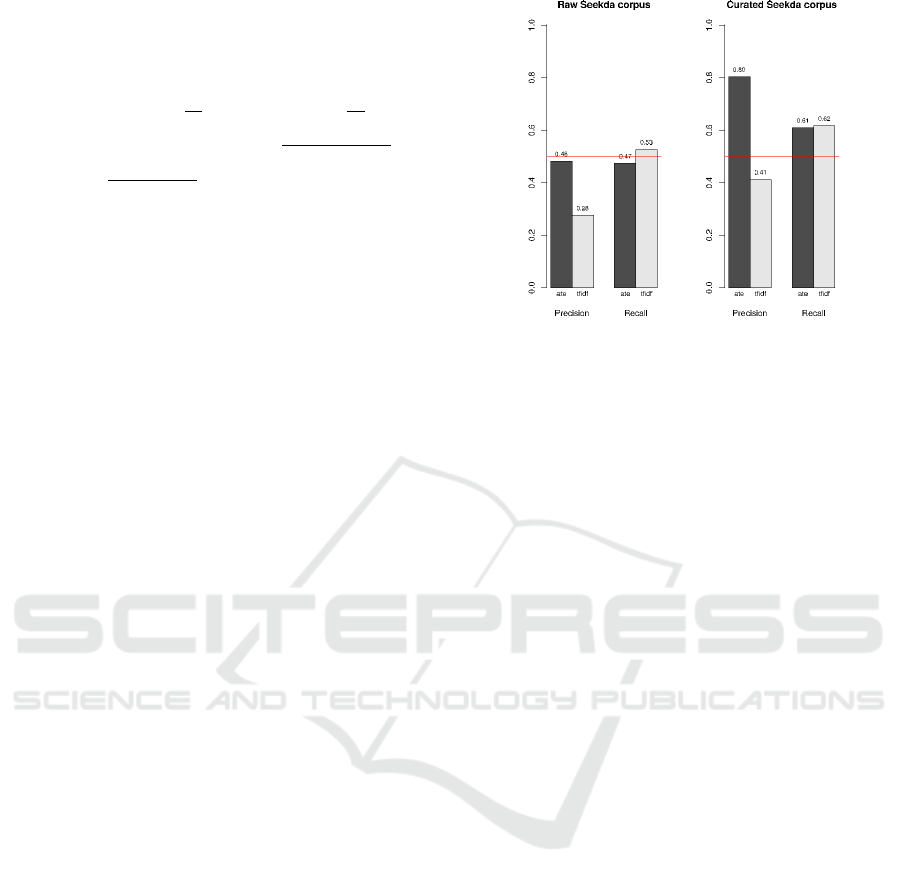

Evaluation. Figure 6 (left) gives results for the first

experiment where the output of the classifier is com-

pared with the tags of Seekda users, while in Figure

6 (right), enriched tags of Seekda users are used in

the comparison (curated corpus). In this figure, our

approach is called ate (Automatic Tag Extraction). To

clearly show the concrete benefits of our approach, we

decided to include in these experiments a straightfor-

ward (but fairly efficient) technique. This technique,

called tfidf in Figure 6, consists in selecting, after

the application of our text-mining techniques, the five

candidate tags with the highest tfidf weight.
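The tfidf baseline amounts to a simple ranking. In the sketch below, the function name `top_tfidf_tags`, the alphabetical tie-breaking and the weights in the usage example are assumptions made for illustration only.

```python
def top_tfidf_tags(tfidf_by_candidate, n=5):
    """Return the n candidates with the highest tfidf weight.

    Ties are broken alphabetically (an arbitrary choice for this sketch).
    """
    ranked = sorted(tfidf_by_candidate.items(),
                    key=lambda item: (-item[1], item[0]))
    return [candidate for candidate, _ in ranked[:n]]


if __name__ == "__main__":
    weights = {"weather": 0.12, "forecast": 0.09, "city": 0.05,
               "get": 0.01, "zip": 0.05, "code": 0.02, "request": 0.015}
    print(top_tfidf_tags(weights))
```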

In Figure 6 (left), the precision of ate is 0.48.

It is a significant improvement compared to the tfidf

method that achieves only a precision of 0.28. More-

over, there is no significant difference between the re-

call achieved by the two methods. To show that the

precision and recall achieved by ate are not biased by

the fact that we used the training corpus as a testing

corpus, we performed a 10-fold cross-validation. In a 10-fold cross-validation, our training corpus is divided into 10 parts. One is used to train a classifier,

and the 9 other parts are used to test this classifier.

This operation is done for every part, and then, the

average recall and precision are computed. The re-

sults achieved by our approach using cross-validation

(precision = 0.44 and recall = 0.42) are very similar

to those obtained in the first experiment.

In Figure 6 (right), we see that the precision

achieved by ate in the second experiment is much bet-

ter. It reaches 0.8, while the precision achieved by the

tfidf method increases to 0.41. The recall achieved

by the two methods remains similar. The precision

achieved by our method in this experiment is good.

Only 20% of the tags discovered by ate are not cor-

rect. Moreover, the efficiency of ate is significantly

higher than tfidf.

Figure 6: Results on the original and manually curated Seekda corpus.

Threats to Validity. Our experiments use real-world services, obtained from the Seekda service search engine. Our training corpus contains services extracted randomly with the constraint that they contain at least 5 user tags. We assumed that Seekda users assign correct tags. Indeed, our method admits some

noise but would not work if the majority of the user

tags were poorly assigned. In the second experiment,

we manually added tags we found relevant by exam-

ining the complete description and documentation of

the concerned services. Unfortunately, since we are

not “real” users of those services, some of the tags we

added might not be relevant.

5 RELATED WORK

In this section, we will present the related work ac-

cording to two fields of research: keyphrase extrac-

tion and web service discovery.

According to (Turney, 2003), there are two gen-

eral approaches that are able to supply keyphrases for

a document: keyphrase extraction and keyphrase assignment. Both rely on supervised machine learning, with training examples being documents with manually supplied keyphrases.

In the keyphrase assignment approach, a list of

predefined keyphrases is treated as a list of classes in

which the different documents are classified. Text cat-

egorization techniques are used to learn models for as-

signing a class (keyphrase) to a document. Two main

approaches of this category are (Dumais et al., 1998;

Leung and Kan, 1997).

In the keyphrase extraction approach, a list of candidate keyphrases is extracted from a document

and classified into the classes keyphrase and not

keyphrase. There are two main approaches that fall

in this category: one using a genetic algorithm (Tur-

ney, 2000) and one using a naive Bayes classifier (Kea

(Frank et al., 1999)).

Web service discovery is a wide research area

with many underlying issues and challenges. A quick overview of some of the works can be acquired from (Brockmans et al., 2008; Lausen and Steinmetz, 2008). Here, we describe a selection of works, classified by the techniques they adapt.

Many approaches adapt techniques from the machine learning field in order to discover and group similar services. In (Crasso et al., 2008a; Heß and Kushmerick, 2003), service classifiers are defined from sets of previously categorized services. The resulting classifiers are then used to deduce the relevant categories for new services. When there are no predefined categories, unsupervised clustering is used. In (Ma et al., 2008), the CPLSA approach is defined, which reduces a service set and then clusters it into semantically related groups.

In (Lu and Yu, 2007), a web service broker is de-

signed relying on approximate signature matching us-

ing XML schema matching. It can recommend ser-

vices to programmers in order to compose them. In

(Günay and Yolum, 2007), a service request and a

service are represented as two finite state machines

then they are compared using various heuristics to

find structural similarities between them. In (Dong

et al., 2004), the Woogle web service search engine

is presented, which takes the needed operation as in-

put and searches for all the services that include an

operation similar to the requested one. In (Bouillet

et al., 2008), tags coming from folksonomies are used

to discover and compose services.

The vector space model is used for service re-

trieval in several existing works as in (Platzer and

Dustdar, 2005; Wang and Stroulia, 2003; Crasso

et al., 2008b). Terms are extracted from every WSDL

file and the vectors are built for each service. A

query vector is also built, and similarity is calculated

between the service vectors and the query vector.

This model is sometimes enhanced by using WordNet and structure-matching algorithms to improve the similarity scores, as in (Wang and Stroulia, 2003), or by partitioning the space into subspaces to reduce the search space, as in (Crasso et al., 2008b).

A collection of works (Aversano et al., 2006; Peng et al., 2005; Azmeh et al., 2008) adapts the formal concept analysis method to retrieve web services more efficiently. Contexts obtained from service descriptions are used to classify the services as a concept

lattice. This lattice helps in understanding the differ-

ent relationships between the services, and in discov-

ering service substitutes.

6 CONCLUSIONS AND FUTURE WORK

With the emergence of SOA, it becomes important for

developers using this paradigm to retrieve Web ser-

vices matching their requirements in an efficient way.

By using Web service search engines, these develop-

ers can either search by keywords or navigate by tags.

In the second case, it is necessary that the tags accurately characterize the services. Our work contributes in this direction and introduces a novel approach that extracts tags from Web service descriptions. This approach combines and adapts text mining as well as machine learning techniques. It has been evaluated on a corpus of user-tagged real-world Web services. The obtained results demonstrate the efficiency of our automatic tag extraction process. The proposed work is useful for many purposes. First, the automatically extracted tags can assist users who are tagging a given service, or “bootstrap” tags on untagged services. They are also useful to get a quick understanding of a service without reading the whole description. They can also help in building domain ontologies, as in (Sabou et al., 2005; Guo et al., 2007), and in tasks such as service clustering (for instance by measuring the similarity of the tags of two given services) or classification (for instance by defining association rules between tags and categories).

With our approach, only tags appearing in the WSDL files can be found. This way, we miss some

interesting tags (such as associated words, synonyms

or more general words). Nevertheless, the identified

tags represent a good support to find other relevant

tags by using ontological resources (like WordNet), or

machine learning techniques. This is one of the per-

spectives of our work. We also plan to work on the

extraction of tags composed of more than one word.

Indeed, one-word tags are sometimes insufficient to

describe concepts like exchange rate or Web 2.0.

REFERENCES

Aversano, L., Bruno, M., Canfora, G., Penta, M. D., and

Distante, D. (2006). Using concept lattices to sup-

port service selection. Int. Journal of Web Services

Research, 3(4):32–51.

Azmeh, Z., Huchard, M., Tibermacine, C., Urtado, C., and Vauttier, S. (2008). WSPAB: A tool for automatic classification & selection of web services using formal concept analysis. In Proc. of ECOWS 2008, pages 31–40, Dublin, Ireland. IEEE Computer Society.

Bouillet, E., Feblowitz, M., Feng, H., Liu, Z., Ranganathan,

A., and Riabov, A. (2008). A folksonomy-based

model of web services for discovery and automatic

composition. In IEEE Int. Conference on Services

Computing (SCC), pages 389–396.

Brockmans, S., Erdmann, M., and Schoch, W. (2008).

Service-finder deliverable d4.1. research report about

current state of the art of matchmaking algorithms.

Technical report, Ontoprise, Germany.

Crasso, M., Zunino, A., and Campo, M. (2008a). AWSC: An approach to web service classification based on machine learning techniques. Revista Iberoamericana de Inteligencia Artificial, 12(37):25–36.

Crasso, M., Zunino, A., and Campo, M. (2008b). Query by

example for web services. In SAC ’08: Proc. of the

2008 ACM symposium on Applied computing, pages

2376–2380.

Domingos, P. and Pazzani, M. J. (1997). On the optimality of the simple Bayesian classifier under zero-one loss. Machine Learning, 29(2-3):103–130.

Dong, X., Halevy, A., Madhavan, J., Nemes, E., and Zhang,

J. (2004). Similarity search for web services. In VLDB

’04: Proc. of the 30th int. conference on Very large

data bases, pages 372–383.

Dumais, S. T., Platt, J. C., Heckerman, D., and Sahami, M. (1998). Inductive learning algorithms and representations for text categorization. In CIKM, pages 148–155. ACM.

Fayyad, U. M. and Irani, K. B. (1993). Multi-interval dis-

cretization of continuous-valued attributes for classifi-

cation learning. In IJCAI, pages 1022–1029.

Frank, E., Paynter, G. W., Witten, I. H., Gutwin, C.,

and Nevill-Manning, C. G. (1999). Domain-specific

keyphrase extraction. In IJCAI, pages 668–673.

Günay, A. and Yolum, P. (2007). Structural and semantic

similarity metrics for web service matchmaking. In

EC-Web, pages 129–138.

Guo, H., Ivan, A.-A., Akkiraju, R., and Goodwin, R. (2007). Learning ontologies to improve the quality of automatic web service matching. In WWW2007, pages 1241–1242. ACM.

Heß, A. and Kushmerick, N. (2003). Learning to attach

semantic metadata to web services. In Int. Semantic

Web Conference, pages 258–273.

Jones, S. and Paynter, G. W. (2001). Human evaluation of Kea, an automatic keyphrasing system. In JCDL, pages 148–156. ACM.

Jones, S. and Paynter, G. W. (2002). Automatic extraction

of document keyphrases for use in digital libraries:

Evaluation and applications. JASIST, 53(8):653–677.

Lausen, H. and Steinmetz, N. (2008). Survey of current

means to discover web services. Technical report, Se-

mantic Technology Institute (STI).

Leung, C.-H. and Kan, W.-K. (1997). A statistical learn-

ing approach to automatic indexing of controlled in-

dex terms. JASIS, 48(1):55–66.

Lu, J. and Yu, Y. (2007). Web service search: Who, when,

what, and how. In WISE Workshops, pages 284–295.

Ma, J., Zhang, Y., and He, J. (2008). Efficiently finding

web services using a clustering semantic approach. In

CSSSIA ’08: Proc. of the 2008 Int. workshop on Con-

text enabled source and service selection, integration

and adaptation, pages 1–8.

Peng, D., Huang, S., Wang, X., and Zhou, A. (2005). Management and retrieval of web services based on formal concept analysis. In Proc. of the Fifth Int. Conference on Computer and Information Technology (CIT’05), pages 269–275.

Platzer, C. and Dustdar, S. (2005). A vector space search engine for web services. In Third IEEE European Conference on Web Services (ECOWS 2005), pages 62–71.

Sabou, M., Wroe, C., Goble, C. A., and Mishne, G. (2005). Learning domain ontologies for web service descriptions: an experiment in bioinformatics. In WWW2005, pages 190–198. ACM.

Schmid, H. (1994). Probabilistic part-of-speech tagging using decision trees. In Proc. of NeMLaP’94, volume 12, pages 44–49.

Turney, P. D. (2000). Learning algorithms for keyphrase

extraction. Inf. Retr., 2(4):303–336.

Turney, P. D. (2003). Coherent keyphrase extraction via

web mining. In IJCAI, pages 434–442.

Wang, Y. and Stroulia, E. (2003). Semantic structure matching for assessing web service similarity. In 1st Int. Conference on Service Oriented Computing (ICSOC03), pages 194–207. Springer-Verlag.

Witten, I. H. and Frank, E. (1999). Data Mining: Practi-

cal Machine Learning Tools and Techniques with Java

Implementations.
