RESOLVING DATA-ASSOCIATION UNCERTAINTY

In Multi-object Tracking through Qualitative Modules

Saira Saleem Pathan, Ayoub Al-Hamadi, Gerald Krell and Bernd Michaelis

Institute for Electronics, Signal Processing and Communications (IESK)

Otto-von-Guericke-University Magdeburg, Germany

Keywords:

Multi-object tracking, Data Association, Logical Reasoning, Motion Analysis.

Abstract:

In real-time tracking, a crucial challenge is to build associations among objects efficiently. However, real-time interferences (e.g. occlusion) introduce errors in data association. In this paper, the uncertainties in data association that arise when discrete information is incomplete during occlusion are handled through qualitative reasoning modules. The formulation of the qualitative modules is based on human tracking abilities (i.e. common sense), which are integrated with a data association technique. Each detected object is described as a node in space with a unique identity and a status tag, whereas association weights are computed using CWHI and the Bhattacharyya coefficient. These weights are input to the qualitative modules, which infer the appropriate status of each object while satisfying the fundamental constraints of object continuity during tracking. The results are linked with a Kalman filter to estimate the trajectories of the objects. The proposed approach shows promising results when tested on a set of videos representing various challenges.

1 INTRODUCTION

In practice, tracking is a difficult problem because real-time factors influence it directly and indirectly, producing ambiguities when objects lose their contextual information. Broadly, two kinds of occlusion can be observed in real scenarios: 1) object-to-object and 2) object-to-scene occlusion. We address the latter type of occlusion.

In this paper, the uncertainties due to incomplete

data which produce plausible association are han-

dled using qualitative modules during entire tracking.

Technically, the ambiguities in data association and

object’s identity management are addressed by pro-

viding the explicit support through qualitative reason-

ing and tracking with linear Kalman filter. The infer-

ence takes place by combining both sources of infor-

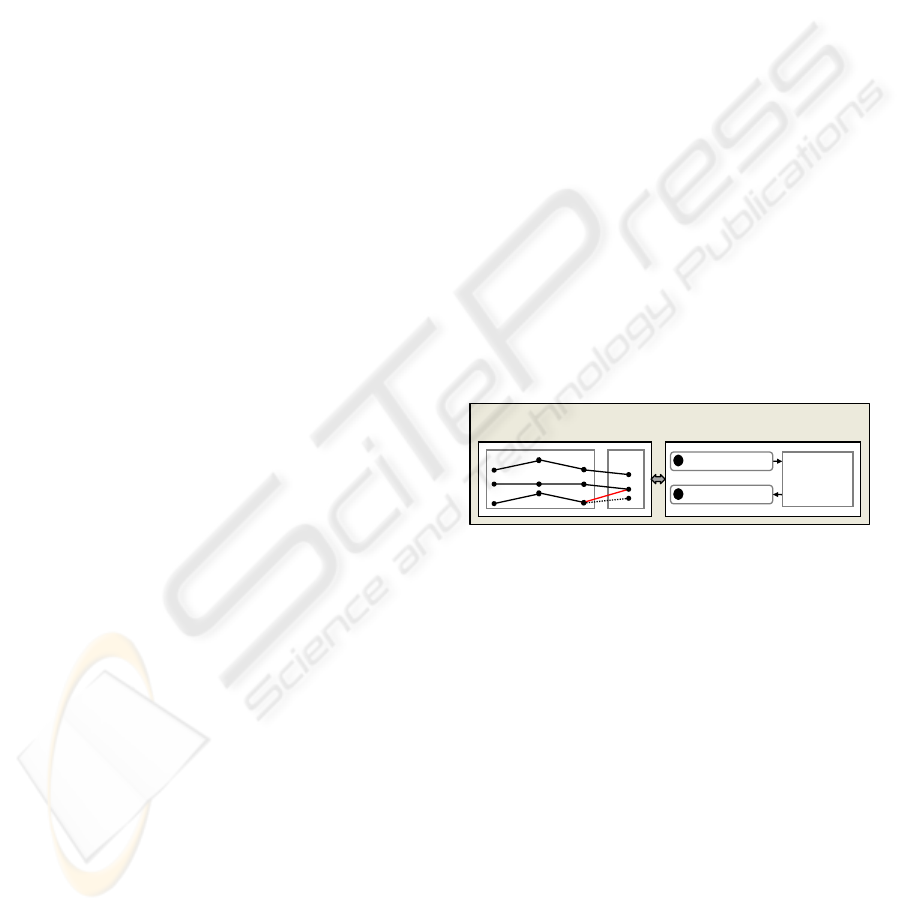

mation during tracking as shown in Figure. 1. Our

goal is to reliably track objects under severe occlu-

sion without any scene restriction and prior training.

The paper is organized as follows: section 2 entails

the reviews of the relevant literature; the proposed ap-

proach is described in section 3. Experimental results

are presented in section 4. Finally, the conclusion and

future directions are sketched in section 5.

[Figure 1 diagram: data association over the time-window from t − N to t + 1, linked to the qualitative reasoning module; node labels {id, w_{t−N,t+1}} and {id?, w_{t−N,t+1}}]

Figure 1: Nodes represent the detected objects and the connecting lines indicate the association among the objects at time t, whereas the red line shows a wrong association. The data association module is linked to the qualitative module, which generates the respective motion status-tags.

2 RELATED WORK

Tracking has been studied extensively; a detailed review of visual tracking is given in (Blake, 2006). Handling occlusion is challenging when tracking in real scenarios. One approach is global data association, such as Probabilistic Data Association (Bar-Shalom and Fortmann, 1987), which finds the correspondence of a target with all possible global explanations. Alternatively, (Khan, 2005) suggests taking a time-window N to find the correspondence of the target object in i + N space.

Most research in vision is underpinned by statistical techniques that build associations among moving objects. In contrast, qualitative reasoning allows explicit control over the consistent generation of possibilities (Bennett, 2008). Sherrah and Gong (Sherrah and Gong, 2000) proposed a view-based approach with a Bayesian framework and explicit probabilistic reasoning to handle the plausible interpretation of incomplete data due to occlusion. A similar technique is suggested by (Bennett et al., 2008), in which a logical reasoning engine interprets the spatio-temporal continuity of objects during tracking to overcome errors caused by incomplete data during occlusion. However, in their work the ambiguity is handled through long-term reasoning, unlike in our proposed work; we focus instead on exploiting logics with likelihood, such as (Halpern, 1990). More recently, Frintrop et al. (Frintrop et al., 2009) exploited a cognitive approach to detect objects optimally; an observation model is built on suitable features and is then combined with the Condensation algorithm. Thus, qualitative reasoning provides a powerful mechanism to handle inconsistent situations and can complement the performance of statistical techniques.

Saleem Pathan S., Al-Hamadi A., Krell G. and Michaelis B. (2010). RESOLVING DATA-ASSOCIATION UNCERTAINTY - In Multi-object Tracking through Qualitative Modules. In Proceedings of the International Conference on Computer Vision Theory and Applications, pages 461-466. DOI: 10.5220/0002834604610466. Copyright © SciTePress

3 PROPOSED APPROACH

3.1 The Motivation

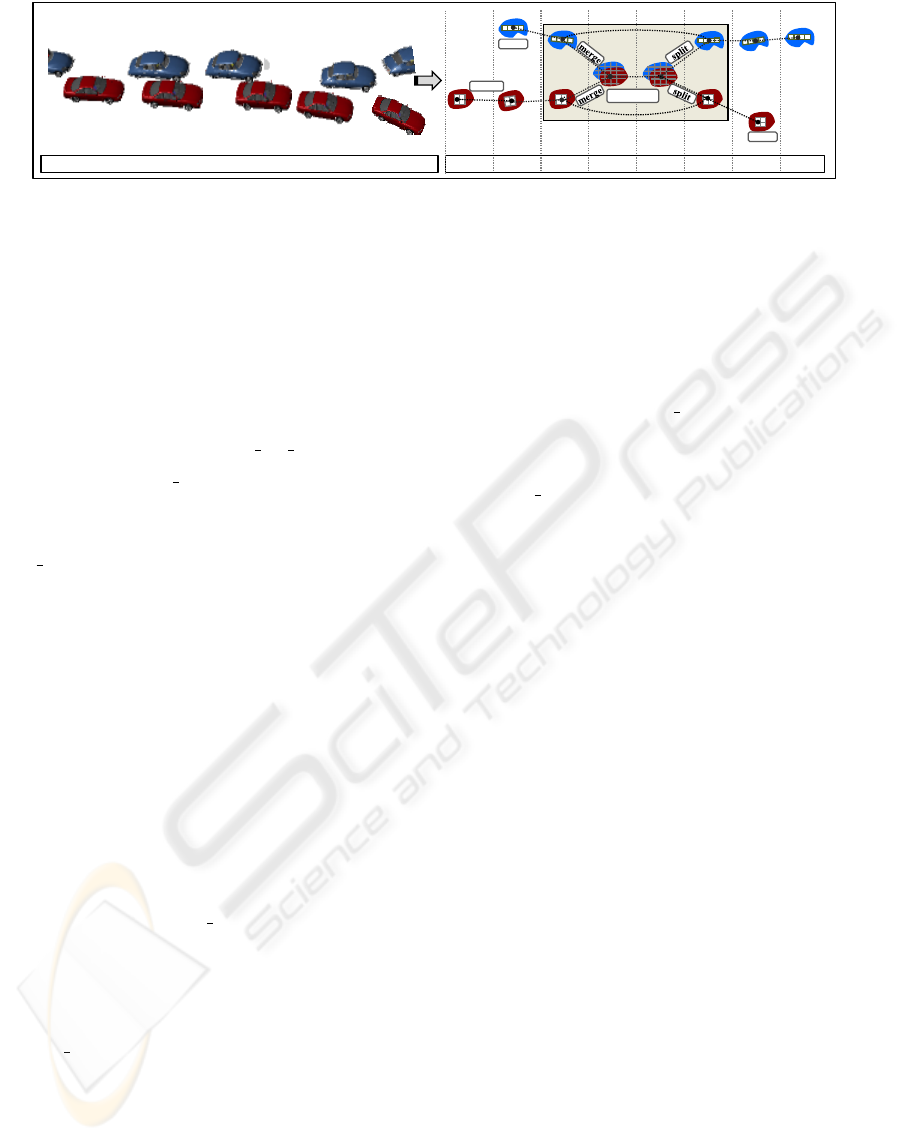

We investigate the behaviors and properties of moving objects in the world domain and how the human cognitive system processes that information, as shown in Figure 2. The qualitative modules interpret real-world tracking scenarios and assign status-tags to each detected object. It is assumed that an object’s motion is a continuous function of time until the object leaves the scene. In the following, the formulation of each qualitative module is presented.

Let a set of n objects be detected at each time frame t, so that:

$$O^{t}_{id} = [O_1, O_2, \ldots, O_{id}, \ldots, O_n]$$

Each individual object is indicated by a unique identity id and represented by the structure (id, f, tag, w), where

id : is the unique identity, which remains the same during the entire course of tracking.

f : is the frame number.

tag : represents the motion status-tags, which are inferred by the qualitative modules:

$$\mathrm{tag} = \{\mathrm{norm}_{id}, \mathrm{new}_{id}, \mathrm{exit}_{id}, \mathrm{occ}_{id}, \mathrm{over}_{id}, \mathrm{reap}_{id}\}$$

where the elements denote the motion status-tags normal, new, exit, occludee, overlaper and reappear, respectively, at time t (explained in Section 3.3).

w : indicates the association-weight, which is explained in Section 3.2.
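As a minimal sketch, the (id, f, tag, w) structure could be held in a small record type. The class and field names below (`Tag`, `TrackedObject`) are illustrative assumptions and do not come from the paper; only the four fields and the six tag names follow the definitions above.

```python
from dataclasses import dataclass
from enum import Enum


class Tag(Enum):
    """Motion status-tags inferred by the qualitative modules."""
    NORMAL = "norm"
    NEW = "new"
    EXIT = "exit"
    OCCLUDEE = "occ"
    OVERLAPER = "over"
    REAPPEAR = "reap"


@dataclass
class TrackedObject:
    """One detected object, mirroring the (id, f, tag, w) structure."""
    id: int     # unique identity, unchanged during the whole track
    f: int      # frame number
    tag: Tag    # motion status-tag
    w: float    # association-weight (Section 3.2)


# Hypothetical example: object 3 seen in frame 17 under normal motion.
obj = TrackedObject(id=3, f=17, tag=Tag.NORMAL, w=1.8)
```

Keeping the tag as an enumeration rather than a free string makes it harder to assign a status outside the six defined ones.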

3.2 Association-weight Computation

The association-weights among objects are measured by integrating the Correlation Weighted Histogram Intersection (CWHI) (Pathan et al., 2009) and the Bhattacharyya Coefficient (BC); a general description of the latter is given in (Kailath, 1967). The association likelihood is computed iteratively in a time-window N. Search space criteria are adopted that enable the efficient enumeration of multiple possibilities, thus overcoming the search space problem while computing the likelihood reliably. The formulation is given as:

$$w = C_{bc,cwhi} = BC + CWHI \qquad (1)$$
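To illustrate how Eq. (1) combines two histogram similarities, the sketch below computes the Bhattacharyya coefficient of two normalised histograms and adds a second term. The exact definition of CWHI is given in (Pathan et al., 2009) and is not reproduced here, so a plain histogram intersection is used as a stand-in; that substitution and all function names are assumptions made for illustration only.

```python
import math


def _normalise(hist):
    """Scale a histogram so that its bins sum to 1."""
    total = float(sum(hist))
    if total <= 0.0:
        raise ValueError("histogram must have positive mass")
    return [h / total for h in hist]


def bhattacharyya_coefficient(p, q):
    """BC = sum_i sqrt(p_i * q_i) for normalised histograms p and q."""
    p, q = _normalise(p), _normalise(q)
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def histogram_intersection(p, q):
    """Plain histogram intersection; a stand-in for CWHI, not CWHI itself."""
    p, q = _normalise(p), _normalise(q)
    return sum(min(a, b) for a, b in zip(p, q))


def association_weight(p, q):
    """w = BC + (stand-in for) CWHI, following the form of Eq. (1)."""
    return bhattacharyya_coefficient(p, q) + histogram_intersection(p, q)
```

With this stand-in, both terms lie in [0, 1], so the weight ranges from 0 for histograms with no common bins to 2 for identical histograms.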

3.3 Qualitative Modules

In this section, the abstract qualitative reasoning is

presented to infer the status-tags of the moving object

during tracking.

3.3.1 Normal Tag

The normal concept is based on the fact that the object moves at an ideal pace, keeping its visual characteristics and motion consistency and satisfying the continuity constraints during the entire tracking. This module determines the normal tag of the moving object:

$$\mathrm{norm}(O^{t}_{id}) = \max\big(O^{t}_{?}, \mathrm{LIST\_OF\_OBJ}(N)\big) \wedge \mathrm{SEARCH\_SPACE}\big(O^{t}_{?}, \mathrm{LIST\_OF\_OBJ}(N)\big)$$

where max(.., ..) computes the maximum likelihood of the object detected at time t with the list of objects, N represents the time-window for data association, and the SEARCH_SPACE(.., ..) function checks the possibility that an object exists in the predicted region.

3.3.2 New Tag

The new tag and a new identity are assigned using the following inference. The association of the new object with the list of objects is determined; in addition, the object must not fall into the search space of any existing object:

$$\mathrm{new}(O^{t}_{id_{new}}) = \min\big(O^{t}_{?}, \mathrm{LIST\_OF\_OBJ}(N)\big) \wedge \neg\,\mathrm{SEARCH\_SPACE}\big(O^{t}_{?}, \mathrm{LIST\_OF\_OBJ}(N)\big)$$

where min(.., ..) indicates that the newly detected object (i.e. $O^{t}_{?}$) has the minimum likelihood with the list of objects, and SEARCH_SPACE(.., ..) checks the possibility that objects exist in the predicted region.

VISAPP 2010 - International Conference on Computer Vision Theory and Applications

[Figure 2 diagram: timelines over frames t to t+7 with nodes labeled new, normal, child/parent and exit]

Figure 2: From the world domain (left) to the proposed domain of object space (right).

3.3.3 Exit Tag

The exit status-tag of an object is determined by two functions. First, the maximum association of the object with the list of objects is computed. Second, the object must fall in the exit region. On the basis of the output of these two functions, the exit tag is assigned to the object:

$$\mathrm{exit}(O^{t}_{id}) = \max\big(O^{t}_{?}, \mathrm{LIST\_OF\_OBJ}(N)\big) \wedge \mathrm{EXIT\_ZONE}\big(O^{t}_{?}\big)$$

where max(.., ..) gives the maximum likelihood of the object (i.e. $O^{t}_{?}$) with the list of objects, and EXIT_ZONE(..) checks the presence of the object in the exit zone of the scene.
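The normal, new and exit rules of Sections 3.3.1 to 3.3.3 can be read as one decision over a single detection. The sketch below is one possible reading, not the authors' implementation: the paper does not state how a "maximum" or "minimum" likelihood is turned into a yes/no decision, so the threshold `w_min`, the dictionary inputs and the function name are all assumptions.

```python
def classify_detection(weights, in_search_space, in_exit_zone, w_min=1.0):
    """Return (tag, matched_id) for one detection at time t.

    weights:          track id -> association weight with this detection
    in_search_space:  track id -> True if the detection lies in that
                      track's predicted region
    in_exit_zone:     True if the detection lies in the scene's exit zone
    w_min:            hypothetical threshold separating a strong match
                      from a weak one (not specified in the paper)
    """
    if weights:
        best_id = max(weights, key=weights.get)   # max(.., ..)
        strong = weights[best_id] >= w_min
        inside = in_search_space.get(best_id, False)
    else:
        best_id, strong, inside = None, False, False

    if strong and in_exit_zone:                   # exit rule
        return "exit", best_id
    if strong and inside:                         # normal rule
        return "norm", best_id
    if not strong and not any(in_search_space.values()):
        return "new", None                        # new rule
    # Anything else is left to the occlusion-related modules.
    return "unresolved", None
```

For example, `classify_detection({1: 1.8, 2: 0.3}, {1: True, 2: False}, False)` matches the detection to track 1 with the normal tag, while a detection that is weakly associated with every track and lies outside every search space receives the new tag.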

3.3.4 Overlaper Tag

The participation in the overlap is computed and used to decide the status-tag of the moving object. Both objects must fall into the conflicted region, and the likelihood weights of both objects are computed with the conflicted object. The object which retains its visual characteristics during occlusion is set to overlaper and becomes the parent of the occludee. The occluded object is updated frame-by-frame using a depth-first search strategy:

$$\mathrm{over}(O^{t}_{id}) = \max\big(O^{t-1}_{id}, O^{t*}_{?}\big) \wedge \mathrm{SEARCH\_SPACE}\big(O^{t-1}_{id}, O^{t*}_{?}\big)$$

where max(.., ..) finds the maximum likelihood weight with the conflicted object (i.e. $O^{t*}_{?}$). This is a key parameter because the decision between the overlaper and occludee status-tags is made on this basis. SEARCH_SPACE(.., ..) checks the presence of objects in the conflicted region.

3.3.5 Occludee Tag

This qualitative module determines the status-tag for the occludee. The decision is taken on the basis of two assumptions. The first assumption is similar to that of the module above (i.e. Overlaper Tag). In the second, however, the participation of the occludee in the occlusion must be less than that of its overlaper. The object becomes the child of its overlaper and its visual characteristics are updated at each successive frame. The qualitative representation shows the occluded mode of the object, which satisfies the continuity constraints:

$$\mathrm{occ}(O^{t}_{id}) = \min\big(O^{t-1}_{id}, O^{t*}_{?}\big) \wedge \mathrm{SEARCH\_SPACE}\big(O^{t-1}_{id}, O^{t*}_{?}\big)$$

where min(.., ..) finds the minimum likelihood contribution with the conflicted object (i.e. $O^{t*}_{?}$), and SEARCH_SPACE(.., ..) checks the presence of the object in the search space of the conflicted region.
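The overlaper and occludee rules are complementary: of the two objects that fall into the conflicted region, the one with the larger weight against the conflicted object becomes the overlaper (parent) and the other the occludee (child). A minimal sketch under that reading follows; the function name, the dictionary inputs and the tie-breaking choice are assumptions, not details taken from the paper.

```python
def resolve_occlusion(weights_to_conflict, in_conflict_region):
    """Tag two objects that merged into one conflicted object.

    weights_to_conflict: object id -> weight between the object at t-1
                         and the conflicted object at t
    in_conflict_region:  object id -> True if the object's search space
                         covers the conflicted region
    Returns the tags and the child -> parent link, or None when there
    are not exactly two objects inside the conflicted region.
    """
    candidates = [i for i in weights_to_conflict
                  if in_conflict_region.get(i, False)]
    if len(candidates) != 2:
        return None
    a, b = candidates
    # Larger weight -> overlaper; ties go to the first id (arbitrary choice).
    if weights_to_conflict[a] >= weights_to_conflict[b]:
        overlaper, occludee = a, b
    else:
        overlaper, occludee = b, a
    return {
        "tags": {overlaper: "over", occludee: "occ"},
        "parent_of": {occludee: overlaper},
    }
```

The returned `parent_of` link is what a later reappear step would have to remove once the occluded object becomes visible again.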

3.3.6 Reappear Tag

The relation of the reappeared object is computed through backward chaining into the entire history of moving objects, and the maximum correspondence is computed. The object is assigned the same identity it had when it went into occlusion, and the child-parent relationship is ended. The following formulation determines the reappear tag:

$$\mathrm{reap}(O^{t}_{id}) = \max\big(O^{t}_{?}, O^{*}_{id}\big)$$

where max(.., ..) returns the maximum association weight of the reappeared object.
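A minimal sketch of the reappear step, assuming the history is reduced to the set of currently occluded objects and that the association weights against their stored appearance have already been computed. The function name and both inputs are hypothetical.

```python
def resolve_reappearance(weights_to_occluded, parent_of):
    """Restore the identity of an object that reappears after occlusion.

    weights_to_occluded: id of an occluded object -> association weight
                         between its stored appearance and the detection
    parent_of:           mutable map child id -> parent id, updated in place
    Returns the restored identity, or None if no object is occluded.
    """
    if not weights_to_occluded:
        return None
    restored = max(weights_to_occluded, key=weights_to_occluded.get)
    parent_of.pop(restored, None)   # the child-parent relationship ends
    return restored
```

Because the identity is looked up rather than newly created, the reappearing object continues its earlier track instead of starting a new one.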

3.4 Tracking Module

Kalman filter used for tracking and is defined in terms

of its states, motion model, and measurement equa-

tions (Welch and Bishop, 1995). In this paper, each

Kalman-based tracker is associated with every mov-

ing object which enters in the video sequence. We

consider the center of gravity of moving objects (i.e.

xc

x

t

and xc

y

t

) at time t as the states for Kalman-based

tracker, hence the state vector and measurement vec-

tor is:

x

t

=

xc

x

t

xc

y

t

T

, z

t

=

yc

x

t

yc

y

t

T

In the following, A is the transition matrix and H is

the measurement matrix of our tracking system along

with the Gaussian process w

t−1

and measurement v

t

noise. These noise values are entirely dependent on

RESOLVING DATA-ASSOCIATION UNCERTAINTY - In Mutli-object Tracking through Qualitative Modules

463

c)

d)

Frame: t+68 Frame: t+76 Frame: t+79 Frame: t+83

Frame: t+88 Frame: t+94 Frame: t+96 Frame: t+103

Frame: t+15

Frame: t+14

Frame: t+21 Frame: t+25

Frame: t+41Frame: t+30 Frame: t+37Frame: t+35

a)

b)

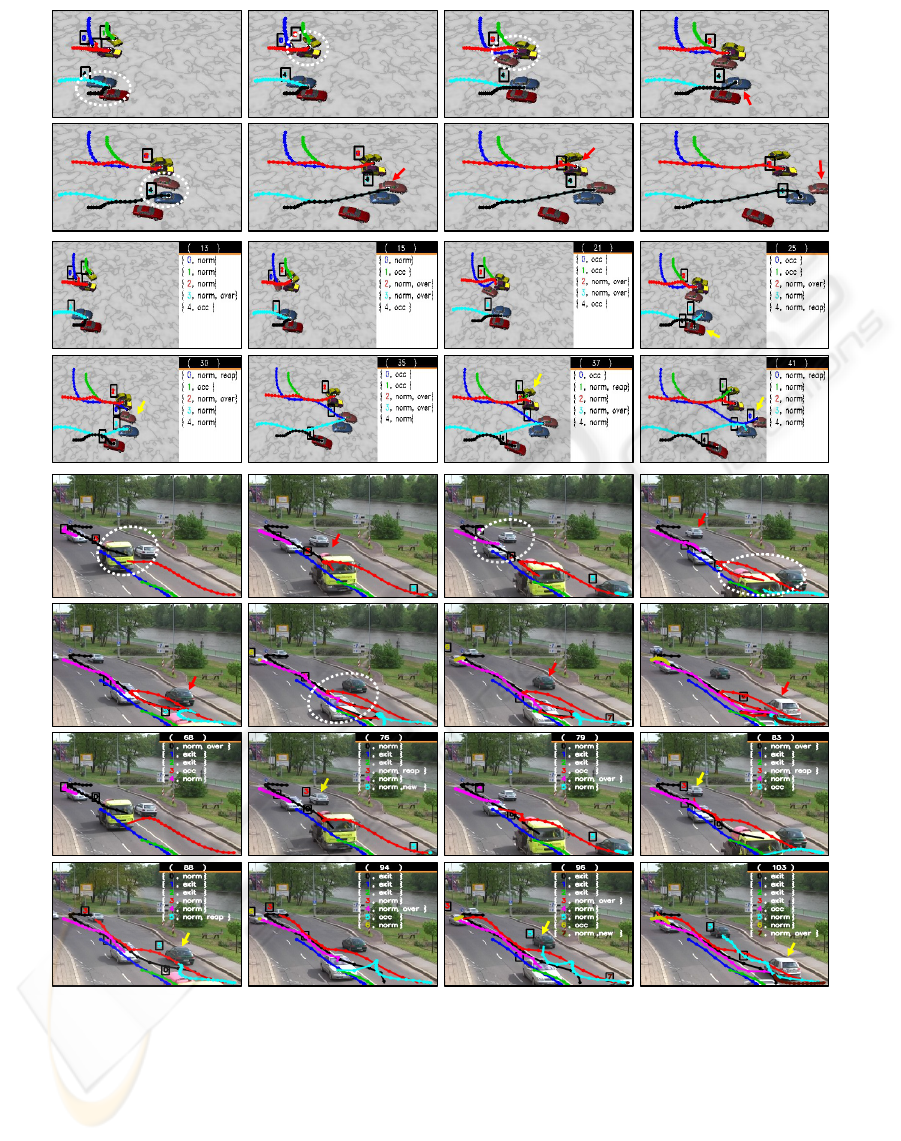

Figure 3: a) Shows the results of tracking with plausible associations which are pointed by red arrows whereas the white

circles indicate the occlusions. b) presents the results of tracking and association of our proposed approach. The status-tags

with identity of the objects are shown in right-side of the images. c) the data association uncertainties during tracking are

pointed by red arrow whereas the white circles point the occlusions. d) presents the results of traffic sequence using proposed

approach. The yellow arrow indicates the resolved data association ambiguities (Please zoom-in both the results for better

visibility).

the system that is being tracked and adjusted empiri-

cally. The equations of our tracking system are:

x

t

= Ax

t−1

+ w

t−1

(2)

z

t

= Hx

t

+ v

t

(3)

When a new moving object is detected, a new

tracker is initiated with associated states (x

t

and z

t

).

In the next frames, normal tracking continues until

any tracking event is observed which is then handled

by the proposed approach.
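The predict and update cycle implied by Eqs. (2) and (3) can be sketched with NumPy as follows. The paper does not give numerical values for A, H or the noise covariances, so the identity matrices and the covariances `Q` and `R` below are placeholder assumptions; the update step is the standard Kalman filter form described in (Welch and Bishop, 1995).

```python
import numpy as np

A = np.eye(2)           # assumed transition matrix (centroid stays put)
H = np.eye(2)           # assumed measurement matrix (centroid observed directly)
Q = 1e-2 * np.eye(2)    # assumed process-noise covariance
R = 1e-1 * np.eye(2)    # assumed measurement-noise covariance


def predict(x, P):
    """Time update: propagate the state and its covariance with Eq. (2)."""
    x_pred = A @ x
    P_pred = A @ P @ A.T + Q
    return x_pred, P_pred


def update(x_pred, P_pred, z):
    """Measurement update: correct the prediction with z from Eq. (3)."""
    S = H @ P_pred @ H.T + R               # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)    # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(2) - K @ H) @ P_pred
    return x_new, P_new


# One cycle for a tracker initialised at centroid (10, 20).
x, P = np.array([10.0, 20.0]), np.eye(2)
x_pred, P_pred = predict(x, P)
x_new, P_new = update(x_pred, P_pred, np.array([12.0, 20.0]))
```

After the update, the estimated centroid lies between the prediction and the measurement, and the state covariance shrinks; how far the estimate moves depends on the empirically chosen ratio of `Q` to `R`.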


4 EXPERIMENTAL RESULTS

In this section, we present the results of our approach applied to two different datasets taken from our video database. The approach is first tested on a synthetic video and then applied to a real scene.

4.1 Synthetic Sequence

Figure 3 shows the tracking results for the synthetic video. In this sequence, the segmentation is ideal and the visual features of each detected object remain the same during normal motion. It can be seen from the video sequence that the occlusion event is observed four times at random intervals, as indicated by white circles. The labels and status-tags are presented along with the tracking paths of the moving objects.

In Figure 3(a), the missed associations are identified in frames t + 25, t + 35, t + 37 and t + 41, which are indicated by red arrows. These ambiguities are observed when the objects split after occlusions. In Figure 3(b), the results of our proposed approach are presented, in which the qualitative reasoning modules are used with data association. The resolved uncertainties are highlighted with yellow arrows, whereas the identities with status-tags are shown on the right side of the images. It can be observed that tracking succeeds while all the real-time tracking events are taken into consideration.

4.2 Traffic Sequence

The robustness of the proposed approach is demonstrated on a real-time traffic sequence in which the objects move on both parallel and opposite tracks, as shown in Figure 3. Multiple occlusions and separations take place within short intervals of time, which is the key challenge here. Moreover, the camera is not parallel to the road but tilted, which results in a perspective view, and therefore a significant variation in object size is observed.

Figure 3(c) shows the outcome of tracking and identity management before applying our proposed approach. The errors due to wrong association weights during splits are indicated by red arrows in t + 76, t + 83, t + 88, t + 96 and t + 103, where the objects lose their identities when the occlusion is over. In Figure 3(d), these uncertainties are handled by our six qualitative modules, which are integrated with data association. For example, it can be seen that in t + 76 and t + 83 the correct identities are successfully identified after the split using our qualitative modules. The respective identity and status-tag can be seen on the right side of the frame. Throughout the tracking, it can be seen that all the real-time events occur in the sequence (for example new entry, exit, occlusion and separation) and that plausible interpretations (i.e. highlighted by yellow arrows) are efficiently handled by our proposed technique.

5 CONCLUSIONS AND FUTURE WORK

In this paper, limitations of data association during conflicted situations are resolved by assigning logical tags to the moving objects, which explicitly control these ambiguities even if the discrete data is incomplete. The proposed approach is successfully tested on a synthetic simulation and real-time traffic sequences. Future work will focus on interpreting the behaviors of moving objects.

ACKNOWLEDGEMENTS

This work is supported by Forschungspraemie (BMBF:FKZ 03FPB00213) and Transregional Collaborative Research Center SFB/TRR 62 funded by the German Research Foundation (DFG).

REFERENCES

Bar-Shalom, Y. and Fortmann, T. E. (1987). Tracking and Data Association, volume 179 of Mathematics in Science and Engineering.

Bennett, B. (2008). Combining logic and probability in tracking and scene interpretation. In Cohn, A. G., Hogg, D. C., Möller, R., and Neumann, B., editors, Logic and Probability for Scene Interpretation, number 08091 in Dagstuhl Seminar Proceedings.

Bennett, B., Magee, D., Cohn, A. G., and Hogg, D. (2008). Enhanced tracking and recognition of moving objects by reasoning about spatio-temporal continuity. Image and Vision Computing, 26(1):67–81.

Blake, A. (2006). Visual Tracking: A Short Research Roadmap. Springer, New York.

Frintrop, S., Königs, A., Hoeller, F., and Schulz, D. (2009). Visual person tracking using a cognitive observation model. In Workshop on People Detection and Tracking at the IEEE ICRA.

Halpern, J. Y. (1990). An analysis of first-order logics of probability. Artificial Intelligence, 46(3):311–350.

Kailath, T. (1967). The divergence and Bhattacharyya distance measures in signal selection. IEEE Transactions on Communication Technology, 15(1):52–60.


Khan, Z. (2005). MCMC-based particle filtering for tracking a variable number of interacting targets. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(11):1805–1819.

Pathan, S., Al-Hamadi, A., Elmezain, M., and Michaelis, B. (2009). Feature-supported multi-hypothesis framework for multi-object tracking using Kalman filter. In International Conference on Computer Graphics, Visualization and Computer Vision.

Sherrah, J. and Gong, S. (2000). Resolving visual uncertainty and occlusion through probabilistic reasoning. In Proceedings of the British Machine Vision Conference, pages 252–261.

Welch, G. and Bishop, G. (1995). An introduction to the Kalman filter. Technical report, Chapel Hill, NC, USA.
