CALIBRATION-FREE MARKERLESS AUGMENTED REALITY IN MONOCULAR LAPAROSCOPIC CHOLECYSTECTOMY

H. Djaghloul¹, M. Batouche² and J. P. Jessel³

¹ Ferhat Abbes University, Setif, Algeria

² King Saoud University, Kingdom of Saudi Arabia

³ IRIT, Toulouse, France

Keywords: Markerless augmented reality, Wavelets, Multi-resolution analysis, Evolutionary algorithms, Swarm intelligence.

Abstract: In this paper we present an augmented reality system for enhancing laparoscopic cholecystectomy video sequences. Augmented reality allows surgeons to view, in transparency, occluded anatomical and pathological structures reconstructed preoperatively from medical images such as MRI or CT scans. The deformable nature of digestive organs leads to a high-dimensional detection and tracking problem with N degrees of freedom. We describe a knowledge-based method for constructing statistical color models used to classify anatomical structures and surgical instruments. Using a new wavelet-based multi-resolution analysis of the virtual reality models and of the anatomical color space, we detect and track digestive organs to achieve markerless pose estimation of the monocular laparoscopic camera and registration of the preoperative 3D model. Results are shown on both synthetic and real data.

1 INTRODUCTION

Augmented reality allows anatomical and pathological 3D models, reconstructed preoperatively from medical images (MRI, CT scan), to be viewed in transparency in the surgeon's field of view. However, one of the major challenges limiting the widespread use of augmented reality in clinical laparoscopic abdominal surgery is the difficulty of markerless registration of preoperative 3D models of digestive organs within the laparoscopic view. Indeed, digestive organs are highly deformable and variable. Therefore, we believe that a clinically usable augmented reality system for abdominal laparoscopic surgery has to ensure markerless registration with knowledge-based interactive functionalities. In this paper we propose a novel method for aligning 3D models of digestive organs with laparoscopic cholecystectomy videos and tracking them directly in the video.

Cholecystectomy is the most frequently performed surgical intervention in the United States, with more than half a million operations each year. Since the first cholecystectomy by Langenbuch (Traverso, 1976), it has been established as the standard procedure for the surgical treatment of gallbladder diseases such as gallstones. Cholecystectomy consists in the complete removal of the gallbladder, using either open or laparoscopic (Reynolds, 2001; Litynski, 1999) procedures. Video-assisted laparoscopic cholecystectomy is currently the gold standard technique, accounting for more than 98% of interventions (Bittner, 2004).

The rest of the paper is organized as follows. In Section 2, we present a number of significant augmented reality systems and methods applied to digestive surgery. In Section 3, we describe the proposed method for constructing a statistical color model of abdominal organs and its application to detecting and tracking anatomical structures and surgical instruments, in order to register the preoperative virtual model using a new wavelet-based multi-resolution analysis of deformable objects and particle swarm optimization (PSO). In Section 4, experimental results on synthetic and real data illustrate the effectiveness and robustness of our method. Finally, we present our conclusions and perspectives.

2 RELATED WORKS

In the last few years, researchers in the augmented reality community have proposed many systems for various medical domains and applications. According to the tracking devices and methods used, augmented reality systems in digestive surgery can be classified into two categories: vision-based and hybrid systems. Nicolau et al. (2005b) proposed a low-cost and accurate guidance system for laparoscopic surgery, validated on an abdominal phantom. The system allows real-time tracking of surgical tools and marker-based registration by optimization of a given criterion (EPPC) (Nicolau et al., 2005a). On the other hand, Feuerstein et al. (2008) propose a hybrid system composed of optical and electromagnetic tracking systems to determine the position and orientation of intra-operative imaging devices, such as a mobile C-arm, a laparoscopic camera and a flexible ultrasound, allowing direct superimposition of acquired patient data in minimally invasive liver resection without the need for registration.

Djaghloul H., Batouche M. and P. Jessel J. (2010). CALIBRATION-FREE MARKERLESS AUGMENTED REALITY IN MONOCULAR LAPAROSCOPIC CHOLECYSTECTOMY. In Proceedings of the International Conference on Computer Graphics Theory and Applications, pages 139-142. DOI: 10.5220/0002837801390142. Copyright © SciTePress

3 PROPOSED METHOD

In this section we outline the principal components of our markerless augmented reality system for laparoscopic cholecystectomy. Taking into consideration temporal coherence according to the principal steps of standard laparoscopic cholecystectomy, the first component detects all anatomical and pathological structures in the 2D laparoscopic view using a statistical color model of digestive organs. As a result, we have for each organ an initial segmentation represented by a sparse binary image. Then, false positives are filtered using an adaptation of the particle swarm optimization (PSO) algorithm. Thus, for each organ we obtain a set of particles with different radii in the 2D image.

In laparoscopic cholecystectomy, the most important structures are the gallbladder and its vascular supply. The same principle is applied to preoperative CT scan images to build a particle-based 3D model of the gallbladder and the liver. The proposed wavelet-based multi-resolution analysis yields coarse models of both the 3D virtual organs and the 2D images. Finally, we perform a 2D/3D registration at each resolution level.

In order to build a statistical color model, a set of 16735 color laparoscopic images (IRCAD source) from a video of laparoscopic surgeries is used. The images have 240 × 320 RGB-coded pixels with 256 bins per channel (24 bits per pixel). The video sequence is acquired at a frame rate of 30 Hz.

3.1 Anatomical Color Model

According to the cholecystectomy intervention workflow step (t), we construct for each anatomical region (i) a statistical color model using a histogram with 256 bins per channel in the RGB color space. Each color vector (x) is converted into a discrete probability distribution as follows:

$$P_{i,t}(x) = \frac{c_{i,t}(x)}{\sum_{j=1}^{N_{i,t}} c_{i,t}(x_j)}, \quad t = t_1 \dots t_6, \quad i = 0 \dots S_t \tag{1}$$

where $c_{i,t}(x)$ gives the count in the histogram bin representing the RGB color triple (x) and $N_{i,t}$ is the number of histogram bins of the structure region (i) during the intervention step (t), so that the denominator is the total count of RGB histogram entries for that region. The number of detected structures $S_t$ varies according to the step. According to the European standard and common laparoscopic cholecystectomy installation and intervention workflow, the number of structure classes is limited to four. In practice, the step (t) denotes a time interval represented by a set of consecutive laparoscopic images $t = \langle I^{t}_{v,1} \dots I^{t}_{v,n} \rangle$ in the videos (v) that compose the training dataset.
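As an illustration of Eq. (1), the following minimal sketch builds a sparse RGB histogram for one region and normalizes it into a discrete distribution. The function names `color_model` and `probability`, the dictionary representation and the toy pixel values are assumptions made for this example; they are not taken from the system described here.

```python
import numpy as np

def color_model(pixels):
    """Sparse RGB histogram normalized as in Eq. (1).

    pixels: iterable of RGB triples sampled from one region i at step t
    (hypothetical input format). Returns {(r, g, b): probability}.
    """
    pixels = np.asarray(pixels, dtype=np.uint8).reshape(-1, 3)
    # Count each distinct RGB triple; only non-empty bins are stored.
    triples, counts = np.unique(pixels, axis=0, return_counts=True)
    total = counts.sum()  # denominator of Eq. (1)
    return {tuple(int(c) for c in t): float(n / total)
            for t, n in zip(triples, counts)}

def probability(model, rgb):
    """P_{i,t}(x) for one color triple; unseen colors get probability 0."""
    return model.get(tuple(rgb), 0.0)

# Toy region with two distinct colors (made-up values).
region_pixels = [(200, 180, 90)] * 3 + [(210, 185, 95)]
model = color_model(region_pixels)
```

Storing only the non-empty bins keeps the model small, which matters because the full 256³ RGB histogram is almost entirely empty for this kind of video.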

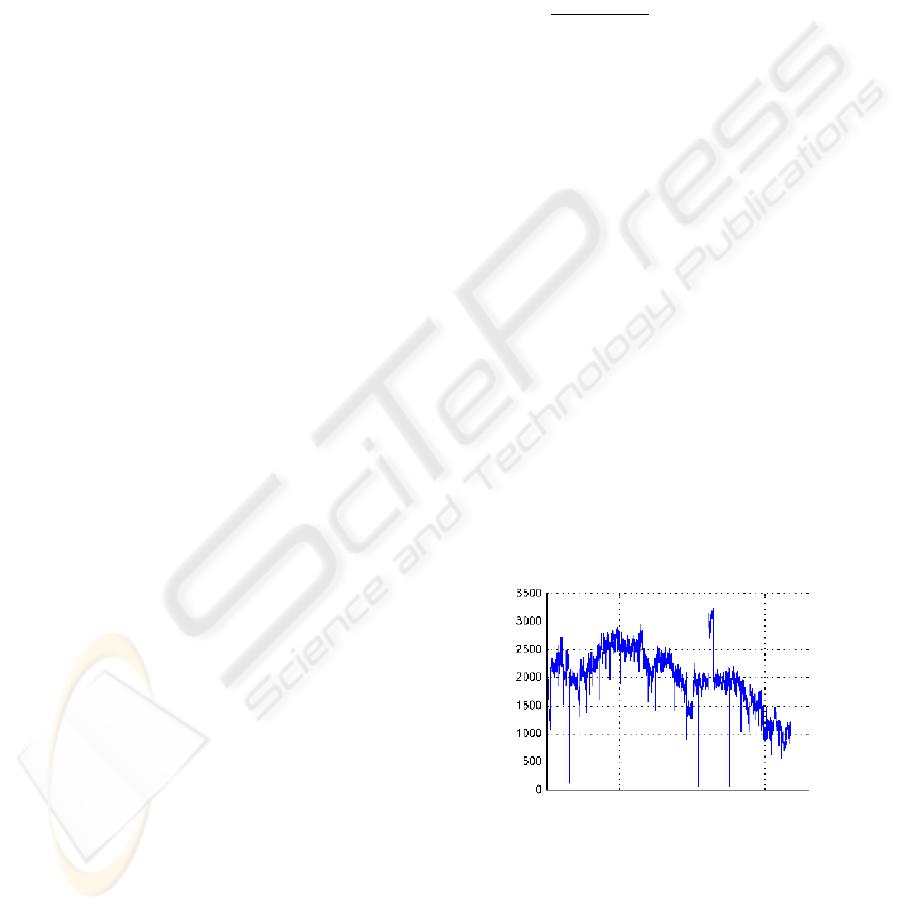

After analysing the laparoscopic video, we observed that it contains at most 10017 non-empty RGB color bins over the whole sequence, with a mean of 1997 RGB triples in each frame. Therefore, the RGB histogram is mostly empty: 99.94% of the $256^3$ RGB bins are not used. Figure 1 shows the evolution of the RGB bin count in the training laparoscopic cholecystectomy video.

Figure 1: Evolution of RGB bins count in the sequence.
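The sparsity figure can be checked with a few lines of arithmetic. The sketch below only uses the two numbers reported in the text (10017 used bins and 256 bins per channel); the variable names are illustrative.

```python
# Fraction of the full RGB histogram left empty by the training video.
total_bins = 256 ** 3          # 256 bins per channel, three channels
used_bins = 10017              # non-empty bins reported for the sequence
unused_fraction = 1 - used_bins / total_bins
unused_percent = f"{unused_fraction:.2%}"
```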

3.2 Spherelet: Wavelet-based Multi-resolution Analysis

In this section we propose a new multi-resolution analysis of 3D objects modeled as a set of elementary non-intersecting particles defined by their centers and radii. The virtual model of the anatomical structure is subdivided into a set of particles that we call a "Spherelet". The largest sphere closest to the center of gravity of the preoperative 3D model represents the root of the Spherelet model. The Spherelet root is used to initiate 2D/3D rigid registration. Hence, the root must be chosen to be as stable and as little deformed as possible during the whole sequence. The root represents the coarsest resolution level of the virtual model.

We suppose that $S_j$ is the Spherelet at the resolution level (j). We have:

$$S_j = [S_{j,1} \; S_{j,2} \; \dots \; S_{j,i} \; \dots \; S_{j,n_j}]^t \tag{2}$$

where $S_{j,i}$ is the $i$-th particle of the virtual model at the resolution (j) and $n_j$ is the length of the Spherelet at the resolution level (j), denoting its particle count. The initial resolution level is $S_0$ and the coarsest one is $S_r$, corresponding to the Spherelet root. The relation between two successive resolution levels is given by:

$$S_{j+1} = A_{j+1} S_j, \qquad D_{j+1} = B_{j+1} S_j \tag{3}$$

where $D_j$ represents the wavelet detail coefficients of the resolution level (j):

$$D_j = [D_{j,1} \; D_{j,2} \; \dots \; D_{j,i} \; \dots \; D_{j,n_j}]^t \tag{4}$$

The matrices $A_j$ and $B_j$ are called the analysis filters of the resolution level (j).

To reconstruct the finer (superior) resolution level we use two matrices $P_j$ and $Q_j$ called synthesis filters. The finer level is recovered from the coarser level and its detail coefficients by:

$$S_j = P_{j+1} S_{j+1} + Q_{j+1} D_{j+1} \tag{5}$$

The relation between the analysis and synthesis filters is formulated by:

$$[A \mid B]^t = [P \mid Q]^{-1}, \quad \text{hence} \quad [A \mid B]^t \, [P \mid Q] = I \tag{6}$$

The detail information relative to eliminated particles contains their inter-distances or volume ratios in the logarithmic scale. In the simplest case, the transformation to an inferior resolution level (j) of the 3D Spherelet volumetric model consists in replacing two particles of the resolution level (j-1) by a representative one. We then have:

$$A_j = [I \mid 0], \qquad B_j = [-I \mid I] \tag{7}$$

The filter $A_j$ is used to select the elements of the next inferior resolution level and $B_j$ to extract the wavelet coefficients of each level. Hence, applying Eq. (3) recursively, the analysis process is formulated by:

$$S_r = A_r S_{r-1} = A_r A_{r-1} \dots A_2 A_1 S_0, \qquad D_r = B_r S_{r-1} = B_r A_{r-1} \dots A_2 A_1 S_0 \tag{8}$$

Therefore, the synthesis filters are given by:

$$P_j = [I \mid I]^t, \qquad Q_j = [0 \mid I]^t \tag{9}$$
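The filters of Eqs. (7) and (9) can be written down explicitly to check that they satisfy the perfect-reconstruction condition of Eq. (6). The sketch below is an illustration under stated assumptions: each particle is stored as a row (x, y, z, r), the detail is a plain row difference (if the radii were stored in logarithmic scale this difference would become a log ratio), and the helper name `filters` is hypothetical.

```python
import numpy as np

def filters(n):
    """Filters for one level that maps 2n particles to n particles."""
    I = np.eye(n)
    Z = np.zeros((n, n))
    A = np.hstack([I, Z])     # [I | 0]   : keeps the first n particles
    B = np.hstack([-I, I])    # [-I | I]  : second half minus first half
    P = np.vstack([I, I])     # [I | I]^t
    Q = np.vstack([Z, I])     # [0 | I]^t
    return A, B, P, Q

# Four toy particles, one per row: (x, y, z, radius). Values are made up.
S_fine = np.array([[0.0, 0.0, 0.0, 4.0],
                   [1.0, 0.0, 0.0, 2.0],
                   [0.5, 3.0, 0.0, 1.0],
                   [2.0, 1.0, 1.0, 0.5]])

A, B, P, Q = filters(2)
S_coarse = A @ S_fine                 # analysis, Eq. (3)
D = B @ S_fine                        # detail coefficients, Eq. (3)
S_rebuilt = P @ S_coarse + Q @ D      # synthesis, Eq. (5)
identity_check = np.vstack([A, B]) @ np.hstack([P, Q])   # Eq. (6)
```

With these filters the coarse level is simply a subset of the fine level, so the representative particle is one of the two it replaces and the eliminated particle is stored relative to it.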

Assume that the initial Spherelet is composed of $2^r$ spheres. We then have $S_0 = [S_{0,2^0} \; S_{0,2^1} \; \dots \; S_{0,i} \; \dots \; S_{0,2^r}]^t$ with $n_0 = 2^r$, where $r$ gives the number of levels needed to reach the coarsest representation, corresponding to the Spherelet root.
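A full decomposition from $2^r$ particles down to the root, and the reverse synthesis, can be sketched as follows. This is a simplified illustration rather than the implementation used in the experiments: it assumes the particles are already ordered so that the intended root is the first row, applies the filters of Eqs. (7) and (9) implicitly through slicing, and uses the hypothetical names `analyse` and `synthesise`.

```python
import numpy as np

def analyse(S0):
    """Decompose 2**r particles (rows) into a root and per-level details."""
    S = np.asarray(S0, dtype=float)
    details = []
    while len(S) > 1:
        n = len(S) // 2
        details.append(S[n:] - S[:n])   # B S with B = [-I | I]
        S = S[:n]                       # A S with A = [I | 0]
    return S, details                   # root has a single row

def synthesise(root, details):
    """Rebuild the finest level from the root, coarsest detail first."""
    S = np.asarray(root, dtype=float)
    for D in reversed(details):
        S = np.concatenate([S, S + D])  # P S + Q D
    return S

# Eight toy particles (r = 3), rows are (x, y, z, radius); values are made up.
rng = np.random.default_rng(0)
S0 = rng.uniform(0.0, 10.0, size=(8, 4))
root, details = analyse(S0)
S0_rebuilt = synthesise(root, details)
```

Stopping the synthesis loop early yields an intermediate resolution level, which is what a coarse-to-fine registration would consume level by level.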

3.3 Anatomical Structures Tracking

We propose a tracking-by-detection method for digestive organs using particle swarm optimization (PSO). Classical PSO is a global search strategy for optimization problems (Kennedy et al., 1995); it simulates the social evolution of an arbitrary swarm of particles following the rules of Newtonian physics. For an N-dimensional problem, the basic PSO algorithm is formulated in terms of position $x_m(t)$ and velocity $v_m(t)$ vectors representing the time evolution of M particles with randomly assigned initial positions. Hence, we have:

$$x_m(t) = [x_1(t) \; x_2(t) \; \dots \; x_N(t)]^T \tag{10}$$

$$v_m(t) = [v_1(t) \; v_2(t) \; \dots \; v_N(t)]^T \tag{11}$$

The evolution of the particles in the classical algorithm is given by the following equations:

$$v_m(t+1) = f^m_i \, v_m(t) + f^m_c \, [D_c]_N \, (x_m(t_c) - x_m(t)) + f^m_s \, [D_s]_N \, (x_{opt}(t_s) - x_m(t)) \tag{12}$$

Thus, the new position of the particle m is given by:

$$x_m(t+1) = x_m(t) + v_m(t+1) \tag{13}$$

where $v_m(t)$ and $v_m(t+1)$ are, respectively, the past and the new velocity vectors of the particle (m). $f^m_i$ is the inertia factor of the particle m, $f^m_c$ is its cognitive factor and $f^m_s$ is its social factor. $[D_c]_N$ and $[D_s]_N$ are N-dimensional diagonal matrices composed of statistically independent normalized random variables uniformly distributed between 0 and 1. $t_c$ is the iteration at which the particle m reached its best position, given by $x_m(t_c)$. $t_s$ is the generation at which the swarm found its best global member, defined by its components $x_{opt}(t_s)$.
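For reference, the classical update can be sketched in a few lines, following the usual formulation of Kennedy and Eberhart in which the cognitive and social terms pull each particle from its current position toward its personal best and the global best. The factor values, the search range, the swarm size and the test cost function are assumptions chosen for the illustration, not the settings used for organ tracking.

```python
import numpy as np

def pso(cost, dim, n_particles=30, iters=200,
        f_i=0.7, f_c=1.5, f_s=1.5, seed=0):
    """Minimal classical PSO; returns (best position, best cost)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-5.0, 5.0, (n_particles, dim))  # random initial positions
    v = np.zeros_like(x)
    best_x = x.copy()                                # personal bests x_m(t_c)
    best_f = np.array([cost(p) for p in x])
    g = best_x[np.argmin(best_f)].copy()             # global best x_opt(t_s)
    for _ in range(iters):
        # Diagonals of the random matrices, one row per particle.
        d_c = rng.uniform(0.0, 1.0, x.shape)
        d_s = rng.uniform(0.0, 1.0, x.shape)
        v = f_i * v + f_c * d_c * (best_x - x) + f_s * d_s * (g - x)
        x = x + v
        f = np.array([cost(p) for p in x])
        improved = f < best_f
        best_x[improved] = x[improved]
        best_f[improved] = f[improved]
        g = best_x[np.argmin(best_f)].copy()
    return g, float(best_f.min())

# Toy problem: minimum of the sphere function is at the origin.
g_best, f_best = pso(lambda p: float(np.sum(p ** 2)), dim=2)
```

In a tracking setting the cost function would be replaced by the image-based criterion, and the swarm could be re-seeded around the previous frame's solution to exploit temporal coherence.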

The Spherelet root of each organ, mainly that of the gallbladder, is determined in the 2D UV space of the image by optimization of the following proposed cost function:

$$F_\Theta = |1 - k| + |1 - d| \tag{14}$$

with

$$k = \frac{\alpha}{\sum_{i,j} I_b(x)} \tag{15}$$

and

$$d = \frac{\sum_{i,j} I_b(x)}{x_r^2} \tag{16}$$

where $\alpha$ models the prior knowledge and $d$ the density of the particle.

The 2D/3D registration is ensured by minimizing the distance between the projection of the 3D Spherelet ($S_{3D}$) of the virtual model reconstructed from preoperative slices and the 2D Spherelet ($S_{2D}$) in the laparoscopic image. Therefore, for each 3D Spherelet particle we compute the pose using PSO, assuming the stability of the registration previously computed at coarser levels. For each resolution level, the function to be minimized is given by:

$$F_\Phi = |\Phi_{3D}(x) - S_{2D}| \tag{17}$$

where $\Phi_{3D}$ is the rendering function of ($S_{3D}$).
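To make the shape of Eq. (17) concrete, the sketch below evaluates a registration cost for a hypothetical rendering function. The scaled orthographic projection with an in-plane rotation, the four-parameter pose (scale, angle, two translations), the row layout of the particles and the L1 distance are all assumptions made for this example; the rendering function and pose parameterization of the actual system are not specified in this form.

```python
import numpy as np

def render(S3d, pose):
    """Hypothetical Phi_3D: project (x, y, z, r) rows to (u, v, r) rows."""
    s, theta, tu, tv = pose
    c, sn = np.cos(theta), np.sin(theta)
    R = np.array([[c, -sn], [sn, c]])
    # Drop z, scale, rotate in the image plane, then translate.
    centres = (s * S3d[:, :2]) @ R.T + np.array([tu, tv])
    radii = s * S3d[:, 3:4]
    return np.hstack([centres, radii])

def registration_cost(pose, S3d, S2d):
    """L1 distance between the rendered 3D Spherelet and the 2D Spherelet."""
    return float(np.abs(render(S3d, pose) - S2d).sum())

# Toy data: two 3D particles and the 2D Spherelet they produce at a known pose.
S3d = np.array([[0.0, 0.0, 0.0, 2.0],
                [1.0, 0.0, 0.5, 1.0]])
true_pose = (2.0, 0.3, 10.0, 20.0)
S2d = render(S3d, true_pose)
cost_at_truth = registration_cost(true_pose, S3d, S2d)
cost_shifted = registration_cost((2.0, 0.3, 11.0, 20.0), S3d, S2d)
```

A function of this kind can be passed directly as the cost to a PSO routine, starting with the root particle alone and adding finer-level particles once the coarse pose is stable.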

4 EXPERIMENTAL RESULTS

First, we applied the method to hand-made synthetic images to validate the detection and tracking method (Figure 2).

Figure 2: Tracking of synthetic gallbladder, panels (a) and (b).

Then, we tried the method on real laparoscopic images (Figure 3).

Figure 3: Real laparoscopic image augmentation (IRCAD): (a) original image, (b) detection, (c) 2D/3D registration.

5 CONCLUSIONS

In this paper we presented a novel method for augmenting images in video-based laparoscopic cholecystectomy. A new statistical color model is proposed to detect anatomical and pathological structures. A new criterion is used to detect and track organs using particle swarm optimization. A new wavelet-based multi-resolution analysis of 2D laparoscopic images and 3D particle-modeled objects is used to register the preoperative model within the real scene. Experiments on synthetic and real laparoscopic images illustrate the effectiveness of the proposed method.

REFERENCES

Bittner, R. (2004). The standard of laparoscopic cholecystectomy. Langenbeck’s Archives of Surgery, 389(3):157–163.

Feuerstein, M., Mussack, T., Heining, S., and Navab, N. (2008). Intraoperative laparoscope augmentation for port placement and resection planning in minimally invasive liver resection. IEEE Transactions on Medical Imaging, 27(3):355.

Kennedy, J., Eberhart, R., et al. (1995). Particle swarm optimization. In Proceedings of IEEE International Conference on Neural Networks, volume 4, pages 1942–1948. Piscataway, NJ: IEEE.

Litynski, G. (1999). Endoscopic surgery: the history, the pioneers. World Journal of Surgery, 23(8):745–753.

Nicolau, S., Garcia, A., Pennec, X., Soler, L., and Ayache, N. (2005a). An augmented reality system to guide radio-frequency tumour ablation. Computer Animation and Virtual Worlds, 16(1):1–10.

Nicolau, S., Goffin, L., and Soler, L. (2005b). A low cost and accurate guidance system for laparoscopic surgery: Validation on an abdominal phantom. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, page 133. ACM.

Reynolds, W. (2001). The first laparoscopic cholecystectomy. JSLS, Journal of the Society of Laparoendoscopic Surgeons, 5(1):89–94.

Traverso, L. (1976). Carl Langenbuch and the first cholecystectomy. Am J Surg, 132(1):81–2.

GRAPP 2010 - International Conference on Computer Graphics Theory and Applications
