CONTENT BASED IMAGE RETRIEVAL USING SPATIAL RELATIONSHIPS BETWEEN DOMINANT COLOURS OF IMAGE SEGMENTS

Hasitha Bimsara Ariyaratne and Koichi Harada

Graduate School of Information Engineering, Hiroshima University, Japan

Keywords: Content Based Image Retrieval, Dominant Colours, Text Index, Spatial Relationships.

Abstract: Content Based Image Retrieval (CBIR) is a rapidly evolving area of computer vision and image processing, driven by the ever-increasing number of digital images; efficient indexing is therefore a vital part of image retrieval systems. Since the ultimate goal of any CBIR system is to simulate the Human Visual System (HVS), applying some of the fundamental concepts the HVS uses to identify images, such as colour, position, size and shape, could greatly enhance retrieval accuracy. This research therefore proposes a simple yet effective text based indexing scheme that relies on the spatial relationships among the dominant colours of image segments. A new connected component labelling approach, together with an efficient graph based image segmentation algorithm, is used to identify segments. The indexing scheme is capable of identifying both complete and partial image matches. Experiments carried out on different sets of images have yielded promising results, validating the concept's viability for Content Based Image Retrieval.

1 INTRODUCTION

Due to various advancements in digital image capturing, compression, storage and transmission technologies, the number of digital images is increasing rapidly. As a result, efficient image searching and indexing techniques have become vital for managing this ever-growing image repository. Hence, image analysis, searching and retrieval have become a popular yet challenging research topic in computer vision and image processing. Conventionally, images were tagged and indexed using text as a meta-descriptor (Chang & Hsu, 1992), so that images were indexed and retrieved through text descriptions. Most commercial and internet based image search services (e.g. Google Images, Yahoo Image Search and Microsoft Bing Image Search) still rely on this approach. However, this type of indexing is both labour intensive and error prone, since tagging is usually done manually. The resulting descriptions are therefore highly subjective and often incomplete; in some cases, critical visual features of an image (e.g. colour, shape and texture) are not described at all. Furthermore, text based systems cannot be used in application domains where an unknown query image has to be compared with a collection of stored images (e.g. fingerprint or face recognition databases). Therefore, the focus of recent studies in image searching has shifted from conventional text based methods to content based methods. In Content Based Image Retrieval (CBIR) systems, images are indexed by their visual content, such as colour, texture, shape and spatial information (Hiremath & Jagadeesh, 2007), instead of by user-assigned text descriptions.

Content Based Image Retrieval systems are widely used by both personal and professional users. Common application areas include forensic science (fingerprint, DNA, fibre, hair and bullet fragment matching) (Eakins & Graham, 1999), medical imaging (X-ray and CT/CAT scan images for disease diagnosis), publishing and engineering (Venters & Cooper, 2001).

Image content can comprise either low-level visual attributes, such as colour, texture and shape, or high-level visual objects, such as people, vehicles and buildings. A visual content descriptor is a feature used to extract and store these visual characteristics.

Bimsara Ariyaratne H. and Harada K. (2010).

CONTENT BASED IMAGE RETRIEVAL USING SPATIAL RELATIONSHIPS BETWEEN DOMINANT COLOURS OF IMAGE SEGMENTS.

In Proceedings of the International Conference on Computer Vision Theory and Applications, pages 184-189

Copyright © SciTePress

Colour is one of the most prominent visual descriptors used in image retrieval. The colour histogram is a conventional colour based approach that provides reasonable accuracy for most CBIR applications (Swain & Ballard, 1991); however, its lack of spatial information reduces its discriminative power. To address this, techniques such as extended histograms, augmented histograms (Chen & Wong, 1999) and colour correlograms (Huang et al., 1997) were introduced. Although these methods incorporate spatial relationships between colours, they still compute a statistical generalization of colour relations, which may not depict the actual relationships. Hence they perform poorly when partial images are concerned.
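The lack of spatial information can be made concrete with a small sketch. It assumes images given as lists of rows of colour names and uses histogram intersection in the spirit of Swain & Ballard (1991); the helper names `colour_histogram` and `histogram_intersection` are illustrative, not taken from this paper. Two images with the same colours in different places receive a perfect similarity score.

```python
from collections import Counter


def colour_histogram(image):
    """Normalised colour histogram: counts colours and discards pixel positions."""
    counts = Counter(pixel for row in image for pixel in row)
    total = sum(counts.values())
    return {colour: n / total for colour, n in counts.items()}


def histogram_intersection(h1, h2):
    """Histogram intersection of two normalised histograms (1.0 = identical)."""
    return sum(min(h1.get(c, 0.0), h2.get(c, 0.0)) for c in set(h1) | set(h2))


# Two tiny 2x2 "images" with the same colours arranged differently.
red_on_top = [["red", "red"], ["blue", "blue"]]
red_on_left = [["red", "blue"], ["red", "blue"]]

similarity = histogram_intersection(colour_histogram(red_on_top),
                                    colour_histogram(red_on_left))
print(similarity)  # 1.0: the histograms cannot tell the two layouts apart
```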

However, colour alone does not have strong enough discriminative power to capture all the facets of an image; therefore additional descriptors are needed to enhance the accuracy of search results. Psychological experiments have shown that the Human Visual System (HVS) perceives the world in terms of high-level objects and their spatial relationships. The 'object ontology' of the HVS describes objects in terms of colour, position, size and shape (Liu et al., 2007), as shown in Figure 1.

Figure 1: Object ontology (colour, position, size and shape).

Since emulating the HVS is the ultimate goal of any image processing technique, representing images using the above descriptors can greatly enhance the efficiency of CBIR as well. Due to the complexity of shape based calculations, the remaining three descriptors, namely colour, position and size, were adopted as the main content descriptors in this research.

The main focus of this research was to implement a new indexing scheme that captures the spatial relationships between the significant segments of an image, based on their dominant colours.

The remainder of this paper is structured as follows. Sections 2 and 3 discuss colour spaces and the importance of using dominant colours, followed by a brief introduction to the image segmentation algorithm used in this research (Section 4). Section 5 explains the process of creating a palette of dominant colours, and Section 6 outlines the newly proposed connected component labelling algorithm. Sections 7 and 8 provide an overview of the implementation details and experimental results. Section 9 discusses future enhancements and Section 10 concludes the paper.

2 COLOUR SPACES

A colour space provides the ability to specify, create

and visualise colours; it is an abstract mathematical

model describing how colours can be represented as

points in a 3D space.

Many different colour models are used to represent colours in digital images. The most widely used model is RGB; however, HSL/HSV, CMY/CMYK and CIELab (the International Commission on Illumination's lightness, a, b colour component model) are also used, depending on the application and its requirements.

Despite the large number of colour models, only a handful of them, such as CIELab, are designed to be (approximately) perceptually uniform. In such a colour space, a change in the colour coordinates produces a proportional perceived colour change; in other words, the Euclidean distance between two colours approximates the colour difference as perceived by the human visual system (Shih et al., 2001).

Since this research focused on processing images based on a reduced colour palette (dominant colours), colour approximation was a vital step. For this reason, the CIELab colour space was used for better accuracy in calculating dominant colours.
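The paper does not state which RGB space or white point was assumed when converting to CIELab. The sketch below assumes 8-bit sRGB input and the D65 white point, and measures colour difference with the CIE76 formula, which is simply the Euclidean distance described above; `srgb_to_lab` and `delta_e76` are illustrative names.

```python
import math


def srgb_to_lab(r, g, b):
    """8-bit sRGB -> CIELab, assuming the D65 white point (2 degree observer)."""
    def linearise(c):
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearise(r), linearise(g), linearise(b)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    delta = 6.0 / 29.0

    def f(t):
        # Cube root above the small-value threshold, linear segment below it.
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / 0.95047), f(y / 1.00000), f(z / 1.08883)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e76(lab1, lab2):
    """CIE76 colour difference: the plain Euclidean distance in CIELab."""
    return math.dist(lab1, lab2)


red, dark_red = srgb_to_lab(255, 0, 0), srgb_to_lab(200, 0, 0)
print([round(v, 2) for v in red], round(delta_e76(red, dark_red), 2))
```

CIE76 is the simplest colour-difference formula; later CIE formulas correct for the residual non-uniformity of CIELab, at the cost of more computation.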

3 DOMINANT COLOURS

Modern images can contain millions of distinct colours, but if this number can be reduced to tens or hundreds without losing a significant amount of detail, both the storage size and the computational power required to process images can be drastically reduced.

In a typical image, most of the colours are simply shades of a few basic colours. These basic colours dominate the whole image while capturing its essential details, and are hence called dominant colours. Processing an image in terms of these dominant colours can therefore reduce processing and storage requirements without significantly reducing the discriminative power of the representation. The process of deriving the dominant colours is discussed in Section 5.

4 IMAGE SEGMENTATION

Since this study focused on building an image index based on objects and their spatial and colour relationships, segmenting the image was a major task. Many image segmentation algorithms are available today; among them, histogram based methods, edge detection methods, region growing methods and graph based methods take prominence.

A recent study evaluating various image segmentation algorithms found a particular graph based image segmentation algorithm (ISA) to be very efficient (Pantofaru & Hebert, 2005; Felzenszwalb & Huttenlocher, 2004); therefore this approach was adopted for the image segmentation step of our research. The algorithm builds an undirected graph where:

- vertices are pixels;
- edges connect neighbouring pixels, each edge carrying a weight;
- the weight of an edge measures the dissimilarity of the two vertices it connects.

The algorithm uses a minimum spanning tree approach to group the pixels into clusters depending on the weights associated with the edges.

This algorithm has two user-adjustable parameters, σ and k. σ is the standard deviation of the Gaussian filter used to smooth the image in order to eliminate some of the compression artefacts before computing the edge weights. k is a constant in the threshold function that controls how much the difference between two components must exceed their internal differences for there to be evidence of a boundary between them. The constant k therefore sets the scale of the segmentation, with larger values favouring larger components; in this research it is used to specify the maximum and minimum size of a component.
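The merging rule of Felzenszwalb & Huttenlocher (2004) can be sketched as follows: edges are processed in order of increasing weight, and two components C1 and C2 are merged unless the edge weight exceeds min(Int(C1) + k/|C1|, Int(C2) + k/|C2|), where Int(C) is the largest edge weight inside the component. This is a simplified sketch, not the implementation used in this research: it assumes a grey-scale image, a 4-connected grid, absolute intensity difference as the edge weight, and it omits the Gaussian smoothing (σ) and any minimum-size post-processing. `segment_graph` is an illustrative name.

```python
def segment_graph(image, k):
    """Graph-based segmentation in the style of Felzenszwalb & Huttenlocher (2004).

    image: list of rows of grey values; k: scale constant of the threshold k/|C|.
    Simplifications (assumptions): 4-connected grid, |I(p) - I(q)| as edge weight,
    no Gaussian smoothing (sigma) and no minimum-size post-processing.
    Returns a list of rows holding one component id per pixel.
    """
    h, w = len(image), len(image[0])
    parent = list(range(h * w))
    size = [1] * (h * w)
    internal = [0.0] * (h * w)  # Int(C): largest MST edge inside component C

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Edges between 4-neighbours, weighted by dissimilarity.
    edges = []
    for y in range(h):
        for x in range(w):
            i = y * w + x
            if x + 1 < w:
                edges.append((abs(image[y][x] - image[y][x + 1]), i, i + 1))
            if y + 1 < h:
                edges.append((abs(image[y][x] - image[y + 1][x]), i, i + w))
    edges.sort()  # non-decreasing weight, as in Kruskal's MST algorithm

    for weight, a, b in edges:
        ra, rb = find(a), find(b)
        if ra == rb:
            continue
        # Merge unless the edge is evidence of a boundary:
        # weight > MInt(C1, C2) = min(Int(C1) + k/|C1|, Int(C2) + k/|C2|)
        if weight <= min(internal[ra] + k / size[ra], internal[rb] + k / size[rb]):
            if size[ra] < size[rb]:
                ra, rb = rb, ra
            parent[rb] = ra
            size[ra] += size[rb]
            internal[ra] = weight  # edges arrive in sorted order, so this is the max
    return [[find(y * w + x) for x in range(w)] for y in range(h)]


two_halves = [[10, 10, 200, 200] for _ in range(4)]
print(len({c for row in segment_graph(two_halves, k=100) for c in row}))  # 2
```

With a much larger k (e.g. 10000) the threshold k/|C| exceeds the intensity step and the two halves merge into one component, illustrating how k sets the scale of the segmentation.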

5 DOMINANT COLOUR PALETTE

The colour palette used in this research contains 42 colours. Since CIELab is a perceptually uniform colour space, selecting points that are equidistant from each other provides a uniformly distributed colour palette. For simplicity, the space was partitioned into a cubic grid, and the CIELab colour values at grid vertices that correspond to valid RGB values were selected. Even though the vertices of a cubic grid are not all equidistant from one another, experiments showed that the selected colours were well spread out.
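The grid-based palette construction can be sketched as below. The paper does not give the grid spacing, the Lab ranges or the white point, so the `step` parameter, the ranges (L from 0 to 100, a and b within roughly ±128), the D65 white point and the small gamut tolerance `eps` are all assumptions; `grid_palette` is a hypothetical name and a given `step` will not necessarily reproduce the 42 colours reported above.

```python
def lab_to_linear_rgb(L, a, b):
    """CIELab (D65 assumed) -> linear sRGB, used only to test displayability."""
    fy = (L + 16.0) / 116.0
    fx = fy + a / 500.0
    fz = fy - b / 200.0
    delta = 6.0 / 29.0

    def finv(t):
        # Inverse of the CIELab companding function.
        return t ** 3 if t > delta else 3 * delta ** 2 * (t - 4.0 / 29.0)

    x, y, z = 0.95047 * finv(fx), 1.00000 * finv(fy), 1.08883 * finv(fz)
    r = 3.2404542 * x - 1.5371385 * y - 0.4985314 * z
    g = -0.9692660 * x + 1.8760108 * y + 0.0415560 * z
    bl = 0.0556434 * x - 0.2040259 * y + 1.0572252 * z
    return r, g, bl


def grid_palette(step, eps=1e-4):
    """Keep the vertices of a cubic CIELab grid that map to valid RGB values."""
    lightness = range(0, 101, step)
    chroma = [i * step for i in range(-(128 // step), 128 // step + 1)]
    palette = []
    for L in lightness:
        for a in chroma:
            for b in chroma:
                # In gamut if every linear RGB channel lies in [0, 1].
                if all(-eps <= c <= 1 + eps for c in lab_to_linear_rgb(L, a, b)):
                    palette.append((L, a, b))
    return palette


palette = grid_palette(step=25)
print(len(palette))
```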

Further experiments were carried out on a sample image set to obtain an optimized colour palette. First, the images were segmented with the ISA; then all segments were converted into dominant colours using colour palettes containing different numbers of colours. Finally, the number of colours per image segment was counted for each palette. The palette that combined the largest number of colours with the smallest number of colours per segment was chosen as the optimal palette.

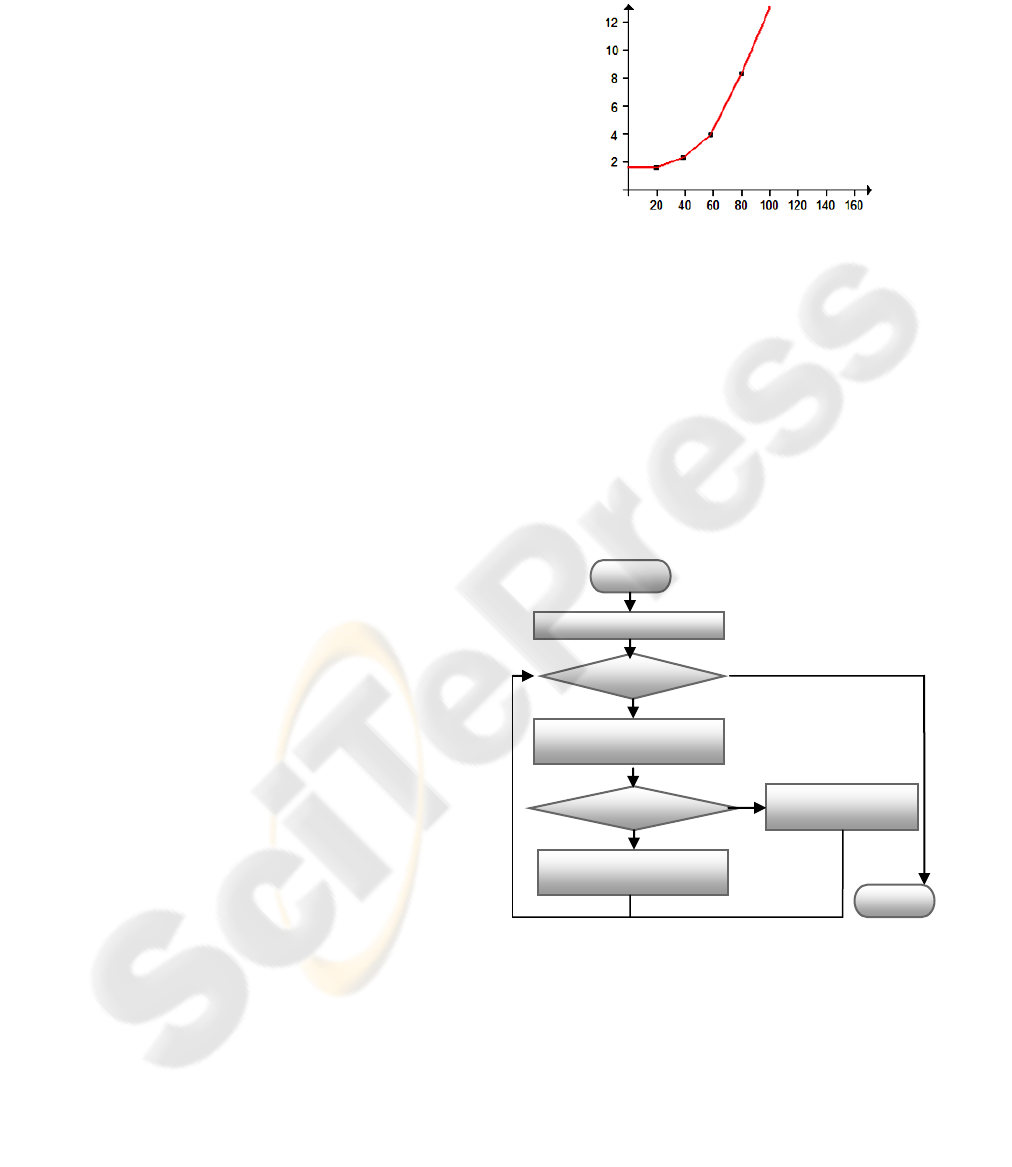

Figure 2 shows the experiment results:

Number of colours in the palette

Number of colours per segment

Figure 2: Colour palette size vs. segmentation accuracy.
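The "colours per segment" measure used in this experiment can be sketched as follows. The sketch assumes two aligned label grids, one holding the ISA segment id of every pixel and one holding the palette index of every pixel after conversion to dominant colours, and it averages over segments; the averaging and the name `mean_colours_per_segment` are assumptions, since the paper does not say how the counts were aggregated.

```python
from collections import defaultdict


def mean_colours_per_segment(segment_labels, colour_labels):
    """Average number of distinct palette colours found inside each ISA segment."""
    colours_in = defaultdict(set)
    for seg_row, col_row in zip(segment_labels, colour_labels):
        for seg, col in zip(seg_row, col_row):
            colours_in[seg].add(col)
    return sum(len(c) for c in colours_in.values()) / len(colours_in)


# Segment 0 is uniformly colour 5; segment 1 is split between colours 7 and 8.
print(mean_colours_per_segment([[0, 0, 1, 1]], [[5, 5, 7, 8]]))  # 1.5
```

Running this for each candidate palette gives one point of a curve like Figure 2; a value close to 1 means the palette rarely splits a segment into several dominant colours.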

6 CONNECTED COMPONENT LABELLING

An image segmentation algorithm only splits an image into multiple segments; it neither identifies individual objects nor extracts essential high-level information about the segments. Therefore, a connected component labelling algorithm was used to extract this additional information.

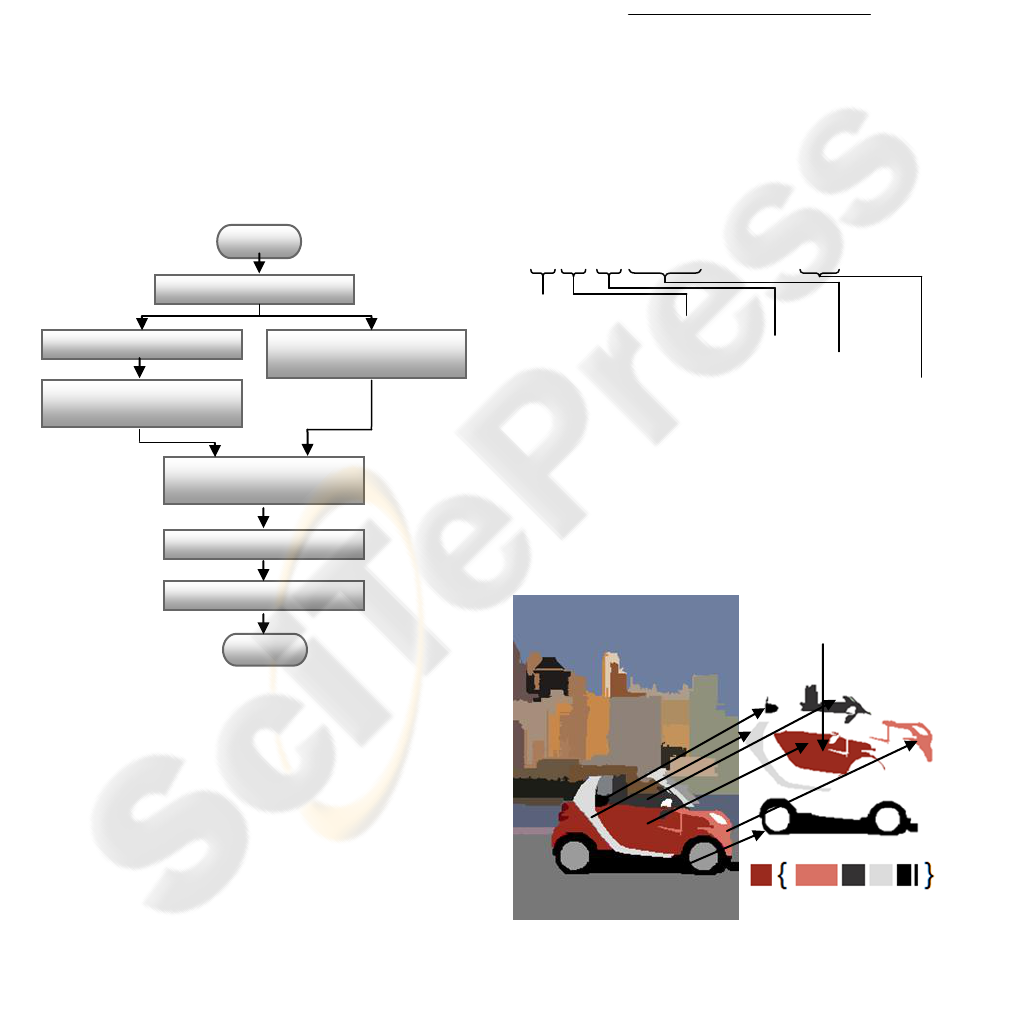

Figure 3: Flow chart of the proposed CCL algorithm.

Most connected component labelling (CCL) methods in use today are designed for binary and grey scale images (Wu et al., 2009); this research instead proposes a colour CCL algorithm based on image segmentation. Figure 3 shows the flow chart of the proposed CCL algorithm.

VISAPP 2010 - International Conference on Computer Vision Theory and Applications

The first step of the algorithm segments the image. In the segmented image, each segment is filled with a random but unique colour, since colour processing is not part of the ISA. The image is then scanned line by line in raster order, from top to bottom and left to right. When a new object (identified by a colour change) is encountered, the algorithm assigns it a new label (the lowest unassigned numerical value) and traces along its contour in the clockwise direction until it reaches the starting point of the trace; while tracing, all pixels of that contour are given the same label. Pixels that do not start a new object receive the existing label, and the scan continues until the end of the image is reached.
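A labelling of this kind can be sketched in a few lines. Note that this sketch swaps in a simpler technique: instead of the clockwise contour tracing described above, it uses a breadth-first flood fill to give every 4-connected region of identical segment colour the lowest unassigned label, in raster order. It assumes the segmented image is a list of rows of segment colours; `label_components` is an illustrative name, and the flood fill does not produce the contour that the authors' tracing step yields.

```python
from collections import deque


def label_components(seg_image):
    """Label 4-connected regions of equal segment colour, in raster order."""
    h, w = len(seg_image), len(seg_image[0])
    labels = [[0] * w for _ in range(h)]  # 0 means "not labelled yet"
    next_label = 1
    for y in range(h):
        for x in range(w):
            if labels[y][x]:
                continue  # pixel already belongs to an existing object
            # New object: assign the lowest unassigned label and fill the region.
            colour = seg_image[y][x]
            labels[y][x] = next_label
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not labels[ny][nx]
                            and seg_image[ny][nx] == colour):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


print(label_components([[9, 9, 4], [9, 4, 4], [7, 7, 7]]))
# [[1, 1, 2], [1, 2, 2], [3, 3, 3]]
```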

7 OVERALL SYSTEM

This section describes the overall design of the system and how the techniques discussed previously are applied in indexing an image. Figure 4 shows the overall image processing module.

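Figure 4: Flow chart of the image processing module (steps: Start, Load the Image, Segment image, Convert to dominant colours, Find objects & trace border, Assign dominant colours to segments, Analyse relationships, Build Index, Stop).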

Segment Image: Segments the image using the ISA

discussed previously.

Convert to Dominant Colours: converts a copy of the image to dominant colours by replacing each pixel with the closest colour of the dominant colour palette (Section 5).
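The conversion step can be sketched as a nearest-neighbour search in CIELab. The sketch assumes the pixels have already been converted to Lab triples and that "closest" means smallest Euclidean (CIE76) distance; `quantise` and the three-colour palette are hypothetical.

```python
import math


def quantise(lab_image, palette):
    """Map each CIELab pixel to the index of its nearest palette colour (CIE76)."""
    def nearest(pixel):
        return min(range(len(palette)), key=lambda i: math.dist(pixel, palette[i]))

    return [[nearest(pixel) for pixel in row] for row in lab_image]


palette = [(0, 0, 0), (100, 0, 0), (50, 80, 67)]  # hypothetical 3-colour palette
image = [[(5, 1, 1), (95, 0, 0)], [(52, 70, 60), (0, 0, 0)]]
print(quantise(image, palette))  # [[0, 1], [2, 0]]
```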

Find Objects and Trace Border: executed with

help from the proposed CCL algorithm.

Assign Dominant Colours to the Segments: using the dominant colour image as a reference, each segment derived by the ISA is assigned a dominant colour. For each segment, the corresponding pixel values in the dominant colour image are scanned, and the colour with the highest pixel count is assigned as the dominant colour of that segment.
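The majority vote described above can be sketched with a counter per segment. The sketch assumes two aligned grids (segment ids and palette indices); the tie-breaking rule is an assumption, since the paper does not say how ties are resolved, and `assign_dominant_colours` is an illustrative name.

```python
from collections import Counter, defaultdict


def assign_dominant_colours(segment_labels, colour_indices):
    """Give each ISA segment the palette colour that covers most of its pixels."""
    votes = defaultdict(Counter)
    for seg_row, col_row in zip(segment_labels, colour_indices):
        for seg, col in zip(seg_row, col_row):
            votes[seg][col] += 1
    # most_common(1) returns the colour with the highest pixel count; ties go to
    # the colour encountered first in raster order.
    return {seg: counts.most_common(1)[0][0] for seg, counts in votes.items()}


segments = [[1, 1, 1, 2]]
colours = [[7, 7, 3, 9]]
print(assign_dominant_colours(segments, colours))  # {1: 7, 2: 9}
```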

Analyse Relationships: to find the objects surrounding a given object, its contour is traced in search of adjoining colour regions. While tracing the contour, the IDs of adjoining objects and the number of pixels along each shared boundary are stored. The relationship between two adjoining objects is derived by:

$$\text{Relationship} = \frac{\text{Pixels of the common boundary}}{\text{Length of the contour}} \tag{1}$$

This relationship value is stored as an attribute in

the final index as illustrated in Figure 5 to represent

the spatial relationships between adjoining image

segments.
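Eq. (1) can be computed directly from a label grid, as in the sketch below. Several details are assumptions, because the paper does not define them precisely: 4-connectivity; a contour pixel is an object pixel with at least one 4-neighbour outside the object or outside the image; and the common boundary with a neighbour is the number of contour pixels touching that neighbour. Under these assumptions one contour pixel can touch several neighbours, so an object's relationship values may sum to more than 1. `relationships` is an illustrative name.

```python
from collections import Counter, defaultdict


def relationships(labels):
    """Return {object: {neighbour: relationship}} following Eq. (1)."""
    h, w = len(labels), len(labels[0])
    contour_len = Counter()        # contour length (in pixels) of each object
    shared = defaultdict(Counter)  # shared[obj][nb]: contour pixels touching nb
    for y in range(h):
        for x in range(w):
            obj = labels[y][x]
            touching, on_contour = set(), False
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if not (0 <= ny < h and 0 <= nx < w):
                    on_contour = True  # pixel lies on the image border
                elif labels[ny][nx] != obj:
                    on_contour = True
                    touching.add(labels[ny][nx])
            if on_contour:
                contour_len[obj] += 1
                for nb in touching:
                    shared[obj][nb] += 1
    return {obj: {nb: n / contour_len[obj] for nb, n in shared[obj].items()}
            for obj in contour_len}


print(relationships([[1, 1, 2], [1, 1, 2]]))  # {1: {2: 0.5}, 2: {1: 1.0}}
```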

Build Index: Finally, the index is constructed with

the colour of the object followed by its relationships

to the colours of surrounding objects in a formatted

string as shown in Figure 5.
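The string format of Figure 5 can be produced as sketched below: each object contributes its dominant colour followed by `{colour:relationship,...}` for its neighbours, and objects are joined with `&`. Sorting neighbours by decreasing relationship, rounding to one decimal place and summing the values of neighbours that share a dominant colour are assumptions made for this sketch; `build_index` is a hypothetical name.

```python
from collections import defaultdict


def build_index(colour_of, rels):
    """colour_of: {object: palette index}; rels: {object: {neighbour: value}}."""
    parts = []
    for obj in sorted(colour_of):
        by_colour = defaultdict(float)
        for nb, value in rels.get(obj, {}).items():
            by_colour[colour_of[nb]] += value  # assumption: merge same-colour neighbours
        ordered = sorted(by_colour.items(), key=lambda cv: (-cv[1], cv[0]))
        inner = ",".join(f"{colour}:{value:.1f}" for colour, value in ordered)
        parts.append(f"{colour_of[obj]}{{{inner}}}")
    return "&".join(parts)


colour_of = {1: 12, 2: 15, 3: 19}
rels = {1: {2: 0.5, 3: 0.3}, 2: {1: 1.0}, 3: {1: 1.0}}
print(build_index(colour_of, rels))  # 12{15:0.5,19:0.3}&15{12:1.0}&19{12:1.0}
```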

Figure 5: A sample string index.

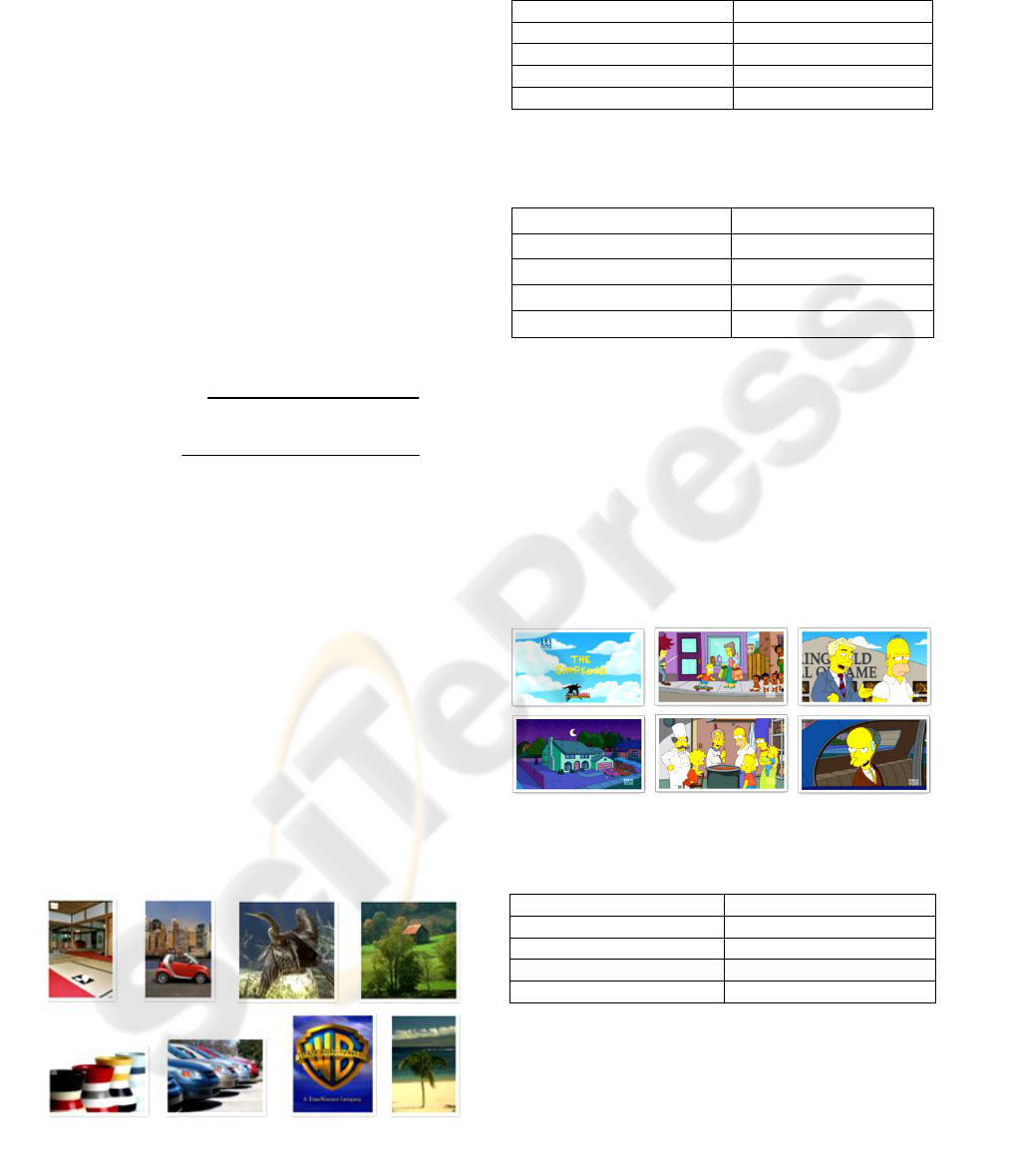

Figure 6 illustrates a high-level example of how an image is segmented and indexed. The rightmost section of the figure shows how the index is represented for the dark red door of the car.

Figure 6: An illustration of how neighbouring image

segments are processed in relation to a particular segment.


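Index of the red door (Figure 6): 12{15:0.4,21:0.3,18:0.3,8:0.2,9:0.1}

Annotated sample index (Figure 5): 12{15:0.5,19:0.3,36:0.2}&16{}&22{}.. where 12 is the colour of the object, 15 is the colour of a surrounding object, 0.5 is the relationship, 19:0.3 is the next surrounding object and &16{} begins the next main object.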


8 IMAGE RETRIEVAL AND EXPERIMENTAL RESULTS

This research mainly focused on implementing a new indexing scheme for searching and retrieving images based on a complete or a partial query image. As a result, a new string index similar to the representation illustrated in Figure 6 was introduced.

Once a query image is given, an index is generated for it as detailed in Section 7. The generated index is then compared against the other indices in the database to find the number of matched image segments. An image segment is considered matched if its string representation (the dominant colour followed by the surrounding dominant colours and their relationships) matches. The image(s) with the highest matching accuracy are retrieved as the query result(s).

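$$\text{MatchingAccuracy} = \frac{\text{No. of matched image segments}}{\text{Total no. of segments in the image}} \tag{2}$$

$$\text{QueryImageSize} = \frac{\text{No. of segments in the partial image}}{\text{No. of segments in the complete image}} \tag{3}$$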
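Eqs. (2) and (3) and the retrieval rule can be sketched on string indices of the form shown in Figure 5. The sketch assumes that segments are compared as exact strings, counted as a multiset, and that the denominator of Eq. (2) is the segment count of the stored image; these choices and the names `matching_accuracy`, `query_image_size` and `retrieve` are illustrative.

```python
from collections import Counter


def segment_strings(index):
    """Split a string index such as '12{15:0.5}&16{}' into per-segment strings."""
    return [part for part in index.split("&") if part]


def matching_accuracy(query_index, stored_index):
    """Eq. (2): matched segments / total segments of the stored image (assumed)."""
    query = Counter(segment_strings(query_index))
    stored = Counter(segment_strings(stored_index))
    matched = sum((query & stored).values())  # multiset intersection of exact strings
    return matched / sum(stored.values())


def query_image_size(partial_index, complete_index):
    """Eq. (3): segments in the partial image / segments in the complete image."""
    return len(segment_strings(partial_index)) / len(segment_strings(complete_index))


def retrieve(query_index, database):
    """Return the names of the stored images with the highest matching accuracy."""
    scores = {name: matching_accuracy(query_index, idx) for name, idx in database.items()}
    best = max(scores.values())
    return [name for name, score in scores.items() if score == best]


database = {"car": "12{15:0.5}&16{}&22{}", "beach": "30{31:1.0}&31{30:0.4}"}
print(retrieve("12{15:0.5}&16{}", database))  # ['car']
```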

The proposed system was tested using three different image sets:

1. 50 randomly selected images obtained from Google Image Search.
2. Screenshots taken from "The Simpsons" cartoon series.
3. 60 images from the Corel image database, pre-classified by 30 human subjects.

Experimental results showed that the matching accuracy for unrelated images averaged around 0.2 or below. Tables 1 and 2 list the results obtained on the first image set with different values of σ. These results indicate that the technique produces satisfactory results for partial image queries if the query image contains at least 50% of the original content; below that, matching accuracies of 0.30 or less overlap with the scores of unrelated images.

Figure 7: Sample images of Set 1.

Table 1: Search results for a set of 50 randomly selected Google images (σ = 0.6, k max = 1/150th of the image, k min = 1/300th of the image).

| Query image size | Matching accuracy |
|---|---|
| 100% | ~1.00 |
| 75% to 100% | 0.45 to 0.55 |
| 50% to 75% | 0.30 to 0.45 |
| 25% to 50% | 0.10 to 0.30 |

Table 2: Search results for a set of 50 randomly selected Google images (σ = 0.3, k max = 1/150th of the image, k min = 1/300th of the image).

| Query image size/content | Matching accuracy |
|---|---|
| 100% | ~1.00 |
| 75% to 100% | 0.35 to 0.50 |
| 50% to 75% | 0.20 to 0.35 |
| 25% to 50% | 0 to 0.30 |

The disparity between the results in Tables 1 and 2 indicates that the matching accuracy depends heavily on the image segmentation algorithm (ISA) and its parameters. Table 3 lists the results obtained on the second dataset. Since cartoon images are relatively simple and have a uniform colour distribution, the image segmentation algorithm performs well, and the matching accuracy is correspondingly higher. This is further evidence of the proposed method's dependence on the image segmentation algorithm.

Figure 8: Sample images of Set 2.

Table 3: Search results for cartoon images (σ = 0.6, k max = 1/150th of the image, k min = 1/300th of the image).

| Query image size/content | Matching accuracy |
|---|---|
| 100% | 1.00 |
| 75% to 100% | 0.55 to 0.65 |
| 50% to 75% | 0.30 to 0.55 |
| 25% to 50% | 0.10 to 0.30 |

We also tested the system's image classification ability using the 60 pre-classified images. The test images had fairly uniform colour relationships within each group. The classification accuracy of each category N is calculated by averaging the matching accuracy of each image against all n images in the category.

Figure 9: Sample images of Set 3.

$$\text{ClassificationAcc}_N = \frac{\sum_{i=1}^{n} \text{MatchingAccWith}_i}{n} \tag{4}$$

Table 4: Image classification accuracy (σ = 0.3, k max = 1/150th of the image, k min = 1/300th of the image).

| Category | Classification accuracy |
|---|---|
| 1 | 0.75 |
| 2 | 0.65 |
| 3 | 0.80 |
| 4 | 0.40 |
| 5 | 0.50 |
| 6 | 1.00 |
| Overall | 0.68 |
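Eq. (4) is a plain average, which the sketch below applies in two ways: within a category to matching accuracies, and, as an assumption about how the "Overall" row was obtained, across the six category values of Table 4. The unweighted mean of those six values reproduces the reported 0.68. `classification_accuracy` is an illustrative name.

```python
def classification_accuracy(matching_accuracies):
    """Eq. (4): mean of the matching accuracies within one category."""
    return sum(matching_accuracies) / len(matching_accuracies)


table4 = [0.75, 0.65, 0.80, 0.40, 0.50, 1.00]  # per-category values from Table 4
print(round(classification_accuracy(table4), 2))  # 0.68
```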

Even though image classification was not an objective of the original research project, the experimental results suggest that the proposed method has the potential to classify images when there are common colour relationships within groups of images.

9 FUTURE ENHANCEMENTS

As discussed earlier, the performance of the ISA is a main limitation; therefore a better ISA could improve the overall accuracy. The indexing scheme proposed in this study is relatively simple; even though the results are promising, incorporating additional parameters could improve its efficiency further. Optimizing the colour palette generation and incorporating newer standards for the CIELab colour space may also yield better results.

10 CONCLUSIONS

The aim of this study was to explore the possibility of using high-level objects (image segments) with dominant colours and spatial relationships to enhance the efficiency of CBIR, especially with regard to partial image matching. The experimental results are a strong indication of the viability of this approach.

REFERENCES

Chang, S. & Hsu, A. (1992). "Image information systems: where do we go from here?". IEEE Transactions on Knowledge and Data Engineering, Vol. 5, No. 5, 431-442.

Chen, Y. & Wong, E. (1999). "Augmented image histogram for image and video similarity search". Proceedings of the SPIE (Society of Photo-Optical Instrumentation Engineers) Conference on Storage and Retrieval for Image and Video Databases.

Eakins, J. & Graham, M. (1999). "Content-based Image Retrieval". University of Northumbria at Newcastle, Library and Information Briefings, Bulletin Board for Libraries Journal.

Felzenszwalb, P. & Huttenlocher, D. (2004). "Efficient Graph-Based Image Segmentation". International Journal of Computer Vision, 59, 2, 167-181.

Hiremath, P. & Jagadeesh, P. (2007). "Content Based Image Retrieval Using Color, Texture and Shape Features". Proceedings of the 15th International Conference on Advanced Computing and Communications, 780-784.

Huang, J., Kumar, S., Mitra, M., Zhu, W. & Zabih, R. (1997). "Image indexing using color correlograms". IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 762-768.

Liu, Y., Zhang, D., Lu, G. & Ma, W. (2007). "A survey of content-based image retrieval with high-level semantics". Pattern Recognition, 40, 262-282.

Pantofaru, C. & Hebert, M. (2005). "A Comparison of Image Segmentation Algorithms". Technical report, Robotics Institute, Carnegie Mellon University.

Shih, T., Huang, J., Wang, C., Hung, J. & Kao, C. (2001). "An Intelligent Content-based Image Retrieval System Based on Colour". Proceedings of the National Science Council, 25, 4, 232-243.

Swain, M. & Ballard, D. (1991). "Color indexing". International Journal of Computer Vision, 7, 1, 11-32.

Venters, C. & Cooper, M. (2001). "A Review of Content-Based Image Retrieval Systems". Joint Information Systems Committee. England: JISC.

Wu, K., Otoo, E. & Suzuki, K. (2009). "Optimizing two-pass connected-component labelling algorithms". Pattern Analysis and Applications, 12, 117-135.
