A CONTEXT-AWARE ADAPTATION SYSTEM FOR SPATIAL AUGMENTED REALITY PROJECTIONS

Anne Wegerich, Julian Adenauer

Research Training Group prometei, Technische Universität Berlin, Germany

Jeronimo Dzaack, Matthias Roetting

Department of Human-Machine-Systems, Technische Universität Berlin, Germany

Keywords:

Spatial Augmented Reality, Projection systems, Context–aware user adaptation, User interfaces.

Abstract:

To cover three-dimensional information spaces, stationary or spatial Augmented Reality (sAR) systems involve installed projection systems, head-up displays, and other displays. Information presentation techniques for sAR therefore face three basic questions: which form, which screen position, and which physical location the information should have in 3D space. This paper introduces an approach and presents the details of a corresponding system that concentrates on the location problem and the appropriate visualization adaptation. It manages the information presentation under physical occlusions and difficult light conditions of sAR floor projections using a light sensor matrix and connected software for low-level and high-level context integration. By changing the size, position, and orientation of the projection area and the content of the presented information, it implements a context-aware adaptation system for sAR.

1 INTRODUCTION

Augmented Reality (AR), the augmentation of the en-

vironment with virtual, computer generated informa-

tion, is used in many different areas. If the user is re-

quired to move in large areas, he or she needs to carry

a mobile presentation device or wear a head-mounted

display (HMD). But whenever the user's movements are restricted to a small, controlled area, this burden can be eased by realizing spatial or stationary AR (sAR).

Whereas in mobile AR the information is typi-

cally presented on only one display device, sAR sys-

tems use many displays distributed in a small 3D

space. This bears the danger that the user is confronted with incoherent and/or redundant information because many displays, or segregated parts of displays, are used to show a single piece of information. To avoid this, a central system is required to automate the selection and optimization of the information that is presented to the user.

Besides the display problems of where on the screen and in which form the sAR information should be presented (view and presentation management), the main question, especially for sAR, is: where (i.e. on which display or projection area) should the information be presented physically in a room (display management)? Furthermore, the definition of AR by Azuma et al. (2001) requires that the virtual information be combined with the real world in real time and that it have a content-related connection to the point where it is presented (spatial registration).

Altogether, information presentation with (s)AR display technologies needs to attend to physical problems like edges, gaps, overlaps of two or more projections, and occlusions without losing spatial registration as a content-related requirement (Fig. 1).

Figure 1: Gaps and overlappings of three projections (gray)

in a room with particular occlusion (shadow and white col-

umn).

In this paper we address occlusions and we present

Wegerich A., Adenauer J., Dzaack J. and Roetting M. (2010).

A CONTEXT–AWARE ADAPTATION SYSTEM FOR SPATIAL AUGMENTED REALITY PROJECTIONS.

In Proceedings of the 7th International Conference on Informatics in Control, Automation and Robotics, pages 98-103

Copyright © SciTePress

an sAR system that handles the presence of occluding objects and related light conditions (shadows or reflections) with regard to the AR definition. More precisely, the system manages the context-aware recalculation of the location, size, orientation, and visualization of projected information if a physical object (e.g. the user) occludes it.

After reviewing related work on display management and the measurement of environmental context information, we present the proposed system in Sections 3 and 4. Afterwards, we explain the purpose of the software and how it will be integrated in the further development of an sAR smart home system.

2 DISPLAY MANAGEMENT AND MEASUREMENT OF ENVIRONMENTAL CONTEXT

Several solutions exist for recognizing overlaps and closing gaps in projected sAR scenarios. For example, Pinhanez (2001) presents a projector combined with a movable mirror, so that the presentation follows the user. Other rotatable projectors are suggested by Ehnes et al. (2004) and Ehnes and Hirose (2006). These projectors offer only one screen that moves and does not produce any gap or overlap. To solve the problem of roaming between different immovable projection systems, these authors also designed an architecture that handles overlaps by selecting the best possible projection system (Ehnes et al., 2005). However, they did not propose a solution for physical occlusions.

For the integration of high-level context a lot of

AR applications are based on image processing sys-

tems which are able to recognize states of the en-

vironment or the user. This is usually used to pro-

vide automated user support in communication, work,

or information processing. In AR applications this

recognition is very important but often limited to spe-

cial areas like city navigation or guidance in muse-

ums. However, an approach for a user-adaptation

with high-level context integration is missing. Espe-

cially for 3D information spaces new research results

give evidence that information visualization must be

adapted for different 3D presentation depths and op-

timal information perception of proposed virtual dis-

tances and perspectives (Drascic and Milgram, 1996;

Jurgens et al., 2006; Herbon and Roetting, 2007).

Separate from high-level context, the registration of environmental (or low-level) context is a well-investigated field of research. Current research therefore focuses on new applications and combinations of them to facilitate the development of new technologies, especially in the scope of human-computer interaction (HCI). Hence, there is a large number of applications in which environmental context data is collected with sensors. Especially for popular social applications, a lot of psychophysiological data is measured to enable the automated recognition of the user's emotional states. Such systems concentrate, for example, on e-learning platforms (Karamouzis and Vrettos, 2007) and on indirect or direct interaction in multimedia applications like web pages, virtual communities, and games (Ward and Marsden, 2003; Kim et al., 2008; Mahmud et al., 2007). Particularly in mixed reality games, the use of low-level context is a popular approach because it connects the real and the virtual world for the player (Romero et al., 2004).

For these and other upcoming technologies ana-

lyzing context information and integrating it to de-

velop adaptable systems has led to context ontology

models which are generic or domain specific and al-

low the standardized use of context information and

the development of associated system or software ar-

chitectures (Chaari et al., 2007).

With the presented approach we combine these

context models with user-centred adaptation tech-

niques for sAR information visualization. With this

we want to optimize sAR systems, make them more

useful, and establish generic models for context inte-

gration.

3 SAR SYSTEM SETUP

The system is part of the development of an sAR smart home environment which involves a number of different sAR devices: projection systems, video see-through displays, and head-up displays (Bimber and Raskar, 2005).

The proposed system is mainly based on an array

of light sensors on the floor to control the position,

size, and orientation of a projection. The sensors mea-

sure light conditions in the environment (low-level

context information). A connected software module

manages the context-related adaptation of the infor-

mation visualization based on the feedback of the re-

calculated presentation and sends the resulting image

to a projector. The proposed low-level change of the projection is thus recalculated if the new projection has become ambiguous in the current context. For example, the support for a user searching for an object could consist of showing a map with a target marker. If the projection area (location) for the map presentation has to

be changed because of difficult light conditions, the size and orientation, or even the whole visualization type, of the new map also have to be adapted, because the first change could have made it incomprehensible for the user, e.g. because of his or her current perspective.

The complexity of content-related changes increases if high-level context information is taken into account. If a projection area has to be changed, the content adaptation software may have to present the same information with another visualization (content) to facilitate its perception. This depends on many requirements determined by the abilities of the user (background knowledge, cognitive capacity, experience, etc.) and the context. In the map example, this may mean changing the visualization from a map to an arrow because the user is currently distracted and can only process simple visualizations in his or her peripheral vision. The presented system thus relates to a complex network of decisions. Altogether, the software has to automatically provide the right information presentation technique at the right position for the conditions the context provides.

The system we introduce in this paper is the first step towards full home automation and support with sAR displays. The hardware of the presented system is a proof of concept and is therefore limited to a solution that includes one projector and a small projection area. The framework is, however, extendable to larger projection areas and sensor arrays.

4 CONTEXT-AWARE PRESENTATION SYSTEM

The task of the system we developed is to analyze

lighting conditions on the floor of a room to make

this surface usable for an sAR output of a projector

that is installed above it. Thus, it solves a part of

the problem to add information everywhere in a three-

dimensional space without losing the relevant content

of it and important parts of its formal representation.

Furthermore, the system provides the possibility to

adapt the visualization in terms of HCI criteria and

the upcoming research of three-dimensional percep-

tion.

The system consists of a light sensor matrix that

is integrated in a PVC floor coating, a connected mi-

crocontroller board which is connected to a PC, and a

Java-based software. The size of the PVC floor coa-

ting and the sensor array is a proof of concept and

could be extended for larger rooms and projections.

The presented system is able to change the size and position of the projection area to the best area that is still available. This is the first step towards adapting the presented content, which depends on the resulting distance of the information to the user and additionally on the properties of other sAR devices at a similar distance, the type of device, its orientation, the type of information to be presented, and many other context requirements.

After collecting information about the physical

context (occlusions or lighting conditions) the soft-

ware first evaluates possible projection areas and se-

lects one. Secondly it has to access the properties

of the selected area and analyzes further high-level

context information to change the visualization of the

content if needed.

4.1 Installation

The system consists of a sensor array for brightness

measuring in a certain physical space and a micro-

controller. It converts the analogue sensor data and

sends it to a connected PC which controls the output

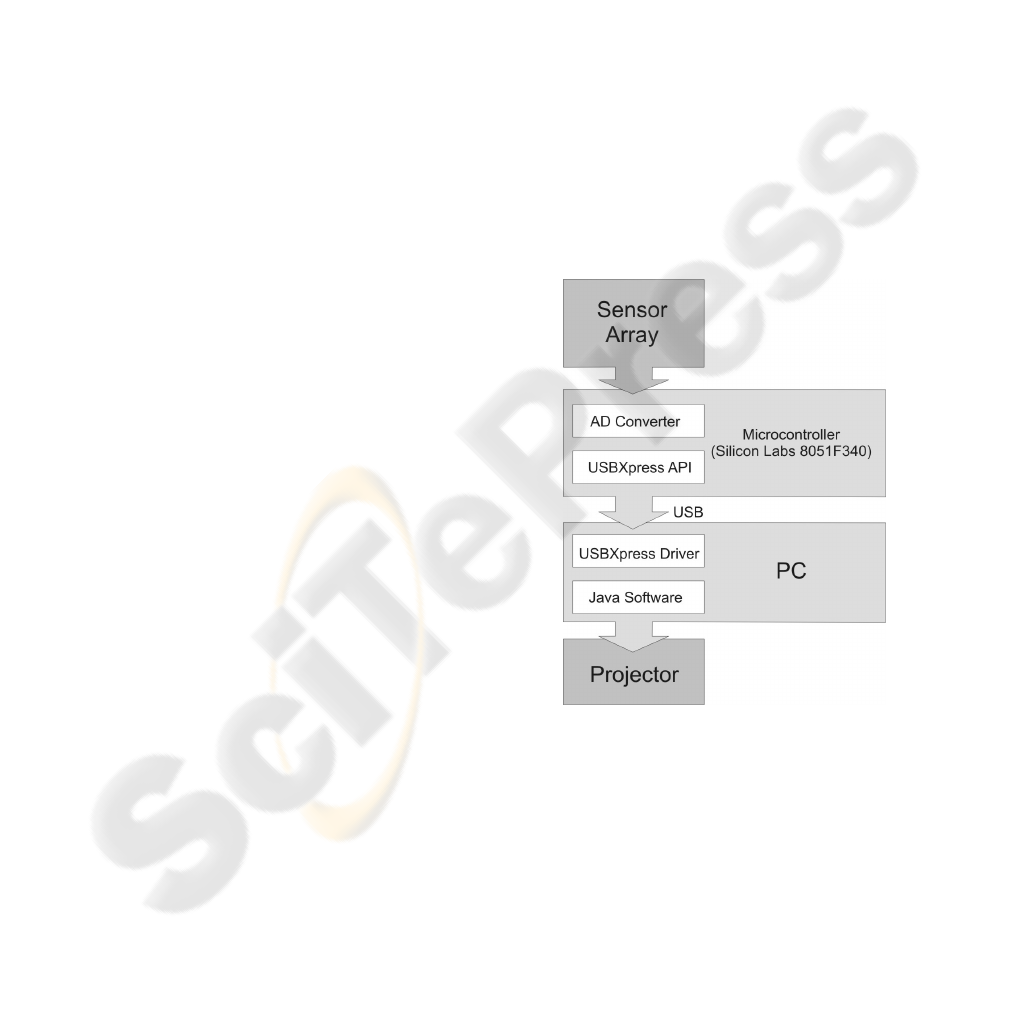

of a projector (see Figure 2).

Figure 2: Schema of the context–aware presentation sys-

tem.

A 0.75 m × 1 m PVC floor coating is divided into twelve squares, and a projector is placed 2.5 m above it. The projector is connected to a PC and projects corresponding squares on the floor. In the middle of each square, a light sensor of type AMS104Y from Panasonic's NaPiCa series is set. The sensor features a linear output and a built-in optical filter for a spectral response similar to that of the human eye.

Each sensor is connected to an input of a micro-

controller. The voltage over the resistor depends on

ICINCO 2010 - 7th International Conference on Informatics in Control, Automation and Robotics

the sensor’s photocurrent and is therefore directly de-

pendent on the amount of light on the sensor. This

voltage is being measured by a 10 bit analogue–to–

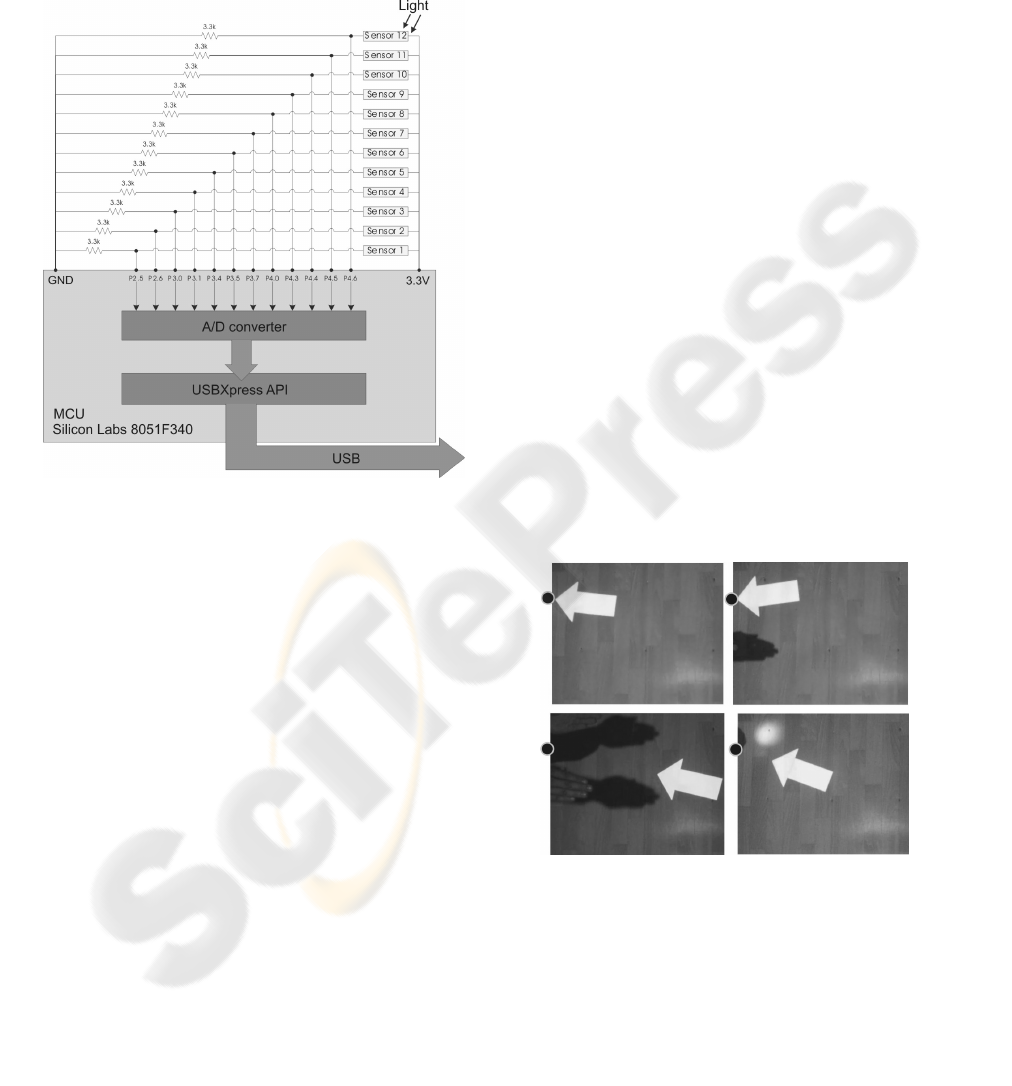

digital converter (ADC) which is part of the micro-

controller (see Figure 3 for more detail on the sensor

matrix).

Figure 3: Diagram of the components and connections of

the sensor matrix.
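
To illustrate the conversion step, the following Java sketch maps a raw 10-bit ADC count back to a voltage and to a normalized brightness value. The class name `AdcReading`, its methods, and the 3.3 V reference voltage are assumptions made for this example; the paper does not state the reference voltage or any software interface.

```java
/** Hypothetical helper that interprets raw counts from a 10-bit ADC. */
final class AdcReading {
    static final int MAX_COUNT = (1 << 10) - 1; // 1023, the largest 10-bit value
    static final double V_REF = 3.3;            // assumed reference voltage in volts

    private AdcReading() {}

    /** Converts a raw ADC count (0..1023) to the measured voltage. */
    static double toVolts(int count) {
        return V_REF * toNormalized(count);
    }

    /** Scales a raw ADC count to the range 0.0 (no signal) .. 1.0 (full scale). */
    static double toNormalized(int count) {
        if (count < 0 || count > MAX_COUNT) {
            throw new IllegalArgumentException("count out of 10-bit range: " + count);
        }
        return (double) count / MAX_COUNT;
    }
}
```

Because the sensor output is described as linear, the normalized value can be treated as proportional to the amount of light on the sensor, which is all the later threshold comparison needs.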

The microcontroller we used is an 8051F340 from

Silicon Labs. It features a built–in analogue multi-

plexer for the ADC, enabling the controller to con-

vert analogue voltages from 20 input pins. Moreover,

it has an onboard USB controller which can be used

with Silicon Labs’ USBXpress API for easy USB im-

plementation on client and host side. Via this USB

interface the controller board sends the digital sensor

values to a PC.

The PC runs software that reads the converted sensor data from the USB port. These values are compared to predefined minimum and maximum values. A value below the minimum means that the sensor is covered, so nothing should be projected there. A value above the maximum, on the other hand, means that there is too much light on the area and the reduced contrast prevents a legible projection. Therefore, only squares (one square per sensor) with light values in the range between minimum and maximum are considered for displaying information.

From the squares that are in range, the program selects those that form the largest coherent rectangular area according to an implemented hierarchy. Information can then be displayed in the resulting area.
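
The threshold test and the area selection described above can be sketched in Java as follows. This is a minimal illustration, not the authors' implementation: the class `ProjectionAreaSelector`, the 3 × 4 grid layout, the inclusive thresholds, and the tie-breaking rule (the first largest rectangle found in a row-by-row scan) are all assumptions, since the paper does not specify the "implemented hierarchy".

```java
/** Hypothetical sketch: picks the largest rectangle of usable floor squares. */
final class ProjectionAreaSelector {

    /** Rectangle in grid coordinates: top-left square plus extent in squares. */
    static final class Area {
        final int row, col, rows, cols;
        Area(int row, int col, int rows, int cols) {
            this.row = row; this.col = col; this.rows = rows; this.cols = cols;
        }
        int size() { return rows * cols; }
    }

    /** A square is usable when its reading lies within [min, max] (inclusive by assumption). */
    static boolean[][] usable(int[][] readings, int min, int max) {
        boolean[][] ok = new boolean[readings.length][];
        for (int r = 0; r < readings.length; r++) {
            ok[r] = new boolean[readings[r].length];
            for (int c = 0; c < readings[r].length; c++) {
                ok[r][c] = readings[r][c] >= min && readings[r][c] <= max;
            }
        }
        return ok;
    }

    /** Brute-force search, adequate for twelve squares; returns null if no square is usable. */
    static Area largest(boolean[][] ok) {
        Area best = null;
        int gridRows = ok.length;
        int gridCols = gridRows == 0 ? 0 : ok[0].length;
        for (int r = 0; r < gridRows; r++)
            for (int c = 0; c < gridCols; c++)
                for (int h = 1; r + h <= gridRows; h++)
                    for (int w = 1; c + w <= gridCols; w++) {
                        int bestSize = best == null ? 0 : best.size();
                        // Only strictly larger rectangles replace the current best.
                        if (h * w > bestSize && allUsable(ok, r, c, h, w)) {
                            best = new Area(r, c, h, w);
                        }
                    }
        return best;
    }

    private static boolean allUsable(boolean[][] ok, int r, int c, int h, int w) {
        for (int i = r; i < r + h; i++)
            for (int j = c; j < c + w; j++)
                if (!ok[i][j]) return false;
        return true;
    }
}
```

With one square too bright in the top row and one covered square in the bottom-left corner, this sketch selects the 2 × 3 block in the lower right of the grid. An exhaustive search is a deliberate simplification; a larger sensor matrix would call for a more efficient maximal-rectangle algorithm.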

4.2 Functionality

The overall goal of the software component is the adaptation of the projected superimposition with regard to low-level and high-level data integration. It therefore incorporates two steps of projection recalculation: low-level and high-level data-related adaptation (currently only the first part is implemented). The data is processed in real time, so the projected information is always in a visible position, apart from a very small delay after the sensor data request.

In a first step the software calculates the most

appropriate size, position, and orientation for a pro-

jection that can be used to present text, icons, or (ren-

dered) images. It is made up of directly connected

projection squares whose sensors are not in a shadow

or in a direct light reflection. Then the new proposed

projection is adapted to the unchanged orientation of

the target (a text reading person or target position of

a pointing projection, etc.). After this step the infor-

mation has the correct orientation and is displayed in

the best possible projection area for the target (e.g.

the user). Figure 4 demonstrates this first adaptation step of the system with an arrow pointing at a designated target (red). This arrow is only one possible usage of a projection area.

Figure 4: Examples of resulting projections (low-level recalculation) when sensors are covered or bright light falls on them. The arrow always points in the target direction (represented by a black dot).
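
How an arrow could be re-oriented after the projection area has moved can be sketched in Java as below. The class `ArrowOrientation`, the 0.25 m square side (inferred from a 0.75 m × 1 m floor split into a 3 × 4 grid), and the coordinate convention (x along columns, y along rows, angles counter-clockwise from the +x axis) are assumptions for illustration only.

```java
/** Hypothetical sketch: orients an arrow from the projection area towards a target. */
final class ArrowOrientation {
    static final double SQUARE_SIDE_M = 0.25; // assumed side length of one floor square

    private ArrowOrientation() {}

    /** Centre {x, y} in metres of a rectangle given in grid squares. */
    static double[] centre(int row, int col, int rows, int cols) {
        double x = (col + cols / 2.0) * SQUARE_SIDE_M;
        double y = (row + rows / 2.0) * SQUARE_SIDE_M;
        return new double[] { x, y };
    }

    /** Angle in degrees from the centre towards the target, measured from the +x axis. */
    static double angleDegrees(double[] centre, double targetX, double targetY) {
        return Math.toDegrees(Math.atan2(targetY - centre[1], targetX - centre[0]));
    }
}
```

Recomputing the angle from the centre of the newly selected area keeps the arrow pointing at the same physical target even though the arrow itself has moved on the floor.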

The second part of the software uses the feedback

signals about the proposed projection area and the

current information visualization. It analyzes high-

level data (context information about the user, his dis-

tance to the projection, current state of the needed in-

formation, etc.) to recalculate whether the visualiza-

tion is still optimal for the user or not. If it is not, the

visualization changes to an alternative image or text

for a better understanding. Figure 5 shows an example of the proposed reaction of the system. The resulting visualization for a navigational hint in this case is a map instead of an arrow. We based this approach on findings from an indoor navigation experiment we conducted (Wegerich et al., 2009). In this experiment, projected maps were rated higher and led to better performance when the target position was not visible to the user.

Figure 5: Example of an adaptation scenario with two steps of visualization recalculation: a) the target and the starting point of the user; b) first step of adaptation, where the projection area, size, and orientation change to the squares still available without light reflection and to the correct direction (low-level data integration); c) second step of adaptation, where the visualization is changed to a map which shows the unambiguous target position.
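
Since the high-level step is described as not yet implemented, the following Java sketch only illustrates what such a decision rule might look like. The class `VisualizationChooser`, the two context flags, and the priority of the rules are assumptions; the rules themselves paraphrase the two cases mentioned in the text (a map when the target is not visible, an arrow when the user is distracted).

```java
/** Hypothetical sketch of a rule-based choice between two visualizations. */
final class VisualizationChooser {

    enum Visualization { ARROW, MAP }

    /** Assumed high-level context snapshot; a real context model would hold far more. */
    static final class UserContext {
        final boolean targetVisible; // can the user see the target from the current position?
        final boolean distracted;    // is the user only able to process simple visualizations?
        UserContext(boolean targetVisible, boolean distracted) {
            this.targetVisible = targetVisible;
            this.distracted = distracted;
        }
    }

    private VisualizationChooser() {}

    static Visualization choose(UserContext ctx) {
        // Assumed priority: a distracted user always gets the simpler arrow.
        if (ctx.distracted) {
            return Visualization.ARROW;
        }
        // Otherwise a map is preferred whenever the target itself is out of sight.
        return ctx.targetVisible ? Visualization.ARROW : Visualization.MAP;
    }
}
```

A fixed rule order like this is only a placeholder for the richer context model and expert system the paper proposes as future work.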

5 DISCUSSION

The proposed system component for the adaptation of floor projections is a proof of concept. We presented a solution for the problem of occlusions, one of the physical issues of information presentation with sAR. The sensor matrix is not limited to the presented size and number of sensors. A higher resolution could be achieved by integrating more light sensors. Furthermore, this would make it possible to change the projection area not only to other rectangular forms but also to a more adaptive shape of the presented information, which we are working on in the smart home scenario.

A higher resolution can also be achieved with cameras, which are very common in AR applications. In most cases they are integrated in the ceiling or in the upper edges of a room. Image processing software is then needed to resolve perspectives and occlusions that only the camera sees, but not the user. When a user lifts an arm and can still see the same area on the floor, a camera system would change the projection area because it has difficulty determining how far above the floor the occlusion is. The advantage of floor-based sensor matrices is therefore that they measure the conditions at the point where the projection will be, with less effort and as fast as a camera-based system.

The presented sAR system component handles

the formal information representation and the display

management but at this point not the optimization of

the content because of its complexity. Other high-

level context information is needed to decide which

form the information should have. This will be the

result of the integration in the intended smart home

system. One of the applications of high-level con-

text integration is e.g. to pay attention to possible fast

moves of a user or to distances the area adaptation

causes. An arrow could be usable in this case but per-

haps a map of the room (and its cabinets) is better if

more detailed location information is needed.

6 FUTURE WORK

With the introduced system the foundation is made

for using context information and properties of three-

dimensional perception. It adapts the information vi-

sualization and furthermore solves parts of the display

management problem of sAR systems. The next step

is the development and evaluation of a more complex

context model that follows specified guidelines for 3D

information presentation and integrates different sAR

devices. The aim is the enhancement of the software

functionality on the basis of this model.

Afterwards, it will be combined with other

context-aware system components which together

form a user-adapted sAR presentation system. The

resulting system will present information in larger 3D

spaces where the form, position, additional interac-

tion parameters, and especially the content is selected

for 3D perception adaptation to the abilities of the

user.

The presented system also solves a technical part of the Ubiquitous Spatial Augmented Reality (UAR) requirement to make information available everywhere in a larger 3D space by using context. This

is an essential aspect of the underlying definition be-

cause of the so achieved connection between the con-

cepts of Ubiquitous Computing and Augmented Re-

ality. In this manner the presentation technology in

a 3D information space needs to be context-aware in

a more continuous way to adapt the presentation for

any location in the room. Furthermore, the underlying context model has to integrate location-based adaptation because the environment may change dynamically. For example, a projective sAR system used for superimpositions on a working surface should handle changing objects or tools and their positions on the surface.

Finally, we want to develop an ontology that de-

scribes and generalizes the automated decision of the

adaptation system. Based on this ontology we will

develop an expert system to manage the rules of per-

ception and cognitive processing of 3D information

presentation in UAR environments and to solve the

high-level context integration. This will make the sys-

tem scalable and adaptable to different use cases and

also for mobile AR display applications.

ACKNOWLEDGEMENTS

We want to thank the DFG, the Department of

Human-Machine Systems Berlin, and prometei for

their support.

REFERENCES

Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier,

S., and MacIntyre, B. (2001). Recent advances in

augmented reality. IEEE Comput. Graph. Appl.,

21(6):34–47.

Bimber, O. and Raskar, R. (2005). Spatial Augmented Re-

ality: Merging Real and Virtual Worlds. A K Peters,

Ltd.

Chaari, T., Ejigu, D., Laforest, F., and Scuturici, V.-M.

(2007). A comprehensive approach to model and use

context for adapting applications in pervasive environ-

ments. J. Syst. Softw., 80(12):1973–1992.

Drascic, D. and Milgram, P. (1996). Perceptual issues in

augmented reality. In Stereoscopic Displays and Vir-

tual Reality Systems III, Proceedings of SPIE, number

2653, pages 123–134.

Ehnes, J. and Hirose, M. (2006). Projected reality - content

delivery right onto objects of daily life. In ICAT, pages

262–271.

Ehnes, J., Hirota, K., and Hirose, M. (2004). Projected aug-

mentation - augmented reality using rotatable video

projectors. In ISMAR ’04: Proceedings of the 3rd

IEEE/ACM International Symposium on Mixed and

Augmented Reality, pages 26–35, Washington, DC,

USA.

Ehnes, J., Hirota, K., and Hirose, M. (2005). Projected

augmentation ii — a scalable architecture for multi

projector based ar-systems based on ’projected ap-

plications’. In ISMAR ’05: Proceedings of the 4th

IEEE/ACM International Symposium on Mixed and

Augmented Reality, pages 190–191, Washington, DC,

USA.

Herbon, A. and Roetting, M. (2007). Detection and process-

ing of visual information in three-dimensional space.

MMI Interactive Journal, 12:18–26.

Jurgens, V., Cockburn, A., and Billinghurst, M. (2006).

Depth cues for augmented reality stakeout. In

CHINZ ’06: Proceedings of the 7th ACM SIGCHI

New Zealand chapter’s international conference on

Computer-human interaction, pages 117–124, New

York, NY, USA. ACM.

Karamouzis, S. T. and Vrettos, A. (2007). A biocybernetic

approach for intelligent tutoring systems. In AIAP’07:

Proceedings of the 25th IASTED International Multi-Conference,

pages 267–271, Anaheim, CA, USA. ACTA Press.

Kim, Y. Y., Kim, E. N., Park, M. J., Park, K. S., Ko, H. D.,

and Kim, H. T. (2008). The application of biosignal

feedback for reducing cybersickness from exposure to

a virtual environment. Presence: Teleoper. Virtual En-

viron., 17(1):1–16.

Mahmud, A. A., Mubin, O., Octavia, J. R., Shahid, S.,

Yeo, L., Markopoulos, P., and Martens, J.-B. (2007).

amazed: designing an affective social game for chil-

dren. In IDC ’07: Proceedings of the 6th international

conference on Interaction design and children, pages

53–56, New York, NY, USA. ACM.

Pinhanez, C. S. (2001). The everywhere displays projector:

A device to create ubiquitous graphical interfaces. In

UbiComp ’01: Proceedings of the 3rd international

conference on Ubiquitous Computing, pages 315–331,

London, UK. Springer-Verlag.

Romero, L., Santiago, J., and Correia, N. (2004).

Contextual information access and storytelling in

mixed reality using hypermedia. Comput. Entertain.,

2(3):12–12.

Ward, R. D. and Marsden, P. H. (2003). Physiological re-

sponses to different web page designs. Int. J. Hum.-

Comput. Stud., 59(1-2):199–212.

Wegerich, A., Dzaack, J., and Roetting, M. (2009). Opti-

mizing virtual superimpositions: User centered design

for a UAR supported smart home system. submitted.
