PARAMETER SETTING OF NOISE REDUCTION FILTER USING SPEECH RECOGNITION SYSTEM

Tomomi Abe¹, Mitsuharu Matsumoto² and Shuji Hashimoto¹

¹Waseda University, 55N-4F-10A, 3-4-1 Okubo, Shinjuku-ku, Tokyo, 169-8555, Japan

²The University of Electro-Communications, 1-5-1, Chofugaoka, Chofu-shi, Tokyo, 182-8585, Japan

Keywords:

Speech recognition system, Parameter optimization, Recognition-based approach, Nonlinear filter.

Abstract:

This paper describes a method for setting the parameter of a noise reduction filter using a speech recognition system. The parameter setting problem is usually solved by maximizing or minimizing an objective evaluation function, such as correlation or statistical independence. However, when only a single-channel noisy signal is available, such objective functions are difficult to employ. They are also difficult to employ for impulsive noise, because its duration is too short for such assumptions to hold. To solve these problems, we directly use a speech recognition system as the evaluation function for parameter setting. As an example, we employ the time-frequency ε-filter and Julius as the filtering system and the speech recognition system, respectively. The experimental results show that the proposed approach has the potential to set the parameter in unknown environments.

1 INTRODUCTION

Although filtering plays an important role in acoustical signal processing, it is often necessary to determine some filter parameters to obtain the desired outputs. For instance, when using a comb filter (Lim et al., 1978), the pitch of the speech signal must be estimated as the parameter in order to reduce the noise adequately. The ε-filter can reduce acoustical noise while preserving the speech signal if the parameter ε is set adequately (Harashima et al., 1982). Many studies on setting such parameters have been reported. Mrazek and Navara proposed a decorrelation criterion to select the optimal stopping time for nonlinear diffusion filtering (Mrazek and Navara, 2003). Sporring and Weickert studied the behavior of generalized entropies and employed them to determine the parameters (Sporring and Weickert, 1999). Umeda employed mutual information for blind deconvolution using independent component analysis (ICA) (Umeda, 2000). We have also reported parameter optimization algorithms based on signal-noise decorrelation (Matsumoto and Hashimoto, 2009; Abe et al., 2009).

However, it is sometimes difficult in practice to define an objective criterion for parameter setting. For instance, in a single-input single-output filtering system, we only have a single-channel noisy signal; that is, the original signal and the noise are unknown. Although we usually make assumptions about the relation between the signal and noise (e.g., decorrelation or statistical independence between them), it is not always easy to use these assumptions in single-channel filtering, because only the noisy mixture is observed. For impulsive noise such as clapping and percussion sounds, the relation between signal and noise becomes even more difficult to employ, because the noise duration is too short for such assumptions to hold. In other words, the signal-noise relation often cannot be used. Nevertheless, these types of noise often seriously affect the recognition results despite their instantaneous nature. Moreover, a tuned filter output is not always adequate for a speech recognition system, because the filter and the speech recognition system are tuned separately using different criteria.

To handle such cases, we pay attention to subjective information in the acoustical signal. For example, even when we cannot employ any objective assumptions, if the system itself can generate known sounds (words) and use them as feedback, knowledge of those words can be used to set the parameters. That is, we can use a speech recognition system to tune the parameter so that the filter output is the most like the target word. For a robot performing simple tasks, such as a cleaning robot, the number of required words may be very small (for instance, from a few words to 10 words) compared to that in daily conversation. In these cases, it may be better to over-tune the filter for the target words so that the speech recognition system is robust for them, even though it may then fail to recognize other words.

Abe T., Matsumoto M. and Hashimoto S.

PARAMETER SETTING OF NOISE REDUCTION FILTER USING SPEECH RECOGNITION SYSTEM.

DOI: 10.5220/0003054903870391

In Proceedings of the International Conference on Fuzzy Computation and 2nd International Conference on Neural Computation (ICNC-2010), pages 387-391

ISBN: 978-989-8425-32-4

Copyright © 2010 SCITEPRESS (Science and Technology Publications, Lda.)

Figure 1: Parameter setting of filter system. (Block diagram: input signal x(k) → filter F → output signal y(k); the filter parameter c = (c_1, c_2, ..., c_N) is set by feedback based on some criteria.)

To evaluate our criterion, we employ the time-frequency ε-filter (TF ε-filter) (Abe et al., 2007) and "Julius" (Lee et al., 2001; Lee and Kawahara, 2009; Kawahara et al., 2000) as the filtering system and the speech recognition system, respectively.

This paper is organized as follows. In Sec. 2, we describe the concept of our approach, discuss its merits compared to other approaches, and briefly explain the algorithm of Julius. In Sec. 3, we explain the TF ε-filter and the problem of setting its parameter. In Sec. 4, we conduct experiments and show the results to confirm the effectiveness of the proposed method. Discussions and conclusions are given in Sec. 5.

2 PARAMETER SETTING USING SPEECH RECOGNITION SYSTEM

Let us start with a typical parameter setting problem to explain our approach clearly. Consider a filtering system for acoustical signal processing as shown in Fig. 1, where x(k) is the input and y(k) is the output of filter F. We assume that the filter F has N parameters, which determine the filter characteristics. The parameter set is defined as $c = (c_1, c_2, \ldots, c_N)$. Note that the discussion does not depend on the type of filter in Fig. 1.

The parameter setting problem is usually solved as follows:

1. Definition of an Evaluation Function. First, we define an evaluation function to judge whether the filter output is good or not. For instance, signal-noise decorrelation or statistical independence of signals is often used.

2. Maximization or Minimization of the Evaluation Function. After defining the evaluation function, we set the filter parameters and evaluate whether the output is adequate by using the evaluation function. The optimal parameters are those that maximize or minimize the evaluation function.

Hence, the main problem of parameter setting is how to define the evaluation function.

As an example, let us consider a noise reduction problem. In this case, the input signal includes not only the original speech signal but also noise. In the filtering system, we only have a single-channel noisy signal; that is, the original speech signal and the noise are unknown. Although we usually make assumptions about the relation between the signal and noise (e.g., decorrelation or statistical independence between them), it is not always easy to use these criteria in single-channel filtering, because only the noisy mixture is available. For impulsive noise such as clapping and percussion sounds, this approach becomes even more difficult to employ, because the noise duration is too short for such assumptions to hold. In other words, the signal-noise relation often cannot be used. Nevertheless, these types of noise often seriously affect the recognition results despite their instantaneous nature.

To solve this problem, we directly utilize a speech recognition system, instead of the relation between signal and noise, to set the filter parameter. The merits of this criterion are summarized as follows:

1. Simple Implementation. When we assume a relation between signal and noise, such as decorrelation or statistical independence, we need at least two signals to evaluate the relation. It is not always easy to employ such a criterion when we only have a single-channel output. In our criterion, on the other hand, we only have to check whether the output signal is the target speech or not. This check is simple and does not require any signal other than the output.

2. Wide Application Range. Unlike objective evaluation functions, this approach can be used regardless of the noise type. It can handle not only continuously occurring noise such as background noise but also infrequently occurring noise, for example impulsive noise such as clapping and percussion sounds.

Moreover, we do not need to care about the recognition system itself. We can use any recognition system and regard it as a black box, without knowing its internals. We simply query the recognition system, and it responds like an artificial person with a unique personality; the process is similar to administering a questionnaire to a human.

ICFC 2010 - International Conference on Fuzzy Computation

Even when the system is placed in an unknown space where no objective assumptions can be made, if we have sufficient sample sounds and can generate them there, we can set up the filter system by tuning the parameter so that the filter output is the most like the generated word.

3. Affinity with the Recognition System. The parameter is tuned so that the probability of the target speech in the speech recognition system is the highest. Hence, the output may deviate from the optimum measured from an objective perspective such as mean square error. However, when we employ a total system combining the filtering system and a recognition system, such as robot audition, such deviations may be a merit rather than a demerit, because the filter is tuned not from an objective perspective but from the subjective perspective of the recognition system itself. In this research, we use the open-source speech recognition software "Julius" (Lee et al., 2001; Lee and Kawahara, 2009; Kawahara et al., 2000) as the speech recognition system.

Let us define W, X, p(W), p(X) and p(X|W) as the word string, the input signal, the probability of W, the probability of X, and the likelihood of X given W, respectively. Julius decodes the word W from the input signal X by using the given acoustic model p(X|W) and language model p(W). The posterior probability of W given X, p(W|X), is calculated for the word W by Bayes' theorem as follows:

$$p(W|X) = \frac{p(W)\,p(X|W)}{p(X)}. \tag{1}$$

In Eq. (1), p(X) is a normalization factor that has no effect on the determination of the word W and can be ignored. The estimated word $\hat{W}$ is the word that maximizes the posterior probability p(W|X):

$$
\begin{aligned}
\hat{W} &= \arg\max_{W} p(W|X) \\
&= \arg\max_{W} p(W)\,p(X|W) \\
&= \arg\max_{W} \{\log p(W) + \log p(X|W)\}.
\end{aligned}
\tag{2}
$$
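As a minimal sketch of the decision rule in Eq. (2), the snippet below picks the word that maximizes log p(W) + log p(X|W) from precomputed log scores. The function name `decode` and all score values are hypothetical illustrations, not output from Julius; a real recognizer searches over word hypotheses instead of looking them up in a small dictionary.

```python
import math

def decode(log_prior: dict, log_likelihood: dict) -> str:
    """Return the word W maximizing log p(W) + log p(X|W), as in Eq. (2).

    Both dicts map each candidate word to a natural-log score. The term
    log p(X) is omitted because it is the same for every candidate W.
    """
    return max(log_likelihood, key=lambda w: log_prior[w] + log_likelihood[w])

# Hypothetical acoustic log-likelihoods log p(X|W) for one utterance.
loglik = {"senkai": -10.0, "koutai": -11.0}

# Uniform language model: only the acoustic term decides the result.
uniform = {w: math.log(0.5) for w in loglik}
print(decode(uniform, loglik))   # senkai

# A strongly skewed prior can override the acoustic evidence.
skewed = {"senkai": math.log(0.1), "koutai": math.log(0.9)}
print(decode(skewed, loglik))    # koutai
```

The second call shows why a uniform p(W) matters for single-word tasks: once the prior is the same for every candidate word, the decision depends on log p(X|W) alone.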

When a simple word recognition task is conducted, we can assume that the language model p(W) is identical for all candidate words. The filter output should then be the most like the target word from the acoustic perspective alone, without the language model. In other words, for the target word W, the optimal parameter $c_{\mathrm{opt}}$ can be obtained as follows:

$$c_{\mathrm{opt}} = \arg\max_{c} \log p(X|W), \tag{3}$$

where X here denotes the filter output obtained with the parameter c.
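The criterion of Eq. (3) can be sketched as a grid search that treats the recognizer as a black box. In this sketch, `select_parameter`, `filter_fn` and `log_likelihood` are hypothetical names: `filter_fn` stands for the filter F with parameter c, and `log_likelihood` stands for whatever returns log p(X|W) of the target word for a filtered signal. How that score would be obtained from Julius is not shown and is an assumption; the toy scorer in the usage example is only a stand-in.

```python
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

Signal = List[float]

def select_parameter(
    noisy: Sequence[float],
    candidates: Iterable[float],
    filter_fn: Callable[[Sequence[float], float], Signal],
    log_likelihood: Callable[[Signal, str], float],
    target_word: str,
) -> Tuple[Optional[float], float]:
    """Return (c_opt, score): the candidate c maximizing log p(X|W), as in Eq. (3)."""
    best_c, best_score = None, float("-inf")
    for c in candidates:
        y = filter_fn(noisy, c)                 # filter output for this parameter
        score = log_likelihood(y, target_word)  # recognizer queried as a black box
        if score > best_score:
            best_c, best_score = c, score
    return best_c, best_score

# Toy stand-ins (hypothetical): a gain "filter" and a scorer that is highest
# when the output matches a stored reference for the target word.
reference = {"senkai": [1.0, 2.0]}

def gain_filter(x: Sequence[float], c: float) -> Signal:
    return [c * v for v in x]

def toy_score(y: Signal, word: str) -> float:
    return -sum((a - b) ** 2 for a, b in zip(y, reference[word]))

c_opt, score = select_parameter([2.0, 4.0], [0.25, 0.5, 1.0], gain_filter, toy_score, "senkai")
print(c_opt)  # 0.5
```

For the TF ε-filter, `candidates` would be a grid of ε values. Because the recognition result can change abruptly with a tiny parameter change (see Sec. 5), an exhaustive grid is a safer choice for this sketch than a gradient-based search.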

3 TIME-FREQUENCY ε-FILTER

In this section, we briefly describe the TF ε-filter algorithm. The TF ε-filter is an improved ε-filter applied to the complex spectra along the time axis in the time-frequency domain.

Let x(k) be the input signal sampled at time k. In the TF ε-filter, we first transform the input signal x(k) into the complex amplitude X(κ, ω) by the short-time Fourier transform (STFT), where κ and ω denote the time frame in the time-frequency domain and the angular frequency, respectively; both are discrete. Next, we apply the TF ε-filter, i.e., an ε-filter applied to the complex spectra along the time axis in the time-frequency domain. In this procedure, Y(κ, ω) is obtained as follows:

$$Y(\kappa,\omega) = \sum_{i=-Q}^{Q} \frac{1}{2Q+1}\, X'(\kappa+i,\omega), \tag{4}$$

where the window size of the ε-filter is 2Q+1,

$$
X'(\kappa+i,\omega) =
\begin{cases}
X(\kappa,\omega) & \text{if } \bigl|\,|X(\kappa,\omega)| - |X(\kappa+i,\omega)|\,\bigr| > \varepsilon, \\
X(\kappa+i,\omega) & \text{if } \bigl|\,|X(\kappa,\omega)| - |X(\kappa+i,\omega)|\,\bigr| \le \varepsilon,
\end{cases}
\tag{5}
$$

and ε is a constant. Finally, we transform Y(κ, ω) into y(k) by the inverse STFT.

By utilizing the TF ε-filter, we can reduce not only small-amplitude stationary noise but also large-amplitude nonstationary noise. It requires no prior model of either the signal or the noise. It is easy to design, and its calculation cost is small. Moreover, as it can reduce not only Gaussian noise but also impulsive noise such as clapping and percussion sounds, it is expected to be a good example for showing the effectiveness of our approach. See Abe et al. (2007) for details.

In the TF ε-filter, ε is an essential parameter for reducing the noise appropriately (Abe et al., 2009). If ε is set to an excessively large value, the TF ε-filter behaves like a linear filter and smooths not only the noise but also the signal. On the other hand, if ε is set to an excessively small value, it does nothing to reduce the noise. For these reasons, ε should be set adequately.
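A minimal sketch of Eqs. (4) and (5) follows, operating on an STFT that has already been computed (the STFT and inverse STFT steps are omitted). `X[kappa][omega]` holds the complex amplitude X(κ, ω), and `tf_epsilon_filter` is a hypothetical name. The text does not specify how frames near the edges are handled, where κ + i falls outside the signal; repeating the first or last frame is an assumption made here. With the window size of 61 used in Sec. 4, Q would be 30.

```python
from typing import List

Spectrogram = List[List[complex]]  # X[kappa][omega]: frame index, frequency bin

def tf_epsilon_filter(X: Spectrogram, eps: float, Q: int) -> Spectrogram:
    """Apply the epsilon-filter of Eqs. (4)-(5) along the time axis of each bin."""
    K = len(X)
    Y: Spectrogram = []
    for kappa in range(K):
        row = []
        for omega in range(len(X[kappa])):
            center = X[kappa][omega]
            acc = 0j
            for i in range(-Q, Q + 1):
                # Assumption: frames outside the signal are replaced by the edge frame.
                j = min(max(kappa + i, 0), K - 1)
                neighbour = X[j][omega]
                # Eq. (5): use the neighbour only if its magnitude is within eps
                # of the center magnitude; otherwise substitute the center value.
                if abs(abs(center) - abs(neighbour)) > eps:
                    acc += center
                else:
                    acc += neighbour
            row.append(acc / (2 * Q + 1))  # Eq. (4): average over the 2Q+1 window
        Y.append(row)
    return Y

# One frequency bin over three frames.
X = [[1 + 0j], [2 + 0j], [5 + 0j]]
print(tf_epsilon_filter(X, eps=0.0, Q=1))    # [[(1+0j)], [(2+0j)], [(5+0j)]]
print(tf_epsilon_filter(X, eps=100.0, Q=1))  # moving average with edge repetition
```

The two calls reproduce the behaviour described above for this example: with a very small ε every neighbour is replaced by the center value, so the output equals the input and no noise is reduced; with a very large ε every neighbour is accepted, and the filter degenerates into a linear moving average that smooths the signal as well.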

4 EXPERIMENT

To clarify the adequateness of the proposed method,

we conducted the experiments utilizing a speech sig-

nal with a noise signal. In the experiments, we

changed ε values in TF ε-filter, and investigate the

PARAMETER SETTING OF NOISE REDUCTION FILTER USING SPEECH RECOGNITION SYSTEM

389

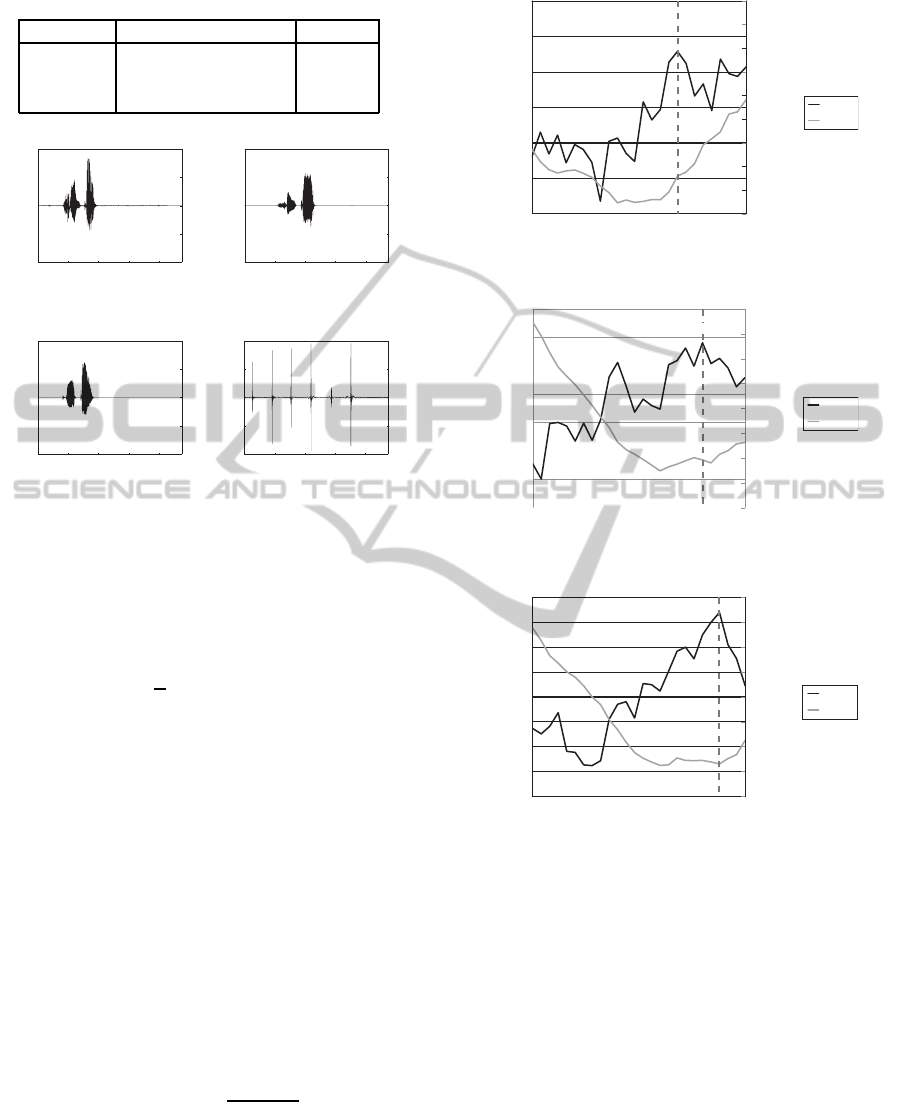

Table 1: Prepared speech files.

Word (Meaning) Gender

Speech 1 Senkai (Turning) Male

Speech 2 Senkai (Turning) Female

Speech 3 Koutai (Backdown) Male

0 0.5 1 1.5 2

−1

−0.5

0

0.5

1

Time[s]

Amplitude

(a) Speech 1.

0 0.5 1 1.5 2

−1

−0.5

0

0.5

1

Time[s]

Amplitude

(b) Speech 2.

0 0.5 1 1.5 2

−1

−0.5

0

0.5

1

Time[s]

Amplitude

(c) Speech 3.

0 0.5 1 1.5 2

−1

−0.5

0

0.5

1

Time[s]

Amplitude

(d) Noise.

Figure 2: Waveforms of the signals.

relation between p(X|W), and the mean square error

(MSE) between the originalspeech signal s(k) and the

filter output y(k). MSE is defined as follows:

MSE =

1

L

L

∑

k=1

(s(k) − y(k))

2

, (6)

where L is the signal length. As sound sources, we

prepared three Japanese speech signals as shown in

Table 1 and Figures 2(a)-(c). As shown in Table 1,

the first one and the second one are the same word

but pronounced by different persons to check the ro-

bustness of gender difference. The first one and the

third one are different words pronounced by the same

person to check the robustness of word difference.

The sound of clapping hands is used as the noise signal; its waveform is shown in Fig. 2(d). We mixed each speech signal with the noise on a computer. The SNR of every mixed signal is 1.4 dB, where SNR is defined as follows:

$$\mathrm{SNR} = 10\log_{10}\frac{\sum_{k=1}^{L} s(k)^2}{\sum_{k=1}^{L} n(k)^2}, \tag{7}$$

and n(k) denotes the noise signal. The sampling frequency and quantization bit depth are set at 16 kHz and 16 bits, respectively. We set the window size of the ε-filter at 61.
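Eqs. (6) and (7) translate directly into code. The text does not state how the speech and noise were combined to reach 1.4 dB; scaling the noise by a single gain g, chosen so that 10 log10(Σ s(k)² / Σ (g·n(k))²) equals the target, is one common way and is an assumption here, as is the helper name `mix_at_snr`. Signals are plain lists of samples of equal length L.

```python
import math
from typing import List, Sequence, Tuple

def mse(s: Sequence[float], y: Sequence[float]) -> float:
    """Eq. (6): mean square error between original speech s(k) and filter output y(k)."""
    return sum((a - b) ** 2 for a, b in zip(s, y)) / len(s)

def snr_db(s: Sequence[float], n: Sequence[float]) -> float:
    """Eq. (7): 10*log10 of the speech energy over the noise energy."""
    return 10.0 * math.log10(sum(v * v for v in s) / sum(v * v for v in n))

def mix_at_snr(s: Sequence[float], n: Sequence[float],
               target_db: float) -> Tuple[List[float], List[float]]:
    """Scale the noise so that snr_db(s, g*n) == target_db, then add it to s.

    Assumed mixing method; the paper only reports the resulting SNR.
    """
    g = math.sqrt(sum(v * v for v in s) / (sum(v * v for v in n) * 10.0 ** (target_db / 10.0)))
    scaled = [g * v for v in n]
    return [a + b for a, b in zip(s, scaled)], scaled

speech = [1.0, -1.0, 1.0, -1.0]
noise = [0.5, 0.5, -0.5, 0.5]
mixed, scaled_noise = mix_at_snr(speech, noise, 1.4)
print(round(snr_db(speech, scaled_noise), 6))  # 1.4
print(mse([1.0, 2.0], [1.0, 4.0]))             # 2.0
```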

Figure 3: Speech 1. (p(X|W) and MSE [×10⁻³] plotted against ε.)

Figure 4: Speech 2. (p(X|W) and MSE [×10⁻³] plotted against ε.)

Figure 5: Speech 3. (p(X|W) and MSE [×10⁻³] plotted against ε.)

Figures 3-5 show the experimental results. The dashed lines in the figures are auxiliary lines indicating the maximal recognition probability.

As shown in Figs. 3-5, and as would generally be expected, MSE becomes small around the ε that maximizes p(X|W). The proposed criterion is also robust to gender and word differences.

We also note that the parameter that minimizes MSE does not exactly correspond to the parameter that maximizes p(X|W). We consider that the speech recognition system is biased by the acoustic model obtained through the learning process. Although many researchers currently aim to eliminate such biases and evaluate system performance with objective functions, we think it is necessary to take these biases into consideration when applying a filtering system to a recognition system.

5 DISCUSSIONS AND CONCLUSIONS

In this paper, we proposed a parameter setting method for a noise reduction filter utilizing a speech recognition system. The algorithm is simple, and an adequate parameter was obtained in the experiments. As the proposed method does not use the relation between the signal and noise, its application range is expected to be wide. With our method, even if we only have a single-channel noisy signal, we can evaluate whether the parameter is adequate or not. The proposed method does not require estimating the noise in advance.

The experimental results also suggest some issues to be considered in our approach. For instance, the nonlinear relation between the filter parameter and the recognition result is an important problem: because of the nonlinearity between the filtering and recognition systems, the recognition result sometimes changes drastically with a tiny parameter change. Our approach will be more useful when a filter has a linear relationship between the parameter change and the filtering error.

In future work, we would like to investigate the relation between adequate recognition systems and filtering systems. Theoretical analyses are also required. We aim to apply this criterion to other systems, and applications to robot audition will also be considered.

ACKNOWLEDGEMENTS

This research was supported by the Special Coordination Funds for Promoting Science and Technology; by a research grant from the Support Center for Advanced Telecommunications Technology Research (SCAT); by the Ministry of Education, Science, Sports and Culture, Grant-in-Aid for Young Scientists (B), 22700186, 2010; and by the Ministry of Education, Science, Sports and Culture, Grant-in-Aid for Young Scientists (B), 20700168, 2008. This research was also supported by the CREST project "Foundation of technology supporting the creation of digital media contents" of JST, and by the Global-COE Program "Global Robot Academia", Waseda University.

REFERENCES

Abe, T., Matsumoto, M., and Hashimoto, S. (2007). Noise reduction combining time-domain ε-filter and time-frequency ε-filter. In J. of the Acoust. Soc. of America, volume 122, pages 2697–2705.

Abe, T., Matsumoto, M., and Hashimoto, S. (2009). Parameter optimization in time-frequency ε-filter based on correlation coefficient. In Proc. of SIGMAP 2009.

Harashima, H., Odajima, K., Shishikui, Y., and Miyakawa, H. (1982). ε-separating nonlinear digital filter and its applications. In IEICE Trans. on Fundamentals, volume J65-A, pages 297–303.

Kawahara, T., Lee, A., Kobayashi, T., Takeda, K., Minematsu, N., Sagayama, S., Itou, K., Ito, A., Yamamoto, M., Yamada, A., Utsuro, T., and Shikano, K. (2000). Free software toolkit for Japanese large vocabulary continuous speech recognition. In Proc. of ICSLP 2000.

Lee, A. and Kawahara, T. (2009). Recent development of open-source speech recognition engine Julius. In Proc. of APSIPA ASC 2009.

Lee, A., Kawahara, T., and Shikano, K. (2001). Julius - an open source real-time large vocabulary recognition engine. In Proc. of EUSIPCO 2001, pages 1691–1694.

Lim, J. S., Oppenheim, A. V., and Braida, L. (1978). Evaluation of an adaptive comb filtering method for enhancing speech degraded by white noise addition. In IEEE Trans. on Acoust., Speech, Signal Process., volume ASSP-26, pages 419–423.

Matsumoto, M. and Hashimoto, S. (2009). Estimation of optimal parameter in ε-filter based on signal-noise decorrelation. In IEICE Trans. on Information and Systems, volume E92-D, pages 1312–1315.

Mrazek, P. and Navara, M. (2003). Selection of optimal stopping time for nonlinear diffusion filtering. In Int'l Journal of Computer Vision, volume 52, pages 189–203.

Sporring, J. and Weickert, J. (1999). Information measures in scale-spaces. In IEEE Trans. on Information Theory, volume 45, pages 1051–1058.

Umeda, S. (2000). Blind deconvolution of blurred images by using ICA. In IEICE Trans. on Fundamentals, volume J83-A, pages 677–685.
