A Cognitive Approach to Modelling Semantic Sensor Web Solutions

Agnes Korotij and Judit Kiss-Gulyas

University of Debrecen, Debrecen, Hungary

Abstract. Semantic sensor solutions are characterized by a lack of consensus on what features make sensor networks semantic, and what services a semantic layer should provide. Although authors emphasize that humans outperform software in managing inconsistent knowledge and unreliable sensor data, hardly any attempt has been made so far to construct a model of semantic sensor networks inspired by human cognition. The aim of the present paper is to investigate whether the structure and organisation of concepts and meaning in the human mind (as proposed by cognitive linguists and psycholinguists) can serve as a model for constructing ontologies and knowledge representations for the semantic sensor web (hereafter SSW). We also aim to show how multimodal sensory data can be integrated with these representations based on contemporary findings in human perception. We suggest that SSW solutions based on cognitive mechanisms and psychologically plausible knowledge representations can overcome the challenges currently posed by fuzzy data and inconsistent information.

1 Introduction

The Semantic Sensor Web (SSW) initiative targets the integration of unstructured sensor data (e.g. GPS coordinates, timestamps, temperature, visual and auditory data) with artificial knowledge repositories. Despite the growing interest in SSW solutions, there seems to be no consensus on what features make a sensor network or web solution semantic; this confusion has measurable effects on the performance of such systems and makes interoperability between these networks difficult. For the most part, semantics boils down to the use of semantic web representation techniques, specifically RDF¹ and OWL² (e.g. [18]). On other occasions, semantics is misused as a synonym for the tagging or annotation of raw data. Sheth, for example, discusses the SSW in the context of annotating sensor data with spatial (where), temporal (when) and thematic (what) metadata, which together constitute the semantic metadata [24]. In a later work, Sheth's use of semantics implies ‘anticipating when to gather and apply relevant knowledge and intelligence’, ‘minimal explicit concern or effort on the human’s part’, and ‘the meaningful representation and sharing of hypotheses and background knowledge’ [26]. Although authors emphasize that humans outperform software in managing inconsistent knowledge and unreliable sensor data [25], with one notable exception [7], no attempt has been made so far to construct a cognitively inspired model of semantic sensor networks.

¹ Resource Description Framework (RDF). Available from: http://www.w3.org/RDF/
² Web Ontology Language (OWL). Available from: http://www.w3.org/2004/OWL/

Korotij A. and Kiss-Gulyas J.
A Cognitive Approach to Modelling Semantic Sensor Web Solutions.
DOI: 10.5220/0003118500850094
In Proceedings of the International Workshop on Semantic Sensor Web (SSW-2010), pages 85-94
ISBN: 978-989-8425-33-1
Copyright © 2010 SCITEPRESS (Science and Technology Publications, Lda.)

The aim of the present paper is to investigate whether the structure and organisation of concepts and meaning in the human mind can serve as a model for constructing knowledge representations (KRs) for the SSW that also support the processing of sensor data. The paper explores the following questions:

i. What constitutes knowledge in the human mind? What are the basic cognitive processes that underlie the organization of concepts?

ii. Do semantic web technologies (e.g. RDF and OWL) provide a plausible model of human knowledge organization?

iii. How does the human mind integrate sensory data with conceptual knowledge? Do the same principles of organization hold across different modalities?

iv. What are the implications of a cognitive approach for modelling sensor integration?

We suggest that SSW solutions built on cognitive mechanisms and psychologically plausible KRs can overcome the challenges that fuzzy and inconsistent data present.

2 Concepts in the Mind

In the context of the semantic web, knowledge is organized in ontologies, formal representations of a conceptualization. In this section, we examine how human conceptual structure is organized. By conceptual structure we mean the organization of concepts; conceptual space refers to the level of representation at which concepts are stored. We assume that knowledge comprises conceptual structure and the information stored in declarative memory.

2.1 The Representation of Concepts in the Mind

Understanding how language processing works sheds light on the mechanisms that interact with conceptual structure. Language processing is assumed to take place in different stages and to involve three levels of representation [11]:

1. Subsymbolic level: information is directly related to sensor data;

2. Linguistic level: information is expressed in a symbolic language;

3. Conceptual level: information is prelinguistic and is represented in a metric space defined by a number of cognitive dimensions.
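To make the conceptual level more concrete, the following Python sketch assumes a toy conceptual space with three quality dimensions (hypothetical hue, size and sweetness axes scaled to [0, 1]) and invented prototype coordinates. Concepts are represented by prototypes, similarity decreases with weighted distance, and categorizing a point by its nearest prototype partitions the space into Voronoi cells, a construction associated with Gärdenfors' conceptual spaces. The dimension weights stand in for contextual salience; none of the numbers come from the cited work.

```python
import math

# Hypothetical quality dimensions of a toy conceptual space, each scaled to [0, 1].
DIMENSIONS = ("hue", "size", "sweetness")

# Hypothetical prototypes: each concept is represented by one point in the space.
PROTOTYPES = {
    "apple": (0.1, 0.3, 0.6),
    "lemon": (0.2, 0.25, 0.1),
    "melon": (0.35, 0.9, 0.7),
}


def distance(x, y, weights):
    """Weighted Euclidean distance; the weights model the salience of each dimension."""
    return math.sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(x, y, weights)))


def categorize(point, weights=(1.0, 1.0, 1.0)):
    """Assign a point to the concept whose prototype is nearest.

    Nearest-prototype assignment partitions the space into Voronoi cells.
    """
    return min(PROTOTYPES, key=lambda c: distance(point, PROTOTYPES[c], weights))


def similarity(point, concept, weights=(1.0, 1.0, 1.0), sensitivity=2.0):
    """Similarity decays exponentially with distance from the concept's prototype."""
    return math.exp(-sensitivity * distance(point, PROTOTYPES[concept], weights))


print(categorize((0.1, 0.25, 0.35)))                           # apple
print(categorize((0.1, 0.25, 0.35), weights=(0.0, 1.0, 1.0)))  # lemon: hue ignored
```

Setting the hue weight to zero changes the category of the same observation, which illustrates how the salience of a dimension, and not only the data, can decide categorization.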

The linguistic level appears to be too specific given the assumption that mental representations are not necessarily propositional in nature (see [21]). In order to cover the representation of non-linguistic constructs, we propose that the linguistic layer should be complemented with a more general, symbolic rule-based level whose role is to capture regularities of any form, linguistic or non-linguistic. Psycholinguistic evidence has shown that an ad hoc, primary analysis of form measurably precedes semantic interpretation [22], which supports Gärdenfors’ three-layer model: the linguistic input perceived while reading (visual stimuli) or listening (auditory stimuli) is processed by the rule-based system (syntax) and then mapped onto the conceptual level. The phases are not strictly sequential, however: implausible semantic interpretations may feed back to the rule-based module and trigger an alternative syntactic analysis [10].
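The feedback from semantic interpretation to syntactic analysis described above can be read as a generate-and-test loop. In the sketch below, which is an illustration rather than a model taken from [10], a generator stands in for the rule-based module and yields candidate analyses in order of preference, and a scoring function stands in for the conceptual level; a low score sends processing back for the next analysis. The garden-path sentence, the analyses and the toy plausibility test are all hypothetical.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

Analysis = Tuple[str, ...]


def interpret(
    candidates: Iterable[Analysis],
    plausibility: Callable[[Analysis], float],
    threshold: float = 0.5,
) -> Optional[Analysis]:
    """Return the first syntactic analysis whose interpretation is plausible.

    `candidates` stands in for the rule-based (syntactic) module and yields
    analyses in order of preference; `plausibility` stands in for the
    conceptual module. A score below `threshold` feeds back and triggers
    reanalysis, i.e. the next candidate is tried.
    """
    for analysis in candidates:
        if plausibility(analysis) >= threshold:
            return analysis
    return None  # no analysis yields a sensible interpretation


def garden_path_parses() -> Iterator[Analysis]:
    """Toy candidate analyses for "The old man the boat."."""
    # Preferred first reading: "the old man" as a noun phrase, leaving no main verb.
    yield ("NP:the old man", "NP:the boat")
    # Alternative reading: "the old" as subject and "man" as the verb.
    yield ("NP:the old", "V:man", "NP:the boat")


def has_predicate(analysis: Analysis) -> float:
    """Toy plausibility score: a clause without a verb cannot be interpreted."""
    return 1.0 if any(part.startswith("V:") for part in analysis) else 0.0


print(interpret(garden_path_parses(), has_predicate))
# ('NP:the old', 'V:man', 'NP:the boat')
```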

Semantic and conceptual information is stored in the mental lexicon [3]. Concepts corresponding to word meanings are represented in the brain by cell assemblies distributed over different areas, depending on the semantic properties of the word. These properties include sensory and motor attributes, which determine whether a word represents an easily visualizable object or stands for a performable action [6]. While motor regions are important in processing and naming movement-related words [27] and in imagining movement [12], other areas seem to be specialized for categories in which visual form is primary [27]. The representation of abstract words has only recently gained attention in psycholinguistic circles [29]. Research results imply that knowledge of abstract concepts is secondary to knowledge of concepts directly rooted in perception (see [17]).

2.2 General Cognitive Processes in the Organization of Conceptual Space

Cognitive scientists assume that human cognition is composed of basic mechanisms that underlie the various aspects of intelligent behaviour, including language processing, spatial orientation and the organization of concepts. Croft and Cruse [4] identify four cognitive abilities as primary in conceptualization: (1) attention, (2) comparison, (3) perspective and (4) constitution.

Attention is the focus of consciousness; it comprises the selection of relevant parts, the granularity of the observed phenomena, and scanning. Attention is sensitive to the statistical properties of the input, regardless of its modality [13].
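One statistical property to which learners are known to be sensitive is the transitional probability between adjacent elements, TP(A→B) = freq(AB) / freq(A), as in the studies of infant statistical learning reviewed in [13]. The sketch below computes it over a hypothetical syllable stream built from three invented “words”; the same computation applies unchanged to any sequence of discrete symbols, whatever modality they come from.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def transitional_probabilities(
    stream: Sequence[Hashable],
) -> Dict[Tuple[Hashable, Hashable], float]:
    """TP(a -> b) = count(a immediately followed by b) / count(a followed by anything)."""
    pairs = Counter(zip(stream, stream[1:]))
    firsts = Counter(stream[:-1])  # the last element has no successor
    return {(a, b): n / firsts[a] for (a, b), n in pairs.items()}


# Hypothetical stream built from three "words": bidaku, padoti, golabu.
syllables = "bi da ku pa do ti bi da ku go la bu pa do ti go la bu".split()
tp = transitional_probabilities(syllables)

print(tp[("bi", "da")])  # 1.0  (within a word)
print(tp[("ku", "pa")])  # 0.5  (across a word boundary)
```

Dips in transitional probability fall at word boundaries, which is one way a statistics-sensitive system could segment a continuous input stream, whether it comes from speech or from a sensor.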

Comparing entities is basic to establishing relationships such as hyponymy, synonymy and antonymy. Categorization, metaphor and figure-ground alignment are special cases of comparison. Although both cognitive scientists and semantic web researchers make considerable efforts to uncover how categorization works, there is a major discrepancy between the two approaches in the treatment of category membership. For cognitive scientists, humans manage fuzzy categories that exhibit graded membership [23]; for example, CHAIR is a more prototypical instance of FURNITURE than LAMP. For semantic web researchers, category membership is strictly defined, and members have equal status within the category (see Section 3).
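The difference between the two treatments of category membership can be shown in a few lines of Python. The crisp test corresponds to ontology-style class membership; the graded version assigns each member a degree, here hypothetical typicality values chosen only to mirror the CHAIR/LAMP example, and an alpha-cut shows how a crisp set can still be recovered when one is needed.

```python
from typing import Dict, Set

# Crisp membership, as in an ontology's class hierarchy: all members have equal status.
FURNITURE_CRISP: Set[str] = {"chair", "table", "sofa", "lamp"}

# Graded membership: hypothetical typicality degrees in [0, 1].
FURNITURE_GRADED: Dict[str, float] = {
    "chair": 0.95,
    "table": 0.9,
    "sofa": 0.85,
    "lamp": 0.45,
}


def is_member(item: str) -> bool:
    return item in FURNITURE_CRISP


def membership(item: str) -> float:
    return FURNITURE_GRADED.get(item, 0.0)


def more_typical(a: str, b: str) -> bool:
    """A prototype effect: only the graded representation can rank members."""
    return membership(a) > membership(b)


def members_at(threshold: float) -> Set[str]:
    """Alpha-cut: turn the fuzzy category into a crisp set at a chosen threshold."""
    return {item for item, degree in FURNITURE_GRADED.items() if degree >= threshold}


print(is_member("chair"), is_member("lamp"))  # True True
print(more_typical("chair", "lamp"))          # True
```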

Perspective captures the individual’s spatial and temporal location. As Table 1 shows, perspective is not central in the organization of content words; this process is more salient in visual perception and in the organization of function words.

Constitution unravels the composition of the entities perceived and thus plays a role in identifying parts and wholes. It helps determine whether a group of perceptibly different entities forms a coherent whole, and it is also responsible for breaking up large objects into smaller chunks.
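One simple, assumed way to approximate the grouping role of constitution is Gestalt-style grouping by proximity: entities that lie within a given distance of each other are linked, and each connected component is treated as one coherent whole. The sketch uses a union-find structure over hypothetical 2-D positions; proximity is only one of several possible grouping cues, and the threshold `max_gap` is an illustrative parameter.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def group_by_proximity(points: Sequence[Point], max_gap: float) -> List[List[int]]:
    """Group point indices into wholes: points at most `max_gap` apart are linked,
    and linking is transitive (connected components)."""
    parent = list(range(len(points)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= max_gap:
                parent[find(i)] = find(j)  # union the two groups

    groups: Dict[int, List[int]] = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical positions: three dots in a row and, further away, a pair.
dots = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (10.0, 10.0), (11.0, 10.0)]
print(group_by_proximity(dots, max_gap=1.5))  # [[0, 1, 2], [3, 4]]
```

Raising `max_gap` far enough merges everything into one whole, while lowering it below 1.0 breaks the row into separate dots, a crude analogue of the two directions of constitution described above.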

Redundancy of cognitive mechanisms is crucial in human cognition: multiple processes may be involved in a task at the same time [27]. An example of redundant cognitive mechanisms at work has been observed in adult foreign language learning [30]: when adults learn a second language, initial weaknesses in the grammatical system can lead to compensatory storage of long phrases in memory.

General cognitive processes organize conceptual space along various types of relationships (see Table 1). Association is the primary means of organizing concepts. Association is a weighted relationship between any two items, regardless of the modality the items belong to (associations may exist between pictures and words, for instance). The strength of associative links is heavily influenced by previous experience: for example, the co-occurrence of words, of words and visual stimuli, or of visual stimuli and other sensory input establishes new associations or strengthens existing ones.
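The following sketch shows one way to store weighted, modality-independent associations whose strength grows with co-occurrence. The update rule (a Hebbian-style increment that saturates towards 1, plus slow decay of links that are not reinforced) and its rates are assumptions for illustration; the text does not commit to a particular learning rule. Items are tagged with their modality to show that links may cross modalities, as with the smell of cinnamon and the word “winter”.

```python
from collections import defaultdict
from itertools import combinations
from typing import DefaultDict, FrozenSet, Iterable, Tuple

Item = Tuple[str, str]  # (modality, label), e.g. ("word", "winter")


class AssociationStore:
    """Symmetric, weighted associations between items of any modality."""

    def __init__(self, learning_rate: float = 0.2, decay: float = 0.01) -> None:
        self.learning_rate = learning_rate
        self.decay = decay
        self.weights: DefaultDict[FrozenSet[Item], float] = defaultdict(float)

    def observe(self, items: Iterable[Item]) -> None:
        """Strengthen links between co-occurring items; let all other links decay slightly."""
        co_occurring = {frozenset(pair) for pair in combinations(set(items), 2)}
        for pair in co_occurring:
            w = self.weights[pair]
            self.weights[pair] = w + self.learning_rate * (1.0 - w)  # approaches 1
        for pair in list(self.weights):
            if pair not in co_occurring:
                self.weights[pair] *= 1.0 - self.decay

    def strength(self, a: Item, b: Item) -> float:
        return self.weights.get(frozenset((a, b)), 0.0)


store = AssociationStore()
cinnamon, winter = ("smell", "cinnamon"), ("word", "winter")
news, coffee = ("word", "news"), ("word", "coffee")
for _ in range(3):
    store.observe([cinnamon, winter])
store.observe([news, coffee])
print(store.strength(cinnamon, winter) > store.strength(news, coffee))  # True
```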

In an overview of lexical relationships, Cruse outlines the relationships that may exist between word meanings, including hyponymy, meronymy, synonymy and antonymy [5]. These relationships may all be considered special cases of association. In hyponymy, one item is superordinate to another. Meronymy corresponds to part-whole relations; however, meronymy is constrained to words whose representation involves the visual modality. Synonymy occurs when words map onto similar concepts in the mind. Antonymy, i.e. oppositeness of meaning, is not fundamental to the structuring of the mental lexicon.

Table 1. An overview of semantic relations and their cognitive basis.

| Name | Description | Example | Cognitive basis |
|---|---|---|---|
| Association | Arbitrary relationship between two items of any modality. | “news” – “coffee”; smell of cinnamon – “winter” | Attention |
| Hyponymy | One item is superordinate to another; graded membership. | “vehicle” – “car” | Comparison (categorization) |
| Meronymy | Part-whole relation. | “hand” – “finger” | Constitution |
| Synonymy | Words map onto similar concepts. | “nice” – “handsome” | Comparison |
| Antonymy | Words map onto concepts with opposite attributes. | “nice” – “ugly” | Comparison |
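Table 1 can be read as the schema of a typed, weighted edge list. The sketch below encodes the table's examples; the weights are hypothetical (1.0 by default, lower where association strength or graded membership matters), and the helper `related` shows that the specialised relations stay queryable by type while, without a type filter, every relation is treated simply as an association.

```python
from typing import List, NamedTuple, Optional


class Relation(NamedTuple):
    source: str
    target: str
    kind: str            # association, hyponymy, meronymy, synonymy or antonymy
    basis: str           # cognitive basis, as listed in Table 1
    weight: float = 1.0  # hypothetical strength or degree of membership


RELATIONS: List[Relation] = [
    Relation("news", "coffee", "association", "attention", 0.6),
    Relation("smell of cinnamon", "winter", "association", "attention", 0.7),
    Relation("vehicle", "car", "hyponymy", "comparison", 0.9),
    Relation("hand", "finger", "meronymy", "constitution"),
    Relation("nice", "handsome", "synonymy", "comparison"),
    Relation("nice", "ugly", "antonymy", "comparison"),
]


def related(term: str, kind: Optional[str] = None) -> List[Relation]:
    """Relations involving `term`; with kind=None every relation counts as an association."""
    return [
        r for r in RELATIONS
        if term in (r.source, r.target) and (kind is None or r.kind == kind)
    ]


print([r.target for r in related("nice")])              # ['handsome', 'ugly']
print([r.target for r in related("nice", "antonymy")])  # ['ugly']
```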

3 On Psychologically Plausible Knowledge Representations

Based on the structure and organization of human conceptualization presented in Section 2, we suggest that a psychologically plausible KR should:

(a) be able to represent weighted associations;

(b) be able to represent fuzzy categories;

(c) be able to represent part-whole relationships in the case of concepts that correspond to visually perceivable objects;

(d) support direct links to items from other modalities, i.e. allow for associating concepts directly with sensor data representations (e.g. images);

(e) be sensitive to the co-occurrence of items in its environment and support the update of association weights;

(f) be context-dependent, in the sense that the information stored in knowledge representations may vary across applications.
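Criteria (d) and (f) are perhaps the least familiar from an ontology-engineering point of view, so a minimal Python sketch of them may help. A concept entry holds links to sensor-data representations (the URI is a hypothetical placeholder), and association weights are stored per application context, falling back to a shared default context. The class and context names are illustrative, not part of any existing system.

```python
from dataclasses import dataclass, field
from typing import Dict, List

DEFAULT_CONTEXT = "default"


@dataclass
class Concept:
    label: str
    # Criterion (d): direct links to representations in other modalities.
    sensor_links: List[str] = field(default_factory=list)
    # Criterion (f): association weights kept separately for each application context.
    associations: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def associate(self, other: str, weight: float, context: str = DEFAULT_CONTEXT) -> None:
        self.associations.setdefault(context, {})[other] = weight

    def weight(self, other: str, context: str = DEFAULT_CONTEXT) -> float:
        """Context-specific weight if present, else the default-context weight, else 0.0."""
        specific = self.associations.get(context, {})
        if other in specific:
            return specific[other]
        return self.associations.get(DEFAULT_CONTEXT, {}).get(other, 0.0)


fire = Concept("fire", sensor_links=["http://example.org/thermal/frame-001.png"])
fire.associate("smoke", 0.8)
fire.associate("smoke", 0.3, context="campsite")
fire.associate("alarm", 0.9, context="building-monitoring")
print(fire.weight("smoke"), fire.weight("smoke", "campsite"))  # 0.8 0.3
```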

As for the representation of semantic sensor data in SSW solutions, the two prevailing trends are (1) OGC syntactic standards defined for the management of sensory data [20], and (2) W3C semantic web standards [1]. Since OGC standards lack semantic description, we limit our discussion to semantic web standards as the only candidates for a cognitively inspired model of KR.

Ontology languages provide a means for representing terms and establishing relationships among them. Measured against our criteria, none of the current semantic web technologies (e.g. RDF, OWL) proves to be psychologically plausible. Although these languages are able to represent categorization-based (“is-a”, “generalization” or “inheritance”) relationships, they cannot model prototype effects and, as a consequence, have problems in dealing with fuzzy categories. These technologies do not make use of probability information in modelling relationships, nor do they allow for the distributed or overlapping representation of concepts. As for comparison-based relations, it is possible to define structures (e.g. predicates) for modelling such special-purpose relationships. Semantic web representation techniques also provide a means for attaching various sensory data to concepts in the form of resources, which is a definite advantage.
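A plain triple set makes the point concrete. Crisp subclass links are single triples, whereas a graded relation needs an intermediate node carrying the degree, following the W3C “n-ary relations” design pattern, and a sensory resource can be attached with an ordinary triple. The sketch deliberately uses Python tuples instead of an RDF library; the `ex:` terms are hypothetical, while `rdfs:subClassOf` and `foaf:depiction` are existing vocabulary terms.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]
graph: Set[Triple] = set()

# Crisp categorization: every subclass has the same status.
graph.add(("ex:Chair", "rdfs:subClassOf", "ex:Furniture"))
graph.add(("ex:Lamp", "rdfs:subClassOf", "ex:Furniture"))


def add_typicality(g: Set[Triple], member: str, category: str, degree: float) -> None:
    """Graded membership via an intermediate node (n-ary relation pattern)."""
    node = f"_:typ_{member.split(':')[1]}_{category.split(':')[1]}"  # blank-node-like id
    g.add((node, "ex:member", member))
    g.add((node, "ex:category", category))
    g.add((node, "ex:degree", repr(degree)))


def typicality(g: Set[Triple], member: str, category: str) -> float:
    """Look up the degree stored on the intermediate node, or 0.0 if none exists."""
    nodes = {s for s, p, o in g if p == "ex:member" and o == member}
    for s, p, o in g:
        if s in nodes and p == "ex:degree" and (s, "ex:category", category) in g:
            return float(o)
    return 0.0


add_typicality(graph, "ex:Chair", "ex:Furniture", 0.95)
add_typicality(graph, "ex:Lamp", "ex:Furniture", 0.45)

# Attaching sensory data to a concept as a resource (foaf:depiction links a thing to an image).
graph.add(("ex:Chair", "foaf:depiction", "http://example.org/images/chair.jpg"))
```

The degree is stored, but only as a literal: standard RDF and OWL semantics do not interpret it as graded membership, so custom code such as `typicality` has to do that work, which is the limitation discussed above.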

Conceptual structure at the lowest level is best represented by vector space models (VSMs) or neural networks. Both representations provide a natural way of modelling comparison (as the extent to which activated neurons overlap in a neural network, or as the distance between the vectors corresponding to concept feature combinations in VSMs). These representations have the further advantage of providing a solution to the symbol grounding problem [14].
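Both notions of comparison mentioned above reduce to short computations. The sketch assumes hypothetical binary feature vectors over a shared feature inventory: cosine similarity stands in for vector distance in a VSM, and the Jaccard overlap of active units stands in for overlapping activation in a neural network.

```python
import math
from typing import Sequence, Set

# Hypothetical binary features: has_legs, has_seat, has_back, emits_light, has_wheels
chair = (1, 1, 1, 0, 0)
stool = (1, 1, 0, 0, 0)
lamp = (1, 0, 0, 1, 0)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """VSM view: similarity as the cosine of the angle between feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def active_units(v: Sequence[float]) -> Set[int]:
    return {i for i, x in enumerate(v) if x}


def overlap(u: Sequence[float], v: Sequence[float]) -> float:
    """Network view: share of active units common to both patterns (Jaccard index)."""
    a, b = active_units(u), active_units(v)
    return len(a & b) / len(a | b) if a | b else 0.0


print(round(cosine(chair, stool), 3), round(cosine(chair, lamp), 3))    # 0.816 0.408
print(round(overlap(chair, stool), 3), round(overlap(chair, lamp), 3))  # 0.667 0.25
```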

Should semantic web technologies be discarded, then, and replaced by distributed representations? In our view, formal ontologies are not incompatible with low-level distributed representations. Despite their lack of psychological plausibility, ontologies are nevertheless valid symbolic systems in the intermediate layer of Gärdenfors’ model. Formal ontologies could exploit the advantages of neural networks or VSMs by means of indices that point to these lower-level structures.
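One possible reading of “indices that point to these lower-level structures” is sketched below: each symbolic class keeps a key into a store of feature vectors, so a crisp ontology query (siblings under the same parent) can be ranked by graded, low-level similarity. The class names, vectors and the choice of cosine similarity are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

# Low-level store: hypothetical feature vectors addressed by an index key.
VECTORS: Dict[str, Tuple[float, ...]] = {
    "v:chair": (1.0, 1.0, 1.0, 0.0),
    "v:stool": (1.0, 1.0, 0.0, 0.0),
    "v:lamp": (1.0, 0.0, 0.0, 1.0),
}


@dataclass
class OntologyClass:
    name: str
    parent: Optional[str]  # crisp, symbolic is-a link
    vector_key: str        # index into the low-level store


ONTOLOGY: Dict[str, OntologyClass] = {
    c.name: c
    for c in (
        OntologyClass("Chair", "Furniture", "v:chair"),
        OntologyClass("Stool", "Furniture", "v:stool"),
        OntologyClass("Lamp", "Furniture", "v:lamp"),
    )
}


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def siblings_by_similarity(name: str) -> List[Tuple[str, float]]:
    """Symbolic query (classes with the same parent), ranked by low-level similarity."""
    me = ONTOLOGY[name]
    ranked = [
        (other.name, cosine(VECTORS[me.vector_key], VECTORS[other.vector_key]))
        for other in ONTOLOGY.values()
        if other.parent == me.parent and other.name != name
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


print([n for n, _ in siblings_by_similarity("Chair")])  # ['Stool', 'Lamp']
```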

4 Integrating Human Perception with Conceptual Knowledge

Semantic sensor networks need to make intelligent use of their knowledge stores when processing sensor data. While human interaction with the environment involves the processing of sensor data from five different modalities, artificial sensors capture only a small proportion of all possible environmental data, specifically spatial location, time and physical details such as humidity and temperature. Semantic sensor networks should also provide a means for extracting information from pictures, videos or voice recordings. In this section, we examine the way in which human cognition integrates sensor data from multiple modalities with conceptual knowledge.

4.1 Cognitive Processes Involved in Human Perception

It has been assumed that ‘the cognitive abilities that we apply to speaking and understanding language are not significantly different from those applied to other cognitive tasks, such as visual perception, reasoning or motor activity’ [4]. In Marr’s model of visual perception [19], the processing of visual stimuli is considered to be a mechanism in which information and knowledge are represented and processed at different levels of abstraction, ranging from sensory stimuli to symbolic encoding. Although Marr intended his model to describe the visual modality exclusively, it appears to be compatible with Gärdenfors’ three levels of representation.

Attention as a cognitive mechanism plays an important role in processing sensory data. Visual perception, for instance, has a pre-attentive and an attentive phase. At the pre-attentive level, no distinctions are made between important and irrelevant parts [8]. The focus of attention can be guided by the inherent characteristics of the visual stimulus (e.g. a lonely building on the horizon) or by free association (e.g. seeing a flower may send the perceiver looking for a butterfly). Evidence from early cognitive literature on vision suggests that viewer-centred and object-centred representations of images coexist in human memory (e.g. [9]), which indicates that perspective is also fundamental in human vision.
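Attention guided by the inherent characteristics of the stimulus, such as the lonely building on the horizon, is often modelled as feature contrast: an item is salient to the extent that it differs from the other items in view. The sketch below adopts that assumption with hypothetical feature vectors; the first stage scores every item in the same way, loosely mirroring the pre-attentive phase, and the second stage selects the most distinctive one.

```python
import math
from typing import Dict, Sequence


def contrast(item: Sequence[float], others: Sequence[Sequence[float]]) -> float:
    """Mean Euclidean distance from an item to every other item in view."""
    if not others:
        return 0.0
    return sum(math.dist(item, other) for other in others) / len(others)


def focus_of_attention(scene: Dict[str, Sequence[float]]) -> str:
    # First stage: every item is scored in the same way, without selection.
    scores = {
        name: contrast(features, [f for n, f in scene.items() if n != name])
        for name, features in scene.items()
    }
    # Second stage: attention goes to the most distinctive item.
    return max(scores, key=lambda name: scores[name])


# Hypothetical features: (height, isolation, brightness), each in [0, 1].
scene = {
    "field_1": (0.1, 0.1, 0.5),
    "field_2": (0.1, 0.2, 0.5),
    "field_3": (0.1, 0.1, 0.6),
    "building": (0.9, 0.9, 0.4),
}
print(focus_of_attention(scene))  # building
```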

4.2 Integrating Sensor Data with Conceptual Structure

The integration of sensor data with conceptual knowledge raises two fundamental questions. First, how does the brain integrate data received from different senses? And second, how are these data mapped onto conceptual space?

In the case of conflicting parallel inputs from different sensory domains, one

modality will tend to dominate the final perception depending on the type of the task

and the relative reliability of the source of the sensation [28]. Experiments show that

integrating multimodal sensory data is so fundamental to cognition that separate brain

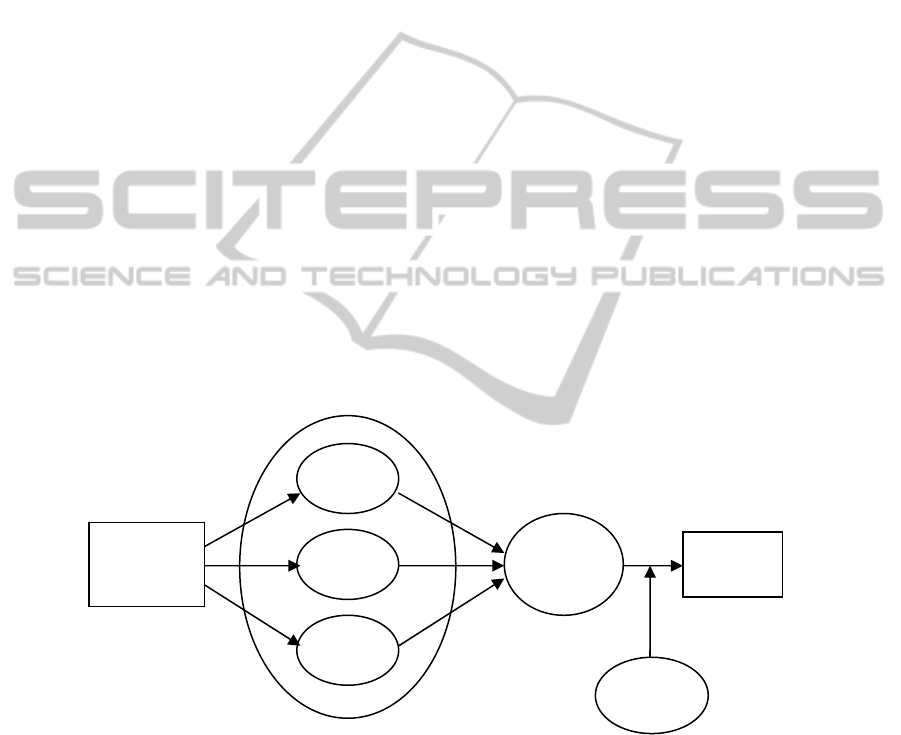

regions are dedicated to this task [2]. Fig. 1 shows a cognitive architecture of sensory

integration.

Fig. 1. The cognitive architecture of sensory integration based on Verhagen and Engelen

(2006).

[Fig. 1 labels: audition, vision, touch, etc.; physical world; multisensory integration; perception; attention]

As we have shown in Section 2, the organization of concepts in the human mind is influenced by sensorimotor attributes. One account of this phenomenon is the semantic hypothesis, which claims that the dissociations observed between word categories reflect differences in the conceptual semantics of the words [27]. This implies that conceptualization is deeply rooted in perception, and that strong associations exist between the representations of words and the mental footprints of sensory experience.
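The observation that the more reliable modality tends to dominate [28] is commonly formalized as reliability-weighted cue combination, which we assume here for illustration rather than attribute to the cited sources: each estimate sᵢ with variance σᵢ² receives the weight (1/σᵢ²) / Σⱼ (1/σⱼ²), and the fused variance is 1 / Σⱼ (1/σⱼ²). The sensor readings and noise levels below are hypothetical.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance (reliability-weighted) fusion of (value, variance) pairs.

    Each estimate is weighted by 1/variance, so the more reliable modality
    dominates. The fused variance is never larger than the smallest input variance.
    """
    precisions = [1.0 / variance for _, variance in estimates]
    total = sum(precisions)
    value = sum(p * v for p, (v, _) in zip(precisions, estimates)) / total
    return value, 1.0 / total


# Hypothetical conflicting estimates of a sound source's position (metres).
visual = (10.0, 1.0)     # clear view: low variance
auditory = (14.0, 9.0)   # reverberant room: high variance
print(fuse([visual, auditory]))  # approximately (10.4, 0.9): vision dominates

fog = (10.0, 16.0)       # the same visual cue, degraded
print(fuse([fog, auditory]))     # the estimate shifts towards the auditory cue
```

Degrading the visual cue is enough to hand dominance to audition, which matches the task- and reliability-dependent dominance described in this subsection.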

5 A Cognitive Architecture for Semantic Sensor Web Solutions

In this section, we bring together the suggestions introduced in previous sections into a coherent model. The rationale for the layered approach to the system was presented in Section 2; in Section 3 we described the principles that make knowledge representations psychologically plausible; and Section 4 focused on the interaction of sensory data and knowledge representations.

Architectures proposed by the SSW community are either limited in focus (see [16] on pattern recognition, and [24] on metadata extraction for the SSW) or fail to define the interconnections between the architectural components. Sheth, for instance, proposes that, among other things, computing for human experience should provide solutions for pattern recognition, image analysis, casual text processing, and sentiment and intent detection [26]. Sheth, however, does not explain how these components should be related to each other or what their exact roles are.

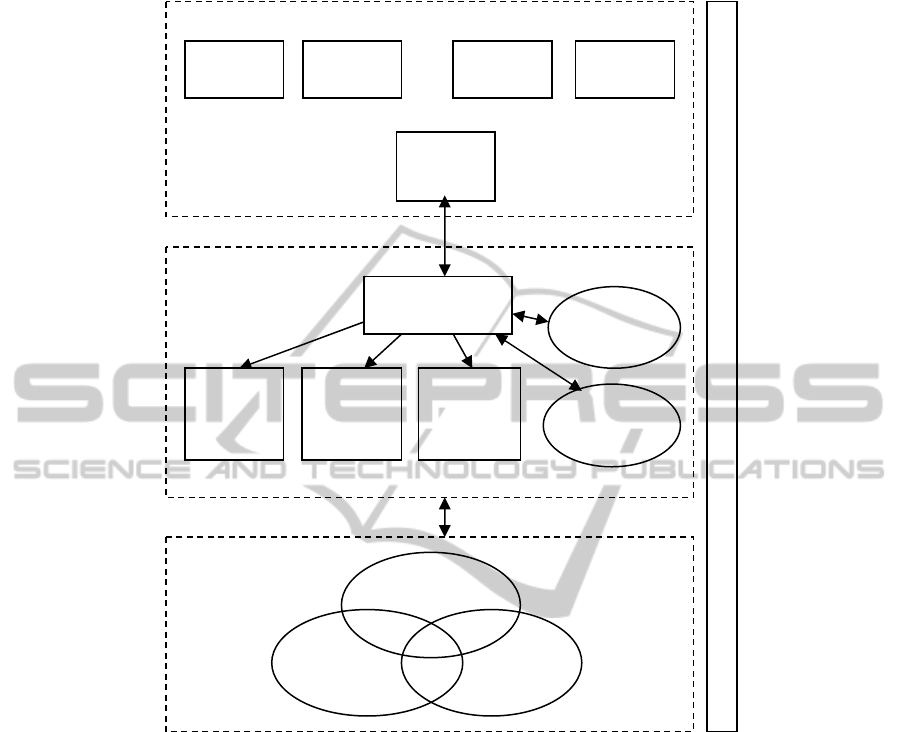

Based on the evidence from cognitive neuroscience and psycholinguistics presented in earlier parts of this paper, we propose that the following architectural components be included in an SSW solution (see Fig. 2):

• At the sensory level, SSW solutions should support the integration of data from multiple sensors, with a separate integration module that helps decide which data are more relevant and of better quality in the given situation.

• SSW solutions should provide redundant mechanisms for solving various tasks, and use the best approach depending on the type of task, the context and background knowledge. A separate Selector module should be responsible for deciding which mechanism should be preferred.

• SSW solutions should have rule-based modules to capture rules that may apply to the operation of the system, and a declarative knowledge base that subsumes the conceptual structure and caches solutions for frequently occurring problems. (Note that Fig. 2 does not aim to illustrate all possible symbolic modules; it only shows examples of such components.) It is preferable that the Selector first checks the availability of cached solutions, and delegates the task to rule-based modules only in the case of a negative response from the knowledge stores. The division between online computation and the retrieval of complete solutions to recurring tasks is the cornerstone of an efficient SSW solution.

• Knowledge representation technologies should combine symbolic ontologies with low-level representations based on the principles outlined in Section 3.

• SSW solutions will benefit from automated learning based on the principles of human concept acquisition. Learning should be sensitive to the statistical information inherent in the environment.
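The Selector's cache-first policy described in the list above can be sketched in Python: look the task up in the knowledge store, delegate to a rule-based module chosen by task type only on a miss, and cache the result so that the recurring task is answered by retrieval next time. The task format, module names and the toy modules are hypothetical.

```python
from typing import Any, Callable, Dict, Hashable, Tuple

Task = Tuple[str, Hashable]  # (task type, payload); payloads must be hashable to be cached


class Selector:
    """Cache-first dispatcher: knowledge store first, rule-based modules on a miss."""

    def __init__(self, modules: Dict[str, Callable[[Any], Any]]) -> None:
        self.modules = modules                      # rule-based modules, by task type
        self.knowledge_store: Dict[Task, Any] = {}  # cached solutions to recurring tasks
        self.online_computations = 0                # how often a module had to run

    def solve(self, task: Task) -> Any:
        if task in self.knowledge_store:            # 1. cached solution available?
            return self.knowledge_store[task]
        kind, payload = task
        module = self.modules.get(kind)
        if module is None:
            raise ValueError(f"no module registered for task type {kind!r}")
        result = module(payload)                    # 2. negative response: compute online
        self.online_computations += 1
        self.knowledge_store[task] = result         # 3. cache for next time
        return result


def extract_metadata(reading: Tuple[str, float]) -> Dict[str, Any]:
    """Hypothetical metadata-extraction module for (sensor id, value) readings."""
    sensor_id, value = reading
    return {"sensor": sensor_id, "value": value, "unit": "degC"}


selector = Selector({
    "metadata": extract_metadata,
    "overheat": lambda celsius: celsius > 30.0,  # hypothetical rule-based check
})
selector.solve(("overheat", 35.0))
selector.solve(("overheat", 35.0))   # served from the knowledge store
print(selector.online_computations)  # 1
```

Cache invalidation (for example when the context or a sensor's calibration changes) is not addressed in the text and is left out of the sketch.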

The architecture we propose is limited in its granularity: lower-level implementation details are beyond the scope of the present paper and are subject to future research. However, if future implementations were tested and validated according to standard measures, we would expect systems built on the cognitive architecture presented in this paper to be as efficient as solutions based on other types of architectural patterns, and to perform better with fuzzy input than non-cognitive systems. For most tasks, the separation of layers and the redundancy of processes are not likely to cause significant performance overhead, since the system operates with cached or, in the case of novel tasks, best-fit procedures. We expect experimental findings to support our claim that a cognitively inspired SSW solution will outperform traditional sensor systems when faced with conflicting, fuzzy or deficient environmental data, because it will include the strategies that humans employ in their day-to-day interactions with the environment.

Fig. 2. A cognitive architecture for SSW solutions. The sensory level delegates the integrated cross-modal input to symbolic processing. The Selector decides which method best suits the given task, and either chooses a rule-based solution (represented as rectangles) or retrieves the answer from the knowledge store, which comprises common-sense knowledge and domain-specific ontologies (represented as ellipses). Both rule-based methods and the knowledge store rely on the conceptual level, which integrates conceptual, visual and other representations. Cognitive processes underlie the workings of the system.

[Fig. 2 labels: symbolic level; cognitive processes: attention, comparison, perspective, constitution; conceptual level; images; concepts; other sensory data; sensory level; input from SN 1; input from SN 2 …; other input; visual input; multisensory integration; formal KR (ontology); common sense knowledge; pattern recognition; metadata extraction; Selector; syntactic processing]

6 Conclusions

In this paper, we have explored the characteristics of the mechanisms that the human mind employs to organize and structure conceptual knowledge. We have shown that human knowledge representation comprises three levels of abstraction: (1) the sensory (subsymbolic), (2) the rule-based symbolic and (3) the conceptual level. At the conceptual level, word meanings are distributed across several brain regions, which overlap with areas activated during the processing of sensorimotor stimuli. This evidence suggests that concepts are grounded in perception, and that associations exist across the mental representations of input from different modalities.

While RDF, OWL and other semantic web technologies fail to live up to the criteria of a psychologically plausible knowledge representation, they are nevertheless valid components of the symbolic layer of SSW solutions. In order to approximate human conceptualization, KRs similar to neural networks or vector space models should be positioned at the lowest level of the knowledge base architecture.

The same cognitive processes (attention, comparison, perspective and constitution) underlie language processing, the organization of visual, auditory and other sensory stimuli, and the construal of concept relations. The integration of sensory data from multiple modalities involves the activation of dedicated brain regions and processes, which entails that any SSW solution inspired by human cognition should provide adequate sensor integration modules or processes.

References

1. Brunner, J., Goudou, J., Gatellier, P., Beck, J., Laporte, C. (2009) SEMbySEM: a Framework for Sensors Management. In: SemSensWeb 2009 - 1st International Workshop on the Semantic Sensor Web, 1 June 2009, Crete, Greece.

2. Calvert, G. (2001) Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cerebral Cortex 11, pp. 1110-1123.

3. Chomsky, N. (1988) Generative Grammar: Its Basis, Development and Prospects. Studies in English Linguistics and Literature, Special Issue, Kyoto University of Foreign Studies.

4. Croft, W., Cruse, D. A. (2004) Cognitive Linguistics. Cambridge University Press, New York.

5. Cruse, D. A. (2000) Meaning in Language: An Introduction to Semantics and Pragmatics. Oxford University Press, New York.

6. Damasio, H., Grabowski, T. J., Tranel, D., Hichwa, R. D., Damasio, A. R. (1996) A neural basis for lexical retrieval. Nature 380, pp. 499-505.

7. Dietze, S., Domingue, J. (2009) Bridging between Sensor Measurements and Symbolic Ontologies through Conceptual Spaces. In: SemSensWeb 2009 - 1st International Workshop on the Semantic Sensor Web, 1 June 2009, Crete, Greece.

8. Duncan, J., Humphreys, G. (1989) Visual search and stimulus similarity. Psychological Review 96, pp. 433-458.

9. Farah, M. J., Hammond, K. M., Levine, D. N., Calvanio, R. (1988) Visual and spatial mental imagery: dissociable systems of representation. Cognitive Psychology 20, pp. 439-462.

10. Fodor, J. D., Ferreira, F. (eds.) (1998) Reanalysis in Sentence Processing. Kluwer Academic Publishers, Dordrecht.

11. Gärdenfors, P. (1994) Three levels of inductive inference. In: Prawitz, D., Skyrms, B., Westerståhl, D. (eds.): Logic, Methodology, and Philosophy of Science IX. Elsevier Science, Amsterdam.

12. Gerardin, E., Sirigu, A., Lehericy, S., Poline, J. B., Gaymard, B., Marsault, C., Agid, Y., Le Bihan, D. (2000) Partially overlapping neural networks for real and imagined hand movements. Cerebral Cortex 10, pp. 1093-1104.

13. Gómez, R. (2007) Statistical learning in infant language development. In: Gaskell, M. G. (ed.), The Oxford Handbook of Psycholinguistics, pp. 601-616. Oxford University Press, New York.

14. Harnad, S. (1990) The Symbol Grounding Problem. Physica D 42, pp. 335-346.

15. Hayes, J. et al. (2009) Views from the coalface: chemo-sensors, sensor networks and the semantic sensor web. In: SemSensWeb 2009 - 1st International Workshop on the Semantic Sensor Web, 1 June 2009, Crete, Greece.

16. Jiang, J. et al. (2010) Collaborative Localization in Wireless Sensor Networks via Pattern Recognition in Radio Irregularity Using Omnidirectional Antennas. Sensors 10(1), pp. 400-427.

17. Lakoff, G. (1992) The contemporary theory of metaphor. In: Ortony, A. (ed.), Metaphor and Thought (2nd edn.), pp. 202-251. Cambridge University Press, Cambridge.

18. Lewis, M., Cameron, D., Xie, S., Arpinar, I. B. (2006) ES3N: A Semantic Approach to Data Management in Sensor Networks. Semantic Sensor Networks Workshop of the 5th International Semantic Web Conference, 5-9 November 2006, Athens, Georgia, USA.

19. Marr, D. (1982) Vision. Freeman, New York.

20. OGC (2007) Open Geospatial Consortium. Available from: http://www.opengeospatial.org/

21. Paivio, A. (1990) Mental Representations: A Dual Coding Approach. Oxford University Press.

22. Pulvermüller, F. (2007) Brain processes of word recognition as revealed by neurophysiological imaging. In: Gaskell, M. G. (ed.), The Oxford Handbook of Psycholinguistics, pp. 119-140. Oxford University Press, New York.

23. Rosch, E., Lloyd, B. B. (eds.) (1978) Cognition and Categorization. Lawrence Erlbaum Associates, Hillsdale.

24. Sheth, A., Henson, C., Sahoo, S. S. (2008) Semantic Sensor Web. IEEE Internet Computing, July/August 2008, pp. 78-83.

25. Sheth, A. (2009) Citizen Sensing, Social Signals, and Enriching Human Experience. IEEE Internet Computing, July/August 2009, pp. 80-85.

26. Sheth, A. (2010) Computing for Human Experience: Semantics-Empowered Sensors, Services, and Social Computing on the Ubiquitous Web. IEEE Internet Computing, January/February 2010, pp. 88-91.

27. Ullman, M. T. (2007) The biocognition of the mental lexicon. In: Gaskell, M. G. (ed.), The Oxford Handbook of Psycholinguistics, pp. 267-287. Oxford University Press, New York.

28. Verhagen, J. V., Engelen, L. (2006) The neurocognitive bases of human multimodal food perception: Sensory integration. Neuroscience and Biobehavioral Reviews 30, pp. 613-650.

29. Wang, J. et al. (2010) Neural representation of abstract and concrete concepts: A meta-analysis of neuroimaging studies. Human Brain Mapping.

30. Weinert, R. (1995) The Role of Formulaic Language in Second Language Acquisition: A Review. Applied Linguistics 16(2), pp. 180-205.