A COMPUTATIONAL MODEL FOR CONSCIOUS VISUAL

PERCEPTION AND FIGURE/GROUND SEPARATION

Marc Ebner

Eberhard-Karls-Universität Tübingen, Wilhelm-Schickard-Institut für Informatik

Abt. Rechnerarchitektur, Sand 1, 72076 Tübingen, Germany

Stuart Hameroff

Departments of Anesthesiology and Psychology and Center for Consciousness Studies, The University of Arizona

Tucson, Arizona 85724, U.S.A.

Keywords:

Figure/ground segmentation, Spiking neurons, Consciousness, Gamma-oscillation, Lateral-coupling, Gap-junctions.

Abstract:

The human brain is able to perform a number of feats that researchers have not been able to replicate in artificial

systems. Unsolved questions include: Why are we conscious and how do we process visual information from

the input stimulus right down to the individual action? We have created a computational model of visual

information processing. A network of spiking neurons, a single layer, is simulated. This layer processes visual

information from a virtual retina. In contrast to the standard integrate and fire behavior of biological neurons,

we focus on lateral connections between neurons of the same layer. We assume that neurons performing the

same function are laterally connected through gap junctions. These lateral connections allow the neurons

responding to the same stimulus to synchronize their firing behavior. The lateral connections also enable the

neurons to perform figure/ground separation. Even though we describe our model in the context of visual

information processing, the methods described can also be applied to other kinds of information,

e.g. auditory.

1 MOTIVATION

To fully understand how cognitive information pro-

cessing works, we will have to replicate all essential

functions of the brain either in simulation or by building
an artifact. Only once we are able to build
an artificial entity that performs tasks similar to those
of the human brain will we have understood
how the brain actually works. Sensory perception,

motor control and learning are assumed to be a result

of the neural processing occurring inside the brain.

It is assumed that the processing occurs through so

called integrate-and-fire neurons. Such neurons in-

tegrate the electrical inputs received through axons

from other neurons. Once the activation of a neuron
reaches a certain threshold, it fires, i.e. it
sends an electrical impulse along its axon. An essential
marker of conscious cognition is the synchronized
electrical activity of neurons inside a particular
frequency band (30 to 90 Hz) of the electroencephalogram
(EEG), called gamma synchrony EEG
(Gray and Singer, 1989; Ribary et al., 1991). (Singer,

1999) gives a review on how gamma synchrony cor-

relates with perception and motor control. Gamma

synchrony is mediated largely by interdendritic gap

junctions. According to the Hameroff ‘conscious pi-

lot’ model (Hameroff, 2010), synchronized zones of

activation move through the brain as gap junctions

open and close. The synchronized zones convert non-

conscious cognition, i.e. cognition on auto-pilot, to

consciousness.

(Kouider, 2009) has reviewed several different

neurobiological theories of consciousness. Many

seemingly different theories of consciousness (e.g.

Tononi and Edelman’s reentrant dynamic core hypothesis
(Tononi and Edelman, 1998) or Lamme’s local
recurrence theory (Lamme, 2006)) assume recurrent
processing of information. (Zeki, 2007) has put

forward the microconsciousness theory. He suggests

that multiple consciousnesses are distributed across

processing sites and that attributes such as color, form

or motion are eventually bound together giving rise to


Ebner M. and Hameroff S.

A COMPUTATIONAL MODEL FOR CONSCIOUS VISUAL PERCEPTION AND FIGURE/GROUND SEPARATION.

DOI: 10.5220/0003123201120118

In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing (BIOSIGNALS-2011), pages 112-118

ISBN: 978-989-8425-35-5

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

macroconsciousness possibly also involving linguis-

tic and communication skills which would then be a

unified form of consciousness.

With this contribution we focus on Zeki’s micro-

consciousness using recurrent information process-

ing. We present a theoretical model on how a sheet

of neurons interacts laterally through gap junctions to

perceive and represent a visual scene. Even though

we only show results for the processing of visual in-

formation, the same method can also be used to per-

ceive auditory or haptic information. In our model,

neurons are connected laterally through gap junctions.

We assume that within a sheet of neurons, neurons

with a similar function are connected through such

gap junctions. Completely unrelated functions are not

connected through gap junctions. We model a single

sheet of laterally connected neurons processing visual

input from a virtual retina using real scenes as input.

The interconnected neurons are assumed to form

a resistive grid which is used to temporally and spa-

tially average the out-going spikes. The spatial av-

erage of the out-going signal is used as a feedback

signal which determines whether the gap junctions

between two adjacent neurons open or close. Gap

junctions open if the temporal average of the neuron’s
output is above the spatial average of the averaged
output, otherwise they close. This enables the

set of neurons to perform figure/ground separation. If

a gap junction between two adjacent neurons is open,

then these neurons synchronize their firing behavior.

Thus, eventually all of the neurons receiving input

from the “figure” will fire in synchrony. We show

how this works by presenting different photographs
to the sheet of neurons. The virtual retina gazes at these

photographs. As the object moves across the retina,

the object is extracted and tracked by a zone of syn-

chronized activity.

Our model only requires local connections be-

tween neurons. Global connections are not required.

Local connections have also been used by (Wang,

1995) and (König and Schillen, 1991) to establish

synchronous firing. Our model uses different firing

rates of segmented regions to distinguish between dif-

ferent objects, i.e. neurons of regions of different

sizes will be synchronized within a region but desyn-

chronized across regions. (Terman and Wang, 1995)

achieve desynchronization between different objects

using a global inhibitor while (Schillen and König,

1991) use long range excitatory delay connections.

(Zhao and Breve, 2008) have used chaotic oscillators

for scene segmentation. They segmented static input.

They worked with Wilson-Cowan neural oscillators

(Wilson and Cowan, 1972). In their model, neurons

which respond to the same object synchronize their

behavior while neurons responding to a different ob-

ject are in another chaotic orbit. (Eckhorn et al., 1990)

have simulated two one-dimensional layers of neu-

rons simulating results from cat visual cortex. They

also performed experiments with a moving stimulus.

However, they used long range feeding connections

between neurons of the same layer.

Our model is quite simple and shows how the syn-

chronized zones of activity, the neural correlate of

consciousness, arise and how they move around in a

sheet of neurons. In the brain, similar synchronized

zones of activity correlate with conscious perception

and control.

2 COMPUTATIONAL MODELING

Traditionally, only the spiking behavior of neurons

is modeled. The spiking behavior is assumed to be

the most relevant aspect of the neuron’s function. If

this is indeed the case, then the function of a neuron

can be replicated by only modeling the spiking behavior.
Other aspects, such as interactions at the level
of neurotransmitters and ion channels, are assumed to
be irrelevant and can be omitted in the computational
modeling. Eventually, large scale modeling of

all of the brain’s neurons may lead to a better under-

standing of how the brain functions (Izhikevich and

Edelman, 2008). The neuron is viewed as a functional

unit which integrates the input, and once a particular

threshold is reached, the neuron fires. This is the stan-

dard integrate and fire model (Gerstner and Kistler,

2002).

The input is received from axons of other neu-

rons. A voltage spike train travels along the axons.

This signal is received through the dendrites of a neu-

ron (and also the cell body/soma). The signal is inte-

grated over time, building up the so called activation

potential of the neuron. Once the activation potential

is high enough, i.e. above the firing-threshold, then

the neuron itself will fire. A spike is sent down along

the axon. This signal will then be received by other

neurons where the process continues.

The change of the activation potential $V_i$ of neuron $i$
is described by the following equation (Thivierge
and Cisek, 2008)

$$\tau \frac{dV_i}{dt} = -g_i (V_i - E_i) + I_{\mathrm{tonic}} + I_i + \sum_{j=1}^{N} w_{ij} K_j \qquad (1)$$

where $\tau$ is a time constant. Without any input, the cell
activation potential will slowly decay and eventually
reach the resting potential $E_i$. The factor $g_i$ is the leakage
conductance, i.e. the speed of the decay. An input
current from an external source can be modeled using
the term $I_i$. A tonic current can be specified through
$I_{\mathrm{tonic}}$. The input from other neurons $K_j$ is weighted
through factors $w_{ij}$ which describe the strength between
the neurons $i$ and $j$. This standard model lacks

an important ingredient: lateral connections between

neurons. Such lateral connections will allow a set of

neurons to perform figure/ground segmentation. They

will also cause neurons responding to the same object

to synchronize their firing behavior.
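To make Eq. (1) concrete, the following minimal sketch advances the activation potentials with an explicit Euler step. The function name `euler_step`, the step size `dt` and all numerical values are illustrative assumptions and are not taken from the cited model. Thresholding and spike generation are deliberately left out, since Eq. (1) only describes the sub-threshold dynamics.

```python
def euler_step(V, E, g, I_ext, I_tonic, W, K, tau, dt):
    """Advance all activation potentials by one explicit Euler step of Eq. (1).

    V, E, g, I_ext and K are lists with one entry per neuron,
    W[i][j] is the weight w_ij, I_tonic is a scalar tonic current.
    """
    n = len(V)
    V_next = []
    for i in range(n):
        # Weighted input from the other neurons: sum_j w_ij * K_j
        synaptic = sum(W[i][j] * K[j] for j in range(n))
        # Right-hand side of Eq. (1), divided by the time constant tau
        dV = (-g[i] * (V[i] - E[i]) + I_tonic + I_ext[i] + synaptic) / tau
        V_next.append(V[i] + dt * dV)
    return V_next


# Two uncoupled neurons without any input (hypothetical values):
# both potentials relax towards their resting potential E_i.
V = [1.0, -0.5]
E = [0.0, 0.0]
g = [1.0, 1.0]
W = [[0.0, 0.0], [0.0, 0.0]]
for _ in range(2000):
    V = euler_step(V, E, g, I_ext=[0.0, 0.0], I_tonic=0.0,
                   W=W, K=[0.0, 0.0], tau=10.0, dt=0.1)
decayed = V
```

With a constant external current the same step converges to $E_i + I_i / g_i$, which is a quick way to check that the sign of the leakage term is correct.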

3 NEURON WITH LATERAL

CONNECTIONS

In our model, the neuron performs a temporal integra-

tion of the incoming spikes. The activation of the neu-

ron rises until a particular threshold is met. Once the

threshold is met, the neuron fires. This is exactly the

traditional integrate-and-fire-model. Extending the

standard model, a neuron is connected to nearby neu-

rons performing a similar function through gap junc-

tions. The connected neurons form a resistive grid

because each gap junction can be modeled as a resis-

tor (Herault, 1996; Veruki and Hartveit, 2002). This

connection between two neurons, i.e. the resistor, is

always there. It is an unconditional connection. How-

ever, a gap junction may also be in one of two states:

open or closed. Which state is chosen is voltage de-

pendent. (Traub et al., 2001) have also used a volt-

age dependent conductance of gap junctions in their

simulations. If a gap junction is open, then an addi-

tional resistive connection is created between the two

neurons allowing for the connected neurons to col-

lectively integrate their input. Because of this condi-

tional resistive coupling, connected neurons synchro-

nize their firing behavior. They fire in synchrony, i.e.

we obtain the so called gamma synchrony. The neu-

rons synchronize in the same way that chaotic or non-

linear electrical circuits synchronize if a signal is ex-

changed between them (Carroll and Pecora, 1991; ?;

Volos et al., 2008).

The output spikes of the neuron are temporally in-

tegrated and spatially averaged because of the resis-

tive grid. The spatially averaged output forms a feed-

back signal. This feedback signal is used to deter-

mine whether gap junctions open or close. We call

this feedback signal the sync-threshold. If the tempo-

ral average of the neuron’s output is above the sync-

threshold, then its gap junctions open. If the tempo-

ral average of the neuron’s output is below the sync-

threshold, then the gap junctions close.

Figure 1 shows our model of a neuron with lateral
connections. The neuron illustrates the different
functional components. The temporal averaging (indicated
by the box “∫dt”) occurs first. The spines
extending laterally to nearby neurons illustrate lateral
connections caused by gap junctions. In the illustration,
we have connections to four other neurons.
These connections are of course not
necessarily uniformly distributed in an actual neuron.
For the biological neuron, the gap junctions are actually
found between dendrites of neighboring neurons.
The darker lateral connections (see Figure 1)
form the conditional resistive grid which can be in
one of two states: open or closed. These connections
cause connected neurons to fire in synchrony (indicated
by the box “∫dx”). If the neuron activation is
sufficiently high (indicated by the “Threshold”-box),
then the neuron fires (indicated by the box with the
spike), sending a spike down the axon. The outgoing
spikes are temporally integrated (indicated by the
upper “∫dt”-box). This signal is also spatially integrated
(indicated by the outer circular ring). If the
temporally integrated signal is above this spatially
integrated signal, then the gap junctions open; otherwise
they close. Even though we model the behavior
just described using only a single neuron, it could be
that these functions are actually spread over multiple
neurons inside a cortical column. (Mountcastle,
1997) gives an excellent review of the columnar organization
of the neocortex.

[Figure 1 diagram labels: dendrites; temporal integration of input; spatial averaging of input; unconditional resistive coupling to nearby neuron; conditional resistive coupling to nearby neuron; gap junction mode: open or closed; thresholding; spike generation; axon; temporal averaging of output; spatial averaging of output; sync-threshold.]

Figure 1: Artificial neuron. Each neuron is laterally connected
to other neurons (via gap junctions) which perform
a similar function.

4 EXPERIMENT ON VISUAL

FIGURE/GROUND

SEPARATION

In order to test our model, we have created a virtual
retina to provide visual input to a simulated sheet of
1000 neurons. The neurons are randomly distributed
over a 100 × 100 × 10 volume. Each neuron $i$ receives input
from the corresponding position of the retina with
a small random displacement. Let $(x_i, y_i, z_i)$ be the
position of the neuron. We assume coordinates to be
normalized to the range $[0,1]$. Then neuron $i$ will receive
its visual input from position $(w x_i + x_r, h y_i + y_r)$
where $w$ and $h$ are the width and height of the original
image in pixels and $x_r$ and $y_r$ are random offsets from
the range $[-1,1]$. The third dimension ($z$) is not really
required and the model would also work if we spread
out our sheet of neurons exactly on a two-dimensional
plane. However, biological neurons are assumed not
to be perfectly positioned on a two-dimensional plane.
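The mapping from neuron positions to retina positions can be sketched as follows. This is only an illustration under stated assumptions: the helper names `make_neurons` and `retina_position`, the 640 × 480 image size, the fixed random seed, and the rounding and clamping of sample positions to valid pixel indices are all hypothetical choices that the text does not specify.

```python
import random


def make_neurons(n, rng):
    """Place n neurons at random normalized positions (x, y, z) in [0, 1]^3."""
    return [(rng.random(), rng.random(), rng.random()) for _ in range(n)]


def retina_position(neuron, width, height, rng):
    """Return the pixel (col, row) sampled by a neuron: (w*x + x_r, h*y + y_r)."""
    x, y, _z = neuron                # z is not needed for the sampling position
    x_r = rng.uniform(-1.0, 1.0)     # random offset from [-1, 1]
    y_r = rng.uniform(-1.0, 1.0)
    # Assumption: round to the nearest pixel and clamp to the image borders.
    col = min(max(int(round(width * x + x_r)), 0), width - 1)
    row = min(max(int(round(height * y + y_r)), 0), height - 1)
    return col, row


rng = random.Random(0)               # fixed seed, purely for reproducibility
neurons = make_neurons(1000, rng)
positions = [retina_position(nrn, 640, 480, rng) for nrn in neurons]
```

Each entry of `positions` can then be used to look up the input value of the corresponding neuron in the current retina image.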

The processing that we simulate is assumed to

take place inside some higher area of the visual cor-

tex. It could take place in V1. However, accord-

ing to (Crick and Koch, 1995) humans do not ap-

pear to be aware of the processing occurring inside

V1. Thus, we assume that higher visual areas are in-

volved. Higher visual areas have to be involved any-

way if other features such as form or motion are used

(Zeki, 1978; Zeki, 1993).

The retinal receptors (cones) respond to light in
the red, green and blue parts of the spectrum (Dartnall
et al., 1983). By the time the visual stimulus has
reached the visual cortex, it has been transformed by color
opponent and double-opponent cells. The visual stimulus
is processed inside a rotated coordinate system
where the three axes are: bright-dark, red-green,
and yellow-blue (Tovée, 1996). In our experiments,
we focus on the bright-dark stimulus, the lightness.
Lightness $L$ is computed from the non-linear
pixel intensities $R$, $G$, $B$ using (Poynton, 2003)

$$L = 0.299R + 0.587G + 0.114B. \qquad (2)$$
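As a small illustration of Eq. (2), the sketch below computes the lightness of a pixel and of a whole image. The function names and the representation of an image as nested lists of `(R, G, B)` tuples with intensities normalized to [0, 1] are assumptions made for this example only.

```python
def lightness(r, g, b):
    """Weighted sum of the non-linear R, G, B intensities as in Eq. (2)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def lightness_image(rgb_rows):
    """Apply Eq. (2) to every pixel of an image given as rows of (R, G, B) tuples."""
    return [[lightness(r, g, b) for (r, g, b) in row] for row in rgb_rows]


# A 1 x 3 test image: pure red, pure green, pure blue (hypothetical input).
tiny = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]]
tiny_lightness = lightness_image(tiny)
```

Because the three weights sum to one, a white pixel maps to the maximum lightness and green contributes most strongly to the bright-dark axis.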

Figure 2 shows the algorithm that is run by each
neuron. Each neuron $i$ is described by the following
state variables: activation $a_i$, threshold $t_i$, output
voltage $o_i$, temporal average of out-going spikes $\tilde{a}_i$,
spatial average $\bar{a}_i$ of temporal average. The initialization
of these variables can actually be arbitrary as
the activation and the output voltage slowly decay to
zero. The parameters used by the algorithm are set
as follows: $\alpha_a = 0.01$ decay of the activation potential,
$\alpha_o = 0.5$ decay of the output spiking voltage,
$\alpha_t = 0.01$ temporal averaging factor, $\alpha_s = 0.0001$ spatial
averaging factor, $\varepsilon = 0.001$ activation leakage to
adjacent neurons upon firing, $\gamma = 0$ reduction of firing
threshold, $\omega = 1.999$ factor for over-relaxation,
$\Delta t_r = 10$ refractory period of neuron, $N_s$ number of
neurons in sub-network, $w_{ij} = 1$ weight between neurons
$i$ and $j$. For the experiments described here, we
have used positive unit weights. The weights can of
course in principle be positive or negative. Negative
weights would correspond to inhibitory signals which
reduce the input to a neuron. Using neural learning,
the weights can be dynamically tuned to a given problem.
However, for our sample problem, we do not
require neural learning. The dendritic input of a simulated
neuron $j$ is the lightness $L$ at the corresponding
position $(x_j, y_j)$ of the virtual retina. Thus we have,
$o_j = L(x_j, y_j)$.

    o_i = (1 − α_o) o_i                      // decay of output
    a_i = (1 − α_a) a_i                      // decay of activation
    a_i = a_i + α_a ∑_j w_ij o_j             // integrate input
    if Neuron i fired within ∆t_r return
    N = { j | Neuron j is laterally connected to neuron i via open gap junction }
    a′ = a_i; n = 1                          // initialize spatial averaging
    ∀ j ∈ N: if Neuron j did not fire within ∆t_r { a′ = a′ + a_j; n = n + 1 }
    a_i = a′/n                               // spatial averaging completed
    // distribute spatial average to neighboring neurons
    ∀ j ∈ N: if Neuron j did not fire within ∆t_r { a_j = a_i }
    a_i = max[−1, a_i]                       // limit activation
    t_i = max[0, 1 − γ · N_s]                // compute fire-threshold
    if (a_i > t_i) {                         // does the neuron fire?
        a_i = 0                              // reset activation
        o_i = 1 − ε|N|                       // output rises to 1
        ∀ j ∈ N: a_j = a_j + ε               // distribute leakage
    }
    ã_i = (1 − α_t) ã_i + α_t o_i            // temporal average
    ā′′ = ā_i                                // save previous result
    ā′ = (1/(1 + |N|)) ∑_{j∈N} ā_j           // compute spatial average
    ā_i = (1 − α_s) ā′ + α_s ã_i             // add temporal average
    ā_i = (1 − ω) ā′′ + ω ā_i                // use over-relaxation
    if (ã_i > ā_i) open gap junctions
    else close gap junctions

Figure 2: Algorithm run by each neuron i.
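The per-neuron update of Figure 2 can be sketched in Python as shown below. This is a hedged illustration, not the authors' implementation. Several details are assumptions that the text leaves open: a junction between two neurons is treated as open only when both neurons have opened their gap junctions, the sync-threshold is averaged over all lateral neighbors (following the statement that the unconditional resistive coupling is always present), the weighted input sum is passed in as a single number `drive`, and the chain topology and drive values of the demonstration are arbitrary.

```python
# Parameter values as listed in the text.
ALPHA_A, ALPHA_O, ALPHA_T, ALPHA_S = 0.01, 0.5, 0.01, 0.0001
EPS, GAMMA, OMEGA, T_REFRACTORY = 0.001, 0.0, 1.999, 10


class Neuron:
    def __init__(self, neighbors):
        self.neighbors = neighbors    # indices of laterally adjacent neurons
        self.a = 0.0                  # activation a_i
        self.o = 0.0                  # output voltage o_i
        self.a_tmp = 0.0              # temporal average of outgoing spikes
        self.a_sp = 0.0               # spatial average (sync-threshold)
        self.open = False             # gap junction mode of this neuron
        self.last_fired = -10**9      # time step of the most recent spike


def step(i, neurons, drive, t, n_sub=1):
    """Update neuron i once, following Figure 2; return True if it fired."""
    nrn = neurons[i]
    nrn.o *= 1.0 - ALPHA_O                        # decay of output
    nrn.a *= 1.0 - ALPHA_A                        # decay of activation
    nrn.a += ALPHA_A * drive                      # integrate weighted input
    if t - nrn.last_fired < T_REFRACTORY:         # still refractory
        return False
    # Assumption: a junction is open when both of its neurons are in open mode.
    open_nb = [j for j in nrn.neighbors if nrn.open and neurons[j].open]
    ready = [j for j in open_nb
             if t - neurons[j].last_fired >= T_REFRACTORY]
    mean_a = (nrn.a + sum(neurons[j].a for j in ready)) / (1 + len(ready))
    nrn.a = mean_a
    for j in ready:                               # share the averaged activation
        neurons[j].a = mean_a
    nrn.a = max(-1.0, nrn.a)                      # limit activation
    threshold = max(0.0, 1.0 - GAMMA * n_sub)     # firing threshold t_i
    fired = nrn.a > threshold
    if fired:
        nrn.a = 0.0                               # reset activation
        nrn.o = 1.0 - EPS * len(open_nb)          # output rises to (almost) 1
        for j in open_nb:
            neurons[j].a += EPS                   # distribute leakage
        nrn.last_fired = t
    nrn.a_tmp = (1.0 - ALPHA_T) * nrn.a_tmp + ALPHA_T * nrn.o
    previous = nrn.a_sp
    # Assumption: the spatial average uses all lateral neighbors.
    nb = nrn.neighbors
    local = sum(neurons[j].a_sp for j in nb) / (1 + len(nb))
    nrn.a_sp = (1.0 - ALPHA_S) * local + ALPHA_S * nrn.a_tmp
    nrn.a_sp = (1.0 - OMEGA) * previous + OMEGA * nrn.a_sp   # over-relaxation
    nrn.open = nrn.a_tmp > nrn.a_sp               # open or close gap junctions
    return fired


# Illustrative run on a chain of four neurons with arbitrary drive values.
neurons = [Neuron([j for j in (i - 1, i + 1) if 0 <= j < 4]) for i in range(4)]
drives = [2.0, 2.0, 0.2, 0.2]
spike_counts = [0, 0, 0, 0]
for t in range(2000):
    for i in range(4):
        spike_counts[i] += step(i, neurons, drives[i], t)
```

Neurons are updated sequentially and in place, so the over-relaxation step behaves like a successive over-relaxation sweep over the resistive grid. With the firing threshold at 1, a neuron in this sketch only fires if its drive, possibly raised by shared activation, exceeds 1.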

The neuron performs temporal integration of the

input and also spatial integration through the resis-

tive grid formed by laterally connected neurons. The

feedback signal of the output (again temporally in-

tegrated) performs an adaptive determination of the

threshold which can be used to separate figure from

ground. Since we use lightness as an input, areas

with high lightness correspond to the object and areas

with low lightness will correspond to the background.

Such a separation can of course also be achieved with

a standard integrate and fire neuron which is not lat-

erally connected to other neurons. However, in this

case, the response of the neuron will no longer be

adaptive to the input. Because of the output feedback,

our grid of neurons is able to tune its response to the

available input. Instead of performing a bright-dark

separation, our model can also be used to perform a

separation with respect to color, motion or texture. In


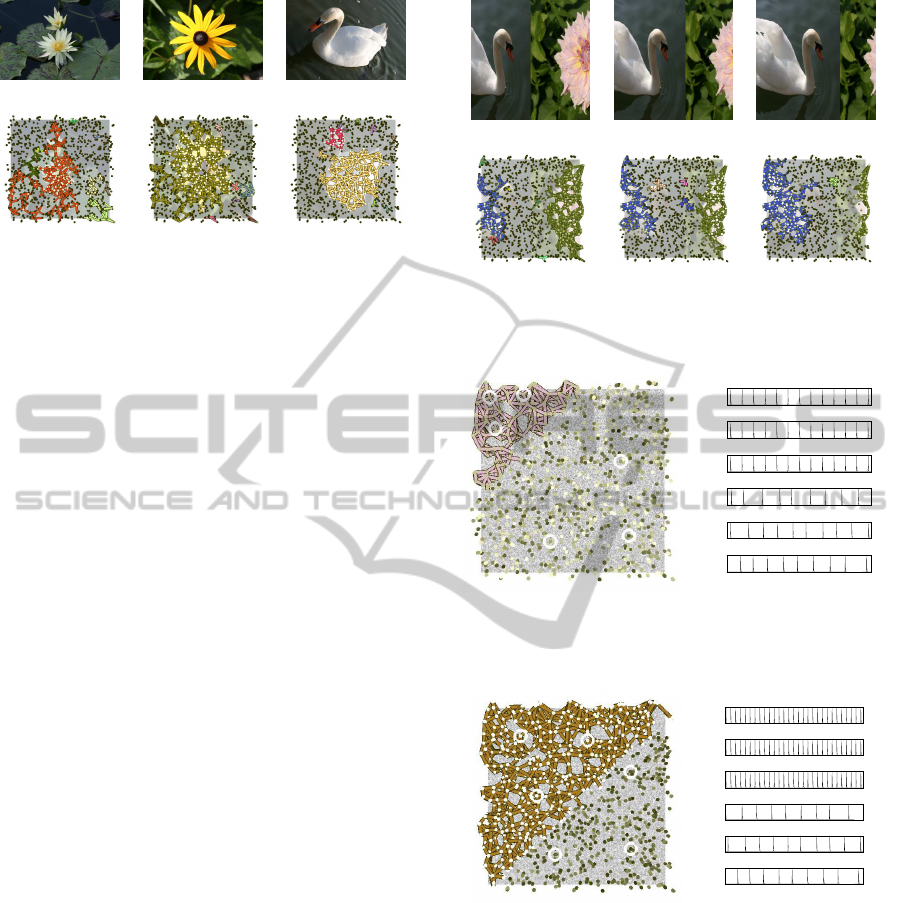

Figure 3: Figure/ground separation. (a)-(c) Input images.
(d)-(f) The sheet of neurons responds to input from a simulated
retina.

this case, the sheet of neurons would be located in an

area receiving input from V4 (color) or V5 (motion).

Or it could be used for adaptive thresholding of audi-

tory information.

The sheet of neurons receives input from the vir-

tual retina. A number of still images are moved across

the virtual retina. Figure 3(a)-(c) shows three of the

images that the retina was exposed to. The input im-

age is shown in the background. Each dot in the fore-

ground corresponds to a single neuron of our sheet of

neurons which receives input from the virtual retina.

The output of the sheet of neurons is shown in Fig-

ure 3(d)-(f) for the same three images. Open gap

junctions are drawn as colored lines between neu-

rons. All connections which belong to the same clus-

ter are drawn with the same color. This indicates syn-

chronous firing. The neurons responding to the “fig-

ure” have their gap junctions open. Thus, the figure

has been separated from the ground. Figure 4 shows

how the same sheet of neurons responds to a moving
stimulus. Even though the object moves across

the retina, the same sub-network (as indicated by the

color of the sub-network) responds to the same stimu-

lus. Higher visual areas are able to process this stim-

ulus using visual servoing techniques (Chaumette and

Hutchinson, 2006; Chaumette and Hutchinson, 2007)

for behaviors such as grasping.

Figure 5 and Figure 6 show the output of the

same sheet of neurons when the gap junctions of sev-

eral neurons were deliberately opened and a random

input was provided to the virtual retina. The neurons

located within the connected sub-network synchro-

nize their firing behavior. In order to demonstrate the

effect of the size of the sub-network on the firing fre-

quency, the parameter γ was set to 0.001. Hence, the

firing frequency depends on the size of the connected

sub-network. This can be seen when comparing the

spiking frequencies of neurons (b), (c), (d) shown in

Figure 5 and Figure 6. Neurons from the connected
sub-network shown in Figure 5 have a lower firing
frequency because the size of the sub-network
is smaller compared to the size of the sub-network
shown in Figure 6. Neurons from the connected
sub-network shown in Figure 6 have a higher firing
frequency. The difference in the firing frequency can be
used by higher visual areas to discern different objects.

Figure 4: Response to moving stimulus, panels (a)-(f). The connected
sub-network follows the object.

Figure 5: (a) random input stimulus. (b-d) synchronous firing
behavior of 3 neurons from the upper left area. (e-g)
asynchronous firing of 3 neurons with closed gap junctions.
[Panels (b)-(g): axes labeled V and s.]

Figure 6: (a) random input stimulus. (b-d) synchronous firing
behavior of 3 neurons from the upper left area. (e-g)
asynchronous firing of 3 neurons with closed gap junctions.
[Panels (b)-(g): axes labeled V and s.]
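The dependence of the firing frequency on the sub-network size can be illustrated with a short calculation based on the threshold rule $t_i = \max[0, 1 - \gamma N_s]$ and the activation update of the algorithm. The sketch below rests on simplifying assumptions that are not part of the paper: the neuron receives a constant input `drive`, and activation sharing and leakage between neurons are ignored, so that after $n$ steps the activation is $\text{drive} \cdot (1 - (1 - \alpha_a)^n)$. The function names and the example sizes are hypothetical.

```python
import math

ALPHA_A = 0.01       # decay of the activation potential (from the parameter list)
T_REFRACTORY = 10    # refractory period in time steps (from the parameter list)


def fire_threshold(n_sub, gamma=0.001):
    """Firing threshold t = max(0, 1 - gamma * N_s)."""
    return max(0.0, 1.0 - gamma * n_sub)


def steps_between_spikes(n_sub, drive, gamma=0.001):
    """Time steps an isolated neuron needs to charge from 0 past its threshold.

    Assumes a constant input `drive` and ignores lateral sharing and leakage.
    """
    t = fire_threshold(n_sub, gamma)
    if drive <= t:
        return math.inf   # activation saturates at `drive` and never exceeds t
    # Smallest n with drive * (1 - (1 - ALPHA_A)**n) > t
    n = math.log(1.0 - t / drive) / math.log(1.0 - ALPHA_A)
    # The neuron keeps integrating while refractory but cannot fire earlier.
    return max(T_REFRACTORY, math.floor(n) + 1)


small = steps_between_spikes(n_sub=50, drive=1.0)    # smaller sub-network
large = steps_between_spikes(n_sub=200, drive=1.0)   # larger sub-network
```

Under these assumptions the larger sub-network has the lower threshold and therefore the shorter interval between spikes, which is the qualitative effect described for Figure 5 and Figure 6.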


5 CONCLUSIONS

In standard neural modeling, neurons are assumed to

show an integrate-and-fire-behavior. We have devel-

oped a computer simulation which also takes lateral

connections between neurons into account. Neurons

having the same function, i.e. neurons responding to

input in the same way, are assumed to be laterally con-

nected through gap junctions. The neurons integrate

their input. If this activation of the neuron is large

enough, then the neuron fires. The generated spike

train of the neuron is temporally and spatially inte-

grated. If the temporal average of the output is above

the spatial average, then the neuron opens its

gap junctions to nearby neurons. Once this happens,

then the connected neurons synchronize their firing

behavior. This is a marker of consciousness. We

have shown how a virtual sheet of neurons responds

to visual input on a simulated retina segmenting fig-

ure from ground. Higher brain areas can use this data

for behaviors such as reaching or grasping.

REFERENCES

Carroll, T. L. and Pecora, L. M. (1991). Synchronizing

chaotic circuits. IEEE Trans. on Circuits and Systems,

38(4):453–456.

Chaumette, F. and Hutchinson, S. (2006). Visual servo con-

trol part I: Basic approaches. IEEE Robotics & Au-

tomation Magazine, 13(4):82–90.

Chaumette, F. and Hutchinson, S. (2007). Visual servo con-

trol part II: Advanced approaches. IEEE Robotics &

Automation Magazine, 14(1):109–118.

Crick, F. and Koch, C. (1995). Are we aware of neural

activity in primary visual cortex? Nature, 375:121–

123.

Dartnall, H. J. A., Bowmaker, J. K., and Mollon, J. D.

(1983). Human visual pigments: microspectrophoto-

metric results from the eyes of seven persons. Proc.

R. Soc. Lond. B, 220:115–130.

Eckhorn, R., Reitboeck, H. J., Arndt, M., and Dicke, P.

(1990). Feature linking via synchronization among

distributed assemblies: Simulations of results from cat

visual cortex. Neural Comput., 2:293–307.

Gerstner, W. and Kistler, W. (2002). Spiking Neuron Mod-

els. Cambridge University Press, Cambridge, UK.

Gray, C. M. and Singer, W. (1989). Stimulus-specific neu-

ronal oscillations in orientation columns of cat visual

cortex. PNAS, 86:1698–1702.

Hameroff, S. (2010). The “conscious pilot” – dendritic syn-

chrony moves through the brain to mediate conscious-

ness. Journal of Biological Physics, 36:71–93.

Herault, J. (1996). A model of colour processing in the

retina of vertebrates: From photoreceptors to colour

opposition and colour constancy phenomena. Neuro-

computing, 12:113–129.

Izhikevich, E. M. and Edelman, G. M. (2008). Large-scale

model of mammalian thalamocortical systems. PNAS,

105(9):3593–3598.

König, P. and Schillen, T. B. (1991). Stimulus-dependent

assembly formation of oscillatory responses: I. syn-

chronization. Neural Comput., 3:155–166.

Kouider, S. (2009). Neurobiological theories of conscious-

ness. In Banks, W. P., editor, Encyclopedia of Con-

sciousness, pages 87–100. Elsevier.

Lamme, V. A. F. (2006). Towards a true neural stance

on consciousness. Trends in Cognitive Sciences,

10(11):494–501.

Mountcastle, V. B. (1997). The columnar organization of

the neocortex. Brain, 120:701–722.

Pecora, L. M. and Carroll, T. L. (1990). Synchronization in

chaotic systems. Phys. Rev. Lett., 64(8):821–824.

Poynton, C. (2003). Digital Video and HDTV. Algorithms

and Interfaces. Morgan Kaufmann Publishers.

Ribary, U., Ioannides, A. A., Singh, K. D., Hasson, R.,
Bolton, J. P. R., Lado, F., Mogilner, A., and Llinás,
R. (1991). Magnetic field tomography of coherent
thalamocortical 40-Hz oscillations. PNAS, 88:11037–11041.

Schillen, T. B. and König, P. (1991). Stimulus-dependent

assembly formation of oscillatory responses: II.

desynchronization. Neural Comput., 3:167–178.

Singer, W. (1999). Neuronal synchrony: A versatile code

for the definition of relations? Neuron, 24:49–65.

Terman, D. and Wang, D. (1995). Global competition and

local cooperation in a network of neural oscillators.

Physica D, 81:148–176.

Thivierge, J.-P. and Cisek, P. (2008). Nonperiodic synchro-

nization in heterogeneous networks of spiking neu-

rons. The Journal of Neuroscience, 28(32):7968–

7978.

Tononi, G. and Edelman, G. M. (1998). Consciousness and

complexity. Science, 282:1846–1851.

Tovée, M. J. (1996). An introduction to the visual system.

Cambridge University Press, Cambridge.

Traub, R. D., Kopell, N., Bibbig, A., Buhl, E. H., LeBeau, F.

E. N., and Whittington, M. A. (2001). Gap junctions

between interneuron dendrites can enhance synchrony

of gamma oscillations in distributed networks. The

Journal of Neuroscience, 21(23):9478–9486.

Veruki, M. L. and Hartveit, E. (2002). All (rod) amacrine

cells form a network of electrically coupled interneu-

rons in the mammalian retina. Neuron, 33:935–946.

Volos, C. K., Kyprianidis, I. M., and Stouboulos, I. N.

(2008). Experimental synchronization of two resis-

tively coupled Duffing-type circuits. Nonlinear Phe-

nomena in Complex Systems, 11(2):187–192.

Wang, D. (1995). Emergent synchrony in locally coupled

neural oscillators. IEEE Trans. on Neural Networks,

6(4):941–948.


Wilson, H. R. and Cowan, J. D. (1972). Excitatory and in-

hibitory interactions in localized populations of model

neurons. Biophysical Journal, 12:1–24.

Zeki, S. (1993). A Vision of the Brain. Blackwell Science,

Oxford.

Zeki, S. (2007). A theory of micro-consciousness. In Vel-

mans, M. and Schneider, S., editors, The Blackwell

companion to consciousness, pages 580–588, Malden,

MA. Blackwell Publishing.

Zeki, S. M. (1978). Review article: Functional specialisa-

tion in the visual cortex of the rhesus monkey. Nature,

274:423–428.

Zhao, L. and Breve, F. A. (2008). Chaotic synchronization

in 2D lattice for scene segmentation. Neurocomput-

ing, 71:2761–2771.
