A “CONTEXT EVALUATOR” MODEL FOR A MULTIMODAL USER INTERFACE IN DRIVING ACTIVITY

Jesús Murgoitio, Arkaitz Urquiza, Maider Larburu and Javier Sánchez

Robotiker - Tecnalia, Parque Tecnológico-Modulo 202, E-48170 Zamudio, Spain

Keywords: User interface, Automotive, Multimodality, Context evaluation, Ambient intelligence.

Abstract: Focused on new HMI for vehicles, this paper explains the methodology followed by Tecnalia to evaluate the context from sensor data, in order to achieve the best usability and ubiquity levels at any given moment and, ultimately, to optimize the driving task from the safety point of view. The paper is organised as follows. Section 1 introduces the main concepts, multimodality and Ambient Intelligence, and section 2 presents the two projects related to the work shown here. Section 3 describes the methodology used to obtain an evaluation of the context, as required by a car HMI with multimodal capabilities. Finally, section 4 presents a particular “Context evaluator” in order to illustrate the methodology and report the work carried out.

1 INTRODUCTION

In order to decrease the number of accidents while also addressing sustainability, the MARTA project (hereafter MARTA) kicked off in September 2007. MARTA is an initiative of the Spanish automotive industry, led by the Tier 1 supplier Ficosa International, with contributions from relevant partners such as SEAT Technical Centre and the leading telecommunications operator Telefónica. The project is supported by the Spanish Ministry of Science and Innovation through the CENIT Program, managed by the public organisation Centre for the Development of Industrial Technology (CDTI). MARTA focuses on the study of the scientific bases and technologies of transportation for the 21st century that will lead to an ITS (Intelligent Transportation System). In particular, the main objective of MARTA is vehicle-to-vehicle and vehicle-to-infrastructure communications, in order to develop new technological services and solutions to improve road mobility. These intelligent systems of the future will contribute to decreasing the number of accidents, to assisting drivers so as to reduce energy consumption and, finally, to providing on-board services for better information and for minimizing traffic congestion.

SEAT Technical Centre has an important and participative role in MARTA, as the integral vehicle development centre of the car manufacturer SEAT. This paper reports the Tecnalia research developed specifically under the SEAT Technical Centre HMI research activities inside MARTA, in which Tecnalia is involved in close collaboration with Cidaut R&D Center and Rücker-Lypsa Engineering.

Developments in recent years in the field of electronics and applications in the automotive sector (today around a third of the overall cost of a car is due to electronics) have made it possible to integrate new technologies within vehicles, such as mobile phones, global positioning (GPS) or advanced driver assistance systems (ADAS), among others.

Thus, as new applications and functionalities are added to cars, the number of devices is increasing, as is the quantity of information presented to the driver. Consequently, there is an increasing demand for more efficient control architectures and technologies, especially for managing the data provided to the driver by the HMI (Human Machine Interface), mainly so as not to distract the driver from the main activity, driving, and to maintain or improve safety for everybody involved: driver, car passengers and pedestrians.

Murgoitio J., Urquiza A., Larburu M. and Sánchez J.
A “CONTEXT EVALUATOR” MODEL FOR A MULTIMODAL USER INTERFACE IN DRIVING ACTIVITY.
DOI: 10.5220/0003183206170624
In Proceedings of the 3rd International Conference on Agents and Artificial Intelligence (ICAART-2011), pages 617-624
ISBN: 978-989-8425-40-9
Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Since so much information can have a strong negative influence if it is not correctly handled and displayed, particularly in terms of decreasing safety, Tecnalia has been working on a particular methodology and the corresponding “Context Model” solution. This solution is oriented to evaluating the context and then providing information to support decisions about “what data” to show, “where” (screens, head-up displays, speakers, haptic pedals, and so on), and “when”, taking the following two essential concepts into account:

Multimodality: Multimodality is regarded as the characteristic which allows for human-machine interaction on two levels. Firstly, users with different skills can interact with machines via adapted Input/Output devices. Secondly, the output can be adapted to the user context for different environments, e.g. driving activity. Both of these extend and improve the HMI.

AmI: AmI is the abbreviation for the concept of “Ambient Intelligence”, the words chosen by ISTAG (Information Society Technologies Advisory Group) as a guiding vision to give an overall direction to Europe’s Information Society Technologies programme. AmI stresses the importance of social and human factors, and it is at the forefront of a process which introduces technology into people’s lives in such a way that its introduction never feels like a conscious learning curve: no special interface is needed because human experience is already a rich ‘manual’ of ways of interfacing to changing systems and services.

So, focusing on vehicle driving and taking advantage of new technological solutions, e.g. voice recognition, head-up displays, touch screens, cameras, haptic pedals, and so on, Tecnalia proposes a methodology designed to evaluate the context from the data of several sensors, in order to obtain the best usability and ubiquity levels at each particular moment and, finally, to optimize the driving task, mainly from the safety point of view. Moreover, a particular model applied within a project is also explained.

2 BACKGROUND

In line with the above, Tecnalia is currently working on several projects that apply paradigms such as multimodality and AmI to car HMI. Two of these are the following:

MIDAS (Oct’08-Sep’11): “Multimodal Interfaces for Disabled and Ageing Society”. MIDAS is an ITEA2 project aimed at improving the autonomy, independence, and self-esteem of elderly people and of people with different kinds of disabilities (visual, hearing and mobility impairments) by introducing the most advanced technologies into their everyday life by means of simple, predictable and unobtrusive multimodal interfaces. MIDAS is focused on home and driving scenarios, representative of indoor and outdoor environments. Tecnalia is involved in the driving scenarios, mainly in the application of multimodality to car HMI with a view to simplifying the user interface.

MARTA (Jul’07-Dec’10): “Automation and mobility for advanced transport networks”. A national project in Spain focused on mobility and transport systems and on how to apply ICT to the automotive sector. Tecnalia is involved in technical and functional specifications, system designs and developments related to the HMI (Human Machine Interface) within the vehicle, taking multimodality concepts into account.

Based on the activities carried out and the developments obtained up to now within these projects, the following sections explain the context evaluation methodology used in both (section 3), and show the specific “Context evaluator” module prepared for the MARTA project (section 4).

3 CONTEXT EVALUATION: METHODOLOGY

Driving is mainly a visual task, but the integration of new devices, information and safety systems makes it necessary to provide new modes of interacting with the vehicle, without reducing the safety of car occupants and, ideally, improving it.

A combination of different modes (visual, haptic, voice, ...) to access the information related to the driving task has a strong influence on driver alertness. It is therefore very important for the vehicle to have enough knowledge about the current situation (Ambient) and to provide a model of the context capable of supporting decisions about “what”, “where” and “how” to show information and command the vehicle (HMI).

From this point of view, Tecnalia applies a methodology based on four steps, oriented to preparing a model capable of reporting, at any given moment, summarised information to support decisions related to the HMI:

Actors’ Definition: This first step is aimed at detecting the main entities to be considered within the final model, reducing the real world to its most significant elements. In effect, this is a modelling process which seeks the best abstraction of the system: as simple as possible, but without leaving out essential data.

Sensors Selection and Grouping: Once the problem has been reduced to the main actors, data should be collected from all sensors or devices in the overall system that are capable of reporting information related to each actor. This step is completed with a grouping process that defines specific groups of sensors. Each group is related to a particular actor (one actor could have more than one associated group of sensors) and describes a well-structured part of the actor's behaviour.

Machine States Design: The third step of this methodology is to design a state machine for each group of sensors previously defined. This involves defining the most important states to be taken into account, based on the different ranges of values provided by each sensor in the group.

Summarized States Definition: Finally, a minimal software interface is defined in order to report the current status of the context as a set of summarised states, obtained by combining the states of each group of sensors and taking each actor into account.

Figure 1 shows the abstraction or modelling process explained above:

Figure 1: Modelling process.
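The four steps of the methodology can be sketched in code. The following Python fragment is only an illustration under stated assumptions, not the MARTA implementation: the class names (`SensorGroup`, `ContextModel`), the sensor names, the thresholds and the summarising rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Readings = Dict[str, float]


@dataclass
class SensorGroup:
    """Step 2: a named group of sensors tied to one actor."""
    name: str
    actor: str
    sensors: List[str]
    # Step 3: maps the latest readings of the group to a discrete state name.
    classify: Callable[[Readings], str]


@dataclass
class ContextModel:
    groups: List[SensorGroup] = field(default_factory=list)

    def states(self, readings: Readings) -> Dict[str, str]:
        """Return one discrete state per sensor group, keyed by group name."""
        return {g.name: g.classify(readings) for g in self.groups}

    def summary(self, readings: Readings) -> str:
        """Step 4: collapse the per-group states into one summarised state.

        Hypothetical rule: the context is "demanding" as soon as any
        group reports a state other than "normal".
        """
        current = self.states(readings)
        if all(state == "normal" for state in current.values()):
            return "normal"
        return "demanding"


# Step 1: the actors of this toy model are "driver" and "vehicle".
# Thresholds below are illustrative assumptions.
model = ContextModel([
    SensorGroup("driver-attention", "driver", ["drowsiness"],
                lambda r: "normal" if r["drowsiness"] == 0 else "tired"),
    SensorGroup("vehicle-motion", "vehicle", ["speed"],
                lambda r: "normal" if r["speed"] <= 80 else "fast"),
])
```

Calling `model.summary({"drowsiness": 0, "speed": 50})` then yields the summarised state for one snapshot of readings, while `model.states(...)` exposes the per-group detail behind it.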

The following section illustrates this methodology by explaining the particular context evaluator designed within the MARTA project (the same methodology is being used in the MIDAS project).

4 MARTA “CONTEXT EVALUATOR”

4.1 Actors

Actors are the system reference points for assessing the current situation. Following the methodology explained above, three different actors were considered to model the MARTA context: driver, vehicle and environment.

Driver: the person who handles the vehicle on the road. This is the most unpredictable component inside a car; the system must be fully aware of the driver’s condition.

Vehicle: the artefact that people use to move from point A to point B.

Environment: this entity includes all climatological conditions; in this case these are reduced to three: rain, fog and daylight.

This group of three actors defines a three-dimensional (3D) space, because each actor's state is defined by only one group of sensors and the corresponding state machine within the MARTA context evaluator. The MARTA context model is therefore fully defined by three coordinates: the current state of each of the three state machines, one per group of sensors, each of which is linked to one of the above-mentioned actors.
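As a small illustration of this three-coordinate view, the context can be represented as one state Id per actor. The type name `ContextPoint` is hypothetical; the state Ids used are those listed in Tables 3, 5 and 7.

```python
from typing import NamedTuple


class ContextPoint(NamedTuple):
    """One point in the three-dimensional context space (one state Id per actor)."""
    driver: int
    vehicle: int
    environment: int


# 260 = stAttention100, 160 = stCarMovingG80, 375 = stNightRainingLow.
point = ContextPoint(driver=260, vehicle=160, environment=375)

# A change in a single actor moves the point along one axis only.
moved = point._replace(environment=380)  # 380 = stNightRainingLowFoggy
```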

4.2 Sensors Grouping

Sensors are the group of devices which are able to provide basic information (car speed, acceleration, etc.), either acquired directly from transducers or obtained via the communication protocols available in the system, e.g. the typical CAN bus in vehicles.

Within the MARTA concept, the collection of sensors is listed in Table 1, ordered by actor.

Apart from these sensors, an additional sensor known as “ignition” is used to establish, for every actor, whether the vehicle engine is switched on or off.

The information collected by means of these sensors makes it possible to prioritize any given message over another depending on the context or the driving situation for the car HMI (user interface) in the MARTA project. In this way the real world is reduced to three different groups of sensors and the corresponding state machines; the different states for each group are explained in the next section.

4.3 Machine States

As mentioned before, each group of sensors has its own state machine representing the behaviour of the related actor. The state machines designed for the MARTA project are shown in the following three sub-sections for Driver, Vehicle and Environment.

Table 1: Sensors.

| Actor | Sensor | Units | Values | Meaning |
|---|---|---|---|---|
| Driver | Drowsiness | on/off | OK; Fatigue | Driver state (fatigue) |
| Vehicle | Speed | km/h | Stopped; Urban road; Extra-Urban road; Rural road or highway | Type of road derived from the car speed |
| Vehicle | Angular speed | m/s² | Straight road or light curve; Hard curve | Type of road derived from the angular speed |
| Environment | Windscreen wipers | Gears (0-3) | No rain; Light rain; Hard rain | Weather – Rain |
| Environment | Fog lamps | on/off | Good visibility; Fog | Visibility – (weather – fog) |
| Environment | Car lights | on/off | Day; Night | Visibility – (day/night) |
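The mapping from raw sensor readings to the categories of Table 1 might look like the sketch below. The 50 and 80 km/h limits and the 2.5 angular-speed limit appear in the vehicle tables of this paper; which speed range corresponds to which road type, and which wiper gears count as light or hard rain, are assumptions made only for illustration, as are the function names.

```python
def road_type_from_speed(speed_kmh: float) -> str:
    """Map car speed to a road-type category (range-to-name pairing is assumed)."""
    if speed_kmh <= 0:
        return "stopped"
    if speed_kmh <= 50:
        return "urban road"
    if speed_kmh <= 80:
        return "extra-urban road"
    return "rural road or highway"


def curve_from_angular_speed(value: float, threshold: float = 2.5) -> str:
    """Classify the road shape from the angular speed reading."""
    if value > threshold:
        return "hard curve"
    return "straight road or light curve"


def rain_from_wipers(gear: int) -> str:
    """Map the wiper gear (0-3) to a rain level; gear 1 = light rain is assumed."""
    if not 0 <= gear <= 3:
        raise ValueError(f"wiper gear out of range: {gear}")
    if gear == 0:
        return "no rain"
    return "light rain" if gear == 1 else "hard rain"
```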

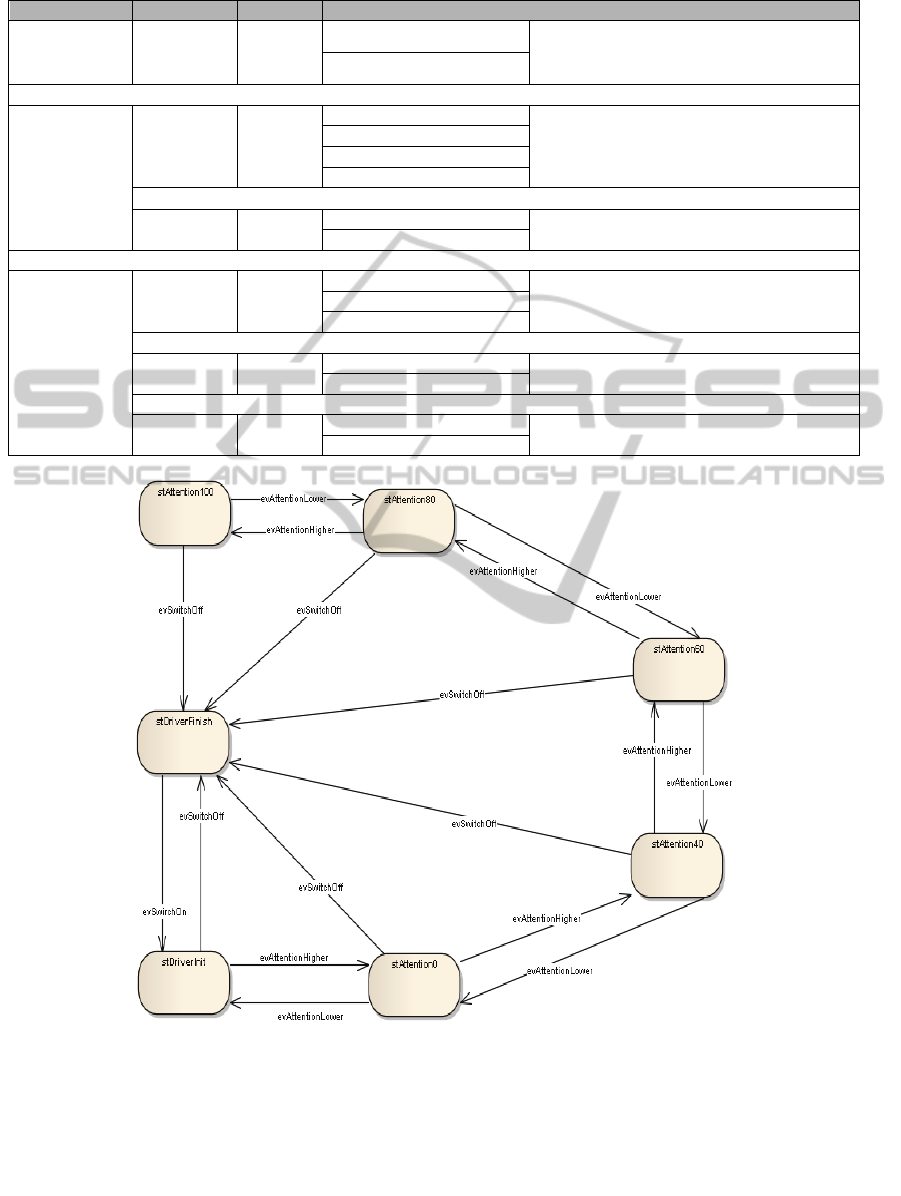

Figure 2: “Driver” Machine states.

4.3.1 Driver

The driver states are based on the drowsiness sensor. As the sensor provided is able to detect four different levels, the state machine designed for the MARTA context evaluator takes seven different states into account (see Figure 2).

The meaning of some of the “events” is explained in the following table:


Table 2: Driver - Events.

| Id | Events | Meaning |
|---|---|---|
| 999 | evSwitchOff | Ignition key turned to “Off” |
| 210 | evAttentionLower | Attention level has decreased |
| 200 | evAttentionHigher | Attention level has increased |

The meaning of some of the “states” is explained in

the following table:

Table 3: Driver - States.

| Id | States | Meaning |
|---|---|---|
| 210 | stDriverInit | Vehicle switched off |
| 240 | stAttention60 | Driver with low level of drowsiness |
| 260 | stAttention100 | Driver with optimal attention level |
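A reduced version of such a driver state machine can be sketched as a transition function. Only the states and events named in Tables 2 and 3 come from the paper; the intermediate state `stAttention80`, the use of `evSwitchOn` for the driver, and the choice to return to `stDriverInit` on `evSwitchOff` are assumptions made for this illustration, which covers fewer than the seven states of the real design.

```python
INIT = "stDriverInit"
# Ordered from lowest to highest attention; "stAttention80" is hypothetical.
LADDER = ["stAttention60", "stAttention80", "stAttention100"]


def next_driver_state(state: str, event: str) -> str:
    """Return the state reached from `state` when `event` occurs."""
    if event == "evSwitchOff":
        return INIT
    if state == INIT:
        # Assumed: the driver starts at the optimal level when the car starts.
        return LADDER[-1] if event == "evSwitchOn" else INIT
    level = LADDER.index(state)
    if event == "evAttentionLower":
        level = max(level - 1, 0)
    elif event == "evAttentionHigher":
        level = min(level + 1, len(LADDER) - 1)
    # Unknown events leave the state unchanged.
    return LADDER[level]
```

Keeping the transition logic in a pure function makes it easy to replay a recorded sequence of events and check the resulting state.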

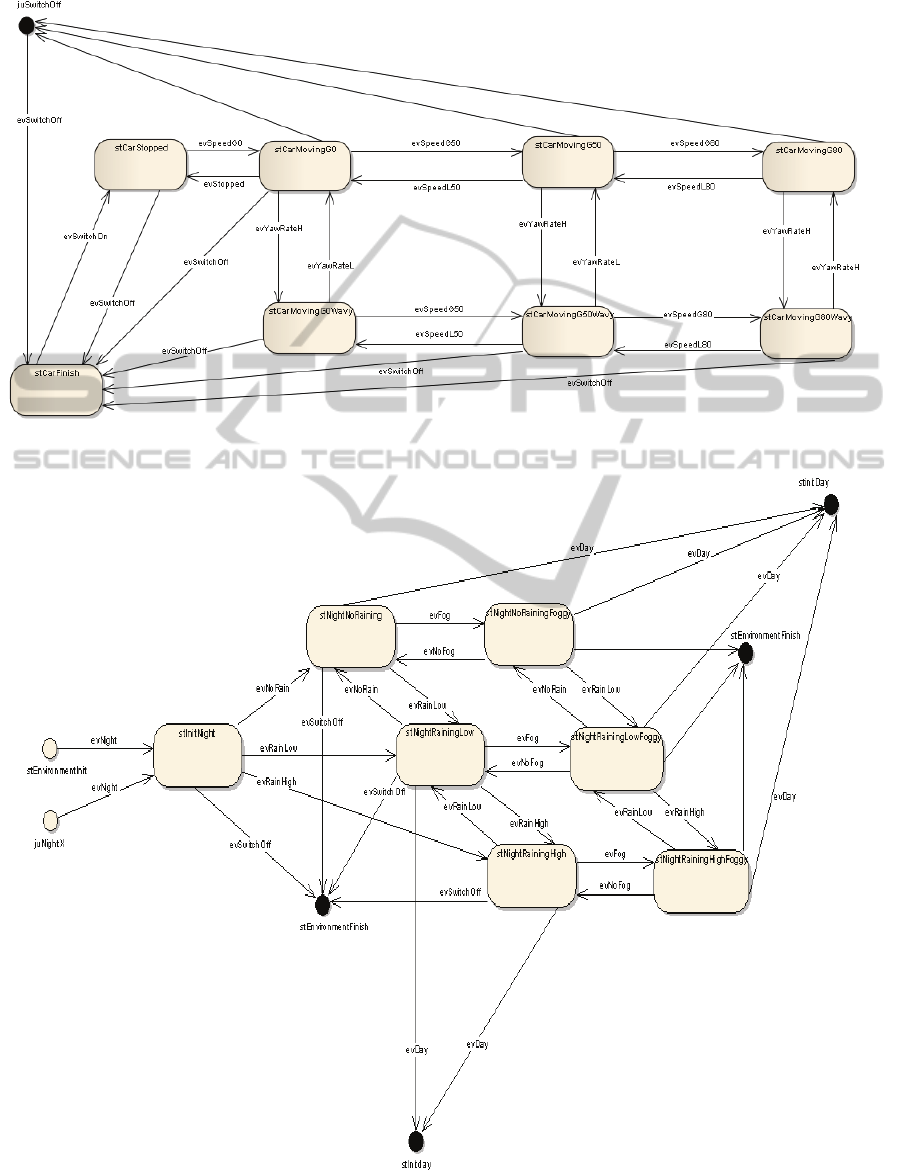

4.3.2 Vehicle

The vehicle states are mainly based on two sensors (speed and angular speed). The combined information from these sensors generates the state machine designed for the MARTA context evaluator, shown in “Appendix”, figure 4.

The meaning of some of the “events” is explained in the following table:

Table 4: Vehicle - Events.

| Id | Events | Meaning |
|---|---|---|
| 20 | evSwitchOn | Ignition key turned to “On” |
| 120 | evSpeedG80 | Vehicle speed exceeds 80 km/h |
| 150 | evYawRateH | Angular speed greater than 2.5 m/s² |
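Events such as `evSpeedG80` and `evYawRateH` have to be derived from successive sensor readings. One possible way to do this, sketched below, is to emit an event only when a reading crosses its threshold upwards. The edge-triggered behaviour and the function name `vehicle_events` are assumptions; the thresholds are those given in Table 4.

```python
from typing import List

SPEED_LIMIT_KMH = 80.0  # evSpeedG80 threshold
YAW_LIMIT = 2.5         # evYawRateH threshold


def vehicle_events(prev_speed: float, speed: float,
                   prev_yaw: float, yaw: float) -> List[str]:
    """Return the events raised between two consecutive sensor samples."""
    events: List[str] = []
    # Fire only on the upward crossing, not while the value stays above.
    if prev_speed <= SPEED_LIMIT_KMH < speed:
        events.append("evSpeedG80")
    if prev_yaw <= YAW_LIMIT < yaw:
        events.append("evYawRateH")
    return events
```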

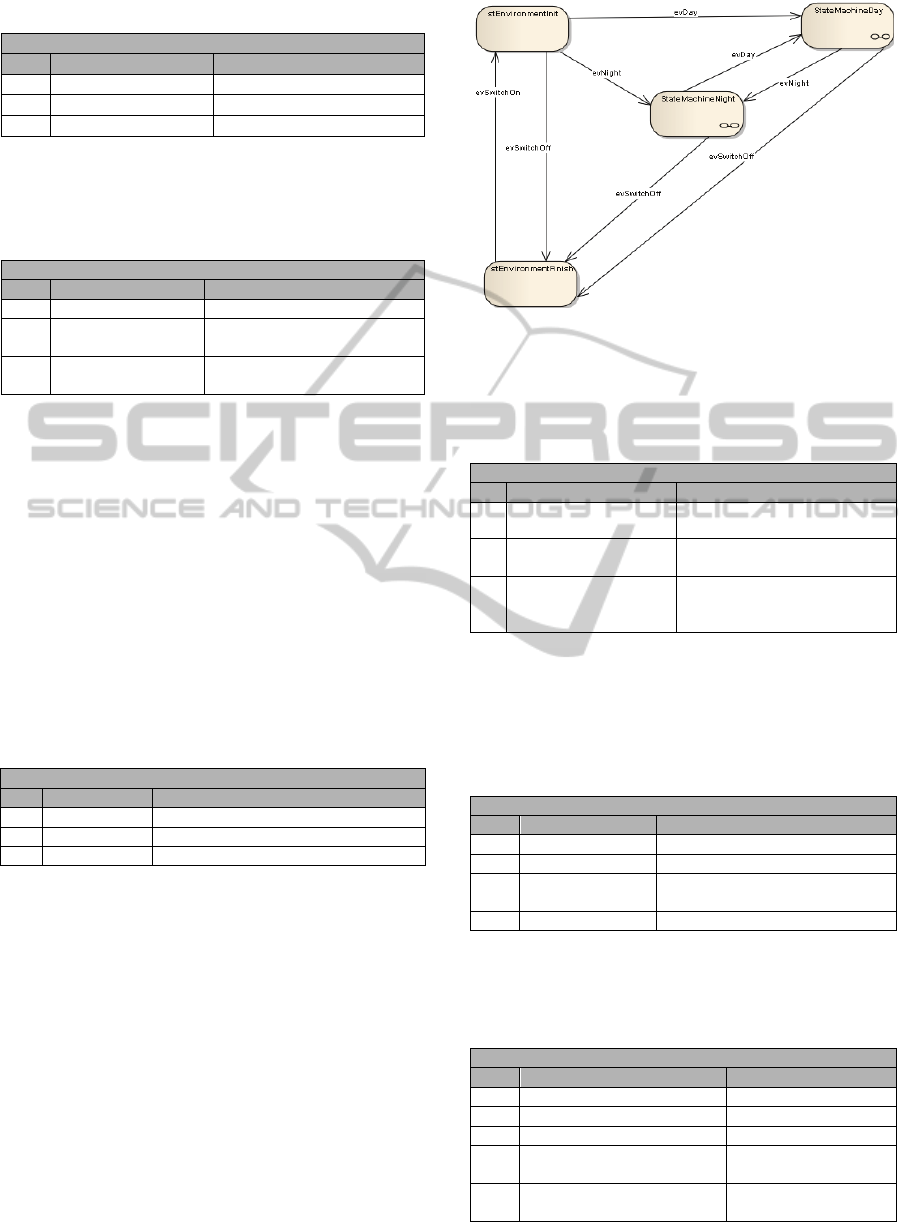

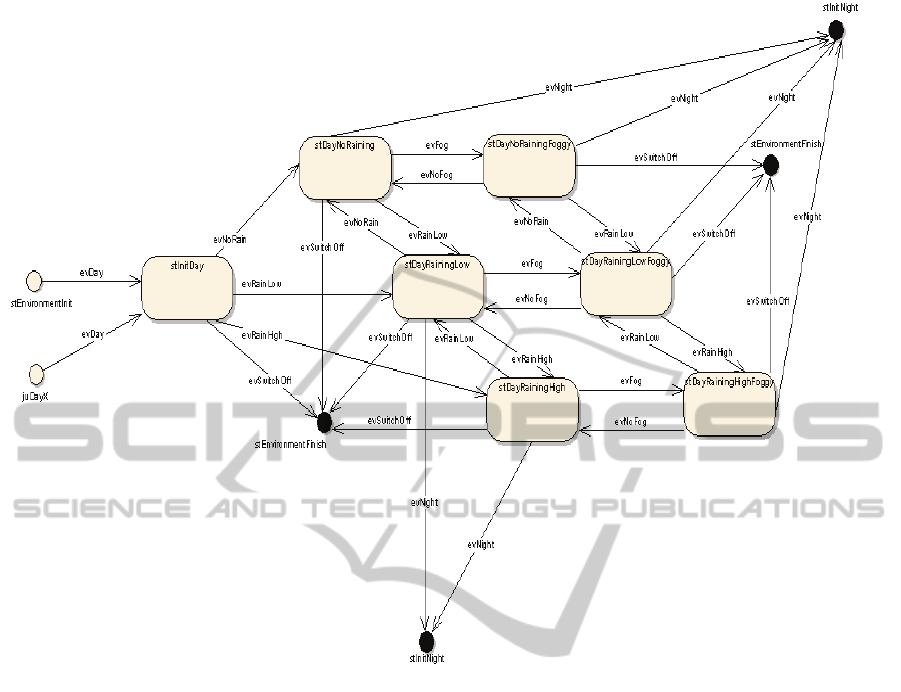

4.3.3 Environment

The “environment” state machine mainly uses the information given by three sensors (windscreen wipers, fog lamps and car lights).

The possible states are collected in three figures: the first one is the general one (see figure 3), and the next two correspond to the “night” and “day” concepts according to the car lights button position. If the car lights are switched on, the ambient light is assumed to be insufficient and the driver is taken to be driving at night; otherwise the driver is taken to be driving during the day (see “Appendix”, figures 5 and 6).

Figure 3: General part of “Environment” Machine states.

The meaning of some of the Vehicle “states” is explained in the following table:

Table 5: Vehicle - States.

| Id | States | Meaning |
|---|---|---|
| 110 | stCarStopped | Vehicle switched “On” |
| 160 | stCarMovingG80 | Vehicle moving, speed (km/h) > 80 |
| 150 | stCarMovingG50Wavy | Vehicle moving, 50 < speed (km/h) <= 80 and hard curve |

In the same way as for the “Driver” and “Vehicle” actors, the meaning of some of the “events” defined in the previously mentioned state machines for the “Environment” is explained in the following table:

Table 6: Environment - Events.

| Id | Events | Meaning |
|---|---|---|
| 20 | evSwitchOn | Ignition key turned to “On” |
| 305 | evNight | Car lights turned on (Night) |
| 320 | evRainLow | Windscreen wipers turned on with low speed |
| 360 | evNoFog | Fog lamps turned off (No Fog) |

The meaning of some of the “states” is explained in

the following table:

Table 7: Environment - States.

| Id | States | Meaning |
|---|---|---|
| 310 | stEnvironmentInit | Vehicle switched off |
| 999 | stEnvironmetFinish | Vehicle switched on |
| 360 | stInitNight | Start of the “Night” |
| 375 | stNightRainingLow | Night / Light Rain / No Fog |
| 380 | stNightRainingLowFoggy | Night / Light Rain / Fog |
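The “Meaning” column of Table 7 suggests that each environment state combines three independent readings: light, rain and fog. The sketch below builds that combination from the three environment sensors and looks up the two combined states named in the table. The function name, the lookup table and the pairing of wiper gears with rain levels are assumptions; state names for other combinations are not given in the paper and are therefore not invented here.

```python
from typing import Dict, Tuple

Combination = Tuple[str, str, str]

# Only the combined states listed in Table 7 are included.
KNOWN_STATES: Dict[Combination, Tuple[int, str]] = {
    ("Night", "Light Rain", "No Fog"): (375, "stNightRainingLow"),
    ("Night", "Light Rain", "Fog"): (380, "stNightRainingLowFoggy"),
}


def describe_environment(lights_on: bool, wiper_gear: int,
                         fog_lamps_on: bool) -> Combination:
    """Combine the three environment sensors into (light, rain, fog)."""
    light = "Night" if lights_on else "Day"
    if wiper_gear == 0:
        rain = "No Rain"
    elif wiper_gear == 1:  # assumed: gear 1 means light rain
        rain = "Light Rain"
    else:
        rain = "Hard Rain"
    fog = "Fog" if fog_lamps_on else "No Fog"
    return (light, rain, fog)
```

For example, `KNOWN_STATES.get(describe_environment(True, 1, True))` returns the Id and name of the matching state from Table 7, or `None` for a combination the table does not name.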

4.4 Interface

The interface of the MARTA “Context evaluator” system provides the following methods to change the state of a sensor and to retrieve information about the context:

mGetContext(): returns a vector of values that contains information about the current state of the actors.

mGetResumeContext(): returns a summarised context of the vehicle as an integer, where 0 -> ignition off, 1 -> ignition on (car stopped), 2 -> favourable, 3 -> risky, 4 -> critical.

mReset(): assigns the state Id=“999” to all actors, resetting all state machines.

mSetSensor(int IdSensor, int Level): assigns a specific level (Level) to a sensor (IdSensor).
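To make the interface concrete, the following toy class exposes the same four method names. It is a sketch under stated assumptions and not the MARTA code: the sensor Ids, the restriction to two actors (driver and vehicle), the state Id 120 for "moving at 80 km/h or less" and the rule used to grade the context as favourable, risky or critical are all hypothetical. The state Ids 240, 260, 110, 160 and 999 are taken from Tables 3 and 5 and from the description of mReset().

```python
from typing import Dict, List

RESET_ID = 999  # state Id that mReset() assigns to every actor

# Hypothetical sensor Ids; the paper does not list them.
IGNITION, DROWSINESS, SPEED = 1, 2, 3


class ContextEvaluator:
    """Toy stand-in with the four methods of the context evaluator interface."""

    def __init__(self) -> None:
        self._sensors: Dict[int, int] = {}
        self.mReset()

    def mReset(self) -> None:
        """Put every sensor back to level 0, so all actors report RESET_ID."""
        self._sensors = {IGNITION: 0, DROWSINESS: 0, SPEED: 0}

    def mSetSensor(self, IdSensor: int, Level: int) -> None:
        if IdSensor not in self._sensors:
            raise KeyError(f"unknown sensor Id {IdSensor}")
        self._sensors[IdSensor] = Level

    def mGetContext(self) -> List[int]:
        """Return [driver state Id, vehicle state Id]."""
        if self._sensors[IGNITION] == 0:
            return [RESET_ID, RESET_ID]
        # 260 = stAttention100, 240 = stAttention60
        driver = 260 if self._sensors[DROWSINESS] == 0 else 240
        speed = self._sensors[SPEED]
        if speed == 0:
            vehicle = 110  # stCarStopped
        elif speed > 80:
            vehicle = 160  # stCarMovingG80
        else:
            vehicle = 120  # hypothetical Id: moving at 80 km/h or less
        return [driver, vehicle]

    def mGetResumeContext(self) -> int:
        """0 ignition off, 1 car stopped, 2 favourable, 3 risky, 4 critical."""
        if self._sensors[IGNITION] == 0:
            return 0
        driver, vehicle = self.mGetContext()
        if vehicle == 110:
            return 1
        # Assumed grading rule: one level per adverse condition.
        adverse = int(driver != 260) + int(vehicle == 160)
        return 2 + adverse
```

A caller such as the HMI manager would feed readings through `mSetSensor` and poll `mGetResumeContext` to decide, for instance, whether a low-priority message should be postponed.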

5 CONCLUSIONS

The main conclusions of this work, concerning the concept of “Context evaluation” and the need for new car HMIs, especially when multimodality is applied, are the following:

Developments in recent years in the field of electronics and applications in the automotive sector have made it possible to integrate new technologies (mobile phones, GPS or ADAS) within vehicles.

The quantity of information for the vehicle driver is increasing because new applications and functionalities are being added to cars.

Too much information can have a strong negative influence in terms of decreasing safety.

Consequently, there is an increasing demand for more efficient control architectures and technologies, especially for managing the data provided to the driver by the HMI so as not to distract the driver from driving.

Tecnalia proposes a particular methodology and the corresponding “Context Model” solution, oriented to evaluating the context and helping to take decisions about “what data” to show, “where”, and “when”, taking two essential concepts into account: Multimodality and Ambient Intelligence.

The Tecnalia “Context model” methodology is based on four steps oriented to preparing a model capable of reporting, at any given moment, summarised information to support decisions related to the vehicle HMI: Actors’ definition, Sensors selection and grouping, Machine States design, and Summarized states definition.

ACKNOWLEDGEMENTS

All activities reported in this paper have been

collected from tasks carried out within two R&D

projects supported by “Ministerio de Industria,

Turismo y Comercio” of Spain: MIDAS (TSI-

020400-2008-26) and MARTA (CENIT).



APPENDIX

Figure 4: “Vehicle” Machine states.

Figure 5: “Environment–Night” Machine states.

Figure 6: “Environment–Day” Machine states.
