IDENTIFICATION AND RECONSTRUCTION OF COMPLETE GAIT CYCLES FOR PERSON IDENTIFICATION IN CROWDED SCENES

Martin Hofmann, Daniel Wolf and Gerhard Rigoll

Institute for Human-Machine Communication, Technische Universität München

Arcisstr. 21, Munich, Germany

Keywords:

Gait recognition, Person identification, Occlusions, Gait cycle reconstruction, Graph cuts.

Abstract:

This paper addresses the problem of gait recognition in the presence of occlusions. Recognizing people by their gait has been an active research area, and many successful algorithms have been presented. However, to date none of these methods addresses the problem of occlusion. Most current algorithms need a full gait cycle for recognition. In this paper we present a scheme for reconstructing full gait cycles, which can be used as a preprocessing step for any state-of-the-art gait recognition method. We evaluate it on the TUM-IITKGP gait recognition database and show a significant performance gain in the presence of occlusions.

1 INTRODUCTION

Person identification using gait information has become an established field of research. Current applications successfully identify people using physiological features such as face, iris and fingerprint. However, it is also possible to identify people using behavior-based features such as voice, dialect, signature and gait. The main advantage of gait features over other features (like face, iris or fingerprint) is that people can be identified from large distances and without their direct cooperation. For example, in low-resolution images a person's gait signature can still be extracted when the face is not visible at all. Also, no direct interaction with a sensing device is necessary, which allows for undisclosed identification. Gait recognition therefore has great potential in video surveillance, tracking and monitoring.

A major challenge for gait-based recognition is that almost all current approaches (Han and Bhanu, 2006; Lee and Grimson, 2001; Wang et al., 2003) require a video sequence in which a complete gait cycle of the walking person is visible. A complete gait cycle is a sequence that starts with one foot forward and ends with the same foot forward.

In most databases this is not a problem, because they are constructed to always show complete gait cycles. In real-world applications, however, fully visible gait cycles cannot always be guaranteed. Consider, for example, an airport, a train station or other crowded places. In these scenarios, gait cycles can be corrupted by occlusions from other pedestrians, and current approaches fail.

To overcome these limitations, we propose a preprocessing stage that performs motion segmentation on the input video and reconstructs synthetic complete gait cycles from the partial information available in the corrupted, occluded input sequences.

Our method consists of two separate parts. First, all walking people are detected, tracked and accurately segmented; this part can thus be seen as an application of motion segmentation. Second, once people are segmented in each frame, the gait cycle of each person is analyzed and complete gait cycles are reconstructed.

We test our method on the TUM-IITKGP gait recognition database (Hofmann et al., 2011). This database contains sequences in which each person is completely visible and other sequences in which each person is occluded by other pedestrians. Using two baseline algorithms, we show that our preprocessing greatly improves recognition rates in the presence of occlusions.

Sections 2 and 3 present the two processing parts. We show results in Section 4 and conclude in Section 5.


Hofmann M., Wolf D. and Rigoll G.

IDENTIFICATION AND RECONSTRUCTION OF COMPLETE GAIT CYCLES FOR PERSON IDENTIFICATION IN CROWDED SCENES.

DOI: 10.5220/0003329305940597

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2011), pages 594-597

ISBN: 978-989-8425-47-8

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 PERSON TRACKING AND SEGMENTATION

In this section we present our motion segmentation method, which is used to detect, track and accurately segment a varying number of people in the input videos.

We use a graph-based image segmentation technique, in which an image is represented as an undirected graph. Nodes correspond to pixels, and a cut through the connecting edges yields the segmentation. A similar approach to motion segmentation is described, for example, in (Bugeau and Pérez). Our approach differs in that we incorporate an explicit count of people using mean shift.

Let $P$ be the set of all pixels in a frame and $L$ the set of possible labels. We denote by $l(p) \in L$ the label of a specific pixel $p \in P$ and by $l(P) = \{l(p) \mid p \in P\}$ the set of all label assignments. $N$ denotes the standard 4-connected neighborhood, consisting of the corresponding pixel pairs $(p, q)$. With these definitions, the energy is defined as:

$$C(l(P)) = \sum_{p \in P} C_{\text{Data}}(l(p)) + \gamma \cdot \sum_{(p,q) \in N} C_{\text{Smooth}}(l(p), l(q)) + \delta \cdot C_{\text{Labels}}(l(P)) \tag{1}$$

The data term describes the cost of assigning labels to individual nodes. The smoothness term ensures smoothness of the resulting objects, and the label term discourages the use of too many (small) objects. The data and smoothness terms are explained in detail below. The label term $C_{\text{Labels}} = |L'|$ is the number of labels used in the final assignment, where $L' \subseteq L$ is the set of labels that actually occur.
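The following Python sketch evaluates Eq. (1) for a given labeling, to show how the three terms combine. It is an illustration under stated assumptions, not the authors' implementation: the function name `total_energy` and the array layout are hypothetical, the per-label data costs $C_{\text{Data}}$ are assumed to be precomputed, and the pairwise costs are assumed to be charged only where neighboring labels differ, as in Eq. (5).

```python
import numpy as np


def total_energy(labels, data_cost, smooth_h, smooth_v, gamma, delta):
    """Evaluate Eq. (1) for one labeling (illustrative sketch).

    labels:    (H, W) int array holding l(p) for every pixel p.
    data_cost: (H, W, K) array, data_cost[y, x, k] = C_Data for label k.
    smooth_h:  (H, W-1) pairwise costs for horizontal neighbour pairs.
    smooth_v:  (H-1, W) pairwise costs for vertical neighbour pairs.
    A pairwise cost is only charged where the two labels differ.
    """
    h, w = labels.shape
    rows, cols = np.indices((h, w))
    # Sum of C_Data(l(p)) over all pixels p in P
    data_term = data_cost[rows, cols, labels].sum()
    # Sum of C_Smooth over the 4-connected neighbourhood N
    diff_h = labels[:, :-1] != labels[:, 1:]
    diff_v = labels[:-1, :] != labels[1:, :]
    smooth_term = smooth_h[diff_h].sum() + smooth_v[diff_v].sum()
    # C_Labels = |L'|, the number of distinct labels actually used
    label_term = len(np.unique(labels))
    return float(data_term + gamma * smooth_term + delta * label_term)


labels = np.array([[0, 0], [1, 1]])
data_cost = np.ones((2, 2, 2))
print(total_energy(labels, data_cost, np.ones((2, 1)), np.ones((1, 2)), gamma=0.5, delta=3.0))  # 11.0
```

In the example, the data term contributes 4, the two vertical label changes contribute $0.5 \cdot 2 = 1$, and the two used labels contribute $3 \cdot 2 = 6$.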

2.1 Data Term

The data term $C_{\text{Data}}(l(p))$ describes the cost associated with assigning label $l(p)$ to pixel $p$. In our work, the data term consists of a background subtraction term and an optical flow term:

$$C_{\text{Data}}(l(p)) = \alpha \cdot C_{\text{BS}}(l(p)) + C_{\text{OF}}(l(p)) \tag{2}$$

Figure 1: Predicting label assignment using optical flow.

For the background model, we use the first frame of the sequence, which is always empty in the database used. We work in the YCbCr color space and take the absolute intensity difference $I(p)$ between the current frame and the background model as the measure. We perform efficient shadow suppression by setting $I(p) = 0$ (i.e., background) for pixels whose Cb and Cr values are similar to the background model, even if their intensity differs significantly. Let $l_H$ be the label of the background and $L_V$ the set of all other labels, which represent people. The cost of labeling pixel $p$ with label $l$ is then:

$$C_{\text{BS}}(l(p)) = \begin{cases} I(p) & \text{if } l(p) = l_H \\ 1 - I(p) & \text{if } l(p) \in L_V \end{cases} \tag{3}$$
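A minimal NumPy sketch of the background term, assuming both images are already converted to YCbCr with channels ordered (Y, Cb, Cr) in [0, 255], and that $I(p)$ is the Y difference scaled to [0, 1] (Eq. (3) only makes sense for $I(p) \le 1$). The function name and the chroma tolerance `chroma_tol` are hypothetical; the paper does not state how "similar" Cb and Cr values are determined.

```python
import numpy as np


def background_cost(frame_ycbcr, bg_ycbcr, chroma_tol=10.0):
    """Per-pixel C_BS from Eq. (3) (illustrative sketch).

    frame_ycbcr, bg_ycbcr: (H, W, 3) arrays with channels (Y, Cb, Cr) in [0, 255].
    chroma_tol: hypothetical threshold for "similar" Cb/Cr values.
    Returns (cost_bg, cost_fg): the cost for l = l_H and for any l in L_V.
    """
    frame = frame_ycbcr.astype(float)
    bg = bg_ycbcr.astype(float)
    # I(p): absolute intensity (Y) difference, scaled to [0, 1] (assumption)
    diff = np.abs(frame[..., 0] - bg[..., 0]) / 255.0
    # Shadow suppression: Cb and Cr close to the background -> I(p) = 0
    chroma_close = np.all(np.abs(frame[..., 1:] - bg[..., 1:]) <= chroma_tol, axis=-1)
    diff[chroma_close] = 0.0
    cost_bg = diff        # C_BS(l_H) = I(p)
    cost_fg = 1.0 - diff  # C_BS(l) = 1 - I(p) for every person label l in L_V
    return cost_bg, cost_fg


bg = np.full((2, 2, 3), [100, 128, 128])
frame = bg.copy()
frame[0, 1] = [200, 128, 128]  # brighter but same chroma: treated as background
frame[1, 0] = [200, 60, 128]   # different chroma: foreground evidence
cost_bg, cost_fg = background_cost(frame, bg)
```

Note that, as described in the text, any pixel with background-like chroma is treated as background regardless of its intensity change; the tolerance therefore trades shadow removal against missing people whose clothing has a chroma similar to the background.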

The background term $C_{\text{BS}}$ described above separates the background from moving objects, but it naturally cannot distinguish between multiple walking people. We therefore additionally incorporate an optical flow term $C_{\text{OF}}$, which (1) separates objects moving in different directions and (2) at the same time ensures consistent tracking of objects (i.e., each object keeps its identity).

We first apply mean shift clustering to the optical flow computed with the method of Farnebäck (Farnebäck, 2002). This not only yields the number of objects in the frame, but also gives a rough estimate of where the respective objects are located. More specifically, we use a 3-dimensional mean shift over the dimensions $x$, $y$ and $\varphi$, where $\varphi$ is the direction of the flow at pixel $p$. We set the mean shift window size to approximately the expected width and height of a person (80 × 200 pixels) and to 80° for the flow direction.
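The clustering step can be sketched as a flat-kernel mean shift over $(x, y, \varphi)$ samples, for example one sample per moving pixel. This is an illustrative implementation, not the authors' code: the function names, the interpretation of the window as a box of the given full size per dimension, the convergence tolerance and the final mode-merging rule are assumptions. The flow direction is treated as circular, so 355° and 5° lie 10° apart.

```python
import numpy as np


def wrap_deg(a):
    """Wrap angles or angle differences in degrees into [-180, 180)."""
    return (a + 180.0) % 360.0 - 180.0


def mean_shift_xyphi(points, window=(80.0, 200.0, 80.0), max_iter=50, tol=1e-3):
    """Flat-kernel mean shift over (x, y, phi) samples (illustrative sketch).

    points: (N, 3) array of pixel x, pixel y and flow direction phi in degrees.
    window: full window size per dimension; a sample lies inside the window if
            its offset is within +-window/2 in every dimension (phi wraps around).
    Returns (centers, labels): one mode per cluster and a cluster index per sample.
    """
    pts = np.asarray(points, dtype=float)
    half = np.asarray(window, dtype=float) / 2.0
    modes = pts.copy()
    for i in range(len(pts)):
        m = pts[i].copy()
        for _ in range(max_iter):
            d = pts - m
            d[:, 2] = wrap_deg(d[:, 2])
            inside = np.all(np.abs(d) <= half, axis=1)
            if not inside.any():
                break
            shift = d[inside].mean(axis=0)  # move to the mean of the window
            m = m + shift
            m[2] %= 360.0
            if np.linalg.norm(shift) < tol:
                break
        modes[i] = m
    # Merge modes that fall into each other's window: one cluster per person
    centers, labels = [], np.empty(len(pts), dtype=int)
    for i, m in enumerate(modes):
        for c_idx, c in enumerate(centers):
            d = m - c
            d[2] = wrap_deg(d[2])
            if np.all(np.abs(d) <= half):
                labels[i] = c_idx
                break
        else:
            centers.append(m)
            labels[i] = len(centers) - 1
    return np.array(centers), labels


rng = np.random.default_rng(0)


def walker(x, y, phi):  # hypothetical: 30 noisy samples of one walking person
    noise = rng.uniform(-10, 10, size=(30, 3))
    return np.column_stack([x + noise[:, 0], y + noise[:, 1], (phi + noise[:, 2]) % 360])


samples = np.vstack([walker(100, 300, 0), walker(400, 300, 180)])
centers, labels = mean_shift_xyphi(samples)
print(len(centers))  # 2
```

The number of returned centers plays the role of the person count, and each center gives a rough location and motion direction. This naive version is quadratic in the number of samples, so a practical implementation would subsample the flow field.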

Because the mean shift clustering is computed independently of previous frames, its cluster labels are arbitrary and have to be matched to the labels of the energy formulation. This is illustrated in Figure 1. First, a predicted labeling $\hat{l}$ is calculated from the previous frame using the optical flow estimate $v(p)$ at each pixel: $\hat{l}(p + v(p)) = l(p)$. Then each mean shift cluster is assigned the label that best fits the predicted labeling.
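A sketch of the label prediction and cluster matching, assuming the flow is given as per-pixel $(dx, dy)$ displacements in pixels, label 0 is the background label $l_H$, and "best fits" means the largest pixel overlap with the predicted labeling. The function names and the majority-overlap criterion are hypothetical; conflicts (two clusters claiming the same label) and newly appearing objects are not handled.

```python
import numpy as np


def predict_labels(prev_labels, flow, background=0):
    """Forward-warp the previous labeling: l_hat(p + v(p)) = l(p).

    prev_labels: (H, W) int array; flow: (H, W, 2) with (dx, dy) per pixel.
    Target positions are rounded to the nearest pixel; unreached pixels stay
    background, and later writes win where two pixels land on the same spot.
    """
    h, w = prev_labels.shape
    pred = np.full((h, w), background, dtype=prev_labels.dtype)
    ys, xs = np.nonzero(prev_labels != background)
    tx = np.rint(xs + flow[ys, xs, 0]).astype(int)
    ty = np.rint(ys + flow[ys, xs, 1]).astype(int)
    ok = (tx >= 0) & (tx < w) & (ty >= 0) & (ty < h)
    pred[ty[ok], tx[ok]] = prev_labels[ys[ok], xs[ok]]
    return pred


def match_clusters(cluster_map, pred, background=0):
    """Give each mean shift cluster the predicted label it overlaps most.

    cluster_map: (H, W) int array, -1 = no cluster, otherwise a cluster index.
    Returns {cluster index: label}, or None if no predicted person overlaps it.
    """
    assignment = {}
    for c in np.unique(cluster_map):
        if c < 0:
            continue
        under = pred[(cluster_map == c) & (pred != background)]
        if under.size == 0:
            assignment[int(c)] = None  # e.g. a new person entering the scene
        else:
            vals, counts = np.unique(under, return_counts=True)
            assignment[int(c)] = int(vals[np.argmax(counts)])
    return assignment


prev = np.zeros((5, 5), dtype=int)
prev[1, 1] = prev[1, 2] = 1
prev[3, 3] = 2
flow = np.zeros((5, 5, 2))
flow[..., 0] = 1.0  # everything moves one pixel to the right
pred = predict_labels(prev, flow)
clusters = np.full((5, 5), -1)
clusters[1, 2:4] = 0
clusters[3:5, 4] = 1
print(match_clusters(clusters, pred))  # {0: 1, 1: 2}
```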

Let $P_l$ be the set of pixels in the mean shift cluster that best fits the pixels of object $l$ (as predicted from the previous frame). The optical flow contribution to the data term is then defined as

$$C_{\text{OF}}(l(p)) = \begin{cases} -\beta & \text{if } p \in P_{l(p)} \\ 0 & \text{otherwise} \end{cases} \tag{4}$$

Thus, assigning label $l$ to pixel $p$ is rewarded by $\beta$ if the mean shift clustering has found a corresponding object that contains $p$.
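Given the matched clusters, Eq. (4) and Eq. (2) can be assembled into a per-pixel, per-label cost volume. This sketch assumes that label 0 is the background label $l_H$ and that the background subtraction costs come as two (H, W) arrays, one for $l_H$ and one shared by all person labels; the function names are hypothetical.

```python
import numpy as np


def optical_flow_cost(cluster_map, assignment, num_labels, beta):
    """Eq. (4): C_OF = -beta where p lies in the cluster matched to label l, else 0.

    cluster_map: (H, W) int array of cluster indices (-1 = none).
    assignment: {cluster index: label or None}. Returns an (H, W, K) array.
    """
    h, w = cluster_map.shape
    cost = np.zeros((h, w, num_labels))
    for c, label in assignment.items():
        if label is not None:
            cost[cluster_map == c, label] = -beta
    return cost


def combine_data_cost(cost_bg, cost_fg, of_cost, alpha, background=0):
    """Eq. (2): C_Data = alpha * C_BS + C_OF, with C_BS taken from Eq. (3)."""
    k = of_cost.shape[-1]
    bs = np.repeat(cost_fg[..., None], k, axis=-1)  # 1 - I(p) for person labels
    bs[..., background] = cost_bg                   # I(p) for the background label
    return alpha * bs + of_cost


cluster_map = np.array([[0, -1], [-1, 1]])
of = optical_flow_cost(cluster_map, {0: 1, 1: None}, num_labels=3, beta=80.0)
cd = combine_data_cost(np.full((2, 2), 0.1), np.full((2, 2), 0.9), of, alpha=200.0)
```

With $\alpha = 200$ and $\beta = 80$, the pixel covered by the cluster matched to label 1 gets cost $200 \cdot 0.9 - 80 = 100$ for that label instead of 180, which is how the flow term pulls pixels toward the tracked identity.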


2.2 Smoothness Term

The smoothness term models the color similarity of adjacent pixels $p_1$ and $p_2$. We define it as follows:

$$C_{\text{Smooth}}(l(p_1), l(p_2)) = \begin{cases} 0 & \text{if } l(p_1) = l(p_2) \\ \exp\left(-\dfrac{\|c(p_1) - c(p_2)\|^2}{\sigma^2}\right) & \text{if } l(p_1) \neq l(p_2) \end{cases} \tag{5}$$

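Here, $c(p_i)$ is the color vector of pixel $p_i$, and $\sigma$ controls how quickly the penalty decays with increasing color difference. Separating two similarly colored neighbors is therefore expensive, while a label boundary along a strong color edge is cheap.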
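Eq. (5) can be precomputed once per frame for all horizontal and vertical neighbor pairs. A minimal NumPy sketch with a hypothetical function name; the paper does not report the value of $\sigma$, so it is left as a parameter.

```python
import numpy as np


def smoothness_costs(image, sigma):
    """Eq. (5) for all 4-connected neighbour pairs (illustrative sketch).

    image: (H, W, C) colour image. Returns (w_h, w_v) with shapes (H, W-1) and
    (H-1, W): the cost charged when the two neighbours receive different labels.
    """
    img = np.asarray(image, dtype=float)
    d_h = np.sum((img[:, 1:] - img[:, :-1]) ** 2, axis=-1)  # ||c(p1) - c(p2)||^2
    d_v = np.sum((img[1:, :] - img[:-1, :]) ** 2, axis=-1)
    return np.exp(-d_h / sigma ** 2), np.exp(-d_v / sigma ** 2)


img = np.zeros((2, 2, 3))
img[0, 1, 0] = 10.0  # one pixel differs by 10 in the first channel
w_h, w_v = smoothness_costs(img, sigma=10.0)
```

Identical neighbors get the maximum cost 1, while the pair that differs by $\sigma$ gets $e^{-1} \approx 0.37$.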

2.3 Energy Minimization

To minimize the energy in Eq. (1), we use the α-expansion algorithm (Boykov et al., 2001; Kolmogorov and Zabih, 2004) extended with label costs (Delong et al., 2010). We thus seek the optimal labeling

$$\hat{l}(P) = \arg\min_{l(P)} C(l(P)),$$

which α-expansion approximates.
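 
To show what a single α-expansion move does, here is a compact sketch for a Potts-type pairwise term like Eq. (5). It deliberately simplifies the method described above: the label-cost term $\delta \cdot C_{\text{Labels}}$ of Delong et al. is omitted, the max-flow problem is solved with a plain Edmonds-Karp search rather than a dedicated graph cut solver, and all function names are hypothetical. Each move is a binary problem (keep the current label or switch to α), encoded as a graph following the construction of Kolmogorov and Zabih.

```python
from collections import defaultdict, deque

import numpy as np


def grid_pairs(h, w):
    """4-connected neighbour pairs (p, q) as flat pixel indices."""
    idx = np.arange(h * w).reshape(h, w)
    pairs = list(zip(idx[:, :-1].ravel(), idx[:, 1:].ravel()))
    pairs += list(zip(idx[:-1, :].ravel(), idx[1:, :].ravel()))
    return [(int(p), int(q)) for p, q in pairs]


def energy(labels, data, pairs, weights):
    """Data term plus Potts pairwise term (label-cost term omitted)."""
    e = data[np.arange(len(labels)), labels].sum()
    e += sum(w for (p, q), w in zip(pairs, weights) if labels[p] != labels[q])
    return float(e)


def source_side_of_min_cut(cap, s, t):
    """Edmonds-Karp max flow on residual capacities cap[u][v] (modified in place).
    Returns the nodes still reachable from s, i.e. the source side of a min cut."""
    while True:
        parent, queue = {s: None}, deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return set(parent)
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= flow
            cap[v][u] = cap[v].get(u, 0.0) + flow


def expansion_move(labels, alpha, data, pairs, weights):
    """Best move in which any set of pixels may switch to label alpha."""
    n = len(labels)
    # delta[p] = cost(p switches to alpha) - cost(p keeps its label)
    delta = data[:, alpha] - data[np.arange(n), labels]
    cap = defaultdict(dict)
    for (p, q), w in zip(pairs, weights):
        a = w * (labels[p] != labels[q])  # both keep their labels
        b = w * (labels[p] != alpha)      # only q switches
        c = w * (alpha != labels[q])      # only p switches (both switch: 0)
        delta[p] += c - a
        delta[q] -= c
        if b + c - a > 0:  # never negative for Potts (triangle inequality)
            cap[p][q] = cap[p].get(q, 0.0) + (b + c - a)
    for p in range(n):
        if delta[p] > 0:
            cap["s"][p] = delta[p]   # paid if p ends on the sink side (switches)
        elif delta[p] < 0:
            cap[p]["t"] = -delta[p]  # paid if p stays on the source side
    source_side = source_side_of_min_cut(cap, "s", "t")
    new = labels.copy()
    for p in range(n):
        if p not in source_side:
            new[p] = alpha
    return new


def alpha_expansion(data, pairs, weights, max_sweeps=10):
    """Cycle over all labels, accepting expansion moves that lower the energy."""
    data = np.asarray(data, dtype=float)
    labels = np.argmin(data, axis=1)
    best = energy(labels, data, pairs, weights)
    for _ in range(max_sweeps):
        improved = False
        for alpha in range(data.shape[1]):
            candidate = expansion_move(labels, alpha, data, pairs, weights)
            e = energy(candidate, data, pairs, weights)
            if e < best - 1e-9:
                labels, best, improved = candidate, e, True
        if not improved:
            break
    return labels, best


data = [[0, 5], [2, 1], [0, 5]]  # 1 x 3 strip, 2 labels
labels, e = alpha_expansion(data, grid_pairs(1, 3), [3.0, 3.0])
print(labels.tolist(), e)  # [0, 0, 0] 2.0
```

In the example the middle pixel weakly prefers label 1, but strong pairwise weights (3.0) pull it to label 0; with weak weights (0.2) it keeps label 1.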

3 GAIT RECONSTRUCTION

The second part of our method detects occlusions and compensates for them. The main idea is to replace silhouettes that are completely or partly occluded by silhouettes from other frames that are not occluded. To this end, three steps are necessary: first, all occluded silhouettes are found; then the gait period is estimated; finally, so-called "reconstruction frames" are searched for and used to replace the corrupted silhouettes.

3.1 Detection of Occlusions

The number of pixels in the silhouette is a good feature for detecting occluded frames. Figure 2 shows the number of pixels in a tracked silhouette; frames between 145 and 150 are clearly occluded. To find the precise range of occluded frames, we first calculate the median $M_P$ of the silhouette pixel counts. Silhouettes with fewer than $\frac{1}{2} \cdot M_P$ pixels are definitely occluded. Starting from these silhouettes, we search backward and forward until the number of pixels rises above $0.95 \cdot M_P$; all frames in this range are also considered part of the occluded range.
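The occlusion test translates almost directly into code. In this sketch (hypothetical function name), `counts` holds the silhouette pixel count per frame, and the factors 0.5 and 0.95 are the thresholds given in the text.

```python
import numpy as np


def occluded_frames(counts, hard=0.5, recover=0.95):
    """Boolean mask of occluded frames from per-frame silhouette pixel counts."""
    counts = np.asarray(counts, dtype=float)
    m_p = np.median(counts)  # M_P
    occluded = np.zeros(len(counts), dtype=bool)
    for i in np.flatnonzero(counts < hard * m_p):  # definitely occluded frames
        lo = hi = i
        # extend backward/forward while the count stays at or below 0.95 * M_P
        while lo > 0 and counts[lo - 1] <= recover * m_p:
            lo -= 1
        while hi < len(counts) - 1 and counts[hi + 1] <= recover * m_p:
            hi += 1
        occluded[lo:hi + 1] = True
    return occluded


mask = occluded_frames([100, 100, 100, 90, 40, 30, 92, 100, 100])
print(np.flatnonzero(mask))  # [3 4 5 6]
```

Frames 4 and 5 fall below half the median; the partially occluded neighbors 3 and 6 are added because their counts stay below 95% of the median.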

3.2 Measuring the Gait Period

The final step, finding reconstruction frames, requires a good estimate of the gait period $T$. To find the gait period, the lower half of the silhouette in each frame is correlated with the lower halves of the silhouettes in all other frames, and the frame difference to the best-matching frame is recorded in a list. The median frame difference in this list yields the gait period. This relatively simple method is very reliable and correctly finds the gait period for all sequences in the database.

Figure 2: Number of pixels in a silhouette (x-axis: frame number, 100 to 200; y-axis: number of pixels, 0 to 12,000; curves: number of pixels per frame and their median).
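A sketch of the gait period estimate. It assumes the silhouettes are already cropped and size-normalized to a common (H, W) and uses zero-mean normalized correlation. It also adds one assumption that the text does not state: frames closer than `min_lag` are excluded, because directly neighboring frames would otherwise almost always be the best match. When several frames match equally well, the closest one is kept. The function names and `min_lag` are hypothetical.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized correlation of two equally sized arrays."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def gait_period(silhouettes, min_lag=5):
    """Median frame offset to the best-matching lower half (illustrative sketch).

    silhouettes: (F, H, W) array of aligned, size-normalized silhouettes.
    min_lag: hypothetical minimum frame offset accepted as a match.
    """
    sil = np.asarray(silhouettes, dtype=float)
    lower = sil[:, sil.shape[1] // 2:, :]  # lower half of every silhouette
    n = len(lower)
    diffs = []
    for i in range(n):
        best_j, best_score = None, -np.inf
        # visit candidates by increasing offset so that ties keep the closest frame
        for j in sorted(range(n), key=lambda j: abs(i - j)):
            if abs(i - j) < min_lag:
                continue
            score = ncc(lower[i], lower[j])
            if score > best_score:
                best_j, best_score = j, score
        if best_j is not None:
            diffs.append(abs(i - best_j))
    return int(np.median(diffs))


# Synthetic sequence with period 8: a "leg" bar that moves with the phase
frames = np.zeros((40, 20, 20))
for f in range(40):
    col = (f % 8) * 2
    frames[f, :, col:col + 2] = 1.0
print(gait_period(frames))  # 8
```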

3.3 Finding Reconstruction Frames

The goal is to reconstruct the silhouettes that are corrupted by occlusions. The procedure is best explained using Figure 3, where frames 48-50 (red) are the occluded frames to be reconstructed. We seek silhouettes from other frames that are similar to the occluded ones. This is possible because the sequence can be assumed to consist of multiple gait cycles that are very similar to each other, so the corrupted frames can be replaced by the corresponding frames from another gait cycle. Of course, the corrupted frames themselves cannot be used to find corresponding frames. We therefore take the last non-corrupted frame (frame 47 in Figure 3) and search for the best match using normalized correlation.

Importantly, this search must not be performed on all other available frames, but only on those within a search region one or more gait periods ahead or behind. This is necessary to avoid incorrect matches, for example to a silhouette in which the opposite foot is extended. The possible search ranges, relative to the last non-occluded frame, are thus $S_k = [kT - \Delta, kT + \Delta]$ for all $k \neq 0$, where $\Delta$ is the half-width of each search window.

Once the best-matching frame is found, the frames that follow it are copied to replace the corrupted frames.
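The reconstruction step can be sketched as follows, assuming an occlusion mask and the gait period $T$ are already available. For each block of occluded frames, the last clean frame is matched by normalized correlation against candidates in the windows $S_k$, and the frames following the best match are copied in. The function names, the window half-width `delta` and the bound `max_k` on the number of periods searched are hypothetical; the paper allows all $k \neq 0$.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized correlation of two equally sized arrays."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def reconstruct(silhouettes, occluded, period, delta=2, max_k=3):
    """Replace each block of occluded frames with frames from another gait cycle."""
    src = np.asarray(silhouettes)
    sil = src.copy()
    occ = np.asarray(occluded, dtype=bool)
    n = len(src)
    i = 0
    while i < n:
        if not occ[i]:
            i += 1
            continue
        start = i
        while i < n and occ[i]:
            i += 1
        length = i - start  # occluded block is [start, i)
        ref = start - 1     # last non-occluded frame
        if ref < 0:
            continue        # no clean frame before the block to match against
        best, best_score = None, -np.inf
        for k in range(-max_k, max_k + 1):
            if k == 0:
                continue
            for off in range(k * period - delta, k * period + delta + 1):  # S_k
                r = ref + off
                if r < 0 or r + length >= n:
                    continue
                # neither the match nor the frames to be copied may be occluded
                if occ[r] or occ[r + 1:r + 1 + length].any():
                    continue
                score = ncc(src[ref], src[r])
                if score > best_score:
                    best, best_score = r, score
        if best is not None:
            sil[start:i] = src[best + 1:best + 1 + length]
    return sil


# Synthetic sequence with period 8; frames 20-22 are occluded (blanked out)
frames = np.zeros((40, 20, 20))
for f in range(40):
    frames[f, :, (f % 8) * 2:(f % 8) * 2 + 2] = 1.0
clean = frames.copy()
occ = np.zeros(40, dtype=bool)
occ[20:23] = True
frames[20:23] = 0.0
rec = reconstruct(frames, occ, period=8)
```

Because the synthetic sequence is exactly periodic, the copied frames reproduce the blanked frames exactly; on real data they are only similar silhouettes from another cycle.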

Figure 3: Search area for finding the reconstruction frames (labels in the figure: occluded frames, last non-occluded frame, regular frame, and a search window one gait period back and one gait period forward).

VISAPP 2011 - International Conference on Computer Vision Theory and Applications


4 RESULTS

To our knowledge, little work has focused on gait recognition in the presence of occlusions, although this is an important issue for any gait recognition system deployed in practice. Many gait recognition databases exist, e.g. HumanID, UCSD, CMU MoBo, Soton, CASIA and others (Hofmann et al., 2011). We use the TUM-IITKGP gait recognition database (Hofmann et al., 2011) because it focuses on gait recognition with occlusions. This database contains 35 individuals, each recorded in six different configurations (regular, hands-in-pocket, backpack, gown, dynamic occlusions, static occlusions). In this paper we use the first configuration for training and the last two configurations for recognition. For all experiments we set $\alpha = 200$, $\beta = 80$, $\gamma = 30$ and $\delta = 40{,}000$.

We compare our results to the two baseline algorithms described in (Hofmann et al., 2011); Table 1 shows the evaluation results. With our preprocessing, the first baseline (based on color histograms) improves from 43.7% to 84.3% for dynamic occlusions and from 70.0% to 87.5% for static occlusions. The second baseline (based on the Gait Energy Image) cannot be applied at all without the preprocessing proposed in this paper, so Table 1 reports results for it only in combination with our method.

Table 1: Top-1 recognition rates for Baseline 1 (Color Histogram) and Baseline 2 (Gait Energy Image).

| Method | Dynamic occlusions | Static occlusions |
|---|---|---|
| Baseline 1 | 43.7% | 70.0% |
| Baseline 1 + ours | 84.3% | 87.5% |
| Baseline 2 | - | - |
| Baseline 2 + ours | 67.2% | 72.7% |

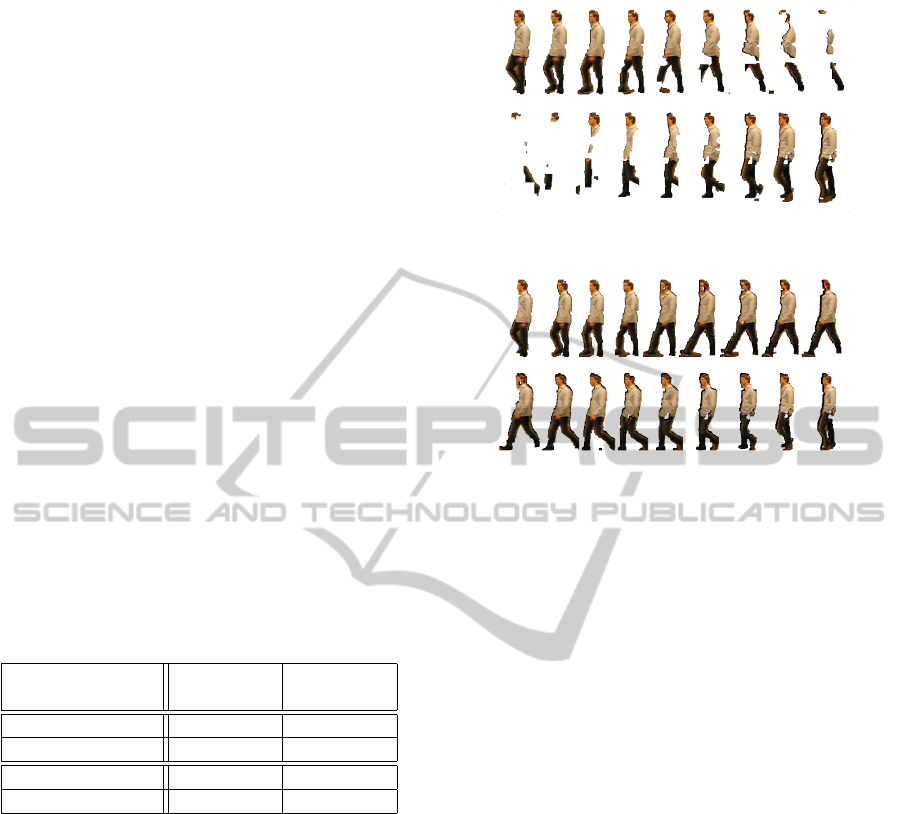

Figure 4 shows qualitative results of the proposed reconstruction method: the corrupted gait cycle is successfully reconstructed.

5 CONCLUSIONS

In this paper we have presented a preprocessing stage that reconstructs complete gait cycles, so that gait recognition remains possible despite occlusions. In principle, this preprocessing can be combined with any gait recognition algorithm. Our experiments show that such preprocessing is clearly beneficial in the presence of occlusions.

Figure 4: Example of the reconstruction of a gait cycle that was corrupted by occlusions: (a) sequence with occlusions; (b) reconstructed sequence.

REFERENCES

Boykov, Y., Veksler, O., and Zabih, R. (2001). Fast approximate energy minimization via graph cuts. IEEE TPAMI, 23(11):1222-1239.

Bugeau, A. and Pérez, P. Track and cut: Simultaneous tracking and segmentation of multiple objects with graph cuts.

Delong, A., Osokin, A., Isack, H. N., and Boykov, Y. (2010). Fast approximate energy minimization with label costs. CVPR.

Farnebäck, G. (2002). Polynomial Expansion for Orientation and Motion Estimation. PhD thesis, Linköping University, Sweden.

Han, J. and Bhanu, B. (2006). Individual recognition using gait energy image. IEEE TPAMI, 28(2).

Hofmann, M., Sural, S., and Rigoll, G. (2011). Gait recognition in the presence of occlusion: A new dataset and baseline algorithms. In International Conferences on Computer Graphics, Visualization and Computer Vision (WSCG).

Kolmogorov, V. and Zabih, R. (2004). What energy functions can be minimized via graph cuts? IEEE TPAMI, 26(2):147-159.

Lee, L. and Grimson, W. E. L. (2001). Gait analysis for recognition and classification. MIT Artificial Intelligence Lab, Cambridge.

Wang, L., Tan, T., Ning, H., and Hu, W. (2003). Silhouette analysis-based gait recognition for human identification. IEEE TPAMI, 25(10).
