LAYOUT FOR LEARNING

Designing an Interface for Students Learning to Program

Suzan Badri

Academic Development Centre, Kingston University, London, U.K.

James Denholm-Price, James Orwell

Faculty of Computing, Information Systems and Mathematics, Kingston University, London, U.K.

Keywords:

Content delivery, Presentation, Programming.

Abstract:

Many students have difficulty learning to program. It is conjectured that this difficulty may be increased by

a disorganisation of the resources available to the student while they are learning. The unfamiliarity of terms

and concepts, and frustration with mysterious errors, is exacerbated by the struggle with multiple windows and

the attempt to memorize patterns which would be better viewed concurrently. As a consequence, a layout for

learning programming is proposed: the aim of the proposal is to ensure that the students can easily arrange for

the relevant resources to be displayed concurrently, without further manipulation of the application windows.

Three types of resource are considered: the editor, the question sheet (instructions) and further reference

resources such as glossaries, descriptions of concepts and common tasks. An HTML template is proposed

to accommodate these last two types of resource. It is designed to allow all three materials to be positioned

and selected and thereby allow for the concurrent display of the relevant resources. An evaluation of these

proposals is presented, and the prospects for further development are considered.

1 INTRODUCTION

Over the past decade, on-line learning materials have

become commonplace – especially for Information

Technology subjects such as Computer Programming.

This ‘E-learning’ material has facilitated worldwide

interaction and the exchange of information between

field experts and learners. It created instant access to

global resources, increased communication, and en-

abled the possibility to share and publish information

to a global audience (Dabbagh, 2005).

The effectiveness of the e-Learning material de-

pends on many factors. These include: the relevance

and quality of the material; the extent of support from

peers and instructors; and the fitness of the IT hard-

ware used for this purpose. The format and layout

of the e-learning material is also an important factor.

This is especially true in a subject area such as Com-

puter Programming, which students often find bewil-

dering and frustrating. A short review of previous

work in this area is provided in Section 2.

E-learning resources are usually the product of a

process that involves developing content, storing and

managing content, packaging content, student sup-

port and assessment (Govindasamy, 2001). This pa-

per proposes a simple layout styling for instructions

and reference material. The design considerations are

discussed in Section 3.The focus is: how the mate-

rial is presented to the student, and how the interac-

tion is planned. A simple html implementation is pre-

sented in Section 4. The emphasis is on straightfor-

ward, easily packaged material, without requirements

for server-side functions, since these may inhibit the

applicability and sustainability of the material. The

on-going evaluation is described in Section 5. A dis-

cussion of further work and some concluding remarks

are provided in Section 6.

2 PREVIOUS WORK

2.1 e-Learning Theories and Systems

Developers of e-learning resources should consider

the usability and accessibility of the resources as a

324

Badri S., Denholm-Price J. and Orwell J..

LAYOUT FOR LEARNING - Designing an Interface for Students Learning to Program.

DOI: 10.5220/0003346403240332

In Proceedings of the 3rd International Conference on Computer Supported Education (CSEDU-2011), pages 324-332

ISBN: 978-989-8425-49-2

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

priority. These considerations may reduce or prevent

the digital divide’ (Ardito et al., 2006). The digital di-

vide is a concept that describes the difference between

those who have access to on-line resources and those

who have not; or between users and non-users. The

digital inequality is an extension to describe the in-

equality in having tools, equipments, resources, sup-

port, skills, and cognitive abilities for those who are

already on-line (DiMaggio and Hargittai, 2001).

One framework for e-learning, proposed by Dab-

bagh (Dabbagh, 2005), proposed three components:

Pedagogical models, Instructional and learning strate-

gies and Learning technologies. Pedagogical models

are cognitive models or theoretical structures orig-

inated from the knowledge about cognitive process

that helps the learner to encode information into their

memory. They are based on learning theory and they

provide a link from theory to practice. The instruc-

tional and learning strategies are derived from the

Pedagogical models: the instructor and the designer

can use these strategies to plan how the learner can en-

gage with learning process. The result of translating

a pedagogical model into a strategy can result into a

number of activates for the learner; the learning tech-

nologies are the tools to present a case or a problem

using graphics, video, audio, animation and hypertext

(Dabbagh, 2005).

The aim of developing an educational tool should

be to help students to learn, therefore it is essential

that the tool must be engaging, support the learn-

ing outcome, intuitive and natural (Ardito et al.,

2006). The way in which the tasks are presented to

the learner should follow effective usability guide-

lines, and effective evaluation methodologies to im-

plement usable interfaces. Often, e-learning systems

are electronic transcript of the traditional material

could be presented through unfriendly system, con-

fusing menu, many clicks, different screens, unrelated

tags. This may reduce the learner’s motivation and at-

titude. Arguably, much of students’ activity within

Virtual Learning Environment is administrative, such

as reading announcements and downloading lecture

notes.

The success of e-learning resources depends on

the level of its usability: if the system isn’t usable,

students can be distracted, and instead of trying to

learn how to use it, they only use it to obtain content.

As students are learning new concepts, they need an

easy-to-use system with updated content. Staff, on the

other hand, will be looking for a system that they can

keep the content updated (Ardito et al., 2006).

2.2 Challenges Specific to Learning

Programming

The activity of learning to programme is itself a skill

(Wolf, 2003). Learning a programming language re-

quires familiarity with a set of skills and processes

(Jenkins, 2002). Jenkins describes that the hierarchy

of skills starts with learning the basics of the syntax,

semantics, structure which leads to learning a specific

style. A typical approach for programmers is as fol-

lows: the process starts with a specification; this is

translated into an algorithm, the algorithm is mapped

to a set of instructions based on previous experience

and then finally the algorithm is translated into pro-

gram code. The process of learning to programme

has been described to be ‘systematic, incremental and

non-linear’. Beginners are encouraged to start with

small lines of code, test them, and sometimes re-think

the prior structure of the programme (Bennedsen and

Caspersen, 2005).

First-year university students are usually taught

programming by a weekly lecture and practical ses-

sion. The lectures introduce them to the theory which

covers concepts, program syntax and methods. The

students are required to complete practical sessions

which require learning a combination of skills: the

structure and syntax of the programming language,

the integrated development environment (IDE) and

the development process. Students must also solve

a series of questions and this is usually is part of the

module assessment.

In the practical sessions, students must simultane-

ously marshal the necessary on-line documents and

editors, switching their attention between them as

necessary. They are faced with a limited screen size

and they are typically novice users of the tools. The

user may become lost and confused while trying to

find the relevant information (Lif et al., 2001). Some

students may face what is known as ‘cognitive over-

load’ (Mayer and Moreno, 2003): a term used to de-

scribe what learners, in particular beginners, can face

when asked to use multiple systems. An increase in

learners’ cognitive load may result in a decrease in

students’ motivation to learn (Wolf, 2003).

Jenkins discusses ‘cognitive factors’ (Jenkins,

2002): the motivation to learn is one of these factors

that Jenkins considers may explain why students find

programming difficult. He suggests that some stu-

dents have natural motivation to learn programming,

while some have a motivation to succeed and endure

a successful career and other are pressured by their

social surroundings and families.

It has been suggested by Hodges that students who

are motivated to learn could have a better chance to

LAYOUT FOR LEARNING - Designing an Interface for Students Learning to Program

325

succeed compared with those who are not. Students

who are learning well will have a higher level of mo-

tivation to learn in the future (Hodges, 2004).

Jenkins also suggests that students’ learning style

is another cognitive factor in the difficulties found

by students to learn to program. However, with

a traditional classroom one teacher to many stu-

dents, it’s difficult to have an individualised learn-

ing style (Wolf, 2003). The creators of the iweaver’

project have designed a tool to accommodate indi-

vidual learning styles in an adaptive e-learning envi-

ronment that teaches the Java programming language.

The project was an e-learning resource with dynam-

ically adapted digital content might be an effective

method for personalised learning.

2.3 Existing Approaches

Here, the e-learning resources and specific projects

used in computer programming will be discussed.

The dynamic approach in teaching programming is

to use or to integrate e-learning resources. Record-

ings of teaching materials is one approach, this can be

achieved either by setting a lecture theatre for record-

ing the teacher while they demonstrate the solutions,

this will involve the use on an IDE, on-line documen-

tation, testing and debugging. These recording could

also be streamed.

2.3.1 Videos of Screen Captures

These are pre-recorded tutorials and solutions to pro-

gramming problems using screen capture software.

They are known as ’process recording’ (Bennedsen

and Caspersen, 2005) or Screencasts (Lee et al.,

2008). The teacher talks through the problem and the

student can follow on their own pace and view the

record over a number of times. This type of resources

has been proved to be popular and successful with stu-

dents and teachers (Bennedsen and Caspersen, 2005).

There is a number of recording software, such as

Camtesia, Captivate and Window Media Encoder and

Editor, there is no need to extra software or a special

studio to create these tutorials. The videos can be de-

livered independently or embedded in a web-page and

delivered within a Virtual Learning Environment.

2.3.2 i weaver

The’ i weaver’ project was developed to highlight the

importance of students’ different learning styles in

an adaptive e-learning environment that teaches Java

programming language (Wolf, 2003). This project

used an inventory to assess the students’ learning

styles, it used a 118 multiple choice question based on

the Dunn & Dunn learning style model. Before enter-

ing the learning environment, the students receive an

analysis of their learning style profile. When the stu-

dents enter the learning environment they presented

with two out of four media experience (Visual text,

visual pictures, interactive animations and auditory

media experience.) This is to decrease the cognitive

overload (Wolf, 2003). The learning content are di-

vided into modules, the modules have units, learners

can repeat a unit using a different media experience

or move to the next unit. The learner is asked to rate

their experience and overall satisfaction. The set of

multiple choices tests are also conducted after each

module.

2.3.3 BlueJ

The BlueJ software is an integrated development envi-

ronment. It is designed to teach introductory Java pro-

gramming language using a set of resources, exam-

ples and resources based on a pedagogical approach.

The creators of BlueJ aimed to make the environ-

ment an object oriented through using visual methods

that help to represent objects and classes and the re-

lationship between them. They demonstrate that their

method of visualisation and the frequent interaction

with single objects and methods can give the students

a better understating of the Java language. They also

claim that BlueJ has a simple user interface with min-

imal distractions. This can also help to increase stu-

dents’ understanding of the programming principles

(K

¨

olling, 2008)

3 DESIGN CONSIDERATIONS

The point of departure, for designing the on-screen

layout of workshop material, is that the student should

easily be able to arrange for all relevant information

to be concurrently displayed. We consider there to be

three main elements of this material:

1. The set of workshop questions, possibly including

snippets of source code or starting programs.

2. Reference material, for example glossaries, ‘lec-

ture note’-style descriptions of concepts, and in-

structions how to complete common tasks.

3. The ‘development environment’: this could be a

text editor with compile command or command

line, or an ‘integrated development environment’

Using previous observations of students’ interaction

with learning material, the following issues were

noted:

CSEDU 2011 - 3rd International Conference on Computer Supported Education

326

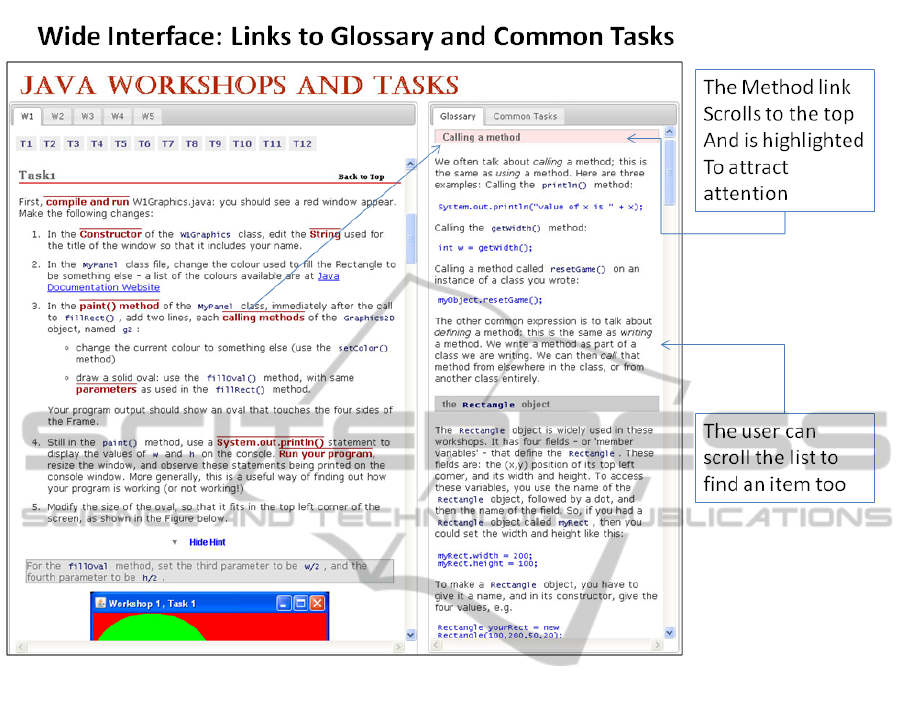

Figure 1: The proposed two-column interface with some example content. Left: Workshop instructions (tasks) with hints that

can be revealed. Right: two tabs of content containing a glossary of terms, and descriptions of common tasks and concepts.

1. Many students would have difficulty in locating

the relevant supporting material. For example,

they would not always locate the exact lecture

slides that provide the help that they need (nor

recognize those slides if they had located them).

Or they would use an internet search, and then

use retrieved material that was not always appro-

priate (such as integrating some more advanced

techniques that they had not yet covered).

2. Most students would sometimes have difficulty in

organising all the material that they had located.

For example, relatively few students would at-

tempt to organise the windows side-by-side; in-

stead they alternate their attention between full

screen windows.

3. Many students would seem to encounter less dif-

ficulties in learning computer programming if the

task of locating the relevant information, and ar-

ranging it on-screen, were easier.

4. A programming task typically has a series of com-

ponents which must be competed in order. De-

termining the amount of detail that should be

presented to the student is a difficult balance.

Too much detail, and the overall objective be-

comes obscured and the student becomes reliant

on word-by-word instructions. Too little detail,

and students not knowing how to start the task are

left without any clear route.

A general point about the on-screen layout of material

can be made. The aspect ratio of computer screens is

‘landscape’, and increasingly so: from 4:3 to 16:9,

with HD resolution of 1920 by 1080. This is use-

ful for arrangement of multiple columns of content,

side by side. We plan for the three elements of ma-

terial listed to each be displayed in a separate column

of the student’s display. The ‘development environ-

ment’ is a separate (single column) application win-

dow, occupying one-third of the display width. How-

ever, there are advantages for integrating the work-

shop questions, and reference material, into a single

web browser window, having two columns and occu-

pying two-thirds of the display.

These advantages are several-fold. Firstly, as a

single window on the student’s display, it is easier

LAYOUT FOR LEARNING - Designing an Interface for Students Learning to Program

327

for the student to organise and in particular trivial

to arrange to that both questions and reference ma-

terial are visible. Secondly, hyperlinks can be used

to the Workshop content area to the relevant parts of

reference material, which would be displayed concur-

rently in the reference content area. Finally, there is

arguably an advantage in making an explicit require-

ment on the content author, to provide adequate refer-

ence material.

Thus, the first design decision is a two-column or-

ganisation of the content, with question-specific ma-

terial on the left and general reference material on the

right. The second design decision is to provide a fa-

cility for detail or hints about a particular task to be

initially hidden, and only revealed when requested by

the student. This allows the overall flow of the tasks

to be viewed, and also encourages students to attempt

the questions before revealing the hint and using the

source code it provides.

The design of the interface will reflect the learning

methods for programming which is a combination of

a surface’ and ‘deep’ methods (Jenkins, 2002). The

surface learning style can help to memorise the syn-

tax of the language; the deep learning style gives the

learner the ability to spot a problem and find a solu-

tion based on previous experiences. The design of the

interface will also consider the use of multimedia.

The interface will present some information si-

multaneously and take into account the relationship

between the presentation of information and decision

making and how results from research indicates that

simultaneous presentations of information can lead to

a faster decision making (Lind, 1994).

The other important step is to provide accessible

e-learning resources to a wider audience, users with

different cultural background, cognitive capabilities,

and technical skills. Successful resources will gener-

ate meaningful learning: this term is used to define

the interaction between pedagogical models, instruc-

tional strategies, and learning technologies (Dabbagh,

2005).

3.1 Examples of Similar Designs

Graphical User Interface Applications have several

conventions for organising areas of content. Perhaps

the most sophisticated is the ‘Integrated Development

Environment’ (IDE), used by developers of computer

programs and html content. These environments pro-

vide multiple views of the same content and also pro-

vide links between different content areas. Access

to documentation and associated literature is also in-

cluded in the design. One issue here is that familiarity

with this environment itself takes some time and for

the novice programmer this aspect alone can be a sig-

nificant barrier (K

¨

olling, 2008).

For the more general user, the family of document

preparation applications (such as Microsoft Word,

Powerpoint) provide some more conventions for mul-

tiple content areas. These can use a ’summary’ and

’detail’ area for rapid navigation around a single large

document (or set of slides). There is also facility for

comparing different versions of the same document

side by side. With some applications, the ‘help’ fa-

cility explicitly resizes the original window to ensure

that the user can view this material alongside the ex-

isting window.

In html documents, there are some increasingly

established conventions for the organisation of con-

tent, e.g. internal navigation along the top and

the left of the page, with external and related links

in a right side bar. The use of two column for-

mat for a single document is discouraged, because

the scrollbar control makes it unweildy to navigate.

Only one example was found of two column for-

mat used for two documents in the proposed man-

ner (http://www.xml.com/axml/testaxml.htm). Here,

however, the material in the right ‘reference‘ column

was a collection of footnotes. In the proposed ap-

proach, the reference material is a more organised

body of content that can be read through and browsed

independently.This design, together with indicative

content, is shown in Fig. 1.

4 IMPLEMENTATION

4.1 Content Structure

The proposed design encourages the student to com-

plete the programming tasks using two window

browsers, the interactive workshops window and the

programming editor window. This simultaneous way

of organisation of windows may encourage the stu-

dent to focus on learning and completing the tasks

with to learn about the topic and complete the tasks

by limiting the destruction of multiple open windows.

The use of Frameset technology was considered

and ultimately rejected for this project. This is be-

cause the status of Framesets uncertain in the HTML

5 standard, there are issues regarding accessibility and

visibility of the resources.

The interface has two versions: a ‘Wide Interface’

and a ‘Small Interface’. The Wide Interface is de-

signed to give the student maximum view of content

and navigation. The Small Interface is designed for

smaller screens and other mobile devices such as the

CSEDU 2011 - 3rd International Conference on Computer Supported Education

328

‘ipad’. The Small Interface is presents the same con-

tent but with a different layout and naviagation.

In the design for a wide screen, the interface will

be presented as a webpage with two column struc-

ture. The left side column will contain a list of tasks

for each workshop. Each task could have hints’ that

would help the student to complete the task. The de-

fault status for the hint will be closed, this is to give

the student the option to view the hint or not.

The right column of the interface contain a tab

menu, the menu has two items, the Glossary and the

Common tasks. The glossary items are terminologies

that the student can refer to, such as, the definition of a

Constructor or the definition of a Method. The Com-

mon Tasks, are a set of instructions that are common

to the process of solving tasks, for example, How to

Create a Constructor’ is a common task that a number

of tasks will require creating a constructor.

4.2 Styling and Interaction

Both columns have independent scrollbars, the user

can scroll down the tasks column without effecting

the Glossary and Common Tasks column. The links

from the tasks column target the appropriate tab and

anchors the correct item, a distinctive animation and

background colour is used to attract the user attention,

the animation are colour fades in a settle motion, this

way the user is aware of what they have clicked.

In the design for the small screen display, the in-

terface is a one column web-page. The tasks are dis-

played with the same style as the Wide Layout. The

Glossary items and the Common Tasks pop out using

a hyper link. Or the user can scroll down the page and

locate an item.

The transition between the two styles is deter-

mined by the use of the appropriate Cascading Style

Sheet using Media Query, this allows the layout to be

changed to suit the screen or a device without chang-

ing the content.

The interface design has separate content from

style approach. The content are tagged and styled us-

ing HTML and CSS, to update or edit the content, the

teacher can use any HTML editor. All the defined

styles are stored in the CSS file, the Jquery code is

stored in a separate file.

5 EVALUATION STRATEGY

A usability study was an essential component of the

development of the Wide and the Small Interfaces.

The goals for this study were: to establish which In-

terface is preferred by users; and to highlight the de-

sign issues in order to improve efficiency, user sat-

isfaction and the effectiveness of the Interface as a

learning tool. During the development of the Inter-

face, several evaluation methods were being used.

Firstly, a Heuristic Evaluation’ (Nielsen, 1994) was

carried out during the iterative design process. This

helped to identify some elementary usability prob-

lems which were fixed during the design and imple-

mentation phase. Secondly, a Systematic Usability

Evaluation’ methodology was employed. This com-

bines a rigorous functional inspection with user-based

evaluation. Some studies (Ardito et al., 2006) have

outlined that these methods are complementary and

they can be combined for obtaining a reliable eval-

uation process. Thirdly, the Wide and the Small In-

terfaces were evaluated using an Inspection Method’,

using usability guidelines and requirements to test the

actual Interface. At first, simple test content was used,

moving on to indicative content when appropriate.

This method was designed to measure learn-ability,

ease of use, efficiency, helpfulness, satisfaction and

positive attitude towards programming.

A User-based Study was organised: students were

invited to participate in the study. The students were

asked to complete a number of programming tasks us-

ing three Interfaces. These three Interfaces were as

follows:

• The existing resources (Microsoft Word and Pow-

erPoint documents accessed via Blackboard)

• The Wide Interface

• The Small Interface.

Their performance was captured using the Morae’

software (Techsmith, 2010). This software can be

used to observe the user while they attempt to com-

plete the tasks: to capture their interaction during the

usability test and to record their comments. The soft-

ware can also be used to design questionnaires, re-

lease them when the user completes a task and store

all the gathered results. The results can be analyzed

and presented using a report template generated by

the software. All the participants were asked to ver-

balise their thoughts and decisions while they com-

plete the tasks. They were asked to complete three

sets of questionnaires, one before they start the tasks,

one after each task and a final set of questions and an

interview at the end of the test. This gave them the

opportunity to express which Interface they preferred

and give recommendations for improvements. The

observation during the study helped to collect data to

measure the participants’ task success rate, time taken

to complete the task using each Interface and to record

help, assistance or errors made by the participant.

The gathered data from the usability study were

LAYOUT FOR LEARNING - Designing an Interface for Students Learning to Program

329

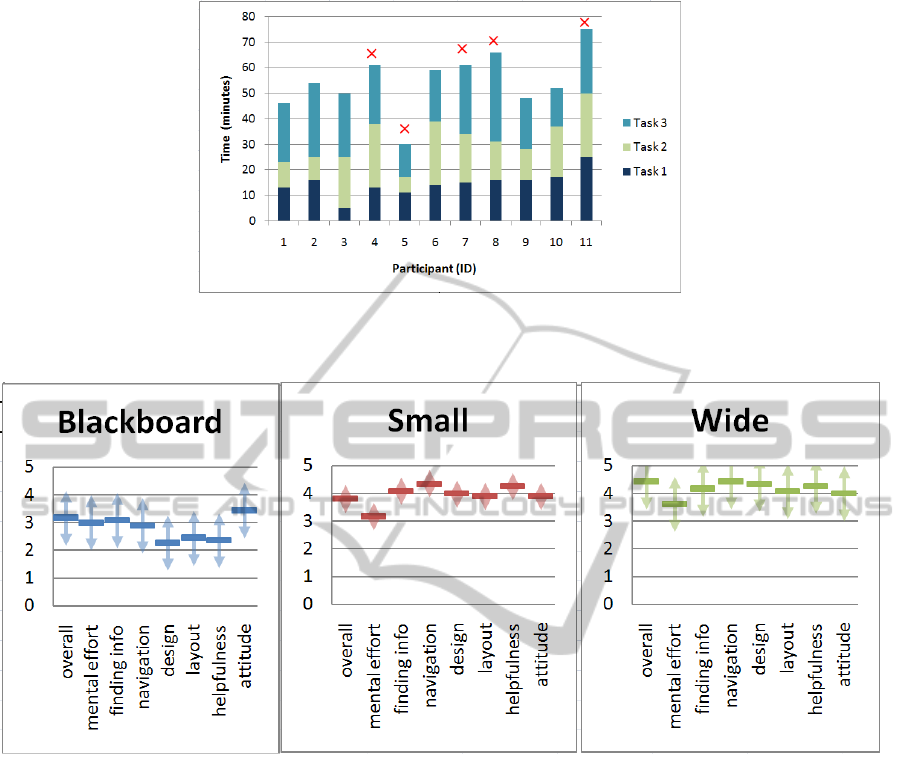

Figure 2: Time on Task is shown for each participant using different colours; the participants who didn’t complete Task 3 are

shown with an x’.

Figure 3: Arrows indicating one standard deviation either side of the mean response from all participants.

organised, analysed and presented into categories that

reflected the requirements and the actual usability

study and some additional area based on the partic-

ipants’ experience. The raw data collected from the

surveys were based on rating values given to a certain

criteria, such as the mental effort or the ease of use

for each Interface. These data were used to make a

graphical representation of the results. Another type

of data was collected through observing the partici-

pants, interviews after each task and the feedback that

each participant gave at the end of the study.The par-

ticipants will be referred to as P’ with their allocated

number.

One example of the performance indicators mea-

sured in the trials, was the Time on Task’ and Task

Success’. The participants’ ability to complete the

tasks on time varied considerably. They all man-

aged to complete Task 1 and Task 2 within (or nearly

within) the allocated time. The allocated time was

20 minutes: extra time of 5 minutes was given when

needed. Task 3 took longer, and it wasn’t completed

by five participants as shown in Fig. 2 (the red x indi-

cated the participants who didn’t complete Task 3).

Fig. 3 shows the mean response (over all 11 par-

ticipants) from the questionnaire, for each of the cate-

gories listed above, for each of the three Interfaces.

We can see that, in all categories, the same order

of preference is maintained. The Wide Interface is

most preferred, followed by the Small Interface. The

Blackboard Interface is least preferred. In the follow-

ing Section, we consider whether this preference is

statically significant.

5.1 Statistical Significance Analysis

The responses from participants indicated some pref-

erence towards the Wide and Small Interfaces (in that

order). Some further analysis can be undertaken to es-

CSEDU 2011 - 3rd International Conference on Computer Supported Education

330

Table 1: Significance tests for comparison of the three In-

terfaces.

t 1-tail 2-tail

Bb vs Small 1.606 6.2% 12.4%

Bb vs Wide 4.146 0.025% 0.05%

Small vs Wide 1.898 3.6% 7.2%

tablish whether the participants’ responses indicates a

significantly different attitude to the three different In-

terfaces. To perform this significance test’, we will es-

timate the probability that the mean responses would

differ by at least as much as was actually observed,

given the Null Hypothesis’, i.e. that all the responses

were really drawn from the same distribution, and the

observed differences were just down to random vari-

ations.

The number of participants (11) is quite small for

this type of analysis. For this reason we do not at-

tempt to look for significant differences in the vari-

ance. So the alternative hypothesis is that the differ-

ent Interfaces prompt the participants to give a differ-

ent mean level of response (between 0 and 5), with a

common variance. We will only apply the test to the

overall’ question, to give an indication of the signif-

icance of the results. Therefore there are three sig-

nificance tests to be performed, all using the overall’

question responses: Blackboard vs. Small, Black-

board vs. Wide, and Small vs Wide. The Student

T’ distribution is used to test whether the two mea-

sured mean response values are significantly different.

Writing the Blackboard and Small mean responses as

µ

b

and µ

s

, with variances σ

2

b

and σ

2

s

, the test statistic

t

bs

will be defined as

t

bs

=

µ

b

− µ

s

σ

bs

p

2/n

(1)

Where

σ

bs

=

s

σ

2

b

+ σ

2

s

2

(2)

and n is the number of participants, 11. Correspond-

ing expressions can be derived for the other two sig-

nificance tests, t

bw

and t

sw

. Thes t values are then used

to obtain the p-values, which is the probability of ob-

taining this difference (or more), given the Null Hy-

pothesis. These values can be either one-tailed (look-

ing only one way for a difference) or two-tailed (look-

ing both ways for a difference). In all cases the num-

ber of degrees of freedom (used in calculating the p-

values) is 2n − 2 = 20. These values are expressed as

percentages in Table 1.

The last two columns indicate that the only highly

significant comparison that could be made is between

the responses about the Blackboard Interface and the

responses about the Wide Interface: there is only a

0.05% chance that this degree of difference would

have been generated by chance. The other compar-

isons are on the threshold of being significant: more

participants would be needed to conclusively test the

hypotheses that, for example, the Small Interface is

preferred over the Blackboard, or that the Wide Inter-

face is preferred over the Small one. Fig. 3 depicts the

mean values of all responses, adding arrows to indi-

cate one standard deviation in the sets of responses. It

is interesting to note that the response to the Small In-

terface has smaller variance than the other two. This

may be for example due to the fact that it was always

the first component of the usability trial.

6 CONCLUSIONS AND FURTHER

WORK

This paper has investigated how the layout materials

and resources can have an impact on the effectiveness

on a programming activity. An Interface has been de-

veloped, to allow Programming Tasks and Learning

Material to be presented in a single browser. The lec-

turer’s observation of the students’ interaction had a

big influence in the design, implementation and eval-

uation of the project. The feedback from the students

has given useful guidelines for future improvements

and evaluations. During the design of this learning ex-

perience every effort was made to increase students’

motivation to understand and learn more about pro-

gramming.

To ensure that this is a sustainable resource, the

academic content needs to be easily edited and ap-

pended. There is a balance of arguments on this

point. On one hand, a relatively simple HTML mark-

up file (with style sheets and scripts stored elsewhere)

provides a standalone resource, easily deployed and

hand-edited. On the other hand, the avoidance of con-

tent duplication, the provision for authors unfamiliar

with HTML, the requirements for concurrent editing

and version control, all mitigate in favour of some

central content management system that uses server-

side processing to dynamically generate the page.

The current design includes two of the three re-

quired resources. If server-side processing is in-

cluded, it would be possible to include other resources

such as a program editor within the overall design.

This has the advantages of further simplifying and

controlling the layout of the learning resources, al-

lowing student work to be centrally stored and moni-

tored, and also providing a simple Interface with web

programming environments.

The reference resources currently are Glossary

LAYOUT FOR LEARNING - Designing an Interface for Students Learning to Program

331

and Common Tasks. Further resources could also

be considered for inclusion such as third party ref-

erence texts, web-logs (blogs’) for discussion by stu-

dents about the current task, and other learning me-

dia such as audio descriptions and animations of the

problem and the solution.

The development of two Interfaces gives the stu-

dents a choice. Students with access to a wide screen

can choose between the Wide and the Small Interfaces

by simply resizing the browser. The Wide Interface

will be appropriate for students who wish to see the

Learning Material all the time. The Small Interface

will be appropriate for students who wish to see the

Learning Material when they need help. Also, stu-

dents who wish or have to use smaller screens, the

Small Interface would be more suitable.

A usability study was conducted using three dif-

ferent layouts of materials and resources to investigate

the impact on the effectiveness on a programming ac-

tivity. The use of the Interface to solve the tasks and

find relevant information may depend on specific dif-

ferences between students. However, all the partici-

pants managed to use the Interfaces and organise the

IDE window as required and anticipated. The way the

participants arranged their windows when attempting

to solve the Task using the Blackboard Interface was

also as predicted. The problems included too many

open windows at one time, and difficulty finding help

for the Blackboard Interface.

During the usability study, participants lacking in

prior knowledge used the resources more than stu-

dents who had a higher prior knowledge level. One

of the main observations was the use of Learning Ma-

terial as a help system: it is important to understand

how the help is being used, as this is in itself a skill.

The Interface should have a valuable help system,

rich in content. Help-seeking behaviour may reflect

students’ attitudes about learning, their achievements

and goals. Learners can be helped to be more produc-

tive and encouraged towards an independent way of

thinking and problem-solving.

The students’ preferences for the Interface dif-

fered in accordance with their level of experience, in-

terest and expectations. The overall experience of us-

ing the Wide and Small Interfaces by the students was

positive. The fundamental principal is that the lay-

out of resources should be maintained to reflect their

role in the student’s learning activity: the editor, the

instructions, and the background materials that assist

them. It is hoped that this structure can provide a use-

ful learning framework.

REFERENCES

Ardito, C., Costabile, M., Marsico, M., Lanzilotti, R.,

Levialdi, S., Roselli, T., and Rossano, V. (2006). An

approach to usability evaluation of e-learning appli-

cations. Universal Access in the Information Society,

4(3):270–283.

Bennedsen, J. and Caspersen, M. (2005). Revealing

the programming process. ACM SIGCSE Bulletin,

37(1):186–190.

Dabbagh, N. (2005). Pedagogical models for E-Learning:

A theory-based design framework. International Jour-

nal of Technology in Teaching and Learning, 1(1):25–

44.

DiMaggio, P. and Hargittai, E. (2001). From the Digital Di-

videtoDigital Inequality: Studying internet use as pen-

etration increases. Princeton Center for Arts and Cul-

tural Policy Studies, Working Paper, 15.

Govindasamy, T. (2001). Successful implementation of e-

Learning:: Pedagogical considerations. The Internet

and Higher Education, 4(3-4):287–299.

Hodges, C. (2004). Designing to motivate: motivational

techniques to incorporate in e-learning experiences.

The Journal of Interactive Online Learning, 2(3):1–7.

Jenkins, T. (2002). On the difficulty of learning to program.

In Proceedings of the 3rd Annual Conference of the

LTSN Centre for Information and Computer Sciences,

pages 53–58.

K

¨

olling, M. (2008). Using bluej to introduce programming.

Reflections on the Teaching of Programming, pages

98–115.

Lee, M., Pradhan, S., and Dalgarno, B. (2008). The effec-

tiveness of screencasts and cognitive tools as scaffold-

ing for novice object-oriented programmers. Journal

of Information Technology Education, 7:62–80.

Lif, M., Olsson, E., Gulliksen, J., and Sandblad, B.

(2001). Workspaces enhance efficiency–theories, con-

cepts and a case study. Information Technology &

People, 14(3):261–272.

Lind, M. (1994). Effects of sequential and simultaneous

presentations of information. Report no. 9, CMD, Up-

psala University.

Mayer, R. and Moreno, R. (2003). Nine ways to reduce

cognitive load in multimedia learning. Educational

psychologist, 38(1):43–52.

Nielsen, J. (1994). Usability inspection methods. In Con-

ference Companion on Human Factors in Computing

Systems, pages 413–414. ACM.

Techsmith, M. (2010). Usability Testing for Software and

Websites. http://www.techsmith.com/morae.asp.

Wolf, C. (2003). iWeaver: towards ‘learning style’-based e-

learning in computer science education. In Proceed-

ings of the fifth Australasian conference on Comput-

ing education-Volume 20, pages 273–279. Australian

Computer Society, Inc.

CSEDU 2011 - 3rd International Conference on Computer Supported Education

332