THE CLOUD@HOME ARCHITECTURE

Building a Cloud Infrastructure from Volunteered Resources

Antonio Cuomo

Dip. di Ingegneria, Università degli Studi del Sannio, Benevento, Italy

Giuseppe Di Modica

Dip. di Ingegneria Elettrica, Elettronica ed Informatica, Università di Catania, Catania, Italy

Salvatore Distefano

Dip. di Matematica, Università di Messina, Messina, Italy

Massimiliano Rak

Dip. di Ingegneria dell’Informazione, Seconda Università di Napoli, Aversa, Italy

Alessio Vecchio

Dip. di Ingegneria dell’Informazione, Università di Pisa, Pisa, Italy

Keywords:

Cloud computing, Volunteer computing, IaaS, SLA, QoS, Sensor, Mobile agent.

Abstract:

Ideas coming from volunteer computing can be borrowed and incorporated into the Cloud computing model. The result is a volunteer Cloud where the infrastructure is obtained by merging heterogeneous resources offered by different domains and/or providers, such as other Clouds, Grid farms, clusters, and datacenters, down to single desktops. This new paradigm maintains the benefits of Cloud computing (such as service-oriented interfaces, dynamic service provisioning, and guaranteed QoS) as well as those of volunteer computing (such as the use of idle resources and reduced operating costs). This paper describes the architecture of Cloud@Home, a system that mixes both worlds by providing mechanisms for aggregating, enrolling, and managing the resources, and that takes into account SLA and QoS requirements.

1 INTRODUCTION

The core idea of this work is the implementation of a volunteer Cloud, where the infrastructure is built on top of resources voluntarily shared (for free or for a charge) by their owners or administrators (Cloud Providers or CPs), following a volunteer computing approach. Since this new paradigm merges Volunteer and Cloud computing goals, it is named Cloud@Home (C@H for short). It can be considered a generalization and a maturation of the @home philosophy, knocking down the (hardware and software) barriers of Volunteer computing by allowing providers to share more general services. According to this new paradigm, user resources are not only a passive interface to Cloud-based services, but can actively contribute to creating a larger infrastructure. To make this possible, the different providers must be able to interoperate with each other. The Cloud@Home paradigm could also be applied to commercial Clouds, establishing an open computing-utility market where users can both buy and sell their resources as services. This can be done at different scales: from the single contributing user, who shares his/her desktop, to research groups, public administrations, social communities, and small and medium enterprises, which share their computing resources with the Cloud.


Cuomo A., Di Modica G., Distefano S., Rak M. and Vecchio A.

THE CLOUD@HOME ARCHITECTURE - Building a Cloud Infrastructure from Volunteered Resources.

DOI: 10.5220/0003391404240430

In Proceedings of the 1st International Conference on Cloud Computing and Services Science (CLOSER-2011), pages 424-430

ISBN: 978-989-8425-52-2

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

The main goals of Cloud@Home are:

• a) Volunteer Cloud. Cloud@Home aims at providing mechanisms and tools for the management of the resources contributed by different providers. This is useful to convert the investments made in Grid computing and similar distributed systems into Cloud computing infrastructure, and/or to combine internal resources with external resources for managing peaks or bursts of workload;

• b) Interoperability among Clouds. Implementation of mechanisms and tools for achieving interoperability among different Clouds. In this way, any Cloud, either commercial, such as Amazon EC2 or Azure, or open, based on Eucalyptus, Nimbus and similar platforms, can share resources in C@H, thus becoming a CP for the latter. This will make it possible to implement a large cross-Cloud infrastructure able, for example, to reduce costs by mixing free volunteer CPs with commercial CPs;

• c) Integration of Sensing Elements. Definition and implementation of mechanisms and tools for building a Cloud where sensing elements can participate from an Infrastructure-as-a-Service (IaaS) perspective. The set of virtualizable resources can be extended, for instance, to the sensing peripherals available on mobile devices. In this way, the sensing infrastructure can be virtualized and offered on a volunteer basis. The global result is a sort of virtualized computer where processing, storage, and input/output (in the form of sensing peripherals) are all composed through the aggregation of heterogeneous resources. To the best of our knowledge, this is one of the first attempts to actively involve sensors in Cloud infrastructures.

The final aim of the Cloud@Home project is therefore the design and implementation of a software substrate able to manage such a complex infrastructure and achieve the previously mentioned goals. This requires addressing several sub-problems, including: i) aggregation of heterogeneous resources; ii) monitoring and management of resources according to specified QoS requirements; iii) guaranteeing the quality offered by volunteers through the use of flexible Service Level Agreements (SLAs); iv) offering the aggregated resources from a service-oriented perspective, as is typical of the Utility/Cloud computing approach.

The remainder of the paper is organized as follows: Section 2 introduces the C@H architecture; Sections 3, 4 and 5 detail the specific parts of this architecture; Section 6 draws the conclusions.

Figure 1: Resource aggregation from many Cloud Providers. [Diagram: a User accesses the C@H FrontEnd, which aggregates resources from Microsoft Azure, Amazon EC2, Eucalyptus, IBM Cloud, C@H Cloud Providers, and yet other Cloud Providers.]

2 OVERVIEW

Cloud@Home aims at collecting infrastructure resources (computing power, storage and sensors) from many different resource providers and offering them through a uniform interface, from an IaaS perspective. As depicted in Figure 1, the resources to be offered are gathered from different providers, which can vary from typical Cloud Providers to PCs running dedicated software or smartphones offering their sensors.

We define as Resource Owner any private or public subject that owns resources (basically, hardware systems such as computational and storage elements, or sensing devices such as smartphones) and that allows Cloud@Home to access them. In the context of Cloud@Home, the role of Cloud Provider is extended to also include the Resource Provider, who may offer many different types of resources using an as-a-Service approach. The main goal of Cloud@Home is to provide a set of tools to build up a new, enhanced provider of resources (namely, a C@H Provider) that is not yet another classic Cloud Provider, but rather acts as a resource aggregator: it collects resources from many different Cloud Providers, which rely on different technologies and adopt heterogeneous resource management policies, and offers such resources to the users. For example, a C@H Provider can aggregate virtual machines obtained from a commercial Cloud Provider like Amazon EC2 with virtual machines that run on the PCs of a university lab.

According to this approach, we identify two Cloud@Home actors, with the following roles:

C@H User: interacts with the C@H Provider in order to obtain resources. C@H Users are unaware of the nature of the obtained resources. From the C@H Users' point of view, the heterogeneity of the Cloud Providers from which resources are collected is completely hidden: they simply demand resources from a C@H Provider, which in turn is in charge of collecting and delivering the needed (virtualized) resources.

C@H Admin: builds up and manages the C@H Provider. The C@H Admin is the manager of the C@H infrastructure and, in particular, is responsible for its activation, configuration and maintenance. There can be just one C@H Admin per C@H Provider.

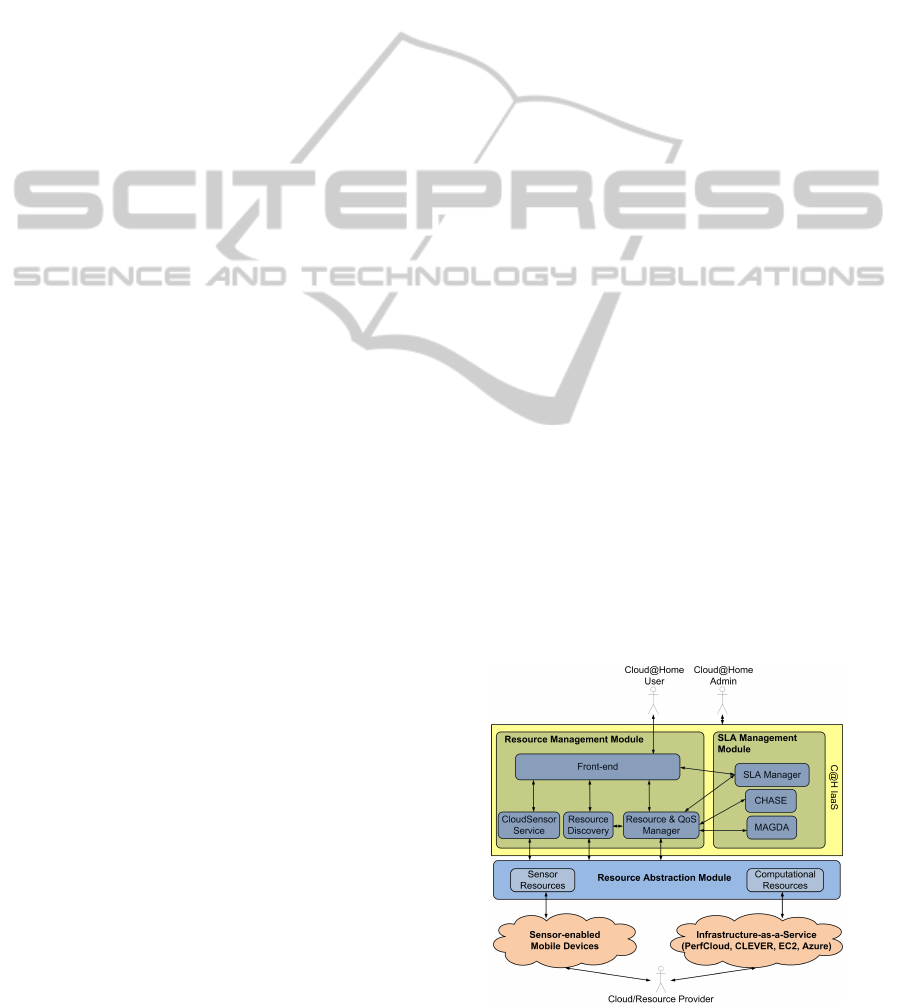

The C@H Provider offers, along with basic services dedicated to the collection of volunteer-based and commercial resources, a set of tools and services for Service Level Agreement (SLA) management, monitoring and enforcement. The whole set of functionalities supplied by the C@H Provider is organized into three modules.

The Resource Abstraction Module hides the heterogeneity of the resources collected from Cloud Providers (computing/storage elements, sensor devices) and offers the C@H User a uniform way to access them. The module provides a layer of resource virtualization that abstracts each resource from the specific provider it is taken from. Thanks to specific virtualization functionalities, resources can be obtained from personal computers (in terms of computing power and storage) and from mobile handheld devices (in terms of sensed data).

On top of the Resource Abstraction Module, C@H provides tools for managing the QoS to be delivered to the C@H Users. Formal guarantees on the performance of resources are offered through the SLA Management Module. C@H Users are given the possibility to negotiate the quality level of the requested resources, and are offered contracts (SLAs) that provide guarantees on the resource provision. The negotiation process relies on a performance prediction tool to assess the sustainability of users’ requests. A mobile agent-based monitoring function can also be activated to collect information on the actual performance of the delivered resources.

The Resource Management Module is responsible for the provision of resources. A Resource Discovery tool collects and indexes the resources available to the C@H Users. The enforcement of SLAs is carried out by the Resource and QoS Manager, which basically takes care of resource management (allocation, de-activation, re-allocation) according to the SLA goals. A Frontend acts as an interface that, on the one hand, gathers the users’ requests and, on the other, dispatches them to the appropriate system module.

Figure 2 introduces the C@H system architecture. The topmost part of the figure shows the main components of the C@H IaaS; the resource providers are shown at the bottom. The modules described so far are implemented in terms of C@H Components, which interact with each other through standardized, service-oriented interfaces. This choice enables flexible component deployment strategies. Pushing the virtualization paradigm to its limits, a single C@H Component can even be offered as a customized virtual machine hosted by any Cloud Provider. The presented approach can thus be described as a PaaS Cloud system that offers basic components for building up a Cloud@Home Provider according to the IaaS model.

3 RESOURCE ABSTRACTION

Cloud@Home classifies resources according to the kind of resource managed, i.e., into resource classes. At present, Cloud@Home supports two resource classes: Computational resources, which are managed in terms of Virtual Machines and/or Virtual Clusters, and Sensor resources, which are managed through a service-based interface.

Cloud@Home mainly acts as an intermediary, acquiring resources from different resource providers (possibly Cloud Providers) and delivering them to users. Cloud@Home provides three acquisition modalities for obtaining resources from Cloud Providers: charged, if they are obtained for a charge from commercial Cloud Providers; open, if obtained from Cloud Providers delivering resources for free (like academic clusters, possibly subject to internal administration policies and restrictions); volunteer, if obtained from Resource Owners who voluntarily support Cloud@Home (like a laboratory providing machines outside working hours). Cloud@Home offers different ways of setting up appropriate Providers for the computing resource classes. To enable the integration of Charged Resource Providers, Cloud@Home can interact with some of the main commercial Cloud platforms, like Amazon EC2 and Microsoft Azure. Open and Volunteer computing resources are supported through specific frameworks.

Figure 2: The C@H system architecture.
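The three acquisition modalities and the uniform access offered by the Resource Abstraction Module can be illustrated with a minimal Python sketch. Everything in it is an assumption for illustration: `ResourceProvider`, `FakeProvider` and `acquire` are hypothetical names (not the Cloud@Home, PerfCloud, CLEVER or EC2 APIs), and the policy of preferring volunteer, then open, then charged resources is only one possible way of reducing costs by mixing providers.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from enum import Enum
from typing import List


class Modality(Enum):
    CHARGED = "charged"      # commercial Cloud Providers, for a fee
    OPEN = "open"            # free providers, e.g. academic clusters
    VOLUNTEER = "volunteer"  # Resource Owners volunteering their machines


@dataclass
class VirtualMachine:
    provider: str
    vm_id: str


class ResourceProvider(ABC):
    """Hypothetical uniform interface that hides provider-specific APIs."""

    def __init__(self, name: str, modality: Modality):
        self.name = name
        self.modality = modality

    @abstractmethod
    def create_vm(self) -> VirtualMachine:
        """Allocate one VM, or raise RuntimeError if none is available."""


class FakeProvider(ResourceProvider):
    """In-memory stand-in for a real adapter (EC2, PerfCloud, CLEVER, ...)."""

    def __init__(self, name: str, modality: Modality, capacity: int):
        super().__init__(name, modality)
        self.capacity = capacity
        self.allocated = 0

    def create_vm(self) -> VirtualMachine:
        if self.allocated >= self.capacity:
            raise RuntimeError(f"{self.name}: no capacity left")
        self.allocated += 1
        return VirtualMachine(self.name, f"{self.name}-vm{self.allocated}")


def acquire(providers: List[ResourceProvider], n: int) -> List[VirtualMachine]:
    """Collect n VMs, trying volunteer, then open, then charged providers."""
    preference = [Modality.VOLUNTEER, Modality.OPEN, Modality.CHARGED]
    ranked = sorted(providers, key=lambda p: preference.index(p.modality))
    vms: List[VirtualMachine] = []
    for provider in ranked:
        while len(vms) < n:
            try:
                vms.append(provider.create_vm())
            except RuntimeError:
                break  # this provider is exhausted; move to the next one
    if len(vms) < n:
        raise RuntimeError("not enough resources across all providers")
    return vms


lab = FakeProvider("unilab", Modality.VOLUNTEER, capacity=2)
ec2 = FakeProvider("ec2", Modality.CHARGED, capacity=10)
print([vm.vm_id for vm in acquire([ec2, lab], 3)])
# ['unilab-vm1', 'unilab-vm2', 'ec2-vm1']
```

The caller never sees which provider type served each VM beyond an opaque identifier, which mirrors the idea that C@H Users are unaware of the nature of the obtained resources.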

In order to implement a first prototype of Cloud@Home, we chose to adopt two distinct frameworks, namely PerfCloud (Mancini et al., 2009) and CLEVER (Tusa et al., 2010). PerfCloud addresses the problem of transforming typical academic facilities like clusters or Grids into Cloud Resource Providers, so it is suited to delivering Open Computational Resources. CLEVER aims at providing Virtual Infrastructure Management services and suitable interfaces to enable the integration of high-level features such as Public Cloud Interfaces, Contextualization, Security and Dynamic Resource provisioning; it adopts a strongly decentralized approach, leveraging P2P protocols and fault-tolerance mechanisms in order to cope with host volatility.

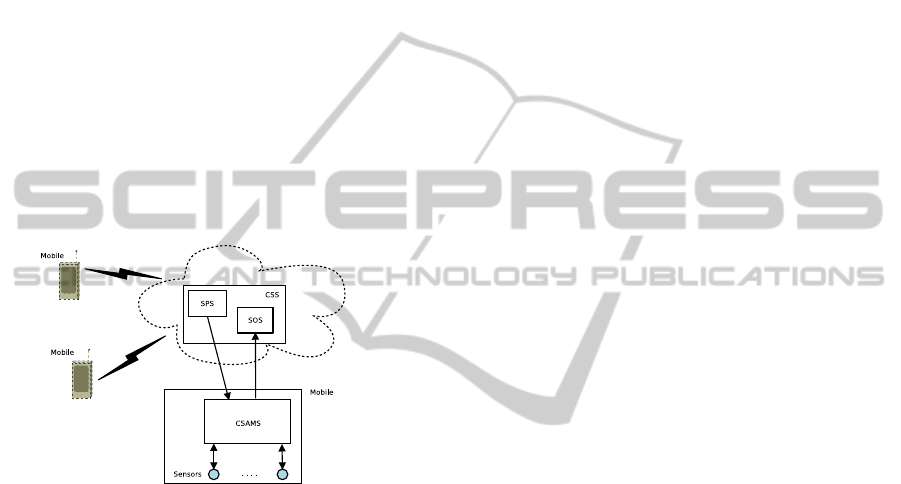

Figure 3: Architecture of the CloudSensor subsystem.

CloudSensor is the subsystem of Cloud@Home that defines the mechanisms enabling the integration of the sensors commonly available on smartphones, such as the accelerometer, GPS, microphone, etc., within the Cloud infrastructure. In this way, applications and services will be able to access a possibly large set of heterogeneous sensors through a service-based interface. CloudSensor (Figure 3) is composed of two subsystems: the CloudSensor Service (CSS) and the CloudSensor Acquisition and Management System (CSAMS). CSS is hosted within the “fixed” Cloud infrastructure and receives the acquisition requests coming from client applications (i.e., the applications that make use of sensing information). Requests are forwarded to CSAMS, which runs on the mobile device and provides the sensor resource abstraction. CSAMS is in charge of receiving the requests coming from the fixed Cloud, evaluating the feasibility of each request (with respect to the user’s preferences), carrying out the sensing activity, buffering the results and then transferring them to the Cloud.
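The CSAMS steps just listed (feasibility check against the owner's preferences, sensing, buffering, transfer) can be outlined as a small Python sketch. It is illustrative only: `UserPreferences`, `read_sensor` and `upload` are hypothetical stand-ins for the device owner's policy, the platform sensor API and the link to the CSS, none of which are specified in the text.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class SensingRequest:
    sensor: str   # e.g. "gps", "accelerometer", "microphone"
    samples: int  # number of readings requested


@dataclass
class UserPreferences:
    """Hypothetical owner-side policy on what the device may share."""
    allowed_sensors: Set[str]
    max_samples: int = 100


@dataclass
class CSAMS:
    prefs: UserPreferences
    read_sensor: Callable[[str], float]  # hypothetical hook to the device API
    buffer: List[float] = field(default_factory=list)

    def is_feasible(self, req: SensingRequest) -> bool:
        return (req.sensor in self.prefs.allowed_sensors
                and req.samples <= self.prefs.max_samples)

    def handle(self, req: SensingRequest,
               upload: Callable[[List[float]], None]) -> bool:
        """Evaluate feasibility, sense, buffer, then transfer to the Cloud."""
        if not self.is_feasible(req):
            return False
        for _ in range(req.samples):
            self.buffer.append(self.read_sensor(req.sensor))
        upload(list(self.buffer))  # hand the buffered batch to the CSS side
        self.buffer.clear()
        return True


readings = iter([43.1, 43.2, 43.3])
device = CSAMS(UserPreferences({"gps"}), lambda sensor: next(readings))
sent: List[float] = []
print(device.handle(SensingRequest("gps", 3), sent.extend))         # True
print(device.handle(SensingRequest("microphone", 1), sent.extend))  # False
print(sent)  # [43.1, 43.2, 43.3]
```

Buffering before the upload lets a device batch its readings instead of keeping a connection to the fixed Cloud open for every sample.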

4 RESOURCE MANAGEMENT

Cloud@Home providers can manage different kinds of resources, mainly acting as intermediaries, i.e., delegating the management to resource owners or Cloud Providers. Thus, Cloud@Home providers search for Cloud/resource providers and, on behalf of the final C@H Users, acquire resources that will eventually be delivered to them.

The Frontend component has two main roles in the Cloud@Home architecture: i) it acts as an access point for the C@H Users in order to let them acquire resources; ii) it provides the reference links to all the Cloud@Home components, thus operating as a glue entity for the Cloud@Home architecture. In order to provide a user-friendly and intuitive interface to the system, the external side of the Frontend will be developed as an extensible Web Portal that can also be deployed into a virtual machine, in order to simplify the setup of the infrastructure access point for the C@H Admin. Internally, the C@H Frontend hosts the clients for all the other C@H components: these clients can be easily configured by uploading a configuration file containing the references (URL, IP address, WS Endpoint Reference) to all the active components. The Web portal side of the C@H Frontend contains some simple predefined Web modules able to start the C@H component clients and invoke the C@H services.
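How the Frontend might turn such a configuration file into component clients can be sketched in Python. The paper does not define the file format, so the JSON layout, the component keys, the `"epr"`/`"url"`/`"ip"` reference types and the `example.org` endpoints below are all hypothetical, and `invoke` is a placeholder rather than a real SOAP or REST call.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict

# Hypothetical configuration file: component names, reference types and
# endpoints are illustrative only, not the project's actual format.
CONFIG = """
{
  "SLAManager":        {"type": "epr", "ref": "http://sla.example.org/ws/SLAManager"},
  "RQM":               {"type": "url", "ref": "http://rqm.example.org/ws/RQM"},
  "ResourceDiscovery": {"type": "ip",  "ref": "10.0.0.12:8080"}
}
"""


@dataclass
class ComponentClient:
    name: str
    ref_type: str  # "url", "ip" or "epr" (WS Endpoint Reference)
    endpoint: str

    def invoke(self, operation: str, **params: Any) -> Dict[str, Any]:
        # Placeholder: a real client would perform a SOAP/REST call here.
        return {"component": self.name, "endpoint": self.endpoint,
                "operation": operation, "params": params}


def load_clients(config_text: str) -> Dict[str, ComponentClient]:
    """Create one client per active component listed in the configuration."""
    entries = json.loads(config_text)
    return {name: ComponentClient(name, e["type"], e["ref"])
            for name, e in entries.items()}


clients = load_clients(CONFIG)
reply = clients["SLAManager"].invoke("listTemplates")
print(reply["endpoint"])  # http://sla.example.org/ws/SLAManager
```

Keeping all endpoints in one uploaded file means a C@H Admin can relocate a component (for example, into a new virtual machine) by editing a single entry.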

The Resource & QoS Manager (RQM) component implements the management of (physical and virtual) resources in C@H. It is responsible for managing the Cloud resources and services needed to achieve the application requirements imposed by the SLA Manager. This task is therefore performed by also taking into account the QoS requirements specified during the SLA negotiation process with the C@H User. The RQM also plays an important role during the negotiation phase, for which the SLA Manager is responsible: it identifies a possible resource configuration by querying the Resource Discovery component, which, in collaboration with CHASE, provides an estimate of the performance that can be guaranteed by such a configuration. Other important RQM functionalities are the management of virtual machines and virtual clusters (creation/destruction/migration) and the coordination of monitoring activities, according to the QoS requirements to satisfy. QoS monitoring is implemented by invoking the service offered by MAGDA, which can deploy an autonomous agent network able to monitor the resources and the QoS they provide. Based on both the monitoring data thus obtained and the information provided by CHASE, the RQM specifies the enforcement policy able to ensure that the requested QoS is attained.

The Resource Discovery component carries out the discovery task. It has to manage the dynamics of the CPs that voluntarily contribute to C@H by providing their physical resources, since they can asynchronously join and leave the infrastructure without any notification. It therefore has to implement mechanisms and tools for the enrolment and management of volunteered resources and CPs. The discovery tool manages the list of registered resource/Cloud providers, as well as the kind and amount of resources they are willing to offer C@H.
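Because volunteers may leave without notification, a discovery registry cannot wait for an explicit "leave" message. One common way to handle this, assumed here and not stated in the paper, is a lease: each provider periodically sends a heartbeat, and offers whose lease has lapsed are dropped. The class and method names below are hypothetical, and time is passed explicitly to keep the sketch deterministic.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Offer:
    kind: str         # e.g. "vm", "storage", "sensor"
    amount: int       # how many units the provider is willing to offer
    last_seen: float  # time of the last heartbeat received


class ResourceDiscovery:
    """Registry of enrolled providers; one offer per provider for brevity."""

    def __init__(self, lease_seconds: float):
        self.lease = lease_seconds
        self.offers: Dict[str, Offer] = {}

    def enroll(self, provider: str, kind: str, amount: int, now: float) -> None:
        self.offers[provider] = Offer(kind, amount, now)

    def heartbeat(self, provider: str, now: float) -> None:
        if provider in self.offers:
            self.offers[provider].last_seen = now

    def _expire(self, now: float) -> None:
        # Volunteers may leave without notice: drop offers whose lease lapsed.
        stale = [p for p, o in self.offers.items()
                 if now - o.last_seen > self.lease]
        for p in stale:
            del self.offers[p]

    def available(self, kind: str, now: float) -> Dict[str, int]:
        self._expire(now)
        return {p: o.amount for p, o in self.offers.items() if o.kind == kind}


rd = ResourceDiscovery(lease_seconds=30)
rd.enroll("lab-pc-1", "vm", 2, now=0)
rd.enroll("lab-pc-2", "vm", 4, now=0)
rd.heartbeat("lab-pc-1", now=25)
print(rd.available("vm", now=40))  # {'lab-pc-1': 2}
```

The lease length trades freshness against heartbeat traffic: a short lease detects departures quickly but costs more messages from every volunteer.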

The CloudSensor Service accepts the sensing requests coming from the client applications, dispatches the requests to the relevant mobile devices, and returns the results to the client applications. CSS is made accessible to the client applications by means of the Frontend. CSS is designed according to the guidelines of the Sensor Web Enablement (SWE) architecture (Botts et al., 2008), specified by the Open Geospatial Consortium, and is composed of two subsystems. A first service, implemented as an instance of the Sensor Planning Service (SPS) of the SWE architecture, receives the sensing requests from client applications. This service acts as a broker between client applications and the set of mobile devices able to perform the sensing activity. A second service, implemented as an instance of the Sensor Observation Service (SOS) of the SWE architecture, provides an interface to request, filter and retrieve the results of the sensing activity. Thanks to its storage facility, it acts as a decoupling element.
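The division of labour between the two CSS services can be illustrated with a simplified in-process Python sketch: an SPS-like broker forwards a task to every capable device, and results are written to an SOS-like store from which clients later filter and retrieve them. The class names are hypothetical, and the sketch does not reproduce the actual OGC SPS/SOS operations or encodings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Observation:
    device: str
    sensor: str
    time: float
    value: float


class ObservationStore:
    """SOS-like role: keeps results so clients can retrieve and filter them."""

    def __init__(self) -> None:
        self._obs: List[Observation] = []

    def insert(self, obs: List[Observation]) -> None:
        self._obs.extend(obs)

    def get(self, sensor: str, t_from: float = float("-inf"),
            t_to: float = float("inf")) -> List[Observation]:
        return [o for o in self._obs
                if o.sensor == sensor and t_from <= o.time <= t_to]


Handler = Callable[[str], List[Observation]]


class PlanningBroker:
    """SPS-like role: forwards a sensing task to every capable device."""

    def __init__(self, store: ObservationStore) -> None:
        self.store = store
        self.handlers: Dict[str, Handler] = {}
        self.capabilities: Dict[str, Set[str]] = {}

    def register(self, device: str, sensors: List[str], handler: Handler) -> None:
        self.handlers[device] = handler
        self.capabilities[device] = set(sensors)

    def submit(self, sensor: str) -> int:
        """Dispatch the task; results land in the store, not in the reply."""
        capable = [d for d, s in self.capabilities.items() if sensor in s]
        for device in capable:
            self.store.insert(self.handlers[device](sensor))
        return len(capable)


store = ObservationStore()
sps = PlanningBroker(store)
sps.register("phone-1", ["gps"],
             lambda s: [Observation("phone-1", s, 10.0, 43.72)])
sps.register("phone-2", ["gps", "accelerometer"],
             lambda s: [Observation("phone-2", s, 12.0, 43.71)])
print(sps.submit("gps"))                   # 2
print(len(store.get("gps", t_from=11.0)))  # 1
```

Since `submit` returns only how many devices were tasked, the client reads the data from the store whenever it chooses; this is the decoupling that the storage facility provides.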

5 SERVICE LEVEL AGREEMENT MANAGEMENT

One of the most important problems to consider in Cloud contexts is Service Level Agreement management. The volunteer contribution in C@H dramatically complicates the SLA management task, and therefore new strategies must be adopted to satisfy the QoS requirements. In C@H, SLA management is implemented through the interaction of three components, the SLA Manager, CHASE and MAGDA, briefly described in the following.

The SLA Manager component aggregates and offers C@H Users the templates of the SLAs published by the Cloud Providers that C@H interacts with. The C@H User can refer to these templates to start a negotiation procedure with the SLA Manager, which will eventually produce an SLA. Since resources (which are the object of the SLA) are virtualized, and can be provided by several different Cloud Providers adopting different management policies, we argue that SLAs are a fundamental means to guarantee QoS in such a heterogeneous environment. A rigid vision of the SLA is not suitable to this context. In order to face possible fluctuations of the resources’ availability, and to best satisfy the dynamic needs and expectations of the C@H users, the SLA must be flexible and adaptable to the context (DiModica et al., 2007). The SLA Manager will adopt a new flexible scheme of negotiation and re-negotiation of the QoS. According to this scheme, whenever violations of the SLA’s guarantees are about to occur, run-time (i.e., during the service provisioning) re-negotiations and modifications of the guarantees are allowed to take place. On a successful re-negotiation, the SLA is modified accordingly and the QoS level is adjusted; should the re-negotiation fail, the SLA is not modified and still applies to the resource provisioning. With voluntarily offered resources, resource providers can deliberately decide to share or withdraw their resources at any time, so the fluctuation of the resources’ availability is a major issue to face. Flexible SLAs aim at preserving the continuity of the resource provisioning, which is certainly a goal for both the C@H Users and the providers of resources. Finally, when the agreement has been reached (i.e., both the end user and the C@H Provider agree on the SLA terms), the SLA is passed to the RQM, which is responsible for its enforcement. The SLA Manager will also provide the end user with an endpoint reference through which the SLA can be monitored.
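The run-time re-negotiation rule (modify the SLA only on success, otherwise keep the original one in force) can be captured in a few lines of Python. This is a sketch under stated assumptions: the `SLA` fields, the version counter, and the policy of proposing a relaxed response-time bound at a 20% discount are all hypothetical and only illustrate one possible re-negotiation offer.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class SLA:
    max_response_ms: float  # guaranteed upper bound on response time
    price_per_hour: float
    version: int = 1


def renegotiate(sla: SLA, proposal: SLA,
                user_accepts: Callable[[SLA], bool]) -> SLA:
    """Adopt the proposal only if the user agrees; otherwise the original
    SLA is left unchanged and keeps applying to the provisioning."""
    if user_accepts(proposal):
        return replace(proposal, version=sla.version + 1)
    return sla


def on_violation_risk(sla: SLA, predicted_ms: float,
                      user_accepts: Callable[[SLA], bool]) -> SLA:
    """Hypothetical trigger: if the predicted response time would break the
    guarantee, propose a relaxed bound at a discounted price."""
    if predicted_ms <= sla.max_response_ms:
        return sla  # no violation foreseen, nothing to renegotiate
    proposal = SLA(max_response_ms=predicted_ms,
                   price_per_hour=round(sla.price_per_hour * 0.8, 4))
    return renegotiate(sla, proposal, user_accepts)


current = SLA(max_response_ms=200, price_per_hour=0.10)
updated = on_violation_risk(current, 260, lambda p: p.price_per_hour < 0.10)
print(updated.max_response_ms, updated.price_per_hour, updated.version)
# 260 0.08 2
```

Making `SLA` immutable means a failed re-negotiation cannot partially alter the agreement: the function either returns the untouched original or a new versioned object.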

CHASE is the component in charge of delivering reliable predictions of the performance of the applications running in the Cloud. It evolves from an existing framework for the autonomic performance management of service-oriented architectures (Casola et al., 2007). CHASE’s main components are an optimization engine and a trace-driven discrete-event simulator. Applications can be modeled through a formalized description of the application components, for which an XML-based language, MetaPL, can be used. In the context of this project, CHASE plays multiple roles. Firstly, it is invoked during the service negotiation phase: when the SLA Manager needs to evaluate the sustainability of user requests, it transmits to the RQM component a formalized description of the QoS level explicitly requested by the user. The RQM forwards this description to the CHASE simulator, along with an application description and information about the current state of the system, in terms of resource availability and load. CHASE uses the application description to build traces that can be fed to the simulator, the status information to update the platform description, and the QoS description to build a parametrized objective function to be minimized (or maximized, depending on the nature of the parameters). The optimization engine drives the simulator to explore the space of possible configurations and informs the RQM whether or not a configuration meeting the demands has been found. CHASE can also be invoked by the RQM component when the monitoring system signals that the QoS agreed in the SLA is at risk of violation: by performing new simulations in the up-to-date setting, it informs the RQM whether alternative scheduling decisions (like migrating or adding more VMs) can solve the problem or whether the violation is unavoidable, in which case the SLA will have to be renegotiated or terminated.
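The general technique described for CHASE, an optimizer that drives a trace-driven discrete-event simulator across candidate configurations, can be illustrated with a toy Python sketch. It is not CHASE: the FCFS multi-server queue model, the objective (number of VMs times a cost per VM, subject to a mean-response-time bound) and the exhaustive search over 1..`max_vms` are our assumptions, and the trace is a plain list of (arrival, service) pairs rather than one derived from MetaPL.

```python
import heapq
from typing import List, Optional, Tuple

# A job trace: (arrival_time, service_time) pairs, in arbitrary time units.
Trace = List[Tuple[float, float]]


def simulate(trace: Trace, n_vms: int) -> float:
    """Trace-driven discrete-event simulation of a FCFS queue served by
    n_vms identical VMs; returns the mean response time."""
    free_at = [0.0] * n_vms  # min-heap of times at which each VM becomes free
    total = 0.0
    for arrival, service in sorted(trace):
        start = max(arrival, heapq.heappop(free_at))  # earliest free VM
        finish = start + service
        heapq.heappush(free_at, finish)
        total += finish - arrival
    return total / len(trace)


def optimize(trace: Trace, max_resp: float, cost_per_vm: float,
             max_vms: int) -> Optional[Tuple[int, float, float]]:
    """Explore configurations with 1..max_vms VMs and return the cheapest
    (n_vms, cost, mean_response) meeting the QoS bound, or None."""
    best = None
    for n in range(1, max_vms + 1):
        resp = simulate(trace, n)
        cost = n * cost_per_vm  # objective function to be minimized
        if resp <= max_resp and (best is None or cost < best[1]):
            best = (n, cost, resp)
    return best


trace = [(0, 4), (1, 4), (2, 4), (3, 4)]
print(simulate(trace, 1), simulate(trace, 2))  # 8.5 5.0
print(optimize(trace, max_resp=5.0, cost_per_vm=0.1, max_vms=4))
# (2, 0.2, 5.0)
```

A `None` result corresponds to the case where no configuration meets the demands, which in the scheme above would lead the RQM to reject, renegotiate or terminate the SLA.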

The MAGDA component is a service hosted in a virtual machine that implements the MAGDA mobile agent platform (Aversa et al., 2007). A mobile agent is a software agent with an added feature: the capability to migrate across the network together with its own code and execution state, following both a pull and a push execution model (Xu and Wims, 2000). MAGDA is developed using and extending JADE, a FIPA-compliant agent platform developed by TILAB (Bellifemine et al., 2001). The MAGDA platform hosts a set of agents able to perform different kinds of benchmarks, varying from simple local data sampling (current CPU usage, available memory, ...) to distributed benchmarking (evaluating distributed data collection, or evaluating the global state with snapshot algorithms). Moreover, the mobile agents are able to update an archive of the measurements in order to perform historical data analysis. In the Cloud@Home context, the migration capabilities help to carry out on-site (i.e., on the resource itself) monitoring. The RQM and the SLA-oriented components use the mobile agents to monitor the delivered resources. Performance indexes are collected and used to enforce the SLAs. If the C@H User explicitly requests the monitoring service, mobile agents are instructed to migrate to the place where the resources have been activated and start monitoring their performance at application level. It is also possible to customize the monitoring procedure and remotely check the state of the resources, following either a push (agents autonomously provide information on resources) or a pull (agents are polled in order to get information on resources) communication model.
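The difference between the push and pull communication models can be shown with a small Python sketch of an agent that has already reached its target resource. It is deliberately not the MAGDA or JADE API (JADE is a Java platform, and migration is not modelled here); `MonitoringAgent`, `probe`, `query`, `tick` and the threshold-based push trigger are hypothetical names and choices for illustration.

```python
from typing import Callable, Dict, List

Sample = Dict[str, float]


class MonitoringAgent:
    """Stand-in for a mobile agent that has migrated onto a resource.
    It is not the MAGDA/JADE API; it only illustrates pull vs. push."""

    def __init__(self, resource: str, probe: Callable[[], Sample]) -> None:
        self.resource = resource
        self.probe = probe                # local sampling, e.g. CPU usage
        self.history: List[Sample] = []   # archive for historical analysis
        self.subscribers: List[Callable[[str, Sample], None]] = []

    def query(self) -> Sample:
        """Pull model: a manager (e.g. the RQM) polls the agent."""
        sample = self.probe()
        self.history.append(sample)
        return sample

    def subscribe(self, callback: Callable[[str, Sample], None]) -> None:
        self.subscribers.append(callback)

    def tick(self, metric: str, threshold: float) -> None:
        """Push model: the agent samples on its own schedule and notifies
        subscribers only when the metric exceeds the threshold."""
        sample = self.query()
        if sample[metric] > threshold:
            for notify in self.subscribers:
                notify(self.resource, sample)


values = iter([0.35, 0.92])
agent = MonitoringAgent("vm-7", lambda: {"cpu": next(values)})
alerts = []
agent.subscribe(lambda res, s: alerts.append((res, s["cpu"])))
agent.tick("cpu", threshold=0.9)  # 0.35: nothing is pushed
agent.tick("cpu", threshold=0.9)  # 0.92: subscribers are notified
print(alerts)  # [('vm-7', 0.92)]
```

Pull keeps the manager in control of the sampling rate, while push reduces traffic when most samples are uninteresting; both paths record into the same history, supporting the historical analysis mentioned above.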

6 CONCLUSIONS

The idea of exploiting unused computing and storage resources is also at the basis of Volunteer computing, according to which private users’ resources are voluntarily aggregated to serve distributed, mostly scientific, applications. This work builds on the idea of exploiting voluntarily offered resources (data sensed from mobile devices, single desktops, private or public companies’ data centers) to build general-purpose Clouds that then re-offer the resources on an “as-a-service” basis. The main objective of the work is to propose a framework (Cloud@Home) for the integration of both commercial and volunteer-based Clouds, aggregating resources from heterogeneous environments and offering users extra services to monitor and guarantee the quality of the provided resources. The architecture of Cloud@Home has been described in this paper. In the future, we plan to include security requirements among the service level objectives to be guaranteed, and to add a module for C@H Users’ billing and accounting management.

ACKNOWLEDGEMENTS

The work described in this paper has been partially supported by the MIUR-PRIN 2008 project “Cloud@Home: a New Enhanced Computing Paradigm”.

REFERENCES

Aversa, R., Martino, B. D., Rak, M., and Venticinque, S. (2007). A framework for mobile agent platform performance evaluation. In WOWMOM, pages 1–8. IEEE.

Bellifemine, F., Poggi, A., and Rimassa, G. (2001). JADE: a FIPA2000 compliant agent development environment. In Agents, pages 216–217.

Botts, M., Percivall, G., Reed, C., and Davidson, J. (2008). OGC sensor web enablement: Overview and high level architecture. In Nittel, S., Labrinidis, A., and Stefanidis, A., editors, GeoSensor Networks, volume 4540 of Lecture Notes in Computer Science, pages 175–190. Springer Berlin / Heidelberg.

Casola, V., Mancini, E. P., Mazzocca, N., Rak, M., and Villano, U. (2007). Building autonomic and secure service oriented architectures with MAWeS. In Autonomic and Trusted Computing, volume 4610 of Lecture Notes in Computer Science, pages 82–93, Berlin (DE). Springer-Verlag.

DiModica, G., Regalbuto, V., Tomarchio, O., and Vita, L. (2007). Enabling re-negotiations of SLA by extending the WS-Agreement specification. In IEEE International Conference on Services Computing, pages 248–251.

Mancini, E. P., Rak, M., and Villano, U. (2009). PerfCloud: Grid services for performance-oriented development of cloud computing applications. In WETICE, pages 201–206.

Tusa, F., Paone, M., Villari, M., and Puliafito, A. (2010). CLEVER: A cloud-enabled virtual environment. In Computers and Communications (ISCC), 2010 IEEE Symposium on, pages 477–482.

Xu, C.-Z. and Wims, B. (2000). A mobile agent based push methodology for global parallel computing. Concurrency - Practice and Experience, 12(8):705–726.
