A SIMULATED ENVIRONMENT FOR ELDERLY CARE ROBOT

Syed Atif Mehdi, Jens Wettach

Robotics Research Lab, Department of Computer Sciences

University of Kaiserslautern, Kaiserslautern, Germany

Karsten Berns

Robotics Research Lab, Department of Computer Sciences

University of Kaiserslautern, Kaiserslautern, Germany

Keywords:

Elderly care, Simulated environment, Indoor robot.

Abstract:

The population of elderly people is increasing steadily in developed countries. Among many other technological approaches, robotic solutions are being considered for monitoring and health care services for the elderly. It is desirable to validate all aspects of robotic behavior prior to use in an elderly care setup. Such validation and testing is difficult in a real-life scenario, because tests have to be performed repeatedly under the same environmental conditions, and controlling the different parameters of a real environment is hard to achieve. Developing and using 3D simulations is the most beneficial solution in such scenarios, since different parameters can be adjusted and different experiments can be conducted under identical environmental conditions. This paper presents the development of a simulated environment for an autonomous mobile robot, ARTOS. The simulated environment imitates a real apartment and consists of different rooms with a variety of furniture. To make the situation more realistic, an animated human character has also been developed to validate the robotic behavior. As an application scenario, the search for the human character by the robot in the simulated environment is presented, where the simulated human walks through different rooms and the robot tries to find him.

1 INTRODUCTION

With the increase of the elderly population in developed countries, it is becoming necessary to use modern technologies to maintain their standard of living and to provide them with better health care services. Technological advancement makes it possible to detect an accident involving an elderly person at home and even to report the accident immediately to the care-givers. Besides the monitoring devices installed at home, robots are also being used to monitor the aged person. The added benefits of using robots are, among others, that they can

• help the elderly person in performing different

tasks at home

• be a companion to the elderly person

• act as an interaction partner

• be tele-operated in case of an emergency situation.

Figure 1: Autonomous Robot for Transport and Service (ARTOS).

Elderly care robots have to work in a very delicate environment and they have to deal not only with the uncertainty of the environment but also with the uncertainty regarding the elderly person. It is, therefore, fundamentally desired to extensively validate the

working of these robots. Testing and validating the robotic behavior in the real environment is not feasible, since any malfunction can harm the elderly person. Moreover, it is almost impossible to conduct the test cases repeatedly under the same environmental conditions to reproduce and improve the results. Therefore, it becomes necessary to develop a simulated environment that is as close to the real scenarios as possible and that provides all the necessary parameters that can influence the working of the robot.

Atif Mehdi S., Wettach J. and Berns K.
A SIMULATED ENVIRONMENT FOR ELDERLY CARE ROBOT.
DOI: 10.5220/0003411605620567
In Proceedings of the 1st International Conference on Pervasive and Embedded Computing and Communication Systems (SIMIE-2011), pages 562-567
ISBN: 978-989-8425-48-5
Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Autonomous Robot for Transport and Service

(ARTOS), see fig. 1, is being developed to provide

help to the elderly person in transporting different

objects within a home environment and to render tele-operation service between care-givers and the elderly person. It can move autonomously to different rooms, avoiding collisions and planning paths between closely placed furniture and doorways.

In this paper, the development of a simulated apartment-like environment to observe the behavior of ARTOS in a human living environment is presented. The simulation and visualization are based on SimVis3D (Braun et al., 2007; Wettach et al., 2010), and the simulation of a human character complies with the H-Anim¹ standard as discussed in (Schmitz et al., 2010).

This paper is organized as follows. First, a summary of related work is presented in section 2. The development of the simulation is discussed in section 3, with subsections describing different aspects of the simulation. A brief account of the experiments is presented in section 4. Finally, section 5 presents the conclusions and future work.

2 RELATED WORK

Developing a close-to-reality simulation for robotic environments requires a simulation framework that features a range of sensor systems and robotic platforms. It must be capable of handling user-defined structures and must be extensible.

A variety of simulation frameworks are available for developing a 3D simulated environment for robots, but most of them are limited in their functionality and provide little room for extension. Gazebo (Koenig and Howard, 2004) is a 3D simulator for multiple robots. It contains several models of real robots with a variety of sensors such as cameras and laser scanners. Robots and sensors are defined as plugins and the scene is described in XML format.

SimRobot (Laue et al., 2005) uses predefined

generic bodies to construct a robot and allows a set of

sensors and actuators that can be used. It uses ODE

to simulate dynamics. Based on SimRobot, (Laue

and Stahl, 2010) have modeled and simulated an as-

sisted living environment to evaluate maneuvering of

an electric wheelchair.

EYESIM (Koestler and Braeunl, 2004) is a 3D simulation tool for EyeBots. It provides different sensors like camera or bumper, but does not support dynamics. USARSim (Wang et al., 2003) is a simulation tool based on the Unreal Tournament game engine. The 3D scenes can be modeled using the Unreal Editor and dynamics are calculated by the Karma engine.

¹ http://www.h-anim.org

Usually, robotic simulations do not include the simulation of a human character. In the case of a household robot, however, human interaction cannot be avoided. Therefore, if the robot has to work among human beings, it is necessary to evaluate the behavior of the robot in a simulation that includes a human character.

Greggio et al. (2007) simulate a humanoid robot using the USARSim simulation. Similarly, Hodgins (1994) focuses on simulating the running of human beings. Thalmann (2004) discusses the autonomy of a simulated character. However, these simulations are stand-alone and do not address the needs of a household environment.

Although most of the simulation frameworks support a realistic 3D simulation of robots with standard sensors and support for system dynamics, there is still a need for a framework like SimVis3D that is more flexible, allows the usage of custom objects, and is extensible. Besides supporting a variety of robots and environments, the framework at hand is able to realize different movements of autonomous human characters, as discussed in section 3.4.

3 DEVELOPED ENVIRONMENT

The goal of ARTOS is to search for, monitor and inquire about the health of an elderly person and, in case of an emergency situation, to alert the care-givers and establish a communication channel between the resident and the care-givers. For this purpose, autonomous navigation, obstacle avoidance, path planning and tele-operation have been implemented for ARTOS and have been tested in a real environment developed at IESE, Fraunhofer (Mehdi et al., 2009). However, testing the methodologies for searching and monitoring the human being is not an easy task in the real environment. A slight change in the environmental conditions may result in completely different robotic behavior. Therefore, to thoroughly validate a particular behavior of the robot, it is very important to conduct the experiments in exactly identical situations. In such a scenario it seems judicious to develop a simulated environment that is as close to the real environment as possible and that, besides providing static environment information, also provides the dynamics of a real environment.

In order to illustrate the environment developed and to demonstrate the flexibility and the capabilities of SimVis3D, the following subsections discuss the SimVis3D framework (section 3.1), the development of the simulated apartment (section 3.2) and the simulated robot (section 3.3). The simulated human being that is used to help understand the behavior of the robot in the simulation is discussed in section 3.4.

<part file="artos/vis_obj/iese_.wrl" name="LAB" attached_to="ROOT" pose_offset="0 0 0 0 0 0" />
<part file="hanim/yt_002b.wrl" name="Model" attached_to="LAB" pose_offset="4 2 0 90 0 180" />
<part file="artos/vis_obj/artos.iv" name="ARTOS" attached_to="LAB" pose_offset="0 0 0 0 0 0" />
<element name="artos_pose" type="3d Pose Tzyx" position="5 5 0" orientation="0 0 -90"
         angle_type="rad" attached_to="ARTOS"/>
...

Figure 3: A snippet of XML description for the scene shown in fig. 4.

Figure 2: Overview of SimVis3D.

3.1 SimVis3D

SimVis3D is an open source framework based on the widely used 3D rendering library Coin3D², which relies on OpenGL for accelerated rendering. It is compatible with Open Inventor and is capable of generating complex simulations and visualizations for robots and their environments. It was designed to allow users to create custom scenes by combining basic building blocks in a meaningful way. It can be used to visualize and simulate a variety of environmental situations.

Figure 2 depicts the main components of the SimVis3D framework. The visualization module is responsible for visualizing the environments, robots and human characters. It also shows the robot's view of the world. The simulation module simulates and generates the data for actuators and sensors. Currently, it is capable of simulating different kinds of actuators (stepper motors, servo motors etc.), distance sensors (laser scanners, ultrasound and PMD³ cameras), tactile sensors, vision sensors and acoustics (see (Schmitz et al., 2010) for the last aspect). The physics engine module, based on the NEWTON dynamics engine, has been used for vehicle kinematics and biped walking.

² http://www.coin3d.org
³ Photonic Mixer Device

Figure 4: Different views from camera in the environment showing (a) simulated human character and (b) simulated robot.

SimVis3D uses a scene graph data structure to store and render the graphics of the three-dimensional scene. The scene graph is populated from an XML file containing the scene description. Figure 3 gives a glimpse of the XML file that defines a scene and the objects in it. The part element adds arbitrary 3D objects stored in external files, either as Open Inventor models or as .wrl files written in VRML⁴. These subgraphs are inserted at the anchor nodes defined by the attached_to attribute. The element entry is used to define parameters, including pose offset, position etc., for the particular object named in attached_to. The scene developed using the XML scene description file is depicted in fig. 4, showing different views of the camera. This camera can be placed anywhere in the scene to observe any particular aspect of the robotic behavior.
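As a rough illustration of how such a description maps onto a hierarchy of anchor nodes, the following sketch parses the part and element entries of fig. 3 with Python's standard xml.etree.ElementTree module and groups them by their attached_to anchor. The enclosing <scene> root element and the helper names build_scene_tree and print_tree are assumptions made for this example only; the actual SimVis3D loader and file layout may differ.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Entries copied from fig. 3. The <scene> wrapper is a hypothetical root
# element, added only so that the fragment is well-formed XML.
SCENE_XML = """
<scene>
  <part file="artos/vis_obj/iese_.wrl" name="LAB" attached_to="ROOT" pose_offset="0 0 0 0 0 0" />
  <part file="hanim/yt_002b.wrl" name="Model" attached_to="LAB" pose_offset="4 2 0 90 0 180" />
  <part file="artos/vis_obj/artos.iv" name="ARTOS" attached_to="LAB" pose_offset="0 0 0 0 0 0" />
  <element name="artos_pose" type="3d Pose Tzyx" position="5 5 0" orientation="0 0 -90"
           angle_type="rad" attached_to="ARTOS"/>
</scene>
"""


def build_scene_tree(xml_text):
    """Map each anchor name to the names of the entries attached to it."""
    children = defaultdict(list)
    for node in ET.fromstring(xml_text):
        children[node.get("attached_to")].append(node.get("name"))
    return dict(children)


def print_tree(children, anchor="ROOT", depth=0):
    """Print the hierarchy below the given anchor, indented by depth."""
    for name in children.get(anchor, []):
        print("  " * depth + name)
        print_tree(children, name, depth + 1)


tree = build_scene_tree(SCENE_XML)
print_tree(tree)
```

The printed hierarchy shows "LAB" below the root, the human model and "ARTOS" below "LAB", and the pose element below "ARTOS", which mirrors the attachment order described above.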

3.2 3D Model of Apartment

A real apartment has been established at IESE, Fraunhofer to conduct experiments with the real robot. Its area is 60 m² and it is equipped with the furniture necessary for an apartment. Corresponding to this real apartment, a 3D model has been developed using Blender⁵. It was ensured that the dimensions of the different rooms of the 3D model match the real environment. Figure 5 shows the layout of the real apartment and the 3D model of the assisted living facility at IESE, Fraunhofer. A variety of furniture has been added to the visualization to bring it closer to reality.

⁴ Virtual Reality Modeling Language

PECCS 2011 - International Conference on Pervasive and Embedded Computing and Communication Systems

[Floor plan labels in fig. 5(a): DNA, Elderly Person, DEMOCENTER, CareGiver, MONA, iCup, Set Top Box, Camera, TV, RFID, AmiCooler; dimensions 2961mm, 835mm, 4444mm, 1140mm, 2112mm]

Figure 5: (a) Assisted living lab at IESE Fraunhofer and (b) Visualization of assisted living lab.

The visualization of these 3D models is carried out using SimVis3D. The XML description file, containing the mounting positions of the different objects and the desired parameters, is used to place the furniture at appropriate places in the 3D scene. The objects, e.g. furniture, are inserted at the mounting point "LAB" with parameters specifying their location and orientation. Currently these are static objects in the scene, and neither the human nor the robot can move the furniture from its defined location.

3.3 Simulated Robot

The robot for visualization is composed of chassis, wheels, camera and laser scanner. According to the scene description in fig. 3, it has been introduced as the "ARTOS" object in the visualized "LAB" environment. A 3D pose element "artos_pose" is attached to the robot so that it can be moved and rotated in its working space.

The control structure for the movement of ARTOS in the simulated environment is based on the MCA2-KL⁶ framework. It is noteworthy that this is the same control structure that is used by the real ARTOS, and nothing in it needs to be changed when sensors and actuators are simulated. Like the real robot, the simulated ARTOS is equipped with a simulated pan-tilt camera and a simulated laser range finder. Figure 6a shows the view from the camera of the robot and fig. 6b shows the range of the laser scanner.

For autonomous navigation of the simulated ARTOS, the laser scanner is used to generate a grid-map of the environment. This grid-map maintains the information about the obstacles and is used to generate a path for navigation that avoids these obstacles. To detect the human being in the simulation, the pan-tilt camera of the robot is used to detect the face of the human using a Haar Cascade classifier (see fig. 6a).

⁵ http://www.blender.org
⁶ http://rrlib.cs.uni-kl.de/mca2-kl

Figure 6: A simulated view of environment with simulated robot's (a) camera, (b) laser scanner.
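The idea of deriving a grid-map from laser range readings can be sketched as follows. This is a minimal illustration and not the mapping method used on ARTOS: it only marks the cell at the end point of each beam as occupied and ignores sensor noise and free-space updates. The function name scan_to_grid, its parameters and the example values are assumptions chosen for this sketch.

```python
import math


def scan_to_grid(pose, ranges, angle_min, angle_step, max_range,
                 cell_size, width, height):
    """Mark grid cells hit by laser beams as occupied (1); others stay 0.

    pose is (x, y, heading) of the scanner in metres and radians.
    Readings of max_range or more are treated as "no obstacle seen".
    """
    grid = [[0] * width for _ in range(height)]
    x0, y0, heading = pose
    for i, r in enumerate(ranges):
        if r >= max_range:
            continue
        angle = heading + angle_min + i * angle_step
        # End point of the beam in world coordinates, then as a cell index.
        col = int((x0 + r * math.cos(angle)) // cell_size)
        row = int((y0 + r * math.sin(angle)) // cell_size)
        if 0 <= row < height and 0 <= col < width:
            grid[row][col] = 1
    return grid


# Hypothetical scan: three beams at 0, 90 and 180 degrees, the middle one
# returning the maximum range (nothing detected).
grid = scan_to_grid(pose=(1.25, 1.25, 0.0), ranges=[1.0, 5.0, 1.0],
                    angle_min=0.0, angle_step=math.pi / 2, max_range=5.0,
                    cell_size=0.5, width=6, height=6)
for row in grid:
    print(row)
```

A real implementation would additionally accumulate evidence over many scans as the robot moves, so that single spurious readings do not create phantom obstacles.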

3.4 Simulation of Human

In order to simulate an animated character that is close to a real human being, different body movements have to be defined. This requires a detailed description of the human body that permits movements of the individual body parts. To incorporate such a level of articulation, the well-established human modeling standard H-Anim has been used. This standard defines a specification for interchangeable human figures to be used in simulation environments. An avatar⁷ conforming to the H-Anim modeling standard has been used to visualize different movements of the human.

Human body movements have been divided into two categories, namely simple movements and complex movements. Simple movements are those which are generated using a 3D modeling tool like Blender. These movements are independent of each other and have a definite execution time. They include walking, falling on the ground, standing up from the fall, sitting on a chair and standing up from a chair (see fig. 7). Complex movements, on the other hand, are a combination of simple movements; for example, walking from one room to another requires a combination of several simple walk motions. For complex movements it is necessary to ensure that the body of the character is in a position from which it can perform the next simple movement. Various other movements, both simple and complex, can easily be defined and incorporated in the same manner.
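The composition of complex movements from simple ones, including the check that the character is in a suitable posture before each step, could be organized along the following lines. The movement names, posture labels and durations are illustrative assumptions and are not taken from the actual animation data.

```python
# Each simple movement: (posture required before, posture afterwards,
# duration in seconds). All values are hypothetical placeholders.
SIMPLE_MOVEMENTS = {
    "walk":           ("standing", "standing", 2.0),
    "fall":           ("standing", "lying",    1.5),
    "stand_up_floor": ("lying",    "standing", 3.0),
    "sit_down":       ("standing", "sitting",  2.0),
    "stand_up_chair": ("sitting",  "standing", 2.0),
}


def run_complex_movement(start_posture, sequence):
    """Chain simple movements and return (final posture, total duration).

    Raises ValueError if a movement is requested from a posture in which
    it cannot start, e.g. walking while lying on the ground.
    """
    posture, total = start_posture, 0.0
    for name in sequence:
        required, result, duration = SIMPLE_MOVEMENTS[name]
        if posture != required:
            raise ValueError(
                f"cannot start '{name}' from posture '{posture}'")
        posture = result
        total += duration
    return posture, total


# A complex movement: get up from a chair, walk two steps, sit down again.
final_posture, seconds = run_complex_movement(
    "sitting", ["stand_up_chair", "walk", "walk", "sit_down"])
print(final_posture, seconds)
```

Storing the required and resulting posture with every simple movement keeps the consistency check local, so new movements can be added without touching the sequencing logic.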

For autonomous movements in more humanly

way, the simulated character walks in the environment

from one place to the other. One approach can be to

7

Avatars based on H-Anim are available at http://

www.h-anim.org/Models/H-Anim1.1/

A SIMULATED ENVIRONMENT FOR ELDERLY CARE ROBOT

565

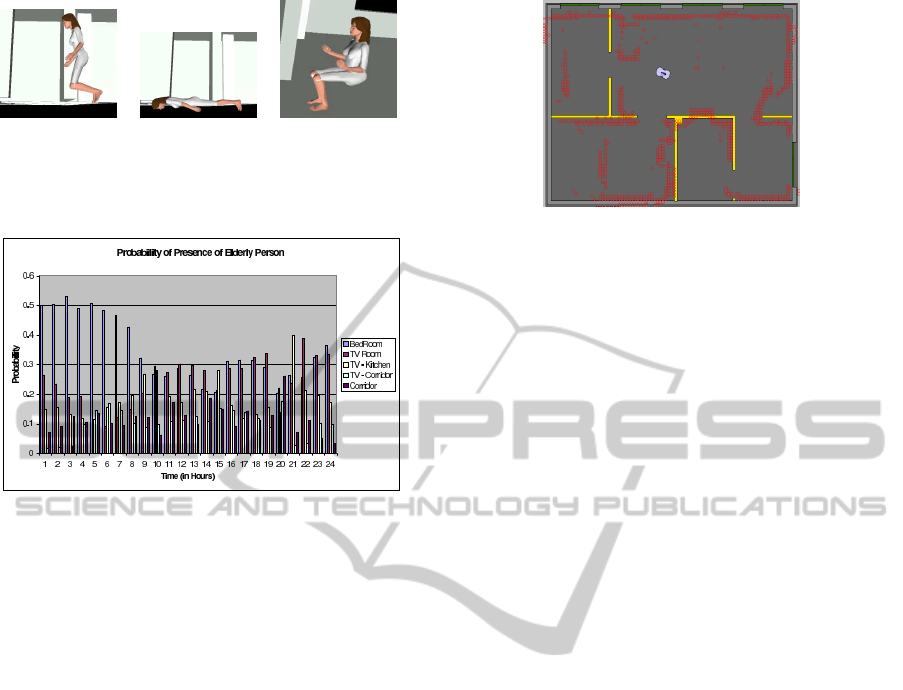

(a) (b) (c)

Figure 7: Dynamic postures of human character (a) In-

termediate posture for falling human, (b) Human fall and

(c) Sitting posture.

Figure 8: Probability of presence of human being in differ-

ent rooms at different times.

randomly select a room and move the simulated hu-

man in that room. In order to make this movement

more realistic, probabilities of presence of a human

being in different rooms have been generated. These

probabilities represent the presence of a human be-

ing at different places in the apartment based on time,

see fig. 8. Using these probabilities make it possible

to move the simulated character based on some pat-

tern that represents the real human being and thus the

movement to different rooms is not completely ran-

dom, although destinations in a particular room are

still random.
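Selecting the next room according to time-dependent presence probabilities can be sketched as a weighted random choice. The room names, the division of the day into three slots and all probability values below are invented for illustration; the values plotted in fig. 8 are not reproduced here.

```python
import random

# Hypothetical presence probabilities per time slot (each row sums to 1).
ROOM_PROBABILITIES = {
    "morning": {"kitchen": 0.5, "living_room": 0.3, "bedroom": 0.2},
    "day":     {"kitchen": 0.2, "living_room": 0.7, "bedroom": 0.1},
    "night":   {"kitchen": 0.05, "living_room": 0.05, "bedroom": 0.9},
}


def time_slot(hour):
    """Map an hour of the day (0-23) to one of the assumed time slots."""
    if 6 <= hour < 12:
        return "morning"
    if 12 <= hour < 22:
        return "day"
    return "night"


def choose_room(hour, rng):
    """Draw the next room according to the probabilities for this hour."""
    probabilities = ROOM_PROBABILITIES[time_slot(hour)]
    rooms = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(rooms, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so that test runs are repeatable
draws = [choose_room(23, rng) for _ in range(1000)]
print({room: draws.count(room) for room in ROOM_PROBABILITIES["night"]})
```

Seeding the random generator is useful in this setting because it allows the same sequence of room changes to be replayed across experiments, which matches the goal of repeatable test conditions.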

Moreover, the simulated human can also assume different postures while moving from one place to another. These postures may include sitting, standing, falling, getting up etc. A purely random selection of such movements may result in a chaotic movement pattern where, after falling on the ground, the character starts walking without getting up. Therefore, different probabilities are assigned to different movements. In this way, the selection between different movements ensures that no unrealistic movement occurs and that co-occurrences of movements are regulated.
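One possible way to regulate which movement may follow which is a table of transition probabilities in which impossible successors simply do not appear. The text does not specify how the probabilities are organized, so the table structure, the movement names and the weights below are assumptions made for this sketch.

```python
import random

# TRANSITIONS[current][next] = probability of choosing `next` after
# `current`. A pair that is absent can never occur, e.g. "walk" directly
# after "fall". All names and values are hypothetical.
TRANSITIONS = {
    "walk":           {"walk": 0.7, "sit_down": 0.2, "fall": 0.1},
    "sit_down":       {"stand_up_chair": 1.0},
    "stand_up_chair": {"walk": 1.0},
    "fall":           {"stand_up_floor": 1.0},
    "stand_up_floor": {"walk": 0.8, "sit_down": 0.2},
}


def next_movement(current, rng):
    """Pick the next movement among the valid successors of `current`."""
    options = TRANSITIONS[current]
    return rng.choices(list(options), weights=list(options.values()), k=1)[0]


def generate_sequence(start, length, rng):
    """Generate a movement sequence of the given length."""
    sequence = [start]
    while len(sequence) < length:
        sequence.append(next_movement(sequence[-1], rng))
    return sequence


sequence = generate_sequence("walk", 200, random.Random(1))
print(sequence[:10])
```

Because every step is drawn only from the successors listed for the current movement, a fall is always followed by getting up before the character can walk again.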

4 EXPERIMENTS AND RESULTS

The idea is to test the visualized and simulated environment for its effectiveness and level of detail with respect to the real situations that are required for observing different actions performed by the robot. As an application scenario, the search for the human being by the robot in the environment has been developed, see (Mehdi and Berns, 2010) for details. The task of the robot is to find the human being as early as possible with as little navigation as necessary. To accomplish this task, the robot has to drive autonomously in the simulated environment and detect the human face using the camera. For autonomous navigation, it is necessary that the grid-map, containing information about the obstacles in the scene, is built using the simulated laser scanner and that the path is planned avoiding these obstacles. Figure 9 shows the grid-map generated for the visualized environment, where red blocks show the detected obstacles.

Figure 9: Grid-map of the environment generated using simulated laser scanner.
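Planning a path on such a grid-map while avoiding occupied cells can be illustrated with a breadth-first search. The planner actually used for ARTOS is not described here, so breadth-first search serves only as a simple stand-in; the function name plan_path and the small example grid are assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path on a grid using breadth-first search.

    grid[row][col] == 1 marks an obstacle. start and goal are (row, col)
    tuples. Returns the list of cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parent links to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in parents):
                parents[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical map: a wall with a single gap on the right-hand side.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = plan_path(GRID, (0, 0), (2, 0))
print(path)
```

In this example the only route from the top-left to the bottom-left cell leads through the gap in the wall, so the returned path detours around the obstacle cells.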

In order to measure the performance of the robot in searching for the human, certain points are marked as reference points in the environment. To make the scenario more realistic, it is not always possible to view the human character from these reference points, even if the simulated human is present in the same area. This is consistent with a real-life situation, where it is sometimes not feasible to identify the human being due to lighting conditions or the orientation of the human or the robot. In this case the desired behavior of the robot is to move to another place and try to find the human there.

The experimental results show that the robot au-

tonomously navigates to different locations to find the

human character in the simulation. In some cases, due

to orientation and positioning of the human, the robot

was not able to find the human in the environment but

in such cases it navigated to the other rooms as was

desired.

5 CONCLUSIONS

This paper has presented a close-to-reality simulation of a typical household scenario with a simulated human character and a service robot. The simulation and visualization are based on the SimVis3D framework. Due to the flexibility of this framework, static furniture objects as well as a dynamic human with typical motion patterns could easily be realized. Moreover, different sensor systems and actuators for the robot can easily be employed. As a practical scenario to underline the need for a simulation environment, a series of tests has been performed in which the robot had to search for the human being in different situations.

In order to make the simulated human more realistic, future work will include collision detection and the effects of collisions on the human and the environment. In addition, further standard motion patterns of the human character will be developed to increase the level of realism during testing of the methodologies being developed for the robot. This will be assessed by subsequent real-world experiments under similar conditions. Future developments concerning the robotic platform concentrate on identifying different postures of the human and on detecting and handling unexpected changes in the human behavior. With respect to the SimVis3D framework, the integration of the physics engine for static objects is the next task to accomplish.

ACKNOWLEDGEMENTS

We are thankful to HEC Pakistan and DAAD Germany for funding Syed Atif Mehdi. We would also like to thank IESE, Fraunhofer for support in conducting experiments in the Assisted Living Lab.

REFERENCES

Braun, T., Wettach, J., and Berns, K. (2007). A customizable, multi-host simulation and visualization framework for robot applications. In 13th International Conference on Advanced Robotics (ICAR07), pages 1105–1110, Jeju, Korea.

Greggio, N., Silvestri, G., Menegatti, E., and Pagello, E. (2007). A realistic simulation of a humanoid robot in USARSim. In Proceedings of the 4th International Symposium on Mechatronics and its Applications (ISMA07), Sharjah, U.A.E.

Hodgins, J. K. (1994). Simulation of human running. In IEEE International Conference on Robotics and Automation, volume 2, pages 1320–1325, San Diego, CA. IEEE Computer Society Press.

Koenig, N. and Howard, A. (2004). Design and use paradigms for Gazebo, an open-source multi-robot simulator. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 2149–2154, Sendai, Japan.

Koestler, A. and Braeunl, T. (2004). Mobile robot simulation with realistic error models. In IEEE International Conference on Robotics and Automation (ICRA).

Laue, T., Spiess, K., and Röfer, T. (2005). A general physical robot simulator and its application in RoboCup. In Proc. of RoboCup Symposium, Bremen, Germany.

Laue, T. and Stahl, C. (2010). Modeling and simulating ambient assisted living environments - a case study. In Ambient Intelligence and Future Trends - International Symposium on Ambient Intelligence (ISAmI 2010), volume 72 of Advances in Soft Computing, pages 217–220. Springer Berlin / Heidelberg.

Mehdi, S. A., Armbrust, C., Koch, J., and Berns, K. (2009). Methodology for robot mapping and navigation in assisted living environments. In PETRA '09: Proceedings of the 2nd International Conference on PErvasive Technologies Related to Assistive Environments, ISBN 978-1-60558-409-6, Corfu, Greece. ACM, New York, NY, USA.

Mehdi, S. A. and Berns, K. (2010). Behaviour based searching of human using MDP. In The European Conference on Cognitive Ergonomics (ECCE 2010), ISBN 978-94-90818-04-3, pages 349–350, Delft, The Netherlands. Mediamatica, Delft University of Technology, The Netherlands.

Schmitz, N., Hirth, J., and Berns, K. (2010). A simulation framework for human-robot interaction. In Proceedings of the International Conferences on Advances in Computer-Human Interactions (ACHI), pages 79–84, St. Maarten, Netherlands Antilles.

Thalmann, D. (2004). Control and autonomy for intelligent virtual agent behaviour. In Lecture Notes in Computer Science, pages 515–524. Springer Berlin / Heidelberg.

Wang, J., Lewis, M., and Gennari, J. (2003). A game engine based simulation of the NIST urban search and rescue arenas. In Proceedings of the 2003 Winter Simulation Conference.

Wettach, J., Schmidt, D., and Berns, K. (2010). Simulating vehicle kinematics with SimVis3D and Newton. In 2nd International Conference on Simulation, Modeling and Programming for Autonomous Robots, Darmstadt, Germany.
