ANOMALY-BASED SPAM FILTERING

Igor Santos, Carlos Laorden, Xabier Ugarte-Pedrero, Borja Sanz and Pablo G. Bringas

S3Lab, DeustoTech - Computing, Deusto Institute of Technology, University of Deusto

Avenida de las Universidades 24, 48007, Bilbao, Spain

Keywords:

Computer security, Spam filtering, Anomaly detection, Text classification.

Abstract:

Spam has become an important problem for computer security because it is a channel for the spreading of

threats such as computer viruses, worms and phishing. Currently, more than 85% of received e-mails are

spam. Historical approaches to combat these messages, including simple techniques such as sender blacklist-

ing or the use of e-mail signatures, are no longer completely reliable. Many solutions utilise machine-learning

approaches trained using statistical representations of the terms that usually appear in the e-mails. However,

these methods require a time-consuming training step with labelled data. Dealing with the situation where

the availability of labelled training instances is limited slows down the progress of filtering systems and offers

advantages to spammers. In this paper, we present the first spam filtering method based on anomaly detection that reduces the necessity of labelling spam messages and only employs the representation of legitimate e-mails. This approach represents legitimate e-mails as word frequency vectors. An e-mail is then classified as spam or legitimate by measuring its deviation from the representation of the legitimate e-mails. We show that this method achieves high accuracy rates detecting spam while maintaining a low false positive rate and reducing the effort required to label spam.

1 INTRODUCTION

Electronic mail (e-mail) is a useful communication

channel. However, as usually happens with all use-

ful media, it is prone to misuse. In the past decade,

spam, or unsolicited bulk e-mail, has become a sig-

nificant problem for e-mail users: a huge amount of

spam arrives in people’s mailboxes every day. When

this paper was written, 87.6% of all e-mail messages

were spam, according to the Spam-o-meter website.¹

Spam is not only very annoying to everyday e-mail users, but also constitutes an important computer security problem that costs billions of dollars in productivity losses (Bratko et al., 2006). Moreover, spam can

be used as a medium for phishing (i.e., attacks that

seek to acquire sensitive information from end-users)

(Jagatic et al., 2007) or the spread of malicious soft-

ware (e.g., computer viruses, Trojan horses, spyware

and Internet worms) (Bratko et al., 2006).

Because of the magnitude of the spam problem,

several spam filtering systems have been proposed by

both academia and industry. The simplest

methods for junk message filtering are often based on

blacklisting or signatures (Carpinter and Hunt, 2006).

¹ http://www.junk-o-meter.com/stats/index.php

Blacklisting is a very simple technique that is

broadly used in most filtering products. More

specifically, these systems filter e-mails from certain

senders, whereas whitelisting (Heron, 2009) deliv-

ers e-mail from specific senders in order to reduce

the number of misclassified legitimate e-mails (also

known as ‘ham’ by the spam community). Another

popular approach for these so-called banishing meth-

ods is based on DNS blacklisting, in which the host

address is checked against a list of networks or servers

known to distribute spam (Jung and Sit, 2004; Ra-

machandran et al., 2006).

In contrast, signature-based systems create a

unique hash value (i.e., a message digest) for each

known spam message (Kołcz et al., 2004). The main

advantage of methods of this type is that they rarely produce false positives and are usually very fast to compute. Examples of signature-based spam filtering systems are Cloudmark², a commercial implementation of a signature-based filter that integrates with the e-mail server, and Razor³, another filtering system that uses a distributed and collaborative approach in order to deliver signatures (Carpinter and Hunt, 2006).

² http://www.cloudmark.com

³ http://razor.sourceforge.net

Santos I., Laorden C., Ugarte-Pedrero X., Sanz B. and G. Bringas P.

ANOMALY-BASED SPAM FILTERING.

DOI: 10.5220/0003444700050014

In Proceedings of the International Conference on Security and Cryptography (SECRYPT-2011), pages 5-14

ISBN: 978-989-8425-71-3

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

However, these simple methods have several

shortcomings. First, blacklisting methods have a very

high rate of false positives, making them unreliable as

a standalone solution (Mishne et al., 2005). Second,

signature-based systems are unable to detect spam un-

til the junk message has been identified, properly reg-

istered and documented (Carpinter and Hunt, 2006).

In order to find a solution to this problem, the

research community has undertaken a huge amount

of work. Since machine-learning approaches have

succeeded in text categorisation problems (Sebas-

tiani, 2002) and spam filtering can be stated as a text

categorisation problem, these techniques have been

broadly adopted in spam filtering systems.

Consequently, substantial work has been dedicated to the Naïve Bayes classifier (Lewis, 1998), with a large number of anti-spam filtering studies confirming its effectiveness (Androutsopoulos et al.,

2000c; Schneider, 2003; Androutsopoulos et al.,

2000a; Androutsopoulos et al., 2000b; Seewald,

2007).

Another broadly embraced machine-learning technique is the Support Vector Machine (SVM) (Vapnik, 2000). The advantage of SVM is that its accuracy does not degrade even when many features are present (Drucker et al., 1999). Therefore, such approaches have been applied to junk mail filtering (Blanzieri

and Bryl, 2007; Sculley and Wachman, 2007). Like-

wise, Decision Trees that classify by means of auto-

matically learned rule-sets (i.e., tests) (Quinlan, 1986)

have also been employed for spam filtering (Carreras

and Márquez, 2001).

All of these machine-learning-based spam filtering approaches have been termed statistical approaches because they rely on a statistical representation of terms within the messages (Zhang

et al., 2004). Machine-learning approaches model e-

mail messages using the Vector Space Model (VSM)

(Salton et al., 1975), an algebraic approach for In-

formation Filtering (IF), Information Retrieval (IR),

indexing and ranking. This model represents nat-

ural language documents in a mathematical manner

through vectors in a multidimensional space formed

by the words composing the message.

Machine-learning classifiers require a large number of labelled e-mails for each of the classes (i.e., spam and legitimate e-mails). However, it is quite difficult to obtain this amount of labelled data for a real-world problem such as spam filtering. To generate these data, a time-consuming analysis task is mandatory, and in the process, some spam messages can evade filtering.

In light of this background, we propose here the first method that applies anomaly detection to spam filtering. This approach is able to determine whether an e-mail is spam or not by comparing its word frequency features with a dataset composed only of legitimate e-mails. If the e-mail under inspection presents a considerable deviation from what is considered usual (legitimate e-mails), it can be considered spam. This method does not need updated data about spam messages and thus reduces the effort of labelling messages; it can work, for instance, with only a user’s valid inbox folder.

Summarising, our main contributions in this paper are:

• We present an anomaly-based approach for spam

filtering.

• We propose different deviation measures to deter-

mine whether an e-mail is spam or not.

• We show that labelling efforts can be reduced in

the industry, while still maintaining a high rate of

accuracy.

The remainder of this paper is organised as fol-

lows. Section 2 provides the background regarding

the representation of e-mails based on the VSM. Sec-

tion 3 details our anomaly-based method. Section 4

describes the experiments and presents results. Section 5 discusses the implications of the obtained results and shows their limitations. Finally, Section 6 concludes the paper and outlines avenues for future work.

2 VECTOR SPACE MODEL FOR SPAM FILTERING

Spam filtering software attempts to accurately classify e-mail messages into two main categories: spam or legitimate messages. To this end, we use the information found within the body and subject of an e-mail message and discard every other piece of information (e.g., the sender or time-stamp of the e-mail). To represent messages, we start by removing stop-words (Wilbur and Sirotkin, 1992), which are words devoid of content (e.g., ‘a’, ‘the’, ‘is’). These words do not provide any semantic information and add noise to the model (Salton and McGill, 1983).
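The preprocessing step described above can be sketched as follows. This is a minimal illustration only: the tiny `STOP_WORDS` set, the regular-expression tokeniser and the function names are assumptions made for the example, not the external stop-word list or tooling used in the experiments.

```python
import re

# Hypothetical, deliberately tiny stop-word list used only for illustration.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in"}


def tokenize(text):
    """Lower-case the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Keep only the tokens that are not in the stop-word list."""
    return [t for t in tokens if t not in stop_words]


# Only the subject and the body are used; sender and time-stamp are discarded.
subject = "Meeting agenda"
body = "The agenda of the meeting is attached."
tokens = remove_stop_words(tokenize(subject + " " + body))
print(tokens)
```

The remaining tokens are the terms from which the vector representation of the e-mail is built.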

Afterwards, we represent the e-mails using an Information Retrieval (IR) model. Formally, let an IR model be defined as a 4-tuple $[\mathcal{E}, \mathcal{Q}, \mathcal{F}, R(q_i, e_j)]$ (Baeza-Yates and Ribeiro-Neto, 1999) where:

• $\mathcal{E}$ is a set of representations of e-mails;

• $\mathcal{Q}$ is a set of representations of user queries;

• $\mathcal{F}$ is a framework for modelling e-mails, queries and their relationships;

SECRYPT 2011 - International Conference on Security and Cryptography


• $R(q_i, e_j)$ is a ranking function that associates a real number with a query $q_i$ ($q_i \in \mathcal{Q}$) and an e-mail representation $e_j$ ($e_j \in \mathcal{E}$). This function is also called a similarity function.

Let $\mathcal{E}$ be a set of text e-mails $e$, $\{e : \{t_1, t_2, \ldots, t_n\}\}$, each one comprising $n$ terms $t$. We consider $w_{i,j}$ a weight for term $t_i$ in an e-mail $e_j$, whereas if $t_i$ is not present in $e_j$, then $w_{i,j} = 0$. Therefore, an e-mail can be represented as a vector, starting from its origin, of index terms $\vec{e}_j = (w_{1,j}, w_{2,j}, \ldots, w_{n,j})$.

On the basis of this formalisation, we can apply several IR models. Commonly, spam filtering systems use the Vector Space Model (VSM). The VSM represents natural language documents in an algebraic fashion by placing the vectors in a multidimensional space. This space is formed by only positive axis intercepts. In addition, documents are represented as a term-by-document matrix, where the $(i, j)$-th element illustrates the association between the $i$-th term and the $j$-th document. This association reflects the occurrence of the $i$-th term in document $j$. Terms can represent different text units (e.g., a word or phrase) and can also be individually weighted, allowing terms to become more or less important within a document or the entire document collection as a whole.

Specifically, we use ‘term frequency - inverse document frequency’ ($tf$-$idf$) (McGill and Salton, 1983) to obtain the weight of each word, whereas the weight of the $i$-th word in the $j$-th e-mail, denoted by $weight(i, j)$, is defined by:

$$weight(i, j) = tf_{i,j} \cdot idf_i \tag{1}$$

where the term frequency $tf_{i,j}$ (McGill and Salton, 1983) is defined as:

$$tf_{i,j} = \frac{m_{i,j}}{\sum_k m_{k,j}} \tag{2}$$

where $m_{i,j}$ is the number of times the word $t_i$ appears in an e-mail $e_j$, and $\sum_k m_{k,j}$ is the total number of words in the e-mail $e_j$.

On the other hand, the inverse document frequency $idf_i$ is defined as:

$$idf_i = \frac{|\mathcal{E}|}{|\mathcal{E} : t_i \in e|} \tag{3}$$

where $|\mathcal{E}|$ is the total number of documents and $|\mathcal{E} : t_i \in e|$ is the number of documents containing the word $t_i$.
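The weighting of Eqs. (1) to (3) can be sketched in a few lines. The function name `tf_idf_vectors` and the toy corpus are assumptions made for this example; the inverse document frequency is computed exactly as written in Eq. (3), as a plain ratio, and terms absent from an e-mail receive weight 0.

```python
from collections import Counter


def tf_idf_vectors(emails):
    """Turn tokenised e-mails into weight vectors following Eqs. (1)-(3).

    `emails` is a list of token lists; the result is (vocabulary, vectors).
    """
    vocabulary = sorted({t for e in emails for t in e})
    n_emails = len(emails)
    # Denominator of Eq. (3): number of e-mails that contain each term.
    doc_freq = Counter(t for e in emails for t in set(e))
    idf = {t: n_emails / doc_freq[t] for t in vocabulary}
    vectors = []
    for e in emails:
        counts = Counter(e)  # m_{i,j}; missing terms count as 0
        total = len(e)       # sum_k m_{k,j}
        vectors.append(
            [(counts[t] / total) * idf[t] if total else 0.0 for t in vocabulary]
        )
    return vocabulary, vectors


toy_emails = [
    ["meeting", "agenda", "agenda"],
    ["meeting", "notes"],
    ["cheap", "pills", "cheap", "cheap"],
]
vocabulary, vectors = tf_idf_vectors(toy_emails)
print(vocabulary)
print(vectors[0])
```

In the first toy e-mail, ‘agenda’ appears twice in three words and occurs in one of the three e-mails, so its weight is (2/3) · 3 = 2, whereas ‘meeting’ occurs in two e-mails and only gets (1/3) · 1.5 = 0.5.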

We apply relevance weights to each feature based on Information Gain (IG) (Kent, 1983):

$$IG(j) = \sum_{v_j \in \mathbb{R}} \sum_{C_i} P(v_j, C_i) \cdot \frac{P(v_j, C_i)}{P(v_j) \cdot P(C_i)} \tag{4}$$

where $C_i$ is the $i$-th class, $v_j$ is the value of the $j$-th attribute, $P(v_j, C_i)$ is the probability that the $j$-th attribute has the value $v_j$ in the class $C_i$, $P(v_j)$ is the probability that the $j$-th attribute has the value $v_j$ in the training data, and $P(C_i)$ is the probability of a training instance belonging to the class $C_i$. IG provides a ratio for each feature that measures its importance in deciding whether a sample is spam or not.
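As an illustration of how features can be scored before keeping the most informative ones, the following sketch computes an information-gain value for a single discrete feature. It assumes the textbook mutual-information form, in which a base-2 logarithm is applied to the ratio appearing in Eq. (4); that logarithm, the function name `information_gain` and the toy data are assumptions of this sketch and are not taken from the text.

```python
import math
from collections import Counter


def information_gain(feature_values, labels):
    """Mutual information (in bits) between one discrete feature and the class."""
    n = len(labels)
    joint = Counter(zip(feature_values, labels))  # counts for P(v_j, C_i)
    value_counts = Counter(feature_values)        # counts for P(v_j)
    class_counts = Counter(labels)                # counts for P(C_i)
    ig = 0.0
    for (value, cls), count in joint.items():
        p_joint = count / n
        p_value = value_counts[value] / n
        p_class = class_counts[cls] / n
        ig += p_joint * math.log2(p_joint / (p_value * p_class))
    return ig


labels = ["spam", "spam", "ham", "ham"]
# A term present in every spam message and in no legitimate one.
perfect = information_gain([1, 1, 0, 0], labels)
# A term whose presence is unrelated to the class.
useless = information_gain([1, 0, 1, 0], labels)
print(perfect, useless)
```

Under these assumptions, a term that perfectly separates the two classes scores 1 bit and a term unrelated to the class scores 0, so sorting terms by this value and keeping the highest-ranked ones yields a reduced vocabulary.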

These weights were calculated from two datasets: the LingSpam corpus⁴, composed of 480 spam e-mails and 2,412 legitimate messages, and the SpamAssassin corpus⁵, composed of 1,896 spam e-mails and 4,150 legitimate messages. These weights are useful to obtain a better distance rating among samples.

3 ANOMALY MEASURES

Anomaly detection models what a normal message is, and every deviation from this model is considered anomalous. Through the word frequency features of the VSM described in the previous section, our method represents legitimate e-mails as points in the feature space. When an e-mail is being inspected, our method starts by computing the values of its point in the feature space. This point is then compared with the previously calculated points of the legitimate e-mails.

To this end, distance measures are required. In this study, we have used the following distance measures:

• Manhattan Distance: This distance between two points $x$ and $y$ is the sum of the lengths of the projections of the line segment between the two points onto the coordinate axes:

$$d(x, y) = \sum_{i=1}^{n} |x_i - y_i| \tag{5}$$

where $x$ is the first point; $y$ is the second point; and $x_i$ and $y_i$ are the $i$-th components of the first and second point, respectively.

• Euclidean Distance: This distance is the length of the line segment connecting two points. It is calculated as:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \tag{6}$$

where $x$ is the first point; $y$ is the second point; and $x_i$ and $y_i$ are the $i$-th components of the first and second point, respectively.

⁴ Available at: http://nlp.cs.aueb.gr/software_and_datasets/lingspam_public.tar.gz

⁵ Available at: http://spamassassin.org/publiccorpus


By means of these measures, we are able to compute the deviation of an e-mail from the legitimate e-mails. Since we have to compute this measure against every point representing a legitimate e-mail, a combination metric is required in order to obtain a final distance value which considers every measure performed. To this end, our system employs three simple metrics:

• The mean of the distance values computed against the training dataset.

• The lowest of the distance values computed against the training dataset.

• The highest of the distance values computed against the training dataset.

In this way, when our method inspects an e-mail, a final distance value is acquired, which will depend on both the distance measure and the combination metric.
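The two distance measures and the three combination metrics described in this section can be sketched as below. The function and dictionary names, the toy vectors and the use of a strict `>` comparison against the threshold are assumptions made for illustration; the text only states that a considerable deviation from the legitimate e-mails marks a message as spam.

```python
import math


def manhattan(x, y):
    """Sum of absolute component-wise differences between two points."""
    return sum(abs(a - b) for a, b in zip(x, y))


def euclidean(x, y):
    """Length of the line segment connecting two points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


# Combination metrics applied to the distances against every legitimate e-mail.
COMBINATION_RULES = {
    "mean": lambda distances: sum(distances) / len(distances),
    "minimum": min,
    "maximum": max,
}


def deviation(email, legitimate, distance=manhattan, rule="minimum"):
    """Final distance value of `email` with respect to the legitimate set."""
    distances = [distance(email, ham) for ham in legitimate]
    return COMBINATION_RULES[rule](distances)


def is_spam(email, legitimate, threshold, distance=manhattan, rule="minimum"):
    """Flag the e-mail as spam when its deviation exceeds the threshold."""
    return deviation(email, legitimate, distance, rule) > threshold


legitimate = [[0.0, 1.0], [0.0, 2.0]]  # toy weight vectors of legitimate e-mails
suspect = [3.0, 1.0]
for rule in ("mean", "minimum", "maximum"):
    print(rule, deviation(suspect, legitimate, manhattan, rule))
print(is_spam(suspect, legitimate, threshold=2.0))
```

Only legitimate vectors are needed to build the model, which is what allows the method to work without labelled spam.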

4 EMPIRICAL VALIDATION

In order to validate our proposed method, we used the SpamAssassin public corpus and the Ling Spam corpus.

Table 1: Comparison of the datasets used. The spam ratio in both datasets does not follow the statistics of the number of spam messages in the real world, which is higher than 85%. The SpamAssassin dataset, however, has more real spam and examples of obfuscated mails within it.

| Feature | SpamAssassin | Ling Spam |
| --- | --- | --- |
| No. of Spam Messages | 1,896 | 480 |
| No. of Ham Messages | 4,150 | 2,412 |
| Spam % | 31.36% | 16.60% |

The SpamAssassin corpus contains a total of 6,046 messages, of which 1,896 are spam and 4,150 are legitimate e-mails. To prepare the dataset, we performed stop-word removal based on an external stop-word list.⁶ Next, we constructed a file with the resultant vector representations of the e-mails in order to validate our method. We extracted the top 1,000 words using Information Gain (Kent, 1983).

⁶ Available at: http://paginaspersonales.deusto.es/claorden/resources/EnglishStopWords.txt

Ling Spam consists of a mixture of both spam and legitimate messages retrieved from the Linguistic list, an e-mail distribution list about linguistics. The dataset was preprocessed by removing HTML tags, separation tokens and duplicate e-mails: only the data of the body and the subject were kept. Ling Spam comprises 2,892 different e-mails, of which 2,412 are legitimate e-mails obtained by downloading digests from the list and 480 are spam e-mails retrieved from one of the authors of the corpus (for a more detailed description of the corpus please refer to (Androutsopoulos et al., 2000a; Sakkis et al., 2003)). Junk messages represent approximately 16% of the whole dataset, a rate close to the actual rate (Cranor and LaMacchia, 1998; Sahami et al., 1998; Sakkis et al., 2003). Stop-word removal (Wilbur and Sirotkin, 1992) and stemming (Lovins, 1968) were performed on the e-mails, creating 4 different datasets:

1. Bare: In this dataset, the e-mail messages were

pre-processed by the removal of HTML tags, sep-

aration tokens and duplicate e-mails.

2. Lemm: In addition to the removal pre-process

step, a stemming phase was performed. Stem-

ming reduces inflected or derived words to their

stem, base or root form.

3. Stop: For this dataset, a stop word removal task

was performed. This process removes all stop

words (e.g., common words like ‘a’ or ‘the’).

4. Lemm_stop: This dataset uses the combination of

both stemming and stop-word removal processes.

We used the bare dataset and performed stop-word removal based on the same stop-word list as for the SpamAssassin corpus.

Specifically, we used the following configuration for the empirical validation:

1. Cross Validation. For the SpamAssassin dataset, we performed a 5-fold cross-validation (Kohavi, 1995) to divide the dataset composed of legitimate e-mails (the normal behaviour) into 5 different divisions of 3,320 e-mails for representing normality and 830 for measuring deviations within legitimate e-mails. In this way, each fold is composed of 3,320 legitimate e-mails that will be used as the representation of normality and 2,726 testing e-mails, of which 830 are legitimate e-mails and 1,896 are spam.

With regards to Ling Spam dataset, we also per-

formed a 5-fold cross-validation (Kohavi, 1995)

forming 3 different divisions of 1,930 e-mails and

two divisions of 1,929 e-mails for representing

normality and other 3 divisions of 482 e-mails and

2 of 483 for measuring deviations within legiti-

mate e-mail. In this way, each fold is composed of

1,930 or 1,929 legitimate e-mails that will be used

as representation of normality and 963 or 962 test-

ing e-mails, from which 483 or 482 were legit-

imate e-mails and 480 were spam. The number

SECRYPT 2011 - International Conference on Security and Cryptography

8

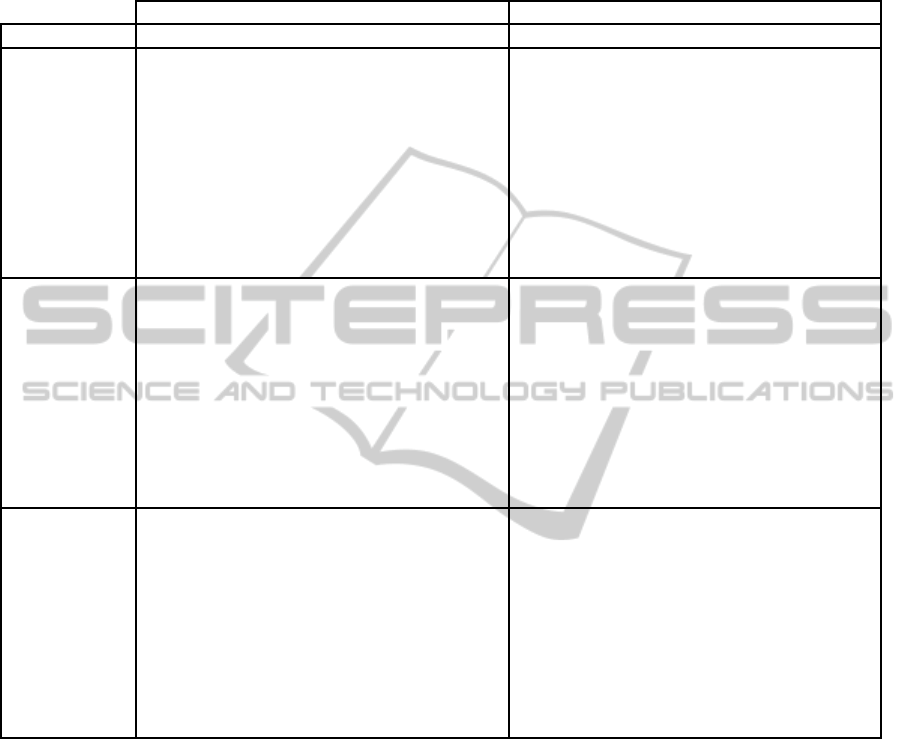

Table 2: Results for different combination rules and distance measures using Spam Assasin corpus. The abbreviation ‘Thres’.

stands for the chosen threshold. The results in bold are the best for each combination rule and distance measure. Our

method is able to detect more than the 90% of the spam messages while maintaining a high precision (a low number of

legitimate messages are misclassified). In particular, the best results were obtained with minimum distance combination rule,

the Manhattan distance and a 1.32493 of threshold: a 95.40% of precision, a 93.86% of recall and a 94.62% of f-Measure.

Manhattan Distance Euclidean Distance

Combination Thres. Prec. Rec. F-Meas. Thres. Prec. Rec. F-Meas.

Mean

1.15978 69.56% 100.0% 82.05% 1.70013 69.64% 100.00% 82.10%

1.58697 70.55% 99.86% 82.68% 1.91763 76.14% 97.77% 85.61%

2.01417 79.44% 98.91% 88.11% 2.13512 87.74% 81.04% 84.26%

2.44136 91.03% 92.85% 91.93% 2.35262 93.56% 56.53% 70.48%

2.86856 97.01% 76.18% 85.34% 2.57011 94.93% 30.63% 46.32%

3.29575 98.70% 50.44% 66.76% 2.78761 93.64% 12.57% 22.17%

3.72295 99.39% 27.62% 43.22% 3.00510 94.44% 6.81% 12.71%

4.15014 99.22% 14.84% 25.82% 3.22260 95.19% 3.13% 6.07%

4.57734 99.32% 7.71% 14.31% 3.44009 97.10% 1.41% 2.79%

5.00453 100.00% 4.55% 8.70% 3.65759 100.00% 0.93% 1.84%

Maximum

3.39114 69.55% 100.00% 82.04% 3.41015 69.67% 100.00% 82.12%

3.81833 69.61% 99.89% 82.05% 3.55333 72.99% 97.66% 83.54%

4.24553 69.90% 98.50% 81.77% 3.69652 83.86% 82.23% 83.04%

4.67272 71.69% 91.74% 80.48% 3.83970 93.57% 55.42% 69.61%

5.09992 83.51% 67.62% 74.73% 3.98288 95.87% 29.86% 45.54%

5.52711 94.94% 35.43% 51.61% 4.26925 95.33% 6.46% 12.09%

5.95431 99.56% 14.48% 25.29% 4.12607 94.76% 12.01% 21.33%

6.38150 100.00% 5.84% 11.04% 4.41243 95.19% 2.92% 5.67%

6.80870 100.00% 0.96% 1.90% 4.55562 97.18% 1.46% 2.87%

6.25298 100.00% 7.49% 13.94% 4.69880 100.00% 1.01% 2.01%

Minimum

0.04335 69.61% 100.00% 0.44679 0.44679 69.76% 100.00% 82.18%

0.47054 74.51% 99.85% 85.34% 0.76440 70.42% 99.92% 82.62%

0.89774 87.75% 98.89% 92.99% 1.08201 74.92% 99.78% 85.58%

1.32493 95.40% 93.86% 94.62% 1.39962 92.10% 94.00% 93.04%

1.75213 97.91% 79.88% 87.98% 1.71723 98.74% 68.61% 80.96%

2.17932 98.92% 54.14% 69.98% 2.03484 99.63% 28.54% 44.38%

2.60652 99.49% 26.88% 42.32% 2.35245 99.65% 6.02% 11.36%

3.03371 99.91% 11.43% 20.52% 2.67006 98.88% 1.86% 3.64%

3.46091 100.00% 3.18% 6.15% 2.98767 98.78% 0.85% 1.69%

3.04013 100.00% 11.31% 20.32% 3.30528 100.00% 0.36% 0.71%

of legitimate e-mails varied in the two last folds

because the number of legitimate e-mails was not

divisible by 5.

2. Calculating Distances and Combination Rules. We extracted the aforementioned characteristics and employed the 2 different measures and the 3 different combination rules described in Section 3 to obtain a final measure of deviation for each test e-mail. More precisely, we applied the following distances:

• Manhattan Distance.

• Euclidean Distance.

For the combination rules, we tested the following:

• The Mean Value.

• The Lowest Distance.

• The Highest Value.

3. Defining Thresholds. For each measure and combination rule, we established 10 different thresholds to determine whether an e-mail is spam or not. These thresholds were selected by first establishing the lowest one. This number was the highest possible value with which no spam messages were misclassified. The highest one was selected as the lowest possible value with which no legitimate messages were misclassified.

In this way, the method is configurable in both

reducing false positives or false negatives. It is

important to define whether it is better to clas-

sify spam as legitimate or to classify legitimate

ANOMALY-BASED SPAM FILTERING

9

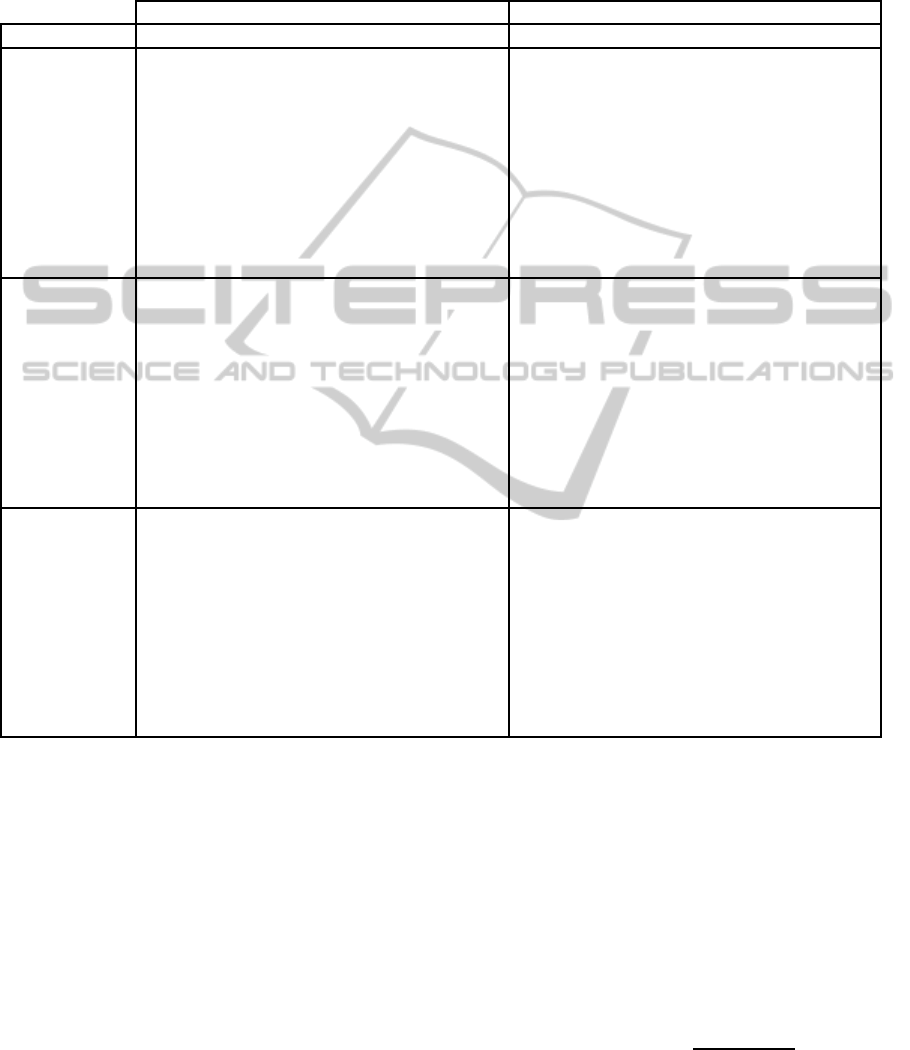

Table 3: Results for different combination rules and distance measures using LingSpam corpus. The abbreviation ‘Thres’.

means the chosen threshold. The results remarked in bold are the best for each combination rule and distance measure. Using

this dataset, our method also can detect more than the 90% of the spam messages whereas maintaining a high precision (a

low number of legitimate messages are misclassified). The best results were obtained with the Euclidean Distance, the mean

combination rule and 2.59319 as the threshold. In particular, a 92.82% of precision, a 91.58% of recall and a 92.20% of

f-measure. These results are a little lower than when using SpamAssasin.

Manhattan Distance Euclidean Distance

Combination Thres. Prec. Rec. F-Meas. Thres. Prec. Rec. F-Meas.

Mean

1.86313 49.87% 100.00% 66.56% 1.87061 49.91% 100.00% 66.58%

2.23637 50.04% 99.79% 66.65% 2.11147 0.50357 99.79% 66.94%

2.58960 51.02% 97.21% 66.92% 2.35233 68.39% 98.33% 80.67%

2.95284 56.07% 94.29% 70.32% 2.59319 92.82% 91.58% 92.20%

3.31608 65.86% 84.08% 73.87% 2.83405 97.31% 52.71% 68.38%

3.67931 79.18% 73.54% 76.26% 3.07490 98.54% 19.75% 32.91%

4.04255 92,40% 62.29% 74.42% 3.31576 98.33% 7.38% 13.72%

4.40579 97.89% 52.13% 68.03% 3.55662 98.84% 3.54% 6.84%

4.76902 99.68% 39.38% 56.45% 3.79748 96.15% 1.04% 2.06%

5.13226 100.00% 29.88% 46.01% 4.03834 100.00% 0.62% 1.24%

Maximum

3.69053 49.89% 100.00% 66.56% 3.22709 49.93% 100.00% 66.60%

4.05377 50.08% 99.79% 66.69% 3.41297 50.80% 99.96% 67.37%

4.41700 51.01% 98.46% 67.21% 3.59886 53.35% 99.33% 69.41%

4.78024 54.65% 95.00% 69.39% 3.78474 54.89% 96.13% 69.88%

5.14348 63.06% 85.13% 72.45% 3.97063 59.97% 86.63% 70.87%

5.50671 76.23% 74.29% 75.25% 4.15651 85.95% 79.29% 82.49%

5.86995 89.49% 61.38% 72.81% 4.34240 97.23% 58.50% 73.05%

6.23319 99.03% 46.58% 63.36% 4.52828 97.76% 18.17% 30.64%

6.59642 100.00% 33.08% 49.72% 4.71417 98.08% 6.38% 11.97%

6.95966 100.00% 20.71% 34.31% 4.90005 100.00% 2.46% 4.80%

Minimum

0.09919 50,29% 100.00% 66.93% 0.69584 50.68% 100.00% 67.26%

0.46243 51.02% 99.79% 67.52% 1.00615 50.98% 99.79% 67.48%

0.82566 52.04% 96.88% 67.71% 1.31645 51.95% 99.79% 68.33%

1.18890 55.16% 94.17% 69.57% 1.62676 59.70% 98.33% 74.30%

1.55214 58.31% 84.17% 68.89% 1.93707 87.51% 93.13% 90.23%

1.91537 65.82% 74.38% 69.84% 2.24737 97.54% 62.79% 76.40%

2.27861 74.10% 63.54% 68.42% 2.55768 99.00% 20.67% 34.20%

2.64185 85.06% 54.79% 66.65% 2.86799 99.42% 7.13% 13.30%

3.00508 94.66% 43.54% 59.65% 3.17829 96.97% 1.33% 2.63%

3.84820 100.00% 23.13% 37.56% 3.48860 100.00% 0.29% 0.58%

as spam. In particular, one may think that it is

more important to detect more spam messages

than to minimise false positives. However, for

commercial reasons, one may think just the op-

posite: a user can be bothered if their legitimate

messages are flagged as spam. To improve these

errors, we can apply two techniques: (i) whitelist-

ing and blacklisting or (ii) cost-sensitive learning.

White and black lists store a signature of an e-mail

in order to be flagged either as spam (blacklist-

ing) or legitimate messages (whitelisting). On the

other hand, cost-sensitive learning is a machine-

learning technique where one can specify the cost

of each error and the classifiers are trained taking

into account that consideration (Elkan, 2001). We

can adapt cost-sensitive learning for anomaly de-

tection by using cost matrices.

4. Testing the Method. To evaluate the results, we used the measures most frequently used for spam filtering: precision (Prec.), recall (Rec.) and F-measure (F-meas.). We measured the precision of the spam identification as the number of correctly classified spam e-mails divided by the sum of the number of correctly classified spam e-mails and the number of legitimate e-mails misclassified as spam:

$$\mathrm{Precision} = \frac{N_{s \to s}}{N_{s \to s} + N_{l \to s}} \tag{7}$$

where $N_{s \to s}$ is the number of correctly classified spam e-mails and $N_{l \to s}$ is the number of legitimate e-mails misclassified as spam.

Additionally, we measured the recall of the spam e-mail messages, which is the number of correctly classified spam e-mails divided by the sum of the number of correctly classified spam e-mails and the number of spam e-mails misclassified as legitimate:

$$\mathrm{Recall} = \frac{N_{s \to s}}{N_{s \to s} + N_{s \to l}} \tag{8}$$

where $N_{s \to l}$ is the number of spam e-mails misclassified as legitimate.

We also computed the F-measure, which is the harmonic mean of both the precision and recall, simplified as follows:

$$\mathrm{F\text{-}measure} = \frac{2N_{s \to s}}{2N_{s \to s} + N_{s \to l} + N_{l \to s}} \tag{9}$$
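The validation configuration above can be sketched as follows: fold sizes for the 5-fold split of the legitimate e-mails, confusion counts for one threshold, and the three measures of Eqs. (7) to (9). All function names and the toy deviation values are assumptions made for this example, as is the convention that a deviation strictly above the threshold means spam.

```python
def fold_sizes(n_items, k=5):
    """Return (training size, test size) for each of k near-equal folds."""
    base, extra = divmod(n_items, k)
    # The last `extra` folds receive one additional test e-mail.
    test_sizes = [base + (1 if i >= k - extra else 0) for i in range(k)]
    return [(n_items - t, t) for t in test_sizes]


def confusion_counts(spam_deviations, ham_deviations, threshold):
    """Count N_{s->s}, N_{s->l} and N_{l->s} for one threshold."""
    n_ss = sum(d > threshold for d in spam_deviations)  # spam flagged as spam
    n_sl = len(spam_deviations) - n_ss                  # spam passed as legitimate
    n_ls = sum(d > threshold for d in ham_deviations)   # legitimate flagged as spam
    return n_ss, n_sl, n_ls


def precision(n_ss, n_ls):
    return n_ss / (n_ss + n_ls) if n_ss + n_ls else 0.0


def recall(n_ss, n_sl):
    return n_ss / (n_ss + n_sl) if n_ss + n_sl else 0.0


def f_measure(n_ss, n_sl, n_ls):
    denominator = 2 * n_ss + n_sl + n_ls
    return 2 * n_ss / denominator if denominator else 0.0


print(fold_sizes(4150))  # legitimate e-mails in SpamAssassin
print(fold_sizes(2412))  # legitimate e-mails in Ling Spam

# Toy deviation values for three spam and three legitimate test e-mails.
n_ss, n_sl, n_ls = confusion_counts([3.0, 2.5, 0.5], [0.2, 0.4, 2.8], threshold=1.0)
print(precision(n_ss, n_ls), recall(n_ss, n_sl), f_measure(n_ss, n_sl, n_ls))
```

Repeating the last step for each candidate threshold gives one row of a results table per threshold, which makes the trade-off between false positives and false negatives explicit.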

Table 2 shows the results obtained for the SpamAssassin corpus for the different distances, combination rules and thresholds. The best results were obtained with the Manhattan Distance, the minimum combination rule and a threshold of 1.32493: 95.40% precision, 93.86% recall and 94.62% F-measure. Table 3 shows the results obtained for the LingSpam corpus using the different distances, combination rules and thresholds. Using this dataset, the best configuration was the one with the Euclidean Distance, the mean combination rule and 2.59319 as the threshold: the method achieved 92.82% precision, 91.58% recall and 92.20% F-measure.
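As a quick consistency check, the reported F-measures follow from the reported precision $P$ and recall $R$ through the harmonic mean, here evaluated for the best configuration on each corpus:

```latex
\[
F = \frac{2PR}{P + R}, \qquad
F_{\text{SpamAssassin}} = \frac{2 \cdot 0.9540 \cdot 0.9386}{0.9540 + 0.9386} \approx 0.9462, \qquad
F_{\text{Ling Spam}} = \frac{2 \cdot 0.9282 \cdot 0.9158}{0.9282 + 0.9158} \approx 0.9220
\]
```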

The fact that the best results on SpamAssassin were obtained with the minimum distance, which is the most conservative configuration for distance, highlights a possible topic of discussion regarding what should be called an anomaly in e-mails. As mentioned above, more than 85% of e-mails are currently spam and, therefore, in terms of normality, receiving a legitimate e-mail is itself anomalous.

5 DISCUSSION

The final results show that this method achieves high

levels of accuracy. In addition, it can minimise the

number of legitimate e-mails that are misclassified

and is also able to detect a high number of spam

messages. Nevertheless, several points of discussion

are important regarding the suitability of the proposed

method.

The VSM assumes that every term is independent,

which is, at least from the linguistic point of view,

not completely true. Despite the fact that e-mails are

usually represented as a sequence of words, there are

relationships between words on a semantic level that

also affect e-mails (Cohen, 1974). Specifically, we

can find several linguistic phenomena in natural languages (Polyvyanyy, 2007):

• Synonyms: Two or more words are interchange-

able because of their similar (or identical) mean-

ing (e.g., ‘buy’ and ‘purchase’) (Carnap, 1955).

• Hyponyms: Specific instances of a more general word (e.g., ‘spicy’ and ‘salty’ are hyponyms of ‘flavour’) (Cruse, 1975).

• Metonymy: The substitution of one word for

another with which it is associated (e.g., ‘po-

lice’ instead of ‘law enforcement’) (Radden and

Kövecses, 1999).

• Homography: Words with the same orthography

but different meaning (e.g., ‘bear’: ‘to support and

carry’ and ‘an animal’) (Ming-Tzu and Nation,

2004).

• Word-groups: Clusters of words that have se-

mantic meaning when they are grouped (e.g.,

‘New York City’).

Thus, our representation cannot handle the ex-

isting linguistic phenomena in natural languages

(Becker and Kuropka, 2003). In fact, attacks exist

that evade spam filtering systems through the use of

synonyms (Karlberger et al., 2007), which our model

is not capable of defeating.

As a solution, the Topic-based Vector Space

Model (TVSM) (Becker and Kuropka, 2003) and the

enhanced Topic-based Vector Space Model (eTVSM)

(Kuropka, 2004) have been proposed in the last few

years. The TVSM represents documents using a

vector-representation where axes are topics rather

than terms and, therefore, terms are weighted based

upon how strongly related they are to a topic. In

contrast, the eTVSM uses an ontology to represent

the different relations between terms and, in this way,

provides a richer natural language retrieval model that

is able to accommodate synonyms, homonyms and

other linguistic phenomena (Awad et al., 2008).

There is also a problem derived from IR and Nat-

ural Language Processing (NLP) when dealing with

semantics: Word Sense Disambiguation (WSD). A

spammer may evade our method by explicitly exchanging the key words of the mail with other polysemous terms and thus avoid detection. WSD is

considered necessary in order to accomplish most

natural language processing tasks (Ide and Véronis,

1998). We propose the study of different WSD tech-

niques (a survey of different WSD techniques can

be found in (Navigli, 2009)) capable of providing a

more semantics-aware spam filtering system. Nevertheless, a semantic approach for spam filtering will have to deal with the semantics of different languages (Bates and Weischedel, 1993) and thus be language-dependent.

Besides, our method has several limitations due to the representation of e-mails. Because most spam filtering techniques are based on the frequencies with which terms appear within messages, spammers have started modifying their techniques to evade filters.

For example, Good Word Attack is a method that

modifies the term statistics by appending a set of

words that are characteristic of legitimate e-mails,

thereby bypassing spam filters. Nevertheless, we can

adopt some of the methods that have been proposed in

order to improve spam filtering, such as Multiple In-

stance Learning (MIL) (Dietterich et al., 1997). MIL

divides an instance or a vector in the traditional su-

pervised learning methods into several sub-instances

and classifies the original vector based on the sub-instances

(Maron and Lozano-Pérez, 1998). Zhou et al. (2007)

proposed the adoption of multiple instance learning

for spam filtering by dividing an

e-mail into a bag of multiple segments and classifying

it as spam if at least one instance in the corresponding

bag was spam.
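
The bag-level decision rule described above can be sketched as follows. This is a simplified illustration rather than the procedure of Zhou et al. (2007): the fixed-size splitting, the `SPAM_WORDS` lexicon and `toy_segment_classifier` are hypothetical stand-ins for a real segmentation strategy and a trained classifier.

```python
from typing import Callable, List

# Hypothetical spam lexicon used only by the toy classifier below.
SPAM_WORDS = {"viagra", "free", "winner", "prize"}


def split_into_segments(tokens: List[str], segment_size: int) -> List[List[str]]:
    """Split a token list into consecutive segments (the bag of instances)."""
    return [tokens[i:i + segment_size] for i in range(0, len(tokens), segment_size)]


def toy_segment_classifier(segment: List[str]) -> bool:
    """Toy rule: spam if more than half of the tokens are in the spam lexicon."""
    hits = sum(1 for token in segment if token.lower() in SPAM_WORDS)
    return hits * 2 > len(segment)


def classify_bag(tokens: List[str],
                 is_spam_segment: Callable[[List[str]], bool],
                 segment_size: int = 4) -> bool:
    """Label the e-mail as spam if at least one segment in its bag is spam."""
    bag = split_into_segments(tokens, segment_size)
    return any(is_spam_segment(segment) for segment in bag)


# A spam payload padded with words typical of legitimate mail (a Good Word Attack).
message = ("free prize winner viagra "
           "meeting agenda project report budget schedule review minutes").split()

whole_message_is_spam = toy_segment_classifier(message)
bag_is_spam = classify_bag(message, toy_segment_classifier)
```

In this toy setting the appended good words dilute the statistics of the whole message, so the single-vector rule misses it, whereas the segment that carries the payload is still flagged when the message is treated as a bag.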

Another attack, known as tokenisation, works

against the feature selection of the message by splitting

or modifying key message features, which renders

the term representation no longer feasible

(Wittel and Wu, 2004).
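
A small sketch shows the effect of such an attack on a word frequency vector. The whitespace tokeniser and the example messages are assumptions made for illustration; real filters use more elaborate tokenisation.

```python
from collections import Counter


def term_frequencies(message: str) -> Counter:
    """Count whitespace-separated, lower-cased tokens."""
    return Counter(message.lower().split())


original = "buy cheap viagra now"
attacked = "buy cheap v.i.a.g.r.a now"  # key term obfuscated by the sender

original_tf = term_frequencies(original)
attacked_tf = term_frequencies(attacked)

# The feature the filter relies on disappears from the attacked message.
key_term_before = original_tf["viagra"]
key_term_after = attacked_tf["viagra"]
```

The obfuscated token still reads as the same word to a human, but it occupies a different axis of the vector, so the frequency of the key term drops to zero.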

All of these attacks, which spammers have been

adopting, should be taken into account in the construction

of future spam filtering systems.

In our experiments, we used a dataset that is very

small in comparison to real-world e-mail volumes. As the

dataset size grows, the issue of scalability becomes

a concern. This problem produces excessive storage

requirements, increases time complexity and impairs

the general accuracy of the models (Cano et al., 2006).

To reduce disproportionate storage and time costs, it

is necessary to reduce the size of the original training

set (Czarnowski and Jedrzejowicz, 2006).

To solve this issue, data reduction is normally

considered an appropriate preprocessing optimisation

technique (Pyle, 1999; Tsang et al., 2003). Techniques

of this type have many potential advantages, such as

reducing measurement, storage and transmission requirements;

decreasing training and testing times; confronting the

problem of dimensionality to improve prediction per-

formance in terms of speed, accuracy and simplicity;

and facilitating data visualisation and understanding

(Torkkola, 2003; Dash and Liu, 2003). Data reduction

can be implemented in two ways. Instance selection

(IS) seeks to reduce the number of instances

(i.e., number of rows) in the training set by select-

ing the most relevant instances or by re-sampling new

ones (Liu and Motoda, 2001). Feature selection (FS)

decreases the number of attributes or features (i.e.,

columns) in the training set (Liu and Motoda, 2008).
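
The two forms of data reduction can be sketched on a small matrix whose rows are e-mails and whose columns are term frequencies. Both functions are deliberately simple stand-ins and not the methods of the cited works: random sub-sampling is assumed for instance selection, and a minimum document count (a hypothetical criterion) for feature selection.

```python
import random
from typing import List

Matrix = List[List[float]]


def select_instances(rows: Matrix, keep: int, seed: int = 0) -> Matrix:
    """Instance selection stand-in: keep a random subset of the rows."""
    rng = random.Random(seed)
    return rng.sample(rows, min(keep, len(rows)))


def select_features(rows: Matrix, min_document_count: int) -> List[int]:
    """Feature selection stand-in: indices of the columns that are non-zero
    in at least `min_document_count` rows."""
    n_columns = len(rows[0])
    return [
        j for j in range(n_columns)
        if sum(1 for row in rows if row[j] > 0) >= min_document_count
    ]


def project(rows: Matrix, columns: List[int]) -> Matrix:
    """Keep only the selected columns of every row."""
    return [[row[j] for j in columns] for row in rows]


training_set: Matrix = [
    [1, 0, 2],
    [0, 0, 1],
    [3, 0, 0],
    [1, 1, 1],
]

kept_columns = select_features(training_set, min_document_count=2)
fewer_columns = project(training_set, kept_columns)
fewer_rows = select_instances(training_set, keep=2)
```

Instance selection reduces the number of vectors each incoming e-mail must be compared with, while feature selection reduces the cost of each individual comparison, so the two can be combined.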

It is also important to consider efficiency and

processing time. Our system compares each e-mail

against a large dataset. Although Euclidean and Manhattan

distances are easy to compute, more time-consuming

distance measures such as the Mahalanobis distance

may take too long to process every e-mail

under analysis.
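
The difference in cost between these measures can be seen in a minimal sketch. The function names are our own, the inverse covariance matrix is assumed to be precomputed and passed in, and `mean_distance_to_dataset` uses the mean as one possible way of combining the per-message distances; it is an assumption for illustration, not necessarily the combination rule used in our experiments.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def euclidean(x: Vector, y: Vector) -> float:
    """Euclidean distance: one pass over the d components."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def manhattan(x: Vector, y: Vector) -> float:
    """Manhattan distance: one pass over the d components."""
    return sum(abs(a - b) for a, b in zip(x, y))


def mahalanobis(x: Vector, y: Vector, inv_cov: List[List[float]]) -> float:
    """Mahalanobis distance given a precomputed inverse covariance matrix.
    The quadratic form costs d * d multiplications per comparison."""
    diff = [a - b for a, b in zip(x, y)]
    total = 0.0
    for i, row in enumerate(inv_cov):
        total += diff[i] * sum(row[j] * diff[j] for j in range(len(diff)))
    return math.sqrt(total)


def mean_distance_to_dataset(email: Vector,
                             legitimate: List[Vector],
                             distance: Callable[[Vector, Vector], float]) -> float:
    """Compare one e-mail with every legitimate e-mail and average the result."""
    return sum(distance(email, other) for other in legitimate) / len(legitimate)


x = [1.0, 2.0, 3.0]
y = [4.0, 6.0, 3.0]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

d_euclidean = euclidean(x, y)
d_manhattan = manhattan(x, y)
d_mahalanobis = mahalanobis(x, y, identity)
deviation = mean_distance_to_dataset(x, [x, y], euclidean)
```

With N legitimate e-mails and d terms, the first two measures need on the order of N * d operations per incoming message, whereas the Mahalanobis distance needs on the order of N * d * d, in addition to estimating and inverting the covariance matrix beforehand.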

6 CONCLUDING REMARKS

Spam is a serious computer security issue that is not

only annoying for end-users, but also financially dam-

aging and dangerous to computer security because of

the possible spread of other threats like malware or

phishing. The classic machine-learning-based spam

filtering methods, despite their ability to detect spam,

have a very time-consuming step of labelling e-mails.

In this paper, we presented a spam filtering system

that is inspired by anomaly detection systems. Using

this method, we are able to reduce the number

of required labelled messages and, therefore, reduce

the labelling effort for the filtering industry. Our experiments

show that this approach provides high percentages of

spam detection whilst keeping the number of misclas-

sified legitimate messages low. Besides, this method

works only with legitimate e-mails and, therefore, it

can be trained using the inbox of a user.

Future versions of this spam filtering system will

move in five main directions:

1. We will focus on attacks against statistical spam

filtering systems such as tokenisation or good

word attacks.

2. We plan to extend the semantics of this method

with more linguistic relationships.

3. We will improve the scalability of the anomaly

method in order to reduce the number of distance

computations required.

4. We will study the feasibility of applying Word

Sense Disambiguation techniques to this spam fil-

tering method.

5. We will investigate in depth what should be considered

an anomaly in the e-mail filtering problem,

comparing whether it is better to treat spam

or legitimate e-mail as the anomalous class.

SECRYPT 2011 - International Conference on Security and Cryptography


REFERENCES

Androutsopoulos, I., Koutsias, J., Chandrinos, K.,

Paliouras, G., and Spyropoulos, C. (2000a). An eval-

uation of naive bayesian anti-spam filtering. In Pro-

ceedings of the workshop on Machine Learning in the

New Information Age, pages 9–17.

Androutsopoulos, I., Koutsias, J., Chandrinos, K., and Spy-

ropoulos, C. (2000b). An experimental comparison

of naive Bayesian and keyword-based anti-spam fil-

tering with personal e-mail messages. In Proceedings

of the 23rd annual international ACM SIGIR conference

on Research and development in information retrieval,

pages 160–167.

Androutsopoulos, I., Paliouras, G., Karkaletsis, V., Sakkis,

G., Spyropoulos, C., and Stamatopoulos, P. (2000c).

Learning to filter spam e-mail: A comparison of a

naive bayesian and a memory-based approach. In Proceedings

of the Machine Learning and Textual Information

Access Workshop of the 4th European Conference

on Principles and Practice of Knowledge Discovery

in Databases.

Awad, A., Polyvyanyy, A., and Weske, M. (2008). Semantic

querying of business process models. In IEEE Inter-

national Conference on Enterprise Distributed Object

Computing Conference (EDOC 2008), pages 85–94.

Baeza-Yates, R. A. and Ribeiro-Neto, B. (1999). Mod-

ern Information Retrieval. Addison-Wesley Longman

Publishing Co., Inc., Boston, MA, USA.

Bates, M. and Weischedel, R. (1993). Challenges in natural

language processing. Cambridge Univ Pr.

Becker, J. and Kuropka, D. (2003). Topic-based vector

space model. In Proceedings of the 6th International

Conference on Business Information Systems, pages

7–12.

Blanzieri, E. and Bryl, A. (2007). Instance-based spam

filtering using SVM nearest neighbor classifier. Pro-

ceedings of FLAIRS-20, pages 441–442.

Bratko, A., Filipič, B., Cormack, G., Lynam, T., and Zupan,

B. (2006). Spam filtering using statistical data com-

pression models. The Journal of Machine Learning

Research, 7:2673–2698.

Cano, J., Herrera, F., and Lozano, M. (2006). On the combi-

nation of evolutionary algorithms and stratified strate-

gies for training set selection in data mining. Applied

Soft Computing Journal, 6(3):323–332.

Carnap, R. (1955). Meaning and synonymy in natural lan-

guages. Philosophical Studies, 6(3):33–47.

Carpinter, J. and Hunt, R. (2006). Tightening the net: A

review of current and next generation spam filtering

tools. Computers & security, 25(8):566–578.

Carreras, X. and Márquez, L. (2001). Boosting trees for

anti-spam email filtering. In Proceedings of RANLP-

01, 4th international conference on recent advances in

natural language processing, pages 58–64. Citeseer.

Cohen, D. (1974). Explaining linguistic phenomena. Hal-

sted Press.

Cranor, L. and LaMacchia, B. (1998). Spam! Communica-

tions of the ACM, 41(8):74–83.

Cruse, D. (1975). Hyponymy and lexical hierarchies.

Archivum Linguisticum, 6:26–31.

Czarnowski, I. and Jedrzejowicz, P. (2006). Instance reduc-

tion approach to machine learning and multi-database

mining. In Proceedings of the Scientific Session orga-

nized during XXI Fall Meeting of the Polish Informa-

tion Processing Society, Informatica, ANNALES Uni-

versitatis Mariae Curie-Skłodowska, Lublin, pages

60–71.

Dash, M. and Liu, H. (2003). Consistency-based search

in feature selection. Artificial Intelligence, 151(1-

2):155–176.

Dietterich, T., Lathrop, R., and Lozano-Pérez, T. (1997).

Solving the multiple instance problem with axis-

parallel rectangles. Artificial Intelligence, 89(1-2):31–

71.

Drucker, H., Wu, D., and Vapnik, V. (1999). Support vector

machines for spam categorization. IEEE Transactions

on Neural networks, 10(5):1048–1054.

Elkan, C. (2001). The foundations of cost-sensitive learn-

ing. In Proceedings of the 2001 International Joint

Conference on Artificial Intelligence, pages 973–978.

Heron, S. (2009). Technologies for spam detection. Net-

work Security, 2009(1):11–15.

Ide, N. and Véronis, J. (1998). Introduction to the special

issue on word sense disambiguation: the state of the

art. Computational linguistics, 24(1):2–40.

Jagatic, T., Johnson, N., Jakobsson, M., and Menczer, F.

(2007). Social phishing. Communications of the ACM,

50(10):94–100.

Jung, J. and Sit, E. (2004). An empirical study of spam traf-

fic and the use of DNS black lists. In Proceedings of

the 4th ACM SIGCOMM conference on Internet mea-

surement, pages 370–375. ACM New York, NY, USA.

Karlberger, C., Bayler, G., Kruegel, C., and Kirda, E.

(2007). Exploiting redundancy in natural language

to penetrate bayesian spam filters. In Proceedings of

the 1st USENIX workshop on Offensive Technologies

(WOOT), pages 1–7. USENIX Association.

Kent, J. (1983). Information gain and a general measure of

correlation. Biometrika, 70(1):163–173.

Kohavi, R. (1995). A study of cross-validation and boot-

strap for accuracy estimation and model selection. In

International Joint Conference on Artificial Intelli-

gence, volume 14, pages 1137–1145.

Kołcz, A., Chowdhury, A., and Alspector, J. (2004). The

impact of feature selection on signature-driven spam

detection. In Proceedings of the 1st Conference on

Email and Anti-Spam (CEAS-2004).

Kuropka, D. (2004). Modelle zur Repräsentation

natürlichsprachlicher Dokumente-Information-

Filtering und-Retrieval mit relationalen Datenbanken.

Advances in Information Systems and Management

Science, 10.

Lewis, D. (1998). Naive (Bayes) at forty: The independence

assumption in information retrieval. Lecture Notes in

Computer Science, 1398:4–18.

Liu, H. and Motoda, H. (2001). Instance selection and con-

struction for data mining. Kluwer Academic Pub.

Liu, H. and Motoda, H. (2008). Computational methods of

feature selection. Chapman & Hall/CRC.

Lovins, J. (1968). Development of a Stemming Algorithm.

Mechanical Translation and Computational Linguis-

tics, 11(1):22–31.

Maron, O. and Lozano-Pérez, T. (1998). A framework for

multiple-instance learning. Advances in neural infor-

mation processing systems, pages 570–576.

McGill, M. and Salton, G. (1983). Introduction to modern

information retrieval. McGraw-Hill.

Ming-Tzu, K. and Nation, P. (2004). Word meaning in aca-

demic English: Homography in the academic word

list. Applied linguistics, 25(3):291–314.

Mishne, G., Carmel, D., and Lempel, R. (2005). Block-

ing blog spam with language model disagreement. In

Proceedings of the 1st International Workshop on

Adversarial Information Retrieval on the Web (AIRWeb),

pages 1–6.

Navigli, R. (2009). Word sense disambiguation: a survey.

ACM Computing Surveys (CSUR), 41(2):10.

Polyvyanyy, A. (2007). Evaluation of a novel information

retrieval model: eTVSM. MSc Dissertation.

Pyle, D. (1999). Data preparation for data mining. Morgan

Kaufmann.

Quinlan, J. (1986). Induction of decision trees. Machine

learning, 1(1):81–106.

Radden, G. and Kövecses, Z. (1999). Towards a theory of

metonymy. Metonymy in language and thought, pages

17–59.

Ramachandran, A., Dagon, D., and Feamster, N. (2006).

Can DNS-based blacklists keep up with bots. In Con-

ference on Email and Anti-Spam. Citeseer.

Sahami, M., Dumais, S., Heckerman, D., and Horvitz, E.

(1998). A Bayesian approach to filtering junk e-mail.

In Learning for Text Categorization: Papers from the

1998 workshop, volume 62, pages 98–05. Madison,

Wisconsin: AAAI Technical Report WS-98-05.

Sakkis, G., Androutsopoulos, I., Paliouras, G., Karkaletsis,

V., Spyropoulos, C., and Stamatopoulos, P. (2003).

A memory-based approach to anti-spam filtering for

mailing lists. Information Retrieval, 6(1):49–73.

Salton, G. and McGill, M. (1983). Introduction to modern

information retrieval. McGraw-Hill New York.

Salton, G., Wong, A., and Yang, C. (1975). A vector space

model for automatic indexing. Communications of the

ACM, 18(11):613–620.

Schneider, K. (2003). A comparison of event models for

Naive Bayes anti-spam e-mail filtering. In Proceedings

of the 10th Conference of the European Chapter

of the Association for Computational Linguistics,

pages 307–314.

Sculley, D. and Wachman, G. (2007). Relaxed online SVMs

for spam filtering. In Proceedings of the 30th annual

international ACM SIGIR conference on Research and

development in information retrieval, pages 415–422.

Sebastiani, F. (2002). Machine learning in automated

text categorization. ACM computing surveys (CSUR),

34(1):1–47.

Seewald, A. (2007). An evaluation of naive Bayes variants

in content-based learning for spam filtering. Intelli-

gent Data Analysis, 11(5):497–524.

Torkkola, K. (2003). Feature extraction by non paramet-

ric mutual information maximization. The Journal of

Machine Learning Research, 3:1415–1438.

Tsang, E., Yeung, D., and Wang, X. (2003). OFFSS: op-

timal fuzzy-valued feature subset selection. IEEE

transactions on fuzzy systems, 11(2):202–213.

Vapnik, V. (2000). The nature of statistical learning theory.

Springer.

Wilbur, W. and Sirotkin, K. (1992). The automatic identifi-

cation of stop words. Journal of information science,

18(1):45–55.

Wittel, G. and Wu, S. (2004). On attacking statistical spam

filters. In Proceedings of the 1st Conference on Email

and Anti-Spam (CEAS).

Zhang, L., Zhu, J., and Yao, T. (2004). An evaluation of sta-

tistical spam filtering techniques. ACM Transactions

on Asian Language Information Processing (TALIP),

3(4):243–269.

Zhou, Y., Jorgensen, Z., and Inge, M. (2007). Combating

Good Word Attacks on Statistical Spam Filters with

Multiple Instance Learning. In Proceedings of the

19th IEEE International Conference on Tools with Artificial

Intelligence-Volume 02, pages 298–305. IEEE

Computer Society.
