IMPACT OF BEHAVIORAL FORCES ON KNOWLEDGE SHARING IN AN EXTENDED ENTERPRISE SYSTEM OF SYSTEMS

Lawrence John¹, Patricia M. McCormick², Tom McCormick², Greg McNeill³ and John Boardman⁴

¹ Analytic Services, Inc., 2900 South Quincy Street STE 800, Arlington, VA, U.S.A.

² Alpha Informatics, Ltd., 7900 Sudley Road STE 711, Manassas, VA, U.S.A.

³ ExoAnalytic Solutions, Inc., 20532 El Toro Road STE 303, Mission Viejo, CA, U.S.A.

⁴ School of Systems and Enterprises, Stevens Institute of Technology, Castle Point on Hudson, Hoboken, NJ, U.S.A.

Keywords: System of Systems, Extended Enterprise, Game Theory, Prisoner’s Dilemma, Stag Hunt, Agent-based

Modelling, Complex Dynamical Systems, Institutional Analysis, Organizational Norms, Organizational

Behaviour, Systems Thinking.

Abstract: An extended enterprise is both a system of systems (SoS) and a complex dynamical system. We characterize

government-run joint and interagency efforts as “government extended enterprises” (GEEs) comprising sets

of effectively autonomous organizations that must cooperate voluntarily to achieve desired GEE-level

outcomes. Our research investigates the proposition that decision makers can leverage four “canonical

forces” to raise the levels of both internal GEE cooperation and SoS-level operational effectiveness,

changing the GEE's status as indicated by the "SoS differentiating characteristics" detailed by Boardman

and Sauser. Two prior papers described the concepts involved, postulated the relationships among them, and

discussed the n-player, iterated "Stag Hunt" methodology applied to execute a real proof-of-concept case

(the U.S. Counterterrorism Enterprise's response to the Christmas Day Bomber) in an agent-based model.

This paper presents preliminary conclusions from data analysis conducted as a result of ongoing testing of

the simulation.

1 INTRODUCTION

On Christmas Day 2009, 23-year-old Umar Farouk

Abdulmutallab and a few supporters exposed

significant flaws in an extended enterprise

comprising at least “1,271 government organizations

and 1,931 private companies” and a combined

budget in excess of $75 billion (Priest and Arkin

2010). Yet, according to the findings in the

Executive Summary of the Report of the Senate

Select Committee on Intelligence (SSCI 2010), this

leviathan failed because the odds were stacked

against it; members chose not to share critical

information that would have foiled the plot—they

chose not to cooperate.

We believe the discipline of systems engineering

— specifically, system of systems (SoS) engineering

— has both the ability and the responsibility to help

future decision makers understand why this

happened and how to recognize and prevent similar

failures in “networks of peers.” A systems engineer

might describe such a problem:

Consider a system of systems: a

heterogeneous network of autonomous

nodes, each with its own “private” goals,

that exists to serve one or more “public”

goals. The nodes must cooperate to produce

preferred SoS-level outcomes, but it

underperforms due to a lack of internal

cooperation — intentionally or not, some

nodes effectively place their goals ahead of

the network’s goals.

Examples of networks that fit this description abound: in industry (e.g., standards consortia, corporate alliances), the non-profit sector (e.g., collections of community service organizations), the military (e.g., Services trying to jointly field capabilities or conduct operations), and government at all levels (e.g., cabinet departments or legislative committees with overlapping jurisdictions, collections of international, federal and/or state organizations working in one or more domains). Yet decision makers appear to lack sound, theory-based approaches and methods to generate, promote and sustain the required level of cooperation in such enterprises. This may be especially true for networks of high-level government organizations, where market forces cannot punish recalcitrant members.

John L., McCormick P. M., McCormick T., McNeill G. and Boardman J.

IMPACT OF BEHAVIORAL FORCES ON KNOWLEDGE SHARING IN AN EXTENDED ENTERPRISE SYSTEM OF SYSTEMS.

DOI: 10.5220/0003655900670076

In Proceedings of the International Conference on Knowledge Management and Information Sharing (KMIS-2011), pages 67-76

ISBN: 978-989-8425-81-2

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 BACKGROUND

Inspired by the works of Hume (2000 [1739-1740])

and Smith (1790), our first paper (John et al., 2011a)

details cooperation-related concepts from many

disciplines to investigate two propositions: 1. a set

of “canonical forces” (Sympathy, Trust, Fear, and

Greed) affects the dynamics of SoS operating under

conditions of need and uncertainty; and 2.

understanding these forces may enable network

leaders to address SoS performance issues caused by

lack of cooperation among the component systems.

Our unit of analysis is the “Government

Extended Enterprise” (GEE). Our definition of a

GEE extends concepts described in (Fine, 1998;

Davis and Spekman, 2004) to include “the entire set

of collaborating [entities], both upstream and

downstream, from [initial inputs] to [end-use

decisions, policies and actions], that work together

to bring value to [the nation]”. Thus, GEEs are sets

of relatively autonomous government enterprises

that must achieve enough “propensity to cooperate”

(Axelrod, 1997) to produce the voluntary

cooperation that is a prerequisite for coordinated

action; they face the “Hobbesian paradox” that lies

at the heart of social dilemmas (Van Lange et al.,

2007).

2.1 Central Concepts

“Cooperation” is “individual behaviour that incurs

personal costs in order to engage in a joint activity

that confers benefits exceeding the costs to other

members of one’s group” (Bowles and Gintis, 2003)

and “costly behaviour performed by one individual

that increases the payoff of others” (Boyd and

Richerson, 2009).

We postulate (John et al., 2011a) four “canonical

forces:” Sympathy, conceived by Hume and Smith

as the “fellow feeling,” that brings individuals

together (V. Ostrom, 2005); Trust, a three-part

relationship (a trusts b with respect to x) (Hardin,

2006) reflecting “one’s willingness to be vulnerable

to another’s actions with the belief that the other will

perform as expected” (Jarvenpaa et al., 1998;

Ridings et al., 2002), that was critical to primitive

man’s survival as a species (Bowles and Gintis,

2003, 2011); Fear, “the cognition of an expected

deprivation,” (Parsons and Shils, 2001), specifically

of being viewed as a failure, or of incurring a

business or political loss or cost, and loss of control

(Van Dijk et al., 2008), which “induces … focus on

events that are especially unfavourable” (Shefrin,

2002 citing Lopes, 1987) and erodes SoS cohesion

by causing components to act to further their private

goals in preference to the network’s public goals;

and Greed for success, power, budget or influence (Simon, 1997 [1945]; Skinner, 1965) that encourages focus on private goals, reducing propensity to cooperate, and reinforcing the effects of Fear.

Because they are open systems (Von Bertalanffy,

1950; Wiener, 1948), GEEs are subject to Need,

primarily for resources through the competitive

federal budget process (Garrett, 1998), and

Uncertainty that produces both Fear and the

potential for profit, subject to risk tolerance

(Williams, 2002; Wohlstetter, 1962; Prange, 1981).

2.2 SoS Differentiating Characteristics

We leverage five characteristics by which Boardman

and Sauser (2006, 2008) differentiate systems of

systems from systems of components: Autonomy

(A), both a component system’s native ability to

make independent choices (an “internal” system-

level property conveyed by its nature as a holon),

and more importantly, the fact that other members of

the system of systems “respect” this ability by

permitting the component to exercise it; Belonging

(B), a direct reflection of the components’

recognition of a shared mission or shared (but not

merely coincident) interests; Connectivity (C), “the

agility of structure for essential connectivity in the

face of a dynamic problematique that defies

prescience” (Boardman and Sauser, 2008, 158-159);

Diversity (D), “noticeable heterogeneity; having distinct or unlike elements or qualities in a group” (Boardman and Sauser, 2008, 157), that reflects the

impact of the law of requisite variety (Ashby, 1956)

on systems of systems; and Emergence (E): the

ability to “match the agility of the problematique”

by adding new responses based on “auxiliary

mechanisms for anticipation” (Boardman and

Sauser, 2008, 160-161).

Our prior paper (John et al., 2011a) discusses the

postulated relationships between the forces and these

characteristics in detail, summarizing them in a table

that uses a five-point nominal scale to indicate both

how strongly “positive,” “neutral,” or “negative” a

force is with respect to a characteristic, and whether

the characteristic requires or is inimical to the force.

These values drive the cooperative model selected

by the agents in the simulation described in Sections

3.4 and 3.7, below, and support tracking the resulting chain of

causality. Table II in the same paper describes the

relationships between the levels of the Boardman-

Sauser characteristics and a component’s

“Cooperation Model” — cooperate or “co-opetate”

(attributed to Novell founder Ray Noorda).

John et al. (2011a, Table III) uses a five-point

nominal scale to indicate the postulated impact of

changes in the Boardman-Sauser characteristics

levels on the “cooperation model” each agent uses in

the game, expressed as the force “favouring” or

“disfavouring” a choice. Our work captures and

measures these changes and the resulting SoS-level

behaviour. The same paper discusses the impact of

two other potentially important factors: History of

Behaviour and Leadership.

3 THEORY AND APPROACH

Recent research (Boardman and Sauser, 2006; 2008;

DiMario et al., 2009; Gorod et al., 2008; Baldwin

and Sauser, 2009; Epelbaum et al., 2011) has posited

and attempted to quantify how collections of

systems that should work together become more

manifestly SoS as their levels of the characteristics

rise. We theorize that in action situations that

demand cooperation, assuming increasing the level

of cooperation improves the operational

performance of the SoS, each organization’s

Probability of Cooperation with an emerging

coalition is the result of the interaction of the

proposed forces, each organization’s principles-

based strategy and a set of behavioural factors.

Informed by noted cooperation scholars

(Axelrod, 1997; E. Ostrom, 2005; 2007; Pacheco et

al., 2009; Poteete et al., 2010; Gintis, 2009a; Bowles

and Gintis, 2011) our methodology applies game

theory in an agent-based simulation of a complex

adaptive system and a real-world case (see John et

al., 2011b for a detailed description). Our approach

centres on a “Stag Hunt” game (Shor, 2010a;

Skyrms, 2004) that treats information in the GEE as

a “common-pool resource” (Poteete et al., 2010) and

establishes payoff-dominant (Hicks) and risk-dominant

equilibria (Nash) that correspond with the GEE’s

public and private goals. The GEE cannot succeed if

key nodes fail to cooperate by sharing information in

ways that meet the requirements in the unclassified

Executive Summary of the SSCI report (SSCI,

2010).

3.1 Hypotheses and Assumptions

Testing has led us to refine the previously declared set of hypotheses (John et al., 2011b). Given a SoS (“S”), the GEE, comprising Executive Agent “$a_1$” and autonomous components “$a_2$” through “$a_n$”, operating under conditions of uncertainty and with knowledge of each other’s history of behavior with respect to themselves:

Hypothesis 1. $a_n$’s level of Probability of Cooperation with $a_1$ will be:

1a. positively correlated with $a_n$’s level of Risk Tolerance,

1b. positively correlated with $a_n$’s level of Sympathy and Trust with respect to $a_1$,

1c. positively correlated with $a_1$’s History of Behavior,

1d. negatively correlated with $a_n$’s level of Greed,

1e. negatively correlated with $a_n$’s level of Fear.

Hypothesis 2. S’s level of Belonging will be:

2a. positively correlated with S’s level of Sympathy (where the value of Sympathy is the median of the values for S’s members),

2b. positively correlated with S’s level of Trust (where the value of Trust is the median of the values for S’s members),

2c. negatively correlated with S’s level of Greed (where the value of Greed is the median of the values for S’s members),

2d. negatively correlated with S’s level of Fear (where the value of Fear is the median of the values for S’s members).

Hypothesis 3. S’s level of EE Belonging will be positively correlated with key components’ aggregate Probability of Cooperation.

Hypothesis 4. S’s level of EE Connectivity will be positively correlated with key components’ aggregate Probability of Cooperation.

Hypothesis 5. S’s level of EE Diversity will be positively correlated with key components’ aggregate Probability of Cooperation.

Hypothesis 6. S’s level of EE Emergence will be positively correlated with key components’ aggregate Probability of Cooperation.
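Hypotheses 2a through 2d aggregate each force to the system level as the median of the members' values and then ask about the sign of a correlation. The Python sketch below illustrates one way such a check might be run. It assumes a rank-based (Spearman) coefficient, chosen here only because it fits the non-parametric analysis; the source does not name the coefficient used. The member values, Belonging scores and function names are hypothetical illustrations, not simulation output.

```python
from math import sqrt
from statistics import mean, median


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of sorted positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman coefficient: Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: each inner list holds the members' Sympathy levels in one case.
member_sympathy_by_case = [[0, 0, 1], [1, 1, 2], [2, 3, 2], [3, 3, 3]]
# Hypothetical system-level Belonging score observed in each case.
belonging_by_case = [0.20, 0.40, 0.50, 0.90]

# System-level Sympathy is the median of the member values (Hypothesis 2a).
system_sympathy = [median(members) for members in member_sympathy_by_case]
rho = spearman_rho(system_sympathy, belonging_by_case)
print(f"Spearman rho between Sympathy and Belonging: {rho:.3f}")
```

A positive coefficient would be consistent with Hypotheses 2a and 2b, and a negative one with 2c and 2d; the significance of the coefficient would still need a separate test.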

Continuing research has led us to add an eighth assumption to our prior list (John et al., 2011b, Section III.B): that all of the players’ cooperate/defect decisions must comply with the letter and intent of U.S. law and policy. The new assumption enables us to explicitly incorporate the deontic component of social decision making, that is, rules about what one must, may not and should do (Stamper et al., 2000; E. Ostrom, 2005; Filipe and Fred, 2008).

3.2 Validation and Data Analysis

Process

A Review Panel — a multi-disciplinary set of

experts with long experience as both operators and

executives in the organizations and domains — will

set the model’s initial conditions to account for the

fact that agent-based models are sensitive to initial

conditions (Windrum et al., 2007; Miller and Page,

2007).

Data analysis centres on the use of non-

parametric statistical processes. These are

appropriate for data generated by the agent-based

model because one cannot make useful assumptions

a priori about the distribution of the data.

3.3 Sample Case

Our second paper (John et al., 2011b) provides a

detailed explanation of the sample case, which

covers an 18-month period comprising five discrete

decision points where the SSCI Report found that

components of the GEE could have foiled the attack

by sharing information they already possessed.

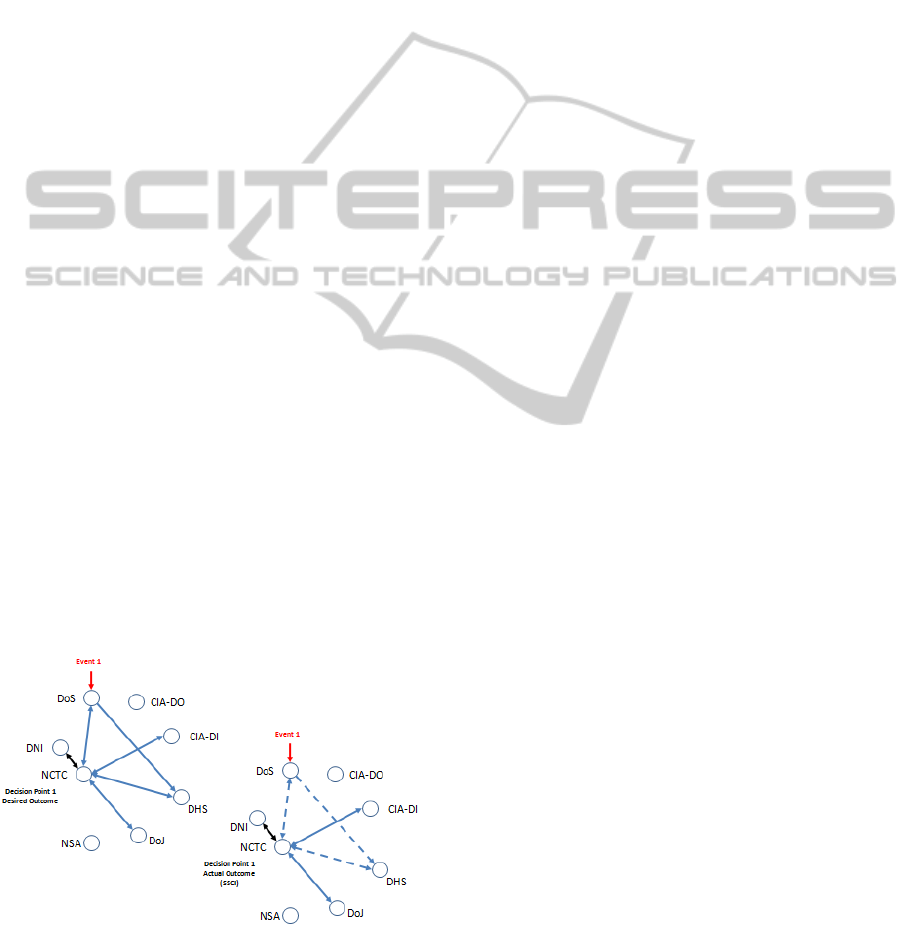

Figure 1 illustrates the core operational issue

reported by the SSCI (example, at Event #1), in

which solid blue arrows represent expected

information flows with full information sharing, and

dashed blue arrows represent desirable flows that did

not occur.

Figure 1: Desired and actual Information Flows for

Decision Point #1.

3.4 Agent-based Simulation

Our second paper (John et al., 2011b) provides a

detailed description of the computational agent-based

simulation used in our research, a process

used in a wide variety of domains in the physical

and social sciences, including studies of cooperation

(Gintis, 2009a; Mertikopoulos and Moustakas,

2010) and complex adaptive systems (Gintis, 2009a;

Miller and Page, 2007). Of note, we eliminate the

potential impact of signalling issues (Gintis, 2009a)

by assuming that all choices are made

simultaneously, an assumption that approximates the

impact of effective administrative information

security procedures.

We chose the Stag Hunt over the more widely

used iterated Prisoner’s Dilemma (Axelrod, 1997; E.

Ostrom, 2005; Mertikopoulos and Moustakas, 2010;

Shor, 2010b) because the former provides two

equilibria that can be viewed as “satisfactory” —

one risk-dominant (the Nash equilibrium) that

satisfies private goals, and one payoff-dominant (the

Pareto-optimal Hicks equilibrium) that satisfies

public goals — in a non-zero sum game (Pacheco et

al., 2009; Poteete et al., 2010; Shor, 2010a; b).

Similar decision making challenges exist in artificial

intelligence and network switching (Wolpert, 2003).
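The two-equilibrium structure described above can be illustrated with a two-player Stag Hunt. The Python sketch below enumerates the pure-strategy equilibria of a small game and labels one payoff-dominant and one risk-dominant, using the standard comparison of products of deviation losses for 2x2 games. The payoff values and the action names "share" and "withhold" are hypothetical; they are not the game matrices used in the simulation, which plays an n-player, iterated game.

```python
from itertools import product

SHARE, WITHHOLD = "share", "withhold"
ACTIONS = (SHARE, WITHHOLD)

# Hypothetical payoffs: PAYOFFS[(row_action, col_action)] = (row_payoff, col_payoff).
PAYOFFS = {
    (SHARE, SHARE): (10, 10),       # both cooperate: best joint outcome
    (SHARE, WITHHOLD): (0, 6),      # lone sharer bears the risk
    (WITHHOLD, SHARE): (6, 0),
    (WITHHOLD, WITHHOLD): (5, 5),   # safe but lower payoff
}


def other(action):
    """Return the alternative action in this two-action game."""
    return WITHHOLD if action == SHARE else SHARE


def pure_nash_equilibria(payoffs):
    """Profiles where neither player gains by deviating unilaterally."""
    equilibria = []
    for row, col in product(ACTIONS, repeat=2):
        row_ok = payoffs[(row, col)][0] >= payoffs[(other(row), col)][0]
        col_ok = payoffs[(row, col)][1] >= payoffs[(row, other(col))][1]
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria


def deviation_loss_product(payoffs, profile):
    """Product of what each player loses by leaving the equilibrium alone."""
    row, col = profile
    row_loss = payoffs[profile][0] - payoffs[(other(row), col)][0]
    col_loss = payoffs[profile][1] - payoffs[(row, other(col))][1]
    return row_loss * col_loss


equilibria = pure_nash_equilibria(PAYOFFS)
# In this symmetric game, comparing total payoff is enough to find the
# equilibrium that is better for both players.
payoff_dominant = max(equilibria, key=lambda p: sum(PAYOFFS[p]))
# The equilibrium with the larger deviation-loss product is risk-dominant.
risk_dominant = max(equilibria, key=lambda p: deviation_loss_product(PAYOFFS, p))

print("payoff-dominant:", payoff_dominant)
print("risk-dominant:", risk_dominant)
```

With these illustrative numbers, mutual sharing is payoff-dominant while mutual withholding is risk-dominant, which mirrors the tension between public and private goals that the text describes.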

3.5 Factors Governing Behaviour

Behaviour within and among organizations is governed by “institutional statements,” i.e., rules, norms and shared strategies (E. Ostrom, 2005, 2007; Gintis, 2009a; Bowles and Gintis, 2011), informed by knowledge: the deontic, axiological and epistemological components of social decision making (Stamper et al., 2000; Filipe and Fred, 2008). At a practical level they are embodied in a set of behavioural factors that represent key inputs to decision making, and can be described algorithmically. Our second paper (John et al., 2011b) presents our core algorithm (Equation 1), defines the eight factors that affect an agent’s Propensity to Cooperate ($P_c$) and details the processes by which the model leverages them. The factors are: F1 Level of Risk, F2 Payoff to the Sharing Agent, F3 Payoff to the Receiving Agent, F4 History of Behaviour, F5 Risk Tolerance, F6 Perceived Level of Need, F7 Perceived Level of Damage Due to Disclosure (a powerful analogue to “Subtractability of Flow” in common-pool resource problems (E. Ostrom, 2010)), and F8 Sharing Agent’s Perceived Level of Confidence in the Information.

$$P_c = (F_4 \cdot F_6)\left(\frac{F_1 + F_2 + F_3 + F_5}{4}\right)\left(\frac{F_7 + F_8}{2}\right) + 3 \qquad (1)$$
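As a worked illustration, Equation 1 can be evaluated directly. The Python sketch below assumes the three bracketed groups are multiplied together and the constant 3 is added last, which is how the printed equation reads. The function name, the intermediate variable names and the example factor values are hypothetical; the source does not state the factor scales here, nor how $P_c$ is subsequently normalized.

```python
def propensity_to_cooperate(f1, f2, f3, f4, f5, f6, f7, f8):
    """Evaluate Equation 1 for one agent in one situation.

    f1 Level of Risk, f2 Payoff to the Sharing Agent, f3 Payoff to the
    Receiving Agent, f4 History of Behaviour, f5 Risk Tolerance,
    f6 Perceived Level of Need, f7 Perceived Level of Damage Due to
    Disclosure, f8 Perceived Level of Confidence in the Information.
    """
    history_and_need = f4 * f6
    risk_and_payoff = (f1 + f2 + f3 + f5) / 4           # mean of four factors
    disclosure_and_confidence = (f7 + f8) / 2           # mean of two factors
    # ASSUMPTION: adjacent groups in the printed equation are multiplied.
    return history_and_need * risk_and_payoff * disclosure_and_confidence + 3


# Hypothetical factor values chosen only to make the arithmetic easy to follow:
# (2 * 1) * ((1 + 2 + 3 + 2) / 4) * ((1 + 3) / 2) + 3 = 2 * 2 * 2 + 3
example = propensity_to_cooperate(f1=1, f2=2, f3=3, f4=2, f5=2, f6=1, f7=1, f8=3)
print(example)
```

Because F4 and F6 enter as a product, a value of zero for either History of Behaviour or Perceived Level of Need collapses the result to the constant term under this reading of the equation.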

3.6 Principle-driven Strategies

Principles — the sum of an organization’s values,

standards, ideals, precepts, beliefs, morals and ethics

— drive the strategy that drives decisions by helping

decision makers “to establish whether a decision is

right or wrong” (Miner, 2006, 109-126); they are the

axiological component of social decision making.

Our second paper (John et al., 2011b) describes the process by which we leveraged aspects of Vroom’s image theory (based on Maslow and Herzberg) (Miner, 2005, 94-113) to derive and leverage the six alternative (self-regarding, neutral or other-regarding) principles that underlie the information sharing decision making strategies of US CT Enterprise components, thereby enabling us to create a game strategy profile (Gintis, 2009a; Mertikopoulos and Moustakas, 2010) consisting of 11 strategies and to establish weighting coefficients for the behavioural factors used by Equation 1 to calculate $P_c$ for each situation.

3.7 Simulation Toolset

This effort uses Systems Effectiveness Analysis

Simulation (SEAS), an agent-based, complex

adaptive systems simulation that is part of the Air

Force Standard Analysis Tool Kit (SEAS, 2010).

SEAS agents incorporate the components of social

decision making by functioning at the physical,

information, and cognitive levels to maintain

awareness of their situations, and by leveraging a set

of simple, principle-based behaviour rules that

incorporate the impact of norms to make decisions

“on the fly.”

SEAS data will enable us to infer the impact of

the forces on the Boardman-Sauser characteristics

and set the stage for root cause analyses.

4 ANALYSIS

4.1 Boundary Conditions

Exploring boundary conditions (i.e., the outcomes produced by agents adopting extreme strategies) is a key step in the use of agent-based models (Miller and Page, 2007). Our initial exploration of the game matrices for agents employing a “pure” strategy (e.g., always share if it favors the GEE, or always favor their own organization) verifies that the “Stag Hunt” game is a suitable model for this problem: extremely cooperation-friendly strategies produced payoff-dominant results, and cooperation-antagonistic strategies produced risk-dominant results. We used the results of these initial analyses to select the applicable ranges and effects of the Decision Making Freedom Factors. Based on tests to date, a normalized $P_c$ of 0.8 appears to represent a “ceiling” below which agents will always refuse to cooperate, while 1.225 appears to represent a “floor” above which agents will always cooperate. Approximately 20% of calculated values fall in one of these two areas. In general, test data indicate that these values manifest at higher force levels.
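The two thresholds reported above can be expressed as a simple decision rule. The Python sketch below uses the values 0.8 and 1.225 from the text; how agents behave between the thresholds is not specified in this paper, so the linear interpolation used here is purely an assumption for illustration, as are the function and constant names.

```python
import random

REFUSE_BELOW = 0.8        # from the text: below this, agents always refuse
COOPERATE_ABOVE = 1.225   # from the text: above this, agents always cooperate


def cooperation_probability(pc_normalized):
    """Map a normalized P_c value to a probability of sharing."""
    if pc_normalized < REFUSE_BELOW:
        return 0.0
    if pc_normalized > COOPERATE_ABOVE:
        return 1.0
    # ASSUMPTION: linear interpolation between the two thresholds; the
    # simulation's actual behaviour in this band is not described here.
    return (pc_normalized - REFUSE_BELOW) / (COOPERATE_ABOVE - REFUSE_BELOW)


def decides_to_share(pc_normalized, rng):
    """Draw one cooperate/defect decision using the supplied random generator."""
    return rng.random() < cooperation_probability(pc_normalized)


rng = random.Random(2011)  # fixed seed so repeated runs give the same draws
for pc in (0.5, 1.0, 1.3):
    print(pc, cooperation_probability(pc), decides_to_share(pc, rng))
```

Only the behaviour outside the band is grounded in the reported results; any conclusion drawn from the middle band depends on the interpolation assumption.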

4.2 Data Analysis Process

The team first conducted exploratory data analysis and a series of statistical tests to establish the presence of significant patterns within the data. The objective of the tests is to determine whether the observed outcomes vary as expected (i.e., directly with the changes in the levels of impact of the canonical forces) and reliably refute the null hypotheses. Because we expected the data generated by our experiment to violate the assumptions of parametric statistics (e.g., through non-normal or multi-modal distributions), the tests include the Mann-Whitney U test, used to determine whether a difference exists between two groups, and the Kruskal-Wallis test, the non-parametric analog of a one-way analysis of variance, which does not require an assumption of normality. We are prepared to run other parametric and nonparametric tests as the data and emerging research questions require.
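Both tests named above work on ranks rather than raw values, which is why they need no normality assumption. The Python sketch below computes the two test statistics from first principles using only the standard library. It omits the tie correction for Kruskal-Wallis and does not compute p-values, for which a statistics package would normally be used. The three groups of share rates are hypothetical and do not come from the simulation.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of sorted positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Mann-Whitney U statistic (the smaller of the two U values)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return min(u_a, u_b)


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic, without the correction for ties."""
    pooled = [value for group in groups for value in group]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    weighted_rank_sums = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        weighted_rank_sums += rank_sum ** 2 / len(group)
        start += len(group)
    return 12 / (n_total * (n_total + 1)) * weighted_rank_sums - 3 * (n_total + 1)


# Hypothetical share rates for three strategies (illustrative values only).
low = [0.41, 0.45, 0.50]
mid = [0.55, 0.60, 0.62]
high = [0.70, 0.74, 0.78]

u_stat = mann_whitney_u(low, mid)
h_stat = kruskal_wallis_h(low, mid, high)
print(f"U = {u_stat}, H = {h_stat:.2f}")
```

A small U and a large H both indicate that the groups occupy different parts of the pooled ranking; whether the difference is significant depends on the sample sizes and the reference distribution.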

We began testing a limited version of the SEAS

simulation in early March 2010. These tests focused

on verifying that the simulation manipulates data

and computes results in accordance with our design,

and that the design itself contains no egregious

errors. To this end, we chose a subset (43 cases) of

the possible combinations (256 cases) of integer-

value force levels, designed to support linear

regression analysis of simulator results. The testing

regime runs the entire five-event scenario for each

strategy in 200 blocks, with each block including

112 opportunities for cooperation.
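The size of the test design follows from simple counting. The Python sketch below enumerates the force configurations, assuming each of the four canonical forces takes an integer level from 0 to 3; the text does not state the range, and it is inferred here from the count of 256 (four levels to the fourth power) and from the references to Level 1, Level 3 and forces set to 0. The dictionary layout and variable names are illustrative only.

```python
from itertools import product

FORCES = ("Sympathy", "Trust", "Fear", "Greed")
# ASSUMPTION: integer levels 0..3 per force, inferred from 4 ** 4 == 256.
LEVELS = range(4)

# One dictionary per force configuration (a "case").
all_cases = [
    dict(zip(FORCES, levels)) for levels in product(LEVELS, repeat=len(FORCES))
]

# Example of a single case: Trust at Level 1 with all other forces at 0.
trust_only = {"Sympathy": 0, "Trust": 1, "Fear": 0, "Greed": 0}

BLOCKS_PER_RUN = 200            # from the text
OPPORTUNITIES_PER_BLOCK = 112   # from the text
decisions_per_strategy_and_case = BLOCKS_PER_RUN * OPPORTUNITIES_PER_BLOCK

print(len(all_cases), "possible cases")
print(decisions_per_strategy_and_case, "opportunities per strategy and case")
```

Under these assumptions, each strategy evaluated against one case yields 22,400 opportunities for cooperation, which gives a sense of the data volume the 43-case subset was chosen to contain.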

Table 1 is a small sample of the simulator output.

A “1” in the “Share Sender?” and/or “Share

Receiver?” column indicates that the computed

probability of cooperation resulted in that agent

deciding to share (“cooperate”). The Score is the

payoff the sharing agent earned from each decision.

Table 1: Simulator Output (Unprocessed).

| Sharer | Receiver | Share Sender? | Share Receiver? | Score |
|---|---|---|---|---|
| DOS_CA | DHS_CBP | 1 | 0 | 0 |
| DOS_CA | NCTC | 1 | 1 | 10 |

A macro cleans and orders the data, computes

summary statistics (the number of “share” decisions;

and minimum, maximum, mean and median values

for probability of cooperation and payoff by block),

then transfers the ordered data to another workbook

with one tab per strategy (Table 2).

Table 2: Partial Simulator Output (Unprocessed).

Force configuration: Trust = 1, all others = 0.

| # | ST_0 Sender Share Opps. | ST_0 Receiver Share Opps. | ST_0 Score | ST_1 Sender Share Opps. |
|---|---|---|---|---|
| 1 | 0.678571 | 0.660714 | 139 | 0.696429 |
| 2 | 0.625 | 0.758929 | 180 | 0.642857 |
| 3 | 0.723214 | 0.741071 | 179 | 0.767857 |

The macro computes additional summary statistics (minimum, 25th percentile, average, 75th percentile, and maximum $\mathrm{Prob}_c$ values) for each case and plots them vs. force configuration using a “box and whiskers” format. It also counts the frequency of $\mathrm{Prob}_c$ values in a series of ranges for plotting by force configuration and strategy in a three-dimensional “ribbon chart” format. We also use the “box and whiskers” format to plot linear regressions for the impact of forces on $\mathrm{Prob}_c$ and line charts to plot the impact of strategies or forces on payoff.
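The summary statistics and range counts described above are straightforward to reproduce outside a spreadsheet macro. The Python sketch below is one possible equivalent, not the macro itself. The percentile method ("inclusive"), the half-open bins, the function names and the sample values are all assumptions made for illustration.

```python
from statistics import mean, quantiles


def box_plot_summary(prob_c_values):
    """Minimum, 25th percentile, average, 75th percentile and maximum."""
    # ASSUMPTION: "inclusive" quartiles; the macro's percentile method is unknown.
    q1, _median, q3 = quantiles(prob_c_values, n=4, method="inclusive")
    return {
        "min": min(prob_c_values),
        "p25": q1,
        "mean": mean(prob_c_values),
        "p75": q3,
        "max": max(prob_c_values),
    }


def count_in_ranges(prob_c_values, edges):
    """Count values in the half-open bins [edges[i], edges[i + 1])."""
    counts = [0] * (len(edges) - 1)
    for value in prob_c_values:
        for i in range(len(edges) - 1):
            if edges[i] <= value < edges[i + 1]:
                counts[i] += 1
                break
    return counts


# Hypothetical probability-of-cooperation values for a single case.
sample = [0.62, 0.66, 0.68, 0.70, 0.72, 0.74, 0.77]
summary = box_plot_summary(sample)
histogram = count_in_ranges(sample, edges=[0.6, 0.65, 0.7, 0.75, 0.8])

print(summary)
print(histogram)
```

The summary dictionary supplies the five values needed for one box in a “box and whiskers” plot, and the list of counts supplies one row of the frequency data behind a ribbon chart.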

We will follow a recommended best practice for

computational simulations (Miller and Page, 2007)

by running all 256 cases against the set of

“practical” strategies chosen by the Review Panel to

ensure we understand how the simulation behaves in

all of the combinations the actual case study may

present, and the root causes for these behaviors. We

will also analyze comparative plots of the manually

computed values of $P_c$ versus the simulator-computed

values of Probability of Cooperation

based on integer values assigned to each of the force

configurations to begin to illuminate the space

between the data points to support interpolation in

future versions of the simulation. Interpolation

across strategies may be problematic.

4.3 Addressing Threats to Validity

Executing 200 Monte Carlo trials of each force configuration (a “case”) produces a statistical confidence above .95 for each set of results. John et al. (2011b, Section IV) discusses our approach to addressing internal and external validity, face validity, criterion validity, and construct validity.

5 PRELIMINARY RESULTS

The following are preliminary conclusions, some of

which may have significant implications for GEE

members.

We are demonstrating the ability to encapsulate

agents’ belief systems in key model elements and

leverage that encapsulation to produce internally

self-consistent results. This means the experiment

may offer a useful evaluation of the postulated

relationship between the forces and the SoS

characteristics.

The neutral strategies are, by their nature as firm,

all-purpose decision making heuristics, essentially

insensitive to the forces. While preliminary results

demonstrate that the forces are capable of impacting

decision making, the effect appears to be significant

only when the decision makers’ principles evidence

some level of preference for public or private goals.

In general, when considering the forces individually,

Sympathy tends to have the greatest impact,

followed in descending order by Greed, Trust and

then Fear.

The neutral strategies produce results that are

predictable, but uninteresting. Moreover, Kruskal-

Wallis testing indicates that some strategies produce

sufficiently similar results that we can eliminate

some and reduce the mass of data to be analyzed.

The combination of Trust and Sympathy at Level 3

(with other forces = 0) has produced anomalous

results with two strategies; further investigation is

required. We have yet to evaluate the interaction

effects among the forces in complicated force

configurations (for example, each force at a different

level), but must do so, as we expect these conditions

to be firmly in play in the case study.

We see preliminary indications of an

unexpectedly dynamic relationship between the

forces and strategies. Strategies tend to dominate

Level 1 forces, but Level 3 forces (and, presumably,

their interaction effects) dominate most strategies.

Fear appears to play a major role only when added

to other forces — it appears to dampen the impact of

many strategies.

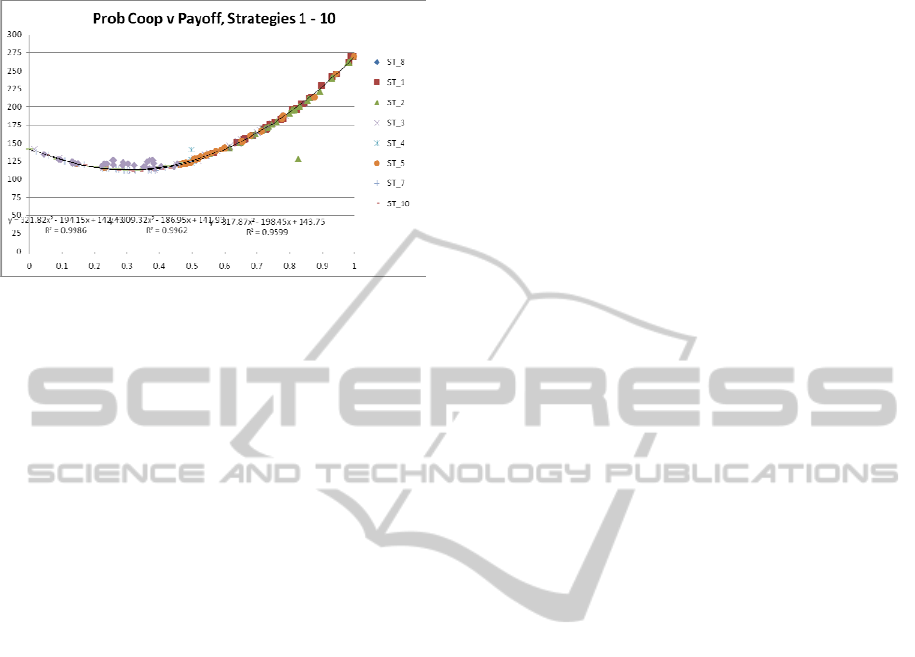

Figure 2: Median Payoff at Probability of Cooperation.

Figure 2 plots median gross payoff as a function

of probability of cooperation by strategy (10 of 11

strategies). We believe it clearly indicates that

agents engaged in repeated interactions within a Stag

Hunt situation will generally earn higher cumulative

payoffs if they choose to cooperate. One could also

infer from this that (assuming no externalities to the

contrary) the same is true of one-shot Stag Hunt

situations, but further research is required. It must be

noted, however, that the current version of the

simulation does not feature live play of situational

(Need and Uncertainty) and behavioral factors

(detailed in Section 3.5) that could have a

profound impact on decision making. If the plotted

results persist, however, they should lead GEE

members who are uncertain of whether they should

choose to cooperate to do so. Because the only

strategies that produce better-than-minimum payoffs

from defecting are those held by agents whose

principles motivate strongly against cooperation,

these results also indicate that GEEs seeking new

members may be able to safely incorporate agents

with principles that are uncertain or neutral.

6 LIMITATIONS AND FUTURE

WORK

We recognize this effort is essentially a proof-of-

concept, based on a single case. The case may not

generalize as fully as we hope; other cases may lack

a convenient set of findings to use as a measuring

stick for evaluating the relationship of the forces to

the characteristics, or the SSCI’s root cause analysis

may be flawed. It is also possible that the

assumptions and abstractions we have used to

simplify the problem may contain important

complexities or factors our work fails to recognize.

For example, this effort assumes that agents do not

learn — they will not change decision making

strategies in the course of a case. We also eliminate

the effects of information transfer time, and

differences in individual capabilities and authorities

by assuming that when any member of an

organizational element gains access to a piece of

information, the entire element gains access and

understanding immediately, and is authorized to act

on that understanding. Moreover, the process used in

our tests to date lacks explicit recognition of the

individual fitness costs that qualify an act as one of

altruistic cooperation (Bowles and Gintis, 2011).

The choice of game is not without some

controversy. On 12 December 2009, Gintis posted a

review (Gintis, 2009b) of Skyrms’ (2004) view that

“Many modern thinkers have focused on the

prisoner’s dilemma [as a simple exemplar of the

central problem of the social contract], but I believe

that this emphasis is misplaced. The most

appropriate choice is not the prisoner’s dilemma, but

rather the stag hunt.” Gintis’ objection hinges on his

view that Skyrms has fallen prey to the “Folk

Theorem of Repeated Games” whose “central

weakness is that it is only an existence theorem with

no consideration of how the Nash equilibrium can

actually be instantiated as a social process….Rather,

… strategic interaction must be socially structured

by a choreographer—a social norm with the status of

common knowledge ….” Following Simon’s (1997

[1945]) view of the behaviour of individuals within

an organization and leveraging a mechanism

articulated in Bowles and Gintis (2011), we believe

there is reason to view cultural transmission of

norms within an agency as powerful enough to

establish the Nash equilibrium.

Gintis’ (2009a) discussion of “Epistemic Game

Theory and Social Norms” summarizes what he

views as a long-standing schism within the

behavioral sciences and further emphasizes the

importance of the socially-developed norm as the

“choreographer” of individual and group behaviors.

Earlier in the same book, Gintis also questions the

need for those applying game theory to social

dilemmas to eschew the “rational actor” model in

favor of bounded rationality. He contends that

explicitly accounting for each agent’s beliefs,

preferences and constraints allows for rational, self-

regarding agents that operate with defined limits

with respect to their knowledge and their own

perspective (their utility function). Our model

addresses this by explicitly including the impact of

decision maker principles in the strategy formulation

process.

Nevertheless, we remain convinced that this area

presents fertile ground for important research,

including the creation of a tool set and analysis

process that can be useful in the continuing

development of cooperation theory for systems of

systems. Additional research into the sensitivity of

$P_c$, $\mathrm{Prob}_c$, and Payoff to the level of decision maker

bias embodied in the strategies (self-regarding or

other-regarding) may be very illuminating. For

example, do one or more of these outputs vary

linearly, as a step function, or in some other way?

We are working to identify other suitable cases

involving both public and private extended

enterprises so we can validate and expand our

analytic capabilities.
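One way such a sensitivity question might be approached is sketched below in Python: sweep the bias level, record an output, and label the shape of the response. The function name classify_response, the tolerance tol, and the sample values are hypothetical; none of them are outputs of our simulation.

```python
def classify_response(xs, ys, tol=0.05):
    """Label a swept output as 'flat', 'linear', 'step', or 'other'.

    xs are the swept input levels (ascending) and ys the observed outputs.
    tol is an assumed threshold, expressed as a fraction of the output range.
    """
    span = max(ys) - min(ys)
    if span == 0:
        return "flat"
    # Linear: every point lies within tol * span of the chord between endpoints.
    slope = (ys[-1] - ys[0]) / (xs[-1] - xs[0])
    if all(abs(y - (ys[0] + slope * (x - xs[0]))) <= tol * span
           for x, y in zip(xs, ys)):
        return "linear"
    # Step: a single jump between adjacent points carries nearly the whole range.
    jumps = [abs(b - a) for a, b in zip(ys, ys[1:])]
    if max(jumps) >= (1 - tol) * span:
        return "step"
    return "other"


if __name__ == "__main__":
    bias = [0.0, 0.25, 0.5, 0.75, 1.0]                       # hypothetical bias levels
    print(classify_response(bias, [0.1, 0.2, 0.3, 0.4, 0.5]))  # synthetic data
    print(classify_response(bias, [0.1, 0.1, 0.1, 0.9, 0.9]))  # synthetic data
```

This is a deliberately crude classifier. A real analysis would need replicated runs and a statistical test, since agent-based outputs are noisy.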

This effort is only one step on a much longer

journey toward what we call “The Science of

Belonging.” We believe the understanding that can

be derived from such a science will be crucial in a

world full of autonomous software systems. Future

work must establish how decision makers can

change the levels of the forces in their extended

enterprises — the specific “levers” decision makers

can pull — as well as how they can accurately

measure the resulting amount of change in the

Boardman-Sauser characteristics. We must also

ascertain the existence and impacts of other useful

forces and characteristics.

ACKNOWLEDGEMENTS

The authors wish to thank two anonymous reviewers

for comments that inspired improvements in this

paper.

REFERENCES

Ashby, W. R. (1956). An Introduction to Cybernetics,

London: Chapman & Hall.

Axelrod, R. (1997). The Complexity of Cooperation:

Agent-Based Models of Competition and

Collaboration, Princeton: Princeton University Press.

Baldwin, W. C. and B. Sauser (2009). “Modeling the

Characteristics of System of Systems,” IEEE

International Conference on System of Systems

Engineering, pp. 1-6.

Boardman, J. and B. Sauser (2006). “System of Systems –

the meaning of of,” Proceedings of the 2006

IEEE/SMC International Conference on System of

Systems Engineering, IEEE.

Boardman, J. and B. Sauser (2008). Systems Thinking:

Coping with 21st Century Problems, Boca Raton: CRC

Press, pp. 155-161.

Bowles, S. and H. Gintis (2003). “The Origins of Human

Cooperation,” in Peter Hammerstein (ed.) The Genetic

and Cultural Origins of Cooperation, Cambridge: MIT

Press.

Bowles, S. and H. Gintis (2011). A Cooperative Species:

Human Reciprocity and Its Evolution, Princeton:

Princeton University Press.

Boyd, R. and P. J. Richerson (2009). “Culture and the

evolution of human cooperation,” Philosophical

Transactions of the Royal Society B: Biological Sciences,

364, pp. 3281-3288.

Davis, E. W. and R. E. Spekman (2004). The Extended

Enterprise: Gaining Competitive Advantage through

Collaborative Supply Chains, Upper Saddle River, NJ:

Financial Times Prentice Hall, p. 20.

DiMario, M. J., J. T. Boardman and B. J. Sauser (2009).

“System of Systems Collaborative Formation,” IEEE

Systems Journal, 3, (September).

Epelbaum, S., M. Mansouri, A. Gorod, B. Sauser and A.

Fridman (2011). “Target Evaluation and Correlation

Method (TECM) as an Assessment Approach to Global

Earth Observation System of Systems (GEOSS),”

International Journal of Applied Geospatial Research,

vol. 2, no. 1, pp. 36-62.

Filipe, J. B. L., and A. L. N. Fred (2008). “Collective

Agents and Collective Intentionality Using the EDA

Model,” 2007 Sixth Mexican International Conference

on Artificial Intelligence (MICAI), Special Session,

pp. 211-220.

Fine, C. H. (1998). Clockspeed: Winning Industry Control

in the Age of Temporary Advantage, New York:

Perseus Books.

Garrett, E. (1998). “Rethinking the Structures of

Decisionmaking in the Federal Budget Process,”

Harvard Journal on Legislation, (Summer) 35, pp.

409-412.

Gintis, H. (2009a). The Bounds of Reason: Game Theory

and the Unification of the Behavioral Sciences,

Princeton: Princeton University Press.

Gintis, H. (2009b). Review of B. Skyrms, The Stag Hunt

and the Evolution of Social Structure,

http://www.amazon.com/gp/cdp/member-reviews/A2U0XHQB7MMH0E?ie=UTF8&display=public&sort_by=MostRecentReview&page=6,

accessed 1 February 2011.

Gorod, A., B. Sauser and J. Boardman (2008). “System-of-

systems engineering management: A review of modern

history and a path forward,” IEEE Systems Journal, 2,

(December), pp. 484-499.

Hardin, R. (2006). Trust, Malden, MA: Polity Press.

Hume, D. (2000 [1739-1740]). In D. F. Norton and M. J.

Norton (eds.), A Treatise of Human Nature,

Oxford/New York: Oxford University Press.

Jarvenpaa, S. L., K. Knoll and D. E. Leidner (1998). “Is

anybody out there? Antecedents of trust in global

virtual teams,” Journal of Management Information

Systems, 14, pp. 29-64.

John, L., P. M. McCormick, T. McCormick, and J.

Boardman (2011a). “Self-organizing Cooperative


Dynamics in Government Extended Enterprises:

Essential Concepts,” Proceedings of the 2011 IEEE

International Systems Conference, IEEE.

John, L., P. McCormick, T. McCormick, G. McNeill, and J.

Boardman (2011b). “Self-Organizing Cooperative

Dynamics in Government Extended Enterprises:

Experimental Methodology,” Proceedings of the 6th

IEEE International Systems Conference, IEEE.

Lopes, L. (1987). “Between Hope and Fear: The

Psychology of Risk.” Advances in Experimental Social

Psychology, vol. 20, pp. 255-295.

Mertikopoulos, P. and A. L. Moustakas (2010). “The

Emergence of Rational Behavior in the Presence of

Stochastic Perturbations,” The Annals of Applied

Probability, 20, pp. 1359-1388.

Miller, J. and S. Page (2007). Complex Adaptive Systems:

An Introduction to Computational Models of Social

Life, Princeton, NJ: Princeton University Press.

Miner, J. B. (2006). Organizational Behavior 2: Essential

Theories of Process and Structure, Armonk, NY: M. E.

Sharpe, Inc., pp. 109-126.

Miner, J. B. (2005). Organizational Behavior 1: Essential

Theories of Motivation and Leadership, Armonk, NY:

M. E. Sharpe, Inc., pp. 94-113.

“need,” OED (2009). Oxford English Dictionary (2nd

Edition) Online, Oxford: Oxford University Press,

retrieved 7 October 2009.

Ostrom, E. (2005). Understanding Institutional Diversity,

Princeton: Princeton University Press.

Ostrom, E. (2007). “An Assessment of the Institutional

Analysis and Development Framework,” in Sabatier,

Paul A. (ed.) Theories of the Policy Process, Second

Edition, Boulder, CO: Westview Press.

Ostrom, E. (2010). Personal communication, “Request for

Opinion on Subtractability of Value as a replacement

for Subtractability of Flow in Information Exchanges,”

Fri 6/25/2010 8:06 AM.

Ostrom, E., R. Gardner, and J. Walker (1994). Rules,

Games, and Common-Pool Resources, Ann Arbor:

University of Michigan Press.

Ostrom, V. (2005). “Citizen Sovereigns: The Source of

Contestability, the Rule of Law, and the Conduct of

Public Entrepreneurship,” PS: Political Science and

Politics, (January), pp. 13-17.

Pacheco, J. M., F. C. Santos, M. O. Souza and B. Skyrms

(2009). “Evolutionary dynamics of collective action in

n-person stag hunt dilemmas,” Proceedings of the

Royal Society B: Biological Sciences, (January 22), 276

(1655), pp. 315-321.

Parsons, T. and E. A. Shils (2001). Toward a General

Theory of Action: Theoretical Foundations for the

Social Sciences, New Brunswick, NJ: Transaction

Publishers, p. 134.

Poteete, A. R., M. A. Janssen and E. Ostrom (2010).

Working Together: Collective Action, the Commons,

and Multiple Methods in Practice, Princeton: Princeton

University Press.

Prange, G. W. (1981). At Dawn We Slept, New York:

McGraw-Hill.

Priest, D. and W. M. Arkin (2010). “Top Secret America:

A Washington Post Investigation,” accessed 10 Feb

2011 at http://projects.washingtonpost.com/top-secret-america/articles/a-hidden-world-growing-beyond-control.

Ridings, C. M., D. Gefen, and B. Arinze (2002). “Some

antecedents and effects of trust in virtual

communities,” Journal of Strategic Information

Systems, 11, pp. 271-295.

SEAS (2010). “What is SEAS?” http://teamseas.com/content/view/55/71/,

accessed 12 October 2010.

Shefrin, H. (2002). Beyond Greed and Fear, New York:

Oxford University Press, pp. 120-121.

Shor, M. (2010a). “Prisoners’ Dilemma,” Dictionary of

Game Theory Terms, GameTheory.net,

http://www.gametheory.net/dictionary/games/PrisonersDilemma.html,

accessed 12 October 2010.

Shor, M. (2010b). “Stag Hunt,” Dictionary of Game

Theory Terms, GameTheory.net,

www.gametheory.net/dictionary/games/StagHunt.html,

accessed 12 October 2010.

Simon, H. A. (1990). “Invariants of Human Behavior,”

Annual Review of Psychology, 41, pp. 1-19.

Simon, H. A. (1997 [1945]). Administrative Behavior: A

Study of Decision-Making Processes in Administrative

Organizations, Fourth Edition, New York: The Free

Press, pp. 206-207.

Skinner, B. F. (1965). Science and Human Behavior, New

York: The Free Press, p. 361.

Skyrms, B. (2004). The Stag Hunt and the Evolution of

Social Structure, Cambridge: Cambridge University

Press.

Smith, A. (1790). The Theory of Moral Sentiments (6th

Edition), London: A. Millar,

http://www.econlib.org/library/Smith/smMS1.html.

SSCI (2010). Senate Select Committee on Intelligence,

“Unclassified Executive Summary of the Committee

Report on the Attempted Terrorist Attack on Northwest

Airlines Flight 253,” Senate Select Committee on

Intelligence, May 18, 2010.

Stamper, R., K. Liu, M. Hafkamp and Y. Ades (2000).

“Understanding the Roles of Signs and Norms in

Organizations – a Semiotic Approach to Information

Systems Design,” Behaviour and Information

Technology, 19(1), pp. 15-27.

“trust,” OED (2009). Oxford English Dictionary (2nd

Edition) Online, Oxford: Oxford University Press,

retrieved 7 October 2009.

“uncertainty,” OED (2009). Oxford English Dictionary

(2nd Edition) Online, Oxford: Oxford University Press,

retrieved 7 October 2009.

von Bertalanffy, L. (1950). “The Theory of Open Systems

in Physics and Biology,” Science, 111, 2872, pp. 23-29.

Wiener, N. (1948). Cybernetics, New York: Wiley.

Williams, J. (2002). In Cynthia Grabo, Anticipating

Surprise: Analysis for Strategic Warning, Washington

DC: Center for Strategic Intelligence Research, Joint

Military Intelligence College.

Windrum, P., G. Fagiolo, and A. Moneta (2007).

“Empirical Validation of Agent-Based Models:

Alternatives and Prospects,” Journal of Artificial


Societies and Social Simulation, vol. 10, no. 2, 8.

Wohlstetter, R. (1962). Pearl Harbor: Warning and

Decision, Stanford, CA: Stanford University Press.

Wolpert, D. H. (2003). “Collective Intelligence,” in David

B. Fogel and Charles Robinson (eds.) Computational

Intelligence: The Experts Speak, New York: (IEEE)

Wiley, Chapter 17.

KMIS 2011 - International Conference on Knowledge Management and Information Sharing
