MULTI-REGULARIZATION PARAMETERS ESTIMATION

FOR GAUSSIAN MIXTURE CLASSIFIER BASED

ON MDL PRINCIPLE

Xiuling Zhou

1,2

, Ping Guo

1

and C. L. Philip Chen

3

1

The Laboratory of Image Processing and Pattern Recognition, Beijing Normal University, Beijing, 100875, China

2

Artificial Intelligence Institute, Beijing City University, Beijing, China

3

The Faculty of Science & Technology, University of Macau, Macau SAR, China

Keywords: Gaussian classifier, Covariance matrix estimation, Multi-regularization parameters selection, Minimum

description length.

Abstract: Regularization is a solution to solve the problem of unstable estimation of covariance matrix with a small

sample set in Gaussian classifier. And multi-regularization parameters estimation is more difficult than

single parameter estimation. In this paper, KLIM_L covariance matrix estimation is derived theoretically

based on MDL (minimum description length) principle for the small sample problem with high dimension.

KLIM_L is a generalization of KLIM (Kullback-Leibler information measure) which considers the local

difference in each dimension. Under the framework of MDL principle, multi-regularization parameters are

selected by the criterion of minimization the KL divergence and estimated simply and directly by point

estimation which is approximated by two-order Taylor expansion. It costs less computation time to estimate

the multi-regularization parameters in KLIM_L than in RDA (regularized discriminant analysis) and in

LOOC (leave-one-out covariance matrix estimate) where cross validation technique is adopted. And higher

classification accuracy is achieved by the proposed KLIM_L estimator in experiment.

1 INTRODUCTION

Gaussian mixture model (GMM) has been widely

used in real pattern recognition problem for

clustering and classification, where the maximum

likelihood criterion is adopted to estimate the model

parameters with the training samples (Bishop, 2007)

(Everitt, 1981). However, it often suffers from small

sample size problem with high dimensional data. In

this case, for d-dimensional data, if less than d+1

training samples from each class is available, the

sample covariance matrix estimate in Gaussian

classifier is singular. And this can lead to lower

classification accuracy.

Regularization is a solution to this kind of

problem. Shrinkage and regularized covariance

estimators are examples of such techniques.

Shrinkage estimators are a widely used class of

estimators which regularize the covariance matrix by

shrinking it toward some positive definite target

structures, such as the identity matrix or the diagonal

of the sample covariance (Friedman, 1989);

(Hoffbeck, 1996); (Schafer, 2005); (Srivastava,

2007). More recently, a number of methods have

been proposed for regularizing the covariance

estimate by constraining the estimate of the

covariance or its inverse to be sparse (Bickel, 2008);

(Friedman, 2008); (Cao, 2011).

The above regularization methods mainly

concern various mixture of sample covariance

matrix, common covariance matrix and identity

matrix or constraint the estimate of the covariance or

its inverse to be sparse. In these methods, the

regularization parameters are required to be

determined by cross validation technique. Although

the regularization methods have been successfully

applied for classifying small-number data with some

heuristic approximations (Friedman, 1989);

(Hoffbeck, 1996), the selection of regularization

parameters by cross validation technique is very

computation-expensive. Moreover, cross-validation

performance is not always well in the selection of

linear models in some cases (Rivals, 1999).

Recently, a covariance matrix estimator called

Kullback-Leibler information measure (KLIM) is

developed based on minimum description length

112

Zhou X., Guo P. and Chen C..

MULTI-REGULARIZATION PARAMETERS ESTIMATION FOR GAUSSIAN MIXTURE CLASSIFIER BASED ON MDL PRINCIPLE.

DOI: 10.5220/0003669301120117

In Proceedings of the International Conference on Neural Computation Theory and Applications (NCTA-2011), pages 112-117

ISBN: 978-989-8425-84-3

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

(MDL) principle for small number samples with

high dimension data (Guo, 2008). The KLIM

estimator is derived theoretically by KL divergence.

And a formula for fast estimation the regularization

parameter is derived. However, since multi-

parameters optimization is more difficult than single

parameter optimization, only a special case that the

regularization parameters are taken the same value

for all dimensions is considered in KLIM. Though

estimation of regularization parameter becomes

simple, the accuracy of covariance matrix estimation

is decreased by ignore the local difference in each

dimension. This will result in decreasing the

classification accuracy of Gaussian classifier finally.

In this paper, KLIM is generalized to KLIM_L

which considers the local difference in each

dimension. Based on MDL principle, the KLIM_L

covariance matrix estimation is derived for the small

sample problem with high dimension. Multi-

regularization parameters in each dimension are

selected by the criterion of minimization the KL

divergence and estimated efficiently by two-order

Taylor expansion. The feasibility and efficiency of

KLIM_L are shown by the experiments.

2 THEORETICAL

BACKGROUND

2.1 Gaussian Mixture Classifier

Given a data set

1

{}

N

ii

D

x which will be classified.

Assume that the data point in

D is sampled from a

Gaussian mixture model which has k component:

1

,(, ) ( , )

k

jjj

j

pG

mxxΣ

with 0

j

,

1

1

k

j

j

,

(1)

where

T1

/2 1/2

,

11

(,) exp{( ) ( )}

2(2 ) | |

jj j j j

d

j

G

mmmx Σ x Σ x

Σ

(2)

is a general multivariate Gaussian density function.

x

is a random vector, the dimension of

x

is d and

1

{, , }

k

j

jjj

m Σ is a parameter vector set of

Gaussian mixture model.

In the case of Gaussian mixture model, the

Bayesian decision rule

arg max ( | , )

j

jpj

x is

adopted to classify the vector

x

into class j

with

the largest posterior probability

(|, )pj x . After

model parameters

estimated by the maximum

likelihood (ML) method with expectation-

maximization (EM) algorithm (Redner, 1984), the

posterior probability can be written in the form:

,

ˆ

ˆ

ˆ

(,)

ˆ

(|, )

ˆ

(, )

jjj

G

pj

p

mx Σ

x

x

,

1, 2, ,jk

.

(3)

And the classification rule becomes:

arg min ( )

jj

jd

x , 1, 2, ,jk ,

(4)

where

T1

ˆˆ

ˆ

ˆ

ˆ

()()()ln||2ln

jjjjjj

d

X mmx Σ x Σ

.

(5)

This equation is often called the discriminant

function for the class

j (Aeberhard, 1994).

Since clustering is more general than

classification in the mixture model analysis case, we

consider the general case in the following.

2.2 Covariance Matrix Estimation

The central idea of the MDL principle is to represent

an entire class of probability distributions as models

by a single “universal” representative model, such

that it would be able to imitate the behaviour of any

model in the class. The best model class for a set of

observed data is the one whose representative

permits the shortest coding of the data. The MDL

estimates of both the parameters and their total

number are consistent; i.e., the estimates converge

and the limit specifies the data generating model

(Rissanen, 1978); (Barron, 1998). The codelength

(probability distribution or a model) criterion of

MDL involves in the KL divergence (Kullback,

1959).

Now considering a given sample data set

1

{}

N

ii

D

x generated from an unknown density

()p X , it can be modelled by a finite Gaussian

mixture density

(, )p

x , where is the parameter

set. In the absence of knowledge of

()p x

, it may be

estimated by an empirical kernel density estimate

()

h

p x obtained from the data set. Because these two

probability densities describe the same unknown

density

()p x , they should be best matched with

proper mixture parameters and smoothing

parameters. According to MDL principle, the model

parameters should be estimated with minimized KL

divergence

(, )KL h

based on the given data drawn

from the unknown density

()p x

(Kullback, 1959),

MULTI-REGULARIZATION PARAMETERS ESTIMATION FOR GAUSSIAN MIXTURE CLASSIFIER BASED ON

MDL PRINCIPLE

113

()

()ln

(, ) d

(, )

h

h

p

KL h p

p

x

xx

x

(6)

with

1

1

/2 1/2

1

() ,

1

(,)

11

exp ( ) ( )

2

(2 ) | |

N

hih

i

N

T

ih i

d

i

h

pG

N

N

xxxW

xx W xx

W

.

(7)

Here

h

W is a dd dimensional diagonal matrix

with a general form,

12

(, , , )

hd

diag h h hW ,

(8)

where

i

h , 1, 2, ,id are smoothing parameters

(or regularization parameters) in the nonparametric

kernel density. In the following this set is denoted

as

1

{}

d

ii

hh

. The Eq. (6) equals to zero if and only

if

() (, )

h

pp xx.

If the limit

0h , the kernel density function

()

h

p x becomes a

function, then Eq. (6) reduces

to the negative log likelihood function. So the

ordinary EM algorithm can be re-derived based on

the minimization of this KL divergence function

with the limit

0h . The ML-estimated parameters

are shown as follows:

1

ˆ

1

ˆ

(| , )

N

jii

i

j

pj

n

m xx,

T

1

ˆˆ

1

ˆˆ

(|,)()()

N

jiijij

i

j

pj

n

mmΣ xx x .

(9)

The covariance matrix estimation for the

limit

0h

is shown as follows. By minimizing Eq.

(6) with respect to

j

Σ

, i.e., setting

(, )/ 0

j

KL hΣ , the following covariance

matrix estimation formula can be obtained:

T

()

()

ˆˆ

ˆ

(|, )( )( )d

ˆ

ˆ

(|, )d

hjj

j

h

ppj

ppj

mmxxx x x

Σ

xxx

.

(10)

In this case, the Taylor expansion is used for

(|, )pj x at

i

xxwith respect to x and it is

expanded to first order approximation:

T

ˆˆ

ˆ

(|, ) (| , ) ( ) (| , )

iixi

pj pj pj xxxxx

(11)

with

ˆˆ

(| , ) (|, )|

i

xi x

pj pj

xx

xx.

On substituting the above equation into Eq. (10)

and according to the properties of probability density

function, the following approximation is finally

derived:

ˆ

()

jhj

h Σ W Σ .

(12)

The estimation in Eq. (12) is called as KLIM_L in

the paper, where

ˆ

j

Σ

is the ML estimation

when

0

h

, taking the form of Eq. (9).

3 MULTI- REGULARIZATION

PARAMETERS ESTIMATION

BASED ON MDL

3.1 Regularization Parameters

Selection

The regularization parameter set h in the Gaussian

kernel density plays an important role in estimating

the mixture model parameter. Different

h

will

generate different models. So selecting the

regularization parameters is a model selection

problem. In the paper the similar method as in (Guo,

2008) is adopted based on MDL principle to select

the regularization parameters in KLIM_L.

According to the principle of MDL, it should be

with the shortest codelength to select a model. When

0h

, the regularization parameters h can be

estimated with the minimized KL divergence

regarding

h with ML estimated parameter

ˆ

,

*

arg min ( )hJh ,

ˆ

() (, )Jh KLh

. (13)

Now a second order approximation for estimating

the regularization parameter

h is adopted here.

Rewrite the

()

J

h as:

0

() () ()

e

J

hJhJh

,

(14)

where

0

()() ln (, )d

h

Jh p p

xxx

,

() ()() ln d

ehh

Jh p p

xxx

.

Replacing

ln ( , )p

x with the second order term of

Taylor expansion into the integral of

0

()

J

h and

resulting in the following approximation of

0

()

J

h ,

0

1

1

1

() ln(,)

1

trace( ( ln ( , )))

2

N

i

i

N

hi

i

Jh p

N

p

N

xx

x

Wx

(15)

For very sparse data distribution, the following

approximation can be used:

NCTA 2011 - International Conference on Neural Computation Theory and Applications

114

1

1

T1

() () , ,

,

11

ln ( , ) ln ( , )

11

( , )[ ln ln 2 ln | |

22

1

() ()]

2

N

h h ih ih

i

N

ih h

i

ih i

pp G G

NN

d

GN

N

xx xxW xxW

xx W W

xx W xx

.

(16)

Substituting the Eq. (16) into ()

e

J

h , it can be got:

() ()() ln d

1

ln ln 2 ln | |

22 2

ehh

h

Jh p p

dd

N

xxx

W

.

(17)

So far, the approximation formula of ()

J

h is

obtained:

1

1

( ) trace( ( ln ( , )))

2

1

ln | |

2

N

hi

i

h

Jh p

N

C

xx

Wx

W

,

(18)

where C is a constant irrelevant to h .

Let

ln ( , )

dd i

Hp

xx

x . Taking partial

derivative of

()

J

h to

h

W and letting it be equal to

zero, the rough approximation formula of

h is

obtained as follows:

1

1

1

()

N

h

i

diag H

N

W .

(19)

3.2 Approximation for Regularization

Parameters

The Eq. (19) can be rewritten as follows:

1

11

11

() ( ln(,))

NN

h i

ii

diag H diag p

NN

xx

Wx

with

1

11 T1

11

1T1

11

ln ( , )

{(|,)[ ( )( ) ]

(| , ) ( ) (| , )( ) }

N

i

i

Nk

ijjijijj

ij

kk

ijij iijj

jj

p

pj

pj pj

xx

mm

mm

x

x ΣΣxx Σ

x Σ xxxΣ

Considering hard-cut case (

(| , ) 1or0

i

pj x ) and

using the approximations

T

1

ˆ

(| , )( )( )

N

iijij jj

i

pj n

mmxx x Σ

,

1

(| , )

N

ij

i

pj n

x ,

1

ˆ

jj

I

ΣΣ and

11

1

k

jj

j

ΣΣ,

it can be obtained:

11

()

h

diag

W Σ .

(20)

Suppose the eigenvalues and eigenvectors of the

common covariance matrix

Σ are

k

and

k

,

1

kd

, where

T

12

(, ,, )

kkk kd

, then there

exists:

1

d

T

kkk

k

Σ ,

11

1

d

T

kkk

k

Σ .

(21)

Substituting Eq. (21) into Eq. (20) and using the

average eigenvalue

1

()/trace()/

d

i

i

dd

Σ

to

substitute each eigenvalue of matrix

Σ

(only in the

denominator), it can be obtained:

2

1

2

()

trace ( )

h

d

diag

W Σ

Σ

with

[]

ij d d

Σ

.

Finally, the regularization parameters can be

approximated by the following equation:

2

2

11

trace ( ) 1 1

(,, )

h

dd

diag

d

Σ

W

.

(22)

3.3 Comparison of KLIM_L with

Regularization Methods

The four methods (KLIM_L, KLIM, RDA

(Regularized Discriminant Analysis, Friedman, 1989)

and LOOC (Leave-one-out covariance matrix

estimate, Hoffbeck, 1996)) are all regularization

methods to estimate the covariance matrix for small

sample size problem. They all consider ML

estimated covariance matrix with the additional

extra matrices.

KLIM_L is derived by the similar way as KLIM

under the framework of MDL principle. Meanwhile,

the estimation of regularization parameters is similar

to KLIM based on MDL principle. KLIM_L is a

generalization of KLIM. Multi-regularization

parameters are included and estimated in KLIM_L

while one regularization parameter is estimated in

KLIM. For every

ii

(

1

id

), if it is taken

by

1

trace( )

d

Σ

, then (trace( ) / )

hd

dW Σ I . It will

reduce to the case of

hd

hWI in KLIM,

where

trace( ) /hd

Σ .

KLIM_L is derived based on MDL principle

while RDA and LOOC are heuristically proposed.

They differ in mixtures of covariance matrix

considered and the criterion used to select the

regularization parameters.

MULTI-REGULARIZATION PARAMETERS ESTIMATION FOR GAUSSIAN MIXTURE CLASSIFIER BASED ON

MDL PRINCIPLE

115

Different computation time costs are required in

the four regularization discriminant methods. The

time costs of them are sorted decreasing in the

following order: KLIM, KLIM_L, LOOC and RDA.

This will be validated by the following experiments.

4 EXPERIMENT RESULTS

In this section, the classification accuracy and time

cost of KLIM_L are compared with LDA (Linear

Discriminant Analysis, Aeberhard, 1994), RDA,

LOOC and KLIM on COIL-20 object data (Nene,

1996).

COIL-20 is a database of gray-scale images of 20

objects. The objects were placed on a motorized

turntable against a black background. The turntable

was rotated through 360 degrees to vary object pose

with respect to a fix camera. Images of the objects

were taken at pose intervals of 5 degrees, which

corresponds to 72 images per object. The total

number of images is 1440 and the size of each image

is 12

8×128.

In the experiment, the regularization parameter

h of KLIM is estimated by trace( ) /hd Σ . The

parameter matrix

h

W of KLIM_L is estimated by

Eq. (22). In RDA, the values of

and

are

sampled in a coarse grid, (0.0, 0.25, 0.50, 0.75, 1.0),

resulting in 25 data points. In LOOC, the four

parameters are taken according to the table in

(Hoffbeck, 1996). Six images are randomly selected

as training samples from each class to estimate the

mean and covariance matrix. And the remaining

images are employed as testing samples to verify the

classification accuracy. Since the dimension of

image data is very high of 128×128, PCA is adopted

here to reduce the data dimension. Experiments are

performed with five different numbers of

dimensions. Each experiment runs 25 times, and the

mean and standard deviation of classification

accuracy are reported as results. The results of

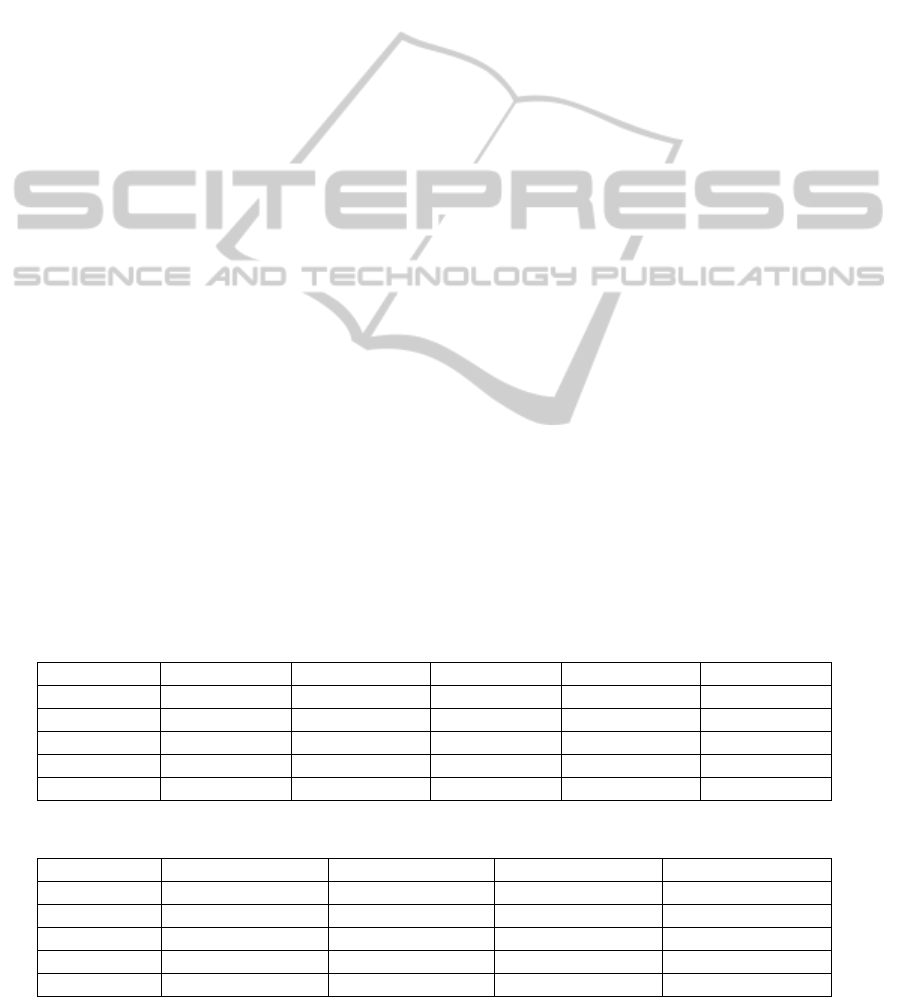

experiment are shown in table 1 table 2.

In the experiment, the classification accuracy of

KLIM_L is the best among the five compared

methods, while the classification accuracy of KLIM

is the second best. The classification accuracy of

LOOC is the worst among the compared methods

except in dimension 80, where the classification

accuracy of LOOC is higher than that of LDA.

Considering the time cost of regularization

parameters estimating, KLIM_L needs a little more

time to estimate the regularization parameters than

KLIM needs, while RDA and LOOC need much

more time than KLIM_L needs. The experimental

results are consistent with the theoretical analysis.

5 CONCLUSIONS

In this paper, the KLIM_L covariance matrix

estimation is derived based on MDL principle for

the small sample problem with high dimension.

Under the framework of MDL principle, multi-

regularization parameters are estimated simply and

directly by point estimation which is approximated

by two-order Taylor expansion. KLIM_L is a

generalization of KLIM. With the KL information

measure, total samples can be used to estimate the

regularization parameters in KLIM_L, making it less

computation-expensive than using leave-one-out

cross-validation method in RDA and LOOC.

Table 1: Mean classification accuracy on COIL-20 object database.

classifier LDA RDA LOOC KLIM KLIM_L

80 81.6(2.6) 87.2(2.2) 82.2(1.8) 87.7(1.8) 90.0(2.2)

70 83.6(2.2) 86.4(1.8) 81.2(2.8) 87.0(1.7) 87.9(1.8)

60 85.0(2.2) 86.8(2.2) 82.8(2.3) 87.7(1.4) 88.1(1.6)

50 85.0(2.5) 86.3(2.4) 80.3(2.9) 87.5(2.0) 87.8(2.3)

40 86.9(1.7) 86.9(1.7) 80.6(3.2) 87.6(1.3) 87.6(1.6)

Table 2: Time cost (in seconds) of estimating regularization parameters on COIL-20 object database.

classifier RDA LOOC KLIM KLIM_L

80 62.0494 1.8286 3.8042e-005 8.1066e-005

70 48.8565 1.5147 3.4419e-005 7.8802e-005

60 34.2189 1.0275 2.9890e-005 5.2534e-005

50 23.9743 0.7324 2.9890e-005 4.9817e-005

40 16.2443 0.4987 3.0796e-005 4.9364e-005

NCTA 2011 - International Conference on Neural Computation Theory and Applications

116

KLIM_L estimator achieves higher classification

accuracy than LDA, RDA, LOOC and KLIM

estimators on COIL-20 data set. In the future work,

the kernel method combined with these

regularization discriminant methods will be studied

for small sample problem with high dimension and

the selection of kernel parameters will be

investigated under some criterion.

ACKNOWLEDGEMENTS

The research work described in this paper was fully

supported by the grants from the National Natural

Science Foundation of China (Project No.

90820010, 60911130513). Prof. Guo is the author to

whom the correspondence should be addressed, his

e-mail address is pguo@ieee.org

REFERENCES

Bishop, C. M., 2007. Pattern recognition and machine

learning, Springer-Verlag New York, Inc. Secaucus,

NJ, USA.

Everitt, B. S., Hand, D., 1981. Finite Mixture

Distributions, Chapman and Hall, London.

Friedman, J. H., 1989. Regularized discriminant analysis,

Journal of the American Statistical Association, vol.

84, no. 405, 165–175.

Hoffbeck, J. P. and Landgrebe, D. A., 1996. Covariance

matrix estimation and classification with limited

training data, IEEE Transactions on Pattern Analysis

and Machine Intelligence, vol. 18, no. 7, 763–767.

Schafer, J. and Strimmer, K., 2005. A shrinkage approach

to large-scale covariance matrix estimation and

implications for functional genomics, Statistical

Applications in Genetics and Molecular Biology, vol.

4, no. 1.

Srivastava, S., Gupta, M. R., Frigyik, B. A., 2007.

Bayesian quadratic discriminant analysis, J. Mach.

Learning Res. 8, 1277

–1305.

Bickel, P. J. and Levina, E., 2008. Regularized estimation

of large covariance matrices, Annals of Statistics, vol.

36, no. 1, 199–227.

Friedman, J., Hastie, T., and Tibshirani, R., 2008. Sparse

inverse covariance estimation with the graphical lasso,

Biostatistics, vol. 9, no. 3, 432–441.

Cao, G., Bachega, L. R., Bouman, C. A., 2011. The Sparse

Matrix Transform for Covariance Estimation and

Analysis of High Dimensional Signals. IEEE

Transactions on Image Processing, Volume 20, Issue

3, 625 – 640.

Rivals, I., Personnaz, L., 1999. On cross validation for

model selection, Neural Comput. 11,863

–870.

Guo, P., Jia, Y., and Lyu, M. R., 2008. A study of

regularized Gaussian classifier in high-dimension

small sample set case based on MDL principle with

application to spectrum recognition, Pattern

Recognition, Vol. 41, 2842~2854.

Redner, R. A., Walker, H. F., 1984. Mixture densities,

maximum likelihood and the EM algorithm, SIAM

Rev. 26, 195

–239.

Aeberhard, S., Coomans, de Vel, D., O., 1994.

Comparative analysis of statistical pattern recognition

methods in high dimensional settings, Pattern

Recognition 27 (8), 1065

–1077.

Rissanen, J., 1978. Modeling by shortest data description,

Automatica 14, 465

–471.

Barron, A., Rissanen, J., Yu, B., 1998. The minimum

description length principle in coding and modeling,

IEEE Trans. Inform. Theory 44 (6), 2743

–2760.

Kullback, S., 1959. Information Theory and Statistics,

Wiley, New York.

Nene, S. A., Nayar, S. K. and Murase, H., 1996. Columbia

Object Image library(COIL-20). Technical report

CUCS-005-96.

MULTI-REGULARIZATION PARAMETERS ESTIMATION FOR GAUSSIAN MIXTURE CLASSIFIER BASED ON

MDL PRINCIPLE

117