STACKED CONDITIONAL RANDOM FIELDS EXPLOITING

STRUCTURAL CONSISTENCIES

Peter Kluegl

1,2

, Martin Toepfer

1

, Florian Lemmerich

1

, Andreas Hotho

1

and Frank Puppe

1

1

Department of Computer Science VI, University of W¨urzburg, Am Hubland, W¨urzburg, Germany

2

Comprehensive Heart Failure Center, University of W¨urzburg, Straubm¨uhlweg 2a, W¨urzburg, Germany

Keywords:

CRF, Stacked graphical models, Structural consistencies, Collective information extraction, Rule learning.

Abstract:

Conditional Random Fields (CRF) are popular methods for labeling unstructured or textual data. Like many

machine learning approaches these undirected graphical models assume the instances to be independently

distributed. However, in real world applications data is grouped in a natural way, e.g., by its creation context.

The instances in each group often share additional consistencies in the structure of their information. This

paper proposes a domain-independent method for exploiting these consistencies by combining two CRFs in

a stacked learning framework. The approach incorporates three successive steps of inference: First, an initial

CRF processes single instances as usual. Next, we apply rule learning collectively on all labeled outputs of one

context to acquire descriptions of its specific properties. Finally, we utilize these descriptions as dynamic and

high quality features in an additional (stacked) CRF. The presented approach is evaluated with a real-world

dataset for the segmentation of references and achieves a significant reduction of the labeling error.

1 INTRODUCTION

The vast availability of unstructured and textual data

increased the interest in automatic sequence labeling

and content extraction methods over the last years.

One of the most popular techniques are Conditional

Random Fields (CRF) and their chain structured vari-

ant linear chain CRF. CRFs model conditional proba-

bilities with undirected graphs and are trained in a su-

pervised fashion to discriminate label sequences. Al-

though CRFs and related methods achieve remarkable

results, there remain many possibilities to increase

their accuracy.

One aspect of improvement has been the relax-

ation of the assumption that the instances are inde-

pendent and identically distributed. Relational and

non-local dependencies of instances or interesting en-

tities have been in the focus of collective informa-

tion extraction. Due to the fact that these dependen-

cies need to be represented in the model structure,

approximate inference techniques like Gibbs Sam-

pling (Finkel et al., 2005) or Belief Propagation (Sut-

ton and McCallum, 2004) are applied. They achieved

significant improvements, but at the cost of a compu-

tationally expensive inference. It has been shown by

several approaches that combined models based only

on local features and exact inference can match the

results of complex models while still being efficient.

Kou and Cohen (Kou and Cohen, 2007) used stacked

graphical learning to aggregate the output of a base

learner and to add additional features based on related

instances to a stacked model. Another example is Kr-

ishnan and Manning (Krishnan and Manning, 2006)

who exploit label consistencies with a two-stage CRF.

However, all these approaches take only similar to-

kens or related instances into account while the con-

sistencies of the structure are ignored.

Semi-structured text like any other data is always

created in a certain context. This may often lead

to structural consistencies between the instances in

this creation context. While these instances are lo-

cally homogeneously distributed in one context, the

dataset is globally still heterogeneous and the struc-

ture of information is possibly contradictory. The bib-

liographic section of a scientific publication, for ex-

ample, applies a single style guide and its instances,

that are the references, share a very similar structure,

while their structure might differ for different style

guides. Previously published approaches, c.f., (Gul-

hane et al., 2010) represent structural properties di-

rectly in a higher-order model and thus suffer from an

computationally expensive inference and furthermore

apply a domain-dependent model.

In this paper, we propose a novel and domain-

240

Kluegl P., Toepfer M., Lemmerich F., Hotho A. and Puppe F. (2012).

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES.

In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 240-249

DOI: 10.5220/0003706602400249

Copyright

c

SciTePress

independent method for exploiting structural consis-

tencies in textual data by combining two linear chain

CRFs in a stacked learning framework. After the in-

stances are initially labeled, a rule learning method is

applied on label transitions within one creation con-

text in order to identify their shared properties. The

stacked CRF is then supplemented with high quality

features that help to resolve possible ambiguities in

the data. We evaluate our approach with a real world

dataset for the segmentation of references, a domain

that is widely used to assess the performance of in-

formation extraction techniques. The results show a

significant reduction of the labeling error and confirm

the benefit of additional features induced online dur-

ing processing the data.

The rest of the paper is structured as follows: First,

Section 2 gives a short introduction in the background

of the applied techniques. Next, Section 3 describes

how structural consistencies can be exploited with

stacked CRFs. The experimental results are presented

and discussed in Section 4. Section 5 gives a short

overview of the related work and Section 6 concludes

with a summary of the presented work.

2 BACKGROUND

The presented method combines ideas of linear chain

Conditional Random Fields (CRF), stacked graphi-

cal models and rule learning approaches. Thus, these

techniques are shortly introduced in this section.

2.1 Linear Chain Conditional Random

Fields

Linear chain CRF (Lafferty et al., 2001) are a chain

structured case of discriminative probabilistic graph-

ical models. The chain structure fits well with se-

quence labeling tasks, naturally reflecting the inher-

ent structure of the data while providing efficient in-

ference. By modeling conditional distributions, CRFs

are capable of handling large numbers of possibly in-

terdependent features.

Let x be a sequence of tokens x = (x

1

, . . . , x

T

) re-

ferring to observations, e.g. the input text split into

lexical units, and y = (y

1

, . . . , y

T

) a sequence of la-

bels assigned to the tokens. Taking x and y as argu-

ments, let f

1

, . . . , f

K

be real valued functions, called

feature functions. To keep the model small, we re-

strict the linear chain CRF to be of Markov order one,

i.e. the feature functions have the form f

i

(x, y) =

∑

t

f

i

(x, y

t−1

, y

t

, t). A linear chain CRF of Markov or-

der one has K model parameters λ

1

, . . . , λ

K

∈ R, one

for each feature function, by which it assigns the con-

ditional probability

P

λ

(y|x) =

1

Z

x

exp

T

∑

t=1

K

∑

i=1

λ

i

· f

i

(y

t−1

, y

t

, x, t)

!

,

to y with a given observation x. The feature func-

tions typically indicate certain properties of the input,

e.g. capitalization or the presence of numbers, while

the model parameters weight the impact of the feature

functions on the inference. The partition function Z

x

normalizes P

λ

(y|x) by summing over all possible la-

bel sequences for x. The properties of a token x

t

in-

dicated by feature functions usually consider a small

fixed sized window around x

t

for a given state transi-

tion. In the following, we will use the terms feature

and feature function interchangeably.

2.2 Stacked Graphical Models

Stacked Graphical Models is a general meta learn-

ing algorithm, c.f., (Kou and Cohen, 2007; Krishnan

and Manning, 2006). First, the data is processed by

a base learner with conventional features represent-

ing local characteristics. Subsequently, every single

data instance is expanded by information about the

inferred labels of related instances. In a second stage,

a stacked learner is provided this extra information.

The process of aggregating and projecting the pre-

dicted information on instance features to support an-

other stacked learner can be repeated several times.

Stacked Graphical Models have two central ad-

vantages: The approach enables to model long range

dependencies among related instances and the infer-

ence for each learner remains effective. If the base

learner and the stacked learner are both linear chain

CRFs, then the inference time is only twice the time

of a single CRF plus the effort to determine the aggre-

gate information.

Similar to Wolpert’s Stacked Generalization

(Wolpert, 1992), Stacked Graphical Models use a

cross-fold technique in order to avoid overfitting.

That is, each instance x of the training data for the

stacked learner F

k

at level k, is classified by a model

for F

k−1

that was trained on data that did not contain

x. As a result, the stacked learners get to know real-

istic errors of their input components that would also

occur during runtime. However, Stacked Generaliza-

tion and Stacked Graphical Models are essentially dif-

ferent approaches. In short, Stacked Generalization

learns a stacked learner to combine the output of sev-

eral different base learners on a per instance basis. In

contrast, Stacked Graphical Models utilize a stacked

learner to aggregate and combine the output of one

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES

241

base learner on several instances, thus supporting col-

lective inference.

2.3 Rule Learning

In this paper, we propose to utilize rule-based descrip-

tions as an intermediate step of our general approach,

cf. Section 3. For this task we will transfer the data

into a tabular form of attribute value pairs and learn

rules on this data representation. While over the last

decades a large amount of rule learning approaches

have been proposed, we will concentrate on two main

approaches in this paper:

Ripper (Cohen, 1995) is probably the currently

most popular learning algorithm for learning a set of

rules. Ripper learns rules one at a time by growing

and pruning each rule and then adds them to a result

set until a stop criterion is met. After adding a rule

to the result, examples covered by this rule are then

removed from the training data. Ripper is known to

be on par regarding classification performance with

other learning algorithms for rule sets, e.g., C 4.5, but

is computationally more efficient.

As an alternative, we utilize Subgroup Discov-

ery (Kl¨osgen, 1996) (also called Supervised Descrip-

tive Rule Discovery or Pattern Mining) to describe

structural consistencies. In this approach, an exhaus-

tive search for the best k conjunctive patterns in the

dataset with respect to a pre-specified target concept

and a quality function, e.g., the F

1

measure, is per-

formed. Additionally different constraints on the re-

sulting patterns can be applied, e.g., on the maximum

number of describing attribute value pairs or the min-

imum support for a rule. While the resulting rules are

not intended to be used directly as a classifier, a re-

lated approach using patterns based on improvement

of the target share and additional constraints has re-

cently been successfully applied as an intermediate

feature construction step for classification tasks (Batal

and Hauskrecht, 2010).

3 METHOD

For introducing the proposed method, we first moti-

vate the problem. Then, the stacked inference, the in-

duction of the structural properties and the parameter

estimation are presented.

3.1 Problem Description

Recap the inference formula of CRFs (c.f. Sec-

tion 2.1). From the model designers’ perspective,

the classification process is mainly influenced by the

choice of the feature functions f

i

. The feature func-

tions need to provide valuable information to dis-

criminate labels for all possible kinds of instances.

This works well when the feature functions encode

properties that have the same meaning for inference

across arbitrary instances. For example in the domain

of reference segmentation, some special words have

a strong indicative meaning for a certain task: The

word identity feature “WORD=proceedings” always

suggests labeling the token as “Booktitle”. Thus, the

learning algorithm will fix the corresponding weights

to high values, leading the inference procedure into

the right direction. Some features, however, violate

the assumption of a consistent meaning. Their va-

lidity depends on a special context or is restricted

through long range dependencies. In our example of

reference extraction, the feature that indicates colons

might suggest an author label if the document finishes

author fields with colons. However, other style guides

define a different structure of the author labels. Con-

sequently, the learning algorithm assigns the weights

to average the overall meaning. On the one hand,

this yields good generalization given enough training

data. On the other hand, averagingthe weights of such

features restricts them to stay behind their discrimina-

tive potentials. If we knew that a certain feature has a

special meaning inside the given context, we could do

better by increasing (or decreasing) the weights, dy-

namically adapting to the given context. This proce-

dure on some particular features cannot be performed

independently of the remaining weights. Hence, we

apply a different approach in this paper. Instead of

changing the model parameters, we learn the weights

of additional feature functions describing these struc-

tural and context dependent consistencies.

Structural consistencies can be found in many do-

mains, especially when several instances are created

within one creation context. Besides the already men-

tioned segmentation of references, where the knowl-

edge about the applied style guide can greatly in-

crease the accuracy, there are many other examples.

The segmentation of physicians’ letters severely de-

pends on the identification of headlines. Each author

applies different and often contradictory layouts for

the headlines using word processing software. How-

ever, the headlines within a letter rely always on an

identical structure. By providing information about

this consistency additionally to common keywords,

the headlines can be accurately identified. As an-

other example, the labeling of interesting entities in

curriculum vitae, e.g., the employer, relies on large

dictionaries. Their incompleteness can be neglected

when exploiting the uniform composition of one cur-

riculum vitae. Besides these examples there are many

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

242

other domains like content extraction from websites

or recognition of handwriting that allow an increase

of accuracy by exploiting structural consistencies.

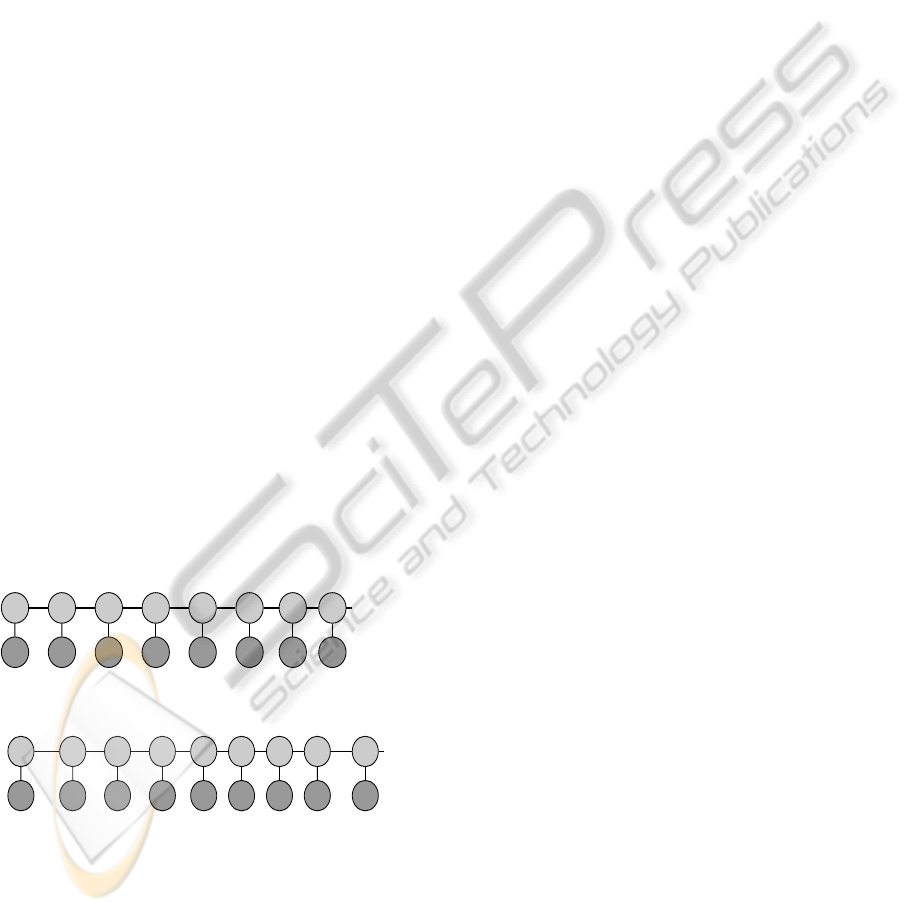

3.2 Stacked Inference

Sequence labeling methods like CRFs assign a se-

quence of labels y = (y

1

, . . . , y

T

) to a given sequence

of observed tokens x = (x

1

, . . . , x

T

). Figure 1 con-

tains two examples to illustrate the assignment. Let

crf(x, Λ, F) = y be the function that applies the CRF

model with the weights Λ = {λ

1

, . . . , λ

K

} and the set

of feature functions F = { f

1

, . . . , f

K

} on the input se-

quence x and returns the labeling result y. The set of

model weights must of course correspond to the set

of feature functions. Since the CRF processes this se-

quence of tokens in one labeling task, we call x an

instance. All instances together form the dataset D

which is split in a disjoint training and testing sub-

set. An information or entity consists often of several

tokens and are encoded by a sequence of equal la-

bels. We assume here that the given labels already

specify an unambiguous encoding. An instance it-

self may contain multiple entities specified by arbi-

trary amount of labels, one label for each token of

the input sequences. Furthermore, we assume that

the dataset D = {C

1

, . . . , C

n

} can be completely and

disjointly partitioned into subsets of instances x that

originate from the same creation context C

i

. Similar

to the relational template in (Kou and Cohen, 2007),

we imply that a trivial context template exists for the

assignment of the context set. Staying with the previ-

ous examples, the reference section of this paper de-

fines a context C with 22 instances.

Author Author Author Author Author Author

Date Date

.. .

y

1

y

2

y

3

y

4

y

5

y

6

y

7

y

8

.. .

x

1

x

2

x

3

x

4

x

5

x

6

x

7

x

8

.. .

Cohen

,

W . W .

(

1995

.. .

Author Author Author Author

Date Date Date Date

Title

.. .

y

1

y

2

y

3

y

4

y

5

y

6

y

7

y

8

y

9

.. .

x

1

x

2

x

3

x

4

x

5

x

6

x

7

x

8

x

9

.. .

McCallum

,

A

.

(

2003

)

.

Efficiently

.. .

Figure 1: The start of the fourth and the fourteenth reference

of the reference section of this paper displayed as a linear

chain with correct labels. The indices for x and y start at 1

for each instance.

In stacked graphical learning, several models can

be stacked in a sequence. Experimental results, e.g.,

of Kou (Kou and Cohen, 2007), have shown that this

approach already convergeswith a depth of two learn-

ers and no significant improvementsare achievedwith

more iterations of stacking. Therefore, we only ap-

ply stacked graphical learning with CRF in a two-

stage approach like Krishnan and Manning (Krish-

nan and Manning, 2006). In order to extract enti-

ties collectively, we define the stacked inference task

on the complete set of instances x in one context C.

The two CRFs, however, label the single instances

within that context separately as usual. The follow-

ing algorithm summarizes the stacked inference com-

bined with online rule learning. Section 3.3 describes

the rule learning techniques for the identification of

structural consistencies and how the “meta-features”

f

m

are induced. Details about the estimation of the

weights (e.g., Λ

m

) are discussed in Section 3.4.

1. Apply base CRF

Apply crf (x, Λ, F) = ˆy on all instances x ∈ C in

order to create the initial label sequences ˆy.

2. Learn structural consistencies

Create a tabular database T by combining all in-

stances x ∈ C, their corresponding labeled se-

quences ˆy and a feature set F

′

⊆ F. Learn classifi-

cation rules for the target attributes and construct

a feature function f

m

∈ F

m

for each discovered

rule.

3. Apply stacked CRF

Apply crf(x, Λ∪ Λ

m

, F ∪ F

m

) = y again on all in-

stances x ∈ C in order to create the final label se-

quences y.

3.3 Learning Structural Consistencies

during Inference

First, the overall idea how structural consistencies are

learned during the inference is addressed. The techni-

cal details are then described after a short example.

We apply rule learning techniques on all (probably

erroneously) label assignments ˆy∈ C of the base CRF.

The rules are learned in order to classify certain label

transitions and, thus, describe the shared properties of

the transition within the context C. The labeling er-

ror in the input data is usually eliminated by the gen-

eralization of the rule learning algorithm. The label

transition is optimally described by a single pattern

that covers the majority of transitions despite of erro-

neously outliers. The learned rules are then used as

binary feature functions in the same context C: They

return 1 if the the rule applies on the observed token

x

t

, and 0 otherwise. We gain therefore additional fea-

tures that indicate label transitions if the instances are

consistently structured. Even if the learned rules are

misleading due to erroneously input data or missing

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES

243

Author Author Author Author Author Author Author Author Author Author Title

.. .

Author Author Author Author

Date Date

Booktitle

.. .

y

1

y

2

y

3

y

4

y

5

y

6

y

7

y

8

y

9

y

10

y

11

.. .

y

38

y

39

y

40

y

41

y

42

y

43

y

44

.. .

x

1

x

2

x

3

x

4

x

5

x

6

x

7

x

8

x

9

x

10

x

11

.. .

x

38

x

39

x

40

x

41

x

42

x

43

x

44

.. .

Kl¨osgen

,

W .

(

1996

)

.

Explora

:

A

.. .

,

R

.

,

editors

,

Advances

.. .

Figure 2: Two excerpts of the ninth reference with erroneous labeling: The date (token x

5

to x

8

) and the begin of the title

(token x

9

and x

10

) was falsely labeled as author, e.g., due to the high weight of the colon for the end of an author. The editor

was additionally labeled as an author (up to token x

41

) and date (token x

42

and x

43

).

consistency of the instances, their discriminative im-

pact on the inference is yet weighted by the learning

algorithm of the stacked CRF.

This process is illustrated by a simple example

concerning the author label, but can also be applied

to any other label. Let the reference section of this

paper be processed by the base CRF that classified all

instances but one correctly as in Figure 1. For some

reasons the base CRF missed the date and editor and

misclassified the tokens x

5

to x

10

and the tokens x

18

to

x

43

in the ninth reference (c.f. Figure 2). The input of

the rule learning now consists of 22 transitions from

author to date whereas one transition is incorrect. In

this case, a reasonable result of the rule learning is

the rule “if the token x

t

is a period and followed by a

parenthesis, then there is a transition from author to

date at t”. Converted to a feature function, this rule

returns 1 for token x

4

and 0 for all other tokens of the

reference in Figure 2. The weight of this new fea-

ture function is then estimated by the stacked CRF.

Therefore, the stacked CRFs’ likelihood of an transi-

tion from author to date is increased at the token x

4

and decreased at the token x

41

due to the presence or

absence of the meta-features.

In general, any classification method can be ap-

plied to learn indicators for the structural consisten-

cies. In this work, we restrict ourselves to tech-

niques for supervised descriptive rule discovery be-

cause their learning and inference algorithm are effi-

cient and the resulting rules can be interpreted. This

allows studies about the properties of good descrip-

tions of structural consistencies. We disregarded the

usage of the Support Vector Machines (Cortes and

Vapnik, 1995) because several models need to be

trained and executed during the stacked inference.

For inducing the meta-features, first one tabular

database T = (I, A) is created for each context C as

the input of the rule learning techniques described in

Section 2.3. The database is constructed using all in-

stances x ∈ C, their corresponding initially labeled

sequences ˆy and a feature set F

′

⊆ F. Each indi-

vidual of I corresponds to a single token of the in-

stances in C. The set of attributes A consists of the

possible labels and a superset of F

′

: When classifi-

cation methods are applied on sequential data, the at-

tributes are also added for a fixed window, e.g., the at-

tribute “WORD@-1=proceedings” indicates that the

token before the current individual equals the string

“proceedings”. Hence, this superset contains the fea-

ture functions F and additionally their manifestation

in window defined by the window size w. The cells

in the tabular database T are filled with binary values.

They are set to true if the feature or label occurs at

this token and to false otherwise.

In a next step, the target attributes for the rule

learning are specified. In this work, we apply the tran-

sition of two different labels. Here, the target attribute

is set on all transitions of two dedicated labels in the

initially labeled result ˆy of the context C.

Finally, the set of learned rules are transformed to

the set of binary feature functions F

m

that return true,

if the condition of the respective rule applies.

3.4 Parameter Estimation

The weights of two models need to be estimated for

the presented approach: the parameters of the base

model and of the stacked model. The base model

needs to be applied on the training instances for the

estimation of the weights of the stacked model, i.e.,

step 1 and step 2 of the stacked inference in Sec-

tion 3.2 need to be performed on the training set. If

the weights of the base model are estimated as usual

using the labeled training instances, then it produces

unrealistic prediction on these instances and the meta-

features of the stacked model are over fitted resulting

in a decrease of accuracy. Since the base model is op-

timized in this case on the training instances, it labels

these instances perfectly. The learned rules create op-

timal descriptions of the structural consistencies and

the stacked model assigns biased weights to the meta-

features. This is of course not reproducible when pro-

cessing unseen data.

The simple solution to this problem is a cross-

fold training of the base model for the training of the

stacked CRF as described in Section 2.2 and success-

fully applied by several approaches (Kou and Cohen,

2007; Krishnan and Manning, 2006). Training of the

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

244

base model in a cross-fold fashion is also a very good

solution for the presented approach, but we simply

decrease the accuracy of the model by reducing the

training iterations. Thus, only one model needs to be

trained for the learning phase of the stacked model.

For the testing phase or common application however,

a single base model learned with the default settings

is applied.

The model of the stacked CRF is trained depen-

dent on the base model and the creation context C

that are both applied to induce the new features on-

line during the stacked inference. The weights Λ =

{λ

1

, . . . , λ

K

} and Λ

m

= {λ

m

1

, . . . , λ

m

M

} of the stacked

CRF are estimated to maximize the conditional prob-

ability on the instances of the training dataset:

P

λ

y|x, C, crf(x, Λ

′

, F)

=

1

Z

x

exp

T

∑

t=1

K

∑

i=1

λ

i

· f

i

(y

t−1

, y

t

, x, t)

+

T

∑

t=1

M

∑

j=1

λ

m

j

· f

m

j

y

t−1

, y

t

, x, t, C, crf (x, Λ

′

, F)

!

The resulting model still relies on the normal fea-

tures functions but is extended with dynamic and high

quality features that help to resolve ambiguities and

substitute for other missing features. These meta-

features possess the same meaning in the complete

dataset, but change their interpretation or manifes-

tation dependent on the currently processed creation

context. They provide overall a very good descrip-

tion of the structural consistencies and are often alone

sufficient for a classification of the entities.

A short example: The induced feature function for

the transition of the author to the date are set to very

high weights for the corresponding state transition of

the learned model. As illustrated in the example of

Section 3.3, this feature function returns 1 in the refer-

ence section of this paper for a token which is a period

and is followed by a parenthesis. In other reference

sections with a different style guide applied, the fea-

ture function for this state transition returns 1, if the

token is a colon and is followed by a capitalized word.

However,both examples refer only to exactly one fea-

ture function that dynamically adapts to the currently

processed context.

4 EXPERIMENTAL RESULTS

The presented approach is evaluated in the domain

of reference segmentation. The common approach is

to separately process the instances, namely the refer-

ences. Within these references, the interesting entities

need to be identified. Since all tokens of a reference

are part of exactly one entity, one speaks of a segmen-

tation task. In this section, we introduce the overall

settings and present the experimental results.

4.1 Datasets

All available and commonly used datasets for the seg-

mentation of references are a listing of references

without their creation context and are thus not ap-

plicable for the evaluation of the presented approach.

Therefore, a newdataset was manually annotated with

the label set of Peng and McCallum (Peng and Mc-

Callum, 2004) concerning the fields Author, Bookti-

tle, Date, Editor, Institution, Journal, Location, Note,

Pages, Publisher, Tech, Title and Volume. The re-

sulting dataset contains 566 references in 23 docu-

ments extracted only of complete reference sections

of real publications. The amount of instances is com-

parable to previously published evaluations in this do-

main, c.f., (Peng and McCallum, 2004; Councill et al.,

2008).

Two different sets of features are used in the ex-

perimental study: The basic features are applied for

all evaluated models and correspond to the features

of well-known evaluations in this domain, c.f., (Peng

and McCallum, 2004; Councill et al., 2008). For an

extensive definition of the set of basic features, we

refer to the dataset that contains all applied basic fea-

tures. Only a part of the basic features is used for the

induction of the meta-features, omitting ngram and

token window features. This restriction is justified

with their minimal expressiveness for the identifica-

tion of the structure in relation to the increase of the

search space.

The dataset with all applied basic features can be

freely downloaded

1

.

4.2 Implementation Details

The machine learning toolkit Mallet

2

is used for an

implementationof the CRF in the presented approach.

For rule learning, we integrated two different meth-

ods. We chose a subgroup discovery implementation

3

because of the multifaceted configuration options that

allow a deep study of the approach’s limits. Addi-

tionally, we applied an established association rule

learner Ripper (Cohen, 1995) for a comparable im-

plemention

4

.

1

http://www.is.informatik.uni-wuerzburg.de/staff/

kluegl peter/research/

2

http://mallet.cs.umass.edu

3

http://sourceforge.net/projects/vikamine/

4

http://sourceforge.net/projects/weka/

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES

245

Table 1: Overview of the evaluated models.

CRF A single CRF trained on the same data and features.

STACKED CRF A two-stage CRF approach. The predictions of the base CRF are added as

features to the stacked CRF.

STACKED+DESCRIPTIVE The default approach of stacked CRF combined with subgroup discovery for

rule learning. Only transitions between the labels Author, Title, Date and Pages

that commonly occur in most references are considered.

STACKED+RIPPER A stacked CRF combined with the association rule learner Ripper. Only the

four most common labels are addressed.

STACKED+MORE A stacked approach using subgroup discovery that additionally learns the tran-

sitions of the labels Booktitle, Journal and Volume.

STACKED+MAX A stacked approach using subgroup discovery that considers the transitions of

all labels for the rule learning task.

We used only the default parameters for the CRF

and all evaluated models were trained until conver-

gence. Only for the training of the stacked model, the

iterations of the base model was reduced to 50 itera-

tions. For the default configuration of both rule learn-

ing tasks, we set the window size w = 1. Additionally

for the default setting of the subgroup discovery, we

used a quality function based on the F

1

measure, se-

lected only one rule for each description of a label,

restricted the length of the rules to maximal three se-

lectors, and set an overall minimum threshold of the

quality of a rule equal to 0.5.

The presented approach is overall easy to imple-

ment and only established standard methods are used.

Its inference is still efficient in contrast to complex

models with approximate inference techniques.

4.3 Performance Measure

The performance is measured with commonly used

methods of the domain. Let tp be the number of

true positive classified tokens and define f n and f p

respectively for false negatives and false positives.

Since punctuations contain no target information in

this domain, only alpha-numeric tokens are consid-

ered. Precision, recall and F

1

are computed by:

precision =

tp

tp+ f p

, recall =

tp

tp+ f n

,

F

1

=

2· precision· recall

precision+recall

.

4.4 Results

The presented approach is compared to two base line

models in a five fold cross evaluation. Four different

settings of stacked CRFs combined with a rule learn-

ing technique are investigated. A detailed description

of all evaluated models is given in Table 1. The docu-

ments of the dataset are randomly distributed over the

five folds.

The results of the experimental study are depicted

in Table 2. Only marginal differences can be ob-

served between the two base line models CRF and

STACKED CRF. This indicates that the normal stack-

ing approach cannot exploit the structural consisten-

cies or gain much advantage of the predicted labels.

Table 2: F

1

scores averaged over the five folds.

average F

1

Base Line

CRF 91.3

STACKED CRF 91.8

Our Approach

STACKED+DESCRIPTIVE 94.0

STACKED+RIPPER 93.6

Variants of DESCRIPTIVE

STACKED+MORE 94.1

STACKED+MAX 93.6

All of our stacked models combined with rule

learning techniques significantly outperform the base

line models using a one-sided, paired t-tests on the F

1

scores of the single references (p ≪ 0.01). Compar-

ing the results of STACKED+DESCRIPTIVE that only

considers the consistencies of four labels to the base

line CRF, our approach achieves an average error re-

duction of over 30% on the real-world dataset.

The lower F

1

scores of STACKED+RIPPER can be

explained by its learning algorithm. The Ripper im-

plementation applies a coverage-based learning in or-

der to create a set of rules, which together classify the

target attribute. This can lead to a reproduction of er-

rors of the predicted labels in the description of the

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

246

structure. In the domain of reference segmentation a

single description of the structure is preferable. How-

ever, in other domains where disjoint consistencies of

one transition can be found, a covering algorithm for

inducing the rules performs probably better.

The second configuration with a subgroup discov-

ering technique STACKED+MORE considers the tran-

sition between seven labels and is able to slightly in-

crease the measured F

1

score compared to our default

model STACKED+DESCRIPTIVE. STACKED+MAX

that induces rules for all labels achieves only an aver-

age error reduction of 26% compared to a single CRF.

This is mainly caused by misleading meta-features for

rare labels. The task of learning consistencies from a

minimal amount of examples is error-prone and can

decrease the accuracy, especially if the examples are

labeled incorrectly.

Table 3 provides closer insights in the benefit of

the presented approach using the author label as an

example. STACKED+DESCRIPTIVE is able is signifi-

cantly improve the labeling accuracy for all folds but

one. The third fold contains an unfavorable distribu-

tion of style guides between the training and testing

set for the author. If the initial base CRF labels a la-

bel systematically incorrectly, then the rule learning

cannot induce any valuable and correct descriptions

of the structure. Nevertheless, an average error reduc-

tion of over 50% is achievedfor identifying the author

of the reference.

Table 3: F

1

scores of the author label.

CRF STACKED+ error

DESCRIPTIVE reduction

Fold 1 97.7 99.6 82.6%

Fold 2 97.0 99.2 73.3%

Fold 3 96.4 96.5 2.8%

Fold 4 97.1 98.8 58.6%

Fold 5 89.5 95.1 53.3%

average 95.5 97.8 51.6%

To our knowledge, no domain-independent ap-

proach was published that can be utilized for a com-

parable model. As comparison, we applied the skip-

chain approach of (Sutton and McCallum, 2004) with

factors for capitalized words and additionally for

identical punctuation marks, but no improvementover

the base line models could be measured. Furthermore,

the feature induction for CRFs (McCallum, 2003) was

integrated, but resulted counter-intuitively in a de-

crease of the accuracy.

The performance time of the presented approach

for one fold averaged over the five folds is sev-

eral times faster than a higher-order model with skip

edges, about nine times faster using the subgroup dis-

covery and about fourteen times faster using Ripper.

The difference in speed is less compared to previously

published evaluations (Kou and Cohen, 2007). This is

mainly caused by the fact that the rule learning is nei-

ther optimized for this task nor for the domain, e.g.,

by pruning the attributes.

The presented approach significantly outperforms

the common CRF without any additional domain

knowledge, integrated matching methods with a bib-

liographic database or other jointly performed tasks

like entity resolution. Nevertheless, the approach

stays way behind its potential. The meta-features

specify most of the time a perfect classification of the

boundaries and transitions, but the stacked CRF still

labels the entities erroneously. To provide the knowl-

edge on a simple feature level may not sufficient to

adapt the model to the structure of the current creation

context.

5 RELATED WORK

In the following, we give a brief overview on re-

lated work coming from different domains with con-

text consistencies, attempts utilizing complex graph-

ical models and stacked graphical models for collec-

tive informationextraction, and approaches on feature

induction.

Especially for Named Entity Recognition (NER)

modelling long-distance dependencies is crucial. The

labeling of an entity is quite consistent within a given

document, however, conclusive discriminating fea-

tures are sparsely spread across the document. As a

consequence, leveraging predictions of one instance

to disambiguate others is essential. Bunescu et al.

(Bunescu and Mooney, 2004), Sutton et al. (Sutton

and McCallum, 2004) and Finkel et al. (Finkel et al.,

2005) extended the commonly applied linear chain

CRF to higher order structures. The exponential in-

crease in model complexity enforces to switch from

exact to approximate inference techniques. Stacked

graphical models (Kou and Cohen, 2007; Krishnan

and Manning, 2006) retain exact inference as well as

efficiency by using linear chain CRF.

In Kou and Cohen’s Stacked Graphical Models

framework (Kou and Cohen, 2007), information is

propagated by Relational Templates C. Although

each C may find related instances and aggregate their

labels in a possibly complex manner, they utilize

rather simple aggregators, e.g. COUNT and EX-

ISTS. Likewise, the approach of Krishnan and Man-

ning (Krishnan and Manning, 2006) uses straightfor-

ward but for NER efficient aggregate features, con-

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES

247

centrating on the label predictions of the same entity

in other instances. In contrast to Stacked Graphical

Models, they also include corpus-level features, ag-

gregating predictions across documents. In this paper,

we use data mining techniques to determine rich con-

text sensitively applied features. Rather than simply

transferring labels of related instances, e.g., by major-

ity vote aggregation, we exploit structural properties

of a given context. We represent the gathered context

knowledge by several meta features which are con-

ceptually independent of the label types.

A semi-supervised approach on exploiting struc-

tural consistencies of documents has been taken by

Arnold and Cohen (Arnoldand Cohen, 2008) who im-

prove domain adaption by conditional frequency in-

formation of the unlabeled data. They show that dif-

ferences in the frequency distribution of tokens across

different sections in biological research papers can

provide useful information to extract protein names.

Counting frequencies can be done efficiently and the

experimental results suggest that these features are

robust across documents. However, in general unla-

beled data is not enough to model the context struc-

ture, e.g., frequency information can be noisy or dif-

ferences in the frequency distribution may be caused

non-structural. We propose to mine the distributions

of predicted labels and their combinations with ob-

served features to capture context structure.

Yang et al. use structural consistencies for in-

formation extraction from web forums (Yang et al.,

2009). They employ Markov Logic Networks

(Richardson and Domingos, 2006) with formulas to

encode the assumed structural properties of a typical

forum page, e.g., characteristic link structures or tag

and attribute similarities among different posts and

sites. Since context structure is represented inside of

the graphical model, inference and learning have to

fight model complexity. Another example for con-

tent extraction from websites that exploits related in-

stances is Gulhane et al. (Gulhane et al., 2010). They

assume two properties of web information: The val-

ues of an attribute distributed over different pages are

similar for equal entities and the pages of one web-

site share a similar structure due to the creation tem-

plate. In contrast to those two approaches, the work

presented in this paper relies on no structural knowl-

edge previously known about the domain.

McCallum contributed an improvement for CRF

applications through feature induction (McCallum,

2003). Based on a given training set useful combina-

tions of features are computed, reducing the number

of model parameters. The feature induction of our ap-

proach is performedonline during processing the doc-

ument and applies flexible data mining techniques to

specify the properties of consistent label transitions.

Learning Flexible Features for Conditional Random

Fields (Stewart et al., 2008) is an approach by Stew-

ard et al. that also induces features. They propose

Conditional Fields Of Experts (CFOE) as CRF aug-

mented with latent hidden states.

6 CONCLUSIONS

We have presented a novel approach for collective

information extraction using a combination of two

CRFs together with rule learning techniques to induce

new features during inference. The initial results of

the first CRF are exploited to gain information about

the structural consistencies. Then, the second CRF

is automatically adapted to the previously unknown

composition of the entities. This is achieved by

changing the manifestation of its features dependent

on the currently processed set of instances. To our

best knowledge, no similar and domain-independent

approach was published that is able to exploit the

structural consistencies in textual data. The results

on a real-world dataset for the segmentation of refer-

ences indicate a significant improvement towards the

commonly applied models.

For future work, better ways to include the high

quality descriptions need to be found for exploiting

the structural consistencies. One possibility is the

Generalized Expectation Criteria of Mann and Mc-

Callum (Mann and McCallum, 2010) that allow to

learn with constraints that cover more expressive and

structural dependencies than the underlying model.

Another approach that can solve this problem is to use

a complex model for joint inference despite the more

expensive inference. Instead of performing two dif-

ferent task in the same inference for segmentation and

matching (Poon and Domingos, 2007; Singh et al.,

2009), a joint model can be used to infer the labeled

sequence together with the description of the struc-

tural properties.

REFERENCES

Arnold, A. and Cohen, W. W. (2008). Intra-document Struc-

tural Frequency Features for Semi-supervised Domain

Adaptation. In Proceeding of the 17th ACM con-

ference on Information and knowledge management,

pages 1291–1300. ACM.

Batal, I. and Hauskrecht, M. (2010). Constructing Clas-

sification Features using Minimal Predictive Patterns.

In Proceedings of the 19th ACM International Con-

ference on Information and Knowledge Management,

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

248

CIKM ’10, pages 869–878, New York, NY, USA.

ACM.

Bunescu, R. and Mooney, R. J. (2004). Collective Informa-

tion Extraction with Relational Markov Networks. In

Proceedings of the 42nd Annual Meeting on Associa-

tion for Computational Linguistics, ACL ’04, Strouds-

burg, PA, USA. Association for Computational Lin-

guistics.

Cohen, W. W. (1995). Fast Effective Rule Induction. In Pro-

ceedings of the Twelfth Int. Conference on Machine

Learning, pages 115–123. Morgan Kaufmann.

Cortes, C. and Vapnik, V. (1995). Support-vector networks.

Machine Learning, 20(3):273–297.

Councill, I., Giles, C. L., and Kan, M.-Y. (2008). ParsCit:

an Open-source CRF Reference String Parsing Pack-

age. In Proceedings of the Sixth International Lan-

guage Resources and Evaluation (LREC’08), Mar-

rakech, Morocco. ELRA.

Finkel, J. R., Grenager, T., and Manning, C. (2005). In-

corporating non-local Information into Information

Extraction Systems by Gibbs Sampling. In Pro-

ceedings of the 43rd Annual Meeting on Association

for Computational Linguistics, ACL ’05, pages 363–

370, Stroudsburg, PA, USA. Association for Compu-

tational Linguistics.

Gulhane, P., Rastogi, R., Sengamedu, S. H., and Tengli,

A. (2010). Exploiting Content Redundancy for Web

Information Extraction. Proc. VLDB Endow., 3:578–

587.

Kl¨osgen, W. (1996). Explora: A Multipattern and

Multistrategy Discovery Assistant. In Fayyad, U.,

Piatetsky-Shapiro, G., Smyth, P., and Uthurusamy, R.,

editors, Advances in Knowledge Discovery and Data

Mining, pages 249–271. AAAI Press.

Kou, Z. and Cohen, W. W. (2007). Stacked Graphical Mod-

els for Efficient Inference in Markov Random Fields.

In Proceedings of the 2007 SIAM Int. Conf. on Data

Mining.

Krishnan, V. and Manning, C. D. (2006). An Effective two-

stage Model for Exploiting non-local Dependencies in

Named Entity Recognition. In Proceedings of the 21st

International Conference on Computational Linguis-

tics and the 44th annual meeting of the Association

for Computational Linguistics, ACL-44, pages 1121–

1128, Stroudsburg, PA, USA. Association for Compu-

tational Linguistics.

Lafferty, J., McCallum, A., and Pereira, F. (2001). Condi-

tional Random Fields: Probabilistic Models for Seg-

menting and Labeling Sequence Data. Proc. 18th In-

ternational Conf. on Machine Learning, pages 282–

289.

Mann, G. S. and McCallum, A. (2010). Generalized Ex-

pectation Criteria for Semi-Supervised Learning with

Weakly Labeled Data. J. Mach. Learn. Res., 11:955–

984.

McCallum, A. (2003). Efficiently Inducing Features of

Conditional Random Fields. In Nineteenth Confer-

ence on Uncertainty in Artificial Intelligence (UAI03).

Peng, F. and McCallum, A. (2004). Accurate Information

Extraction from Research Papers using Conditional

Random Fields. In HLT-NAACL, pages 329–336.

Poon, H. and Domingos, P. (2007). Joint Inference in In-

formation Extraction. In AAAI’07: Proceedings of the

22nd National Conference on Artificial intelligence,

pages 913–918. AAAI Press.

Richardson, M. and Domingos, P. (2006). Markov Logic

Networks. Machine Learning, 62(1-2):107–136.

Singh, S., Schultz, K., and McCallum, A. (2009). Bi-

directional Joint Inference for Entity Resolution

and Segmentation Using Imperatively-Defined Factor

Graphs. In Proceedings of the European Conference

on Machine Learning and Knowledge Discovery in

Databases: Part II, ECML PKDD ’09, pages 414–

429. Springer-Verlag.

Stewart, L., He, X., and Zemel, R. S. (2008). Learning Flex-

ible Features for Conditional Random Fields. IEEE

Trans. Pattern Anal. Mach. Intell., 30(8):1415–1426.

Sutton, C. and McCallum, A. (2004). Collective Segmen-

tation and Labeling of Distant Entities in Information

Extraction. In ICML Workshop on Statistical Rela-

tional Learning and Its Connections to Other Fields.

Wolpert, D. H. (1992). Stacked Generalization. Neural Net-

works, 5:241–259.

Yang, J.-M., Cai, R., Wang, Y., Zhu, J., Zhang, L., and

Ma, W.-Y. (2009). Incorporating Site-level Knowl-

edge to Extract Structured Data from Web Forums. In

Proceedings of the 18th international conference on

World wide web, pages 181–190. ACM.

STACKED CONDITIONAL RANDOM FIELDS EXPLOITING STRUCTURAL CONSISTENCIES

249