ON IMPROVING SEMI-SUPERVISED MARGINBOOST INCREMENTALLY USING STRONG UNLABELED DATA∗

Thanh-Binh Le and Sang-Woon Kim

Department of Computer Engineering, Myongji University, 449-728, Yongin, South Korea

Keywords:

Semi-supervised MarginBoost, Incremental learning strategy, Dissimilarity-based classifications.

Abstract:

The aim of this paper is to present an incremental learning strategy by which the classification accuracy of the semi-supervised MarginBoost (SSMB) algorithm (d’Alché-Buc, 2002) can be improved. In SSMB, both a limited number of labeled data and a multitude of unlabeled data are utilized to learn a classification model. However, it is also well known that the utilization of the unlabeled data is not always helpful for semi-supervised learning algorithms. To address this concern when dealing with SSMB, in this paper we study a means of selecting only a “small” helpful portion of samples from the additional available data. More specifically, this is done by performing SSMB after incrementally reinforcing the given labeled training data with a part of the strong unlabeled data; we train the classification model in an incremental fashion by employing a small amount of “strong” samples selected from the unlabeled data per iteration. The proposed scheme is evaluated with well-known benchmark databases, including some UCI data sets, in two approaches: dissimilarity-based classification (DBC) (Pekalska and Duin, 2005) as well as conventional feature-based classification. Our experimental results demonstrate that, compared to previous approaches, it achieves better classification accuracy.

1 INTRODUCTION

MarginBoost (Mason, 2000) aims to improve the classification performance of an ensemble classifier built as a linear combination of weak classifiers. Semi-supervised MarginBoost (SSMB) (d’Alché-Buc, 2002) was proposed as a means of generating MarginBoost in a semi-supervised approach. In SSMB, a large amount of unlabeled data, U, is used together with labeled data, L, to build better classifiers. That is, the algorithm exploits the samples of U in addition to their labeled counterparts, so that the supervised learning algorithm can benefit from a multitude of unlabeled data.

However, it is also well known that U does not always help during SSMB learning. Specifically, it is not guaranteed that adding U to the training data, T, i.e., T = L ∪ U, improves the classification performance. Therefore, if we knew more about the confidence levels involved in classifying U, we could choose some of the informative data and include it when training weak classifiers. This idea has been used in SemiBoost (Mallapragada, 2009), where the authors measured the pairwise similarity to guide the selection of a subset of U at each iteration and to assign labels to the selected samples.

∗ This work was supported by the National Research Foundation of Korea funded by the Korean Government (NRF-2011-0002517).

To improve the performance of SSMB further, in this paper we propose a modified SSMB algorithm in which we use the discriminating unlabeled data in an incremental fashion rather than in batch mode (Cesa-Bianchi, 2006). In both SemiBoost and the modified SSMB, some instances of the strong unlabeled data are selected from the given U and are then used to train the classification model in addition to L. However, the two algorithms differ in how they construct T. In the modified SSMB, the cardinality of T increases incrementally as the iterations are repeated, whereas in SemiBoost, the cardinality of T stays the same throughout the learning iterations.

The main contribution of this paper is to demonstrate that the classification accuracy of SSMB can be improved by incrementally utilizing a portion of the unlabeled data as well as the labeled training data. In addition, the proposed scheme has been evaluated in two fashions: traditional feature-based classification (Fukunaga, 1990) and the more recently developed dissimilarity-based classification (Pekalska and Duin, 2005).


Le T. and Kim S. (2012).

ON IMPROVING SEMI-SUPERVISED MARGINBOOST INCREMENTALLY USING STRONG UNLABELED DATA.

In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 265-268

DOI: 10.5220/0003721202650268

Copyright © SciTePress

2 SSMB IMPROVED

In SSMB, an ensemble classifier, $g_t$, is designed with weak classifiers, $h_\tau \in \mathcal{H}$, by means of a linear combination, as follows: $g_t(x) = \sum_{\tau=1}^{t} \alpha_\tau h_\tau(x)$, where $\alpha_\tau$ is a normalized step-length. For the training data, $T = L \cup U$, where $L = \{(x_i, y_i)\}_{i=1}^{n_l}$ and $U = \{x_j\}_{j=1}^{n_u}$, the algorithm minimizes the cost function $C$ defined with any scalar decreasing function $c$ of the margin $\rho$:

$$C(g_t) = \sum_{i=1}^{n_l} c\big(\rho_L(g_t(x_i), y_i)\big) + \sum_{i=1}^{n_u} c\big(\rho_U(g_t(x_i))\big),$$

where $\rho_L(g_t(x_i), y_i) = y_i g_t(x_i)$ and $\rho_U(g_t(x_i)) = g_t(x_i)^2$. Here, the criterion quantities for $L$ and $U$, $J^L_t$ and $J^U_t$, are expressed as:

$$J^L_t = \sum_{x_i \in L} w_t(i)\, y_i\, h_{t+1}(x_i), \tag{1}$$

$$J^U_t = \sum_{x_i \in U} w_t(i)\, \frac{\partial \rho_U(g_t(x_i))}{\partial g_t(x_i)}\, h_{t+1}(x_i), \tag{2}$$

where $w_t(i)$ is computed as follows:

$$w_t(i) = \begin{cases} \dfrac{c'\big(\rho_L(g_t(x_i), y_i)\big)}{\sum_{x_j \in T} w_{t-1}(j)}, & \text{if } x_i \in L, \\[2ex] \dfrac{c'\big(\rho_U(g_t(x_i))\big)}{\sum_{x_j \in T} w_{t-1}(j)}, & \text{if } x_i \in U. \end{cases} \tag{3}$$

An algorithm for SSMB is formalized as follows:

1. Initialization: $g_0(x) = 0$; $w_0(i) = \frac{1}{n_l + n_u}$, $i = 1, \cdots, n_l + n_u$.

2. Compute the predicted labels of $U$ using the nearest neighbor (NN) rule.

3. Do the following steps while increasing $t$ by unity from 1 to $t_1$ per epoch:

(a) Learn the gradient direction $h_t$ for $T$ while maximizing $J^T_t$ ($= J^L_t + J^U_t$) computed with (1) and (2).

(b) If $J^T_t \le 0$, then exit and return $g_t(x)$; otherwise, go to the next sub-step.

(c) After computing $g_{t+1}(x) = g_t(x) + \alpha_t h_t(x)$, update the weights $w_{t+1}(i)$ with (3) for the next iteration.

Figure 1: A comparison of the training data sets of SSMB, improved SSMB, and SemiBoost learning algorithms.

As mentioned previously, the unlabeled data do not always help in SSMB learning processes. The situation is much worse when the cardinality of the unlabeled data is much larger than that of the labeled data. To overcome this limitation, we expand SSMB using the classification confidence of the unlabeled data, as SemiBoost does. Both the present SSMB and SemiBoost select the strong examples from the unlabeled data based on the confidence level. However, the two algorithms differ in two respects: how they select the strong samples from the unlabeled data and how they determine the pseudo-labels of the selected unlabeled data. In SemiBoost, 10% of the entire unlabeled data set is repeatedly selected based on the confidence levels, while in the present SSMB, 10% of the currently available unlabeled data is selected incrementally. As for the class labels of the selected unlabeled data, SemiBoost determines the pseudo-labels based on the similarity matrix, whereas the present SSMB determines them based on the NN rule. On the basis of this discussion, an algorithm for the present SSMB is formalized as follows:

1. This step is the same as Step 1 in SSMB.

2. For all $x_i, x_j \in T$, compute a similarity matrix, $S(i, j) = \exp(-\|x_i - x_j\|_2^2 / \sigma^2)$, where $\sigma$ is a scale parameter, and compute the predicted labels of $U$ using the NN rule.

3. Repeat the following steps while increasing $t$ by unity from 1 to $t_1$ per epoch: First, using a “sampling”, obtain new training data, $T_t$, from the available unlabeled data, $T_u$, in addition to $L$. Next, for $T_t$, repeat the three sub-steps of Step 3 of SSMB ten times.
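
Step 2 of the algorithm above can be sketched as follows. The sketch computes the similarity matrix with the Gaussian form given in the text and assigns predicted labels to the unlabeled samples with a 1-NN rule. The function names, the toy points, and the value of the scale parameter are hypothetical; the paper does not state how σ was chosen.

```python
import numpy as np

def similarity_matrix(X, sigma):
    """S(i, j) = exp(-||x_i - x_j||_2^2 / sigma^2) for all rows of X."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)   # clip tiny negatives from round-off
    return np.exp(-sq_dists / sigma ** 2)

def nn_labels(X_l, y_l, X_u):
    """1-NN rule: each unlabeled sample takes the label of its closest labeled sample."""
    d = np.linalg.norm(X_u[:, None, :] - X_l[None, :, :], axis=2)
    return y_l[np.argmin(d, axis=1)]

# Toy data (illustrative only): 2 labeled and 3 unlabeled points in the plane.
X_l = np.array([[0.0, 0.0], [4.0, 4.0]])
y_l = np.array([-1, 1])
X_u = np.array([[0.5, 0.0], [3.0, 4.0], [1.0, 1.5]])

S = similarity_matrix(np.vstack([X_l, X_u]), sigma=2.0)   # over T = L ∪ U
pseudo = nn_labels(X_l, y_l, X_u)
```

Here `S` is symmetric with a unit diagonal, and `pseudo` holds one predicted label per unlabeled sample.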

In the above sampling process, we first choose a portion of the strong data from $T_u$ (i.e., 10%; $T_u^{10}$) according to the confidence levels based on $S$. Then, we update $T_u \leftarrow T_u - T_u^{10}$ and $n_u \leftarrow |T_u|$. Fig. 1 shows a comparison of the training data set $T_t$ of SSMB, the modified (and improved) SSMB, and SemiBoost.
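
The sampling schedule described above can be sketched as a loop in which the pool of available unlabeled data shrinks while the training set grows. The paper does not give the formula for the confidence level, so the sketch assumes, purely for illustration, that the confidence of an unlabeled sample is the absolute value of its label-weighted similarity to the labeled samples. All function names and the random toy similarities are hypothetical, and the boosting sub-steps run on each training set are omitted.

```python
import numpy as np

def select_strong(S_ul, y_l, available, fraction=0.10):
    """Pick the most confident `fraction` of the still-available unlabeled samples.

    S_ul      : similarities between unlabeled (rows) and labeled (columns) samples
    available : indices of unlabeled samples not yet moved into the training set
    Confidence is ASSUMED to be |sum_j S(i, j) * y_j|; the paper does not specify it.
    """
    conf = np.abs(S_ul[available] @ y_l)
    k = max(1, int(np.ceil(fraction * len(available))))
    order = np.argsort(-conf, kind="stable")[:k]
    return available[order]

def incremental_schedule(S_ul, y_l, epochs, fraction=0.10):
    """Yield (selected unlabeled indices so far, remaining pool size) per epoch."""
    available = np.arange(S_ul.shape[0])                      # T_u
    chosen = np.array([], dtype=int)
    for _ in range(epochs):
        if len(available) == 0:
            break
        strong = select_strong(S_ul, y_l, available, fraction)   # T_u^10
        chosen = np.concatenate([chosen, strong])                # T_t = L plus chosen
        available = np.setdiff1d(available, strong)              # T_u <- T_u - T_u^10
        yield chosen.copy(), len(available)                      # n_u <- |T_u|

# Toy data (illustrative only): 20 unlabeled and 4 labeled samples.
rng = np.random.default_rng(0)
S_ul = rng.uniform(size=(20, 4))
y_l = np.array([1, 1, -1, -1])
sizes = [(len(c), n_u) for c, n_u in incremental_schedule(S_ul, y_l, epochs=3)]
```

Because each epoch draws 10% of the currently available pool rather than of the entire unlabeled set, the number of newly added samples decreases slowly as the pool is consumed.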

3 EXPERIMENTAL RESULTS

The proposed scheme was tested and compared with conventional methods through experiments on the well-known benchmark databases Nist389, mfeat-fac, and mfeat-kar, as well as other multivariate data sets taken from the UCI Machine Learning Repository².

² http://www.ics.uci.edu/~mlearn/MLRepository.html.

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

In this experiment, the data sets are initially split into three parts: labeled training sets, labeled test sets, and unlabeled data, at a ratio of 20 : 10 : 70. The training and test procedures are then repeated ten times and the results obtained are averaged. Specifically, the classifications are performed in two fashions: feature-based classification (FBC) and dissimilarity-based classification (DBC). In DBC, the classification process is not based on the feature measurements of individual object samples but rather on a suitable dissimilarity measure among the individual samples. Therefore, in this experiment, after measuring the dissimilarity among paired samples with the Euclidean distance, the classifications were performed on the constructed dissimilarity matrix. In the interest of compactness, the details of DBC are omitted here, but can be found in (Pekalska and Duin, 2005).
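
As a rough illustration of the dissimilarity representation described above, the following sketch maps each sample to its vector of Euclidean distances to a set of prototypes. It assumes that all training samples serve as prototypes, which the text does not specify; the function name and the toy points are hypothetical.

```python
import numpy as np

def dissimilarity_representation(X, prototypes):
    """Map each row of X to its Euclidean distances to every prototype."""
    diff = X[:, None, :] - prototypes[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=2))

# Toy data (illustrative only); the training samples double as prototypes.
X_train = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
X_test = np.array([[3.0, 0.0]])

D_train = dissimilarity_representation(X_train, X_train)   # 3 x 3, zero diagonal
D_test = dissimilarity_representation(X_test, X_train)     # 1 x 3
```

A classifier is then trained on the rows of `D_train` instead of the original feature vectors, and test samples are classified from the rows of `D_test`.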

Conventional SSMB and the newly proposed SSMB (referred to as SSMB-original and SSMB-improved, respectively) were run with the number of weak learners ranging from 10 to 50 in increments of 5. This was repeated ten times. The scalar decreasing function employed for the margin $\rho$ was $c(x) = e^{-x}$. In particular, the step-length $\alpha_t = \frac{1}{4} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}$, where $\varepsilon_t = \sum_i w_t(i)\, \delta(y_i g_t(x_i), -1)$, was used for both SSMBs. For all of the boosting algorithms, a decision-tree classifier was used as the weak learner and implemented with PRTools (Duin and Tax, 2004).
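
The step-length formula can be checked on a small example. The sketch below assumes that the ensemble output is thresholded to {-1, +1} before the Kronecker delta is evaluated, so that ε_t is the total weight of the misclassified samples; this reading, the function name, and the toy values are assumptions made only for illustration.

```python
import math

def step_length(weights, y, pred):
    """Return (epsilon_t, alpha_t) with alpha_t = (1/4) * ln((1 - eps) / eps).

    eps sums the weights of samples for which y_i * pred_i == -1.
    The formula is only defined for 0 < eps < 1.
    """
    eps = sum(w for w, yi, pi in zip(weights, y, pred) if yi * pi == -1)
    alpha = 0.25 * math.log((1.0 - eps) / eps)
    return eps, alpha

# Toy data (illustrative only): four equally weighted samples, one misclassified.
weights = [0.25, 0.25, 0.25, 0.25]
y = [1, 1, -1, -1]
pred = [1, 1, -1, 1]
eps, alpha = step_length(weights, y, pred)
```

With one of four equally weighted samples misclassified, ε_t is 0.25 and α_t equals one quarter of ln 3; the step-length shrinks toward zero as ε_t approaches 0.5.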

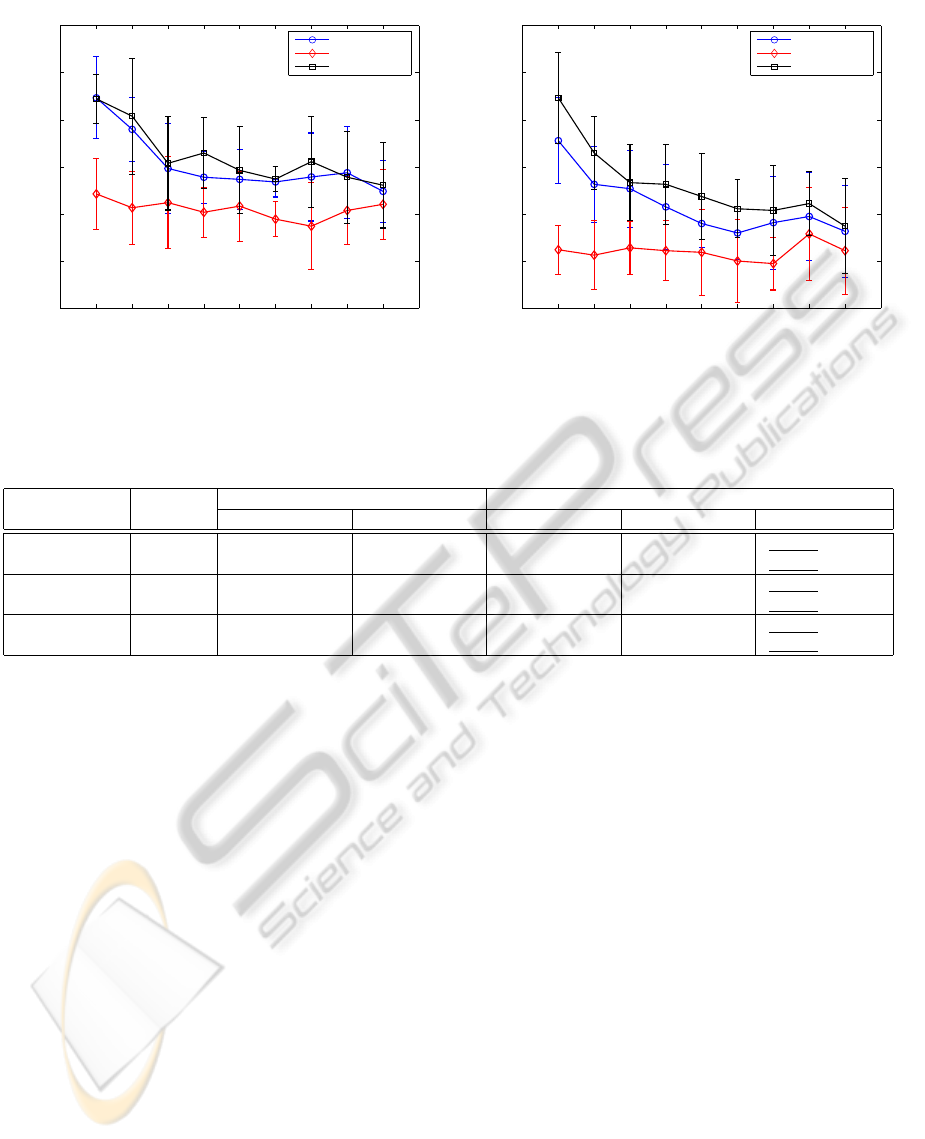

First, the experimental results obtained with the two classifying approaches, FBC and DBC, for the Nist389, mfeat-fac, and mfeat-kar databases were investigated. Fig. 2 shows a comparison of the error rates (and standard deviations) of SemiBoost, SSMB-original, and SSMB-improved, obtained with the two classifying approaches for Nist389. Here, in the interest of brevity, the other results are omitted. Also, to reduce the computational complexity, the dimensionality of all of the data sets was reduced to 10 using principal component analysis (PCA).
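
The dimensionality reduction step can be sketched with a singular value decomposition. The sketch assumes that PCA is fitted on the data matrix passed in; the paper does not state on which portion of the data the projection was estimated, and the function name and random toy data are hypothetical.

```python
import numpy as np

def pca_reduce(X, n_components=10):
    """Project the rows of X onto the first n_components principal directions."""
    mean = X.mean(axis=0)
    Xc = X - mean                                    # center the data
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    components = vt[:n_components]                   # rows are principal directions
    return Xc @ components.T, mean, components

# Toy data (illustrative only): 50 samples with 64 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 64))
Z, mean, components = pca_reduce(X, n_components=10)
```

New samples would be projected with the same `mean` and `components`, so that training and test data share one 10-dimensional space.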

From Fig. 2, it can be observed that, in general, the classification accuracies of SSMB, estimated with FBC and DBC, can be improved. This is clearly shown in the error rates of the ensemble classifiers, as represented by the red lines (dashed and solid lines with the ♦ marker) and the black and blue lines (dashed and solid lines with other markers, including ⊖). For all three data sets and for each repetition, the ranking by lowest error rate is always the same: SSMB-improved, SSMB-original, and SemiBoost. That is, the winner is always SSMB-improved. In addition, it should be pointed out that the improvements obtained with DBC and FBC were similar. Across the different numbers of repetitions, the increases and decreases in the error rates of the two approaches appeared to be consistent.

Furthermore, the following is an interesting issue to investigate: Is the classification accuracy of the improved SSMB algorithm better (or more robust) than those of conventional schemes when the amount of the selected strong data changes? To answer this question, we repeated the experiment on the data sets with four different strong data sizes (i.e., $T_u^{5}$, $T_u^{10}$, $T_u^{15}$, $T_u^{20}$) and ten repetitions, under the same experimental conditions as before. The experimental results in this case showed that the error rates obtained with the four differently sized instances of strong unlabeled data are similar.

Table 1 shows a numerical comparison of the error rates obtained with AdaBoost, MarginBoost, SemiBoost, and the original and improved SSMB algorithms for the three data sets. Here, two supervised boosting algorithms, AdaBoost and MarginBoost, were employed as a reference for comparison. These supervised algorithms were trained only on the labeled training data (20% of each data set) and were evaluated on the labeled test data (10%), while the three semi-supervised algorithms, SemiBoost, SSMB-original, and SSMB-improved, were trained on the unlabeled data (70%) as well as the labeled training data (20%). They were also evaluated on the labeled test data (10%). For all of the boosting algorithms, the number of weak classifiers was identical, at $t_1 = 50$. In the table, the estimated error rates that increase and/or decrease by more than the sum of the standard deviations are underlined.
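
The underlining criterion can be written as a simple check on two (error rate, standard deviation) pairs. The sketch below applies it to the SSMB-original and SSMB-improved entries of Table 1; which pairs of columns the authors actually compared is not stated, so this pairing is an assumption, and the function name is hypothetical.

```python
# (error rate, standard deviation) pairs copied from Table 1:
# first SSMB-original, then SSMB-improved. The pairing is an assumption.
table = {
    ("Nist389", "FBC"): ((0.0696, 0.0142), (0.0496, 0.0122)),
    ("Nist389", "DBC"): ((0.0652, 0.0165), (0.0400, 0.0110)),
    ("mfeat-fac", "FBC"): ((0.0156, 0.0033), (0.0099, 0.0022)),
    ("mfeat-fac", "DBC"): ((0.0280, 0.0044), (0.0227, 0.0046)),
    ("mfeat-kar", "FBC"): ((0.0224, 0.0030), (0.0166, 0.0027)),
    ("mfeat-kar", "DBC"): ((0.0178, 0.0021), (0.0134, 0.0024)),
}

def exceeds_std_sum(a, b):
    """True if the two error rates differ by more than the sum of their standard deviations."""
    (err_a, std_a), (err_b, std_b) = a, b
    return abs(err_a - err_b) > std_a + std_b

flags = {key: exceeds_std_sum(*pair) for key, pair in table.items()}
```

The dictionary `flags` then marks, for each data set and classifier type, whether the difference would be underlined under this reading of the criterion.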

To further investigate the advantage of incrementally using strong unlabeled data, and especially to determine which types of data sets are more suitable for the scheme, we repeated the experiment with a few UCI data sets. From the results obtained, as in Table 1, it should be noted again that the classification accuracy of the SSMB algorithm can generally be improved when utilizing the unlabeled data in an incremental learning fashion. However, the proposed scheme does not work satisfactorily with low-dimensional data sets. That is, for high-dimensional data sets, the difference in the error rates of the SSMB-original and SSMB-improved schemes is relatively large, whereas the difference for low-dimensional data sets is marginal.

[Figure 2, two panels: Nist389 (FBC) and Nist389 (DBC). Horizontal axis: ensemble sizes (T), from 10 to 50. Vertical axis: estimated error rates. Curves: SSMB-original, SSMB-improved, SemiBoost.]

Figure 2: A comparison of the estimated error rates (standard deviations) obtained with the FBC and DBC approaches for Nist389: (a) FBC (left) and (b) DBC (right), obtained with SemiBoost and the two SSMB algorithms.

Table 1: A numerical comparison of the error rates (standard deviations) obtained with two supervised schemes (i.e., AdaBoost and MarginBoost) and three semi-supervised schemes (i.e., SemiBoost, SSMB-original, and SSMB-improved) for the three data sets. Here, the three numbers in brackets in the first column represent the dimensions d, samples n, and classes c, respectively.

| data sets (d/n/c) | classifier types | AdaBoost (supervised) | MarginBoost (supervised) | SemiBoost (semi-supervised) | SSMB-original (semi-supervised) | SSMB-improved (semi-supervised) |
|---|---|---|---|---|---|---|
| Nist389 (1024/1500/3) | FBC | 0.0648 (0.0130) | 0.0648 (0.0130) | 0.0730 (0.0155) | 0.0696 (0.0142) | 0.0496 (0.0122) |
| | DBC | 0.0563 (0.0134) | 0.0537 (0.0139) | 0.0574 (0.0129) | 0.0652 (0.0165) | 0.0400 (0.0110) |
| mfeat-fac (216/2000/10) | FBC | 0.0131 (0.0033) | 0.0131 (0.0036) | 0.0136 (0.0038) | 0.0156 (0.0033) | 0.0099 (0.0022) |
| | DBC | 0.0258 (0.0035) | 0.0258 (0.0034) | 0.0270 (0.0054) | 0.0280 (0.0044) | 0.0227 (0.0046) |
| mfeat-kar (64/2000/10) | FBC | 0.0236 (0.0025) | 0.0236 (0.0025) | 0.0234 (0.0033) | 0.0224 (0.0030) | 0.0166 (0.0027) |
| | DBC | 0.0167 (0.0029) | 0.0166 (0.0029) | 0.0175 (0.0025) | 0.0178 (0.0021) | 0.0134 (0.0024) |

4 CONCLUSIONS

In an effort to improve the classification performance of SSMB, in this paper we used an incremental learning strategy with which SSMB can be implemented efficiently. We first computed the similarity matrix of labeled and unlabeled data and, in turn, selected a small amount of strong unlabeled samples based on their confidence levels. We then trained a classification model using the selected unlabeled samples as well as the labeled samples in an incremental fashion. The proposed strategy was evaluated with well-known benchmark databases, including some UCI data sets, in two ways: traditional feature-based classification and newly developed dissimilarity-based classification schemes. Our experimental results demonstrate that the classification accuracy of SSMB was improved by employing the proposed learning method. Although we have shown that SSMB can be improved in terms of classification accuracy, many tasks remain. One of them is to improve the classification efficiency by selecting an optimized or nearly optimized number of unlabeled samples for the incremental learning process. Another is to determine the types of data sets best suited for the scheme. Finally, the problem of theoretically investigating the experimental results obtained with the proposed SSMB remains to be solved.

REFERENCES

Cesa-Bianchi, N., Gentile, C., and Zaniboni, L. (2006). Incremental algorithms for hierarchical classification. Journal of Machine Learning Research, 7:31–54.

d’Alché-Buc, F., Grandvalet, Y., and Ambroise, C. (2002). Semi-supervised MarginBoost. In Advances in Neural Information Processing Systems, volume 14, pages 553–560. The MIT Press.

Duin, R. P. W., Juszczak, P., de Ridder, D., Paclik, P., Pekalska, E., and Tax, D. M. J. (2004). PRTools 4: a Matlab Toolbox for Pattern Recognition. Delft University of Technology, The Netherlands.

Fukunaga, K. (1990). Introduction to Statistical Pattern Recognition, 2nd edition. Academic Press, San Diego, CA.

Mallapragada, P. K., Jin, R., Jain, A. K., and Liu, Y. (2009). SemiBoost: Boosting for semi-supervised learning. IEEE Trans. Pattern Anal. and Machine Intell., 31(11):2000–2014.

Mason, L., Baxter, J., Bartlett, P. L., and Frean, M. (2000). Functional gradient techniques for combining hypotheses. In Advances in Large Margin Classifiers. The MIT Press.

Pekalska, E. and Duin, R. P. W. (2005). The Dissimilarity Representation for Pattern Recognition: Foundations and Applications. World Scientific, Singapore.
