OPERATIONS ON CONVERSATIONAL MIND-GRAPHS

Jayanta Poray and Christoph Schommer

Department of Computer Science and Communication, University of Luxembourg

6, Rue Coudenhove-Kalergi, L-1359, Luxembourg City, Luxembourg

Keywords:

Adaptive information management, Learning, Associative memories, Linguistic processing, Graph operations.

Abstract:

Mind-graphs define an associative-adaptive concept of managing information streams, like for example words

within a conversation. Being composed of vertices (or cells; representing external stimuli like words) and undi-

rected edges (or connections), mind-graphs adaptively reflect the strength of simultaneously occurring stimuli

and allow a self-regulation through the interplay of an artificial ‘fever’ and ‘coldness’ (capacity problem).

With respect to this, an interesting application scenario is the merge of information streams that derive from a

conversation of k conversing partners. In such a case, each conversational partner has an own knowledge and

a knowledge that (s)he shares with other. Merging the own (inside) and the other’s (outside) knowledge leads

to a situation, where things like e.g. trust can be decided. In this paper, we extend this concept by proposing

extended mind-graph operations, dealing with the merge of sub-mind-graphs and the extraction of mind-graph

skeletons.

1 INTRODUCTION

Today, the exchange of textual information by elec-

tronic devices is very popular. It ranges from simple

short-term messages to collections of long-term con-

versations, which have obtained by several months or

years. And in fact, the produce of textual information

within a conversation is a non-deterministic process,

which requires a linguistic preparation of the texts,

and a computational finesse, if the generated informa-

tion is to be accumulated or summarised with regards

to the content. As one of the most promising research

topic in the next years, the exploration of chats inside

social networks belongs to this category (Tuulos and

Tirri, 2004).

Some research works have been done in the field

of information accumulation, but the handling of a dy-

namic conversation within an adaptive framework has

mostly been solved by associative graphs and the rep-

resentation of information within these graphs. For

example, a text summarisation method LexRank has

been suggested by (Radev, 2004), where each ver-

tex corresponds to the extracted topic from the in-

put text and connection to the relation between sev-

eral topics. In (Poray and Schommer, 2009) it has

been shown that each conversing person can receive

an understanding of its partner, if all incoming tex-

tual stimuli are linguistically processed and then put

to an associative framework (mind-graph): the idea

is that strong and weak connections – which emerge

depending on the intensity and frequency of the sig-

nals – then finally lead to even such associative mind-

graphs, which do not only reflect a textual conversa-

tion but moreover support a mental representation of

the conversing partner.

As per the general continuation of this approach,

a refinement concerns the categorisation of the infor-

mation – which occurs during a conversation – into

several categories, which we call a) known, b) mu-

tual, and c) unknown information (Figure 1). Cate-

gory a) refers to information that is already aware by

a person before a conversation takes place and that

is already inside the associative mind-graph; b) refers

to a common information between several conversing

partners: it evolves over time and is then sent to the

associative framework. Finally, c) Unknown informa-

tion refers to information, which is not aware by a

person before the conversation.

Unknown Information

A B A B

AB

Mutual InformationKnown Information

Figure 1: Three types of information during textual conver-

sation (explanation see in text).

In this context, it is fair to confront the mind-graph

511

Poray J. and Schommer C..

OPERATIONS ON CONVERSATIONAL MIND-GRAPHS.

DOI: 10.5220/0003749905110514

In Proceedings of the 4th International Conference on Agents and Artificial Intelligence (ICAART-2012), pages 511-514

ISBN: 978-989-8425-95-9

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

framework to the Extended Mind Theory, which has

been presented by (among others, but mainly) (Clark

and Chalmers, 1998). Here, it is suggested that exter-

nal entities should be handled separately in the form

of an active externalism and that mostly the internal

and external information can not be separated, but

considered as a coupled system.

2 RELATED WORK

The role of machine learning for automated text clas-

sification (or categorization) is discussed (among oth-

ers) in (?) and (Ikonomakis et al., 2005). They

clearly point out that an intersection of research fields

like information extraction, text retrieval, summariza-

tion, question-answering et cetera exist particularly

in those cases, where the inductive process of learn-

ing has been motivated by the texts. With respect to

the set-up of the relation among social communities,

(Ziegler and Golbeck, 2006) show how today’s online

communities allow their users to find the co-relation

to measure trust and interests.

In association with the representation of the text

based models, graphs are proven very useful. As

an example (Haghighi et al., 2005) has developed a

novel graph matching model for sentence inference

from texts. Many related approaches regarding the

graph representation for texts and documents have

been proposed since last few years (e.g., (Schenker

et al., 2003) and (Hensman, 2004)). Recently, (Jin

and Srihari, 2007) used a novel graph based text rep-

resentation model capable to capture a) term order b)

term-frequency c) term co–occurrance, and d) term

context in a document; then test has been performed

for a specific text mining task. The state–of–the–art

of our proposed graph similarity based text represen-

tation model is mostly motivated by these research ef-

forts.

3 OPERATIONS ON

MIND-GRAPH

With respect to the life-cycle process of a textual

information, raw conversational text data is treated

firstly as the linguistic pre-processer. This includes

a tokenization, the elimination of stop-words, a res-

olution of pronouns, and others. A temporary stor-

age space, termed as Short Term Memory (STM),

which contains this filtered information, is then used.

For each set of conversational text (document), in-

formation is represented as an undirected graph ((Jin

and Srihari, 2007)), called mind-graphs (g={V ,E}),

which on their way represent a pre-processed con-

versational text by a set of vertices (V ) and a set of

weighted edges (E).

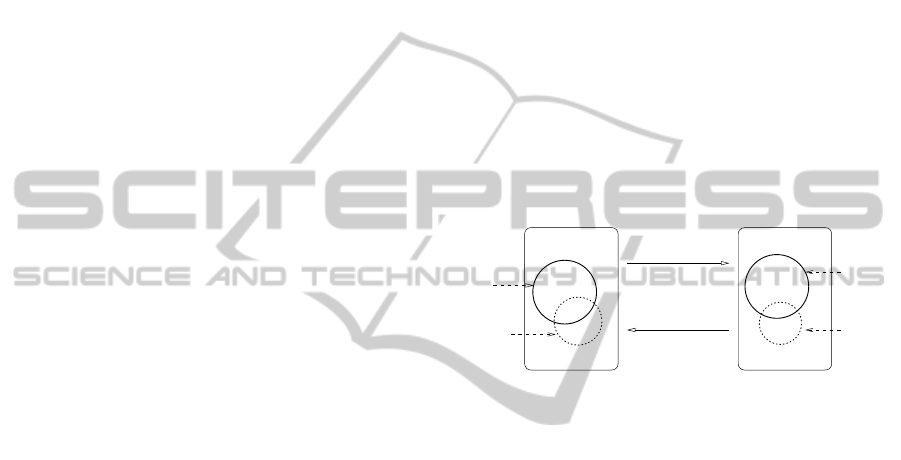

3.1 Inside and Outside

A mind-graph assimilates textual components and as-

signs each component to a vertex. Components that

occur together are consequently bidirectionally con-

nected and logistically managed inside a STM (Short

Term Memory). It is connected to the LTM (Long

Term Memory), which administers those mind-graphs

that have proven a stability over time ((Poray and

Schommer, 2010)). Concerning the “self” (Inside)

and the “other” (Outside) as mentioned in section 1,

Figure 2 reflects this situation, where each conversa-

tional partner keeps an own information as Inside and

newly obtained information as Outside.

Person 2

Outside

Inside

Inside

Outside

Conversation

Textual

Person 1

Figure 2: During textual conversation each person store

their information as Inside and Outside.

3.2 Merge

In a conversation between two or more individuals,

the merge of the (prepared) stimuli is one of the es-

sential task and consequence. Here, the grade of simi-

larity between more than one type of information (see

Section 1) has to be considered. In an extended situ-

ation of Figure 1, a merge (function) actually repre-

sents the similarity (intersection) between two types

of information.

Definition 1. A merge function µ for two mind-graphs

g ∈ G and g

0

∈ G (where G is the set of mind-graphs)

is defined as a one-to-one mapping among them, es-

timating the maximum common (similar) attributes

among two mind-graphs.

µ(g, g

0

) : g → g

0

This similarity measurement using merge function

reflects the amount of mutual information between In-

side and Outside.

A mind-graph g = (V, E, λ, ∆) consists of a set

of vertices (V ) and a set of edges (E). Here, λ :

V → L

V

represents the identifier of a vertex, such that

ICAART 2012 - International Conference on Agents and Artificial Intelligence

512

λ(m) 6= λ(n), ∀m, n ∈ V, m 6= n and ∆ : E → R

+

, where

∆ is the number of traffic observed in the labeled

graph g. Here, each mind-graph can be considered

as the assemble of the different sub-graphs. There-

fore, for n different mind-graphs g

1

,. . . ,g

n

, a mutual

similarity is given by their maximum common sub-

mind-graph (mcs(g

1

, . . . , g

n

). Following (Bunke and

Shearer, 1998), the distance (d) between these two

mind-graphs is then defined as follows:

d(g

1

, . . . , g

n

) = 1 −

|mcs(g

1

, . . . , g

n

)|

max{|g

1

|, . . . , |g

n

|}

As an example (see Figure 3), for two mind-

graphs g and g

0

having the number of vertices |g|, |g

0

|

respectively, also |mcs(g, g

0

)| represent the number of

vertices for their maximum common sub-mind-graph

mcs(g, g

0

). Since |g| = 6, |g

0

| = 4 and |mcs(g, g

0

)| = 3,

d(g, g

0

) = 0.5. Having the similarity as the comple-

ment of the the distance d between g and g

0

, the rela-

tion is then

µ(g, g

0

) = 1 − d(g, g

0

)

c)

A

B

C

D

E

F

B

C

E

b)

a)

G

C

B

E

Figure 3: Mind-graphs a) g and; b) g

0

; and their c) maximal

common sub-graph, mcs(g, g

0

).

3.3 Extraction of Skeletons

As described in (Poray and Schommer, 2009), a skele-

ton is a mind-graph with strong connections (thresh-

old) between its vertices. The extraction procedure

follows an algorithm, which is described in the fol-

lowing. It follows two steps. In a first step, the graph

potential for the weighted mind-graph is computed

whereas in the second step the actual extraction is

done:

(Step1)

Require: The set of weighted nodes {ω

1

, . . . , ω

m

}

Require: The set of weighted connections

{ω

i j

}, i, j ∈ {1, . . . , m}&i 6= j

α

p

←

1

m

∑

m

i=1

ω

i

β

p

←

1

n

∑

n

k=1

ω

i j

, where, n =| ω

i j

|, i, j ∈ {1, . . . , m}

and i 6= j

Compute the graph potential : δ

p

←

α

p

+β

p

2

and (Step 2)

Require: The skeleton threshold δ

s

if δ

p

≥ δ

s

then

Mind-graph g

p

← skeleton

if g

p

← skeleton then

f (g

p

) : g

p

(STM) → g

p

(LTM) fiers

end if

end if

First, α

p

, which denotes the average value of all

weighted nodes ω

i

and β

p

, which denotes the average

value of all weighted connections ω

i j

, are considered.

Thereafter, the graph potential δ

p

, which is the overall

weighted average of that graph is obtained. Then (in

second step), the graph potential δ

p

is compared with

some pre-defined skeleton threshold δ

s

and finally as

per its grade, the skeletons are identified.

3.4 Mind-graph Normalization

Sometimes, the mind-graphs need to be managed

properly, such that the complexity of the graph always

keep below a certain threshold value and maintain its

healthy status. To keep the mind-graph in a consistent

(normalized) state, it is advisable to consider only the

connections, which do not a threshold value. The pro-

cess of decomposition, join and selection is motivated

to resolve this issue.

As an example in figure 4 the mind-graph G1 is

estimated as the “over graph threshold value” with

too many complex connections. First this is decom-

posed into five subgraphs A,B,C,D and E. Suppose

among these five sub-graps B and C are again pointed

as “over graph threshold value”. Therefore, only rest

three sub-graphs are taken for join. Similarly, in the

selection phase the mind-graphs AD and DE are con-

sidered. Again the mind-graph AE not selected as it

is in “over graph threshold value” state.

Selection

A

B

C

D

E

AD

G1

DE

AE

AD

DE

Decomposition

Join

Figure 4: The data flow for Decomposition, Join and Selec-

tion for the mind-graph.

The algorithm for this described technique is pre-

sented below, where only the candidate mind-graphs

g

r

with “below graph threshold value” are stored in-

side the graph storage stack

˜

G.

OPERATIONS ON CONVERSATIONAL MIND-GRAPHS

513

Require: The mind-graph g

p

with its set of weighted

nodes

˜

N = {ω

1

, . . . , ω

m

} and their weighted con-

nections

˜

C.

Ensure:

˜

G ←

/

0

1: Compute the graph potential δ

p

of the input graph

g

p

2: for r = 2 to m do

3:

˜

N ← {ω

i

}, where |

˜

N |= r and i ∈ {1, . . . , m}

4:

˜

C ← {ω

i j

}, where i, j ∈ {1, . . . , m}(i 6= j)

5: δ

r

← Compute graph potential for all sub-

graphs formed with

˜

N and

˜

C

6: if δ

r

≥ δ

p

then

7: DECOMPOSITION

8: JOIN

9: Get the candidate graph g

r

10:

˜

G ← g

r

11: end if

12: end for

13: SELECTION (of the candidate graph(s) ( ≤ δ

p

)

from

˜

G)

These two techniques are motivated to extract

the skeleton mind-graph and manage the mind-graph

complexity for complex connections. Also there ex-

ists some other techniques or can be formalized these

as per some other specific need of the mind-graphs.

4 CONCLUSIONS

In this work we used graphs (mind-graphs) to rep-

resent the coupling between the knowledge in the

course of textual conversation. The similarity mea-

sures between the mind-graphs have been considered

for information representation. Also, the algorithms

associated to the mind-graphs extraction and normal-

ization have been formalized. Initial experimental

framework has been established. It works with test

sentences, where extracted word cells and their asso-

ciated neighbor cells (form the mind-graphs) explic-

itly defined. Currently, we continue the test with a

larger corpus.

ACKNOWLEDGEMENTS

The current work has been performed at the Univer-

sity of Luxembourg within the project EAMM. We

thank all project members for their support and en-

gagement.

REFERENCES

Bunke, H. and Shearer, K. (1998). A graph distance met-

ric based on the maximal common subgraph. Pattern

Recogn. Lett., 19:255–259.

Clark, A. and Chalmers, D. (1998). The extended mind. In

Analysis, volume 58, pages 7–19.

Haghighi, A. D., Ng, A. Y., and Manning, C. D. (2005).

Robust textual inference via graph matching. In Pro-

ceedings of the conference on Human Language Tech-

nology and Empirical Methods in Natural Language

Processing, HLT ’05, pages 387–394. Association for

Computational Linguistics.

Hensman, S. (2004). Construction of conceptual graph rep-

resentation of texts. In Proceedings of the Student Re-

search Workshop at HLT-NAACL 2004, HLT-SRWS

’04, pages 49–54. Association for Computational Lin-

guistics.

Ikonomakis, M., Kotsiantis, S., and Tampakas, V. (2005).

Text classification: a recent overview. In Proceedings

of the 9th WSEAS International Conference on Com-

puters, pages 125:1–125:6.

Jin, W. and Srihari, R. K. (2007). Graph-based text rep-

resentation and knowledge discovery. In Proceedings

of the 2007 ACM symposium on Applied computing,

SAC ’07, pages 807–811. ACM.

Poray, J. and Schommer, C. (2009). A cognitive mind-map

framework to foster trust. In Proceedings of the 2009

Fifth International Conference on Natural Computa-

tion - Volume 05, ICNC ’09. IEEE Computer Society.

Poray, J. and Schommer, C. (2010). Managing conversa-

tional streams by explorative mind-maps. In Proceed-

ings of the ACS/IEEE International Conference on

Computer Systems and Applications - AICCSA 2010.

IEEE Computer Society.

Radev, D. R. (2004). Lexrank: Graph-based lexical cen-

trality as salience in text summarization. Journal of

Artificial Intelligence Research, 22.

Schenker, A., Last, M., Bunke, H., and Kandel, A. (2003).

Classification of web documents using a graph model.

In Seventh International Conference on Document

Analysis and Recognition, pages 240–244.

Sebastiani, F. (2002). Machine learning in automated text

categorization. ACM Comput. Surv., 34:1–47.

Tuulos, V. and Tirri, H. (2004). Combining topic

models and social networks for chat data mining.

In Web Intelligence, 2004. WI 2004. Proceedings.

IEEE/WIC/ACM International Conference, pages 206

– 213.

Ziegler, C.-N. and Golbeck, J. (2006). Investigating interac-

tions of trust and interest similarity. Decision Support

Systems, 43(2):460 – 475.

ICAART 2012 - International Conference on Agents and Artificial Intelligence

514