EMODS: A NOVEL EVOLUTIONARY METAHEURISTIC BASED IN THE AUTOMATA THEORY FOR THE MULTIOBJECTIVE OPTIMIZATION OF COMBINATORIALS PROBLEMS

Elias David Nino Ruiz¹,² and Anangelica Isabel Chinchilla Camargo²

¹ Virginia Polytechnic Institute and State University, Blacksburg, Virginia, U.S.A.

² Universidad del Norte, Barranquilla, Atlantico, Colombia

Keywords: Combinatorial optimization, Metaheuristic, Evolutionary rules, Multiobjective optimization, Traveling salesman problem.

Abstract:

This paper presents EMODS, a novel Evolutionary Metaheuristic based on Automata Theory for the Multiobjective Optimization of Combinatorial Problems. The proposed algorithm uses natural selection theory to explore the feasible solution space of a Combinatorial Problem, which helps it avoid local optima. EMODS also builds on the Metaheuristic of Deterministic Swapping to avoid generating infeasible solutions during the optimization process. The proposed algorithm was tested on well-known instances from TSPLIB with three objectives. Its results were compared against four algorithms inspired by Multiobjective Simulated Annealing, using metrics from the specialized literature. In every case EMODS obtained the best value on each metric, and in some cases its distance from the real solutions was 4%.

1 INTRODUCTION

Combinatorial optimization is a branch of optimization. Its domain is optimization problems where the set of feasible solutions is discrete or can be reduced to a discrete one, and the goal is to find the best possible solution (Yong-fa and Ming-yang, 2004). Many problems in this field are NP-hard, such as the multi-depot vehicle routing problem (Lim and Wang, 2005), the delivery and pickup vehicle routing problem with time windows (Wang and Lang, 2008), the multi-depot vehicle routing problem with weight-related costs (Fung et al., 2009), the Railway Traveling Salesman Problem (Hu and Raidl, 2008), the Heterogeneous, Multiple Depot, Multiple Traveling Salesman Problem (Oberlin et al., 2009) and the Traveling Salesman with Multi-agent (Wang and Xu, 2009).

One of the most classical problems in combinatorial optimization is the Traveling Salesman Problem (TSP), which has been analyzed for years (Sauer and Coelho, 2008) in both its mono-objective and multi-objective forms. Although several algorithms have been implemented to solve the TSP, none is known to solve it optimally in polynomial time.

This paper is structured as follows. Section 2 reviews some fundamental concepts, such as Multiobjective Optimization and Genetic Algorithms. Section 3 defines a novel evolutionary metaheuristic on the MODS template. Lastly, Section 4 tests the proposed metaheuristic and analyzes its results.

2 PRELIMINARIES

2.1 Multi-objective Optimization

Multi-objective optimization consists of two or more objective functions to optimize and a set of constraints (Glover and Laguna, 1997):

$$\text{Opt. } F(X) = \{ f_1(X), f_2(X), f_3(X), \ldots, f_n(X) \} \tag{1}$$

Subject to

$$H(X) = 0 \tag{2}$$

$$G(X) \leq 0 \tag{3}$$

$$X_l \leq X \leq X_u \tag{4}$$

X is the set of decision variables of the problem, F(X) is the set of objective functions and n is the number of objective functions. H(X) and G(X) are the constraints of the problem. Finally, $X_l$ and $X_u$ are the lower and upper bounds of X, respectively.


Nino Ruiz E. and Chinchilla Camargo A.

EMODS: A NOVEL EVOLUTIONARY METAHEURISTIC BASED IN THE AUTOMATA THEORY FOR THE MULTIOBJECTIVE OPTIMIZATION OF COMBINATORIALS PROBLEMS.

DOI: 10.5220/0003754003990404

In Proceedings of the 1st International Conference on Operations Research and Enterprise Systems (ICORES-2012), pages 399-404

ISBN: 978-989-8425-97-3

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

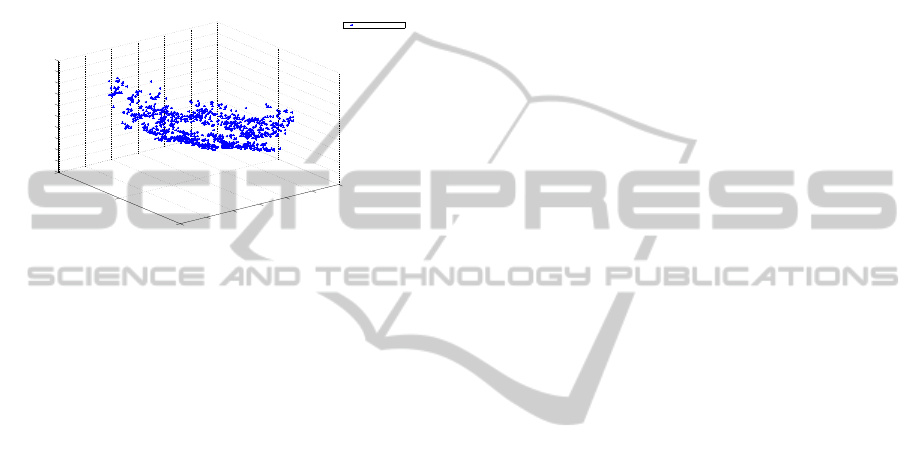

2.2 Pareto Front

As is well known, a Pareto Front (PF) is a set of nondominated solutions; that is, every solution in the PF is Pareto-optimal. In the particular case of the three-objective optimization of a combinatorial problem, the PF lies in $\mathbb{R}^3$, as can be seen in Figure 1. Each point of this set represents a solution to the problem. Therefore, the dimension of the space containing the Pareto Front depends on the number of objectives of the problem.

[Figure: 3-D scatter plot of the nondominated solutions; axes f1, f2 and f3, each scaled by 10^5.]

Figure 1: Pareto Front for a particular three-objective problem.
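The notion of a nondominated set can be made concrete with a short sketch. It assumes that every objective is minimized (as in the TSP used later in the paper) and that each solution is represented only by its vector of objective values; the function names `dominates` and `nondominated` are illustrative, not taken from the paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better
    in at least one (assumption: all objectives are minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Four illustrative three-objective vectors (made-up values).
points = [(1.0, 5.0, 3.0), (2.0, 4.0, 3.0), (2.0, 6.0, 4.0), (1.0, 5.0, 2.0)]
front = nondominated(points)
print(front)
```

Here `(1.0, 5.0, 3.0)` and `(2.0, 6.0, 4.0)` are both dominated by `(1.0, 5.0, 2.0)`, so only the other two vectors remain in the front.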

2.3 Genetic Algorithms

Genetic Algorithms (GA) are algorithms inspired by the theory of natural selection. They consist of three steps:

Step 1. Selection. Select solutions from a population.

Step 2. Crossover. Cross the selected solutions to avoid local optima.

Step 3. Mutation. Perturb the new solutions found in order to enlarge the population.

The best-known Genetic Algorithms in the literature (Glover and Laguna, 1997) are the Non-Dominated Sorting Genetic Algorithm II (NSGA-II) and the Strength Pareto Evolutionary Algorithm 2 (SPEA2). NSGA-II uses a nondominated sort to rank the solutions into different Pareto sets. This sort is time-consuming, but it allows a global verification of the solutions that helps avoid local optima. SPEA2, on the other hand, is an improvement of SPEA. Unlike the first version, SPEA2 assigns every solution a strength according to the number of solutions that it dominates. Consequently, at the end of the iterations SPEA2 retains the strongest nondominated solutions, which helps avoid local optima.
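The two ideas described above, ranking solutions into successive Pareto sets and counting how many solutions each one dominates, can be illustrated with the following sketch. It is a deliberately simple quadratic "peeling" procedure under a minimization assumption, not the fast nondominated sort of NSGA-II nor the complete fitness assignment of SPEA2; the names `sort_into_fronts` and `strengths` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance, assuming every objective is minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def sort_into_fronts(points: List[Point]) -> List[List[Point]]:
    """Repeatedly peel off the nondominated points of what remains."""
    remaining = list(points)
    fronts = []
    while remaining:
        front = [p for p in remaining
                 if not any(dominates(q, p) for q in remaining)]
        fronts.append(front)
        remaining = [p for p in remaining if p not in front]
    return fronts


def strengths(points: List[Point]) -> Dict[Point, int]:
    """Strength of a point: the number of points it dominates."""
    return {p: sum(dominates(p, q) for q in points) for p in points}


# Illustrative two-objective vectors (made-up values).
points = [(1, 4), (2, 2), (3, 3), (4, 1), (4, 4)]
fronts = sort_into_fronts(points)
strength = strengths(points)
print(fronts)
print(strength)
```

In this example the first Pareto set is `[(1, 4), (2, 2), (4, 1)]`, and `(2, 2)` has the highest strength because it dominates both `(3, 3)` and `(4, 4)`.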

2.4 Metaheuristic Of Deterministic Swapping

Metaheuristic Of Deterministic Swapping (MODS) (Niño et al., 2011) is a local search strategy that explores the feasible solution space of a Combinatorial Problem, supported by a data structure named Multiobjective Deterministic Finite Automata (MDFA) (Niño et al., 2010). An MDFA is a data structure that represents the feasible solution space of a Combinatorial Problem. Formally, an MDFA is defined as follows:

$$M = \{Q, \Sigma, \delta, Q_0, F(X)\} \tag{5}$$

Where Q is the set of all states of the automaton (the feasible solution space); Σ is the input alphabet used by the transition function δ to explore the feasible solution space of a Combinatorial Problem (in other words, δ perturbs solutions to find new ones); $Q_0$ contains the initial set of states (the initial solutions); and F(X) are the objectives to optimize.

The main algorithm fits the MDFA to the Combinatorial Problem and explores it using a search direction based on the elitist set of solutions ($Q_*$). The elitist solutions are those that, when they were found, dominated at least one solution from $Q_\phi$.

The template algorithm of MODS is defined as follows:

Step 1. Create the initial set of solutions $Q_0$ using a heuristic suited to the problem to solve.

Step 2. Set $Q_\phi$ as $Q_0$.

Step 3. Select a random state $q \in Q_\phi$ or $q \in Q_*$.

Step 4. Explore the neighborhood of q using δ and Σ. Add to $Q_\phi$ the solutions found that are not dominated, and add to $Q_*$ those solutions that dominated at least one element from $Q_\phi$.

Step 5. Check the stop condition; if it is not met, go to Step 3.
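Step 4 of the template can be sketched as follows. This is only an illustration under several assumptions that the text does not fix: states are permutations stored as tuples, δ swaps two positions of a state, objectives are minimized, and `evaluate` is a user-supplied function returning the objective vector. The names `swap_neighbors`, `prune` and `explore` are hypothetical.

```python
import itertools
from typing import Callable, Iterator, Sequence, Set, Tuple

State = Tuple[int, ...]
Evaluate = Callable[[State], Tuple[float, ...]]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance, assuming every objective is minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def swap_neighbors(state: State) -> Iterator[State]:
    """Assumed form of delta: every state reachable by swapping two positions."""
    for i, j in itertools.combinations(range(len(state)), 2):
        neighbor = list(state)
        neighbor[i], neighbor[j] = neighbor[j], neighbor[i]
        yield tuple(neighbor)


def prune(states: Set[State], evaluate: Evaluate) -> Set[State]:
    """Drop the states whose solutions are dominated inside the same set."""
    return {s for s in states
            if not any(dominates(evaluate(r), evaluate(s)) for r in states)}


def explore(q: State, q_phi: Set[State], q_star: Set[State],
            evaluate: Evaluate) -> Tuple[Set[State], Set[State]]:
    """One pass of Step 4 over the neighborhood of q."""
    q_phi, q_star = set(q_phi), set(q_star)
    for s in swap_neighbors(q):
        fs = evaluate(s)
        if any(dominates(fs, evaluate(r)) for r in q_phi):
            q_star.add(s)   # s dominated at least one element of Q_phi
        if not any(dominates(evaluate(r), fs) for r in q_phi):
            q_phi.add(s)    # s is not dominated by any element of Q_phi
    return prune(q_phi, evaluate), prune(q_star, evaluate)


def toy_objectives(state: State) -> Tuple[float, ...]:
    """Made-up objective vector, used only to exercise the sketch."""
    return (state[0], state[2])


start = (2, 1, 0)
q_phi, q_star = explore(start, {start}, set(), toy_objectives)
print(sorted(q_phi), sorted(q_star))
```

With the toy objectives, the neighbor `(1, 2, 0)` dominates the starting state and therefore enters both sets, while `(0, 1, 2)` is merely nondominated and enters only the first one.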

3 EVOLUTIONARY METAHEURISTIC OF DETERMINISTIC SWAPPING

EMODS, Evolutionary Metaheuristic of Deterministic Swapping, is a framework for the Multiobjective Optimization of Combinatorial Problems. It is based on the MODS template, so its steps are the same: create the Initial Solutions, improve the Solutions (optional) and execute the Core Algorithm.

As in MODS, $Q_0$ holds the Initial Solutions (states) of the Combinatorial Problem. Each state has a solution vector. The states are created using the well-known Nearest Neighbor Heuristic. To apply it, a new function is created based on the Weighted Sum Metric, in which a weight is assigned to each Objective Function of the problem (a classic approach to multiobjective optimization (Pretorius and Helberg, 2004)), as follows:

$$F(X) = \sum_{i=1}^{n} \alpha_i \cdot f_i(X) \tag{6}$$

Subject to

$$\sum_{i=1}^{n} \alpha_i = 1 \tag{7}$$
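Equations (6) and (7) describe a convex combination of the objectives with weights that sum to one. A minimal sketch of how such weights could drive a nearest neighbor construction is given below. It assumes that each objective is given as a square cost matrix over the same cities; the function names, the `start` parameter and the tie-breaking by city index are illustrative choices, not details stated in the paper.

```python
import random
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def random_weights(n: int, rng: random.Random) -> List[float]:
    """Draw n positive weights and normalize them so they sum to 1, as in (7)."""
    raw = [1.0 - rng.random() for _ in range(n)]  # values in (0, 1]
    total = sum(raw)
    return [w / total for w in raw]


def weighted_cost(costs: Sequence[Matrix], weights: Sequence[float],
                  i: int, j: int) -> float:
    """Weighted sum (6) of the per-objective costs of going from city i to j."""
    return sum(w * c[i][j] for w, c in zip(weights, costs))


def nearest_neighbor_tour(costs: Sequence[Matrix], weights: Sequence[float],
                          start: int = 0) -> List[int]:
    """Greedy tour: always move to the cheapest unvisited city."""
    n = len(costs[0])
    tour = [start]
    unvisited = set(range(n)) - {start}
    while unvisited:
        current = tour[-1]
        nxt = min(unvisited,
                  key=lambda j: (weighted_cost(costs, weights, current, j), j))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


# Two made-up symmetric cost matrices over four cities.
c1 = [[0, 1, 4, 3], [1, 0, 2, 5], [4, 2, 0, 1], [3, 5, 1, 0]]
c2 = [[0, 3, 2, 3], [3, 0, 2, 1], [2, 2, 0, 3], [3, 1, 3, 0]]

tour_first = nearest_neighbor_tour([c1, c2], [1.0, 0.0])
tour_second = nearest_neighbor_tour([c1, c2], [0.0, 1.0])
weights = random_weights(2, random.Random(0))
print(tour_first, tour_second, weights)
```

Different weight vectors generally yield different tours (here `[0, 1, 2, 3]` versus `[0, 2, 1, 3]`), which is what makes repeated random weightings a plausible way to obtain a varied set of initial solutions.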

In Equations (6) and (7), n is the number of objective functions. The values of the weights ($\alpha_i$) are randomly assigned to each function. Once this step has been concluded, the Nearest Neighbor Heuristic is applied to (1), using the weighted function (6), to create the Initial Solutions. The Core Algorithm is defined as follows:

Step 1. Set θ as the maximum number of iterations, β as the maximum number of states selected in each iteration, ρ as the maximum number of perturbations per state, and $Q_\phi$ as $Q_0$.

Step 2. Selection. Randomly select a state $q \in Q_\phi$ or $q \in Q_*$.

Step 3. Mutation. Set N as the set of new solutions found by perturbing q. Add the new solutions to $Q_\phi$ and $Q_*$ according to the following equations, in which q denotes a new solution from N and $a \prec b$ means that a is dominated by b:

$$Q_\phi = Q_\phi \cup \{q\} \iff \nexists\, r \in Q_\phi \;/\; q \prec r \tag{8}$$

$$Q_* = Q_* \cup \{q\} \iff \exists\, r \in Q_\phi \;/\; r \prec q \tag{9}$$

Remove the states with dominated solutions from each set.

Step 4. Crossover. Randomly select states from $Q_\phi$ and $Q_*$. Generate a random value k and cross the solutions at the k-th position, as can be seen in Figure 2.

Step 5. Check the stop condition; if it is not met, return to the Selection step (Step 2).

One of the most important steps in the EMODS algorithm is Step 4. There, the algorithm applies an Evolutionary Strategy based on the crossover step of Genetic Algorithms to avoid local optima, as can be seen in Figure 2. Because the crossover is not always made at the same point (the value of k is randomly generated for each state analyzed), the solutions found are diverse, which helps avoid local optima.

Figure 2: Crossover step from EMODS. Cross in the k-th position.
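A crossover at a random position k can be sketched as below. Since the figure itself is not reproduced here, the sketch makes its own assumptions: solutions are tours stored as lists of city indices, and each child keeps the first k cities of one parent and then takes the missing cities in the order in which they appear in the other parent, so that the child is always a valid tour. This repair rule and the names `crossover_at` and `random_crossover` are illustrative, not the authors' stated procedure.

```python
import random
from typing import List, Sequence, Tuple


def crossover_at(parent_a: Sequence[int], parent_b: Sequence[int],
                 k: int) -> List[int]:
    """Keep the first k cities of parent_a, then append the remaining
    cities in the order they appear in parent_b (assumed repair rule)."""
    head = list(parent_a[:k])
    seen = set(head)
    tail = [city for city in parent_b if city not in seen]
    return head + tail


def random_crossover(parent_a: Sequence[int], parent_b: Sequence[int],
                     rng: random.Random) -> Tuple[List[int], List[int], int]:
    """Draw k at random and build both children."""
    k = rng.randrange(1, len(parent_a))
    return (crossover_at(parent_a, parent_b, k),
            crossover_at(parent_b, parent_a, k),
            k)


a = [0, 1, 2, 3, 4]
b = [4, 3, 2, 1, 0]
child_ab = crossover_at(a, b, 2)
child_ba = crossover_at(b, a, 2)
child_1, child_2, k = random_crossover(a, b, random.Random(7))
print(child_ab, child_ba, k)
```

With k = 2 the children are `[0, 1, 4, 3, 2]` and `[4, 3, 0, 1, 2]`; drawing a fresh k for every pair of parents changes where the two tours are combined.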

4 EXPERIMENTAL STUDIES

4.1 Experimental Settings

4.1.1 Test Instances and Parameters

EMODS was tested using the Three-Objective Traveling Salesman Problem (3-TSP). Formally, TSP is defined as follows:

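$$\min \sum_{i=1}^{n} \sum_{j=1}^{n} C_{ij} \cdot X_{ij} \tag{10}$$

Subject to:

$$\sum_{j=1}^{n} X_{ij} = 1, \quad \forall i = 1, \ldots, n \tag{11}$$

$$\sum_{i=1}^{n} X_{ij} = 1, \quad \forall j = 1, \ldots, n \tag{12}$$

$$\sum_{i \in \kappa} \sum_{j \in \kappa} X_{ij} \leq |\kappa| - 1, \quad \forall \kappa \subset \{1, \ldots, n\} \tag{13}$$

$$X_{ij} \in \{0, 1\}, \quad \forall i, j \tag{14}$$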

Where $C_{ij}$ is the cost of the path $X_{ij}$ and κ is any nonempty proper subset of the cities 1, ..., n. Equation (10) is the objective function; the goal is to minimize the overall cost of the tour. Constraints (11), (12) and (14) enforce that each city is visited exactly once. Lastly, constraint (13) applies to every subset of cities and prevents subtours, that is, cycles that do not cover all the cities.

The test used well-known Three-Objective Traveling Salesman Problem (3-TSP) instances from TSPLIB (Heidelberg, ). The instances contain problems of 100 cities. Each city is represented as a point in space, so the distance is computed as the Euclidean distance between each pair of points. Each algorithm was run 10 times, and the best nondominated solutions were selected for each of them. The true solution was constructed from the best nondominated solutions of all the sets.
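The evaluation of a tour under three objectives can be sketched as follows. The sketch assumes that each objective has its own set of city coordinates (one point per city and per objective) and that a tour is a closed cycle over city indices; it uses plain, unrounded Euclidean distances, and the coordinates shown are made up rather than taken from the TSPLIB files.

```python
import math
from typing import List, Sequence, Tuple

Coordinates = Sequence[Tuple[float, float]]


def distance_matrix(points: Coordinates) -> List[List[float]]:
    """Pairwise Euclidean distances between the given points."""
    return [[math.dist(p, q) for q in points] for p in points]


def tour_length(tour: Sequence[int], dist: Sequence[Sequence[float]]) -> float:
    """Length of the closed tour, returning to the first city at the end."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]]
               for i in range(len(tour)))


def evaluate(tour: Sequence[int],
             matrices: Sequence[Sequence[Sequence[float]]]) -> Tuple[float, ...]:
    """Objective vector of a tour: one length per distance matrix."""
    return tuple(tour_length(tour, d) for d in matrices)


# Three hypothetical coordinate sets for the same four cities.
coords_1 = [(0, 0), (0, 3), (4, 3), (4, 0)]
coords_2 = [(0, 0), (1, 0), (1, 1), (0, 1)]
coords_3 = [(0, 0), (3, 4), (6, 0), (3, -4)]

matrices = [distance_matrix(c) for c in (coords_1, coords_2, coords_3)]
objectives = evaluate([0, 1, 2, 3], matrices)
print(objectives)
```

For the tour `[0, 1, 2, 3]` the three objective values are 14, 4 and 20, so a single tour maps to one point in the three-dimensional objective space.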

4.1.2 Algorithms in Comparison

EMODS was compared against four MOSA algorithms: Multiobjective Simulated Annealing (CMOSA), Ulungu Multiobjective Simulated Annealing (UMOSA), Search Multiobjective Simulated Annealing (SMOSA) and Evolutionary Multiobjective Simulated Annealing (EMOSA). The best-performing of them is Evolutionary Multiobjective Simulated Annealing, as can be seen in (Li and Landa-Silva, 2008).

4.2 Experimental Results

4.2.1 Performance Metrics

There are metrics for measuring the quality of a set of optimal solutions and the performance of an algorithm (Jingyu et al., 2007). Most of them use two Pareto Fronts. The first one is $PF_{true}$, which refers to the real optimal solutions of a combinatorial problem. The second is $PF_{know}$, which represents the optimal solutions found by an algorithm.

Generation of Nondominated Vectors (GNDV). It measures the number of nondominated solutions generated by an algorithm.

$$GNDV = |PF_{know}| \tag{15}$$

A higher value for this metric is desired.

Generational Distance (GD). This metric measures the distance between $PF_{know}$ and $PF_{true}$. It allows determining the error rate of a set of solutions relative to the real solutions.

$$GD = \frac{1}{|PF_{know}|} \cdot \left( \sum_{i=1}^{|PF_{know}|} d_i \right)^{1/p} \tag{16}$$

Where $d_i$ is the smallest Euclidean distance between solution i of $PF_{know}$ and the solutions of $PF_{true}$, and p is the dimension of the combinatorial problem, that is, the number of objective functions.

Inverse Generational Distance (IGD). This is another distance measurement between $PF_{know}$ and $PF_{true}$:

$$IGD = \frac{1}{|PF_{true}|} \cdot \left( \sum_{i=1}^{|PF_{know}|} d_i \right) \tag{17}$$

Where $d_i$ is the smallest Euclidean distance between solution i of $PF_{know}$ and the solutions of $PF_{true}$.
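The two distance metrics can be computed with a few lines of code. The sketch below follows Equations (16) and (17) exactly as they are written in this section, with fronts given as lists of objective vectors; the function names and the sample fronts are illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def nearest_distance(point: Point, front: Sequence[Point]) -> float:
    """Smallest Euclidean distance from point to any member of front."""
    return min(math.dist(point, other) for other in front)


def generational_distance(pf_know: Sequence[Point],
                          pf_true: Sequence[Point]) -> float:
    """GD as in (16): (sum of d_i) ** (1/p), divided by |PF_know|."""
    p = len(pf_know[0])  # number of objective functions
    total = sum(nearest_distance(s, pf_true) for s in pf_know)
    return (total ** (1.0 / p)) / len(pf_know)


def inverse_generational_distance(pf_know: Sequence[Point],
                                  pf_true: Sequence[Point]) -> float:
    """IGD as in (17): sum of d_i over PF_know, divided by |PF_true|."""
    total = sum(nearest_distance(s, pf_true) for s in pf_know)
    return total / len(pf_true)


# Made-up three-objective fronts.
pf_true = [(0.0, 0.0, 0.0), (10.0, 10.0, 10.0)]
pf_know = [(0.0, 0.0, 8.0), (10.0, 10.0, 10.0)]

gd = generational_distance(pf_know, pf_true)
igd = inverse_generational_distance(pf_know, pf_true)
print(gd, igd)
```

In this example the nearest distances are 8 and 0, so the sum is 8; with p = 3 and two solutions in each front, GD is the cube root of 8 divided by 2 and IGD is 8 divided by 2.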

Spacing (S). It measures the range variance of neighboring solutions in $PF_{know}$:

$$S = \frac{1}{(|PF_{know}| - 1)^2} \cdot \left( \sum_{i=1}^{|PF_{know}|} (\bar{d} - d_i)^2 \right)^{1/p} \tag{18}$$

Where $d_i$ is the smallest Euclidean distance between solution i of $PF_{know}$ and the rest of the solutions of $PF_{know}$, $\bar{d}$ is the mean of all $d_i$, and p is the dimension of the combinatorial problem.

A value closer to 0 for this metric is desired. A value of 0 means that all the solutions are equidistant.

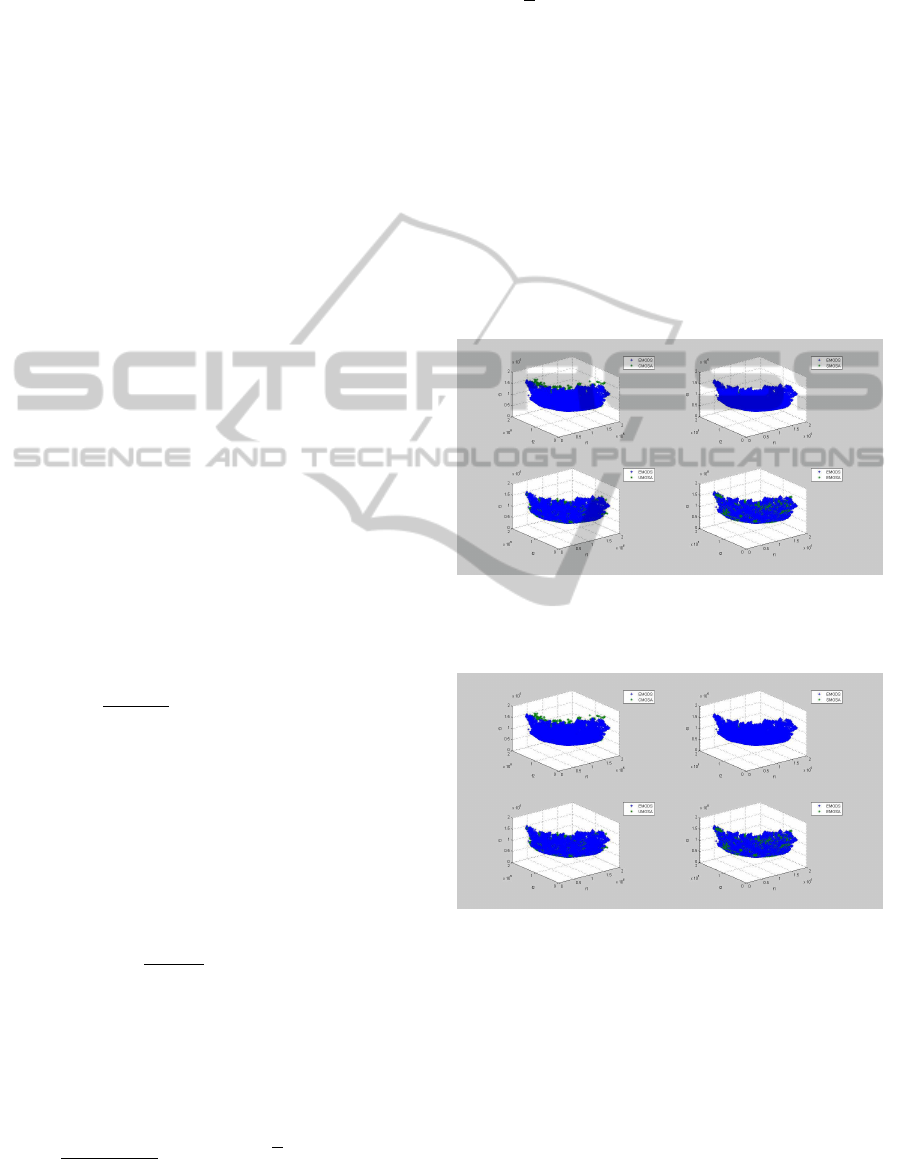

4.2.2 Results Analysis

Figures 3 and 4 show a graphical comparison between the EMODS Pareto Front and the Pareto Fronts of the compared algorithms for the instances KROABC100 and KROBCD100, respectively. In addition, Tables 1 and 2 report the performance of the algorithms on each of these instances, respectively.

Figure 3: Graphical comparison between EMODS Pareto

Front and the rest of Algorithms Pareto Fronts for the

KROABC100 instance.

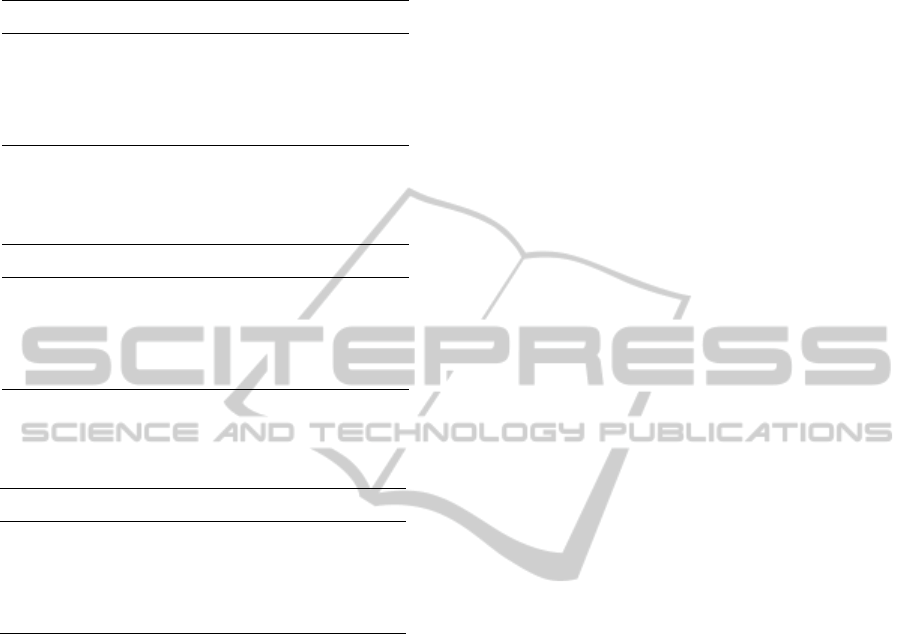

Figure 4: Graphical comparison between EMODS Pareto

Front and the rest of Algorithms Pareto Fronts for the

KROBCD100 instance.

In the first instance, as can be seen in Figure 3, the EMODS solutions dominated those of the compared algorithms most of the time. This is corroborated by Table 1, where EMODS generated 34077 solutions in its Pareto Front with the lowest GD, only 0.05 (5%). In the second case, EMODS again had the best performance, as can be seen in Figure 4 and Table 2. EMODS generated 34824 solutions in its Pareto Front, with a distance from the Real Pareto Front of only 0.04 (4%).

Table 1: Algorithm performance on the KROABC100 instance, measured with multi-objective optimization metrics.

| Algorithm | GNDV | SPACING | GD | IGD |
|---|---|---|---|---|
| SMOSA | 1095 | 0.0599588 | 26.5312636 | 36172.0838 |
| CMOSA | 1817 | 0.04035959 | 14.3588074 | 29172.6591 |
| UMOSA | 2564 | 0.03498623 | 3.4396281 | 3333.40773 |
| EMOSA | 3194 | 0.03144919 | 2.38276567 | 2482.34369 |
| EMODS | 34077 | 0.01365555 | 0.05108237 | 129.865643 |

Table 2: Algorithm performance on the KROBCD100 instance, measured with multi-objective optimization metrics.

| Algorithm | GNDV | SPACING | GD | IGD |
|---|---|---|---|---|
| SMOSA | 1097 | 0.05749539 | 25.2829385 | 33752.4241 |
| CMOSA | 1795 | 0.04647346 | 14.1155524 | 28168.342 |
| UMOSA | 2472 | 0.03581236 | 3.46956584 | 3227.63056 |
| EMOSA | 3143 | 0.03150265 | 2.3144672 | 2321.81408 |
| EMODS | 34824 | 0.01307551 | 0.04979096 | 131.915227 |

Table 3: Average algorithm performance on the KROABC100 and KROBCD100 instances, measured with multi-objective optimization metrics.

| Algorithm | GNDV | SPACING | GD | IGD |
|---|---|---|---|---|
| SMOSA | 1097 | 0.05749539 | 25.2829385 | 33752.4241 |
| CMOSA | 1795 | 0.04647346 | 14.1155524 | 28168.342 |
| UMOSA | 2472 | 0.03581236 | 3.46956584 | 3227.63056 |
| EMOSA | 3143 | 0.03150265 | 2.3144672 | 2321.81408 |
| EMODS | 34824 | 0.01307551 | 0.04979096 | 131.915227 |

Lastly, the metric values are averaged in Table 3. The superiority of the EMODS solutions can be seen in their error distance (GD), the lowest at 4.6%. In addition, in all cases the metric values obtained by EMODS are far from those of the other algorithms and show the best performance.

5 CONCLUSIONS

A novel metaheuristic named EMODS was proposed. EMODS is an Evolutionary Metaheuristic for solving Combinatorial Problems. It is based on Natural Selection Theory to avoid local optima. In addition, it is based on the MODS algorithm to represent the feasible solution space under Automata Theory, so a search direction is used to explore the feasible solution space (a Multiobjective Deterministic Finite Automata). The proposed algorithm was tested against metaheuristics from the specialized literature using well-known instances from TSPLIB. EMODS showed the best performance on all the metrics used, and in some cases the error rate of EMODS was 4%.

REFERENCES

Fung, R., Tang, J., and Zhang, J. (2009). A multi-depot vehicle routing problem with weight-related costs. In Computers Industrial Engineering, 2009. CIE 2009. International Conference on, pages 1028–1033.

Glover, F. and Laguna, M. (1997). Tabu Search. Kluwer Academic Publishers, Norwell, MA, USA.

Heidelberg, U. O. Tsplib - office research group discrete optimization - university of heidelberg. http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/.

Hu, B. and Raidl, G. (2008). Solving the railway traveling salesman problem via a transformation into the classical traveling salesman problem. In Hybrid Intelligent Systems, 2008. HIS '08. Eighth International Conference on, pages 73–77.

Jingyu, Y., Chongguo, L., Zhi, W., Lei, D., and Demin, S. (2007). Diversity metrics in multi-objective optimization: Review and perspective. In Integration Technology, 2007. ICIT 07. IEEE International Conference on, pages 553–557.

Li, H. and Landa-Silva, D. (2008). Evolutionary multi-objective simulated annealing with adaptive and competitive search direction. In Evolutionary Computation, 2008. CEC 2008. (IEEE World Congress on Computational Intelligence). IEEE Congress on, pages 3311–3318.

Lim, A. and Wang, F. (2005). Multi-depot vehicle routing problem: a one-stage approach. Automation Science and Engineering, IEEE Transactions on, 2(4):397–402.

Niño, E. D., Ardila, C., Donoso, Y., and Jabba, D. (2010). A novel algorithm based on deterministic finite automaton for solving the mono-objective symmetric traveling salesman problem. International Journal of Artificial Intelligence, 5(A10):101–108.

Niño, E. D., Ardila, C., Donoso, Y., Jabba, D., and Barrios, A. (2011). Mods: A novel metaheuristic of deterministic swapping for the multi objective optimization of combinatorials problems. Computer Technology and Application, 2(4):280–292.

Oberlin, P., Rathinam, S., and Darbha, S. (2009). A transformation for a heterogeneous, multiple depot, multiple traveling salesman problem. In American Control Conference, 2009. ACC '09., pages 1292–1297.

Pretorius, W. and Helberg, A. (2004). Application of an adapted evaluation process using numerical & qualitative weighted sum techniques. In AFRICON, 2004. 7th AFRICON Conference in Africa, volume 1, pages 367–372.

Sauer, J. and Coelho, L. (2008). Discrete differential evolution with local search to solve the traveling salesman problem: Fundamentals and case studies. In Cybernetic Intelligent Systems, 2008. CIS 2008. 7th IEEE International Conference on, pages 1–6.


Wang, S.-Q. and Xu, Z.-Y. (2009). Ant colony algorithm approach for solving traveling salesman with multi-agent. In Information Engineering, 2009. ICIE '09. WASE International Conference on, volume 1, pages 381–384.

Wang, Y. and Lang, M. (2008). Study on the model and tabu search algorithm for delivery and pickup vehicle routing problem with time windows. In Service Operations and Logistics, and Informatics, 2008. IEEE/SOLI 2008. IEEE International Conference on, volume 1, pages 1464–1469.

Yong-fa, Q. and Ming-yang, Z. (2004). Research on a new multiobjective combinatorial optimization algorithm. In Robotics and Biomimetics, 2004. ROBIO 2004. IEEE International Conference on, pages 187–191.
