USER INDEPENDENT SYSTEM FOR RECOGNITION OF HAND POSTURES USED IN SIGN LANGUAGE

Dahmani Djamila, Benchikh Soumia and Slimane Larabi

LRIA, Computer Science Department, University of Science and Technology Houari-Boumedienne,

BP32 El-Alia, Algiers, Algeria

Keywords: Hand posture recognition, Moments, Shape, Sign language.

Abstract: A new signer-independent method for recognizing the hand postures of sign language alphabets is presented in this paper. We propose new geometric hand posture features derived from the convex hull enclosing the hand's shape. These features are combined with the discrete orthogonal Tchebichef moments and the Hu moments. The Tchebichef moments are applied to the external and internal edges of the hand's shape. Experiments based on two different hand posture data sets show that our method robustly recognizes hand postures independently of the person performing them. The system obtains good recognition rates and also performs well compared to other user-independent hand posture recognition systems.

1 INTRODUCTION

Sign language is the most important means of communication in the deaf community. The goal of sign language recognition is to automatically transcribe sign language gestures into meaningful text or speech. A sign language is a collection of gestures, movements, postures and facial expressions corresponding to letters and words in natural languages. Research in automatic Sign Language Recognition (SLR) began about twenty years ago, particularly for American (Starner and Pentland, 1996) and Australian (Kadous, 1996) sign languages. Since then, many systems have been developed for different sign languages, including Arabic (Al-Jarrah and Halawani, 2001), French (Aran et al., 2009) and German (Dreuw et al., 2008) sign languages.

Sign language recognition (SLR) can be classified into isolated and continuous SLR, and each can be further divided into signer-dependent and signer-independent systems. SLR systems can also be divided into two major classes. The first class relies on electromechanical devices that measure the different gesture parameters; such systems are called glove-based systems. They have the disadvantage of being complicated and less natural. The second class exploits machine vision and image processing techniques to create vision-based hand gesture and posture recognition systems. A variety of methods and algorithms have been used to solve the SLR problem, including distance classifiers, template matching, conditional random fields (CRF), dynamic time warping (DTW), Bayesian networks, neural networks, fuzzy neural networks, hidden Markov models, geometric moments, the Discrete Cosine Transform (DCT) and size functions.

However, the accuracy of most hand posture recognition methods depends on the training data set. Most reported performance measures come from signer-dependent experiments, in which the system is tested on subjects who were also used to train it. This dependence stems from the anatomical particularities of each person. An ideal hand posture recognition system should achieve good recognition independently of the subjects in the training data set.

Consequently, several user-independent hand posture recognition systems have been developed. In these systems, the subjects in the training data differ from the subjects in the test data.

In (Al-Rousan and Hussain, 2001), the authors developed a system that recognizes isolated signs for 28 letters of the Arabic sign language (Arsl) alphabet, using colored gloves for data collection and an adaptive neuro-fuzzy inference system (ANFIS). A recognition rate of 88% was achieved. Later, in similar work, the recognition rate increased to 93.41% using polynomial networks (Assaleh and Al-Rousan, 2005). (Triesch and von der Malsburg, 2002) proposed a method based on elastic graph matching, with a recognition rate of 92.9% for classifying 10 hand postures. (Kelly et al., 2010) proposed a user-independent hand posture recognition system based on weighted eigenspace size functions; the method achieved a recognition rate of 93.5% on the Triesch data set.

Djamila D., Soumia B. and Larabi S. (2012). USER INDEPENDENT SYSTEM FOR RECOGNITION OF HAND POSTURES USED IN SIGN LANGUAGE. In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 581-584. DOI: 10.5220/0003785005810584. Copyright © SciTePress

This paper proposes a recognition system for static sign language alphabets. The system is user-independent and uses no gloves or other instrumented devices. We propose new hand posture features extracted from the convex hull enclosing the hand's shape. These features are combined with the discrete orthogonal Tchebichef moments and the geometric Hu moments. The experiments are based on two separate data sets, and the system obtains good recognition rates on both.

2 PROPOSED APPROACH

The proposed system is designed to recognize static

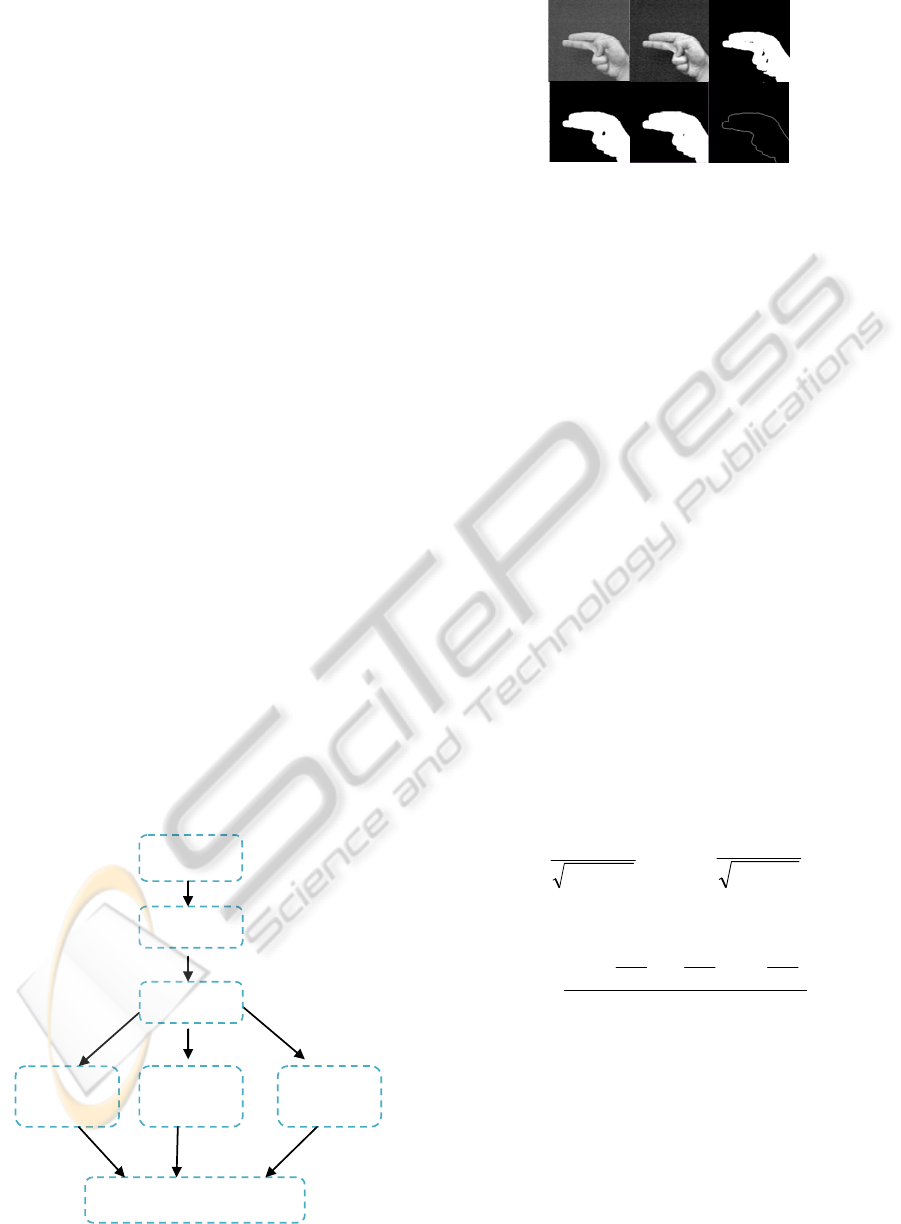

signs of sign language alphabets. The Figure1 shows

the block diagram of the proposed recognition

system.

2.1 Preprocessing

To extract the external contour of the hand, we follow three steps. First, the image is segmented using a global threshold filter. Then, morphological operations (erosion and dilation) are applied to the segmented image. Finally, the external hand contour is extracted from the region of the hand gesture, as shown in Figure 2.

The internal and external edges are detected using an adaptive threshold filter.

Figure 1: Proposed system (image acquisition, preprocessing, feature extraction, separate classification of the geometric features, the Hu moments and the Tchebichef moments, and parallel combination with the majority vote).

Figure 2: Example of contour extraction for the posture G from the Triesch data set.
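The preprocessing steps above can be sketched in NumPy as follows. This is a minimal sketch, not the authors' implementation: the threshold (the mean gray level when none is given), the 3×3 square structuring element, the `hand_is_bright` flag and the assumption that the morphological opening leaves only the hand region are our own choices, and all function names are hypothetical. The external contour is returned as the foreground pixels that have at least one background pixel among their 8 neighbours.

```python
import numpy as np

def _shifted(mask, r=1):
    """All (2r+1)^2 shifted copies of a zero-padded binary mask."""
    p = np.pad(mask, r, constant_values=False)
    h, w = mask.shape
    return [p[r + dy:r + dy + h, r + dx:r + dx + w]
            for dy in range(-r, r + 1) for dx in range(-r, r + 1)]

def erode(mask):
    # A pixel survives only if its whole 3x3 neighbourhood is foreground.
    return np.logical_and.reduce(_shifted(mask))

def dilate(mask):
    # A pixel is set if any pixel in its 3x3 neighbourhood is foreground.
    return np.logical_or.reduce(_shifted(mask))

def extract_external_contour(gray, threshold=None, hand_is_bright=True):
    """Global threshold, opening (erosion then dilation), boundary pixels."""
    gray = np.asarray(gray, dtype=float)
    t = gray.mean() if threshold is None else threshold  # assumed default
    mask = gray > t if hand_is_bright else gray < t
    mask = dilate(erode(mask))   # opening removes small noise blobs
    return mask & ~erode(mask)   # foreground pixels touching the background
```

For a dark hand on a light background (as in part of the Triesch data), one would call `extract_external_contour(img, hand_is_bright=False)`.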

2.2 Feature Extraction

In the proposed approach, three types of feature vectors are computed: the orthogonal Tchebichef moments, calculated from the internal and external edges of the hand; the geometric feature vector; and statistical features, namely the Hu moments, calculated from the external hand contour.

2.2.1 The Tchebichef Orthogonal Moments

Orthogonal moments are good signal descriptors: their low-order components are sufficient to provide discriminant power in pattern or object recognition.

The discrete Tchebichef moments $T_{pq}$ of order $(p+q)$ of an $N \times M$ discrete space image $f(x,y)$ are defined as in (Mukundan et al., 2001):

$$T_{pq} = \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} \tilde{t}_p(x)\, \tilde{t}_q(y)\, f(x,y) \tag{1}$$

where $\tilde{t}_p(x)$ and $\tilde{t}_q(y)$ are the normalized Tchebichef polynomials defined as

$$\tilde{t}_p(x) = \frac{t_p(x)}{\tilde{\rho}(p,M)}; \qquad \tilde{t}_q(y) = \frac{t_q(y)}{\tilde{\rho}(q,N)} \tag{2}$$

with

$$\tilde{\rho}(p,M) = \frac{M\left(1-\frac{1}{M^2}\right)\left(1-\frac{2^2}{M^2}\right)\cdots\left(1-\frac{p^2}{M^2}\right)}{2p+1} \tag{3}$$

The discrete Tchebichef polynomials $t_p(x)$ can be found in (Erdelyi et al., 1953).
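The moments of Eqs. (1)-(3) can be computed as in the NumPy sketch below. It assumes, following Mukundan et al. (2001), that $t_p(x)$ in Eq. (2) is the scaled Tchebichef polynomial (the classical polynomial divided by $M^p$), whose squared norm over $x = 0, \ldots, M-1$ is exactly $\tilde{\rho}(p,M)$ of Eq. (3); these polynomials are generated with their three-term recurrence. The image is indexed `f[x, y]` with $x < M$ and $y < N$. The maximum order and all function names are illustrative choices, not the authors' settings.

```python
import numpy as np

def scaled_tchebichef(n_points, max_order):
    """Scaled Tchebichef polynomials t_p(x) / n_points**p, p = 0..max_order.

    Row p holds the order-p polynomial evaluated at x = 0..n_points-1.
    """
    x = np.arange(n_points, dtype=float)
    t = np.zeros((max_order + 1, n_points))
    t[0] = 1.0
    if max_order >= 1:
        t[1] = (2 * x + 1 - n_points) / n_points
    for p in range(2, max_order + 1):
        t[p] = ((2 * p - 1) * t[1] * t[p - 1]
                - (p - 1) * (1 - (p - 1) ** 2 / n_points ** 2) * t[p - 2]) / p
    return t

def rho_tilde(p, n_points):
    """Squared norm of the order-p scaled polynomial, Eq. (3)."""
    k = np.arange(1, p + 1)
    return n_points * np.prod(1 - k ** 2 / n_points ** 2) / (2 * p + 1)

def tchebichef_moments(f, max_order):
    """Moments T[p, q], 0 <= p, q <= max_order, of an image indexed f[x, y]."""
    f = np.asarray(f, dtype=float)
    M, N = f.shape
    if max_order >= min(M, N):
        raise ValueError("max_order must be smaller than both image dimensions")
    rho_x = np.array([rho_tilde(p, M) for p in range(max_order + 1)])
    rho_y = np.array([rho_tilde(q, N) for q in range(max_order + 1)])
    # Normalized polynomials of Eq. (2): each scaled polynomial divided by rho~.
    tx = scaled_tchebichef(M, max_order) / rho_x[:, None]
    ty = scaled_tchebichef(N, max_order) / rho_y[:, None]
    # Double sum of Eq. (1): T[p, q] = sum_x sum_y tx[p, x] * ty[q, y] * f[x, y].
    return tx @ f @ ty.T
```

Because the basis is orthogonal, an image is recovered from its full set of moments as $f(x,y) = \sum_p \sum_q T_{pq}\, t_p(x)\, t_q(y)$, which makes a convenient correctness check; as a feature vector, only a few low orders would be kept.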

2.2.2 The Geometric Features

To describe the hand posture, we propose geometric features derived from the convex hull enclosing the hand shape. This feature set contains three features:

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods


1) The Relative Area

The relative area is the ratio between the area of the hand shape and the area of its convex hull. It is defined as:

$$\mathrm{Rel}_{\mathrm{Area}} = \frac{\mathrm{Area}_{\text{hand shape}}}{\mathrm{Area}_{\text{convex hull}}} \tag{4}$$

2) The Relative Minimum Distance

This feature is defined as:

$$\mathrm{Rel}_{D_{\min}} = \frac{D_{\min}}{L_g} \tag{5}$$

where $D_{\min}$ is the distance between the center of gravity of the hand and the point $P_{\min}$, the vertex of the convex hull closest to the center of gravity, and $L_g$ is the length of the bounding box enclosing the hand shape.

3) The Relative Maximum Distance

This feature is defined as:

$$\mathrm{Rel}_{D_{\max}} = \frac{D_{\max}}{L_g} \tag{6}$$

where $D_{\max}$ is the distance between the center of gravity of the hand and the point $P_{\max}$, the vertex of the convex hull furthest from the center of gravity.

Figure 3 illustrates these features.

Figure 3: Illustration of the geometric features (legend: the bounding box, the convex hull, the center of gravity of the hand, the point $P_{\min}$ and the point $P_{\max}$).
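Eqs. (4)-(6) can be sketched in pure Python, assuming the hand shape is available as a closed contour polygon (a list of (x, y) vertices, for example traced from the preprocessing output). In this sketch the convex hull is computed with Andrew's monotone chain algorithm, areas with the shoelace formula, and the center of gravity is taken as the area centroid of the contour polygon. The paper does not state which side of the bounding box $L_g$ denotes; we assume the longer side of the axis-aligned box. All function names are hypothetical.

```python
from math import hypot

def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area_centroid(poly):
    """Area (shoelace formula) and area centroid of a simple polygon."""
    a = cx = cy = 0.0
    for i in range(len(poly)):
        x0, y0 = poly[i]
        x1, y1 = poly[(i + 1) % len(poly)]
        c = x0 * y1 - x1 * y0
        a += c
        cx += (x0 + x1) * c
        cy += (y0 + y1) * c
    a *= 0.5  # signed area; the centroid formula uses the signed value
    return abs(a), (cx / (6 * a), cy / (6 * a))

def geometric_features(contour):
    """(Rel_Area, Rel_Dmin, Rel_Dmax) of Eqs. (4)-(6) for a hand contour."""
    hand_area, (gx, gy) = polygon_area_centroid(contour)
    hull = convex_hull(contour)
    hull_area, _ = polygon_area_centroid(hull)
    dists = [hypot(x - gx, y - gy) for x, y in hull]  # centroid to hull vertices
    xs = [p[0] for p in contour]
    ys = [p[1] for p in contour]
    lg = max(max(xs) - min(xs), max(ys) - min(ys))    # assumed: longer box side
    return hand_area / hull_area, min(dists) / lg, max(dists) / lg
```

For an L-shaped contour such as `[(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]`, the hand area is 7 and the hull area is 11.5, so the relative area is about 0.61; a convex shape always gives a relative area of 1.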

2.2.3 Hu Moments

Hu moments (Hu, 1962), which are a reformulation of the non-orthogonal centralized moments, are a set of translation-, scale- and rotation-invariant moments.
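The seven invariants can be computed from the normalized central moments $\eta_{pq} = \mu_{pq} / \mu_{00}^{1+(p+q)/2}$, as in the NumPy sketch below. The input is any 2-D array, for instance a binary image of the external hand contour; treating the column index as x and the row index as y is our own convention, and the function name is hypothetical. Translation and rotation invariance are exact for pixel shifts and 90° rotations, while scale invariance holds only approximately once an image is resampled.

```python
import numpy as np

def hu_moments(img):
    """The seven Hu invariants of a 2-D intensity or binary image."""
    img = np.asarray(img, dtype=float)
    y, x = np.mgrid[:img.shape[0], :img.shape[1]]
    m00 = img.sum()
    xc, yc = (x * img).sum() / m00, (y * img).sum() / m00

    def eta(p, q):
        # Central moment mu_pq normalized for scale.
        mu = ((x - xc) ** p * (y - yc) ** q * img).sum()
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2)
        + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2)
        - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])
```

The seventh invariant changes sign under reflection, so it can separate a posture from its mirror image while the other six cannot.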

3 DATA COLLECTION

We evaluate our techniques using two separate data

sets.

3.1 Arabic Sign Language Database

The data set consists of 30 hand signs from the

Arabic sign language (Arsl) alphabet.

The hand postures were performed by 24 different subjects against a uniform background and at different scales.

3.2 Jochen Triesch Static Hand Posture Database

The second data set is a benchmark database called the Jochen Triesch static hand posture database (Triesch and von der Malsburg, 2002). The proposed system was tested on the hand images with dark and light backgrounds.

4 EXPERIMENTAL RESULTS

Hand postures are classified by the parallel combination of the three classifiers with a majority vote. Table 1 presents the performance of the system on the Triesch data set, following the protocol of (Just et al., 2006): 8 subjects for the training set and 16 subjects for the test set, for light and dark backgrounds respectively.

Table 1: Classification performance on the Triesch database (light and dark backgrounds).

| Background | Recognition rate |
|---|---|
| Light background | 97.5% |
| Dark background | 88.6% |
| Global recognition rate | 93.1% |
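The parallel combination can be illustrated by a plain majority vote over the labels predicted by the three classifiers (geometric features, Hu moments, Tchebichef moments). The paper does not specify the individual classifiers or how ties are resolved; in this sketch a tie goes to the label that appears first, i.e. the one proposed by the classifier listed first, which is an assumption. The function name is hypothetical.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted by most classifiers.

    predictions: labels from the individual classifiers, in a fixed order.
    Ties are broken in favour of the label that appears first (assumption).
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions)
    best = max(counts.values())
    return next(label for label in predictions if counts[label] == best)
```

For example, `majority_vote(["B", "G", "G"])` returns `"G"`: two of the three classifiers agree, outvoting the first one.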

Classification of the Arsl alphabet achieved a recognition rate of 94.67%, using 4 subjects for the training set and 20 subjects for the test set. This result compares well with the best recognition rate previously reported for the Arabic sign language alphabet, 93.41% (Assaleh and Al-Rousan, 2005), which was obtained by a system that uses colored gloves.

5 CONCLUSIONS

In this paper, we presented a new signer-independent hand posture recognition system. It is a vision-based system that uses no gloves or other devices. The method combines geometric and statistical features. The proposed system performs well in classifying hand postures of the Arabic sign language alphabet, achieving a recognition rate of 94.67%. It also performs well on the benchmark Triesch hand posture database, with a global recognition rate of 93.1%.

REFERENCES

Al-Jarrah, O., Halawani, A., 2001. Recognition of gestures in Arabic sign language using neuro-fuzzy systems. Artificial Intelligence 133 (1-2), 117-138. ISSN 0004-3702.

Al-Rousan, M., Hussain, M., 2001. Automatic recognition of Arabic sign language finger spelling. International Journal of Computers and Their Applications (IJCA), Special Issue on Fuzzy Systems, 8 (2), 80-88.

Aran, O., Burger, T., Caplier, A., Akarun, L., 2009. A belief-based sequential fusion approach for fusing manual signs and non-manual signals. Pattern Recognition 42, 812-822.

Assaleh, K., Al-Rousan, M., 2005. Recognition of Arabic sign language alphabet using polynomial classifier. EURASIP Journal on Applied Signal Processing 13, 2136-2145.

Dreuw, P., Stein, D., Deselaers, T., Rybach, D., Zahedi, M., Ney, H., 2008. Spoken language processing techniques for sign language recognition and translation. Technology and Disability 20, Amsterdam.

Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F. G., 1953. Higher Transcendental Functions, Vol. 2. McGraw-Hill, New York, USA.

Hu, M.-K., 1962. Visual pattern recognition by moment invariants. IRE Transactions on Information Theory 8 (2), 179-187. ISSN 0018-9448.

Just, A., Rodriguez, Y., Marcel, S., 2006. Hand posture classification and recognition using the modified census transform. In: 7th International Conference on Automatic Face and Gesture Recognition (FGR), pp. 351-356. doi: 10.1109/FGR.2006.62.

Kadous, M. W., 1995. Recognition of Australian Sign Language using instrumented gloves. Bachelor's thesis, University of New South Wales.

Kelly, D., McDonald, J., Markham, C., 2010. A person independent system for recognition of hand postures used in sign language. Pattern Recognition Letters 31, 1359-1368.

Mukundan, R., Ong, S. H., Lee, P. A., 2001. Image analysis by Tchebichef moments. IEEE Transactions on Image Processing 10 (9), 1357-1364.

Starner, T., Pentland, A., 1996. Real-time American Sign Language recognition from video using hidden Markov models. MIT Media Laboratory Perceptual Computing Section Technical Report No. 375.

Triesch, J., von der Malsburg, C., 2002. Classification of hand postures against complex backgrounds using elastic graph matching. Image and Vision Computing 20 (13-14), 937-943.
