OPTICAL FLOW ESTIMATION WITH CONFIDENCE MEASURES

FOR SUPER-RESOLUTION BASED ON RECURSIVE ROBUST

TOTAL LEAST SQUARES

Tobias Schuchert and Fabian Oser

Fraunhofer IOSB, Fraunhoferstr. 1, Karlsruhe, Germany

Keywords:

Optical flow, Motion estimation, Super resolution, Confidence measures.

Abstract:

In this paper we propose a novel optical flow estimation method accompanied by confidence measures. Our

main goal is fast and highly accurate motion estimation in regions where information is available and a con-

fidence measure which identifies these regions. Therefore we extend the structure tensor method to robust

recursive total least squares (RRTLS) and run it on a GPU for real-time processing. Based on a coarse-to-fine

framework we propagate not only the motion estimates to finer scales but also the covariance matrices, which

may be used as confidence measures. Experiments on synthetic data show the benefits of our approach. We ap-

plied the RRTLS framework to a real-time super-resolution method for deforming objects which incorporates

the confidence measures and demonstrates that propagating the covariances through the pyramid improves

super-resolution results.

1 INTRODUCTION

Many methods for motion estimation between two or

more images have been proposed in the last years. In

2007 the Middlebury benchmark (Baker et al., 2011)

was proposed, where to date about 50 motion esti-

mation methods have been compared (including im-

plementations of the well known optical flow meth-

ods of (Horn and Schunck, 1981), and (Lucas and

Kanade, 1981)). The benchmark consists of several

synthetic and real world sequences and handles many

different use cases. Also different masks for disconti-

nuities and untextured regions are available and used

for comparing the algorithms for these special prob-

lems. For more details on optical flow methods we

refer to the surveys in (Barron et al., 1994) and (We-

ickert et al., 2006).

Nevertheless there exist applications where it is

important to know, where an estimation is reliable and

where not. In other applications a confidence measure

may help to improve further processing steps. For ex-

ample (1) a precise estimation of unknown motions,

e.g. cell growth, may be heavily affected by a smooth-

ness constraint, as small important motions may be

over-smoothed or (2) estimation of super-resolution

images from image sequences based on motion esti-

mates may include the reliability of the motion esti-

mates to weight the different image and motion pa-

rameters.

In this paper we present a total least squares (TLS)

framework for optical flow estimation. In order to

cope with discontinuities and large motions we devel-

oped a robust recursive TLS in a coarse-to-fine frame-

work. We estimate motion and the reliability of these

motions on each pyramid step and propagate the es-

timation results and the confidence measures through

the different scales.

The Middlebury benchmark (Baker et al., 2011)

gives an overview of current state-of-the art motion

estimation techniques. Most of these techniques are

based on variational approaches which yield full flow

fields and high accuracy. Moreover there exist ef-

ficient parallel implementation frameworks for vari-

ational methods making them real-time efficient on

current GPUs

1

(Werlberger et al., 2009).

Local methods consider only neighbouring im-

age regions and are therefore even more suited for

parallelization. However, only few local methods

are present on the Middlebury benchmark website.

Mostly as reference methods and with unsatisfying

results, although they proved to yield high accuracy

(cmp. (Haussecker and Spies, 1999)). Recently (Senst

et al., 2011) proposed a local method for optical flow

estimation and feature tracking implemented on a

1

Graphics Processing Unit

463

Schuchert T. and Oser F. (2012).

OPTICAL FLOW ESTIMATION WITH CONFIDENCE MEASURES FOR SUPER-RESOLUTION BASED ON RECURSIVE ROBUST TOTAL LEAST

SQUARES.

In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 463-469

DOI: 10.5220/0003785904630469

Copyright

c

SciTePress

GPU. This method is based on ordinary least squares

and does not provide confidence measures.

To the best of our knowledge none of the methods

on the Middlebury website yields special reliability

measures for motion estimates. The publicly avail-

able version of (Werlberger et al., 2009) offers the

possibility to calculate a geometric reliability mea-

surement for each estimate. There the flow field is

compared in both directions for inconsistency and the

result is mapped to a probability distribution. Another

method is to compute the inverse of the variational

energy in a local region, to identify regions, where

the energy is still large (Bruhn and Weickert, 2006).

There exist a number of confidence measures for lo-

cal methods, e.g. spatial coherence or corner measure

(cmp. (Haussecker and Spies, 1999)). (Kondermann,

2009) compared different confidence measures for lo-

cal and global methods, but did not find a satisfy-

ing one. Therefore Kondermann proposed two confi-

dence measures based on motion statistics from sam-

ple data and a hypothesis test, which yield superior

results compared to the two methods mentioned be-

fore. These measures are applicable to all optical flow

fields (local/global) afterwards. However, these mea-

sures must be trained before and are therefore no al-

ternatives in our case as learning motion statistics is

expensive and training data must be available.

Based on the structure tensor approach (Foerst-

ner and Guelch, 1987) we developed a novel ro-

bust recursive total least squares framework, which

allows accurate motion estimation in structured re-

gions and computing the covariance matrix of each

estimate. This framework is related to the robust to-

tal least squares method presented by (Bab-Hadiashar

and Suter, 1998), but compared to their method we

(1) combine the structure tensor approach with a ro-

bust function and do not use LMSOD or LMedS for

outlier detection, which speeds up computation with-

out loss of accuracy, (2) use a coarse-to-fine frame-

work to handle large motions, and (3) use the covari-

ance matrix based on the approximation of (Nestares

et al., 2000) as a reliable confidence measure. There-

fore we propagate not only the motion estimates but

also the covariance matrices through the different

pyramid steps, similar to a Kalman filter approach

(cmp. (Simoncelli, 1999)). In experiments we demon-

strate the applicability of our approach.

In the first section of this paper the optical flow

estimation framework is presented. This includes

how the structure tensor approach is combined with

a robust implementation and how it is integrated in

a coarse-to-fine framework based on recursive total

least squares (TLS). Then in Sec. 3 computation and

distribution of covariance matrices on different scales

of the pyramid are shown. Experiments in Sec. 4 show

the accuracy of the optical flow estimator on synthetic

sinusoidal sequences and the Middlebury benchmark

and is compared to the method of (Werlberger et al.,

2009), one of the fastest global estimator, which is

still ranked high in the Middlebury benchmark. Sec-

tion 5 shows application of the new framework for

super-resolution. We show how the covariance is in-

corporated into the super-resolution framework im-

proving the results on real images. A summary and

an outlook on future work follows in Sec. 6.

2 ESTIMATION FRAMEWORK

We present an extended version of the well known

structure tensor method (Foerstner and Guelch, 1987)

for optical flow estimation. On the one hand we inte-

grate a robust function to handle outliers following

(Black and Anandan, 1996) and (Schuchert, 2010)

and on the other hand we include the renormaliza-

tion technique of (Kanatani, 1996) to avoid systematic

bias following (Chojnacki et al., 2001). This modified

structure tensor method is then embedded in a coarse-

to-fine strategy based on recursive total least squares

(cmp. (Boley et al., 1996)). In the following we ex-

plain these steps in more detail.

The structure tensor approach is based on the

brightness constancy constraint equation (BCCE)

g

x

u

x

+ g

y

u

y

+ g

t

= g

T

p = 0 (1)

with image gradients g = (g

x

,g

y

,g

t

)

T

, optical flow

u = (u

x

,u

y

)

T

and parameter vector p = (p

1

, p

2

, p

3

)

T

.

The parameter vector and the optical flow vector are

related by u = 1/p

3

(p

1

, p

2

)

T

. It is assumed that so-

lution vector

˜

p approximately solves all constraint

equations in the local neighbourhood Λ with size N

and therefore g

T

i

p with i ∈ [1,N] only approximately

equals 0. We get

g

T

i

p = e

i

∀i ∈

{

1,...,N

}

(2)

with errors e

i

which have to be minimized by the

sought solution

˜

p. We minimize e in a weighted 2-

norm

p = argmin kek

2

2

, with

kek

2

2

=

∑

N

i=1

p

T

g

i

w

i

g

T

i

p

p

T

p

=: p

T

Jp

(3)

where w

i

is the weight for the i-th constraint and

where matrix J is called structure tensor. In general

Gaussian weights are used to reduce the influence of

constraints far away from the center pixel. The addi-

tional constraint |p| = 1 is introduced to avoid the triv-

ial solution p = 0. An eigenvalue analysis yields the

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

464

minimum solution, i.e. the eigenvector to the smallest

eigenvalue of J.

In order to reduce the influence of outliers in the

solution we introduce a robust function ρ into the es-

timation

p = argmin kek

ρ

, with

kek

ρ

=

N

∑

i=1

ρ(e

i

,σ) =

N

∑

i=1

ρ

g

T

i

p,σ

(4)

with a variable parameter σ. As robust function we

choose the Lorentzian

ρ

Lor

(x,σ) = log

1 +

1

2

x

σ

2

. (5)

where σ is adapted following (Black and Anandan,

1996). We iteratively solve the structure tensor

method and update the weights according to (4).

(Chojnacki et al., 2001) presented a new deriva-

tion of the renormalization method of Kanatani. We

use this method in our robust total least squares

framework as follows. Starting from the standard TLS

estimation p

T LS

= p

0

we iteratively solve the general-

ized eigenvalue problem

M

p

k

ξ = λ N

p

k

ξ (6)

with p

k+1

= ξ

min

the smallest eigenvector to the

smallest eigenvalue λ

min

. The matrices are given by

M

p

k

=

N

∑

i=1

A

i

p

T

k

C

i

p

k

(7)

and

N

p

k

=

N

∑

i=1

C

i

p

T

k

C

i

p

k

, (8)

where A is the unsmoothed structure tensor, i.e. J with

w

i

= 1 ∀i ∈

{

1,...,N

}

, and C is the covariance matrix

of the image gradients. The iteration is stopped, when

|p

k

− p

k−1

| < ε where ε is a small fixed threshold. In

case of a translational motion model the covariance

matrix C

i

is constant in a neighbourhood, so the de-

nominator can be excluded from the sum, which sim-

plifies computation. For further details we refer to

(Chojnacki et al., 2001).

For estimation of large distances a coarse-to-fine

framework based on Gaussian pyramids is used. In

order to propagate the result through the scales we use

recursive TLS following (Boley et al., 1996). Then

the structure tensor on the current scale l is achieved

by

J

l

=

ˆ

J

l

+ β

ˇ

J

l−1

(9)

with recursive weighting factor β.

ˆ

J

l

is the standard

structure tensor of the current scale, but with warped

temporal gradients g

t

. These temporal gradients are

computed following (Simoncelli, 1999), i.e. the filters

are sheared in the direction of the flow. The temporal

derivatives g

t

then include the motion estimate from

the previous scale and therefore

ˇ

J

l−1

contains only

the 2× 2 spatial structure tensor of the previous scale.

The last row and column of

ˇ

J

l−1

are set to zeros.

3 COVARIANCE ESTIMATION

Following (Nestares et al., 2000) and (Schuchert

et al., 2010) we approximate the error covariance ma-

trices of the optical flow estimates by the inverse of

the Hessian, given by

H =

γ

σ

2

n

||p||

2

S −

1

||p||

2

p

T

Jp

I

(10)

where S is given by the 2 × 2 spatial structure tensor,

I is an identity matrix of the same size as S. The pa-

rameter γ is proportional to the signal to noise ratio.

We set this parameter for all our experiments to γ = 1.

The Covariance matrix is propagated through the

different scales analogue to the structure tensor using

the recursive technique proposed in (9).

4 EXPERIMENTS

We evaluated our algorithm (RRTLS) on synthetic

sinusoidal sequences and on the Middlebury bench-

mark (Baker et al., 2011). We used 3 × 3 Scharr

filters (Scharr, 2005) for spatial gradient estimation.

The image pyramid starts by sizes of 20 pixel on the

smaller side and we used a downsampling factor of

0.85 for all sequences. The recursive weighting factor

is set to β = 0.4 for both, the structure tensor and the

covariance matrix.

We compare our results with the Anisotropic-Huber-

L1 method proposed in (Werlberger et al., 2009). This

algorithm is among the best and fastest algorithms

on the Middlebury benchmark and is named AHL1

in the remainder of this paper. AHL1 is based on a

variational framework using a smoothness term in or-

der to estimate motion also in regions where no or

not much data is available. The method is public-

ity available and offers the possibility to calculate the

geometric confidence measure (cmp. Sec. 1). We use

the proposed high accuracy parameters from (Werl-

berger et al., 2009) and leave all other parameters in

the standard configuration for all sequences. We use

two frames for experiments with AHL1 and three im-

ages for RRTLS wherever possible. (Werlberger et al.,

2009) stated that the two frames version of their esti-

mator yields better results, on the Middlebury bench-

mark. However RRTLS produced better results, es-

OPTICAL FLOW ESTIMATION WITH CONFIDENCE MEASURES FOR SUPER-RESOLUTION BASED ON

RECURSIVE ROBUST TOTAL LEAST SQUARES

465

pecially more reliable covariance estimations, if three

frames are used.

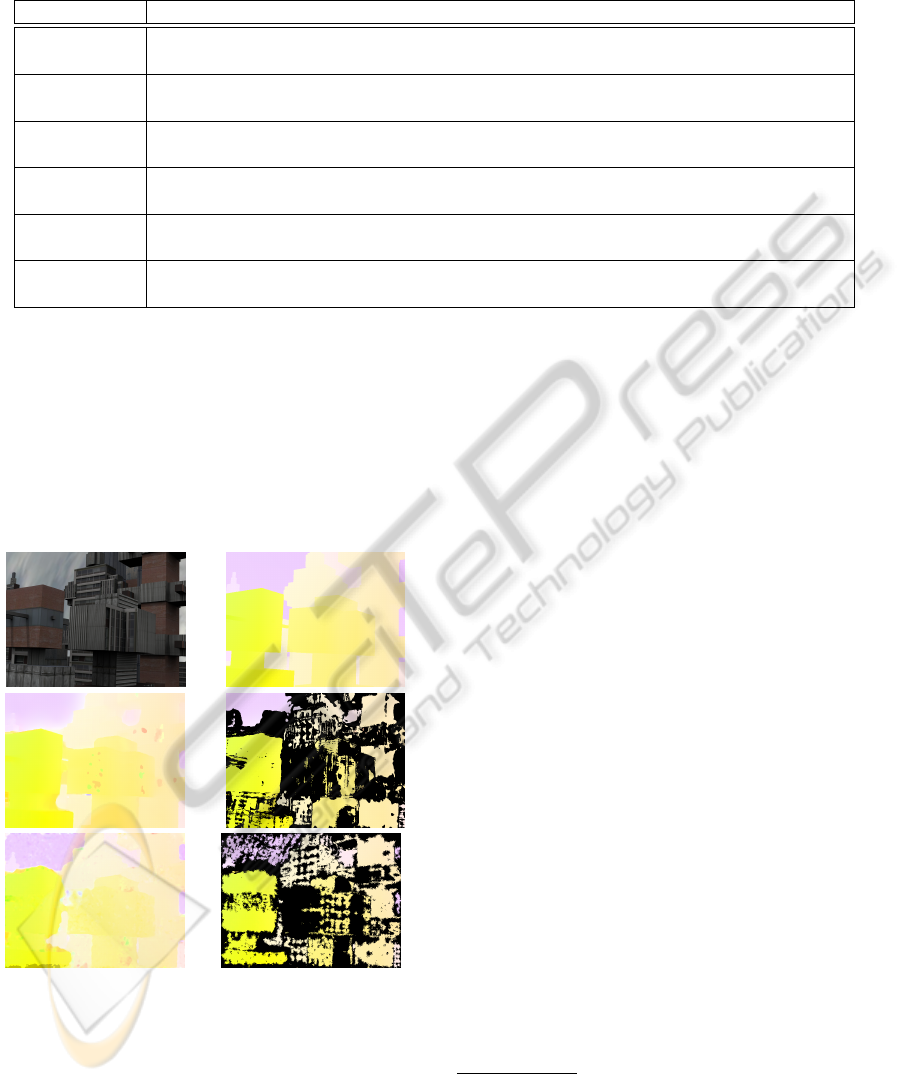

4.1 Sinusoidal Sequences

In order to evaluate the performance of our RRTLS

and its covariance estimation, we used two sinusoidal

patterns which move contrary to each other with a non

structured background and a non structured rectan-

gle in the middle (cmp. Fig. 1a). The colour coded

ground truth motion is displayed in Fig. 1b and the

corresponding colour code is shown in Fig. 1g. The

magnitude of the motion is below one pixel. Nev-

ertheless both algorithms are using the coarse-to-fine

framework. The estimates of AHL1 and of RRTLS are

shown in Fig. 1c and e, respectively.

The effect of the smoothness constraint in AHL1 can

be clearly seen as motion is also estimated in regions,

where neither structure nor motion is present. RRTLS

estimates no or only small motion in regions where

no motion is present, however at borders the estimates

are heavily degraded. Figure 1h shows the estimated

trace of the covariances from RRTLS coded with a

grey scale. White means low and black high confi-

dence.

The regions where estimates are highly unreliable are

clearly visible, i.e. white and grey. Also the AHL1

yields a geometric confidence measure. Figures 1d

and f show 50 percent of the best motion estimates

masked by the corresponding confidence measures of

AHL1 and RRTLS. The geometric confidence measure

of AHL1 masked some regions, where no structure is

available, but not all. Moreover It removes regions on

the patterns, where estimates should be reliable. Our

proposed method masks unreliable estimates much

better and keeps most of the regions on the patterns.

We also tested other confidence measures for

structure tensor methods, proposed in (Haussecker

and Spies, 1999), where the corner measures c

c

yields

best results. The corner measure c

c

is the difference

between the total coherence measure and the spatial

coherence measure and is computed from the eigen-

values of the structure tensor method.

Table 1 summarizes average endpoint errors

(cmp. (Baker et al., 2011)) for 50% masks by the trace

of the propagated covariance, the trace of the covari-

ance only on the finest grid, i.e. not propagated, and

the corner measure. Errors are calculated for different

noise standard deviations σ

n

. The propagated covari-

ance shows to be clearly the most reliable confidence

measure.

a b

c d

e f

g h

Figure 1: Sinusoidal pattern data with noise and estimated

flow and confidence measure. a: one image of the input se-

quence, b: is the true flow (colour coded), c: flow estimates

of AHL1 and d: masked flow estimates of AHL1 using their

confidence mask. e and f are flow estimates of RRTLSand

the masked results with the RRTLSconfidence measure. g

and h show the colour code and the estimated covariance

grey scale coded of our algorithm, respectively.

Table 1: Comparison of different confidence measures. Av-

erage endpoint error for the 50% most reliable estimates of

RRTLS on the sinusoidal pattern sequence.

Confidence measure σ

n

= 0 σ

n

= 1 σ

n

= 5

propagated covariance 0.008 0.024 0.075

covariance 0.012 0.179 0.212

corner measure (c

c

) 0.032 0.161 0.208

4.2 Middlebury Benchmark

We evaluated our algorithm on the well known Mid-

dlebury benchmark (Baker et al., 2011). We are not

interested in achieving overall best results on this

benchmark, but to have best results in regions where

the confidence of our measurement is high, i.e. where

the trace of the estimated covariance matrix is low.

Figure 2 shows the input image of the Urban3 se-

quence (Fig. 2a), the colour coded ground truth flow

(Fig. 2d) and the estimated flows of AHL1 (Fig. 2c)

and RRTLS(Fig. 2e). In Fig. 2d and f only the best

50% of the estimates according to their confidence

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

466

Table 2: Average Endpoint Error on the Middlebury data set using standard masks (disc and untext) and masks based on

confidence measures. Results in brackets are for the 2 frame RRTLS, because only 2 frames are available.

Algorithm Mask Dim Grove2 Grove3 Hyd RW Urban2 Urban3 Venus

AHL1 all 0.175 0.161 0.641 0.202 0.155 0.389 0.721 0.332

RRTLS (sym) all (0.375) 0.207 0.751 0.449 0.271 1.159 0.707 (0.931)

AHL1 disc 0.269 0.473 1.222 0.502 0.528 1.734 2.298 1.098

RRTLS (sym) disc (0.537) 0.562 1.494 1.053 0.758 2.358 2.797 (2.132)

AHL1 untext 0.200 0.138 0.631 0.129 0.137 0.402 0.778 0.350

RRTLS (sym) untext (0.438) 0.201 0.637 0.293 0.257 1.210 0.704 (1.215)

AHL1 95% 0.174 0.123 0.537 0.176 0.126 0.246 0.612 0.278

RRTLS (sym) 95% (0.352) 0.168 0.647 0.405 0.223 0.977 0.660 (0.855)

AHL1 75% 0.176 0.088 0.316 0.115 0.093 0.185 0.403 0.243

RRTLS (sym) 75% (0.262) 0.124 0.337 0.268 0.157 0.643 0.360 (0.599)

AHL1 50% 0.175 0.068 0.171 0.066 0.084 0.138 0.244 0.237

RRTLS (sym) 50% (0.209) 0.105 0.193 0.147 0.125 0.594 0.168 (0.339)

measures are displayed. The same effects as on

the sinusoidal sequence can be seen here. AHL1

over-smooths motion borders, whereas RRTLS yields

highly erroneous estimates in some parts of the im-

age, e.g. lower left border. Nevertheless the covari-

ance of these estimates is high and can be masked out,

whereas wrong estimates of AHL1 are not recognized,

e.g. the over-smoothing in the lower bottom left part

of the image.

a b

c d

e f

Figure 2: One sequence of the Middlebury benchmark. a:

one input image, b: true flow (colour coded), c and e: flow

estimates AHL1 and RRTLS respectively. d and f: masked

estimates using the corresponding confidence masks.

Table 2 shows results on the other Middlebury

benchmarks for both estimators. Additional to the

masks from Middlebury, i.e. disc (discontinuities) and

untext (untextured regions), the masks filter 90%,

75% and 50% of the best estimates according to the

confidence measure. It can be clearly seen, that AHL1

outperforms our method in almost all cases. Using

confidence masks, our method achieves on almost all

sequences considerable better results and the errors

get near or even below the results of AHL1. This

demonstrates the reliability of our covariance mea-

sure and that our estimator can compete with AHL1

in accuracy, if only 50% of the best estimates are con-

sidered. Moreover our method is more than 3 times

faster than AHL1 on images of size 640 × 480 pixel.

5 APPLICATION

A reliable confidence measure of the motion estima-

tion may be used to improve further processing steps.

Super-resolution from multiple images needs reliable

motion estimation. Most approaches assume con-

stant or affine motions over the whole image. These

assumptions do not hold for deformable surfaces,

i.e. faces. Moreover we have the problem, that not all

facial images in a sequences are achieved from front

view. Some super-resolution techniques therefore fil-

ter incoming images before computation in order to

reject images with large motions or achieved from dif-

ferent view points.

In order to cope with these problems, we integrated

the RRTLS-algorithm into a super-resolution frame-

work, based on the multi-frame super-resolution tech-

nique proposed by (Farsiu et al., 2003). There atmo-

spheric blur is neglected and a blur-warp-model

2

is

2

Farsiu starts with a warp-blur-model but translates it into a

blur-warp-model because of a translational motion assumption.

This is not the case for deformable surfaces like faces. However

(Wang and Qi, 2004) showed, that when motion is estimated on

low-resolution images, the warp-blur-model has a systematic error

and the blur-warp-model may be used.

OPTICAL FLOW ESTIMATION WITH CONFIDENCE MEASURES FOR SUPER-RESOLUTION BASED ON

RECURSIVE ROBUST TOTAL LEAST SQUARES

467

used

y

k

= DF

k

Hx + v

k

k ∈ [1, N] , (11)

where x is the high resolution image of size

[rM

1

× rM

2

], y

k

are the k low resolution images

of size [M

1

× M

2

] and v

k

is the additive system-

atic noise. The Blurring operator H is the Point-

Spread-Function of the camera (here a 5x5 Gaus-

sian filter), the warping-operator F

k

is modelled by a

r

2

M

1

M

2

× r

2

M

1

M

2

matrix and the downsampling-

factor D by a

M

1

M

2

× r

2

M

1

M

2

matrix. Using a L

P

-

norm we have to find the minimum of

ˆ

x = argmin

x

"

N

∑

k=1

kDF

k

Hx − y

k

k

p

p

#

. (12)

Farsiu estimates the minimum solution in two steps.

First the low-pass filtered high resolution image

z = Hx is determined by data fusion of the low-

resolution images based on motion estimates. The

low-resolution images are registered on the high reso-

lution grid and then filtered by a median for each high

resolution pixel. In the second step the image z is de-

blurred. We use the estimated covariance of RRTLS in

both steps. In step one the data is fused based on the

estimated motion. The median filter is realized as a

weighted median based on the trace of the covariance

estimates. In the second step the trace of the covari-

ance also influences the deblurring of the high resolu-

tion image when the single high resolution pixels are

weighted. For further details on the super-resolution

algorithm we refer to (Farsiu et al., 2003).

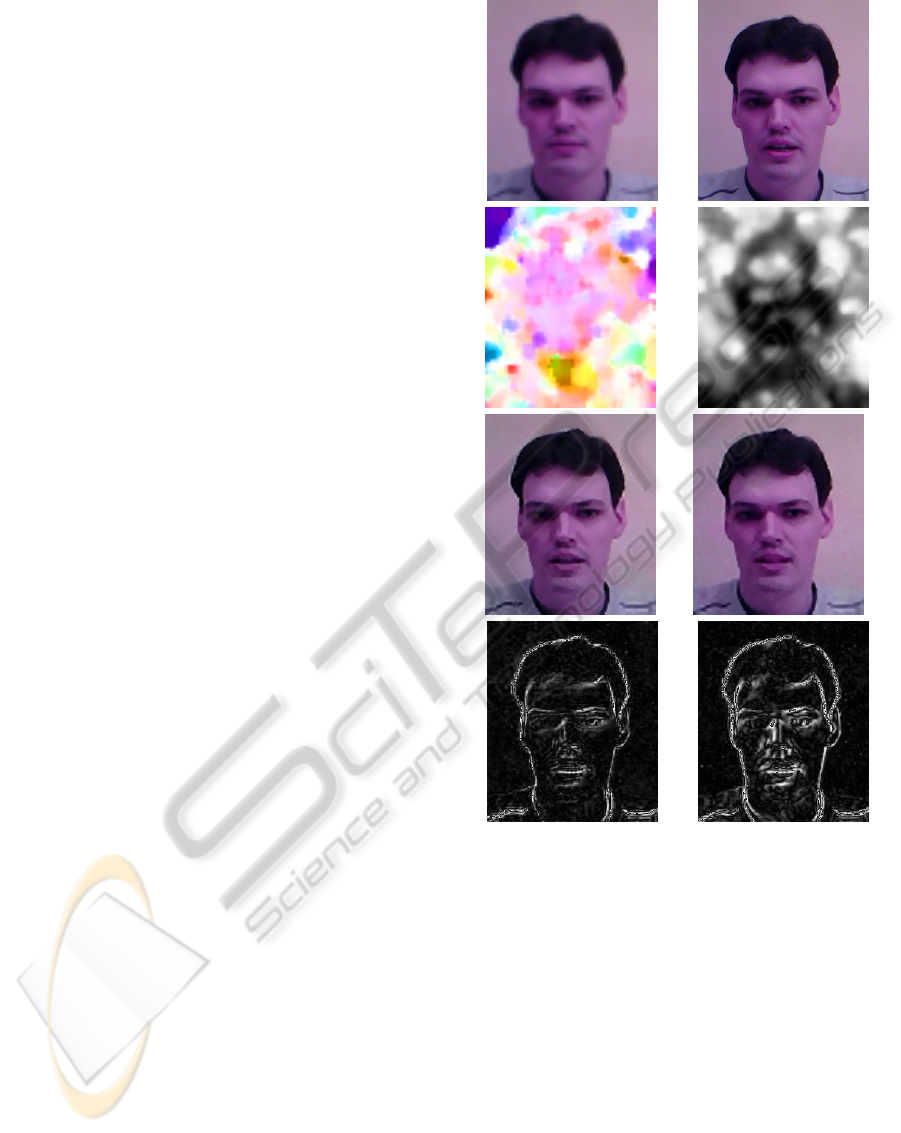

Figure 3 shows estimation results for a se-

quence of facial images. The low resolution images

(e.g. Fig. 3a) are computed from the high resolution

images (Fig. 3b) by downsampling, smoothing and

addition of noise. The sequence consists of 9 facial

images with small head movements and motion of

the lips (speaking) and 58 images showing parts of

the head or background only. All low-resolution im-

ages are referenced on the low-resolution image of

Fig. 3e. The estimated flow for one low resolution

image with RRTLS is depicted in Fig. 3c and the trace

of the corresponding covariance estimates in Fig. 3d.

Again white defines high and black low covariances.

Figures 3e and f show the estimated high resolution

images with and without using the covariance infor-

mation. In Fig. 3g and h the difference error between

the estimated super-resolution images and the original

high resolution image is shown for both algorithms.

The difference error is scaled by a factor of 6. Differ-

ences appear mostly in regions where larger motions

are present, i.e. at the sides of the head and in the re-

gion around the mouth. Using covariance informa-

tion for super-resolution highly improves the estima-

tion (cmp. Fig. 3g).

a b

c d

e f

g h

Figure 3: Results for super-resolution. a: One low res-

olution input image, b: high resolution image, c: esti-

mated flow with RRTLS, d: estimated covariance grey

value coded, e and f: high resolution images estimated by

our algorithm with and without using the covariance, re-

spectively, and g and h: difference images between com-

puted super-resolution and high resolution image.

6 SUMMARY

In this paper we presented a novel method for motion

estimation with confidence measures implemented

on GPU. The method is based on robust total-least

squares, embedded in a coarse-to-fine framework us-

ing a recursive algorithm. We have shown that the

estimated covariance matrices which are propagated

through the whole pyramid are well suited as confi-

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

468

dence measure (e.g. the trace of the covariance ma-

trix). Experiments on synthetic sequences showed the

good performance of this approach.

We used the estimated motion and confidence mea-

sures to improve a super-resolution method. How-

ever, motion estimates are still not as accurate as best

estimators in the Middlebury benchmark. In future we

plan for a improvements for large motions, more com-

plex motion models, e.g. affine motion models, and

motions under changing illumination, e.g. by consid-

ering gradient information or estimation of illumina-

tion changes.

REFERENCES

Bab-Hadiashar, A. and Suter, D. (1998). Robust optic flow

computation. International Journal of Computer Vi-

sion, 29(1):59–77.

Baker, S., Scharstein, D., Lewis, J., Roth, S., Black, M.,

and Szeliski, R. (2011). A database and evaluation

methodology for optical flow. International Journal of

Computer Vision, pages 1–31. 10.1007/s11263-010-

0390-2.

Barron, J. L., Fleet, D. J., and Beauchemin, S. S. (1994).

Performance of optical flow techniques. Int. Journal

of Computer Vision, 12:43–77.

Black, M. and Anandan, P. (1996). The robust estimation of

multiple motions: parametric and piecewise-smooth

flow fields. In Computer Vision and Image Under-

standing, volume 63(1), pages 75–104.

Boley, D., Steinmetz, E., and Sutherland, K. (1996). Recur-

sive total least squares: An alternative to using the dis-

crete kalman filter in robot navigation. In Reasoning

with Uncertainty in Robotics, volume 1093 of Lecture

Notes in Computer Science, pages 221–234. Springer

Berlin / Heidelberg. 10.1007/BFb0013963.

Bruhn, A. and Weickert, J. (2006). A confidence measure

for variational optic flow methods. In Properties for

Incomplete data, pages 283–298. Springer.

Chojnacki, W., Brooks, M. J., and Hengel, A. V. D. (2001).

Rationalising the renormalisation method of kanatani.

Journal of Mathematical Imaging and Vision, 14:21–

38.

Farsiu, S., Robinson, D., Elad, M., and Milanfar, P. (2003).

Fast and robust multi-frame super-resolution. IEEE

Transactions on Image Processing, 13:1327–1344.

Foerstner, W. and Guelch, E. (1987). A fast operator for de-

tection and precise location of distinct points, corners

and centers of circular features. In Proceedings of the

ISPRS Intercommission Workshop on Fast Processing

of Photogrammetric Data, pages 281–305.

Haussecker, H. and Spies, H. (1999). Motion. In Handbook

of Computer Vision and Applications, volume 2, chap-

ter 13, pages 309–396. Academic Press, 1 edition.

Horn, B. and Schunck, B. (1981). Determining optical flow.

Artificial Intelligence, 17:185–204.

Kanatani, K. (1996). Statistical Optimization for Geometric

Computation: Theory and Practice. Elsevier.

Kondermann, C. (2009). Postprocessing and Restoration of

Optical Flows. PhD thesis, Heidelberg University.

Lucas, B. D. and Kanade, T. (1981). An iterative image

registration technique with an application to stereo vi-

sion. In Proceedings of the 7th international joint

conference on Artificial intelligence - Volume 2, pages

674–679, San Francisco, CA, USA.

Nestares, O., Fleet, D. J., and Heeger, D. J. (2000). Likeli-

hood functions and confidence bounds for Total Least

Squares estimation. In Proc. IEEE Conf. on Computer

Vision and Pattern Recognition (CVPR’2000).

Scharr, H. (2005). Optimal filters for extended optical flow.

In Complex Motion, 1. Int. Workshop, G

¨

unzburg, Oct.

2004, volume 3417 of Lecture Notes in Computer Sci-

ence, Berlin. Springer Verlag.

Schuchert, T. (2010). Plant leaf motion estimation using

a 5D affine optical flow model. PhD thesis, RWTH

Aachen University.

Schuchert, T., Aach, T., and Scharr, H. (2010). Range flow

in varying illumination: Algorithms and comparisons.

IEEE Transactions on Pattern Analysis and Machine

Intelligence, 32:1646–1658.

Senst, T., Eiselein, V., Evangelio, R. H., and Sikora, T.

(2011). Robust modified l2 local optical flow esti-

mation and feature tracking. In IEEE Workshop on

Motion and Video Computing, pages 685–690, Kona,

USA.

Simoncelli, E. P. (1999). Bayesian multi-scale differential

optical flow. In Handbook of Computer Vision and

Applications, volume 2, chapter 14, pages 397–422.

Academic Press, San Diego.

Wang, Z. and Qi, F. (2004). On ambiguities in super-

resolution modeling. Signal Processing Letters, IEEE,

11(8):678–681.

Weickert, J., Bruhn, A., Brox, T., and Papenberg, N. (2006).

A survey on variational optic flow methods for small

displacements. In Mathematical Models for Registra-

tion and Applications to Medical Imaging, volume 10

of Mathematics in Industry, pages 103–136. Springer

Berlin Heidelberg.

Werlberger, M., Trobin, W., Pock, T., Wedel, A., Cremers,

D., and Bischof, H. (2009). Anisotropic huber-l1 opti-

cal flow. In Proceedings of the British Machine Vision

Conference (BMVC), London, UK.

OPTICAL FLOW ESTIMATION WITH CONFIDENCE MEASURES FOR SUPER-RESOLUTION BASED ON

RECURSIVE ROBUST TOTAL LEAST SQUARES

469