GPU ACCELERATED REAL-TIME OBJECT DETECTION ON HIGH

RESOLUTION VIDEOS USING MODIFIED CENSUS TRANSFORM

Salih Cihan Tek and Muhittin G¨okmen

Department of Computer Engineering, Istanbul Technical University, Maslak, Istanbul, Turkey

Keywords:

Object Detection, Face Detection, GPU Acceleration, CUDA.

Abstract:

This paper presents a novel GPU accelerated object detection system using CUDA. Because of its detection

accuracy, speed and robustness to illumination variations, a boosting based approach with Modified Census

Transform features is used. Results are given on the face detection problem for evaluation. Results show that

even our single-GPU implementation can run in real-time on high resolution video streams without sacrific-

ing accuracy and outperforms the single-threaded and multi-threaded CPU implementations for resolutions

ranging from 640× 480 to 1920× 1080 by a factor of 12-18x and 4-6x, respectively.

1 INTRODUCTION

Real-time object detection has been a very active

topic of research in the last decade. The primary rea-

son of this interest on the subject is the number of

its possible real-world applications both in commer-

cial and non-commercial systems. Most of the com-

plicated applications like virtual reality, surveillance,

video conferencing and robotics require the system to

be able to run in real-time, making the speed of the

object detector as important as the accuracy.

Numerous algorithms have been developed that

can run in real-time provided that the input resolu-

tion is relatively small. Most of them are descendants

of the object detection algorithm proposed in (Viola

and Jones, 2004), which is based on scanning the im-

age with a sliding window at multiple scales and us-

ing a cascaded classifier on each location to obtain

the locations containing the searched object. One ex-

ample of these algorithms is (Fr¨oba and Ernst, 2004),

the algorithm this research is based on. Even though

these algorithms are fast, they are not fast enough to

run in real time on video streams having a high res-

olution like 1280 × 720 without sacrificing the accu-

racy or generalization ability of the system in ques-

tion. Considerable amount of effort has been made

to speed up these algorithms, but algorithmic modi-

fications by themselves are not enough to get drastic

speed improvements required.

Another approach that is becoming increasingly

common is to accelerate the algorithms using the

GPU. In (Sharma et al., 2009), the authors followed

this approach by implementing the Viola-Jones algo-

rithm using CUDA. They achieved a detection rate of

19 Frames Per Second (FPS) on a video stream of res-

olution 1280 × 960 on a GTX 285 GPU. Although

there is an important increase in speed, having %81

accuracy and 16 false positives on the CMU test set

shows that a sacrifice has been made in the accuracy.

In (Hefenbrock et al., 2010), a multi-GPU implemen-

tation of the Viola-Jones algorithm is presented that

runs at 15.2 FPS at 640× 480 resolution on 4 Tesla

GPUs. Another CUDA accelerated Viola-Jones algo-

rithm given in (Obukhov, 2004) performs at 14 and 8

FPS on a GTX 480 for resolutions 1280 × 720 and

1920 × 1080, respectively. The scaling factors are

limited to be integers, which means that the detec-

tor will not be able to detect small faces accurately.

In (Harvey, 2009), the author achieved 2.8 FPS on a

single GTX 280 GPU and 4.3 FPS on a dual GTX

295 GPU on VGA image sizes with another CUDA

implementation of the Viola-Jones algorithm. (Her-

out et al., 2010) is the only GPU accelerated object

detection algorithm to date that uses a different fea-

ture and different classifier structure than (Viola and

Jones, 2004). The reported frame rate is 58 FPS for

1280 × 720 resolution on a GTX 280 GPU with no

information about the accuracy.

In this paper, we follow a similar approach, but

use a different algorithm. We present a GPU imple-

mentation of a boosting based object detection algo-

rithm that uses illumination-robust MCT (Modified

Census Transform) (Fr¨oba and Ernst, 2004) based

classifiers using CUDA. Our implementation per-

685

Cihan Tek S. and Gökmen M..

GPU ACCELERATED REAL-TIME OBJECT DETECTION ON HIGH RESOLUTION VIDEOS USING MODIFIED CENSUS TRANSFORM.

DOI: 10.5220/0003821606850688

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 685-688

ISBN: 978-989-8565-03-7

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

forms all major steps of the algorithm on the GPU and

hence requires minimum amount of memory transfers

between the host and the GPU. We evaluate the per-

formance of our implementation on face detection.

2 DETECTION ALGORITHM

MCT (Fr¨oba and Ernst, 2004) is a transform that

transforms the pixel values in a given neighborhood

N to a binary string. The binary values corresponding

to a pixel location is obtained by comparing the pixel

values in the neighborhood with their mean. Let N(x)

be a spatial neighborhood centered at the pixel loca-

tion x and I(x) be the mean of the pixel intensity val-

ues on this neighborhood. If

N

is the concatenation

operator, then the MCT can be defined as follows:

Γ(x) =

O

y∈N

ζ(I(x), I(y)) (1)

where the comparison function ζ(I(x), I(y)) is de-

fined as

ζ(I(x), I(y)) =

(

1, I(x) < I(y)

0, otherwise

(2)

In order to obtain memory efficient classifiers, we

use a neighborhood size of 3 × 3, which leads to 9

bit MCT values in the range of [0, 510]. These val-

ues correspond to indices γ of local structure kernels

that can encode structural information about the im-

age like edges, ridges, etc. in binary form.

A MCT based weak classifier h

x

consists of a co-

ordinate pair x = (x, y) relative to the origin of the

24×24 fixed size scanning window and a 511 element

lookup table. The lookup table contains a weight for

each γ such that 0 ≤ γ ≤ 510. The output a weak clas-

sifier gives on a window is determined by calculating

γ from the neighborhood centered at x and getting the

corresponding value from the lookup table.

The detector has a cascaded structure, where each

stage contains a strong classifier containing a number

of weak classifiers, each of which having a different

x position. H

j

(Γ), the strong classifier of the j. stage,

is defined as follows:

H

j

(Γ) =

∑

x∈W

′

h

x

(Γ(x)) (3)

where W

′

⊆ W is the set of unique positions used by

the weak classifiers h

x

in the strong classifier. A win-

dow W passes the j. stage if the sum of the responses

of the weak classifiers on that window is less than or

equal to the stage threshold T

j

. A window is classified

as the searched object if it passes all stages. The cas-

cade used in this work has 5 stages utilizing 10, 20,

40, 80, and 217 positions.

The detection process involves using the classifier

cascade at each location on the input image at multi-

ple scales. As in any other sliding window approach,

there are scanning parameters like horizontal and ver-

tical step sizes (∆x and ∆y), the scale factor (s) and the

starting scale. In this work, we choose ∆x = ∆y = 1,

s = 1.15 and use a starting scale of 1, which results in

a computationally demanding, fine-grained scanning

process and makes it possible to detect faces as small

as 24× 24 accurately.

3 CUDA IMPLEMENTATION

3.1 Preprocessing

In order for the detector to detect objects at various

sizes, the input image needs to be scanned in multiple

scales. In (Viola and Jones, 2004), this is performed

by scanning the same input image with up-scaled

classifiers. The approach we followed is to construct

an image pyramid and scan it using a fixed size win-

dow to prevent sparse global memory accesses that

would have been required by the former approach at

larger scales. The whole image pyramid is stored as

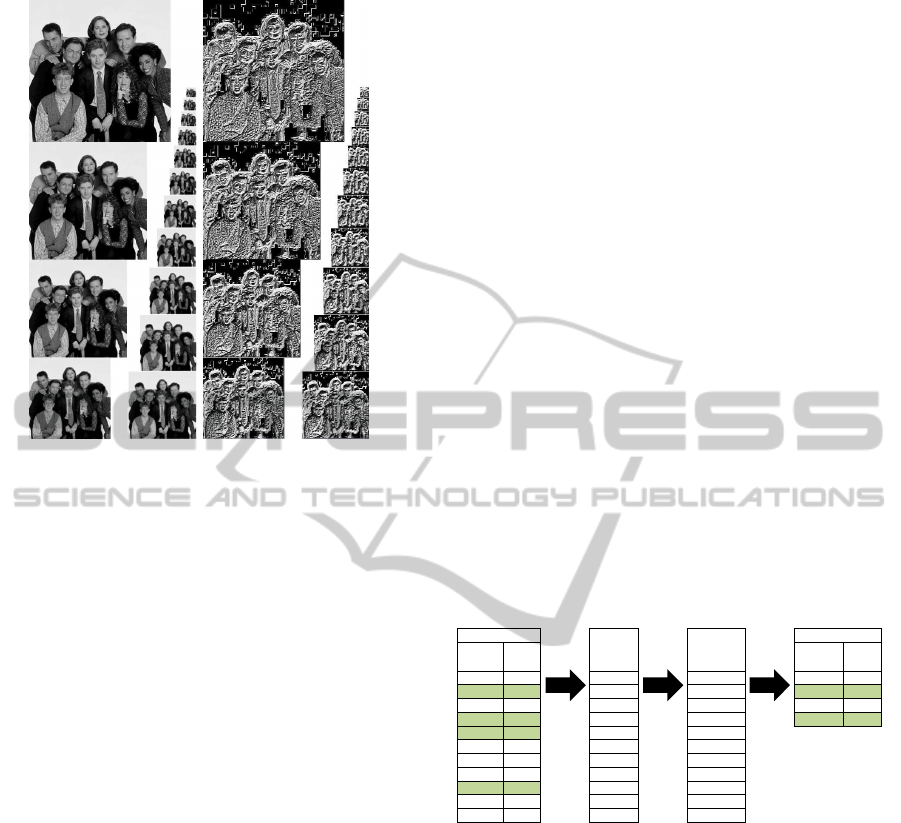

a single image as shown on the left in Figure 1. Even

though this layout has empty regions that increase the

number of windows scanned, it greatly simplifies the

scanning process described in the next section. The

regions having a constant gray level are easily elimi-

nated by the first stage of the cascade.

The image at each level of the pyramid is con-

structed by binding the image from the previous level

to a texture to take advantage of the hardware bilinear

interpolation capability of the GPU, and then down-

sampling it according to the scale factor.

Evaluation of a weak classifier becomes a simple

memory lookup when the MCT values are precom-

puted. To take advantage of this property, the whole

image pyramid is transformed with MCT beforehand

by launching a kernel with a block size of 16 × 16.

Each thread block pulls in a patch from the pyramid

in global memory to its dedicated shared memory be-

fore further processing. Each thread in a block pulls

in a single pixel from the global memory. Since the

MCT computation at the edges and corners requires

additional pixels, threads at the edges and corners

pull in additional pixels. After getting the data to

shared memory, threads in each block are synchro-

nized. Then each thread computes the value corre-

sponding to its location and writes the result to the

global memory. At this point, the original image pyra-

mid is no longer needed and hence can be discarded.

In the remaining sections, the term ”image pyramid”

VISAPP 2012 - International Conference on Computer Vision Theory and Applications

686

Figure 1: Image pyramid and the result of transforming it

with MCT.

refers to the one containing the result of the MCT,

which is shown on the right side of Figure 1.

During the scanning process, the cascade data will

be heavily accessed and therefore the speed of access-

ing them is crucial for the performance. Since the

size of the constant memory is too small store a cas-

cade containing more than 32 weak classifiers, it is

stored in the texture memory as a 2D floating point

texture. Each row starts with a single floating point

value containing the x and y coordinate of the weak

classifier in its upper and lower 2 bytes, respectively.

Rest of the row contains the weights of the lookup

table. This approach helps to reduce texture cache

misses by making the accesses to the feature weights

as spatially local as possible in the 2D space.

3.2 Object Detection

The detection process involves scanning the image

pyramid with a fixed size sliding window, classifying

the window each location and writing results back to

the device memory. We found that the best perfor-

mance overall is obtained when making each thread

classify a single window on the image pyramid. As

a natural consequence of using a cascaded classifier

structure, the number of stages that will be evalu-

ated in a window cannot be predetermined. Threads

that classify their windows as negative in early stages

have to wait idle until all other threads in the same

block finish their tasks, only after which the process-

ing of a new block can be started. This results in

under-utilization of GPU resources. In order to over-

come this problem, we split the cascade into 2 smaller

groups and use 2 different detection kernels for scan-

ning. Each kernel uses one of the groups obtained in

the previous step for classification. After performing

a number of experiments, we found that the best loca-

tion for the split is after the 2nd stage.

The first detection kernel is launched with a grid

having as many threads as the number of windows

that needs to be scanned and a block size of 16× 16.

When a window is classified as the searched object,

its coordinates are written to the corresponding loca-

tion in a preallocated 1D array of floats that resides

in global memory. Each element of this array can

store the x and y coordinates of a window in its up-

per and lower two bytes, respectively. Since detec-

tions are rare, this array contains sparse data after the

execution of the kernel finishes. Most of its elements

still contain dummy values that are set when it is first

allocated. To solve this problem, we use a stream

compaction algorithm that copies all elements having

meaningful values to the beginning of the array. Then

we launch another detection kernel with a 1D grid of

thread blocks, each one containing a 1D array of 256

threads. Each thread classifies a single window whose

coordinates are fetched from the array generated by

the first kernel. The whole process is shown in Figure

2 for the first 10 windows in the input image.

Window

Origin

Thread

Id

Window

Origin

Thread

Id

0,0

0

-

1,0

1,0

0

1,0

1

1,0

3,0

3,0

1

2,0

2

-

4,0

4,0

2

3,0

3

3,0

8,0

8,0

3

4,0

4

4,0

-

5,0

5

-

-

6,0

6

-

-

7,0

7

-

-

8,0

8

8,0

-

9,0

9

-

-

10,0

10

-

-

1st Kernel

Output

Array

Compacted

Output

Array

2nd Kernel

Figure 2: Steps of scanning with 2 kernels. Window loca-

tions that are detected as positive are highlighted.

3.3 Utilizing Multiple GPUs

We have extended our implementation to reduce the

detection times even further on devices that have mul-

tiple GPUs. We spawn M + 1 CPU threads, where M

is the number of GPUs in the system. The main thread

acquires frames from the video stream and does pre-

liminary computations. Each one of the other threads

performs kernel launches and memory transfers be-

tween the GPU assigned to it and the host. Each

GPU generates several levels of the image pyramid

and scans only those levels. The input image and the

cascade data are copied to the memories of each GPU

separately. Distributing different levels of the pyra-

mid to different GPUs makes it possible to achieve

GPU ACCELERATED REAL-TIME OBJECT DETECTION ON HIGH RESOLUTION VIDEOS USING MODIFIED

CENSUS TRANSFORM

687

nearly linear speed-up when the levels each GPU will

process is carefully determined.

4 EXPERIMENTAL RESULTS

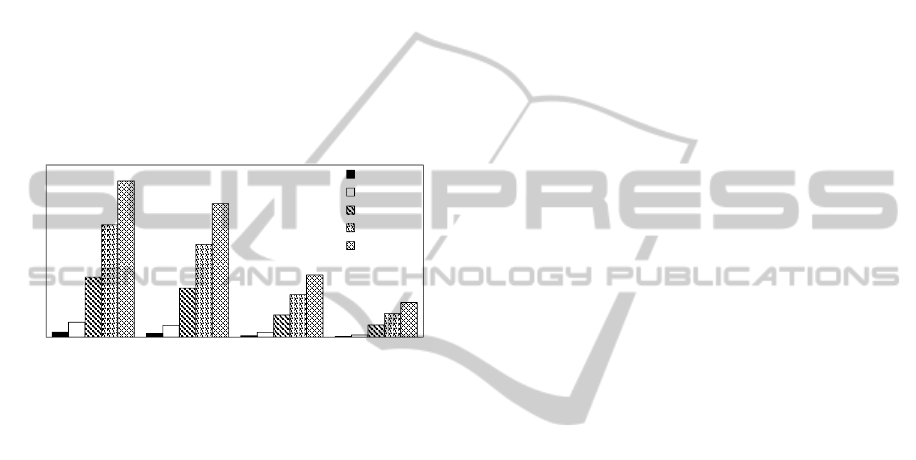

We have tested both the CPU and GPU implemen-

tations on the same desktop PC that contains a Intel

Core i5-2500k processor, 3 GTX 580 GPUs and 8GB

RAM. Figure 3 shows the average number of frames

processed per second by all implementations on video

streams of various sizes. These measurements in-

clude the time required to perform preprocessing and

memory copies between the host and the device. The

multi-threaded CPU implementation uses OpenMP to

distribute the processing to different cores.

14

11

4

2

42

34

14

6

171

140

64

35

320

264

121

68

445

380

177

99

0

50

100

150

200

250

300

350

400

450

640x480 720x540 1280x720 1920x1080

Frames Per Second

Resolution

CPU (1 thread)

CPU (4 threads)

1 GPU

2 GPUs

3 GPUs

Figure 3: Frame rates of GPU and CPU implementations on

various input resolutions.

As it can be seen from Figure 3, even the single-

GPU implementation outperformsthe single-threaded

and multi-threaded CPU implementations by a factor

of 12-18x and 4-6x, respectively. As the resolution

increases, so does the difference between the speed

of the GPU and CPU implementations, clearly show-

ing that a GPU, as a massively parallel processor, is

better suited to process high resolution videos than a

CPU. These results also show that, in contrast to the

CPU, the performance of the GPU based implementa-

tion scales nearly linearly with the number of GPUs.

We have also tested both our CPU and GPU im-

plementations on the CMU+MIT frontal face test set

(Rowley et al., 1998) to validate our results. The de-

tection rates for both implementations are measured

as %90.8 with 32 false positives, proving that our

GPU implementation generates the exact same results

with those of the CPU implementation.

5 CONCLUSIONS

We have presented an efficient GPU implementation

of a boosting based, real-time object detection sys-

tem utilizing MCT based classifiers using CUDA. We

have shown that even our single GPU implementa-

tion outperforms both the single-threaded and multi-

threaded CPU implementations runningon a high-end

CPU. We have pointed out that, because of their mas-

sively parallel architecture, GPUs are more suitable

for working with high resolution videos than CPUs.

Our implementation, with its ability to detect objects

in high resolution video streams in real-time, can eas-

ily be used in modern multimedia, entertainment and

surveillance systems.

ACKNOWLEDGEMENTS

This work is supported by ITU-BAP and TUBITAK

under the grants 34120 and 109E268, respectively.

REFERENCES

Fr¨oba, B. and Ernst, A. (2004). Face detection with the

modified census transform. In Proceedings of the

Sixth IEEE international conference on Automatic

face and gesture recognition, FGR’ 04, pages 91–96,

Washington, DC, USA. IEEE Computer Society.

Harvey, J. P. (2009). Gpu acceleration of object classifi-

cation algorithms using nvidia cuda. Master’s thesis,

Rochester Institute of Technology.

Hefenbrock, D., Oberg, J., Thanh, N. T. N., Kastner, R.,

and Baden, S. B. (2010). Accelerating viola-jones

face detection to fpga-level using gpus. In Proceed-

ings of the 2010 18th IEEE Annual International Sym-

posium on Field-Programmable Custom Computing

Machines, FCCM ’10, pages 11–18, Washington, DC,

USA. IEEE Computer Society.

Herout, A., Joth, R., Jurnek, R., Havel, J., Hradi, M., and

Zemk, P. (2010). Real-time object detection on cuda.

Journal of Real-Time Image Processing, 2010(1):1–

12.

Obukhov, A. (2004). Haar classifiers for object detection

with cuda. In Fernando, R., editor, GPU Gems: Pro-

gramming Techniques, Tips and Tricks for Real-Time

Graphics, chapter 33, pages 517–544. Addison Wes-

ley.

Rowley, H., Baluja, S., and Kanade, T. (1998). Neural

network-based face detection. IEEE Trans. Pattern

Anal. Mach. Intell., 20(1):23–38.

Sharma, B., Thota, R., Vydyanathan, N., and Kale, A.

(2009). Towards a robust, real-time face processing

system using cuda-enabled gpus. In High Perfor-

mance Computing (HiPC), 2009 International Con-

ference on, page 368377. IEEE.

Viola, P. and Jones, M. J. (2004). Robust real-time face

detection. Int. J. Comput. Vision, 57:137–154.

VISAPP 2012 - International Conference on Computer Vision Theory and Applications

688