PAVEMENT TEXTURE SEGMENTATION USING LBP AND GLCM FOR VISUALLY IMPAIRED PERSON

Sun-Hee Weon, Sung-Il Joo and Hyung-Il Choi

Department of Global Media, Soongsil University, Sangdo-dong, Seoul, South Korea

Keywords: Pavement Segmentation, LBP, GLCM, Texture Segmentation, Visually Impaired Person.

Abstract: This paper proposes a method for region segmentation and texture extraction that classifies pavement and roadway regions in images acquired by a camera worn by a visually impaired person during a walk. First, the road boundary line is detected with a line detection technique based on the Hough transform, which yields candidate regions for pavement and roadway. Second, texture features are extracted in the segmented candidate regions, and the pavement and roadway regions are separated by dividing the triangular model into three levels according to perspective. Rotation invariant LBP and GLCM are used to capture the difference in texture between pavements, which are laid with various precast pavers, and roadways, which are relatively monotonous. Experiments verified that the analytical performance of the proposed method at night did not deteriorate compared with the daytime results, and that region segmentation performed well on complex images containing various obstacles and pedestrians.

1 INTRODUCTION

The rapid development of IT technology is driving the contemporary transformation of wired networks into wireless networks. Concomitantly, research is actively underway to develop various services for mobile terminal devices adapted to the wireless network environment, such as PDAs, mobile phones and smart phones, and furthermore to develop wearable computing devices and algorithms driven by various forms of nanotechnology. Among these, vision-based systems are currently applied mainly to augmented reality applications on smart phones or to navigation, and numerous studies in these areas are ongoing amid heightened international interest. However, most of this research focuses only on systems for non-disabled users, while devices for assisting disabled persons receive little consideration at present. Image processing and computer vision technology has very high potential value as a basis for assistive devices for the visually impaired. It is an important technology for blind persons, who have hitherto relied on walking sticks or guide dogs, because it offers the possibility of eliminating the risk factors that arise when they walk without separate guidance devices or guide persons.

Most of the vision assistance devices previously developed to be worn by the blind employed ultrasonic sensors or similar devices to detect obstacles and transmit this information to the user, and hence they were limited in their capacity to communicate information (Tuceryan and Jain, 1993).

Moreover, they had difficulty identifying accurate information about the situational conditions or the environment during walking (Arvis et al., 2004).

This paper develops a system that enables visually impaired persons to walk safely by using a camera mounted on a mobile computer or smart phone. Most pre-existing research on road detection and recognition has targeted applications for unmanned vehicles or navigation, so pavement detection and recognition from the pedestrian's position has not been given priority. Also, as can be seen in Fig. 1, pavements differ from roadways in that they exhibit a wide variety of patterns created by the paving blocks, so even the same pattern has a high possibility of being misrecognized depending on the perspective from which its image is recorded.


Weon S., Joo S. and Choi H.

PAVEMENT TEXTURE SEGMENTATION USING LBP AND GLCM FOR VISUALLY IMPAIRED PERSON.

DOI: 10.5220/0003826903350340

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 335-340

ISBN: 978-989-8565-03-7

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

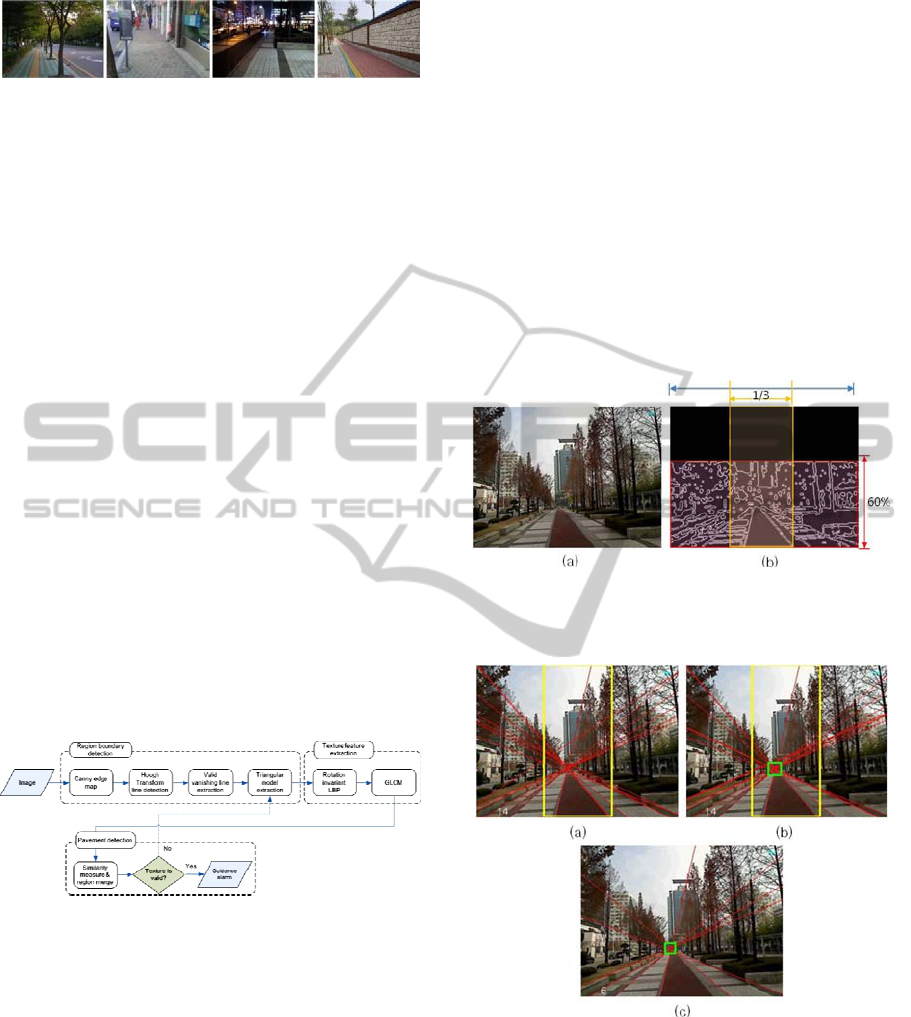

Figure 1: Different image configurations of the same pavement situation.

To resolve the above problems, this paper proposes a method of segmenting road regions using LBP and GLCM, which are texture features that are robust to rotation. To distinguish the boundary between roadway and pavement regions, the optimal road line is detected using the Hough transform, and the regions segmented by this line are described by their Local Binary Pattern (LBP) and Gray Level Co-Occurrence Matrix (GLCM) texture feature information. The segmented regions are further divided into three levels according to their distance, and the similarity of the texture features at each level is measured to distinguish the roadway and pavement regions.

Fig. 2 presents the proposed pavement detection framework. The rest of this paper is organized as follows. Section 2 describes the region boundary detection step. Section 3 presents our pavement detection method, which is based on the perspective texture features LBP and GLCM and on measuring the similarity between the levels of the triangular model. Section 4 shows the experimental results, and Section 5 concludes the paper and discusses our future research direction.

Figure 2: Pavement detection framework.

2 REGION BOUNDARY SEGMENTATION

2.1 Road Boundary Detection

In an outdoor natural image in which roadways and pavements are both present, many linear components arise from numerous obstacles. However, the road boundaries in an edge image obtained with the Canny edge operator tend to run toward the center of the image. Also, because blind pedestrians characteristically walk facing directly forward, the road is confirmed to lie within the lower 60% of the image, so the pixel information in the upper 40% can be discarded. To make edge detection robust to lighting and illumination, edges are first detected in the individual RGB channels of the input image, and noise is removed using logical disjunction (OR) operations and morphological closing operations. The diagonal edge components are then obtained by eliminating the horizontal and vertical edge components. Fig. 3 shows the resulting image of the edge detection stage.
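The preprocessing described above can be sketched as follows. This is a minimal pure-Python illustration, not the authors' implementation: it assumes the per-channel Canny edge maps are already available as 0/1 lists of rows, uses a 3x3 square structuring element for the closing, and zeroes the upper 40% of rows instead of cropping them so that image coordinates are preserved. The helper names (`keep_lower_region`, `combine_channels`, `close_binary`, `preprocess_edges`) are hypothetical, and the removal of horizontal and vertical edge components is not shown.

```python
from typing import List

Binary = List[List[int]]  # 0/1 edge map indexed as [row][col]


def keep_lower_region(edges: Binary, keep_fraction: float = 0.6) -> Binary:
    """Zero the upper rows so only the lower `keep_fraction` of the image keeps edges."""
    height = len(edges)
    first_kept_row = height - int(round(height * keep_fraction))
    return [[0] * len(row) if r < first_kept_row else list(row)
            for r, row in enumerate(edges)]


def combine_channels(edge_maps: List[Binary]) -> Binary:
    """Pixel-wise logical OR (disjunction) of per-channel edge maps, e.g. R, G and B."""
    height, width = len(edge_maps[0]), len(edge_maps[0][0])
    return [[int(any(m[r][c] for m in edge_maps)) for c in range(width)]
            for r in range(height)]


def _morph(img: Binary, dilate: bool) -> Binary:
    """3x3 dilation (max) or erosion (min); neighbours outside the image are ignored."""
    h, w = len(img), len(img[0])
    op = max if dilate else min
    return [[op(img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2)))
             for c in range(w)]
            for r in range(h)]


def close_binary(img: Binary) -> Binary:
    """Morphological closing: dilation followed by erosion, which bridges small gaps."""
    return _morph(_morph(img, dilate=True), dilate=False)


def preprocess_edges(edge_maps: List[Binary], keep_fraction: float = 0.6) -> Binary:
    """OR the channel edge maps, discard the upper part of the image, then close."""
    return close_binary(keep_lower_region(combine_channels(edge_maps), keep_fraction))
```

For a 240-row frame, `keep_lower_region` clears rows 0 to 95 and keeps rows 96 to 239; the closing then reconnects edge fragments separated by one-pixel gaps.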

Figure 3: Results of the edge detection step: (a) original outdoor image, (b) Canny edge detection result within the lower 60% of the image.

Figure 4: Process of detecting valid vanishing lines: (a) candidate region for the location of the vanishing point, (b) improved Hough lines after eliminating invalid lines, (c) optimal valid vanishing lines.

The Hough transform is performed to detect the road boundaries. Because the valid lines are diagonal components, lines with a slope angle of 20° or less are removed from the extracted lines. In a natural image,


the pavement contains lines parallel to its boundary surface, and owing to perspective, the vanishing point (intersection point) lies on the extensions of these lines. The valid lines for detecting the region boundary are determined using these properties. Moreover, a line located on the pavement boundary is highly likely to have its vanishing point within the vanishing block, a 20x20 candidate region for the location of the vanishing point. Lines whose vanishing point does not lie within this region are therefore judged invalid and eliminated. Fig. 4 displays the result of detecting the optimal valid vanishing lines by eliminating the invalid lines after generating the vanishing block.
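The line validation step can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: Hough lines are given as segment endpoints `(x1, y1, x2, y2)`, the 20° threshold is interpreted as an angle measured from the horizontal, and, because the paper does not say how the vanishing block is placed, the sketch assumes it is the cell of a fixed 20x20 grid that collects the most pairwise line intersections. All function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, List, Optional, Set, Tuple

Line = Tuple[float, float, float, float]  # Hough segment endpoints (x1, y1, x2, y2)


def angle_from_horizontal(line: Line) -> float:
    """Unsigned angle between the line and the horizontal axis, in degrees (0..90)."""
    x1, y1, x2, y2 = line
    return math.degrees(math.atan2(abs(y2 - y1), abs(x2 - x1)))


def intersection(a: Line, b: Line) -> Optional[Tuple[float, float]]:
    """Intersection of the infinite extensions of two segments, or None if parallel."""
    x1, y1, x2, y2 = a
    x3, y3, x4, y4 = b
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(den) < 1e-9:
        return None
    d1 = x1 * y2 - y1 * x2
    d2 = x3 * y4 - y3 * x4
    return ((d1 * (x3 - x4) - (x1 - x2) * d2) / den,
            (d1 * (y3 - y4) - (y1 - y2) * d2) / den)


def valid_vanishing_lines(lines: List[Line], min_angle_deg: float = 20.0,
                          block: int = 20) -> Tuple[List[Line], Optional[Tuple[int, int]]]:
    """Keep diagonal lines whose pairwise intersections fall in the densest block."""
    candidates = [ln for ln in lines if angle_from_horizontal(ln) > min_angle_deg]
    votes: Counter = Counter()
    members: Dict[Tuple[int, int], Set[int]] = {}
    for i, j in combinations(range(len(candidates)), 2):
        p = intersection(candidates[i], candidates[j])
        if p is None:
            continue
        cell = (int(p[0] // block), int(p[1] // block))  # block-sized grid cell
        votes[cell] += 1
        members.setdefault(cell, set()).update((i, j))
    if not votes:
        return [], None
    best_cell = votes.most_common(1)[0][0]  # assumed location of the vanishing block
    return [candidates[k] for k in sorted(members[best_cell])], best_cell
```

Lines that never meet another line inside the winning block (for example a steep obstacle edge) are discarded, which mirrors the invalid-line elimination shown in Fig. 4.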

2.2 Region Split and Triangular Model Extraction

The regions separated by the valid road lines are regarded as the primary regions of interest. This paper refers to these regions collectively as the triangular model; the separated regions consist of N small triangular models. Among these triangular models, adjacent regions that are judged to be identical by similarity verification are merged. For non-adjacent regions, the respective surface areas are calculated, and regions smaller than a threshold value are eliminated, so that a single triangular model region is extracted. Fig. 5 shows the small triangular models generated by separating the extension lines of the valid pavement lines, and the final triangular model merged through the similarity verification stage.
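A minimal sketch of the merge-and-prune step follows. It rests on assumptions the paper does not state: the small triangles share the vanishing point as their apex and are listed in angular order, so consecutive entries are adjacent; each carries a normalized texture histogram; and similarity is measured by histogram intersection. Adjacent similar triangles are merged, groups below an area threshold are dropped, and the largest remaining group is returned as the single triangular model. The thresholds and function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]  # (apex at vanishing point, base point 1, base point 2)


def triangle_area(a: Point, b: Point, c: Point) -> float:
    """Triangle area from the cross product (shoelace formula)."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def hist_intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Similarity of two normalized histograms: 1.0 if identical, 0.0 if disjoint."""
    return sum(min(x, y) for x, y in zip(h1, h2))


def merge_triangles(triangles: List[Triangle], histograms: List[Sequence[float]],
                    sim_threshold: float = 0.8, min_area: float = 500.0) -> List[int]:
    """Return the indices of the triangles forming the final single triangular model."""
    if not triangles:
        return []
    groups = [[0]]
    for k in range(1, len(triangles)):
        # Consecutive triangles are assumed adjacent; merge them when textures match.
        if hist_intersection(histograms[k - 1], histograms[k]) >= sim_threshold:
            groups[-1].append(k)
        else:
            groups.append([k])
    scored = [(sum(triangle_area(*triangles[k]) for k in g), g) for g in groups]
    kept = [(area, g) for area, g in scored if area >= min_area]  # prune small regions
    if not kept:
        return []
    return max(kept, key=lambda item: item[0])[1]
```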

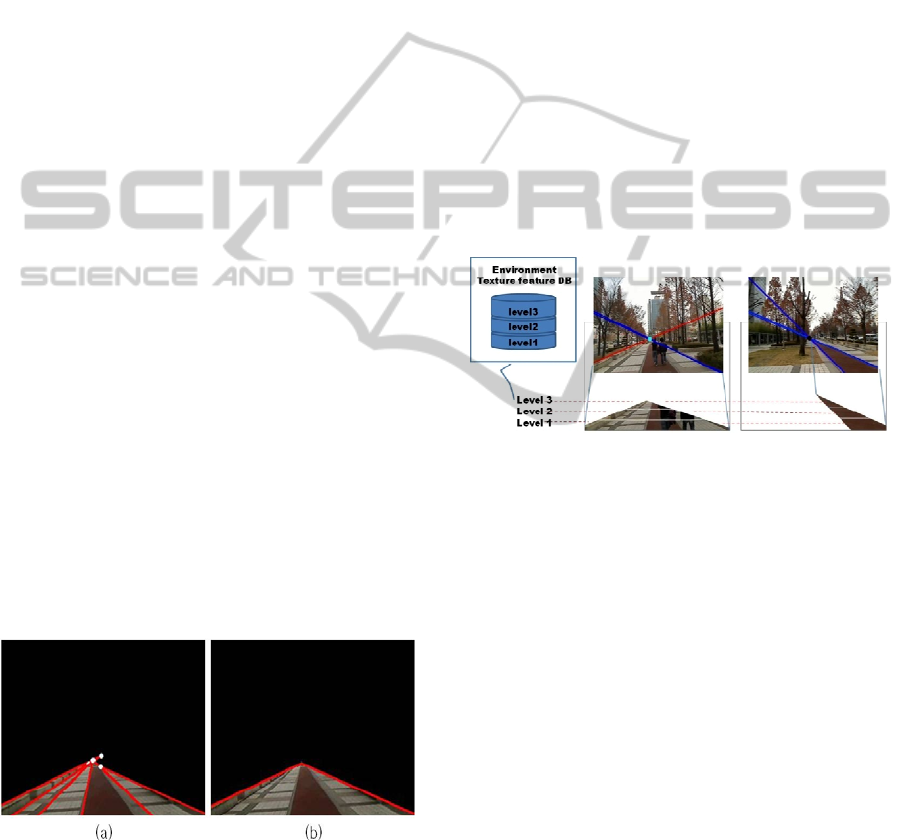

Figure 5: Separating the ROI and extracting a single triangular model: (a) primary regions of interest, (b) merged triangular model region.

3 TEXTURE FEATURE EXTRACTION

3.1 Perspective Texture Feature

The extracted triangular model is segmented into three levels according to distance to derive the texture information. This addresses the problem that the textures of regions distant from the camera carry relatively less information and are less clear than those of close regions. As seen in Fig. 6, the close region is designated as level 1 and the distant region as level 3, and texture features are extracted for each level. Rotation invariant LBP and GLCM are used to distinguish the textures of the pavement and the roadway, and the image is divided into 8x8 window blocks for processing so that more textural features can be extracted from the pattern.

Figure 6: Perspective level of triangular model.
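The level assignment and block tiling described above can be sketched as follows. The paper does not state how the level boundaries or block validity are defined, so this sketch assumes that the vertical extent of the triangular model is split into three equal bands (bottom band = level 1, nearest; top band = level 3, farthest) and that an 8x8 block is valid only if all four of its corners lie inside the triangle. Function names are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def inside_triangle(p: Point, tri: Tuple[Point, Point, Point]) -> bool:
    """True if p lies inside or on an edge of the triangle (same-side test)."""
    a, b, c = tri
    d1, d2, d3 = _cross(a, b, p), _cross(b, c, p), _cross(c, a, p)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def blocks_by_level(tri: Tuple[Point, Point, Point], width: int, height: int,
                    block: int = 8, levels: int = 3) -> Dict[int, List[Tuple[int, int]]]:
    """Map each level (1 = near, `levels` = far) to the top-left corners of valid blocks."""
    ys = [v[1] for v in tri]
    top, bottom = min(ys), max(ys)
    out: Dict[int, List[Tuple[int, int]]] = {lv: [] for lv in range(1, levels + 1)}
    band = (bottom - top) / levels
    if band <= 0:
        return out
    for y in range(0, height - block + 1, block):
        for x in range(0, width - block + 1, block):
            corners = [(x, y), (x + block - 1, y), (x, y + block - 1),
                       (x + block - 1, y + block - 1)]
            if not all(inside_triangle(c, tri) for c in corners):
                continue
            band_index = min(levels - 1, int((y + block / 2 - top) // band))
            out[levels - band_index].append((x, y))  # lower in image = nearer = level 1
    return out
```

Because the triangle widens toward the bottom of the frame, level 1 naturally receives many more blocks than level 3, which matches the observation in Section 4 that few valid blocks remain in level 3.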

To address the change in the paving block pattern that occurs when the image is rotated or the pedestrian changes position, texture features that are robust to rotation are extracted. The first feature used for this purpose is the rotation invariant local binary pattern. The LBP is derived as described by Ojala et al.: $g_c$ corresponds to the gray value of the center pixel of a local neighbourhood, and $g_p$ ($p = 0, \dots, P-1$) correspond to the gray values of $P$ equally spaced pixels on a circle of radius $R$ ($R > 0$) that form a circularly symmetric set of neighbors.

Fig. 7 illustrates three circularly symmetric neighbor sets for different values of $P$ and $R$. A binomial weight $2^p$ is then assigned to each sign $s(g_p - g_c)$, transforming the differences in a neighbourhood into a unique LBP code:

$$\mathrm{LBP}_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^p, \qquad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{1}$$


Figure 7: Local Binary Pattern (LBP).
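Equation (1) can be illustrated with a short pure-Python sketch. It samples the $P$ neighbours on a circle of radius $R$ with bilinear interpolation, as is common for circular LBP, and applies the threshold function $s(x)$ and the weights $2^p$. The start point and direction of the sampling, the rounding of the offsets, and the small comparison tolerance are choices of this sketch rather than details given in the paper; the image is assumed to be a list of rows of gray values, and the centre must lie at least $R$ pixels from the border.

```python
import math
from typing import List

Image = List[List[float]]  # gray values indexed as [row][col]


def bilinear(img: Image, y: float, x: float) -> float:
    """Bilinearly interpolated gray value at a non-integer position (y, x)."""
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, len(img) - 1), min(x0 + 1, len(img[0]) - 1)
    fy, fx = y - y0, x - x0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def lbp_code(img: Image, yc: int, xc: int, P: int = 8, R: float = 1.0) -> int:
    """LBP_{P,R}(x_c, y_c) = sum_p s(g_p - g_c) * 2^p, as in equation (1)."""
    gc = img[yc][xc]
    code = 0
    for p in range(P):
        # Neighbour p on a circle of radius R, starting to the right of the centre and
        # proceeding counter-clockwise; offsets are rounded to suppress sin/cos noise.
        dy = round(-R * math.sin(2 * math.pi * p / P), 6)
        dx = round(R * math.cos(2 * math.pi * p / P), 6)
        gp = bilinear(img, yc + dy, xc + dx)
        if gp - gc >= -1e-9:  # s(g_p - g_c) = 1 when g_p >= g_c (small float tolerance)
            code += 1 << p  # binomial weight 2^p
    return code
```

For example, a centre pixel darker than all of its neighbours produces the code 255, while a single brighter neighbour directly to the right produces the code 1.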

The rotation invariant local binary pattern applied in this paper is a variant of the local binary pattern that, as in equation (2), circularly rotates the LBP code and keeps the minimum value obtained. In short, the rotation invariant code is produced by circularly rotating the original code until its minimum value is attained:

$$\mathrm{LBP}^{ri}_{P,R} = \min\{\, \mathrm{ROR}(\mathrm{LBP}_{P,R}, i) \mid i = 0, 1, \dots, P-1 \,\} \tag{2}$$

where $\mathrm{ROR}(x, i)$ performs a circular bit-wise right shift of the $P$-bit number $x$ by $i$ positions. The LBPROT operator introduced by Pietikäinen et al. (Vadivel et al., 2007) is equivalent to $\mathrm{LBP}^{ri}_{P,R}$.

Rotation invariance, however, does not account for textural differences caused by changes in the relative positions of a light source and the target object. We therefore use a second texture feature, the gray level co-occurrence matrix (GLCM), to address this problem.
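The rotation invariant mapping of equation (2) is easy to check numerically. The sketch below implements ROR as a circular right shift on $P$ bits and maps each LBP code to the minimum over its $P$ rotations; the normalized histogram over these codes is one plausible block descriptor, although the paper does not specify its exact binning. For $P = 8$ the mapping yields 36 distinct rotation invariant codes. Function names are hypothetical.

```python
from typing import Dict, List, Sequence


def ror(code: int, i: int, P: int = 8) -> int:
    """ROR(x, i): circular bit-wise right shift of a P-bit code by i positions."""
    i %= P
    mask = (1 << P) - 1
    return ((code >> i) | (code << (P - i))) & mask


def lbp_ri(code: int, P: int = 8) -> int:
    """LBP^{ri}_{P,R} = min{ROR(LBP_{P,R}, i) | i = 0, ..., P-1}, equation (2)."""
    return min(ror(code, i, P) for i in range(P))


def ri_bins(P: int = 8) -> List[int]:
    """All distinct rotation invariant codes for P neighbours, in increasing order."""
    return sorted({lbp_ri(c, P) for c in range(1 << P)})


def ri_histogram(codes: Sequence[int], P: int = 8) -> List[float]:
    """Normalized histogram of a block's LBP codes over the rotation invariant bins."""
    bins = ri_bins(P)
    index: Dict[int, int] = {b: k for k, b in enumerate(bins)}
    hist = [0.0] * len(bins)
    for c in codes:
        hist[index[lbp_ri(c, P)]] += 1.0
    return [h / len(codes) for h in hist] if codes else hist
```

Codes that differ only by a rotation of the neighbourhood, such as 1, 2 and 4, all fall into the same bin, which is what makes the descriptor insensitive to the orientation of the paving blocks.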

Haralick suggested the use of gray level co-occurrence matrices (GLCM) to define textural features. The elements of the co-occurrence matrix give the relative frequencies with which two neighboring pixels separated by distance $d$ occur in the image, one with gray level $i$ and the other with gray level $j$. Such a matrix is symmetric and is also a function of the angular relationship between the two neighboring pixels. The co-occurrence matrix can be calculated on the whole image, but by calculating it in a small window that scans the image, a co-occurrence matrix can be associated with each pixel. Haralick suggests 14 features describing the two-dimensional probability density function $p(i, j)$. The four most commonly used, listed in [Haralick 73] (Leitão et al., 2003), are Contrast, Correlation, Energy and Homogeneity; in this paper, the Energy and Homogeneity features are used to measure the uniformity of the surface texture.

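$$\mathrm{Contrast} = \sum_{i,j} |i - j|^2 \, p(i, j) \tag{3}$$

$$\mathrm{Correlation} = \sum_{i,j} \frac{(i - \mu_i)(j - \mu_j)\, p(i, j)}{\sigma_i \sigma_j} \tag{4}$$

$$\mathrm{Energy} = \sum_{i,j} p(i, j)^2 \tag{5}$$

$$\mathrm{Homogeneity} = \sum_{i,j} \frac{p(i, j)}{1 + |i - j|} \tag{6}$$

where $\mu_i, \mu_j$ and $\sigma_i, \sigma_j$ are the means and standard deviations of the marginal distributions of $p(i, j)$.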
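The co-occurrence matrix and equations (3) to (6) can be sketched for a single 8x8 window block as follows. Assumptions not fixed by the paper: gray values in 0..255 are quantized to a small number of levels (8 by default), a single offset is used (one pixel to the right by default), each pair is counted in both directions so the matrix is symmetric, and correlation is reported as NaN for a constant block because $\sigma_i \sigma_j = 0$. Function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Matrix = List[List[float]]


def glcm(block: Sequence[Sequence[int]], levels: int = 8, dx: int = 1, dy: int = 0,
         max_value: int = 256) -> Matrix:
    """Normalized, symmetric gray level co-occurrence matrix p(i, j) for one offset."""
    q = [[v * levels // max_value for v in row] for row in block]  # quantize gray levels
    counts = [[0.0] * levels for _ in range(levels)]
    h, w = len(q), len(q[0])
    for r in range(h):
        for c in range(w):
            rr, cc = r + dy, c + dx
            if 0 <= rr < h and 0 <= cc < w:
                i, j = q[r][c], q[rr][cc]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions -> symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts] if total else counts


def haralick_features(p: Matrix) -> Dict[str, float]:
    """Contrast, Correlation, Energy and Homogeneity, equations (3) to (6)."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        # Undefined for a constant block (sigma = 0); reported as NaN here.
        "correlation": cov / (sd_i * sd_j) if sd_i > 0 and sd_j > 0 else float("nan"),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }
```

A uniform roadway-like block concentrates all mass on the diagonal, giving Energy and Homogeneity of 1, whereas a patterned pavement block spreads mass off the diagonal and lowers both values; this is the uniformity cue the paper relies on.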

3.2 Region Segmentation

The LBP and GLCM texture information of the extracted triangular model is calculated to measure the degree of similarity among adjacent regions. The regions are first separated according to the triangular model by merging regions with high similarity and removing regions with low similarity. By comparing the similarity of the texture features within the separated triangular model, the regions where the pavement and the roadway lie adjacent, or the regions beyond the range of recognition during walking, are analyzed. Through this analysis, the secondary region used to guide walking is distinguished. As soon as walking begins, the proposed method measures the similarity between the stored texture features of the pavement and the query images input during walking by comparing the pattern similarity of each block unit within the triangular model, as in equation (7); in this way, local features that carry the spatial information of the image are well reflected. In equation (7), $L$ and $CM$ are the normalized values for the LBP and GLCM blocks, respectively, and $\alpha$ and $\beta$ are their respective weights. The similarity comparison can be adjusted by varying the weights according to the information that is to be emphasized.

$$S = \alpha L + \beta\, CM, \qquad \alpha + \beta = 1 \tag{7}$$
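Equation (7) amounts to a convex combination of two similarity scores. The sketch below assumes that $L$ and $CM$ have already been normalized to similarity values in [0, 1] (the paper does not say how) and enforces $\alpha + \beta = 1$; the equal default weights are an arbitrary choice, and the function name is hypothetical.

```python
import math


def fused_similarity(lbp_sim: float, glcm_sim: float,
                     alpha: float = 0.5, beta: float = 0.5) -> float:
    """S = alpha * L + beta * CM with alpha + beta = 1 (equation (7))."""
    if not math.isclose(alpha + beta, 1.0):
        raise ValueError("alpha and beta must sum to 1")
    if min(alpha, beta) < 0.0:
        raise ValueError("weights must be non-negative")
    return alpha * lbp_sim + beta * glcm_sim
```

Raising $\alpha$ emphasizes the rotation invariant LBP pattern, while raising $\beta$ emphasizes the GLCM uniformity measures.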

Equation (8) gives the similarity of the normalized LBP histograms of the query image $Q$ and a compared image $D$, where $K$ is the number of bins and $N$ is the number of road region models. For the current image, the similarity $S$ between its LBP histogram and each stored road region model is computed, and the road model with the highest similarity is detected.

$$S = \sum_{m=1}^{K} \min(Q_m, D_m) \tag{8}$$
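Equation (8) is the histogram intersection of two normalized histograms. A minimal sketch follows, assuming the stored road region models are kept as raw LBP histograms keyed by a hypothetical name; it computes $S$ against each of the $N$ models and returns the most similar one. Function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def normalize(hist: Sequence[float]) -> List[float]:
    """Scale a histogram so that its K bins sum to 1."""
    total = float(sum(hist))
    return [h / total for h in hist] if total else [0.0] * len(hist)


def histogram_intersection(q: Sequence[float], d: Sequence[float]) -> float:
    """S = sum_{m=1..K} min(Q_m, D_m), as in equation (8)."""
    if len(q) != len(d):
        raise ValueError("histograms must have the same number of bins K")
    return sum(min(qm, dm) for qm, dm in zip(q, d))


def best_road_model(query: Sequence[float],
                    models: Dict[str, Sequence[float]]) -> Tuple[str, float]:
    """Compare the query LBP histogram with each of the N stored models; return the best."""
    qn = normalize(query)
    scores = {name: histogram_intersection(qn, normalize(h)) for name, h in models.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

Because both histograms are normalized, $S$ lies in [0, 1], reaching 1 only when the two distributions are identical.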


4 EXPERIMENTAL RESULTS

As for the image used for experiments in this paper,

images sized 320 x 240 were input and processed in

real-time. The average processing speed was

measured to be 18 frames per second. For the

experiment, outdoor images for both day and night

were recorded, and the resulting extractions of

texture features confirmed clear distinctions in

texture for pavements and roadways in both LBP

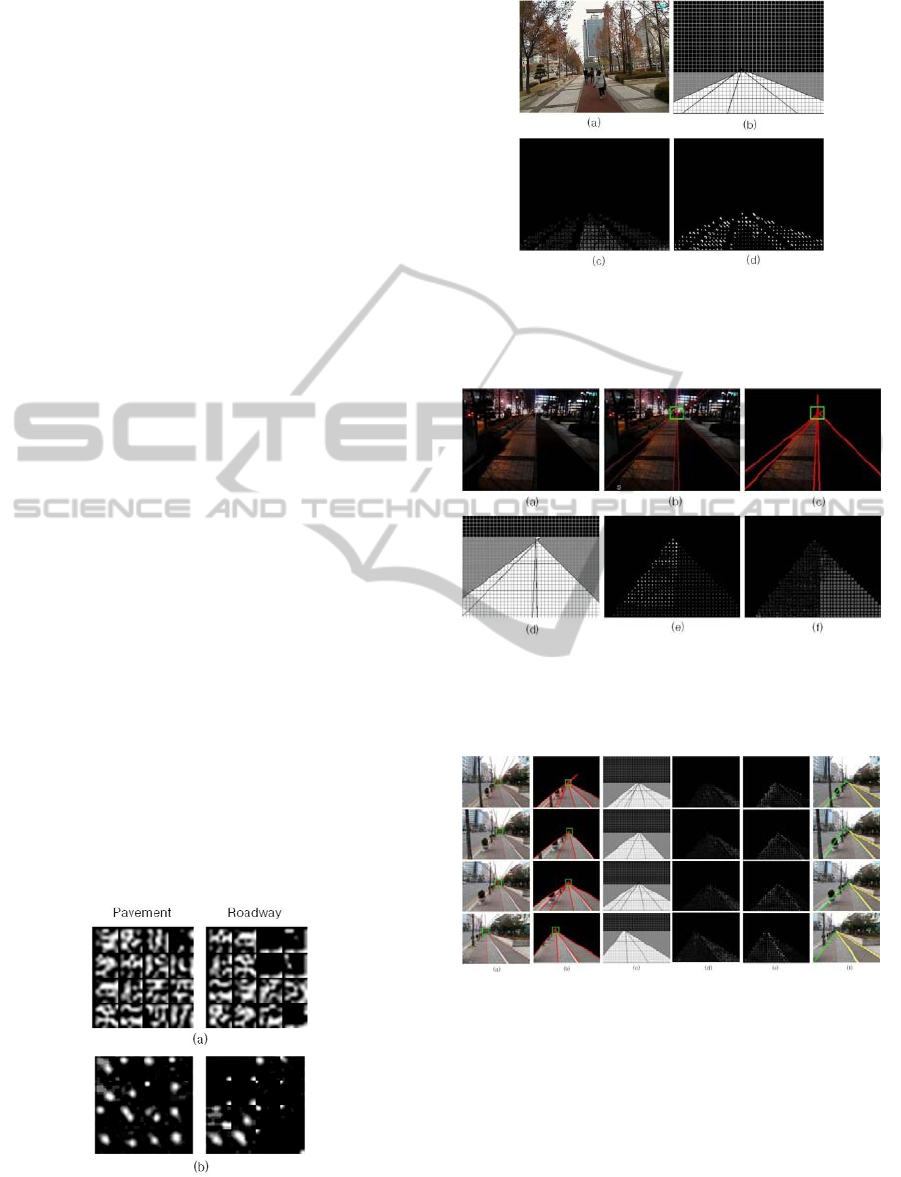

and GLCM. Fig. 8 presents the result of segmenting

the image into 8 x 8 window blocks and then

extracting the texture features of the valid blocks

located within the triangular model. It indicates that

while the unique pattern of the texture was able to be

extracted in the pavement regions, almost no

patterns could be extracted from the roadway

regions.

Fig. 9 shows an experiment on images in which a bicycle path and the pavement region lie adjacent to each other; the bicycle path was treated as roadway, and its texture features were compared with those of the pavement region. Three levels were distinguished within the region segmented by the valid lines, and the rotation invariant LBP and GLCM were extracted for the window blocks of each level according to distance. In level 3, only a small number of window blocks remained valid, which made comparing texture features difficult. However, for the bicycle path located in the center of the image, it was confirmed that enough texture features could be extracted to compare similarity even in level 3. Fig. 10 shows the results of experiments on images recorded at night. Because LBP and GLCM use features that express texture well regardless of lighting or illumination conditions, it was verified that the analytical performance did not deteriorate compared with the daytime results.

Figure 8: Texture features of pavement and roadway: (a) rotation invariant LBP based on 8x8 window blocks, (b) GLCM based on 8x8 window blocks.

Figure 9: Results of texture feature extraction within the triangular model: (a) original image, (b) triangular model based on 8x8 windows, (c) rotation invariant LBP, (d) GLCM.

Figure 10: Pavement detection result on a nighttime outdoor image: (a) original image, (b) valid Hough lines, (c) and (d) region of the triangular model, (e) rotation invariant LBP in (d), (f) GLCM in (d).

Figure 11: Process of texture feature extraction in real-time images: (a) valid Hough line detection, (b) region of the triangular model, (c) separation into 8x8 window blocks, (d) rotation invariant LBP in the triangular model, (e) GLCM in the triangular model, (f) pavement and roadway detection result.

Also, Fig. 11 shows the results of experiments on continuous frames and


demonstrates that, even when the texture at level 1 failed to be detected, the pavement situation could be determined by analyzing the texture at level 2, because the quantity of texture information increased over successive frames.

5 CONCLUSIONS

This paper has proposed a probabilistic estimation method based on the rotation invariant texture features LBP and GLCM for distinguishing pavement and roadway regions in outdoor images. It was confirmed that, with this method, pavement and roadway regions could be separated using a relatively simple similarity comparison between regions while processing images in real time. However, because paving blocks come in a great variety of patterns, and because similarity comparison becomes challenging when a pavement region with a different pattern is encountered, further research is planned to resolve such problems, focusing on updating and recognizing texture features in real time.

ACKNOWLEDGEMENTS

This research was supported by the Converging Research Center Program funded by the Ministry of Education, Science and Technology (2011K000667) and the Soongsil University BK21 Digital Media Division.

REFERENCES

Tuceryan M., and Jain A. K. (1993) Texture Analysis, in: C. H. Chen, L. F. Pau, P. S. P. Wang (Eds.), Handbook of Pattern Recognition and Computer Vision, World Scientific Publishing Co., Singapore, pp. 235-276 (Chapter 2).

Ojala T., Pietikäinen M., and Mäenpää T. (2002) Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, pp. 971-987.

Turtinen M., and Pietikäinen M. (2003) Visual Training and Classification of Textured Scene Images, The 3rd International Workshop on Texture Analysis and Synthesis, pp. 101-106.

Arvis V., Debain C., Berducat M., and Benassi A. (2004) Generalization of the cooccurrence matrix for colour images: application to colour texture classification, Image Anal Stereol, Vol. 23, pp. 63-72.

Vadivel A., Sural S., and Majumdar A. K. (2007) An Integrated Color and Intensity Co-occurrence Matrix, Pattern Recognition Letters, Vol. 28, pp. 974-983.

Palm C. (2004) Color texture classification by integrative co-occurrence matrices, Pattern Recognition, Vol. 37, pp. 965-976.

Guo Z., Zhang L., and Zhang D. (2010) Rotation invariant texture classification using LBP variance (LBPV) with global matching, Pattern Recognition, Vol. 43, pp. 706-719.

Leitão A. P., Tilie S., Mangeas M., Tarel J.-P., Vigneron V., and Lelandais S. (2003) Road Singularities Detection and Classification, ESANN’2003 Proceedings – European Symposium on Artificial Neural Networks (Belgium), pp. 301-306.

Zwiggelaar R. (2004) Texture Based Segmentation: Automatic Selection of Co-Occurrence Matrices, Proceedings – 17th ICPR’04, Vol. 01, pp. 588-591.

Jia K., Liu N., Wang L., and Cheng L. (2009) Pavement Scene Interpretation and Obstacle Detection by Large Margin Image Labeling, Invited Workshop on Vision and Control for Access Space (VCAS) @ ACCV.
