CONTOURLET BASED MULTI-EXPOSURE IMAGE FUSION WITH COMPENSATION FOR MULTI-DIMENSIONAL CAMERA SHAKE

S. Saravi and E. A. Edirisinghe

Digital Imaging Group, Loughborough University, Loughborough, U. K.

Keywords: Image Registration, Multi-exposure Image Fusion, Translational and Rotational Camera Shake, Wavelet

Based Contourlet Transform, CPD, SIFT, RANSAC.

Abstract: Multi-exposure image fusion algorithms are used for enhancing the perceptual quality of an image captured

by sensors of limited dynamic range by rendering multiple images captured at different exposure settings.

One practical problem overlooked by existing algorithms is the compensation required for image de-

registration due to possible multi-dimensional camera shake that results within the time gap of capturing the

multiple exposure images. In our approach, the RANdom SAmple Consensus (RANSAC) algorithm is used to identify inliers among the key-points detected by the Scale Invariant Feature Transform (SIFT), after which the Coherent Point Drift (CPD) algorithm registers the images based on the selected set of key-points. We provide experimental results on sets of images with multi-dimensional (translational and rotational) camera shake to demonstrate the proposed algorithm’s capability to register and fuse multiple exposure images taken in the presence of camera shake, providing subjectively enhanced output images.

1 INTRODUCTION

In the past decade there have been significant

developments in the field of High Dynamic Range

(HDR) imaging technology. However, the lack of advances in image/video encoding algorithms and display technology makes it important to find alternative means of rendering HDR scenes using Standard Dynamic Range (SDR) imagery. Multi-exposure image fusion involves the fusion of multiple consecutive images of the same scene taken in quick succession by an SDR camera. A practical problem that arises is camera shake, which can cause severe de-registration of the multiple images and so invalidates the direct applicability of many existing algorithms. To this end we propose the use of an image registration algorithm as a pre-processing stage to multi-exposure image fusion.

A significant number of multi-exposure image fusion algorithms have been proposed in the relevant literature (Zafar, Edirisinghe and Bez, 2006; Alsam, 2010). However, only a few algorithms focus on the problem of camera shake (Tomaszewska and Mantiuk, 2007; Lee and Wey, 2009).

Image fusion can take place at pixel level, feature level and decision level. In the literature, image fusion has been based on pyramidal fusion, contourlet fusion and wavelet fusion. The wavelet transform (WT) gives acceptable results on natural images, but it cannot capture smooth edges effectively because it is restricted to three directions: horizontal, vertical and diagonal. The contourlet transform (CT) is a two-dimensional transform that can effectively represent images containing curves and features. In CT, multi-scale and multi-direction analyses are performed separately, using the Laplacian Pyramid (LP) transform followed by the Directional Filter Bank (DFB). This representation is redundant. The Wavelet-Based Contourlet Transform (WBCT) (Eslami and Radha, 2005) addresses this weakness: it is non-redundant and has a multi-resolution structure. The advantage of using WBCT is that it addresses the problems of multi-scale localization, directionality and anisotropy.
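To illustrate the three directional detail bands of the wavelet transform mentioned above, the following minimal sketch computes one level of a 2D Haar decomposition in plain Python. The Haar filter, the averaging normalisation and the function name `haar2d_level` are assumptions made purely for illustration; the paper does not state which wavelet filter is used.

```python
def haar2d_level(image):
    """One level of an (averaging) 2D Haar decomposition.

    image: list of rows with even height and width.
    Returns (approx, horiz, vert, diag), each half the input size.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even")
    approx, horiz, vert, diag = [], [], [], []
    for r in range(0, rows, 2):
        a_row, h_row, v_row, d_row = [], [], [], []
        for c in range(0, cols, 2):
            tl, tr = image[r][c], image[r][c + 1]          # top-left, top-right
            bl, br = image[r + 1][c], image[r + 1][c + 1]  # bottom-left, bottom-right
            a_row.append((tl + tr + bl + br) / 4.0)  # low-pass approximation
            h_row.append((tl + tr - bl - br) / 4.0)  # top minus bottom: horizontal edges
            v_row.append((tl - tr + bl - br) / 4.0)  # left minus right: vertical edges
            d_row.append((tl - tr - bl + br) / 4.0)  # diagonal detail
        approx.append(a_row)
        horiz.append(h_row)
        vert.append(v_row)
        diag.append(d_row)
    return approx, horiz, vert, diag


# A 2x2 block containing a vertical edge (dark left column, bright right column).
block = [[0, 4],
         [0, 4]]
approx, horiz, vert, diag = haar2d_level(block)
```

Only the vertical detail band responds to this edge; an edge along a curve would be spread across all three bands, which is the limitation that motivates adding a directional filter bank.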

2 PROPOSED SYSTEM

The proposed system consists of two key parts: the first part uses a base image of a multi-exposure image set to register all remaining images, and the second part fuses the registered multi-exposure image set.


Saravi S. and Edirisinghe E. A.

CONTOURLET BASED MULTI-EXPOSURE IMAGE FUSION WITH COMPENSATION FOR MULTI-DIMENSIONAL CAMERA SHAKE.

DOI: 10.5220/0003836001820185

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 182-185

ISBN: 978-989-8565-03-7

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2.1 Image-Registration

The proposed approach is based on the selection of a

significant set of matching points, i.e. key-points,

between a selected base image and an image to be

registered and subsequently using them to calculate

the transformation matrix for image registration.

2.1.1 SIFT based Key-point Selection

SIFT (Lowe, 2004) is an algorithm that is capable of

detecting and describing local features of an image.

Its invariance to rotation, scale and translation has made it a popular algorithm in many areas of computer vision. It is also invariant to illumination changes and robust to local geometric distortion.

In the proposed approach to image registration, we select a base image from amongst the set of multi-exposure images, together with an image to be registered to it, and use SIFT to find significant key-points in both images. Because SIFT may select a large number of feature points, when key-points are matched between the two images to be registered it is likely that two non-corresponding points will be matched simply because they yield the minimum distance. Therefore, reducing key-point outliers prior to matching the key-points will improve the reliability of matching and hence the outcome of the final task.
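The risk of false matches under minimum-distance matching can be seen in a small sketch. The code below is not SIFT itself: it assumes descriptors have already been extracted and uses toy 3-dimensional vectors in place of real 128-dimensional SIFT descriptors. The function name `match_min_distance` is hypothetical.

```python
import math


def match_min_distance(desc_a, desc_b):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b.

    Returns a list of (index_a, index_b, distance) tuples. Every descriptor
    in desc_a receives a match, whether or not a true counterpart exists.
    """
    matches = []
    for i, da in enumerate(desc_a):
        best_j, best_d = min(
            ((j, math.dist(da, db)) for j, db in enumerate(desc_b)),
            key=lambda pair: pair[1],
        )
        matches.append((i, best_j, best_d))
    return matches


# Toy descriptors: the first two in desc_a have close counterparts in desc_b,
# the third has none but is still assigned its (distant) nearest neighbour.
desc_a = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
desc_b = [(0.9, 0.1, 0.0), (0.0, 1.1, 0.0)]
matches = match_min_distance(desc_a, desc_b)
```

The third match is a spurious correspondence of the kind that the outlier-removal stage is intended to eliminate.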

2.1.2 Using RANSAC to Remove Matching

Point Outliers

The RANSAC algorithm (Fischler and Bolles, 1981) is an iterative method for estimating the parameters of a mathematical model from a set of observed data that contains outliers. RANSAC produces robust estimates of the model parameters: it can estimate them with a high degree of accuracy even when a significant number of outliers are present in the data set.

In our approach, all SIFT key-points resulting from the stage described in Section 2.1.1, from both the base image and the image being registered, are fed to the RANSAC algorithm. It fits a model to a randomly selected subset of points treated as hypothetical inliers and tests the remaining points from the image being registered against the fitted model; if a point fits the model, it is regarded as an inlier. The model is then recalculated from all inliers and the error is estimated relative to the model. Finally, the outlier key-points are removed from the key-points of the image.
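A minimal sketch of this hypothesise-and-test loop is given below. The paper does not state which model RANSAC fits, so the sketch assumes a 2D similarity transform (rotation, uniform scale and translation), written compactly with complex numbers as q = a·p + b. The function names, iteration count and tolerance are illustrative assumptions.

```python
import cmath
import random


def fit_similarity(p1, q1, p2, q2):
    """Exact similarity q = a*p + b through two point correspondences."""
    a = (q1 - q2) / (p1 - p2)
    b = q1 - a * p1
    return a, b


def ransac_inliers(src, dst, n_iter=200, tol=1.0, seed=0):
    """Return indices of correspondences consistent with the best model found."""
    rng = random.Random(seed)
    indices = range(len(src))
    best = []
    for _ in range(n_iter):
        i, j = rng.sample(indices, 2)      # minimal sample: two matches
        if src[i] == src[j]:
            continue                       # degenerate sample, skip
        a, b = fit_similarity(src[i], dst[i], src[j], dst[j])
        inliers = [k for k in indices if abs(a * src[k] + b - dst[k]) < tol]
        if len(inliers) > len(best):       # keep the largest consensus set
            best = inliers
    return best


# Synthetic matches: a small rotation plus translation, with two gross outliers.
true_a, true_b = cmath.rect(1.0, 0.1), complex(3, 2)
coords = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 2),
          (2, 7), (8, 4), (3, 3), (6, 9), (9, 1)]
src = [complex(x, y) for x, y in coords]
dst = [true_a * p + true_b for p in src]
dst[8] += complex(15, -12)   # mismatched key-point
dst[9] += complex(-20, 9)    # mismatched key-point
inliers = ransac_inliers(src, dst)
```

In this synthetic case the consensus set contains the eight correct matches and excludes the two corrupted ones. A full implementation would normally re-estimate the model from all inliers by least squares, as described above.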

2.1.3 CPD Algorithm for Registration

In this section we describe the use of the CPD

(Myronenko, 2010) algorithm to register the images,

accordingly preparing them to be fused in the

subsequent stage of the proposed approach. CPD is

based on ‘Point Set Registration’ and aims to form

links between two given sets of points to find the

corresponding features and the necessary

transformation of these features that will allow the

images to be registered.

There are two approaches to registering an image in CPD, rigid and non-rigid point set registration, depending on the underlying transformation model. The key characteristic of a rigid transformation is that “distances between points are preserved”, which means it can be used only in the presence of translation, rotation and uniform scaling, but not under non-uniform scaling and skew. The affine transformation, which we have used in our work, is a non-rigid transformation that allows registration under non-uniform scaling and skew.
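To make the affine model concrete, the sketch below estimates the six affine parameters from matched point pairs by ordinary least squares. This is not the CPD algorithm: CPD estimates the correspondences and the transformation jointly, whereas this illustration assumes the correspondences are already known. The helper names `solve3` and `fit_affine` are hypothetical.

```python
def solve3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (a[r][n] - s) / a[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares affine map: u = a11*x + a12*y + tx, v = a21*x + a22*y + ty.

    Returns ([a11, a12, tx], [a21, a22, ty]).
    """
    m = [[0.0] * 3 for _ in range(3)]
    bx, by = [0.0] * 3, [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        h = (x, y, 1.0)                    # homogeneous source point
        for i in range(3):
            for j in range(3):
                m[i][j] += h[i] * h[j]     # accumulate normal equations
            bx[i] += h[i] * u
            by[i] += h[i] * v
    return solve3(m, bx), solve3(m, by)


# Points related by non-uniform scaling, skew and translation.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
dst = [(1.2 * x + 0.3 * y + 5.0, -0.1 * x + 0.8 * y - 2.0) for x, y in src]
row_x, row_y = fit_affine(src, dst)
```

The recovered 2×2 part of the transform has unequal scale factors and non-zero off-diagonal terms, which a rigid model could not represent.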

2.2 Multi-Exposure Image Fusion

Once all images are registered to the base image we

use WBCT to identify regions of maximum energy

from within the multiple exposure images, with the

idea of combining these to form the perceptually

best quality fused image.

2.2.1 Multi-exposure Image Fusion

The basic idea of the fusion algorithm is to compare the corresponding sub-bands of the WBCT decomposition of each image in the multi-exposure set and to determine the one with the highest energy, i.e. the most detailed. We propose the use of different fusion rules depending on the frequency band of each sub-band being fused, as follows:

Fusion of High Frequency Contourlet Sub-bands:

The high frequency sub-bands contain details of an image such as texture and edges, whereas the low frequency sub-bands contain information of a more spread-out or fuzzy nature, such as background information. The energy of a region can be obtained by calculating the absolute energy of the high-frequency coefficients. A higher value means sharper changes. The region energy $E_H^{(X)}$ of a high frequency sub-band $H$ (where $H = (l,m,n)$: $l$ is the level of wavelet decomposition, $m$ is the LH, HL or HH band of the wavelet decomposition, and $n$ is the directional contourlet sub-band) of an image $X$ can be obtained as follows:

$$E_H^{(X)} = \sum_{(x,y) \in H} \left| f_H^{(X)}(x,y) \right| \qquad (1)$$

where $f_H^{(X)}(x,y)$ is the coefficient at location $(x,y)$ of the high frequency sub-band $H=(l,m,n)$.

Considering that the sub-band of the multi-

exposure image having highest detail will have the

highest absolute energy, the H sub-band that

contributes towards the fused image’s H sub-band

can be defined as:

$$f_H^{(F)}(i,j) = f_H^{(Y)}(i,j), \quad E_H^{(Y)} = \max_{k=1,2,\ldots,n} E_H^{(k)} \qquad (2)$$

where $F$ denotes the fused image, $k$ indexes the exposures and $n$ is the number of exposures considered.
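The maximum-energy selection rule can be sketched as follows. The sketch assumes the region energy is the sum of absolute coefficient values and that each sub-band is supplied as a list of rows; the WBCT decomposition itself is not shown, and the function names are hypothetical.

```python
def region_energy(subband):
    """Sum of absolute coefficient values over a sub-band."""
    return sum(abs(c) for row in subband for c in row)


def fuse_high_frequency(subbands):
    """Pick the sub-band with the highest energy among the exposures.

    subbands: one entry per exposure, all for the same sub-band H.
    Returns (fused_subband, winning_exposure_index, energies).
    """
    energies = [region_energy(s) for s in subbands]
    winner = max(range(len(subbands)), key=energies.__getitem__)
    return subbands[winner], winner, energies


# Toy coefficients of one sub-band from two exposures.
flat = [[0.1, -0.2], [0.0, 0.1]]      # little detail
detailed = [[1.0, -2.0], [0.5, 0.0]]  # strong edges
fused, winner, energies = fuse_high_frequency([flat, detailed])
```

Here the second exposure supplies the fused sub-band because its coefficients have the larger absolute energy.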

Fusion of Low Frequency Contourlet Sub-bands:

The low frequency sub-bands contain the fuzzy, spread-out information. Thus the fusion rule adopted is based on the region variances. The idea is to divide each low frequency sub-band into i×j rectangular sub-regions and to calculate the variance of each sub-region, which can be obtained as follows:

$$V_L^{(X)}(i,j) = \sum_{(x,y) \in (i \times j)} \left( f_L^{(X)}(x,y) - \bar{f}_L^{(X)}(i,j) \right)^2 \qquad (3)$$

where $\bar{f}_L^{(X)}(i,j)$ is the mean of all the coefficients in the rectangular sub-region i×j. A higher variance corresponds to more detail. Fusion of the low frequency sub-bands can be obtained from the equation below:

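$$f_L^{(F)}(i,j) = f_L^{(Y)}(i,j), \quad V_L^{(Y)}(i,j) = \max_{k=1,2,\ldots,n} V_L^{(k)}(i,j) \qquad (4)$$

where $F$ denotes the fused image, $V_L$ is the region variance on which this fusion rule is based, $k$ indexes the exposures and $n$ is the number of exposures considered.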
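A minimal sketch of the variance-based rule is shown below. It assumes fixed-size rectangular blocks and normalises the variance by the number of coefficients in the block; the block size and the function names are illustrative assumptions, since the paper does not specify them.

```python
def block_variance(band, r0, c0, bh, bw):
    """Variance of the coefficients in the block starting at (r0, c0)."""
    vals = [band[r][c] for r in range(r0, r0 + bh) for c in range(c0, c0 + bw)]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def fuse_low_frequency(bands, bh, bw):
    """For each block, copy coefficients from the exposure with highest variance.

    bands: one low frequency sub-band per exposure, all the same size.
    """
    rows, cols = len(bands[0]), len(bands[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, bh):
        for c0 in range(0, cols, bw):
            h = min(bh, rows - r0)   # clip blocks at the border
            w = min(bw, cols - c0)
            best = max(bands, key=lambda b: block_variance(b, r0, c0, h, w))
            for r in range(r0, r0 + h):
                for c in range(c0, c0 + w):
                    fused[r][c] = best[r][c]
    return fused


# Two exposures, each detailed in a different 2x2 block.
band_a = [[1, 5, 3, 3],
          [5, 1, 3, 3]]
band_b = [[2, 2, 0, 8],
          [2, 2, 8, 0]]
fused_low = fuse_low_frequency([band_a, band_b], 2, 2)
```

The left block is taken from the first exposure and the right block from the second, since each has the higher variance in that region.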

Fusion of Low Frequency Wavelet Sub-bands:

The low-pass wavelet sub-band of the fused image is calculated by averaging the low-pass wavelet sub-bands of the multiple exposure images, as follows:

$$A^{(F)}(i,j) = \frac{1}{n} \sum_{k=1}^{n} f^{(k)}(i,j), \quad (i,j) \in LL \qquad (5)$$

where $n$ is the number of multi-exposure images and $f^{(k)}(i,j)$ is a coefficient from the low-pass sub-band of the wavelet transform of the $k$-th image.
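The averaging rule is simple enough to state directly in code. The sketch below assumes the LL sub-bands are given as equally sized lists of rows; the function name `average_lowpass` is hypothetical.

```python
def average_lowpass(bands):
    """Coefficient-wise mean of the LL sub-bands of all exposures."""
    n = len(bands)
    rows, cols = len(bands[0]), len(bands[0][0])
    return [
        [sum(b[r][c] for b in bands) / n for c in range(cols)]
        for r in range(rows)
    ]


# LL sub-bands of two exposures.
ll_under = [[2, 4], [6, 8]]
ll_over = [[4, 8], [10, 0]]
ll_fused = average_lowpass([ll_under, ll_over])
```

Averaging, rather than selecting, keeps the overall brightness of the fused image between that of the individual exposures.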

2.3 Reconstructing the Fused Image

After obtaining low and high frequency sub-bands of

CT and low frequency sub-band of WT as above, the

fused image is constructed using inverse WBCT.

3 EXPERIMENTAL RESULTS

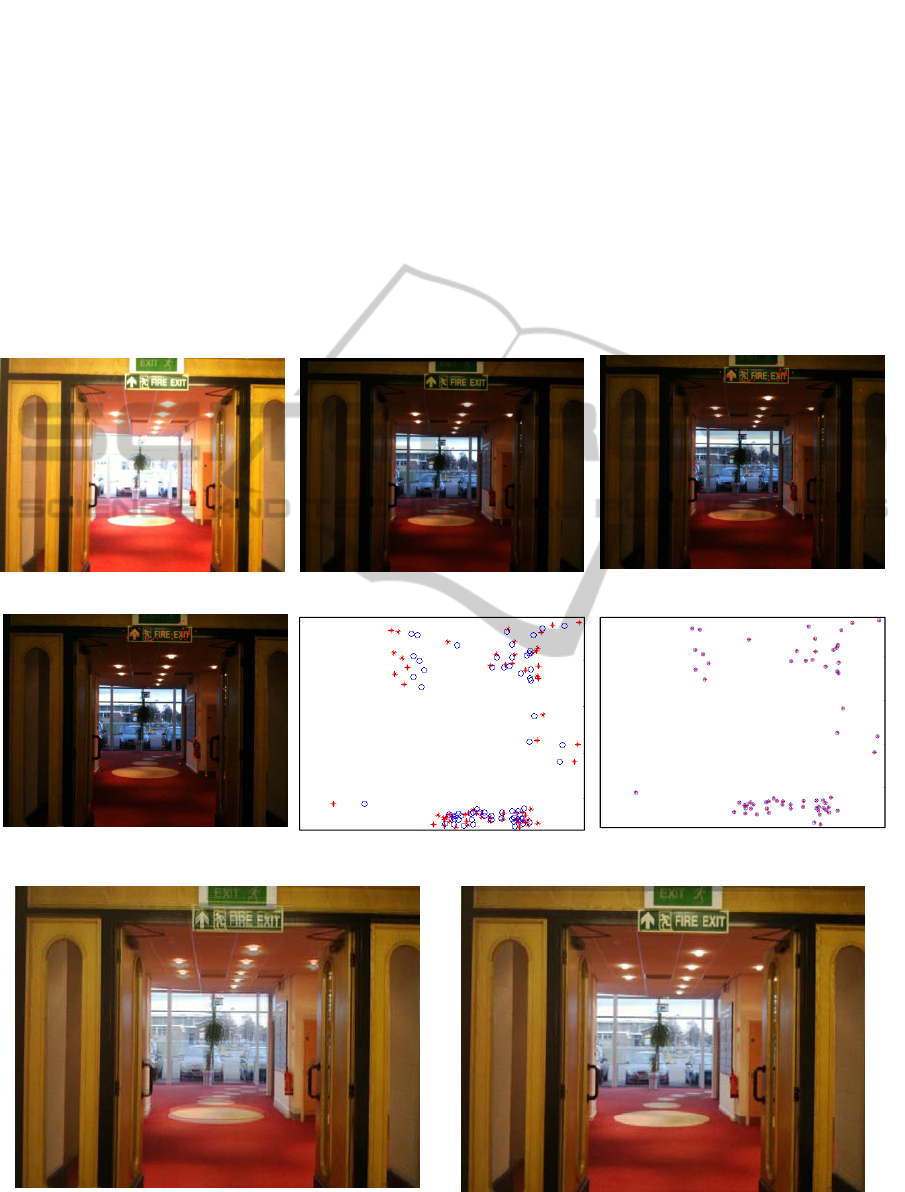

Experiments were conducted on five sets of multi-exposure images obtained with a hand-held camera (i.e. to introduce camera shake) to test the image registration prior to the multi-exposure image fusion. The sample results illustrated in Figure 1 for one image set show that the proposed approach is capable of producing fused images of noticeably good quality.

The images were taken allowing the free

movement of the camera, i.e. allowing shake. All

images were Gamma corrected before processing by

the proposed algorithms. Figure 1(a) is the base

image (over exposed) and Figure 1(b) is an

unregistered image of a different exposure setting

(under exposed), which is intended to be aligned

with the base image. The SIFT key-points found by applying SIFT to the under-exposed image are illustrated in Figure 1(c) [note that the SIFT key-points of the over-exposed base image are not illustrated but could have been illustrated similarly]. Subsequently, the mismatched points are eliminated using the RANSAC algorithm (Figure 1(d)), and finally the two images are registered using the CPD algorithm [observe the key-point matching with (Figure 1(f)) and without (Figure 1(e)) prior registration]. Figure 1(g) illustrates the fusion result without prior registration of the images, showing a blurry and smudged appearance in some parts of the image, while Figure 1(h) illustrates the positive impact of prior image registration using the proposed algorithm. It can be seen that the quality has improved in the form of increased sharpness and more observable detail.

4 CONCLUSIONS

In this paper a multi-exposure fusion approach has

been proposed that provides effective compensation

for camera shake. We have provided experimental

results on a standard set of multi-exposure images

with movement and a specifically captured

additional set of images to analyse the performance

of the proposed approach. Results indicate that the

proposed approach is capable of effective multi-

exposure image fusion under camera shake.

REFERENCES

Alsam, A. (2010). Multi Exposure Image Fusion. NIK.

Retrieved from: http://tapironline.no/fil/vis/344

Eslami, R. & Radha, H. (2005). Wavelet-based Contourlet Packet Image Coding. Conf. on Image Proc. 3189-3192. doi:10.1109/CIP.2004.1421791

Fischler, M. A. & Bolles, R. C. (1981). Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM, 24(6), 381-395. doi:10.1145/358669.358692

Lee, S. & Wey, H. (2009). Image registration for multi-

exposed HDRI and motion Deblurring. Computational

Imaging. doi:10.1117/12.805767

Lowe, D. (2004). Distinctive image features from scale

invariant keypoints. Intl. Computer Vision. 60(2). 91-

110.

Myronenko A., (2010). Point-Set Registration: Coherent

Point Drift. IEEE Trans. on Pattern Analysis and

Machine Intelligence, 32(12), 2262-2275

Tomaszewska, A. & Mantiuk, R., (2007). Image

registration for multi-exposure high dynamic range

image acquisition. Intl. Conf. on Computer Graphics,

Visualization & Vision. 49-56.

Zafar, I., Edirisinghe, E. & Bez, H. (2006). Multi-exposure & focus image fusion in transform domain, IET Conf. 606. doi:10.1049/cp:20060600

APPENDIX

(a) Over Exposed (b) Under Exposed

(c) SIFT keypoint extraction on under

exposed image

(d) Keypoints that remain after RANSAC

(e) Keypoints before registration using

CPD

(f) Keypoints after registration using CPD

(g) Fused image without prior registration

(h) Fused image with prior registration

Figure 1: Experimental dataset.
