THE DERIVATIVE MODEL APPROACH TO IMPROVING ICT USABILITY

Ritch Macefield

Shannon-Weaver Ltd, 19 Cornovian Close, Perton, Wolverhampton, WV6 7NU, U.K.

Keywords: Human Computer Interaction, HCI, Usability, Mental Model, Conceptual Model, Derivative Model approach, Unified Modelling Language, UML.

Abstract: This paper describes the novel “Derivative Model approach” to improving the usability of ICT systems, along with a formal usability study that acts as a proof of concept for this approach. The approach is grounded in, and makes contemporary, successful research carried out in the 1980s that applied thinking around conceptual and mental models to the field of Human Computer Interaction (HCI). The study found initial evidence that this approach might significantly improve usability in terms of task effectiveness, but not in terms of task efficiency. The study also found evidence that the benefits of the approach might increase along with task complexity.

1 INTRODUCTION

It is generally accepted that we have made considerable progress over the last decade in improving ICT usability. For example, mean task completion rates for the WWW based systems that pervade today are now in the area of 78% (e.g., Nielsen, 2010). However, with a 22% task failure rate, there remains significant room for improvement.

It seemed to this author that much of the effort to make progress in this area could be categorised into two main strategies. The first is the continued application of established interface design guidelines, such as those originating in Nielsen (1991). In other words, we attempt to improve usability by making the interface intrinsically more usable. The second is providing, or improving, one or more of the following utilities: on-line help facilities, free text search facilities and site maps (e.g., Nielsen, 1991; 2002; 2005). In other words, we attempt to improve usability by augmenting the interface with well-established user support utilities.

However, there is another approach to progress in this problem area that is qualitatively different from the two cited above: what the author terms the Derivative Model approach. The fundamental idea of this approach is that the usability of a modern ICT system, such as a WWW based system, might be improved if we provide the user with a conceptual model of the system that is derived directly from the conceptual model used to design the system. The rationale is that this provision might improve the accuracy of a user’s mental model of the system and that, in keeping with the ideas set out in Norman (1983), this leads to an improvement in usability.

2 CONCEPTUAL MODELS AND MENTAL MODELS

To understand the Derivative Model approach, it is first necessary to establish some founding principles related to conceptual and mental models and, in particular, how these ideas relate to ICT systems:

• A model of an artefact is some form of abstraction that lacks the full detail or accuracy present within the artefact itself; therefore, in producing a model, some properties of the artefact are ignored, simplified or distorted (Macefield, 2005).

• A conceptual model implies an abstraction concerned only with the key, or fundamental, properties of an artefact. Such models are often used to explain the basic principles of how something works (Macefield, 2005).

• Most cognitive scientists agree that our perception of the world is constructed from mental models. We use these models to explain our world, to anticipate events, and to reason. This insight originated with Plato, was first formalised by Craik (1943) and has been widely applied to HCI thinking (e.g., Norman, 1983; Johnson-Laird et al., 1983; Macefield, 2005).

• Norman (1983) crucially distinguished between conceptual models, which exist in a concrete form (e.g., a diagram), and mental models, which exist only in someone’s mind. Norman (1983) further explained how a conceptual model can be provided as an explanation of an ICT system, which the user then interprets to form a mental model.

• Norman (1983) hypothesised that, if not provided with a conceptual model, users will always develop a mental model to explain the behaviour of an ICT system, but argued that, in most cases, this model will be (highly) inaccurate. Empirical research carried out by Mayer & Bayman (1981) and Bayman & Mayer (1983) supported this argument.

• Norman (1983) argued that, even when users are provided with a conceptual model, the mental model they form will often differ from it, and the two models are unlikely ever to overlap completely. Research carried out by Khella (2002) supported this argument.

Macefield R. THE DERIVATIVE MODEL APPROACH TO IMPROVING ICT USABILITY. DOI: 10.5220/0003840001900199. In Proceedings of the 4th International Conference on Agents and Artificial Intelligence (ICAART-2012), pages 190-199. ISBN: 978-989-8425-95-9. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

3 THE MODEL APPROACH

Using the principles set out in Section 2, researchers in the 1980s hypothesised that ICT usability generally improves along with the accuracy of a user’s mental model. So, whilst accepting the argument in Norman (1983) that mental and conceptual models are unlikely ever to overlap completely, they set out to improve the accuracy of users’ mental models by providing users with conceptual models of the ICT systems with which they were interacting.

In some research initiatives, these models were provided in the form of a metaphor; e.g., Borgman (1986) used a card index metaphor to explain how a library system worked. Other research used a developer eye model, whereby users were provided with, e.g., the entity-relationship diagrams used to design the system. These are both examples of what the author terms the model approach to improving usability.

The principal empirical studies that explored the model approach were: Mayer & Bayman (1981), Foss et al. (1982), Bayman & Mayer (1983), Kieras & Bovair (1984), Borgman (1986) and Frese & Albrecht (1988). These studies produced three findings that are key to this paper:

• All of the studies found that the model approach can lead to general improvements in usability that are statistically significant.

• Four of the studies found that the effectiveness of the approach increased along with task complexity.

• The study by Kieras & Bovair (1984) found that it was particularly important for the conceptual model to include a “system topology”, which defines the key components of the system and how these components relate to each other. They also argued that the importance of providing a system topology increases along with task complexity.

Although these findings were both interesting and encouraging, work on the model approach diminished at the end of the 1980s.

The main reason for this seems to be that, despite many valiant attempts, researchers failed to develop any generalised theory of users’ mental models (e.g., Borgman, 1986; Carroll & Olson, 1988; Sasse, 1991).

This failure was critical because it remained impossible to directly study a user’s mental model and, consequently, impossible to prove, or even explore, any causation mechanism that would explain how providing a (particular) conceptual model might have (beneficially) influenced a user’s mental model. Put more simply: whilst we could quite easily demonstrate that providing users with (better) conceptual models can improve ICT usability, these researchers demonstrated that we are not able to explain how this happens, or even demonstrate that this involves a user’s mental model at all.

Of course, all researchers working in this area would want to be able to explain any causation mechanisms that led to their results. Therefore, it is little surprise that many researchers have (perhaps sometimes naively) been seduced down this path. However, the reality is that we are presently limited to conjecture to explain any causation mechanisms with the model approach.

Despite this limitation, this author believes that the model approach retains merit: even if we might not understand, or be able to prove, how this approach works, the fact that it does seem to work makes it well worthy of attention.


4 THE DERIVATIVE MODEL APPROACH

Given the author’s belief in the fundamental merits of the model approach, a research initiative was established that set out to build on previous work in this area by both adding some novel thinking and making the approach more contemporary; in particular, making it applicable to the WWW based systems that are so pervasive today.

The first step in this initiative was to address two key questions:

1. What might be the best type of conceptual model to present to users as an explanation of an ICT system?

2. Through what medium should this model be communicated to users?

4.1 Type of Conceptual Model

In Section 3 it was explained that some of the empirical studies that explored the model approach in the 1980s used a metaphor as the conceptual model.

The use of metaphors was rejected outright in this research initiative. This was because ICT systems often benefit from concepts that have little or no equivalent in the physical world, which can make metaphors limited, or even misleading, in their ability to describe an ICT system. For example, with the windows metaphor, it is easy to understand how a user may (quite reasonably) conclude that an ICT window cannot be resized, because that is how things work with physical windows.

Others studies described in Section 3 used a

developer eye model whereby users were provided

with models used to design the system. This

approach is superior to using metaphors in that is

can completely and accurately explain a system’s

conceptual model. However, these models have the

serious drawback that they (inevitably) involve

esoteric notations and formalism that we can not

expect the typical user to understand. For example,

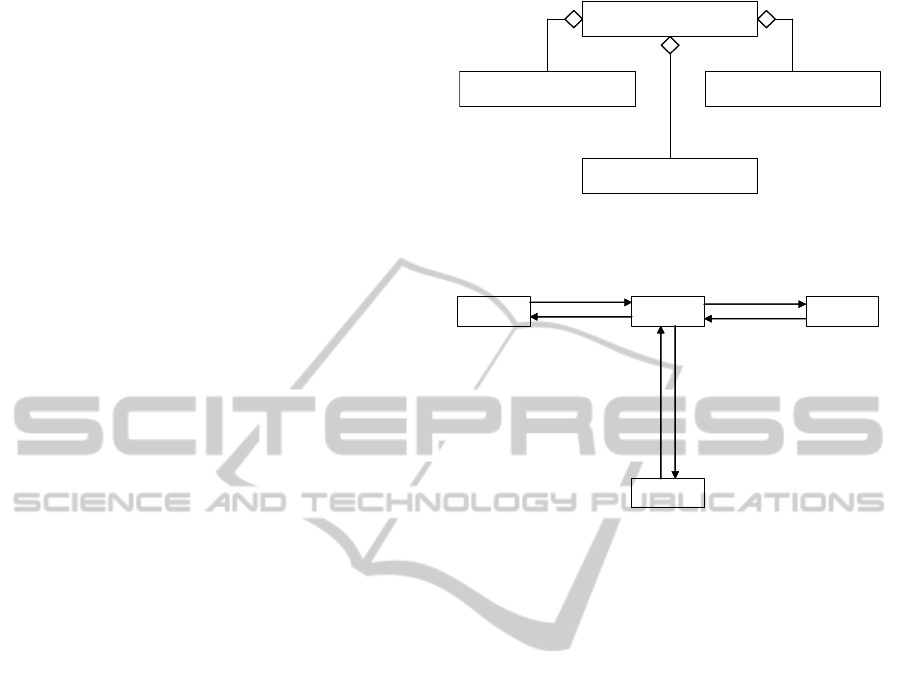

consider the Unified Modelling Language (UML)

Class Collaboration Diagram in Figure 1. As

explained by e.g., Hunt (2000), UML Class

Collaboration Diagram are often the tool of choice

for technical architects designing modern ICT

systems. However, it is easy to understand how the

typical user would be overwhelmed, frustrated or

confused if presented with such a diagram as an

explanation of a system.

[Figure 1 diagram: four <<Business>> classes, Car, Door, Wheel and SunRoof; Car (1) is associated with Door (2..5), Wheel (4) and SunRoof (0, 1).]

Figure 1: Example of a UML Class Collaboration Diagram.

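[Figure 2 diagram: classes Car, Door, Wheel and Sun Roof; arrows from Car labelled “Has 4” (to Wheel), “May have up to 5” (to Door) and “May have one” (to Sun Roof); an arrow from each of the other classes to Car labelled “Is used to make”.]

Figure 2: Example UCCD derived from the UML Class Collaboration Diagram shown in Figure 1.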

To address this drawback of developer eye models, the author sought a means by which these (UML) Class Collaboration Diagrams could easily be derived into a form that typical users might understand, but without losing any of the information or accuracy contained within the model. It is this feature of the author’s work that gave rise to the term “derivative” within the Derivative Model approach.

Meeting this challenge resulted in the idea of a User-centred Class Collaboration Diagram (UCCD), and an example of a UCCD derived from the UML Class Collaboration Diagram shown in Figure 1 can be seen in Figure 2.

As can be seen from Figures 1 & 2, the method for deriving a UCCD from a UML Class Collaboration Diagram is simply that:

• the package names shown as stereotypes above each class title (e.g., <<Business>>) are removed,

• the class names are made bold,

• the text size is increased,

• each relationship is shown using two unidirectional arrows,

• the multiplicity of each class collaboration is explained using short phrases centred along the association arrows,

• concatenated words are separated, e.g., the class title “SunRoof” is changed to “Sun Roof”.
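The derivation steps above are mechanical enough to sketch in code. The following Python is a minimal, hypothetical illustration, not the author's tooling: the `Association` record, the function names and, in particular, the wording rules in `multiplicity_phrase` are assumptions. Removing stereotypes, bolding class names and enlarging text are rendering concerns, so the sketch covers only splitting concatenated words, producing two unidirectional arrows per relationship and phrasing each multiplicity. It reads the “0, 1” multiplicity in Figure 1 as 0..1.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Association:
    """A hypothetical, simplified UML association from a whole to its parts."""
    whole: str
    part: str
    lower: int            # lower bound of the part multiplicity
    upper: Optional[int]  # upper bound; None stands for "*" (unbounded)


def split_words(class_name: str) -> str:
    """Separate concatenated words, e.g. 'SunRoof' -> 'Sun Roof'."""
    return re.sub(r"(?<=[a-z])(?=[A-Z])", " ", class_name)


def multiplicity_phrase(lower: int, upper: Optional[int]) -> str:
    """Explain a multiplicity in plain words (illustrative wording rules only)."""
    if upper is None:
        return "Has at least one" if lower >= 1 else "May have many"
    if lower == upper:
        return "Has one" if upper == 1 else f"Has {upper}"
    if lower == 0:
        return "May have one" if upper == 1 else f"May have up to {upper}"
    return f"Has {lower} to {upper}"


def derive_uccd(associations, reverse_label="Is used to make"):
    """Turn each association into two unidirectional, labelled arrows.

    Stereotypes such as <<Business>> are simply never carried over; bold
    class names and larger text are left to whatever draws the diagram.
    """
    arrows = []
    for a in associations:
        whole, part = split_words(a.whole), split_words(a.part)
        arrows.append((whole, multiplicity_phrase(a.lower, a.upper), part))
        arrows.append((part, reverse_label, whole))
    return arrows


# Figure 1, with the "0, 1" multiplicity read as 0..1 (an assumption).
FIGURE_1 = [
    Association("Car", "Door", 2, 5),
    Association("Car", "Wheel", 4, 4),
    Association("Car", "SunRoof", 0, 1),
]

for source, label, target in derive_uccd(FIGURE_1):
    print(f"{source} --[{label}]--> {target}")
```

Run on Figure 1, this yields six labelled arrows. The phrases do not all match Figure 2 verbatim: these rules render 2..5 as “Has 2 to 5” rather than Figure 2's “May have up to 5”, which keeps the lower bound explicit. A practical derivation would presumably let the designer override any generated phrase.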


In keeping with the advice in Kieras & Bovair (1984), it can also be seen from Figures 1 & 2 that a key feature of UCCDs is their ability to clearly communicate the system topology.

The rationale for the UCCD shares some similarity with “Object, View and Interaction Design” (OVID), developed by Robert et al. (1998), in that both UCCDs and OVID attempt to make modelling ICT systems using the UML more relevant to the discipline of HCI.

However, UCCDs and OVID differ greatly in two important ways. Firstly, a UCCD is simply a type of diagram for representing the conceptual model of an ICT system, whereas OVID is a whole method for actually designing ICT systems. Secondly, in keeping with their primary purpose, the diagrams used within OVID retain a high degree of formalism and, in this author’s opinion, remain esoteric to the point of making them unsuitable for presentation to the typical user as an explanation of an ICT system. In summary, OVID is (and was designed to be) a device targeted at ICT system designers, whereas UCCDs are a device targeted at ICT system users.

4.2 Communicating the Conceptual Model

Within the empirical studies cited in Section 3, the conceptual model was presented to users either by face-to-face teaching or through some form of hard copy user manual. These communication media were typical of ICT usage in the 1980s, when these studies were conducted; however, they are clearly inappropriate for, e.g., the WWW based systems that pervade today.

Given this, it was decided that the Derivative Model approach would communicate the conceptual model to users through self-explanatory video presentations that used voice and screen capture technology to explain the UCCDs in a ‘rich’ way.

The voice-over for these presentations simply read out the relationships on the UCCD, e.g., “A wheel is used to make a car”, with emphasis being placed on the class names. The idea here was that this makes the information more attractive and easier for the user to cognise.
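The read-out itself could be generated from the UCCD arrows. The sketch below is hypothetical: the function names, and the use of upper case to mark where vocal emphasis falls, are assumptions rather than anything used in the study. It handles arrows whose target is singular, such as the “Is used to make” arrows in Figure 2, and does not attempt the pluralisation that labels like “Has 4” would need.

```python
VOWELS = set("AEIOU")
QUANTIFIERS = {"a", "an", "one", "the"}


def indefinite_article(noun: str) -> str:
    """Crude a/an choice from the first letter (an assumption; it ignores
    exceptions such as 'a university')."""
    return "an" if noun[:1].upper() in VOWELS else "a"


def narrate(source: str, label: str, target: str) -> str:
    """Turn one UCCD arrow with a singular target into a spoken sentence.

    Class names are upper-cased to mark where vocal emphasis would fall.
    """
    words = label.lower().split()
    target_phrase = target.upper()
    if words[-1] not in QUANTIFIERS:
        # The label does not already end in a determiner, so add one.
        target_phrase = f"{indefinite_article(target)} {target_phrase}"
    sentence = (f"{indefinite_article(source)} {source.upper()} "
                f"{' '.join(words)} {target_phrase}.")
    return sentence[0].upper() + sentence[1:]


print(narrate("Wheel", "Is used to make", "Car"))
```

Keeping emphasis as a text convention means the same sentences could serve as a script for a human narrator or as input to a speech synthesiser.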

Importantly, it was anticipated that this communication medium would be highly familiar to modern ICT users, since it is now widely used to explain key features of ICT systems via online services such as YouTube and Vimeo. However, some (arguably) novel thinking here was that, rather than these video presentations being provided externally to the system (through third party services), the author envisaged them being embedded, as a key feature, within the system itself; perhaps as part of the system’s help facility.

Having developed the Derivative Model approach in theoretical terms, the next stage in this research initiative was to conduct a formal usability study to act as an initial ‘proof of concept’ for the approach.

5 INITIAL PROOF OF CONCEPT USABILITY STUDY

The proof of concept usability study for the Derivative Model approach was specifically designed to have three key features, as follows:

• In keeping with the overall goals for this research initiative, the study used a modern WWW based ICT system as the test artefact.

• Some of the empirical studies carried out in the 1980s (cited in Section 3) compared the model approach with other approaches to improving usability, e.g., providing conventional training manuals and various training methods. Other studies compared the effectiveness of one type of conceptual model with another. Another type of study simply compared usability with and without the provision of a conceptual model, so that one of two test groups simply acted as a neutral control. The proof of concept usability study for the Derivative Model approach was of this latter type. This is because a primary aim of this study was to identify whether the Derivative Model approach might add sufficient value to a modern ICT system that system vendors might consider the extra cost and time involved in providing a conceptual model to be justified.

• In keeping with the findings of the empirical studies cited in Section 3, the study incorporated features to determine whether any benefits of the Derivative Model approach might increase along with task complexity.

5.1 Test Artefact

In keeping with the overall research aims here, the test artefact was a WWW based prototype e-Learning system developed using HyperText Markup Language and Cascading Style Sheets, which from here on will be referred to as “the prototype”.


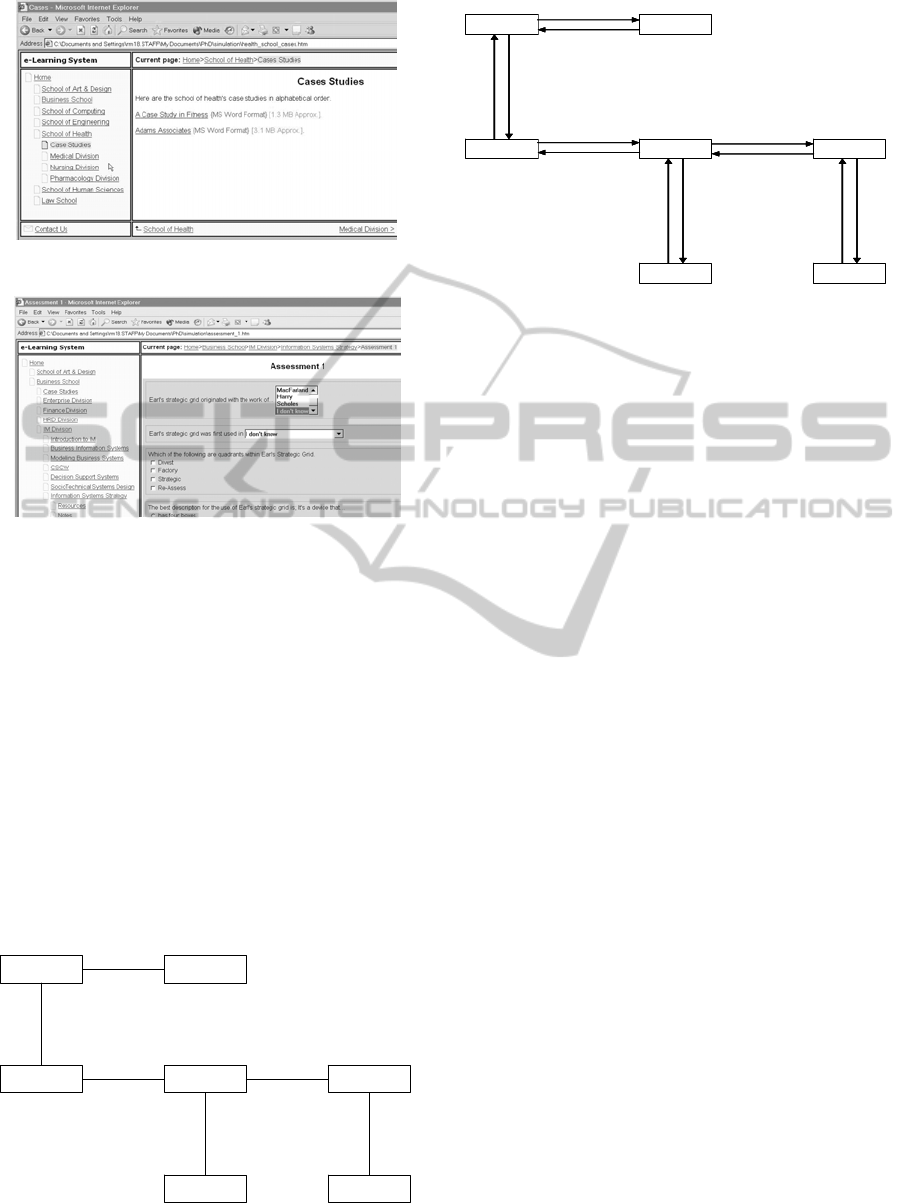

Figure 3: Example screenshot from prototype.

Figure 4: Example screenshot from prototype.

As illustrated in Figures 3 & 4, the prototype had a hierarchical structure whereby a fictional university comprised seven schools, e.g., the “Business School”. Each school had an area for case studies and a number of divisions, e.g., the “Information Management Division”. Each division had a number of modules (courses), e.g., “Information Systems Strategy”, and each module had a number of resources, e.g., notes and assessments.

5.2 Conceptual Model for Test Artefact

Figure 5 shows the UML Class Collaboration Diagram used to design the prototype, and Figure 6 shows the UCCD that was derived for the prototype using the method set out in Section 4.1.

[Figure 5 diagram: seven <<Business>> classes, School, Division, Module, CaseStudy, Assessment, Question and Notes, linked by associations with multiplicities including 1, 1..*, 0..* and 0..3.]

Figure 5: UML Class Collaboration Diagram for Prototype.

[Figure 6 diagram: classes School, Division, Module, Case Study, Test, Question and Notes, linked by arrows labelled “Belongs to one”, “Has many”, “Has at least one”, “May have many”, “May have up to 3”, “Is used on at least one” and “Are used on at least one”.]

Figure 6: UCCD for Prototype.

In keeping with the ideas set out in Section 4.2, a third party, who had been briefed on the Derivative Model approach, used the UCCD illustrated in Figure 6 to produce the necessary self-explanatory video presentation using standard screen and voice recording software.

The recording, editing and final run times for the presentation were as follows:

| Activity | Time (mins.) |
|---|---|
| Recording | 8 |
| Editing | 4 |
| Run time | 1 |

From this data it can reasonably be concluded that producing the self-explanatory video presentation was neither particularly time consuming nor particularly onerous. Similarly, the total run time was very short, implying that users would be unlikely to find viewing the presentation particularly onerous.

5.3 Study Groups

There were three important features of the study

groups:

• In keeping with the study’s aim that it should investigate any value that might be added by the Derivative Model approach, the study had an asymmetric design involving two groups of participants. The control group (G1) used the prototype without viewing the self-explanatory presentations. By contrast, the experimental group (G2) used the prototype shortly after viewing the presentations.

• As the study was a proof of concept the author

was seeking quantitative results that were

statistically significant. Therefore, using the

advice provided by Macefield (2009), the study

group size was set to 12 participants, making

ICAART 2012 - International Conference on Agents and Artificial Intelligence

194

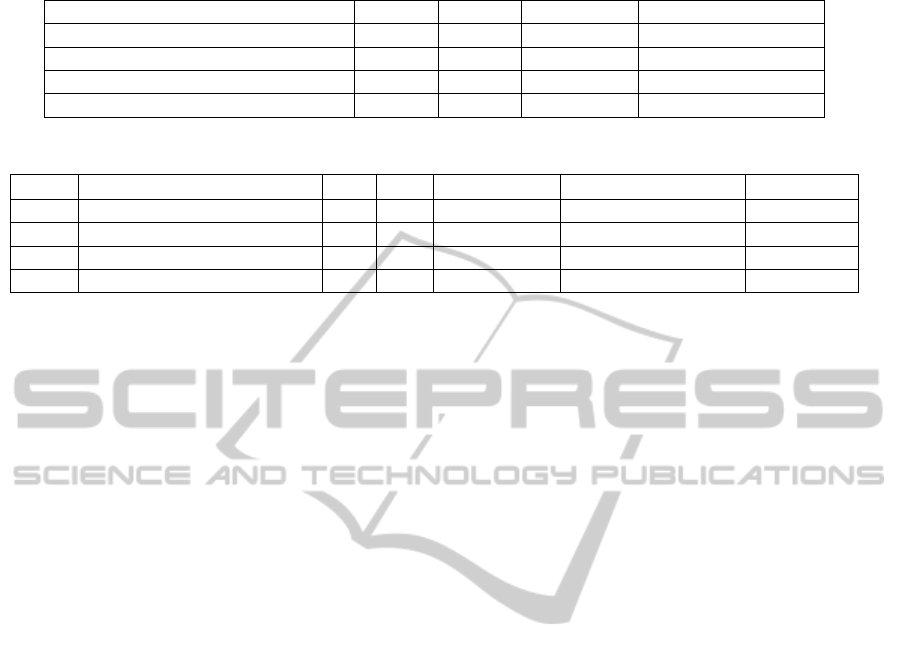

Table 1: Overall results for proof of concept usability study.

Metric G1 G2 Hypothesis Difference (pValue)

Efficiency (mean time, secs) 395 335 G1>G2 0.103

Satisfaction with efficiency (Median) 6 6.5 G1<G2 0.364

Effectiveness (Mean failures) 0.52 0.31 G1>G2

0.009

Satisfaction with effectiveness (Median) 5 6 G1<G2

0.017

Table 2: Results for measured tasks four and eight.

Task Metric G1 G2

Hypothesis Difference (pValue)

G1 & G2

4 Efficiency (mean time, secs) 53 43 G1>G2 0.36 48

4 Effectiveness (Mean failures) 0.46 0.25 G1>G2 0.24 0.35

8 Efficiency (mean time, secs) 136 121 G1>G2 0.25 129

8 Effectiveness (Mean failures) 0.77 0.16 G1>G2

0.004

0.47

24 participants for the study as a whole.

• To help increase the validity of the study, all

participants were recruited from a cohort of 1

st

year university students (in the UK) and

randomly assigned to one of the two study

groups. This was done whilst ensuring that

there was a broadly equal distribution of age

and gender across the groups. Similarly,

participants all had: English as their first

language, no disabilities in relation to ICT, and

were examined to ensure they had the requisite

baseline PC and internet skills.

5.4 Facilitation and Recording

The study consisted of 8 small tasks that were indicative of using a modern e-Learning system, e.g., navigating to particular areas of the prototype, locating a particular case study and completing a simple on-line test. Four of these tasks were defined as “measured tasks”. These were tasks to which metrics were applied and which were specifically designed to detect any effect of the Derivative Model approach. The other four tasks were there to provide a ‘warm up’ for participants and to form a coherent ‘link’ between the measured tasks, so that the tasks ‘flowed’ better for the participants, i.e., to make the test a little more realistic.

In keeping with the study’s design features set out at the beginning of this section, measured tasks four and eight were specifically included to investigate whether or not any benefits of the Derivative Model approach increased along with task complexity. These tasks were deliberately made similar in that they both required participants to navigate to a particular case study within the prototype by clicking links. However, task eight was designed to be significantly more complex than task four in three ways:

• Completion of task four required a minimum of two mouse clicks, whilst task eight required three clicks.

• With task four, participants were provided with the exact name of the case study to locate. By contrast, the instruction to participants was vaguer with task eight, whereby participants were simply asked to locate a case study “related to fitness”.

• The breadth of the navigation across the prototype’s structure was greatly increased with task eight. Unlike task four, completion of task eight required participants to navigate outside of the “Business School”, where they had been located for all previous tasks in the test, and into the “School of Health”, i.e., it involved navigating through a higher level in the prototype’s hierarchy.
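The third difference, crossing a higher level of the hierarchy, can be made concrete with a tree model of the prototype. The sketch below is hypothetical: it includes only the pages named in this paper (the other five schools are omitted), the page keys are invented, and “levels to climb” is an illustrative proxy rather than a measure used in the study. Because the prototype offered a left-hand menu of schools, levels climbed need not equal mouse clicks.

```python
# Partial, hypothetical model of the prototype's hierarchy: only pages named
# in the paper are included, and the page keys are invented for illustration.
PARENT = {
    "Business School": "University",
    "School of Health": "University",
    "Business School / Case Studies": "Business School",
    "School of Health / Case Studies": "School of Health",
    "Information Management Division": "Business School",
    "Information Systems Strategy": "Information Management Division",
}


def path_to_root(page: str) -> list:
    """Return [page, parent, grandparent, ..., root]."""
    path = [page]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def common_ancestor(a: str, b: str) -> str:
    """Lowest level of the hierarchy that both pages sit beneath."""
    ancestors_of_b = set(path_to_root(b))
    for node in path_to_root(a):
        if node in ancestors_of_b:
            return node
    raise ValueError("pages are not in the same hierarchy")


def levels_to_climb(start: str, goal: str) -> int:
    """How many levels a user must move up before heading down to the goal."""
    return path_to_root(start).index(common_ancestor(start, goal))


start = "Business School / Case Studies"  # where task seven left participants
print(common_ancestor(start, "School of Health / Case Studies"))
print(levels_to_climb(start, "School of Health / Case Studies"))
print(levels_to_climb(start, "Information Systems Strategy"))
```

Under these assumptions, reaching the fitness case study means climbing two levels to the university itself, whereas staying inside the Business School never climbs above the school.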

5.5 Metrics

The primary metrics used in the study assessed

usability in terms of how it is defined in ISO 9241-

11:1998 – effectiveness, efficiency and satisfaction

as follows:

• Efficiency data was collected by recording the time taken to complete each task.

• Effectiveness was recorded as a binary value indicating whether a participant failed a task. There were three failure modes. The first was the participant making more than two errors with the task that were obviously of a fundamental nature, e.g., looking for an on-line test in a “case studies” section of the prototype. The second was the participant exceeding the maximum time allowed for the task, which was set very conservatively using data gained from pilot testing. The final failure mode was the participant giving up on the task.

• Satisfaction data was collected post-test, using two questions from the ASQ questionnaire developed by Lewis (1991). The first question assessed satisfaction with effectiveness. The second question assessed satisfaction with efficiency.
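The effectiveness scoring can be expressed as a small function. This is a minimal sketch under stated assumptions: it reads the first failure mode as “more than two fundamental errors”, the time limit passed in is a placeholder (the study's limits came from pilot testing and are not reported here), and the record type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskAttempt:
    """One participant's attempt at one measured task (hypothetical record)."""
    fundamental_errors: int  # errors judged obviously fundamental
    elapsed_s: float         # time on task, in seconds
    gave_up: bool


def failed(attempt: TaskAttempt, max_time_s: float, max_errors: int = 2) -> bool:
    """Binary effectiveness score: True if any of the three failure modes applies."""
    too_many_errors = attempt.fundamental_errors > max_errors
    timed_out = attempt.elapsed_s > max_time_s
    return too_many_errors or timed_out or attempt.gave_up


def failure_rate(attempts, max_time_s: float) -> float:
    """Share of attempts that failed."""
    return sum(failed(a, max_time_s) for a in attempts) / len(attempts)
```

Because each failure mode is an independent boolean test, the criteria can be fixed before a study and applied identically to every participant, which is the consistency the study design aimed for.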

5.6 Experimental Effects and Study Critique

The study included the following features designed to eliminate or minimise any confounding experimental effects and maximise objectivity:

• The prototype was a bespoke (custom) artefact produced specifically for this study. Therefore, none of the study participants could already be familiar with any of its functionality.

• The prototype conformed to 28 well-established usability guidelines. This was to guard against generic usability problems becoming a confounding factor in the study, e.g., by making some parts of the prototype difficult (or even impossible) for any participant to use.

• The study relied exclusively on quantitative data measured post-test from the test recordings and questionnaires. There was no interpretation involved in the metrics, and the study deliberately excluded any verbal protocols.

• The moderator’s verbalisations were very carefully scripted in considerable detail. This included definition of all moderator inputs and pre-emptive responses to participants’ requests for assistance. This script was applied rigorously and consistently to all participants in order to minimise variation in task moderation.

• A reasonable set of failure criteria for the effectiveness metrics was clearly defined in advance of the study and applied rigorously and consistently to all participants by the moderator.

• No performance feedback was provided to participants by the moderator at any stage. This was to protect against the “Parsons’ interpretation” of the Hawthorne effect explained in Macefield (2007).

As explained in Section 4.2, it was envisaged that the self-explanatory presentations inherent within the Derivative Model approach would be embedded in some way into the ICT systems they explained (possibly within a wider help facility). This raises issues as to how users might be made aware of the existence of these presentations and under what circumstances they might be accessed by users. Whilst these are important questions, they were scoped out of this study and left as a matter for further research. This was to ensure that these issues did not become confounding factors in addressing the core objective for this stage of the research initiative, i.e., a proof of concept for the Derivative Model approach.

Given this, the conceptual model was explicitly presented simultaneously to all participants in G2 by showing them the self-explanatory presentation in a classroom setting. In keeping with the run time for the presentation (stated in Section 5.2), these sessions lasted approximately one minute.

6 RESULTS AND DISCUSSION

Table 1 shows the overall results for the study. The effectiveness data was categorical, and p-values for this metric were determined using the Fisher Exact Test. The p-values for the efficiency data (interval) and satisfaction data (ordinal) were determined using the Mann-Whitney U-test.
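For readers wishing to reproduce this kind of analysis, the two tests can be sketched in plain Python. The sketch assumes a one-sided Fisher test on a 2x2 table of failed and passed attempts per group (the directional hypotheses in Table 1 suggest, but do not confirm, one-sided tests), and it computes only the Mann-Whitney U statistic, using mid-ranks for ties; a p-value for U would normally come from exact tables or a library routine such as `scipy.stats.mannwhitneyu`. Likewise, `scipy.stats.fisher_exact` with `alternative="greater"` should agree with the Fisher function below. The counts in the usage lines are invented for illustration and are not the study's data.

```python
from math import comb


def fisher_exact_one_sided(a: int, b: int, c: int, d: int) -> float:
    """One-sided Fisher exact test for the 2x2 table

              failed  passed
        G1      a       b
        G2      c       d

    Returns P(G1 failures >= a) under the null hypothesis, with all
    margins held fixed (the hypergeometric distribution).
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    upper = min(row1, col1)
    tail = sum(comb(row1, k) * comb(n - row1, col1 - k)
               for k in range(a, upper + 1))
    return tail / comb(n, col1)


def mann_whitney_u(x, y) -> float:
    """U statistic for sample x against sample y, using mid-ranks for ties."""
    pooled = sorted(list(x) + list(y))
    ranks = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    rank_sum_x = sum(ranks[v] for v in x)
    return rank_sum_x - len(x) * (len(x) + 1) / 2


print(fisher_exact_one_sided(3, 1, 1, 3))  # 17/70, about 0.243
print(mann_whitney_u([1, 2], [2, 3]))      # 0.5
```

Writing the tests out this way makes the design choice visible: Fisher's test suits small categorical samples such as pass/fail counts, while the rank-based U statistic avoids assuming that task times or questionnaire ratings are normally distributed.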

From Table 1 it can be seen that there was no significant difference between G1 and G2 in either efficiency or satisfaction with efficiency. However, there were significant differences in both effectiveness and satisfaction with effectiveness (see Section 5.5 for the definitions of these metrics).

Closer analysis of the results data revealed that the vast majority of this difference between G1 and G2, in terms of the overall effectiveness metric, was due to a large difference in performance between G1 and G2 on measured task eight. Indeed, the only statistically significant difference between G1 and G2 for the effectiveness metric occurred with this task.

This difference can be seen in Table 2 and was interesting because, as set out in Section 5.4, the primary reason for including task eight was to form a comparison with task four, in order to help determine if any benefits of the Derivative Model approach increased along with task complexity.

Given this, the next step in the results analysis was to determine if the test participants, as a whole, found task eight (significantly) more complex than task four, as intended in the study’s design.

From the data in Table 2, it can be seen that, across all participants, there was a very large difference in the mean task completion time between these tasks: 48 seconds for task four and 129 seconds for task eight (p=0.0005). From this, it seems reasonable to conclude that participants generally found task eight significantly more challenging than task four. In turn, it seems reasonable to argue that, in keeping with the study’s design, this was due to the additional complexity designed into task eight.
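Because both groups contained 12 participants, the combined means in the last column of Table 2 follow directly from the group means. The check below assumes that every participant contributed one completion time per task and that the reported group means are rounded.

```latex
\bar{t}_{\text{G1\,\&\,G2}}
  = \frac{n_1\,\bar{t}_{\text{G1}} + n_2\,\bar{t}_{\text{G2}}}{n_1 + n_2},
  \qquad n_1 = n_2 = 12
\\[4pt]
\text{Task four: } \frac{53 + 43}{2} = 48\ \text{s},
\qquad
\text{Task eight: } \frac{136 + 121}{2} = 128.5 \approx 129\ \text{s}
```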

The next step in the analysis was to investigate why there was no significant difference in task efficiency across G1 and G2 with task eight, whilst there was a significant difference in task effectiveness. To do this, the raw video data generated from the study was reviewed in detail.

As stated in Section 5.4, task eight asked participants to navigate to a case study related to “fitness” within the prototype. Completion of task seven left participants located within the “Case Studies” page of the “Business School” section of the prototype. The link to the fitness case study was (quite deliberately) not placed on this page; rather, it was placed within the “Case Studies” page of the “School of Health” section. Therefore, completion of this task first required participants to navigate to the “School of Health” section using the menu to the left of the page.

Review of the video data revealed that, independent of their group, the vast majority of participants, on starting task eight, initially spent a long time simply scrolling up and down the “Case Studies” page within the “Business School” section (i.e., where they were located at the end of task seven) before making any mouse clicks (or performing any other type of action). It seemed that most participants were searching for the correct link within this page and were very reluctant to navigate away. Indeed, across all participants, the mean time taken to make the first mouse click accounted for 92% of the total mean time to complete, or fail, this task.

Of further importance, this review found that those participants whose first mouse click was correct (clicking on the “School of Health” link in the menu) always went on to complete the task. Further, they did this without any errors and without making any requests for assistance from the facilitator.

To summarise here, independent of group, it is easy to argue that the key to effectiveness with task eight was locating the first correct link, and that most participants spent a long time looking for this link in the wrong area of the prototype.

Other than this, the pattern of interaction with task eight was quite different across G1 and G2. After the initial search of the “Case Studies” page for the “Business School”, the majority of participants in G1 either gave up on the task, made multiple errors by clicking links that were (quite obviously) wrong and/or made multiple requests for assistance to the moderator, all of which triggered a failure condition. By contrast, the majority of participants in G2 eventually elected to widen the scope of their search for the correct link, resulting in them quickly completing the task.

Based on these findings, it seems easy to conclude that participants in G2 benefitted from the Derivative Model approach in the case of task eight. This conclusion is consistent with the finding of most of the empirical studies cited in Section 3 that the usability benefit of providing users with a conceptual model increases along with task complexity.

As explained in Section 3, our lack of a general theory of users’ mental models means that exploration, or proof, of any causation mechanism that might explain how these benefits arose in this study is presently beyond us. Therefore, this aspect of the study must remain a matter for conjecture.

One such conjecture is that these benefits are

related to functional fixity, sometimes known as

“functional fixedness”. This phenomenon is often

explained in terms of a fable:

A man knows that he has dropped his wallet somewhere along the street between his home and the neighbour he is visiting. It’s night and the street is completely dark apart from a small area illuminated by a security light in a shop window. The man searches for his wallet for a long time within this area but without success; distraught, he stands there motionless. A stranger approaches and enquires as to the man’s problem; she then asks why the man has not looked anywhere else in the street. The man replies “because this is the only place where I can see”.

Put more formally, functional fixity occurs when

we get stuck with problems because we artificially

scope down our ‘problem space’ – hunting for a

solution in a space that is too small (see e.g.,

Dominowski & Dallob, 1995).

This phenomenon relates well to ideas of mental

and conceptual models within the context of

usability, because functional fixity can occur when a

user’s mental model is smaller in scope than the

conceptual model of the ICT system with which they

are interacting. Based on this, it is easy to conjecture

that, independent of group, the participants in this

study experienced a functional fixity ‘trap’ with task

eight whereby they got stuck trying to find the

necessary link within the wrong page and were

reluctant to widen the scope of their search.

However, participants in G2 were far more likely to

ultimately escape this trap due to the better mental model they had developed as a result of the conceptual model provided to them within the Derivative Model approach, knowledge that may well have been held outside their conscious awareness.

7 CONCLUSIONS AND FURTHER RESEARCH

Conventional wisdom in user interface design is that conceptual (structural) information, such as that presented to the experimental group (G2) in this study, is in the domain only of ICT developers, not ICT users. Indeed, some might argue that the very rationale of good user interface design is to isolate users from such information. However, this research initiative has made contemporary an alternative perspective on improving ICT usability, originating in the 1980s, whereby we seek to leverage users’ mental modelling capability specifically through the provision of such information.

The proof-of-concept study within this initiative was small in scope and leaves open many areas for conjecture and further research. Key amongst these are: whether the approach can scale to real, contemporary, pervasive ICT systems and the tasks that these systems involve; how (practically) the conceptual information is best communicated to users; and whether or not users would (want to) make use of such information, and in what circumstances.

However, the study has provided initial evidence that this approach may be a viable means of improving task effectiveness with such systems, particularly as task complexity increases. Therefore, this author argues that the Derivative Model approach is worthy of further research, within the wider context of this alternative perspective on improving ICT usability.

REFERENCES

Bayman, P. & Mayer, R. E. (1983). A diagnosis of beginning programmers' misconceptions of BASIC programming statements. Communications of the ACM. 26 (1), 677-9.

Borgman, C. L. (1986). The user’s mental model of an information retrieval system: an experiment on a prototype online catalogue. International Journal of Man-Machine Studies. 24 (1), 47-64.

Carroll, J. M. & Olson, J. R. (1988). Mental models in human computer interaction. In: Helander, M. Handbook of Human Computer Interaction. Amsterdam: Elsevier. 45-65.

Craik, K. (1943). The nature of explanation. Cambridge: Cambridge University Press.

Dominowski, R. L. & Dallob, P. (1995). Insight and problem solving. In: Sternberg, R. J. & Davidson, J. E. The Nature of Insight. Cambridge, Mass: MIT Press. 33-62.

Foss, D. J., Rosson, M. B. & Smith, P. L. (1982). Reducing manual labor: an experimental analysis of learning aids for a text editor. In: Proceedings of the Human Factors in Computing Systems Conference, Gaithersburg, Maryland 15-17 March 1982. 332-6.

Frese, M. & Albrecht, K. (1988). The effects of an active development of the mental model in the training process: experimental results in word processing. Behaviour and Information Technology. 7 (1), 295-304.

Hunt, J. (2000). The unified process for practitioners:

object-oriented design, UML and Java. London:

Springer.

Johnson-Laird, P. N. (1983). Mental models. Cambridge:

Cambridge University Press.

Khella, K. (2002). Knowledge and mental models in HCI. Available: www.cs.umd.edu/class/fall2002/cmsc838s/tichi/knowledge.html. Last accessed 19 February 2011.

Kieras, D. E. & Bovair, S. (1984). The role of a mental

model in learning to operate a device. Cognitive

Science. 8 (1), 255-73.

Lewis, J. R. (1991). Psychometric evaluation of an after-

scenario questionnaire for computer usability studies:

The ASQ. SIGCHI Bulletin. 23 (1), 78-81.

Macefield, R. C. P. (2005). Conceptual Models and Usability. In: Ghaoui, C. Encyclopaedia of Human Computer Interaction. Texas: Idea Group Inc. 112-9.

Macefield, R. C. P. (2007). Usability Testing and the

Hawthorne Effect. Journal of Usability Studies. 2 (3),

145-54.

Macefield, R. C. P. (2009). How to specify the participant

group size for usability studies. Journal of Usability

Studies. 5 (1), 34-45.

Mayer, R. E. & Bayman, P. (1981). Psychology of calculator languages: a framework for describing differences in users’ knowledge. Communications of the ACM. 24 (8), 511-20.

Nielsen, J. (1991). Ten Good Deeds in Web Design.

Available: www.useit.com/alertbox/991003.html. Last

accessed 7 October, 2010.

Nielsen, J. (2002). Site Map Usability, 1st study. Available: www.useit.com/alertbox/sitemap-usability-first-study.html. Last accessed 7 October, 2010.

Nielsen, J. (2005). Ten Usability Heuristics. Available:

www.useit.com/papers/heuristic/heuristic_list.html.

Last accessed 7 October, 2010.

Nielsen, J. (2010). Progress in Usability: Fast or Slow? Available: www.useit.com/alertbox/usability-progress-rate.html. Last accessed 7 October, 2010.

Norman, D. A. (1983). Some observations on mental

models. In: Gentner, D. & Stevens, A. L. Mental

Models. London: Erlbaum. 7-14.

ICAART 2012 - International Conference on Agents and Artificial Intelligence

Robert, D., Berry, D., Isensee, S. & Mullaly, J. (1998). Designing for the user with OVID. London: Macmillan.

Sasse, M. A. (1991). How to T(r)ap Users' Mental Models.

In: Tauber, M. J. & Ackermann, D. Informatics and

Psychology Workshop. Amsterdam: Elsevier. 59-79.
