AN INEXPENSIVE 3D STEREO HEAD-TRACKED DUAL VIEWING SINGLE DISPLAY

Armando de la Re¹, Eduardo Martorell¹, Gustavo Rovelo¹, Emilio Camahort² and Francisco Abad²

¹ Departamento de Sistemas Informáticos y Computación, Universitat Politècnica de València, Camino de Vera S/N, 46022 Valencia, Spain

² Instituto Universitario de Automática e Informática Industrial, Universitat Politècnica de València, Camino de Vera S/N, 46022 Valencia, Spain

Keywords:

3D, Stereo, Dual View, Head Tracking, Interactive Workbench.

Abstract:

Dual view displays allow two users to collaborate on the same task. Specialized computer systems have been designed to provide each user with a private view of the scene. This paper presents two hardware configurations that support independent stereo viewing for two users sharing a common display. Both prototypes are built using off-the-shelf parts: a 120 Hz computer monitor and a 120 Hz projector. The result is a functional, responsive and inexpensive dual-view 3D stereo display system where both viewers see stereo on the same display. We demonstrate our systems’ features with three demos: a competitive game, where each user has a private stereoscopic view of the game table; a two-user multimedia player, where each user can watch and hear a different stereoscopic video on the same display; and a head-tracked dual stereo 3D model viewer that provides each user with a correct perspective of the model. The latter demonstrator also provides basic gesture-based interaction.

1 INTRODUCTION

The popularization of 3D in movies and videogames has driven down the cost of the devices used to implement stereo applications. Modern GPUs provide enough computing power to render scenes at the required frame rate and, paired with an emitter and a pair of shutter glasses, allow the user to play games in stereo at an affordable price. There are also some displays that do not require glasses, but they usually assume that the user is located at some predefined location. Those systems are primarily used in handheld video consoles, 3D cameras and some cell phones. On the other hand, 3D stereo hardware has not been standardized and there are different incompatible implementations.

Some applications require providing different users with private views on the same display. We will review several commercial implementations of those applications, though it is hard to find commercial systems capable of providing two users with two different stereoscopic views.

In this paper we present a solution to the problems of reduced spatial resolution and incorrect perspective in a two-user 3D display. We propose a system that displays two stereoscopic images on the same display. Our system can be implemented with a 3D monitor or a 3D projector. We also present three demos to support our claims.

2 PREVIOUS WORK

Several commercial companies have been interested in dual view displays. In 2005, Sharp announced a dual view LCD display that used a parallax barrier superimposed on the LCD to provide two views, depending on the view direction (Physorg, 2005). Land Rover launched the Range Rover Sport model in 2010, a car with a dual view touch screen display (http://www.landrover.com). This display also uses a parallax barrier to separate each view. Sony has announced the launch of the PlayStation television by the end of 2011 (Sony, 2011). It is expected to be a 3D dual view television with active glasses that have their own earphones (Ko et al., 2009). The TV can deliver 3D stereo to a single player or a private, fullscreen 2D view for each user.

The Responsive Workbench uses head tracking to render the scene from each user’s point of view (Agrawala et al., 1997). The workbench had a 144 Hz projector and custom-modified shutter glasses for implementing two separate stereoscopic views. Since four different images are required for two stereoscopic animations, each eye sees a 36 Hz animation. While one user is seeing the current image with one eye, that user’s other shutter is closed, as are both of the other user’s shutters.

de la Re A., Martorell E., Rovelo G., Camahort E. and Abad F.

AN INEXPENSIVE 3D STEREO HEAD-TRACKED DUAL VIEWING SINGLE DISPLAY.

DOI: 10.5220/0003848205030506

In Proceedings of the International Conference on Computer Graphics Theory and Applications (GRAPP-2012), pages 503-506

ISBN: 978-989-8565-02-0

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

De la Rivière, Kervégant, Dittlo, Courtois and Orvain presented a multitouch stereographic tabletop that uses head tracking to provide two users with their own viewpoint (De la Rivière et al., 2010). The system is able to detect when a hand gets close to the screen.

3 INEXPENSIVE TWO-USER 3D STEREO MULTIMEDIA DISPLAY

Our system is designed to be very affordable: we use only off-the-shelf parts. Our design is based on the work by Agrawala et al. (1997).

3.1 Our Prototype

In this section we describe several hardware configurations that support independent stereo views for two users. Every configuration provides each user with a 60 Hz refresh rate video stream for each eye.
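The 60 Hz figure follows from simple arithmetic on how many views share the display in time. The sketch below is only an illustration: the function name and the notion of "time slots" are ours, not part of the original system. It contrasts the two-user Responsive Workbench, which time-multiplexes four views, with configurations that alternate only the left and right eyes in time and separate the users with filters.

```python
def per_eye_refresh_hz(display_hz: float, time_slots: int) -> float:
    """Refresh rate seen by each eye when the frames of a display are
    shared round-robin among `time_slots` shuttered views.

    `time_slots` is a hypothetical parameter introduced for this sketch.
    """
    if time_slots < 1:
        raise ValueError("time_slots must be at least 1")
    return display_hz / time_slots


# Two-user Responsive Workbench: four views time-multiplexed at 144 Hz.
workbench_hz = per_eye_refresh_hz(144, 4)

# 120 Hz display with active glasses: only the left and right eyes
# alternate in time (two slots); the users are separated by filters.
prototype_hz = per_eye_refresh_hz(120, 2)

print(workbench_hz, prototype_hz)
```

Under these assumptions, moving user separation from the time domain to the filter domain is what raises the per-eye rate from 36 Hz to 60 Hz.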

The configurations are: 1) a 120 Hz monitor, two pairs of active glasses and anaglyphic filters; 2) a 120 Hz projector, two pairs of active glasses and anaglyphic filters; 3) two 60 Hz projectors, two polarization filters, two pairs of polarized glasses and anaglyphic filters; 4) two 120 Hz projectors, two circular polarization filters, and two pairs of active glasses with circular polarization filters; and 5) two 120 Hz projectors, two pairs of active glasses and interference filters.

Configurations 1, 2 and 3 provide relatively low image quality, due to the use of anaglyphic filters. They provide stereo monochromatic views to the users. On the other hand, configurations 4 and 5 provide high-quality, full color images for each user.

The listed configurations are sorted according to implementation cost. Configuration 1 is the most affordable at around 800 €, while configuration 5 costs more than 6000 €. None of these costs include the computer equipment.

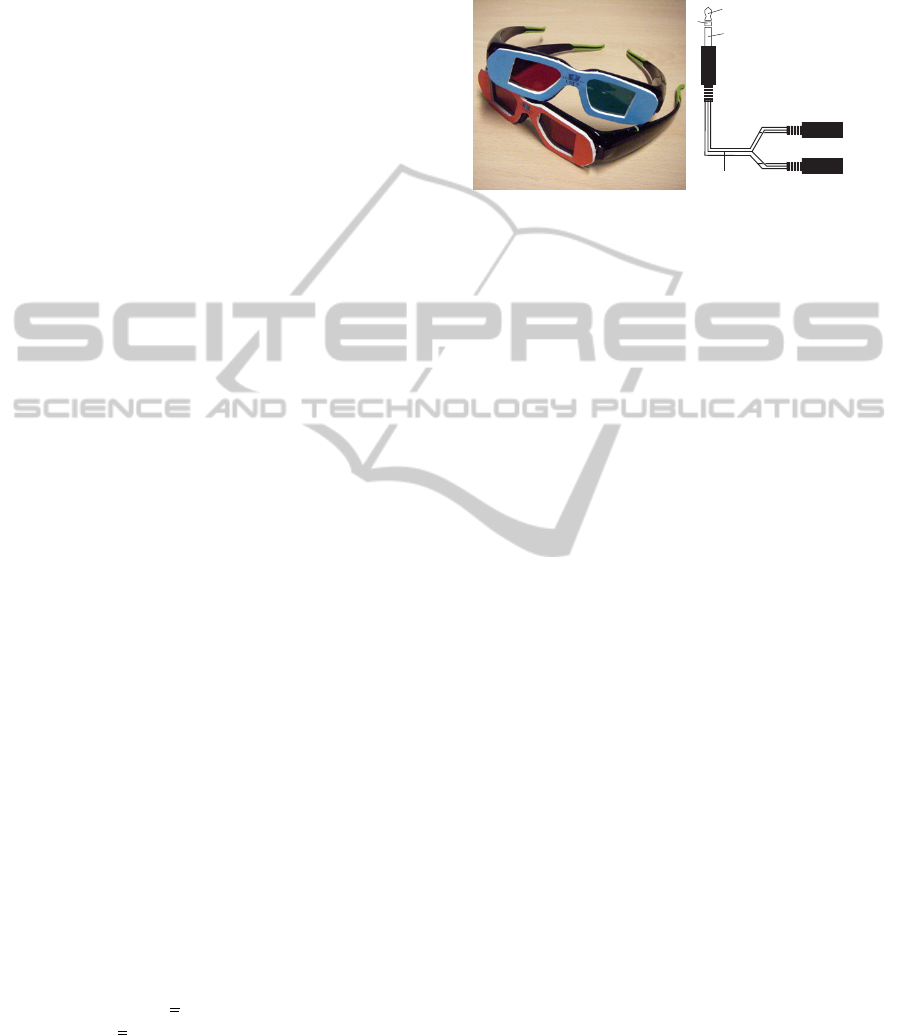

We have built configurations 1 and 2. Figure 1, left, shows the two options for anaglyphic filtering supported by our system. The first option uses opposite anaglyph filters for the users (red-blue for one user and blue-red for the other). The second option uses one color for each user (red-red lenses for one user and blue-blue lenses for the other).

Figure 1: Left, our prototypes use two pairs of NVIDIA 3D Vision™ active glasses with two attached anaglyphic filters. Only one pair of each configuration is shown. Right, audio splitter connection diagram.

We use standard quad-buffering for rendering the animation. We render both users’ left-eye views into the left color buffer and both users’ right-eye views into the right color buffer. To compose one eye’s view for both users, we convert each full color input image into a grayscale image. For example, both users’ left images are composed into the same color buffer using a red color mask for one user and a cyan color mask for the other. Both images are rendered to that eye’s back buffer.
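The per-eye composition described above can be sketched on plain pixel arrays. This is a minimal CPU illustration, not the authors’ rendering code: the function names, the nested-list image type and the Rec. 601 luminance weights are assumptions made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each in 0..255
Image = List[List[Pixel]]      # rows of pixels


def to_gray(pixel: Pixel) -> int:
    """Luminance of an RGB pixel (Rec. 601 weights, an assumed choice)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def compose_eye_buffer(user_a: Image, user_b: Image) -> Image:
    """Merge the same-eye views of two users into one color buffer.

    User A's grayscale image is written through a red mask (R channel only);
    user B's grayscale image is written through a cyan mask (G and B).
    """
    out: Image = []
    for row_a, row_b in zip(user_a, user_b):
        out.append([
            (to_gray(pa), to_gray(pb), to_gray(pb))
            for pa, pb in zip(row_a, row_b)
        ])
    return out


# One-row example: user A sees white then black, user B the opposite.
view_a = [[(255, 255, 255), (0, 0, 0)]]
view_b = [[(0, 0, 0), (255, 255, 255)]]
left_buffer = compose_eye_buffer(view_a, view_b)
print(left_buffer)
```

In an OpenGL renderer the same masking is commonly obtained by restricting writes with `glColorMask` while drawing each user’s scene; the text does not state which mechanism the prototypes use.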

We present an implementation that provides a private audio channel for two users using only a stereo sound card. With the simple audio splitter shown in Figure 1, right, our implementation separates each channel of the stereo sound card. A software mixer has been created to take the stereo audio stream from each video source, convert it to mono, and route it to one channel of the stereo output.
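The mixing step can be illustrated on lists of samples. The sketch below assumes floating-point samples in [-1.0, 1.0], averages the two channels to obtain mono, and pads the shorter stream with silence; these choices, the function names, and the assignment of users to output channels are assumptions, not details given in the text.

```python
from typing import List, Tuple

StereoFrame = Tuple[float, float]   # (left, right) samples in [-1.0, 1.0]


def to_mono(frames: List[StereoFrame]) -> List[float]:
    """Downmix a stereo stream to mono by averaging both channels."""
    return [(left + right) / 2.0 for left, right in frames]


def mix_private_channels(
    source_a: List[StereoFrame],
    source_b: List[StereoFrame],
) -> List[StereoFrame]:
    """Route source A to the left output channel and source B to the right.

    The shorter stream is padded with silence so that both users keep
    receiving frames until the longer stream ends.
    """
    mono_a = to_mono(source_a)
    mono_b = to_mono(source_b)
    length = max(len(mono_a), len(mono_b))
    mono_a += [0.0] * (length - len(mono_a))
    mono_b += [0.0] * (length - len(mono_b))
    return list(zip(mono_a, mono_b))


mixed = mix_private_channels([(1.0, 0.0), (0.5, 0.5)], [(0.0, 0.0)])
print(mixed)
```

The hardware splitter then feeds the left output channel to one user’s earphones and the right output channel to the other’s.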

3.2 Head Tracking

We use a Microsoft Kinect along with the OpenNI-NITE libraries (http://www.openni.org) to perform head tracking. The Kinect captures depth images using an IR laser projector and an IR camera. The OpenNI-NITE libraries analyze the depth images to detect users in the scene and obtain their position and pose. Tracking requires calibrating the skeleton at least once in each room configuration.

We use OpenSceneGraph (OSG) to render our scenes. OSG is a scene graph manager based on OpenGL (http://www.openscenegraph.org). The default coordinate system used by OSG is shown in Figure 2. We define the origin of the World Coordinate System (WCS) to be located at the center of the screen. The XZ plane is parallel to the screen and Y points into the screen. The Kinect defines its origin at the center of the IR camera and uses a right-handed coordinate system, with the positive X axis pointing to the left, the positive Y axis pointing up and the positive Z axis pointing out into the room. The Kinect measures the position of the skeleton joints in cm.

To transform the locations of the heads and hands provided by the Kinect into the WCS, we have to locate and orient the Kinect with respect to the WCS, as shown in Figure 2. The equation

$$M_{k \rightarrow w} = T_{x,y,z} \, R_x(\alpha)$$

provides the transformation matrix required to transform from the Kinect coordinate system to the WCS. Here (x, y, z) is the position of the origin of the Kinect with respect to the WCS and α is the angle between the WCS’s Y axis and the Kinect’s Y axis. This transformation assumes that both X axes are parallel. If that is not the case, add another rotation about Y to account for the difference.

Figure 2: The distance between the origin of the WCS and the Kinect (d) is 36 cm, so (x, y, z) in the equation is (0, 0, 36). α is 120° (90° to account for the different orientations of the Z axes plus 30° due to the screen inclination).

Using the equation above, we can compute each user’s head position in the WCS, so a virtual camera can be placed at that position to render the scene from each user’s point of view. The advantage of using the Kinect is that it also provides the positions of each user’s hands and a gesture recognizer.
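As a worked illustration of the Kinect-to-WCS transformation, the sketch below builds a translation by (x, y, z) followed by a rotation about X by α and applies it to a point measured by the Kinect. It assumes column vectors, homogeneous 4×4 matrices and the usual right-handed rotation convention; the function names are ours, and the default values are the ones given in the caption of Figure 2.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def translation(x: float, y: float, z: float) -> Matrix:
    """Homogeneous translation matrix T_{x,y,z}."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_x(alpha_deg: float) -> Matrix:
    """Homogeneous rotation about the X axis, R_x(alpha)."""
    a = math.radians(alpha_deg)
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0, 0.0],
            [0.0, c, -s, 0.0],
            [0.0, s, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def kinect_to_wcs(
    point: Sequence[float],
    origin: Sequence[float] = (0.0, 0.0, 36.0),   # Kinect origin in the WCS, in cm
    alpha_deg: float = 120.0,                     # angle between the Y axes
) -> Tuple[float, float, float]:
    """Apply M = T_{x,y,z} R_x(alpha) to a point given in Kinect coordinates."""
    m = matmul(translation(*origin), rotation_x(alpha_deg))
    v = (point[0], point[1], point[2], 1.0)
    x, y, z = (sum(m[i][j] * v[j] for j in range(4)) for i in range(3))
    return (x, y, z)


# A joint at the Kinect origin maps to the Kinect's position in the WCS.
print(kinect_to_wcs((0.0, 0.0, 0.0)))
# A joint one centimeter along the Kinect's Y axis.
print(kinect_to_wcs((0.0, 1.0, 0.0)))
```

If the X axes of the two systems are not parallel, an additional rotation about Y would be multiplied into `m`, as noted in the text. A renderer that uses row vectors would use the transposed matrices.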

4 DEMO APPLICATIONS

Our demos run on a 3 GHz Intel Core 2 Duo processor with 2 GB of RAM and an NVIDIA Quadro 600 graphics card, using a Samsung 2233RZ 3D monitor and a Dell S300w projector installed in a workbench. To implement stereoscopy we use two pairs of NVIDIA 3D Vision shutter glasses, synchronized to a single IR emitter.

4.1 The Battleship Game

In this video game, each user places a number of different battleships on a discrete grid. The battleship positions of each player are unknown to the other player.

Figure 3: The Battleship game. Top, unfiltered view of the game, showing both players’ views. Bottom, cyan player’s and red player’s views of the game.

We use OpenSceneGraph for user input and graphic rendering. To separate the scenes of each player we place two cameras, one at the root node of each user’s scene graph. The images are rendered from the two cameras as explained in Section 3.1. Stereoscopy is handled automatically by OpenSceneGraph, which allows selection of the fusion and interpupillary distances.

4.2 Two-user Stereo Video Player

Our second demo application allows two users to watch and hear different video and audio sources simultaneously on the same display. The video sources can be either regular 2D content or 3D stereo content. The active stereo produces a double image made of the images shown in the filtered views. When the application is paused, each user is able to watch a stereo still image due to the active shutter glasses (Figure 4). Each user is able to hear the soundtrack of her own video. We implemented this application using OpenGL, ffmpeg (http://ffmpeg.org) and SDL.

Figure 4: Stereo video player for two users. Top, photograph of the monitor, showing both videos. Bottom, viewing the monitor through a red filter and a blue filter.

4.3 Stereo 3D Model Dual Viewer

Our third demo shows how to visualize a 3D model in stereo for two users. Each user sees the correct perspective from both eyes. It also provides a simple interaction model to tag points of interest in the scene or model.

Figure 5: The Stereo 3D model dual viewer. Top, unfiltered view of the application, showing both users’ views. Center and bottom, cyan and red user perspectives of the 3D model.

Using the head position, we transform the Kinect-relative coordinates to the World Coordinate System and define the virtual cameras’ positions for each user (see Figure 5). We apply the same transformation to the left hand position to control a virtual cursor in the scene. This cursor allows the users to place tags at 3D locations in the scene. When our system detects a push gesture from the right hand of a user, it creates a new tag at the current position of the 3D cursor. We use different colors to identify the user that created each tag.
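The interaction just described amounts to a small piece of state per user: a cursor driven by the left hand and a list of tags appended on each push gesture. The sketch below is a hypothetical model of that logic only; the class names, the color table and the callback names are invented for illustration, and positions are assumed to be already expressed in the WCS.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]

# Hypothetical per-user tag colors; the text does not say which are used.
USER_COLORS: Dict[int, str] = {0: "orange", 1: "green"}


@dataclass
class Tag:
    position: Point
    user_id: int
    color: str


@dataclass
class TagBoard:
    """Keeps one 3D cursor per user and the tags created so far."""
    cursors: Dict[int, Point] = field(default_factory=dict)
    tags: List[Tag] = field(default_factory=list)

    def move_cursor(self, user_id: int, left_hand_wcs: Point) -> None:
        """Update the user's cursor from the left hand position (in the WCS)."""
        self.cursors[user_id] = left_hand_wcs

    def on_push(self, user_id: int) -> Optional[Tag]:
        """Create a tag at the user's cursor when a right-hand push is detected.

        Returns None if the user has no cursor yet.
        """
        position = self.cursors.get(user_id)
        if position is None:
            return None
        tag = Tag(position, user_id, USER_COLORS.get(user_id, "white"))
        self.tags.append(tag)
        return tag


board = TagBoard()
board.move_cursor(0, (1.0, 2.0, 3.0))
first_tag = board.on_push(0)
print(first_tag)
```

In a full application, `move_cursor` and `on_push` would be driven by the tracker’s hand positions and gesture events respectively.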

5 CONCLUSIONS AND FUTURE WORK

We have implemented two configurations that can provide two users with independent 3D stereoscopic views sharing the same display, using two pairs of active shutter glasses, anaglyphic filters and either a 120 Hz monitor or a 120 Hz projector. For around 1000 €, they can be built with off-the-shelf parts. We also implemented head tracking using a Microsoft Kinect. This enhances the 3D experience, since we can render the proper points of view of the scene for each user’s eyes. We have built three demo applications that generate two independent stereo animations on the same screen.

Since our goal was affordability, some compromises had to be made. The anaglyph passive filters severely affect the color of the original images and there is a significant loss of perceived brightness due to the use of two-stage filtering. Still, our applications provide an interactive 3D stereo animation and independent audio channels for each user. These are the basic requirements to implement complex interfaces for collaborative applications without specialized hardware.

In future implementations, we plan to replace the anaglyphic filtering with other types of filters to improve color quality and brightness.

ACKNOWLEDGEMENTS

This work was partially supported by grant ALFI-3D, TIN2009-14103-C03-03 of the Spanish Ministry of Science and Innovation and by the National Council of Science and Technology of México as part of the Special Program of Science and Technology.

REFERENCES

Agrawala, M., Beers, A. C., McDowall, I., Fröhlich, B., Bolas, M., and Hanrahan, P. (1997). The two-user responsive workbench: support for collaboration through individual views of a shared space. In SIGGRAPH ’97, pages 327–332.

De la Rivière, J.-B., Kervégant, C., Dittlo, N., Courtois, M., and Orvain, E. (2010). 3d multitouch: when tactile tables meet immersive visualization technologies. In ACM SIGGRAPH 2010 Emerging Technologies, SIGGRAPH ’10, pages 2:1–2:1, New York, NY, USA. ACM.

Ko, H. S., Paik, J. W., and Zalewski, G. (2009). Stereoscopic screen sharing method and apparatus. United States Patent US 2010/0177172.

Physorg (2005). World’s first LCD that simultaneously displays different information in right and left viewing directions. http://www.physorg.com/news5156.html.

Sony (2011). New 3D display and PS3 accessories debut at E3. http://blog.us.playstation.com/2011/06/06/new-3d-display-and-ps3-accessories-debut-at-e3.
